A video description method and system based on an information loss function

A technology in the fields of information loss and video description, classified under neural network learning methods, character and pattern recognition, and instruments. It addresses problems such as model learning difficulties, recognition errors, and misrecognition of discriminative words.


Embodiment Construction

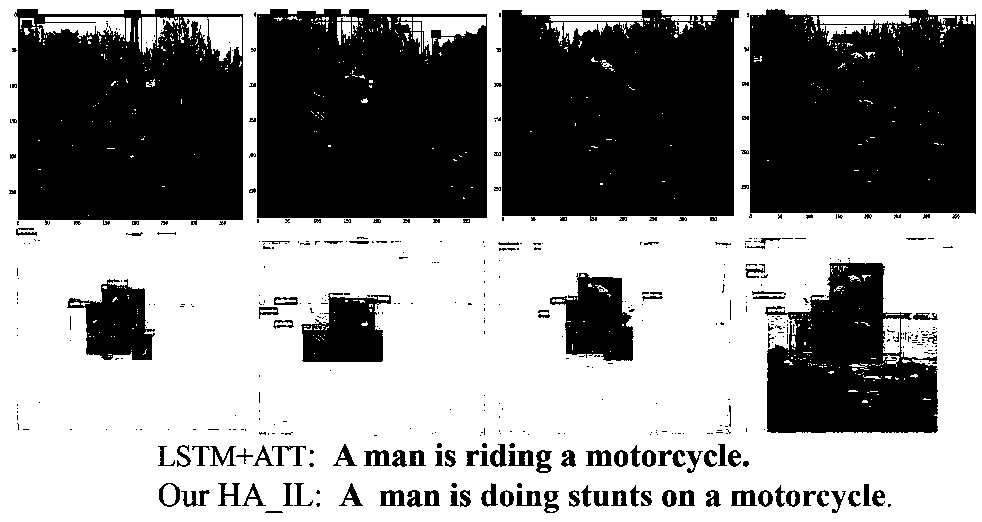

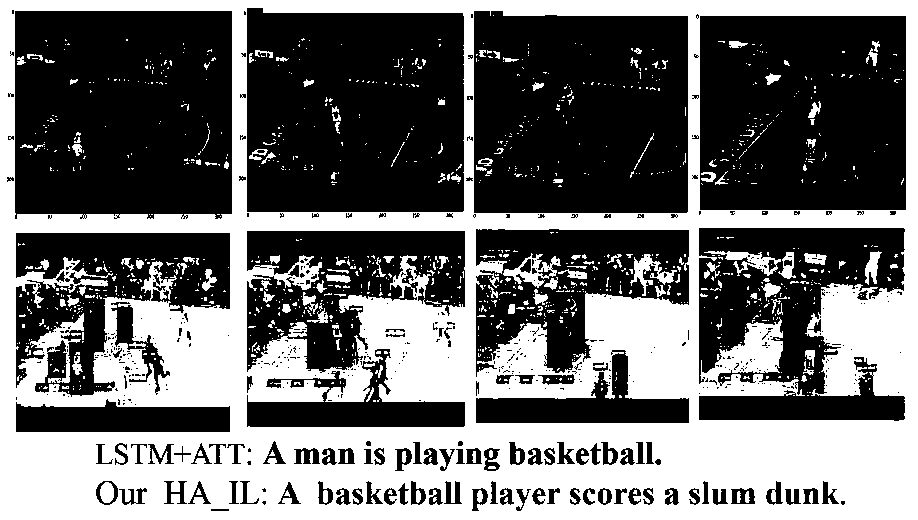

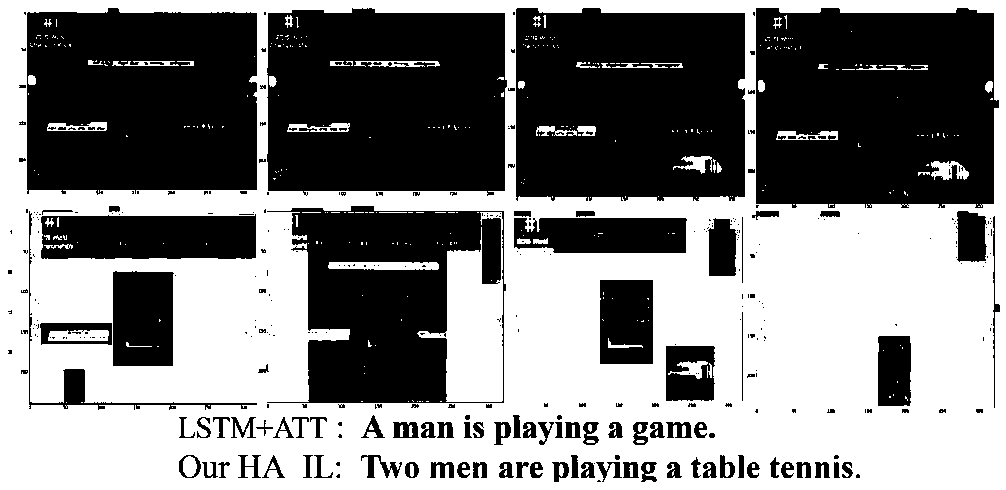

[0074] The purpose of the present invention is to overcome two problems in the language descriptions generated by the existing video description methods described above: misrecognized semantic words and missing details. To this end, it proposes a video description method based on an information loss function. The method includes: 1) a learning strategy, called the information loss function, that overcomes the description ambiguity caused by a biased data distribution; and 2) an optimized model framework, comprising a hierarchical visual representation and a hierarchical attention mechanism, that fully exploits the potential of the information loss function.
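The passage does not give the formula for the information loss function. One common way to counter a biased word distribution is to weight the per-word cross-entropy so that rare, discriminative words (for example specific objects or actions) cost more to get wrong than frequent function words. The sketch below illustrates that idea only. The inverse-log-frequency weighting, the `smoothing` parameter, and the helper names `word_weights` and `weighted_nll` are hypothetical assumptions, not the patented loss.

```python
import math
from collections import Counter


def word_weights(captions, smoothing=1.0):
    """Hypothetical informativeness weights: rarer words get larger weights.

    Each word's raw weight is 1 / log(count + 1 + smoothing); the weights are
    then rescaled so that their mean over the vocabulary is 1.
    """
    counts = Counter(w for cap in captions for w in cap)
    raw = {w: 1.0 / math.log(c + 1.0 + smoothing) for w, c in counts.items()}
    mean = sum(raw.values()) / len(raw)
    return {w: v / mean for w, v in raw.items()}


def weighted_nll(step_probs, target, weights, default_weight=1.0):
    """Weighted negative log-likelihood of one caption, averaged per word.

    step_probs: one dict per decoding step mapping word -> predicted probability.
    target: the ground-truth words, same length as step_probs.
    Words missing from `weights` use `default_weight`.
    """
    loss = 0.0
    for probs, word in zip(step_probs, target):
        p = max(probs.get(word, 0.0), 1e-12)  # clamp to avoid log(0)
        loss += -weights.get(word, default_weight) * math.log(p)
    return loss / len(target)
```

With this weighting, predicting a rare word such as "onions" with probability 0.1 is penalized more than predicting the frequent word "a" with the same probability, which pushes the model away from generic, ambiguous captions.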

[0075] Specifically, as shown in Figure 5, the present invention discloses a video description method based on an information loss function, which includes:

[0076] Step 1: obtain the training video and input it to the target detection network, the convolutional neural network and the action recognition network res...
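Step 1 feeds the video to three networks, which suggests three feature streams (object-level, frame-level and action-level) for the hierarchical visual representation. The description is truncated before the attention mechanism is specified, so the following is only a minimal two-level attention sketch under stated assumptions: plain dot-product scoring with the decoder state as the query, all features already projected to the query's dimension, and hypothetical stream names `object`, `frame` and `action`. The first level attends within each stream; the second attends across the per-stream context vectors.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(query, features):
    """Dot-product attention: returns (weighted sum of features, weights)."""
    weights = softmax([dot(query, f) for f in features])
    dim = len(features[0])
    context = [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]
    return context, weights


def hierarchical_attention(query, streams):
    """Hypothetical two-level attention over several feature streams.

    streams: dict mapping a stream name to a list of feature vectors, each with
    the same dimension as `query`.
    Level 1 summarizes each stream into one context vector; level 2 weighs the
    stream contexts against each other and fuses them.
    """
    names = list(streams)
    contexts = [attend(query, streams[n])[0] for n in names]
    fused, stream_weights = attend(query, contexts)
    return fused, dict(zip(names, stream_weights))
```

For example, with `query = [1.0, 0.0]` the stream whose features align best with the query receives the largest second-level weight. A real model would normally add learned projections before scoring; they are omitted here to keep the sketch self-contained.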
