Multi-unmanned aerial vehicle path collaborative planning method and device based on hierarchical reinforcement learning

Multi-UAV reinforcement learning technology, applied in the field of aircraft


Embodiment Construction

[0055] To make the purpose, technical solution, and advantages of the present invention clearer, a clear and complete description is given below in conjunction with the schematic structural diagram of the device and the detailed steps of the algorithm.

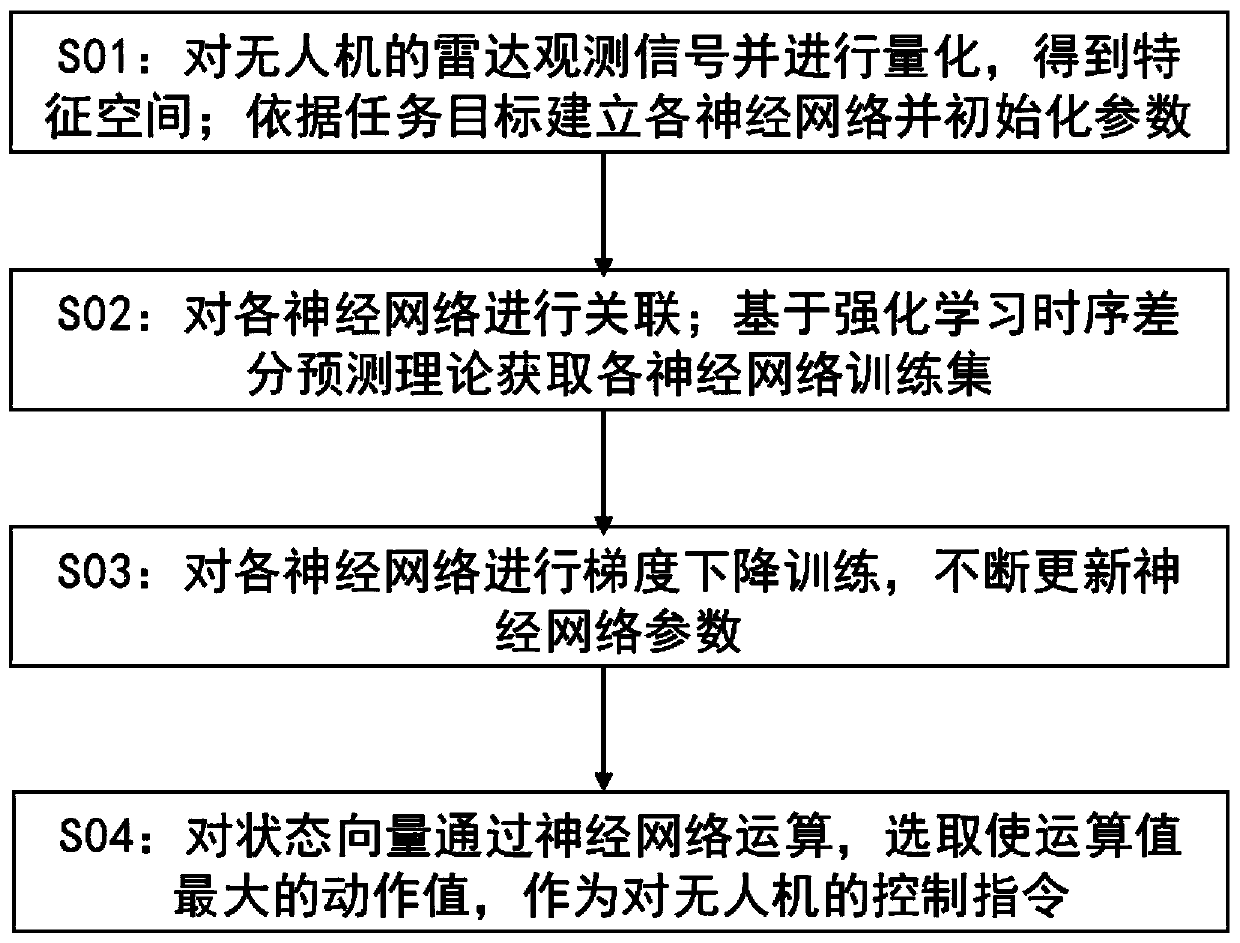

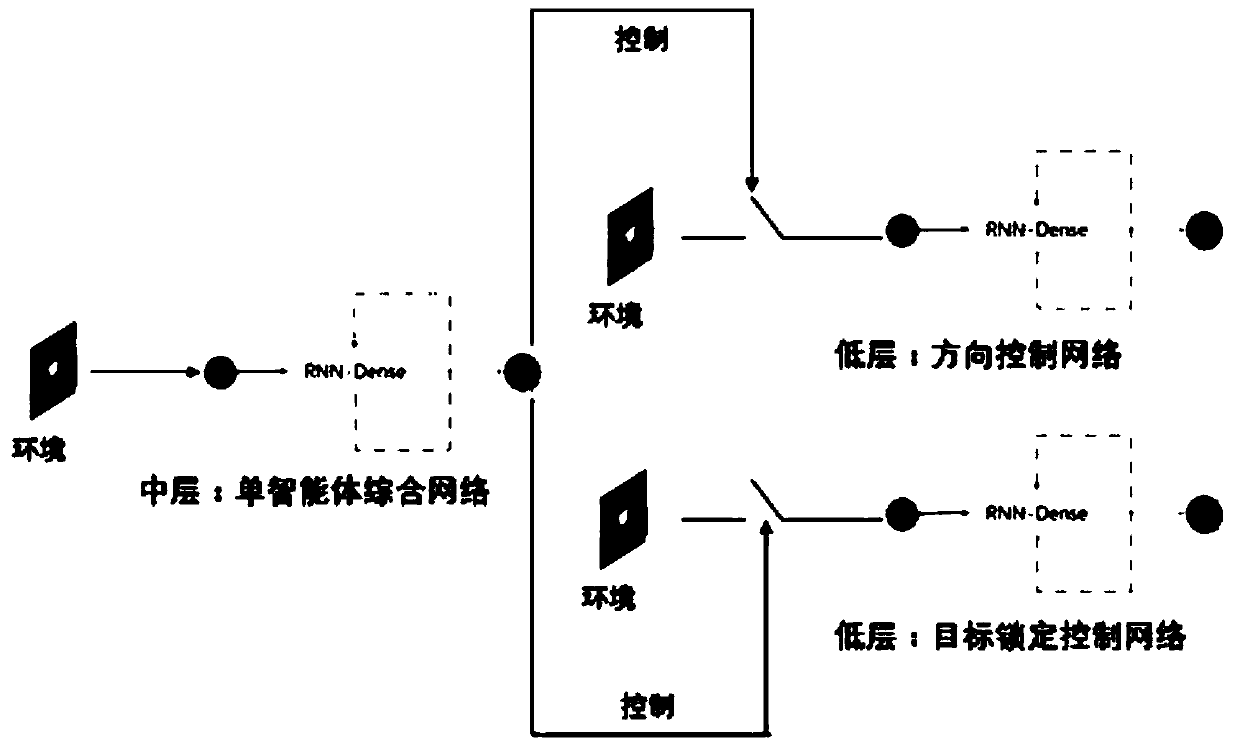

[0056] The present invention provides a cooperative path planning method for multiple UAVs in the air, based on hierarchical reinforcement learning. The problem considered is twofold: each single UAV should find the shortest and safest path, and the group of UAVs should satisfy certain constraints, which are generally set according to the needs of the actual task. For example, logistics robots keep flying in the same column as much as possible while delivering a large batch of goods to the same distribution point.
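The quantities named in the paragraph above (path length, safety, and a formation constraint such as flying in one column) can be made concrete with a small sketch. This is only an illustration under stated assumptions: the function names `path_length`, `min_clearance`, and `column_spread`, the use of point obstacles, and the idea of measuring a column by coordinate spread are all hypothetical and are not taken from the patent text.

```python
import math

def path_length(path):
    """Total Euclidean length of a path given as a list of waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))

def min_clearance(path, obstacles):
    """Smallest distance from any waypoint to any point obstacle (assumed safety proxy)."""
    return min(math.dist(p, o) for p in path for o in obstacles)

def column_spread(positions, axis=0):
    """Spread of the UAV positions along one axis; 0 means a perfect column (assumed measure)."""
    coords = [p[axis] for p in positions]
    return max(coords) - min(coords)

# Hypothetical example values, chosen only to exercise the functions.
path = [(0, 0), (3, 4), (3, 8)]
length = path_length(path)                       # 5 + 4
clearance = min_clearance(path, [(3, 6)])        # closest waypoint is 2 away
spread = column_spread([(1, 0), (1, 5), (2, 9)]) # x-coordinates range from 1 to 2
```

A planner could, for instance, reward short `length`, large `clearance`, and small `spread`; how the patent actually weighs these terms is not stated in this excerpt.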

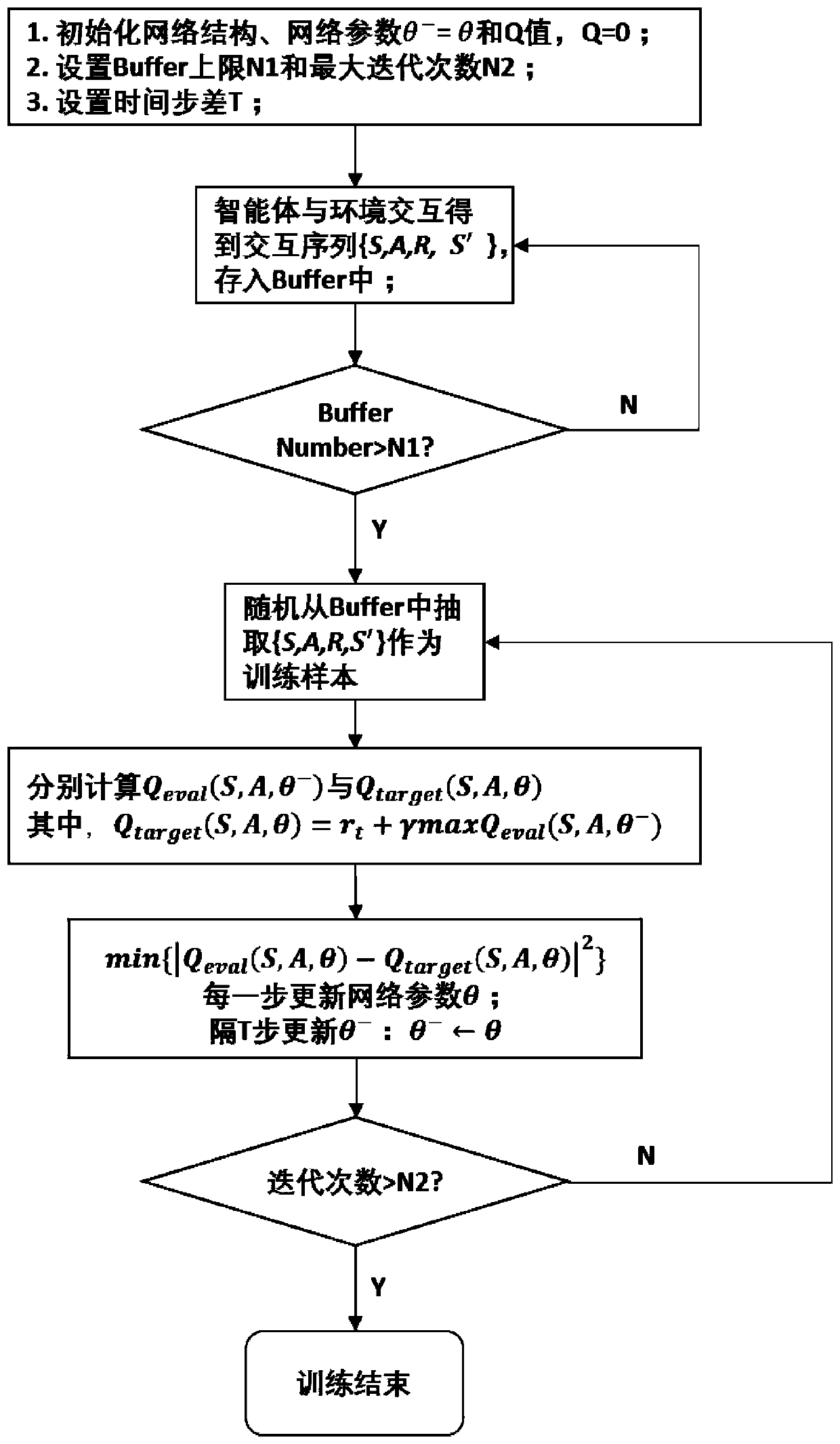

[0057] To eliminate the "curse of dimensionality" problem in the classical Q-learning reinforcement learning method, a neural network is used to store the calculation parameters, which improves real-time performance, and the...
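To illustrate why replacing a Q-table with a neural network helps, the following minimal sketch compares the number of entries in a joint tabular Q-function with the number of parameters in a small network, and performs temporal-difference updates on that network. Everything here is an assumption made for illustration: the class `TinyQNetwork`, the helper `joint_q_table_entries`, the layer sizes, the learning rate, and the example state are hypothetical and do not describe the network used in the patent.

```python
import math
import random

def joint_q_table_entries(cells_per_uav, actions_per_uav, n_uavs):
    """Entries in a tabular Q-function over the joint state-action space (assumed layout)."""
    return (cells_per_uav * actions_per_uav) ** n_uavs

class TinyQNetwork:
    """One-hidden-layer network mapping a state vector to one Q-value per action."""

    def __init__(self, state_dim, n_actions, hidden=8, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(state_dim)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.uniform(-0.1, 0.1) for _ in range(n_actions)] for _ in range(hidden)]
        self.b2 = [0.0] * n_actions

    def parameter_count(self):
        return (len(self.w1) * len(self.b1) + len(self.b1)
                + len(self.w2) * len(self.b2) + len(self.b2))

    def _hidden(self, state):
        return [math.tanh(sum(s * self.w1[i][j] for i, s in enumerate(state)) + self.b1[j])
                for j in range(len(self.b1))]

    def q_values(self, state):
        h = self._hidden(state)
        return [sum(h[j] * self.w2[j][a] for j in range(len(h))) + self.b2[a]
                for a in range(len(self.b2))]

    def td_update(self, state, action, reward, next_state, done, gamma=0.9, lr=0.05):
        """One gradient step on 0.5 * (Q(s, a) - target)^2; returns the TD error."""
        h = self._hidden(state)
        q_sa = sum(h[j] * self.w2[j][action] for j in range(len(h))) + self.b2[action]
        target = reward if done else reward + gamma * max(self.q_values(next_state))
        err = q_sa - target
        for j in range(len(h)):
            # Backpropagate through tanh before the output weight is changed.
            dh = err * self.w2[j][action] * (1.0 - h[j] ** 2)
            self.w2[j][action] -= lr * err * h[j]
            for i, s in enumerate(state):
                self.w1[i][j] -= lr * dh * s
            self.b1[j] -= lr * dh
        self.b2[action] -= lr * err
        return err

# Hypothetical sizes: 100 grid cells and 5 actions per UAV, 3 UAVs.
table_size = joint_q_table_entries(100, 5, 3)
net = TinyQNetwork(state_dim=4, n_actions=5)
state = [0.2, 0.4, 0.0, 1.0]
# Repeat one terminal transition with reward 1.0 to watch the TD error shrink.
errors = [net.td_update(state, 2, 1.0, state, done=True) for _ in range(200)]
```

Under these assumed sizes the joint table grows exponentially with the number of UAVs, while the network's parameter count depends only on its layer widths, so lookup cost stays fixed as the state space grows.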
