Coarse-to-fine video target behavior identification method

A behavior recognition method, applied in character and pattern recognition, instruments, biological neural network models, and related fields, that addresses problems such as increased storage consumption and computation.


Embodiment Construction

[0022] The present invention is further described below with reference to the accompanying drawings. The following examples will help those skilled in the art understand the invention further, but they do not limit it in any form. Those skilled in the art may make modifications and improvements without departing from the concept of the invention, and all of these fall within its scope of protection.

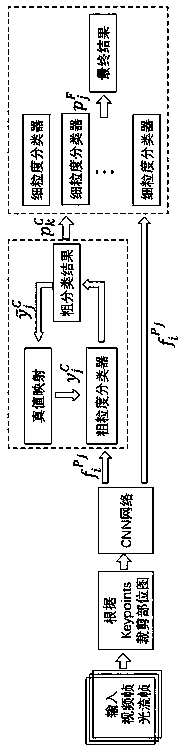

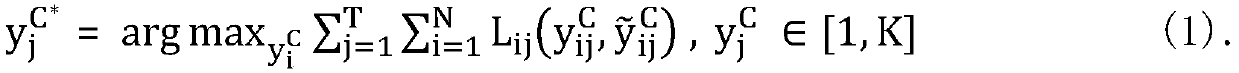

[0023] A coarse-to-fine video target behavior recognition method clusters similar behavior categories into the same coarse-grained category and trains different fine-grained classifiers, so that each fine-grained classifier can recognize the distinguishing properties between similar fine-grained behaviors. The feature representations of the fine-grained classifiers are weighted with body part information, and the feature representations of these ...
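The coarse-to-fine routing described in [0023] can be illustrated with a minimal sketch. It is not the patented implementation: the label names, the linear scoring, the uniform pooling used for the coarse stage, and every function name (`weight_parts`, `linear_scores`, `predict`) are hypothetical and chosen only for illustration. The sketch assumes one feature vector per body part, a coarse classifier that picks a coarse-grained category, and a per-category fine-grained classifier whose input is a body-part-weighted sum of those features.

```python
from typing import Dict, List, Sequence, Tuple


def weight_parts(part_features: Sequence[Sequence[float]],
                 part_weights: Sequence[float]) -> List[float]:
    """Fuse per-body-part feature vectors into one vector by a weighted sum."""
    fused = [0.0] * len(part_features[0])
    for feat, w in zip(part_features, part_weights):
        for i, value in enumerate(feat):
            fused[i] += w * value
    return fused


def linear_scores(model: Dict[str, Sequence[float]],
                  x: Sequence[float]) -> Dict[str, float]:
    """Score each label with a dot product (a stand-in for a real classifier)."""
    return {label: sum(wi * xi for wi, xi in zip(w, x))
            for label, w in model.items()}


def predict(part_features: Sequence[Sequence[float]],
            coarse_model: Dict[str, Sequence[float]],
            fine_models: Dict[str, Dict[str, Sequence[float]]],
            part_weights: Dict[str, Sequence[float]]) -> Tuple[str, str]:
    """Pick a coarse category first, then a fine label inside that category."""
    # Coarse stage: uniform pooling over body parts (an assumption).
    n_parts = len(part_features)
    pooled = weight_parts(part_features, [1.0 / n_parts] * n_parts)
    coarse_scores = linear_scores(coarse_model, pooled)
    coarse = max(coarse_scores, key=coarse_scores.get)

    # Fine stage: re-weight body parts for the chosen coarse category.
    weighted = weight_parts(part_features, part_weights[coarse])
    fine_scores = linear_scores(fine_models[coarse], weighted)
    fine = max(fine_scores, key=fine_scores.get)
    return coarse, fine


# Toy data: two body parts (arms, legs), two feature dimensions each.
part_features = [[0.2, 0.1], [0.1, 0.9]]
coarse_model = {"locomotion": [0.0, 1.0], "racket": [1.0, 0.0]}
fine_models = {
    "locomotion": {"walk": [1.0, 0.5], "run": [0.0, 1.0]},
    "racket": {"tennis": [1.0, 0.0], "badminton": [0.5, 0.5]},
}
part_weights = {"locomotion": [0.2, 0.8], "racket": [0.8, 0.2]}

result = predict(part_features, coarse_model, fine_models, part_weights)
print(result)
```

In this sketch each fine-grained classifier only has to separate labels within its own coarse category, which is the motivation the paragraph gives for training different fine-grained classifiers.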

