Guided hair extraction method based on motion similarity

An extraction method based on motion similarity, applied in the field of hair dynamic simulation and editing. It addresses problems such as an unintuitive modeling process and the difficulty of directly obtaining hair motion results, and achieves fast editing speed and high-fidelity results.


Embodiment Construction

[0054] The present invention is described in further detail below with reference to the accompanying drawings and a specific embodiment:

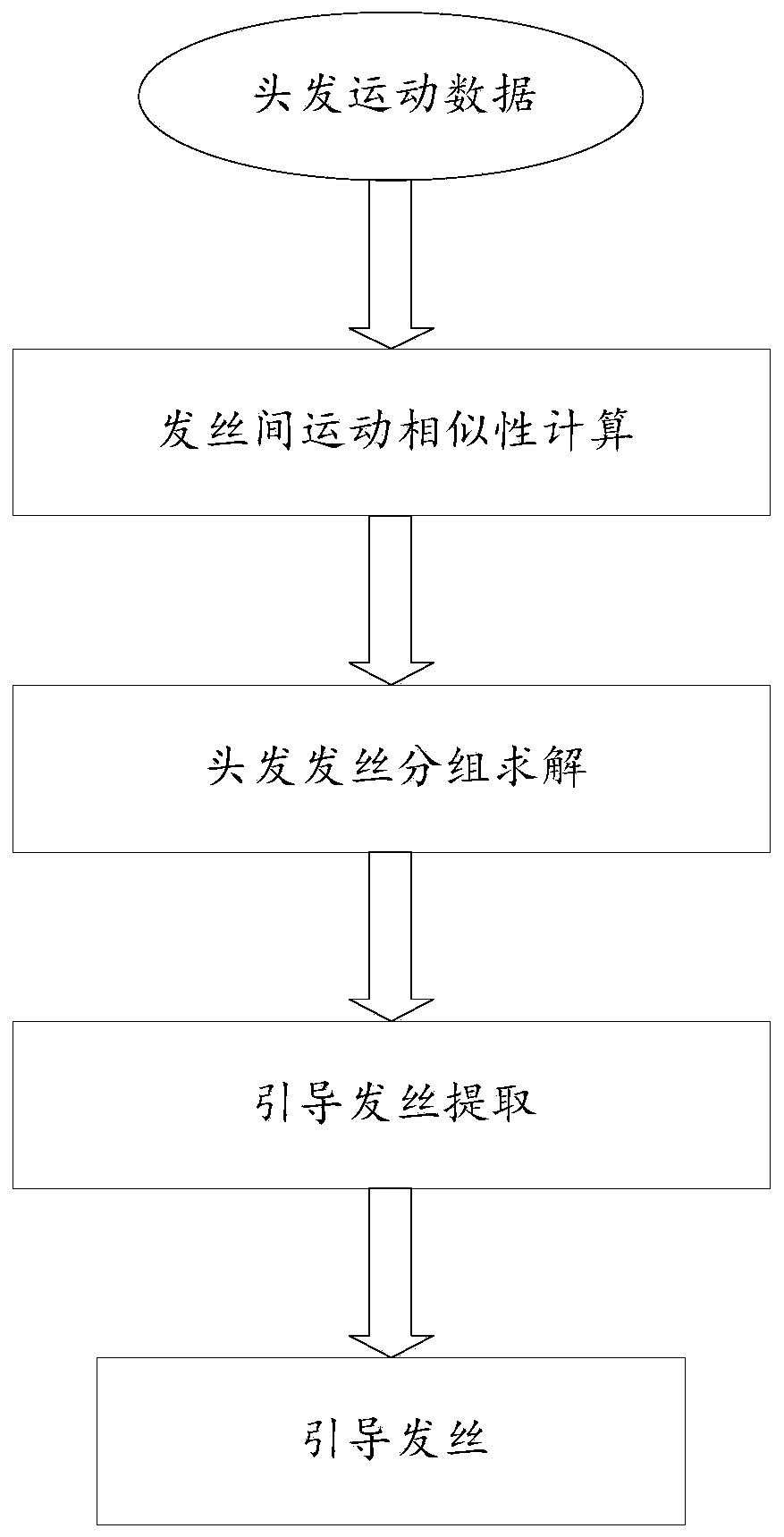

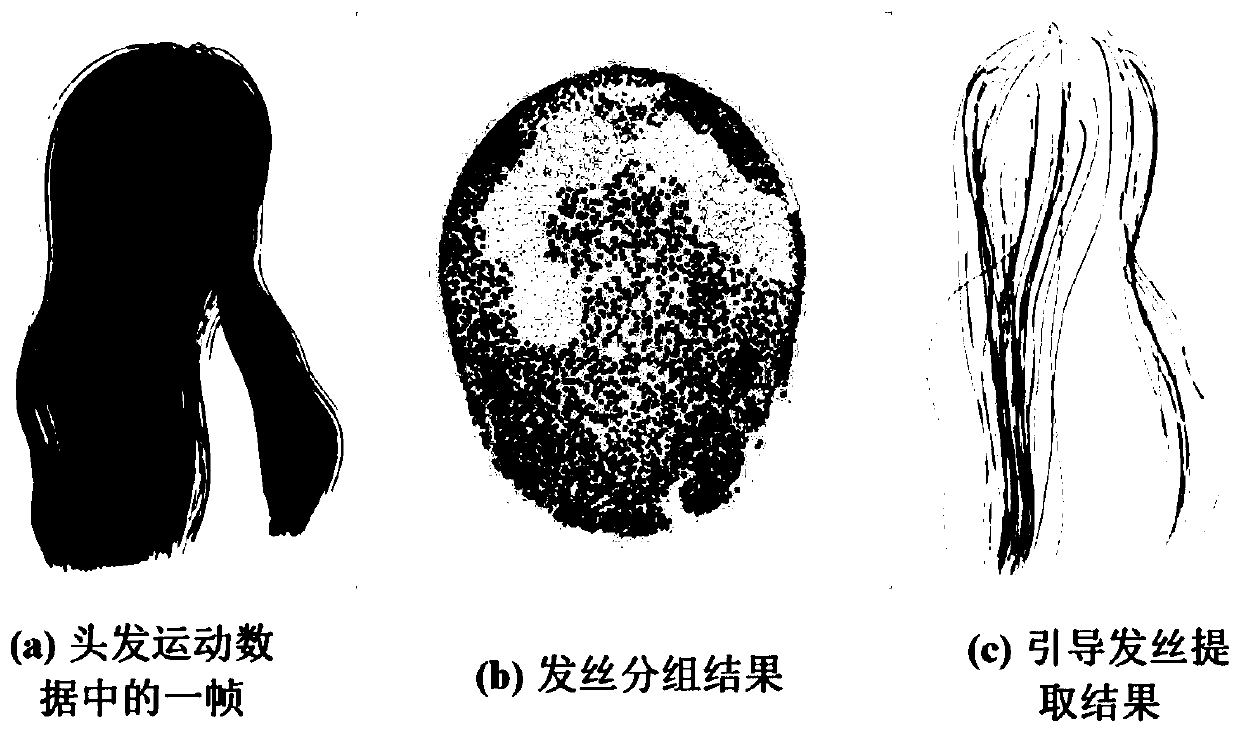

[0055] As shown in Figure 1, a guided hair extraction method based on motion similarity includes the following steps:

[0056] Step 1: Input the hair motion data and build a graph model that reflects the local motion similarity between hair strands.
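The excerpt does not specify how the input hair motion data is stored. The following is a minimal sketch, assuming each frame stores every strand as an ordered list of particle positions and that every particle receives one global index, so that a graph can later be built over particles rather than strands. The class `HairMotion` and its methods are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HairMotion:
    # Hypothetical layout: frames[f][s][k] is particle k of strand s at frame f.
    frames: List[List[List[Vec3]]]
    # index[i] = (strand, particle-on-strand) for global particle id i.
    index: List[Tuple[int, int]] = field(init=False)

    def __post_init__(self) -> None:
        if not self.frames:
            raise ValueError("at least one frame is required")
        layout = [len(strand) for strand in self.frames[0]]
        # Every frame must contain the same strands with the same particle counts.
        for f, frame in enumerate(self.frames):
            if [len(strand) for strand in frame] != layout:
                raise ValueError(f"frame {f} has a different strand layout")
        self.index = [(s, k) for s, n in enumerate(layout) for k in range(n)]

    @property
    def num_particles(self) -> int:
        return len(self.index)

    @property
    def num_frames(self) -> int:
        return len(self.frames)

    def position(self, i: int, f: int) -> Vec3:
        """Position of global particle i at frame f."""
        s, k = self.index[i]
        return self.frames[f][s][k]

    def strand_of(self, i: int) -> int:
        """Strand that global particle i belongs to."""
        return self.index[i][0]
```

Flattening strands into one particle index is what lets later steps treat all hair particles uniformly as graph nodes while still recovering which strand each particle came from.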

[0057] This step consists of two processes:

[0058] The first establishes the basic topology of the graph; the second defines weights that measure motion similarity.

[0059] The basic topology of the graph is established as follows:

[0060] I. Build a graph for all hairs at the level of hair particles rather than at the level of hair strands. Each node in the graph represents a hair particle, and the edges of the graph are initialized using the proximity between hair particles.
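The excerpt states only that edges are initialized from the proximity between hair particles. A minimal sketch follows, assuming a fixed-radius criterion evaluated on one reference frame of particle positions; the function name `init_proximity_edges` and the `radius` parameter are hypothetical. Particles are bucketed into a uniform grid with cell size equal to `radius`, so only the 27 neighbouring cells need to be checked for each particle.

```python
import math
from itertools import product
from typing import Dict, List, Set, Tuple

Vec3 = Tuple[float, float, float]


def init_proximity_edges(positions: List[Vec3], radius: float) -> Set[Tuple[int, int]]:
    """Connect every pair of particles closer than `radius` (hypothetical criterion).

    positions[i] is particle i in a reference frame. Each undirected edge
    is stored once as (i, j) with i < j.
    """
    if radius <= 0:
        raise ValueError("radius must be positive")
    # Uniform grid: two particles closer than `radius` lie in the same or adjacent cells.
    grid: Dict[Tuple[int, ...], List[int]] = {}
    for i, p in enumerate(positions):
        key = tuple(math.floor(c / radius) for c in p)
        grid.setdefault(key, []).append(i)

    edges: Set[Tuple[int, int]] = set()
    for cell, members in grid.items():
        for offset in product((-1, 0, 1), repeat=3):
            neighbour = tuple(c + d for c, d in zip(cell, offset))
            for j in grid.get(neighbour, []):
                for i in members:
                    # i < j keeps a single copy of each undirected edge.
                    if i < j and math.dist(positions[i], positions[j]) < radius:
                        edges.add((i, j))
    return edges
```

For example, `init_proximity_edges([(0, 0, 0), (0, -1, 0), (0.5, 0, 0), (5, 5, 5)], 0.8)` links only particles 0 and 2. A k-nearest-neighbour criterion would be an equally plausible reading of "proximity"; the radius rule is simply easier to illustrate.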

[0061] II. Let $p_i(f)$ denote the position of the hair parti...
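The excerpt breaks off before the weight that measures motion similarity is defined, so the following is only a hypothetical stand-in, not the method's formula. It assumes that two connected particles move similarly when their relative offset $p_j(f) - p_i(f)$ stays nearly constant across frames, and maps the mean squared deviation of that offset to $(0, 1]$ with a Gaussian kernel of assumed width `sigma`. The function name `motion_similarity_weight` is hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def motion_similarity_weight(traj_i: Sequence[Vec3], traj_j: Sequence[Vec3],
                             sigma: float) -> float:
    """Hypothetical edge weight: 1.0 when particles i and j move in lockstep.

    traj_i[f] plays the role of p_i(f). The offset p_j(f) - p_i(f) is compared
    with its mean over all frames; larger deviation gives a smaller weight.
    """
    if not traj_i or len(traj_i) != len(traj_j):
        raise ValueError("trajectories must be non-empty and of equal length")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    n = len(traj_i)
    # Relative offset between the two particles at every frame.
    offsets = [tuple(b - a for a, b in zip(pi, pj)) for pi, pj in zip(traj_i, traj_j)]
    mean = tuple(sum(o[c] for o in offsets) / n for c in range(3))
    # Mean squared deviation of the offset from its temporal average.
    msd = sum(math.dist(o, mean) ** 2 for o in offsets) / n
    return math.exp(-msd / (sigma * sigma))
```

Because it compares raw offsets, this measure treats only translational co-movement as similar; two particles rotating together would receive a lower weight. A rotation-tolerant alternative would compare the distance $\lVert p_j(f) - p_i(f) \rVert$ across frames instead.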
