Action feature representation method fusing rotation quantity

An action feature representation method that fuses rotation quantities, applied in the field of action recognition. It addresses mutual interference between similar actions, low recognition accuracy, and slow recognition speed, and achieves the effect of avoiding such interference and improving recognition accuracy.

Embodiment Construction

[0037] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0038] An action feature representation method that fuses rotation quantities is carried out according to the following steps:

[0039] Step 1: Action feature extraction.

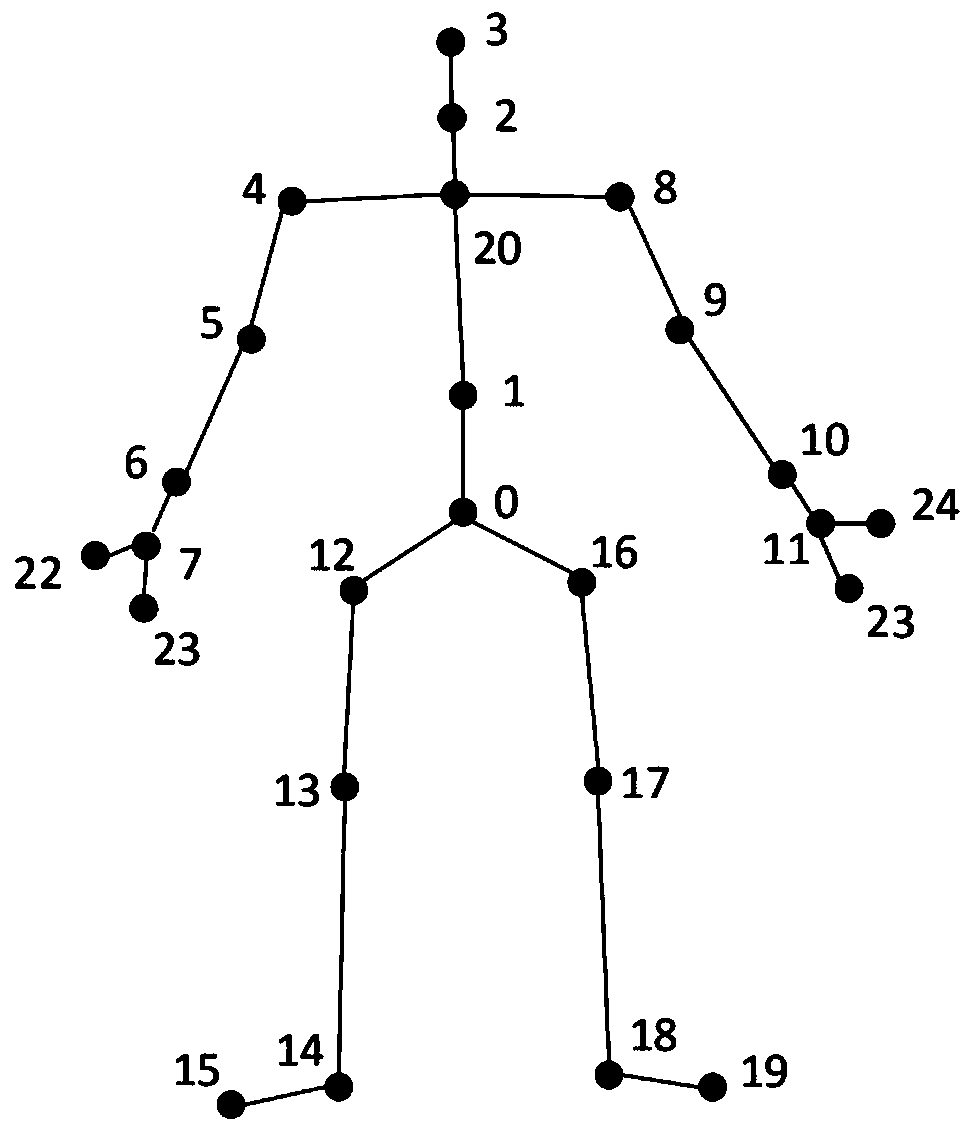

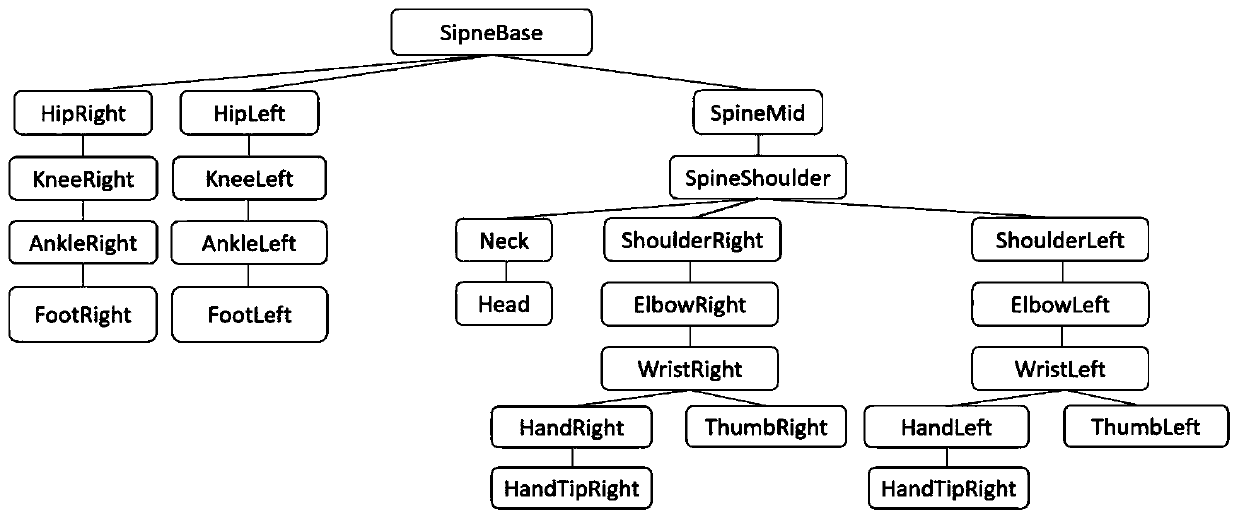

[0040] Use the Microsoft Kinect 2.0 infrared depth sensor to collect human skeleton information. This information includes the three-dimensional spatial coordinates of multiple skeleton points of the human body and the topological structure of the human body with SpineBase as the root node.

[0041] Step 2: Overall feature representation of the action.
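The skeleton data described in Step 1 can be pictured as a tree of named joints rooted at SpineBase, with one 3D coordinate per joint. The following is a minimal sketch under stated assumptions, not the patent's implementation: the joint names are an illustrative subset of the Kinect 2.0 joint set (torso, left arm, left leg), and `PARENT`, `path_to_root`, and `bone_vector` are hypothetical names introduced here.

```python
from typing import Dict, List, Optional, Tuple

Point3D = Tuple[float, float, float]

# Parent of each joint; SpineBase is the root and has no parent.
# Assumption: only a subset of the joints is listed, for illustration.
PARENT: Dict[str, Optional[str]] = {
    "SpineBase": None,
    "SpineMid": "SpineBase",
    "SpineShoulder": "SpineMid",
    "Neck": "SpineShoulder",
    "Head": "Neck",
    "ShoulderLeft": "SpineShoulder",
    "ElbowLeft": "ShoulderLeft",
    "WristLeft": "ElbowLeft",
    "HipLeft": "SpineBase",
    "KneeLeft": "HipLeft",
    "AnkleLeft": "KneeLeft",
}


def path_to_root(joint: str) -> List[str]:
    """Return the chain of joints from `joint` up to the root (SpineBase)."""
    path = [joint]
    parent = PARENT[joint]
    while parent is not None:
        path.append(parent)
        parent = PARENT[parent]
    return path


def bone_vector(frame: Dict[str, Point3D], joint: str) -> Point3D:
    """Return the vector from the parent joint to `joint` in one frame."""
    parent = PARENT[joint]
    if parent is None:
        raise ValueError("the root joint has no parent bone")
    (x, y, z), (px, py, pz) = frame[joint], frame[parent]
    return (x - px, y - py, z - pz)
```

A frame is then a dictionary mapping joint names to coordinates, for example `{"SpineBase": (0.0, 0.0, 2.0), "SpineMid": (0.0, 0.3, 2.0)}`, and the topology lets any joint be traced back to SpineBase.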

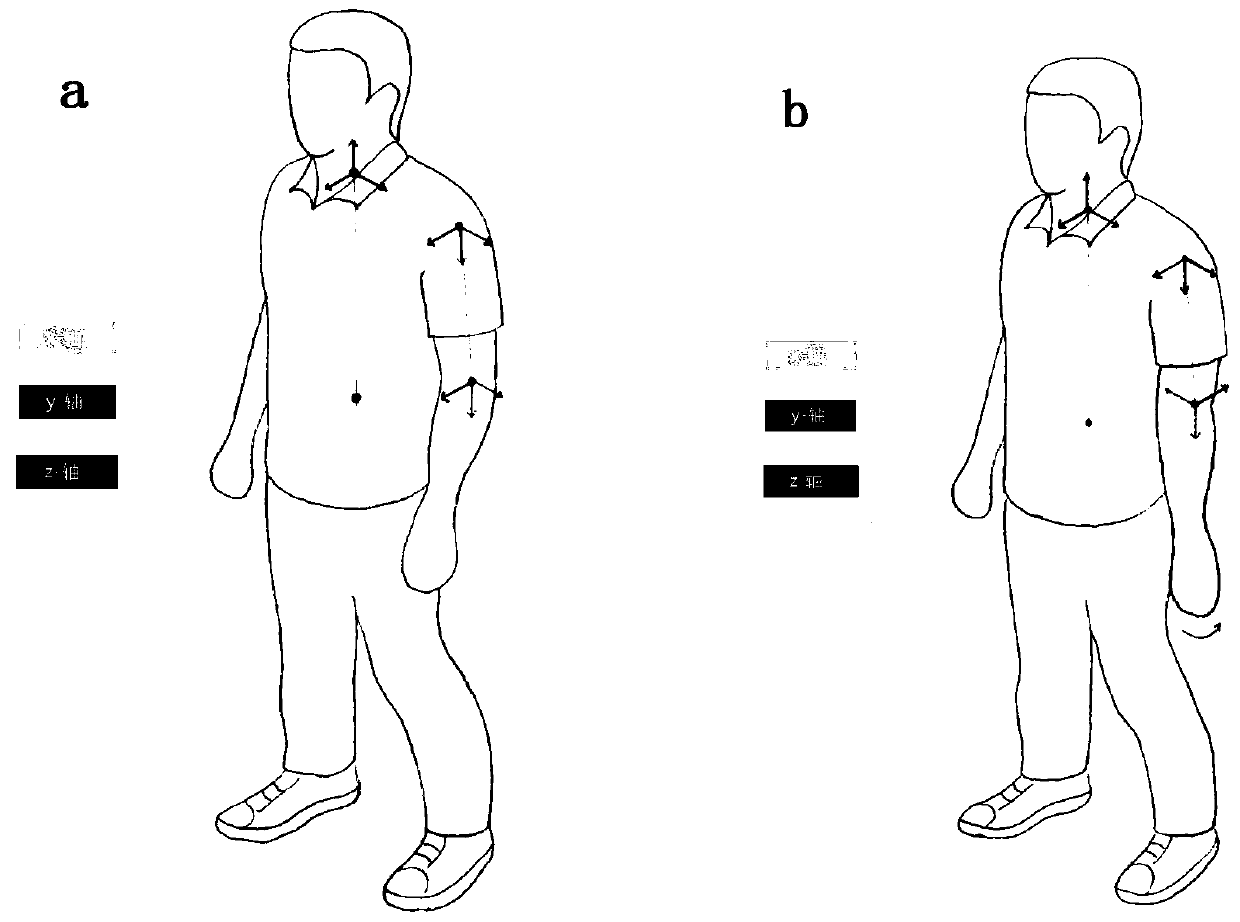

[0042] From the coordinates of the human skeleton joint points obtained in Step 1, a group of human body pose matrices is computed. The human body pose matrix is given by:

[0043] $$R_F = (R_{i,j})_{M \times M} \tag{1}$$

[0044] where $R_F$ is the human body pose matrix...
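The M×M layout of formula (1) can be sketched as a matrix with one entry per ordered pair of joints. The text above is truncated before the entry is defined, so this sketch deliberately leaves the entry computation as a caller-supplied function. The names `pairwise_matrix` and `difference` are hypothetical, and the coordinate difference used here is only a placeholder, not the patent's definition of the entry.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

Point3D = Tuple[float, float, float]
T = TypeVar("T")


def pairwise_matrix(
    joints: Sequence[Point3D],
    entry: Callable[[Point3D, Point3D], T],
) -> List[List[T]]:
    """Build an M x M matrix whose (i, j) entry is entry(joints[i], joints[j])."""
    return [[entry(pi, pj) for pj in joints] for pi in joints]


def difference(pi: Point3D, pj: Point3D) -> Point3D:
    """Placeholder entry (an assumption): vector from joint i to joint j."""
    return (pj[0] - pi[0], pj[1] - pi[1], pj[2] - pi[2])
```

Calling `pairwise_matrix(joints, difference)` on M joints returns M rows of M entries each. Swapping in a different `entry` function changes what each cell holds without changing the matrix layout.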
