Method for batch normalization layer pruning in deep neural networks

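A technique for deep neural networks and models, applied in the field of deep neural networks. It addresses problems such as heavy consumption of computing resources, the need for massive computing power, and implementation difficulty, and achieves reduced model size, preserved accuracy, and lower hardware requirements.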

Embodiment Construction

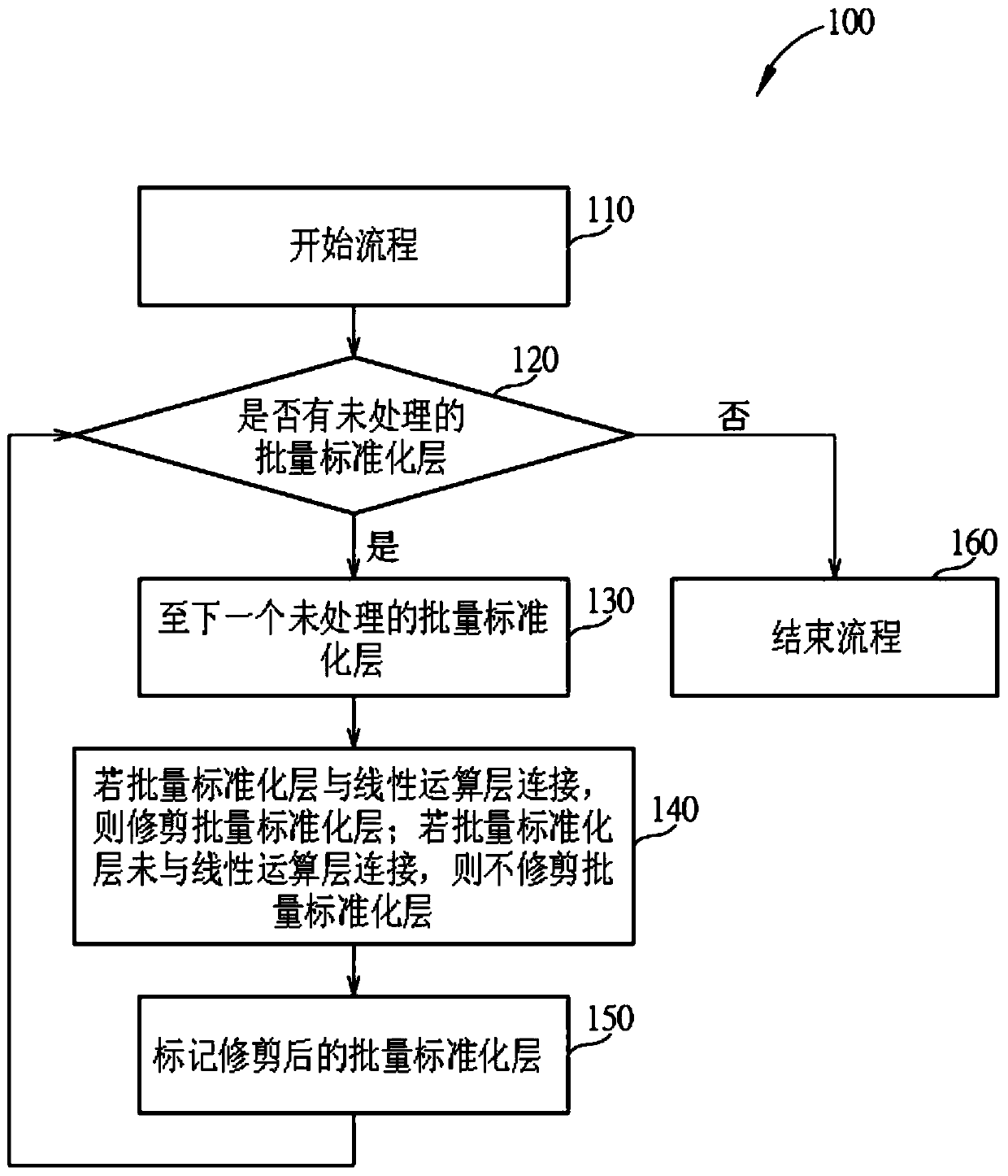

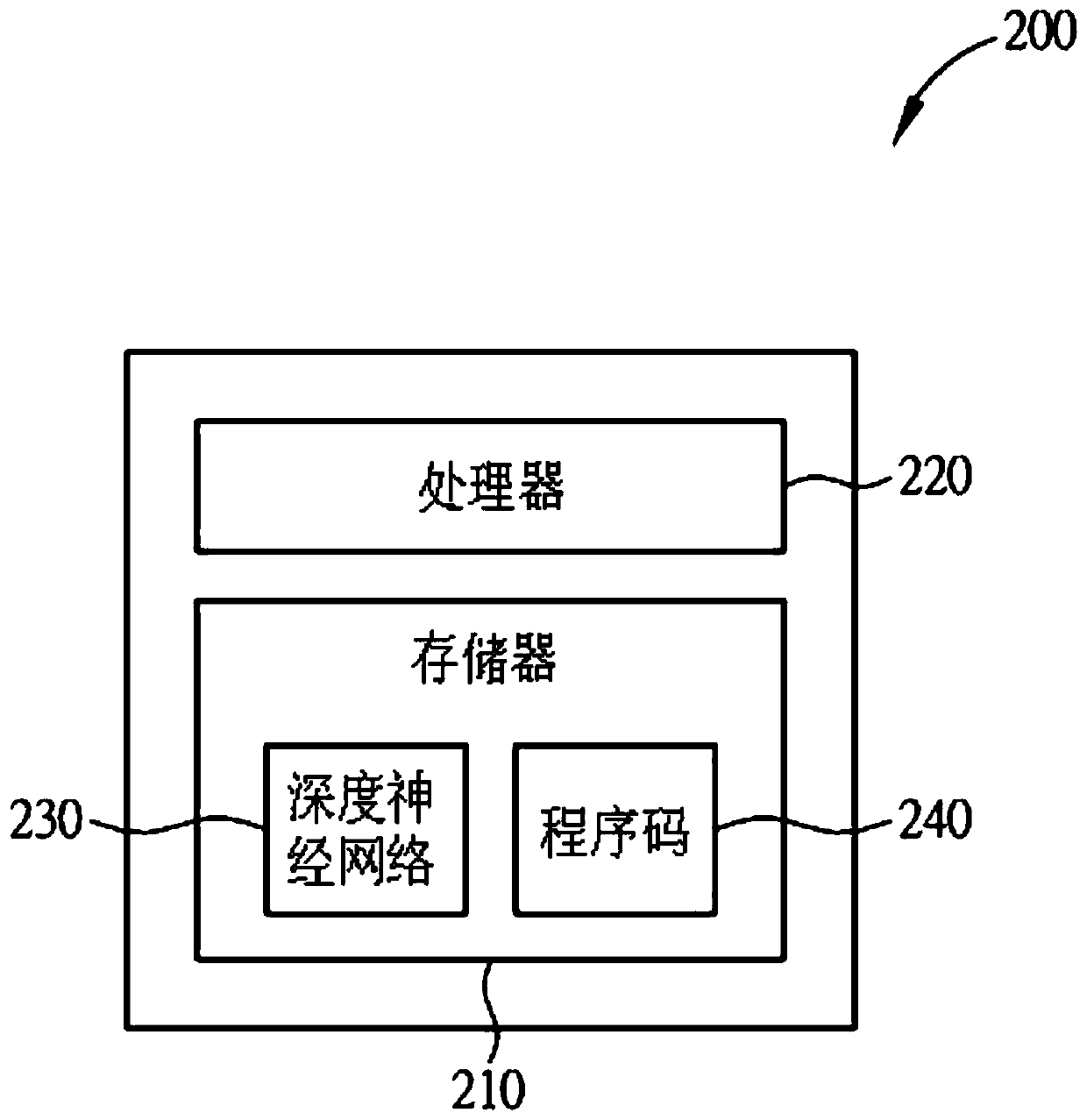

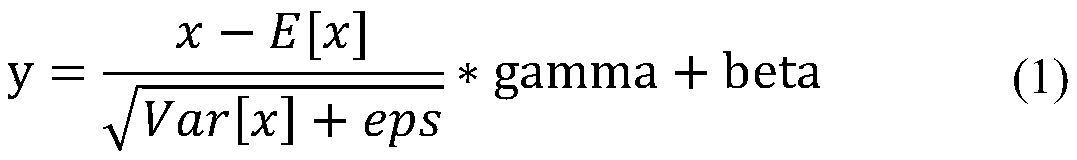

[0015] To address the problem of implementing deep neural networks trained with batch normalization layers on devices with limited computing resources, we propose a new batch normalization layer pruning technique. It losslessly compresses a deep neural network model by pruning any batch normalization layer that is connected to a linear operation layer and combining it with that layer. Linear operation layers include, but are not limited to, convolution layers, dense layers, depthwise convolution layers, and group convolution layers. In addition, the technique does not change the structure of the other layers in the neural network model, so it can be applied directly on existing neural network model platforms.
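To illustrate why combining a batch normalization layer with a preceding linear operation layer can be lossless, here is a minimal sketch in plain Python for the dense-layer case. It assumes the widely used inference-time form of batch normalization, `gamma * (z - mean) / sqrt(var + eps) + beta`; the excerpt above does not give the patent's own formulas, so the function names, parameter names, and numeric values are hypothetical and chosen only for illustration.

```python
import math


def fold_batch_norm_into_dense(weight, bias, gamma, beta, mean, var, eps=1e-5):
    """Return (folded_weight, folded_bias) for a dense layer followed by BN.

    Assumption: BN uses fixed (inference-time) statistics, one set per
    output unit. weight is a list of rows, one row per output unit.
    """
    folded_weight, folded_bias = [], []
    for row, b, g, bt, m, v in zip(weight, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)          # per-output-unit BN scale
        folded_weight.append([scale * w for w in row])
        folded_bias.append(scale * (b - m) + bt)
    return folded_weight, folded_bias


def dense(x, weight, bias):
    """Dense layer: one dot product plus bias per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def batch_norm_inference(z, gamma, beta, mean, var, eps=1e-5):
    """Batch normalization with fixed statistics (inference mode)."""
    return [g * (zi - m) / math.sqrt(v + eps) + bt
            for zi, g, bt, m, v in zip(z, gamma, beta, mean, var)]


# Hypothetical parameters: 3 inputs, 2 output units.
weight = [[0.5, -1.0, 2.0], [1.5, 0.25, -0.75]]
bias = [0.1, -0.2]
gamma = [1.2, 0.8]
beta = [0.05, -0.3]
mean = [0.4, -0.1]
var = [0.9, 1.6]
x = [1.0, 2.0, -1.0]

# Original model: dense layer followed by a batch normalization layer.
reference = batch_norm_inference(dense(x, weight, bias), gamma, beta, mean, var)

# Pruned model: a single dense layer with folded parameters.
folded_weight, folded_bias = fold_batch_norm_into_dense(
    weight, bias, gamma, beta, mean, var)
pruned = dense(x, folded_weight, folded_bias)

# The two outputs should agree up to floating-point rounding.
max_abs_diff = max(abs(r - p) for r, p in zip(reference, pruned))
```

Under these assumptions the folded layer has the same shape as the original dense layer, which is consistent with the statement that the structure of the other layers is left unchanged. The same per-output-channel scaling would apply to convolution kernels, although that case is not shown here.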

[0016] Before disclosing the details of the batch normalization layer pruning technique, the main claims (but not all claims) of this patent application are summarized here.

[0017] Embodi...
