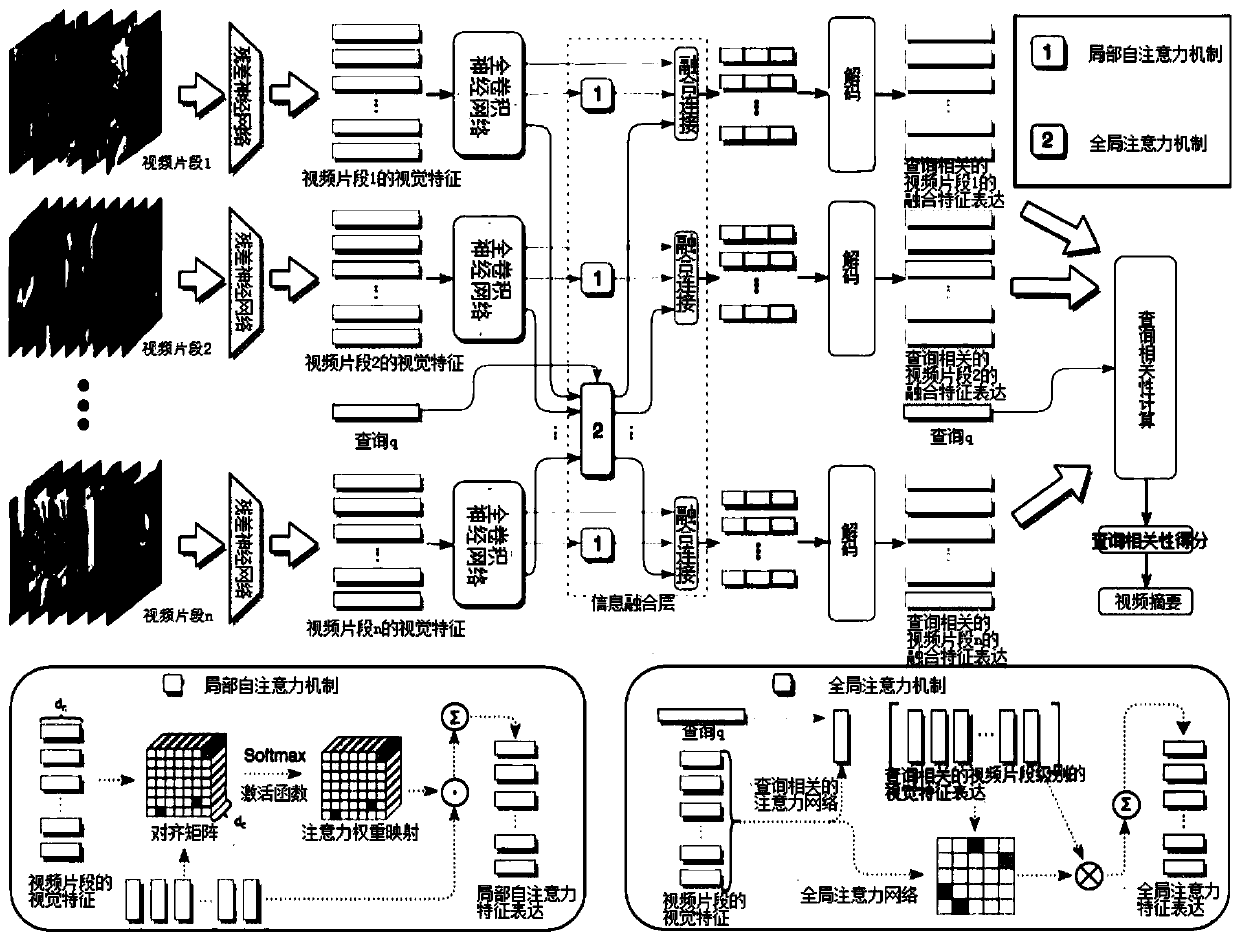

Method for generating query-oriented video summaries using a convolutional multi-layer attention network

A video summarization technique based on an attention mechanism, applicable to image communication, selective content distribution, electrical components, and related fields. It addresses long model computation times and the difficulty of handling long-range relationships in video, and it reduces the number of model parameters.


AI Technical Summary

Problems solved by technology

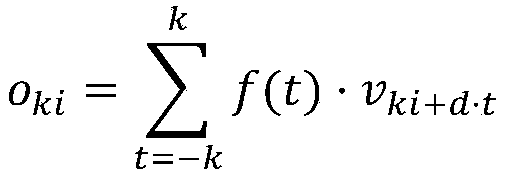

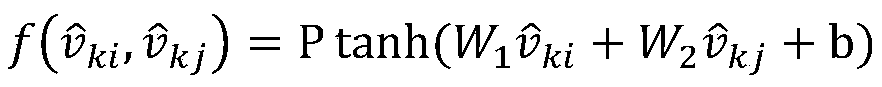


Embodiment

[0065] The invention is experimentally validated on the query-oriented video summarization dataset proposed in (Sharghi, Laurel, and Gong 2017). The dataset consists of 4 videos of different daily-life scenes, each lasting 3 to 5 hours. It provides a set of 48 concepts for user queries and contains 46 queries, each consisting of two concepts. A query falls into one of four scenarios: 1) all concepts in the query appear in the same shot; 2) all concepts in the query appear in the video but not in the same shot; 3) only some of the concepts in the query appear in the video; 4) none of the concepts in the query appear in the video. Annotations are provided at the level of video shots, with each shot labeled with several concepts. The present invention then applies the following preprocessing to the query-oriented video summarization dataset:

[0066] 1) Sample the video to 1fps, then resize all fra...
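The 1 fps sampling in step 1) can be illustrated with a minimal sketch that only computes which frame indices to keep. The function name `one_fps_indices` and its parameters are hypothetical and do not come from the patent. The subsequent resize is left out because the target size is not given in the text above.

```python
from typing import List


def one_fps_indices(num_frames: int, native_fps: float) -> List[int]:
    """Return indices of the frames nearest to t = 0 s, 1 s, 2 s, ...

    Hypothetical helper: `num_frames` and `native_fps` describe the
    original video; neither name appears in the source document.
    """
    if num_frames <= 0 or native_fps <= 0:
        return []
    duration = num_frames / native_fps  # video length in seconds
    indices: List[int] = []
    second = 0
    while second < duration:
        idx = int(round(second * native_fps))  # frame closest to this second
        if idx >= num_frames:
            break
        indices.append(idx)
        second += 1
    return indices
```

In practice the returned indices would be passed to whatever video reader is in use so that only those frames are decoded and kept.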
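The four query scenarios listed in [0065] can be made concrete with a short sketch. It assumes that each shot annotation is available as a set of concept strings and that scenario 1 means both concepts co-occur in at least one shot. The function name `query_scenario` and the concept names in the usage example are illustrative only and are not taken from the dataset.

```python
from typing import List, Set, Tuple


def query_scenario(shots: List[Set[str]], query: Tuple[str, str]) -> int:
    """Classify a two-concept query into scenarios 1 to 4.

    `shots` holds one set of concept labels per video shot (assumed format).
    """
    first, second = query
    first_seen = any(first in shot for shot in shots)
    second_seen = any(second in shot for shot in shots)
    together = any(first in shot and second in shot for shot in shots)

    if together:
        return 1  # both concepts appear in the same shot
    if first_seen and second_seen:
        return 2  # both appear in the video, never in the same shot
    if first_seen or second_seen:
        return 3  # only one of the concepts appears in the video
    return 4      # neither concept appears in the video


# Usage with made-up annotations for a three-shot video.
example_shots = [{"car", "tree"}, {"food"}, {"car"}]
print(query_scenario(example_shots, ("car", "food")))
```

The checks are ordered from the most specific case to the least specific one, so each query maps to exactly one scenario.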
