Action recognition method based on adaptive context region selection

An action recognition technique based on context regions, applied in the fields of computer vision and action recognition. It addresses problems that degrade the performance and reduce the accuracy of action recognition methods, with the aim of using context effectively, reducing risk, and improving efficiency.

Embodiment

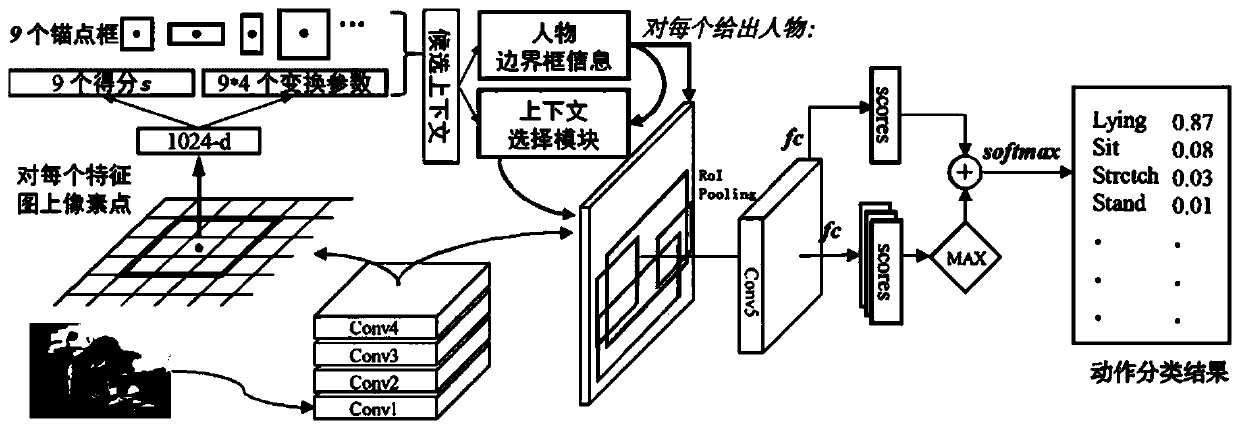

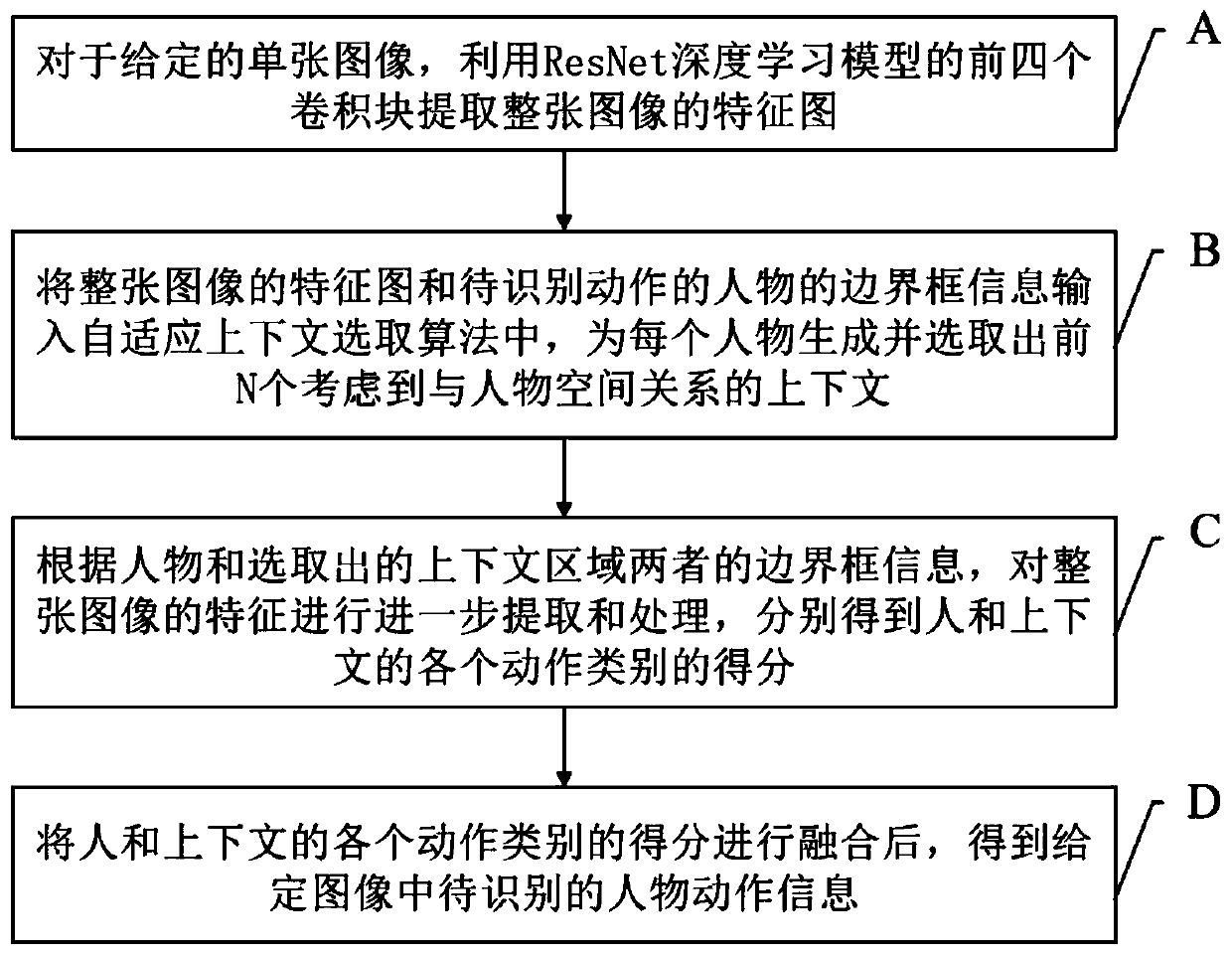

[0058] As shown in Figure 2, the present invention provides an action recognition method based on adaptive context region selection. Its main purpose is to use the spatial position of a person to adaptively select context from the candidate regions generated by the network, and to use that context to help recognize the person's action. The method mainly comprises the following four steps:

[0059] Step A: For a given single image, use the first four convolution blocks of the ResNet deep learning model to extract the feature map of the entire image;
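To give a sense of the feature map that Step A produces, the following minimal sketch computes its spatial size for a given input image. It assumes that "the first four convolution blocks" means conv1 through conv4_x of a standard ResNet (the text does not spell this out), and it uses the standard ResNet layer hyperparameters. The function names `conv_out` and `feature_map_size` are hypothetical and chosen only for illustration.

```python
def conv_out(size, kernel, stride, pad):
    """Output length of a convolution or pooling layer along one axis."""
    return (size + 2 * pad - kernel) // stride + 1


def feature_map_size(height, width):
    """Spatial size after conv1, max pool, conv2_x, conv3_x and conv4_x.

    Assumption: standard ResNet layout. Only the layers that reduce
    resolution are listed; all other layers preserve the spatial size.
    """
    # (kernel, stride, padding) of each downsampling layer
    downsampling = [
        (7, 2, 3),  # conv1
        (3, 2, 1),  # max pool before conv2_x
        (3, 2, 1),  # stride-2 convolution in the first block of conv3_x
        (3, 2, 1),  # stride-2 convolution in the first block of conv4_x
    ]
    for kernel, stride, pad in downsampling:
        height = conv_out(height, kernel, stride, pad)
        width = conv_out(width, kernel, stride, pad)
    return height, width


if __name__ == "__main__":
    print(feature_map_size(224, 224))
    print(feature_map_size(600, 800))
```

Under these assumptions the feature map is roughly 1/16 of the input resolution along each axis, so a bounding box given in image coordinates has to be scaled by about the same factor before it can be related to locations on the feature map.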

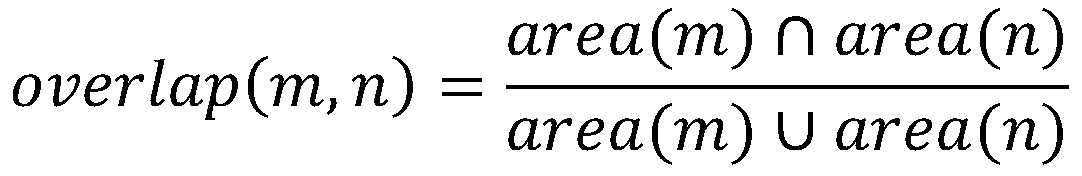

[0060] Step B: Input the feature map of the entire image and the bounding box n of the person whose action is to be recognized into the adaptive context selection algorithm. For each person, the algorithm generates candidate region bounding boxes and selects the top N of them, taking into account their spatial relationship with the person, as context regions;
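The selection in Step B can be sketched as ranking candidate boxes against the person box and keeping the top N. The text does not state how the spatial relationship is scored, so this sketch assumes intersection over union (IoU) as a stand-in score. It also takes the candidate boxes as a plain list, whereas in the method they are generated by the network. The names `iou` and `select_context_regions` are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def select_context_regions(person_box, candidates, top_n):
    """Return the top_n candidates ranked by IoU with the person box.

    Assumption: IoU stands in for the spatial-relationship score,
    which the text does not define.
    """
    ranked = sorted(candidates, key=lambda box: iou(person_box, box), reverse=True)
    return ranked[:top_n]


if __name__ == "__main__":
    person = (10, 10, 50, 90)
    candidates = [
        (0, 0, 20, 20),
        (30, 40, 70, 100),
        (200, 200, 240, 260),
        (12, 8, 52, 88),
    ]
    print(select_context_regions(person, candidates, top_n=2))
```

Ranking by a single score keeps the selection per person independent: the same candidate list can be reused for every person in the image, and only the ordering changes.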

[0061] Step C: According to the person bounding box n and the selected bounding box o...
