A hand-eye calibration system and method for a 3D vision measurement system

A hand-eye calibration and visual measurement technology, applied in the field of robot vision sensing, that addresses the complicated steps, high demands on technician expertise, and heavy workload and time consumption of existing approaches. It reduces workload and working time, achieves high stability, and lowers the level of professional expertise required.


Examples

Embodiment 1

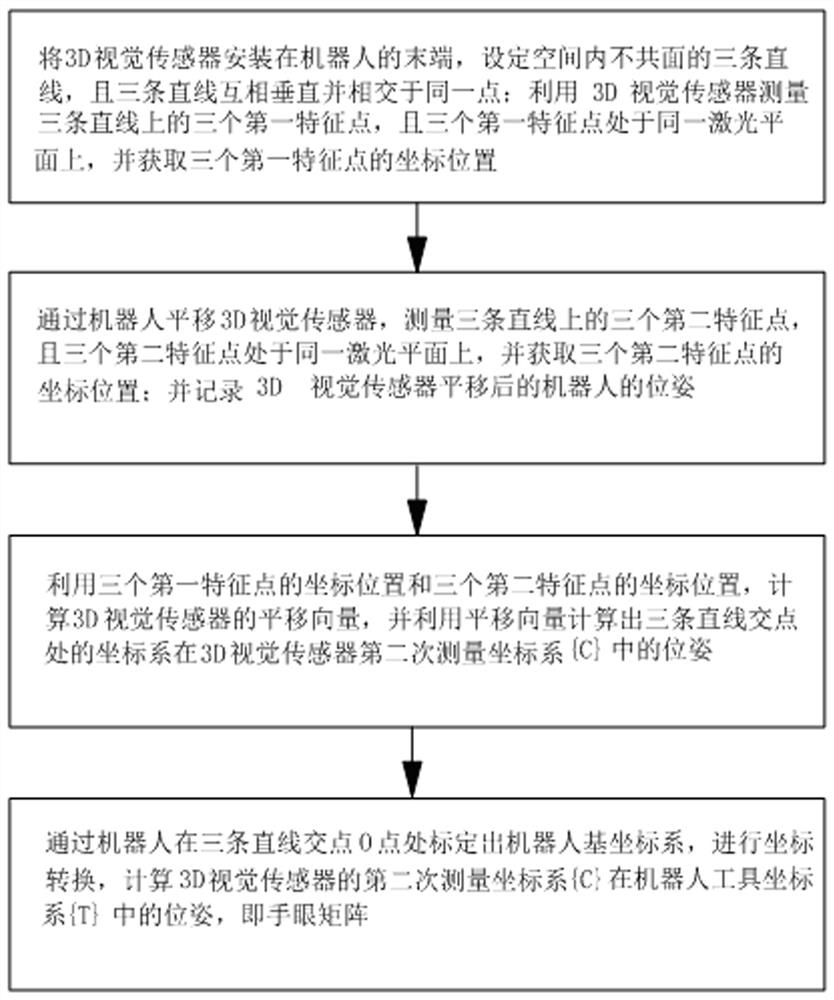

[0062] As shown in Figures 1 to 3, a hand-eye calibration method for a 3D vision measurement system includes the following steps:

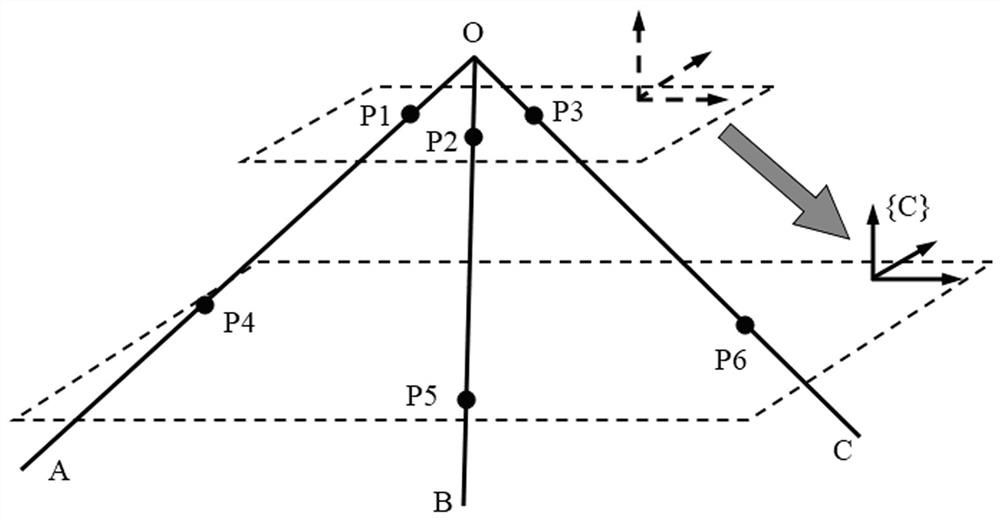

[0063] Step 1. Install the 3D vision sensor on the end of the robot. Set up three non-coplanar straight lines in space that are mutually perpendicular and intersect at a single point. Use the 3D vision sensor to measure three first feature points on the three straight lines, such that the three first feature points lie on the same laser plane, and obtain the coordinate positions of the three first feature points;

[0064] Step 2. Use the robot to translate the 3D vision sensor. Measure three second feature points on the three straight lines, such that the three second feature points lie on the same laser plane, and obtain the coordinate positions of the three second feature points. Record the pose of the robot after the 3D vision sensor has been translated;

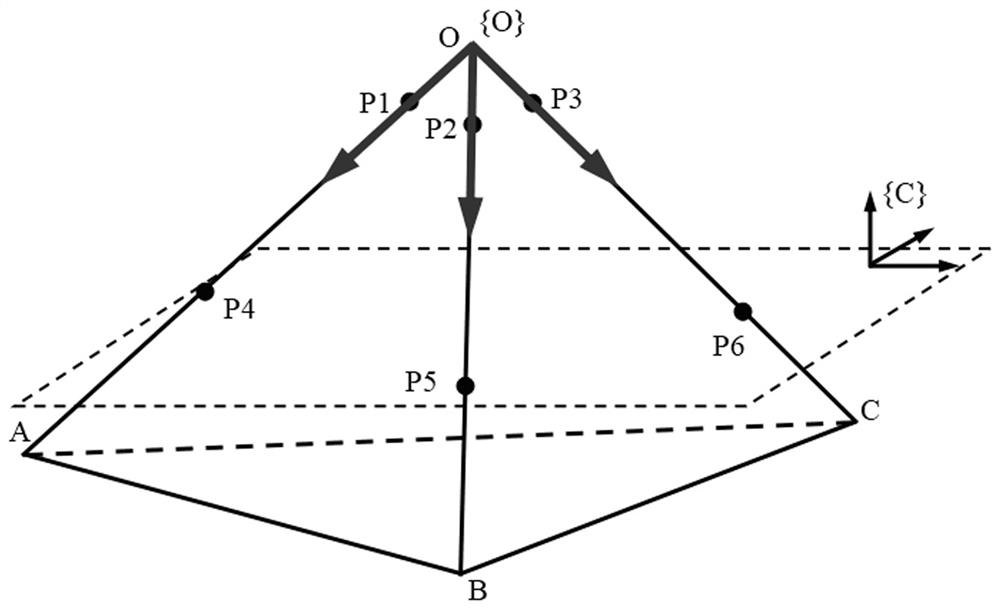

[0065] Step 3. Utilize the coordinate pos...
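The measurement geometry of Steps 1 and 2 can be illustrated with a small Python sketch. It is not taken from the patent, whose Step 3 is truncated here; it only shows two checks that follow from the stated setup. The function names, the assumption that each feature point lies on a different one of the three lines, and the representation of a robot pose as a 4x4 homogeneous matrix are all hypothetical choices made for illustration.

```python
import math


def axis_distances(p1, p2, p3):
    """Distances from the lines' common intersection point to each feature point.

    Assumes (hypothetically) that p1, p2, p3 each lie on a different one of
    three mutually perpendicular lines meeting at one point. Then the squared
    distance between two feature points is the sum of their squared distances
    to the intersection point, e.g. |p1 - p2|^2 = a^2 + b^2.
    """
    d12 = math.dist(p1, p2) ** 2
    d13 = math.dist(p1, p3) ** 2
    d23 = math.dist(p2, p3) ** 2
    a2 = (d12 + d13 - d23) / 2
    b2 = (d12 + d23 - d13) / 2
    c2 = (d13 + d23 - d12) / 2
    if min(a2, b2, c2) <= 0:
        raise ValueError("points are inconsistent with three perpendicular lines")
    return math.sqrt(a2), math.sqrt(b2), math.sqrt(c2)


def translation_between(pose_a, pose_b, tol=1e-6):
    """Return the translation from pose_a to pose_b for a pure translation.

    Poses are assumed to be 4x4 homogeneous matrices (nested sequences).
    Raises ValueError if the rotation part differs, i.e. the robot rotated.
    """
    for i in range(3):
        for j in range(3):
            if abs(pose_a[i][j] - pose_b[i][j]) > tol:
                raise ValueError("orientation changed; motion is not a pure translation")
    return tuple(pose_b[i][3] - pose_a[i][3] for i in range(3))
```

Because the distances depend only on the spacing between the three points, `axis_distances` gives the same result whether the points are expressed in the sensor frame or in any other rigidly related frame. `translation_between` is a guard that the robot motion recorded in Step 2 kept the sensor orientation fixed.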

Embodiment 2

[0107] As shown in Figures 2 to 4, a hand-eye calibration system for a 3D vision measurement system includes:

[0108] A first coordinate acquisition module 1. With the 3D vision sensor installed on the end of the robot and three non-coplanar straight lines set up in space that are mutually perpendicular and intersect at a single point, the first coordinate acquisition module 1 uses the 3D vision sensor to measure three first feature points on the three straight lines, such that the three first feature points lie on the same laser plane, and obtains the coordinate positions of the three first feature points;

[0109] A second coordinate acquisition module 2. The second coordinate acquisition module 2 uses the robot to translate the 3D vision sensor, measures three second feature points on the three straight lines, such that the three second feature points lie on the same laser plane, and acquires the coordinate positions of the three second feature poi...
