Pixel-level object grasping detection method and system for asymmetrical three-finger grasper

A detection method and detection system in the field of robotics. It addresses two problems: an asymmetrical three-finger gripper cannot exchange finger positions, and the ground truth of existing grasping schemes cannot faithfully represent the graspable properties of objects.


Examples

Embodiment 1

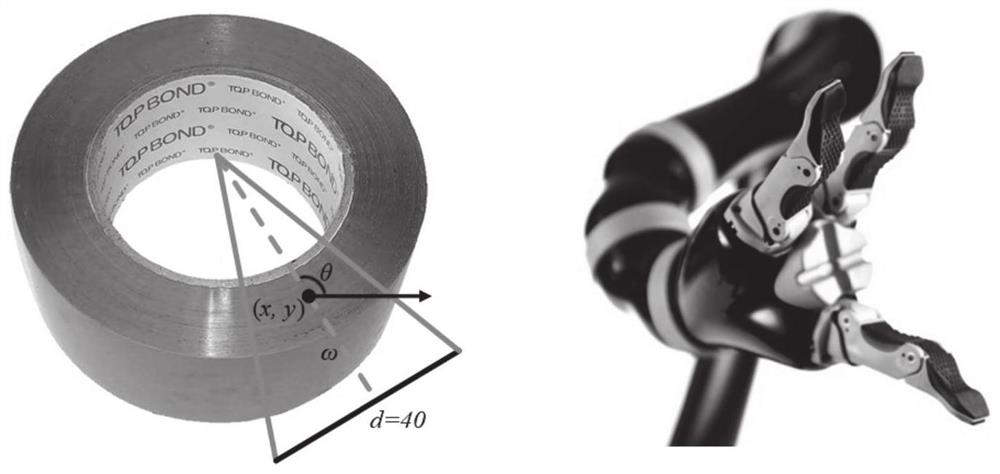

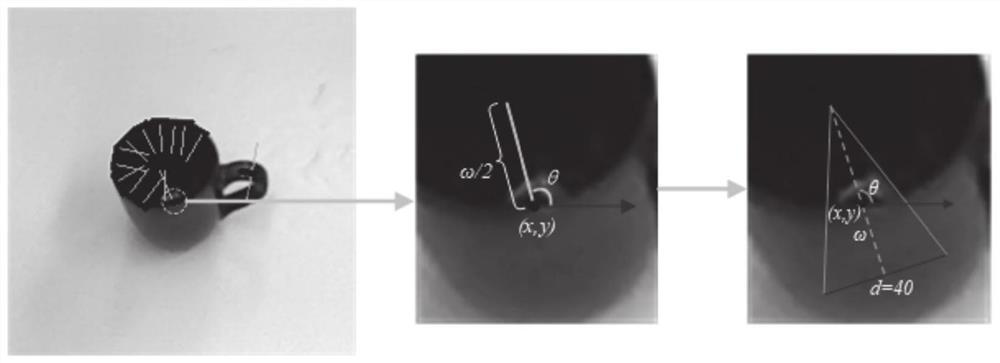

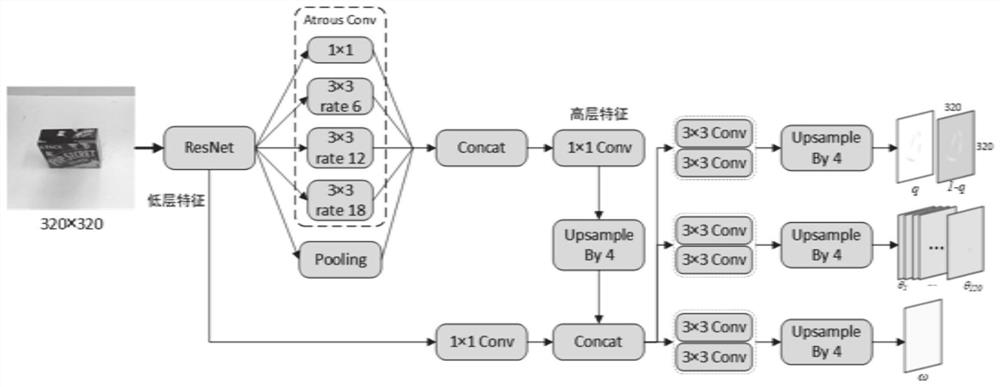

[0036] No existing grasping model is specifically suited to the asymmetrical three-finger grasper, and such a grasper cannot perform symmetrical grasping, so the present disclosure designs a directed triangular grasping model. The disclosure uses traditional convolution and atrous convolution for grasp detection. To improve the scale invariance of the network, a spatial pyramid network is used to obtain feature maps with different receptive fields, and a feature fusion unit fuses the low-level and high-level features of the network. To achieve end-to-end grasp detection, a grasp model detection unit is designed to directly output each parameter of the directed triangular grasping scheme.
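As an illustration of why atrous convolution yields feature maps with different receptive fields, the following is a minimal one-dimensional sketch in plain Python. It is not the network of the disclosure: the function names, the 3-tap kernel, and the dilation rates (1, 2, 4) are assumptions chosen only for the example.

```python
def atrous_conv1d(signal, kernel, rate):
    """Valid-mode 1D atrous (dilated) convolution, cross-correlation form.

    Kernel taps are spaced `rate` samples apart, so the same number of
    weights covers a wider stretch of the input.
    """
    span = (len(kernel) - 1) * rate + 1  # input samples covered by one output
    out = []
    for start in range(len(signal) - span + 1):
        acc = 0.0
        for k, w in enumerate(kernel):
            acc += w * signal[start + k * rate]
        out.append(acc)
    return out


def receptive_field(kernel_size, rate):
    """Receptive field of a single atrous layer with the given dilation rate."""
    return (kernel_size - 1) * rate + 1


signal = [float(i) for i in range(16)]
kernel = [1.0, 1.0, 1.0]

# Hypothetical pyramid: one branch per dilation rate (rates are assumptions).
pyramid = {rate: atrous_conv1d(signal, kernel, rate) for rate in (1, 2, 4)}

for rate, features in pyramid.items():
    print(rate, receptive_field(len(kernel), rate), len(features))
```

Each branch uses the same three weights, but the branch with rate 4 sees nine input samples per output while the branch with rate 1 sees three. A spatial pyramid runs such branches in parallel and combines their outputs.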

[0037] The present disclosure proposes a pixel-level target grasping detection method for an asymmetric three-finger grasper. To fill the technical gap in grasp detection for asymmetric three-finger grippers, this method uses the c...

Embodiment 2

[0104] Based on Embodiment 1, this embodiment provides a pixel-level target grasping detection system for an asymmetric three-finger gripper, comprising an image acquisition device and a server:

[0105] The image acquisition device is configured to acquire an original image containing an object that can be grasped by the asymmetric three-finger gripper, and to transmit it to the server.

[0106] The server is configured to execute the steps of the pixel-level object grasping detection method for an asymmetric three-finger grasper described in Embodiment 1.

Embodiment 3

[0108] This embodiment provides a pixel-level target grasping detection system for an asymmetrical three-finger grasper, including:

[0109] Image acquisition module: configured to acquire an original image containing a target to be grasped;

[0110] Grasping scheme labeling module: configured to mark the grasping points for the target in the original image according to the constructed triangular grasping model, and generate a grasping scheme;

[0111] Image data processing module: configured to perform data augmentation and cropping on each image and its annotated grasping scheme to obtain a processed image;

[0112] Grasp detection module: configured to input the processed image to the trained grasp detection network, which is built on a deep convolutional neural network, and output the grasping scheme with the highest grasping confidence as the final directed triangular grasping scheme.
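The final selection step of the grasp detection module, picking the scheme with the highest grasping confidence, can be sketched as an argmax over a pixel-level confidence map. This is a hedged illustration only: the map layout, the function name `best_grasp`, and the parameter name `angle` are hypothetical placeholders, since the excerpt does not list the actual parameters of the directed triangular grasping scheme.

```python
def best_grasp(confidence, param_maps):
    """Return the pixel with the highest confidence and its parameters.

    confidence: 2D list of per-pixel grasp confidence scores.
    param_maps: dict mapping a parameter name to a 2D list of the same shape
                (names are placeholders, not the patent's actual parameters).
    """
    best = None
    for r, row in enumerate(confidence):
        for c, score in enumerate(row):
            if best is None or score > best[0]:
                best = (score, r, c)
    score, r, c = best
    params = {name: grid[r][c] for name, grid in param_maps.items()}
    return {"row": r, "col": c, "confidence": score, "params": params}


# Toy 3x3 network output (values invented for illustration).
confidence = [
    [0.1, 0.2, 0.1],
    [0.3, 0.9, 0.4],
    [0.2, 0.5, 0.1],
]
angle = [
    [0, 10, 20],
    [30, 40, 50],
    [60, 70, 80],
]

result = best_grasp(confidence, {"angle": angle})
print(result)
```

On ties, this sketch keeps the first maximum in row-major order; a real system might instead smooth the confidence map or apply a threshold before selecting.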

[0113] Among them, the grasping scheme labeling module: including the grasping point of...

