Cloud computing multistage scheduling method and system and storage medium

A scheduling method based on cloud computing technology, applied in the field of cloud computing, that addresses the problem of high average Coflow completion time.

Examples

Embodiment 1

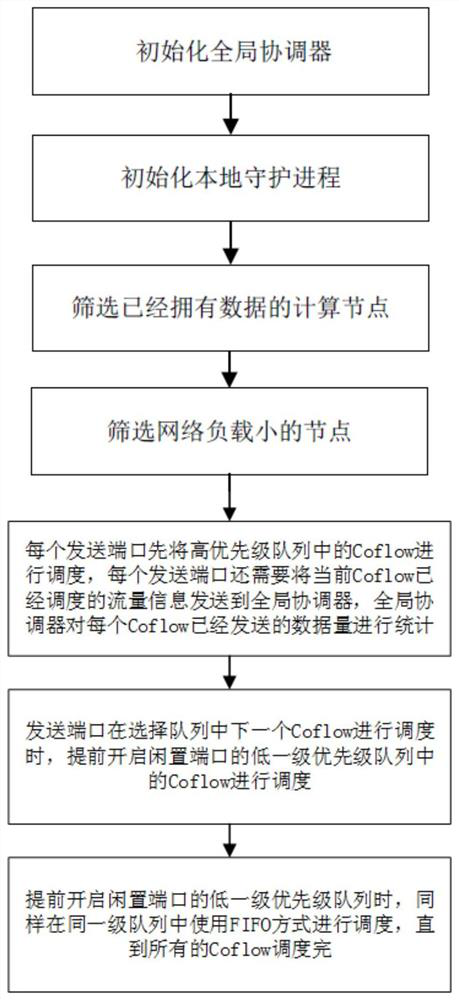

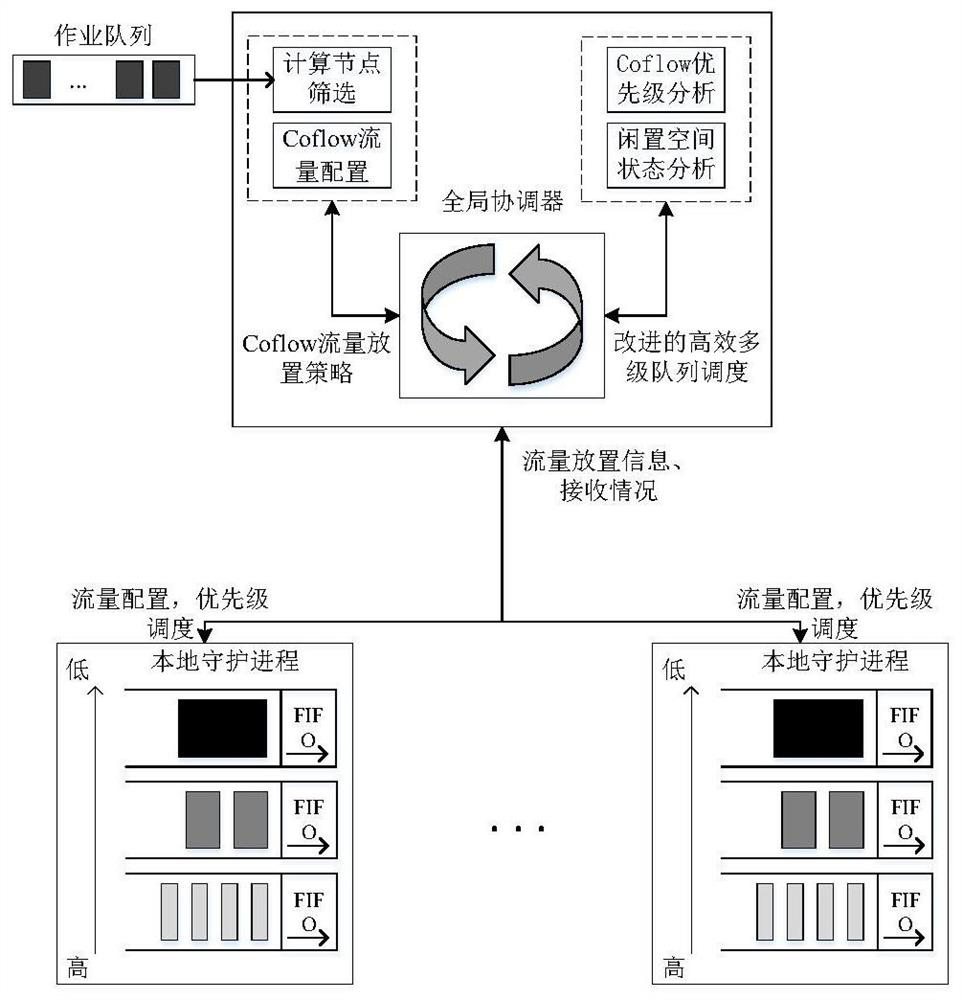

[0049] Embodiment 1. A cloud computing multi-level scheduling method, comprising the following steps:

[0050] Select a receiving node and notify the Coflow's sending node of the selection, so that the sending node sends the scheduled Coflow traffic to the selected receiving node;

[0051] Receive, from the sending node, the amount of data each Coflow has already sent, determine the priorities of the different Coflows from this information, and send those priorities back to the sending node, so that the sending node schedules the Coflows in its local multi-level queue according to those priorities.

[0052] The entity that executes the method in this embodiment is independent of the sending node and the receiving node, and can be placed in, for example, a global coordinator or an integrated coordinator.
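The coordinator side of steps [0050] to [0051] can be sketched as follows. This is a minimal illustration, not the patented implementation: the class name `Coordinator`, the `report` and `priorities` methods, and the byte thresholds are all hypothetical, and the rule that a Coflow which has sent less data gets a higher priority is an assumption, since the text only says the priority is determined from the reported sizes.

```python
from bisect import bisect_right
from typing import Dict, Sequence

# Hypothetical queue boundaries in bytes (not given in the source):
# total < 10 MiB -> priority 0 (highest), < 100 MiB -> 1, < 1 GiB -> 2, else 3.
THRESHOLDS: Sequence[int] = (10 * 2**20, 100 * 2**20, 1024 * 2**20)


class Coordinator:
    """Aggregates per-sender byte counts and assigns one priority per Coflow."""

    def __init__(self, thresholds: Sequence[int] = THRESHOLDS) -> None:
        self.thresholds = thresholds
        # coflow_id -> {sender_id -> bytes sent so far, as reported by that sender}
        self.sent: Dict[str, Dict[str, int]] = {}

    def report(self, sender_id: str, coflow_id: str, bytes_sent: int) -> None:
        """Record the latest cumulative byte count a sender reports for a Coflow."""
        self.sent.setdefault(coflow_id, {})[sender_id] = bytes_sent

    def priorities(self) -> Dict[str, int]:
        """Map each Coflow to a priority level; 0 is the highest (assumed rule)."""
        return {
            coflow_id: bisect_right(self.thresholds, sum(per_sender.values()))
            for coflow_id, per_sender in self.sent.items()
        }


coordinator = Coordinator()
coordinator.report("sender-1", "coflow-A", 4 * 2**20)
coordinator.report("sender-2", "coflow-A", 3 * 2**20)
coordinator.report("sender-1", "coflow-B", 200 * 2**20)
result = coordinator.priorities()
```

Summing across senders reflects that a Coflow's flows may originate on several sending nodes, so only a component outside those nodes sees the total.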

Embodiment 2

[0053] Embodiment 2. On the basis of Embodiment 1, the receiving node is selected as follows: monitor the jobs that generate Coflows and use a Coflow flow placement strategy to select the receiving node on which each Coflow flow is placed. The placement strategy first screens for computing nodes that already hold data, and then screens those nodes for the ones with low network load.
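The two-stage placement strategy can be sketched as a filter followed by a sort. The function name `select_receivers`, its parameters, and the use of a single numeric load value per node are assumptions made for illustration; the text does not state how "low network load" is measured or how many nodes are kept, so the sketch simply returns the `k` least-loaded candidates.

```python
from typing import Dict, List, Set


def select_receivers(
    nodes_with_data: Set[str],
    network_load: Dict[str, float],
    k: int = 1,
) -> List[str]:
    """Return up to k receiving nodes for a Coflow flow.

    Stage 1 keeps only nodes that already hold data for the task.
    Stage 2 ranks those candidates by network load, lowest first.
    """
    # Stage 1: preliminary screening by data presence
    candidates = [node for node in network_load if node in nodes_with_data]
    # Stage 2: prefer low network load; the node name breaks ties deterministically
    candidates.sort(key=lambda node: (network_load[node], node))
    return candidates[:k]


load = {"n1": 0.7, "n2": 0.1, "n3": 0.2}
best = select_receivers({"n1", "n3"}, load)
top_two = select_receivers({"n1", "n3"}, load, k=2)
```

Here `n2` has the lowest load but is excluded in stage 1 because it holds no data, which is the point of screening by data presence first.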

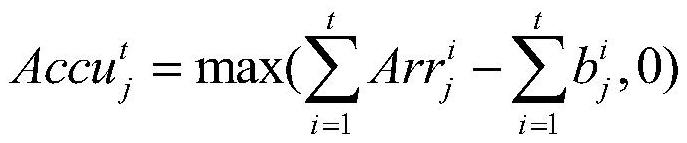

[0054] The preliminary screening of computing nodes that already hold data is expressed by formula (3). The indicator in that formula denotes whether the i-th data flow in C_n can select node j as a potential target node: a value of 1 means yes, and 0 means no. All nodes whose indicator is 1 form the set of candidate computing nodes for task i.

[0055]

[0056] The computing nodes that pass the preliminary screening are then screened again for those with low network load. In the case of , it can ...

Embodiment 3

[0069] Embodiment 3. A cloud computing multi-level scheduling method, comprising the following steps:

[0070] Obtain the receiving node selected by the global coordinator, and send the scheduled Coflow traffic to the selected receiving node;

[0071] Send the amount of data each Coflow has already sent to the global coordinator, so that the global coordinator determines the priorities of the different Coflows from this information and returns those priorities;

[0072] Schedule the Coflows in the local multi-level queue according to the received priorities.
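The sender-side scheduling step can be sketched as a small multi-level queue that is re-sorted whenever new priorities arrive. The class `MultiLevelQueue` and its methods are hypothetical names, and two behaviors are assumptions rather than statements from the text: queues are served in strict priority order with level 0 first, and Coflows within one level are served first in, first out.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class MultiLevelQueue:
    """Local multi-level queue on a sending node; level 0 is served first."""

    def __init__(self, levels: int) -> None:
        self.queues: List[Deque[str]] = [deque() for _ in range(levels)]
        self.level_of: Dict[str, int] = {}

    def update(self, priorities: Dict[str, int]) -> None:
        """Apply priorities received from the coordinator."""
        for coflow_id, priority in priorities.items():
            # Clamp out-of-range priorities to the nearest existing level
            level = min(max(priority, 0), len(self.queues) - 1)
            old = self.level_of.get(coflow_id)
            if old == level:
                continue  # keep the Coflow's position inside its queue
            if old is not None:
                self.queues[old].remove(coflow_id)
            self.queues[level].append(coflow_id)
            self.level_of[coflow_id] = level

    def next_coflow(self) -> Optional[str]:
        """Return the Coflow to transmit next, or None if nothing is queued."""
        for queue in self.queues:
            if queue:
                return queue[0]
        return None


mlq = MultiLevelQueue(levels=3)
mlq.update({"coflow-A": 1, "coflow-B": 0})
first = mlq.next_coflow()
mlq.update({"coflow-B": 2})  # coflow-B is demoted after sending more data
second = mlq.next_coflow()
```

Because the sender only applies priorities it receives, it needs no knowledge of other senders' traffic; that view stays with the coordinator.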

[0073] The method provided in this embodiment is deployed on the sending node. The global coordinator is introduced only for convenience of description; it should not be understood as a limitation on the executing entity, and can be implemented by oth...

