Learning rate adjustment method for asynchronous training of neural networks

A learning rate adjustment method applied to the asynchronous training of neural networks. It addresses the problems of reduced network accuracy, hyperparameters that are difficult to define, and numerical settings that lack an exact theoretical basis, with the aim of balancing the learning rate and improving the network convergence speed.

Embodiment Construction

[0062] The present invention is further described below with reference to the accompanying drawings. Note that this embodiment is based on the technical solution and provides a detailed implementation and specific operating procedure, but the protection scope of the present invention is not limited to this embodiment.

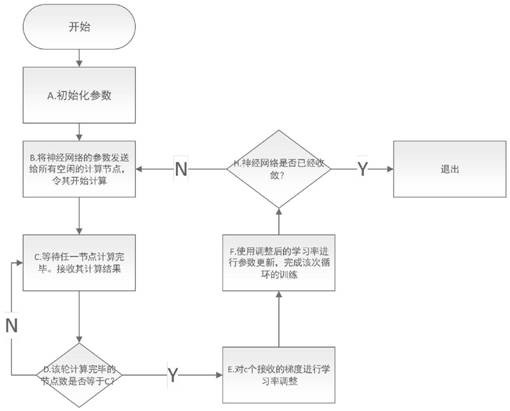

[0063] As shown in Figure 1, the present invention is a learning rate adjustment method for asynchronous training of neural networks, the method comprising the following steps:

[0064] S1: initialize the parameters;

[0065] S2: send the neural network parameters to all idle computing nodes. For every node that completed its calculation in the previous cycle and submitted its results, the parameter server sends the updated parameters and has the node start the next round of calculation. After this step, the system as a whole enters the next round of calculation, with the current round t_glob = ...
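The dispatch part of step S2 can be illustrated with a minimal Python sketch. It is not the patented implementation. The names `Worker`, `ParameterServer`, and `dispatch_to_idle` are hypothetical, and parameters are assumed to be a plain dictionary held in memory rather than sent over a network. The update of the global round counter is truncated in the source, so the sketch leaves it out.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Worker:
    """Hypothetical computing node (name and fields are assumptions)."""
    name: str
    idle: bool = True               # True once the node has submitted its results
    params: Optional[dict] = None   # local copy of the network parameters


@dataclass
class ParameterServer:
    """Hypothetical parameter server holding the current parameters."""
    params: dict
    workers: List[Worker] = field(default_factory=list)

    def dispatch_to_idle(self) -> List[str]:
        """Send the current parameters to every idle worker and mark it busy.

        Returns the names of the workers that started a new round.
        """
        started = []
        for worker in self.workers:
            if worker.idle:
                # Each worker gets its own copy, so later server-side
                # updates do not change parameters it is computing with.
                worker.params = copy.deepcopy(self.params)
                worker.idle = False
                started.append(worker.name)
        return started


server = ParameterServer(
    params={"w": [1.0, 2.0]},
    workers=[Worker("a"), Worker("b", idle=False), Worker("c")],
)
started = server.dispatch_to_idle()
```

In this sketch only nodes `a` and `c` receive parameters. Node `b` is still computing and keeps working on the older copy it already holds, which is the source of the staleness that asynchronous training has to account for.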
