An Intelligent Virtual Reference Frame Generation Method

A virtual reference frame technology, applied in the fields of deep learning and 3D video coding, that addresses the lack of multi-view video coding methods and the need to further improve coding efficiency. Its effects are reducing coding bits, improving quality, and facilitating storage and transmission.


Embodiment Construction

[0036] To make the purpose, technical solution, and advantages of the present invention clearer, the embodiments of the present invention are described in further detail below.

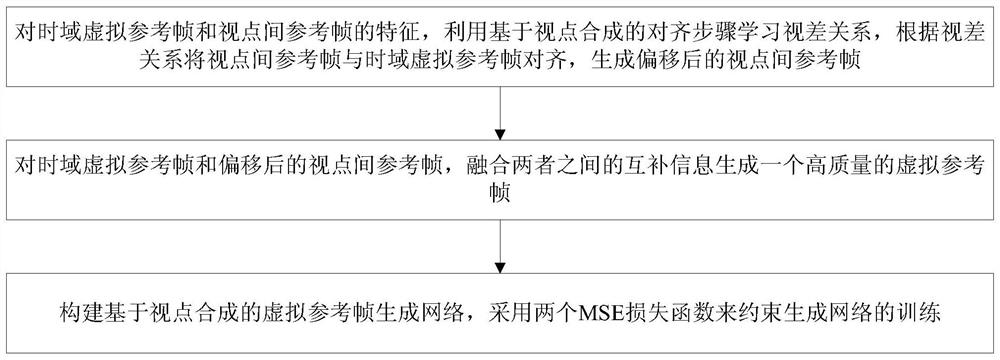

[0037] The embodiment of the present invention provides an intelligent virtual reference frame generation method. It builds a virtual reference frame generation network based on viewpoint synthesis, which generates a high-quality virtual reference frame to serve as a high-quality reference for the frame currently being coded. This improves prediction accuracy and thereby coding efficiency. The method flow is shown in Figure 1; the method includes the following steps:

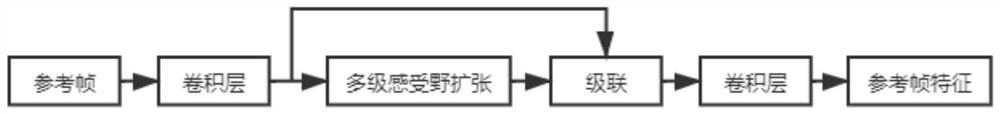

[0038] 1. Feature extraction

[0039] The temporal virtual reference frame F_t and the inter-view reference frame F_iv serve as the inputs to the virtual reference frame generation network. Their features f_t and f_iv are extracted separately by two identical branches that share parameters; the extraction process is shown in Figure 2. ...
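The parameter sharing described in [0039] can be illustrated with a minimal sketch. It is not the network of this patent: the layer layout is not given in the text above, so the single 3x3 convolution with ReLU, the class name `SharedBranch`, the helper `extract_features`, and the toy 4x4 frames are all hypothetical stand-ins. The only point shown is that F_t and F_iv pass through one and the same parameter set to produce f_t and f_iv.

```python
import random


def conv3x3_relu(frame, kernel):
    """One 3x3 convolution (cross-correlation form, zero padding) followed by ReLU."""
    h, w = len(frame), len(frame[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy + 1][dx + 1] * frame[yy][xx]
            out[y][x] = max(acc, 0.0)
    return out


class SharedBranch:
    """Hypothetical feature-extraction branch: one parameter set serves both inputs."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Randomly initialised kernel; a real network would learn these weights.
        self.kernel = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(3)]

    def __call__(self, frame):
        return conv3x3_relu(frame, self.kernel)


def extract_features(branch, frame_t, frame_iv):
    """Run F_t and F_iv through the same branch, giving f_t and f_iv."""
    return branch(frame_t), branch(frame_iv)


# Toy single-channel frames standing in for F_t and F_iv (assumed data).
F_t = [[float(x + y) for x in range(4)] for y in range(4)]
F_iv = [[float(x * y) for x in range(4)] for y in range(4)]

branch = SharedBranch(seed=0)
f_t, f_iv = extract_features(branch, F_t, F_iv)
```

Because both calls use the same `branch` object, identical inputs yield identical features, which is the practical meaning of two branches sharing parameters. In a deep learning framework the same effect is usually obtained by calling one module instance on both inputs.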
