A Method for Image Caption Generation Based on Conditional Embedding Pretrained Language Model

A pre-trained language model technique, classified under neural learning methods, biological neural network models, and computer components, that addresses a pre-trained language model's inability to consistently learn from image information and achieves good robustness and adaptability.

Active Publication Date: 2022-03-01

HANGZHOU DIANZI UNIV


AI Technical Summary

Problems solved by technology

The method of the present invention solves the problem that a pre-trained language model cannot consistently learn from image information when performing downstream tasks.



Examples


Embodiment 1

[0060] As shown in Figure 6, the targets detected by the object detection algorithm are: flower, vase, lavender. A keyword set W = {flower, vase, lavender} is constructed, and the input sequence S is composed from the keyword set and the special characters modified in steps 1-2. S is input into the CE-UNILM model, and the predicted result is: "a flower in a vase of purple lavender".
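The keyword-to-sequence step of this embodiment can be sketched roughly as follows. This is only an illustration under stated assumptions: the detector output is treated as three separate labels, the special tokens are assumed to be BERT-style `[CLS]` and `[SEP]` (the special characters actually defined in steps 1-2 are not shown in this excerpt), and `build_input_sequence` is a hypothetical helper name, not part of the patent.

```python
from typing import List

# Assumed BERT-style special tokens; the patent's own special
# characters from steps 1-2 are not reproduced in this excerpt.
CLS, SEP = "[CLS]", "[SEP]"


def build_input_sequence(keywords: List[str]) -> List[str]:
    """Compose an input sequence S from a keyword set W.

    Keywords are lower-cased and de-duplicated while preserving the
    order in which the detector reported them.
    """
    seen = set()
    unique = []
    for kw in keywords:
        k = kw.strip().lower()
        if k and k not in seen:
            seen.add(k)
            unique.append(k)
    return [CLS] + unique + [SEP]


# Hypothetical detector output for the image in this embodiment.
detected = ["flower", "vase", "lavender"]
S = build_input_sequence(detected)
print(S)
```

In a full pipeline, a sequence like `S` would be tokenized and passed to the caption model; that part depends on details not given here.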


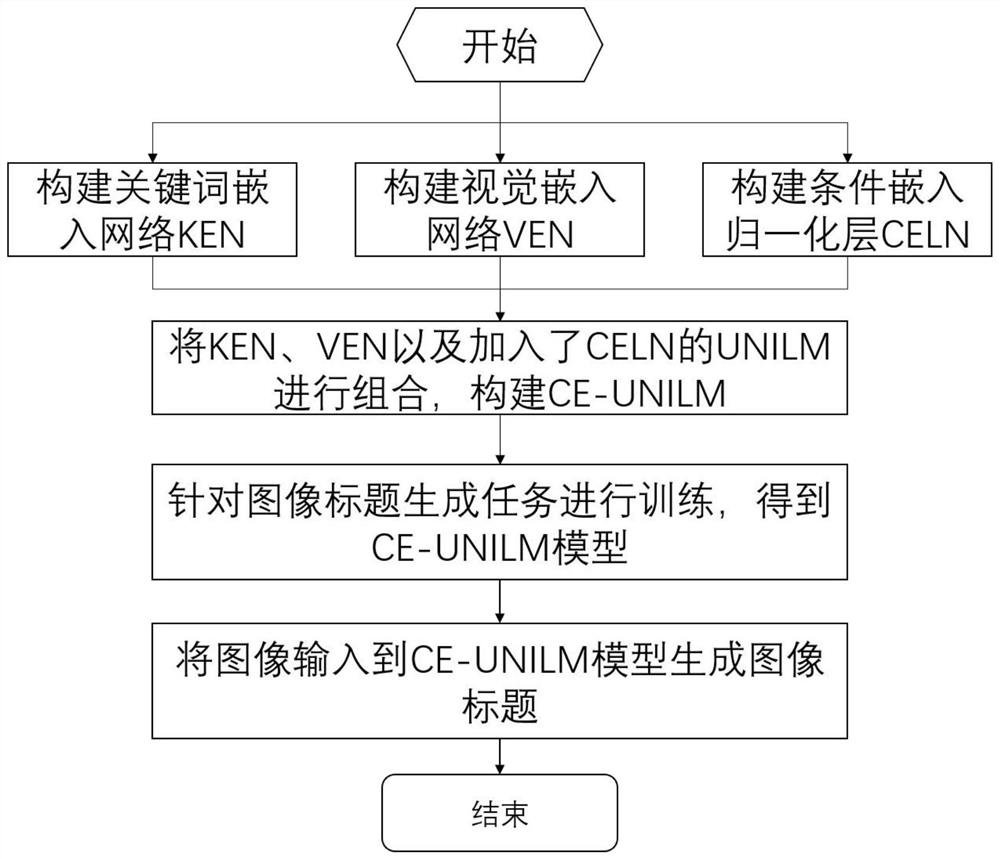

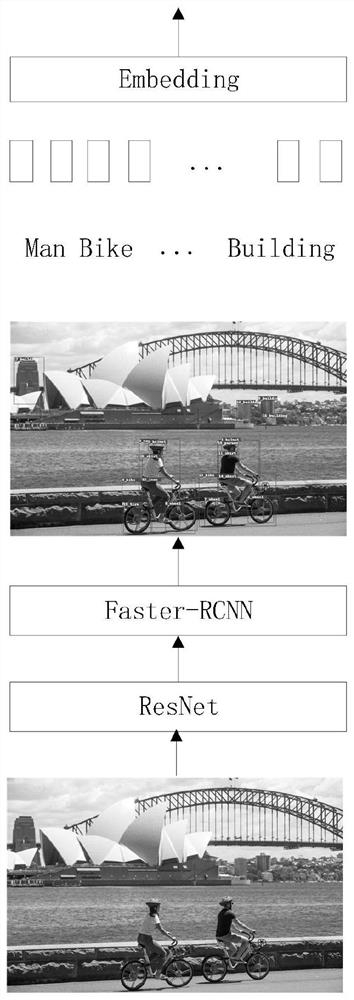

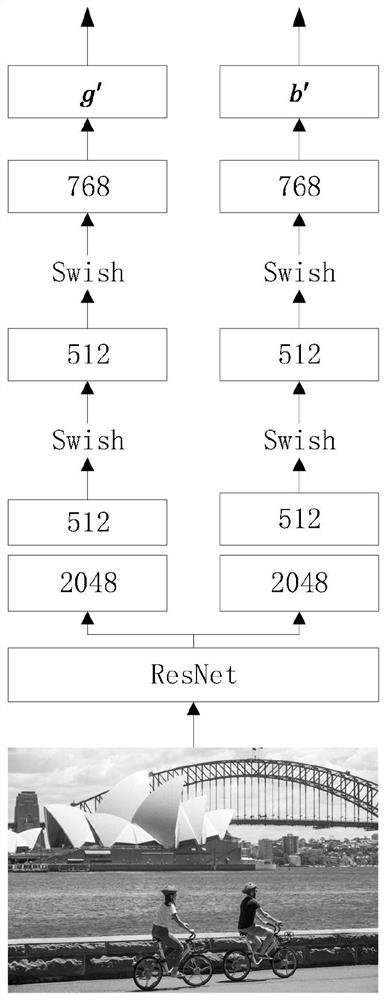

The invention discloses a method for generating image captions based on a conditional embedding pre-trained language model. It proposes a network built on a pre-trained language model, called CE-UNILM. At the input end of the pre-trained language model UNILM, a KEN is constructed: KEN applies object detection to the image and feeds the detected targets in as key text information through keyword embedding. A VEN is constructed to extract image features, encode the image, and feed it in through conditional embedding. In addition, the CELN proposed by the invention is a mechanism that adjusts the pre-trained language model for feature selection through visual embedding; CELN is applied to the transformer in the unified pre-trained language model. The results show that this method is more robust and adaptive.
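The abstract does not give the CELN formula. As a rough sketch only, the following assumes CELN resembles the common conditional layer normalization pattern, in which a condition vector (here standing in for the visual embedding) is projected into offsets that shift the layer-norm gain and bias. Every name below (`conditional_layer_norm`, `W_gamma`, `W_beta`) is hypothetical and is not taken from the patent.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, v: Vector) -> Vector:
    """Multiply matrix W (rows x cols) by vector v (cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def conditional_layer_norm(
    x: Vector,
    cond: Vector,
    gamma: Vector,
    beta: Vector,
    W_gamma: Matrix,
    W_beta: Matrix,
    eps: float = 1e-5,
) -> Vector:
    """Layer norm whose gain and bias are shifted by a condition vector.

    out_i = (gamma_i + (W_gamma @ cond)_i) * (x_i - mean) / sqrt(var + eps)
            + (beta_i + (W_beta @ cond)_i)
    """
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    d_gamma = matvec(W_gamma, cond)  # condition-dependent gain offset
    d_beta = matvec(W_beta, cond)    # condition-dependent bias offset
    std = math.sqrt(var + eps)
    return [
        (g + dg) * (xi - mean) / std + (b + db)
        for xi, g, dg, b, db in zip(x, gamma, d_gamma, beta, d_beta)
    ]


# With zero projections this reduces to ordinary layer normalization.
hidden = [1.0, 2.0, 3.0]
visual = [1.0]  # stand-in for a visual embedding
zeros = [[0.0], [0.0], [0.0]]
plain = conditional_layer_norm(hidden, visual, [1.0] * 3, [0.0] * 3, zeros, zeros)
shifted = conditional_layer_norm(hidden, visual, [1.0] * 3, [0.0] * 3, zeros, [[0.5]] * 3)
print(plain, shifted)
```

Initializing the projections to zero, as in the example, leaves the pre-trained layer-norm behavior unchanged at the start of fine-tuning; this is a design choice often made with conditional normalization, not something the abstract states.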

Description

Technical field

[0001] The invention belongs to the technical field of image description and relates to a method for generating image captions, in particular a method based on a conditional embedding pre-trained language model.

Background art

[0002] Large-scale pre-trained language models have greatly improved performance on text understanding and text generation tasks. This has also changed how researchers work, making fine-tuning of pre-trained language models for downstream tasks a mainstream approach. Research on image-text, speech-text, and similar pairings is growing, with specific applications including image captioning, video captioning, image question answering, and video question answering.

[0003] Compared with the traditional encoder-decoder pipeline, pre-trained language models achieve excellent results on natural language processing tasks. This is because articles and sentences are inherent...


Application Information

Patent Type & Authority: Patents (China)

IPC(8): G06K9/62, G06V10/40, G06N3/04, G06N3/08, G06V10/774, G06V10/764

CPC: G06N3/08, G06V10/40, G06N3/044, G06F18/2411, G06F18/214

Inventor: 张旻, 林培捷, 李鹏飞, 姜明, 汤景凡

Owner: HANGZHOU DIANZI UNIV
