Object Tracking Method Based on Self-Attention Transformation Network

An attention-based target tracking technology, applied in the field of target tracking, which solves the problem of insensitive parameter setting and achieves stable tracking.

Embodiment Construction

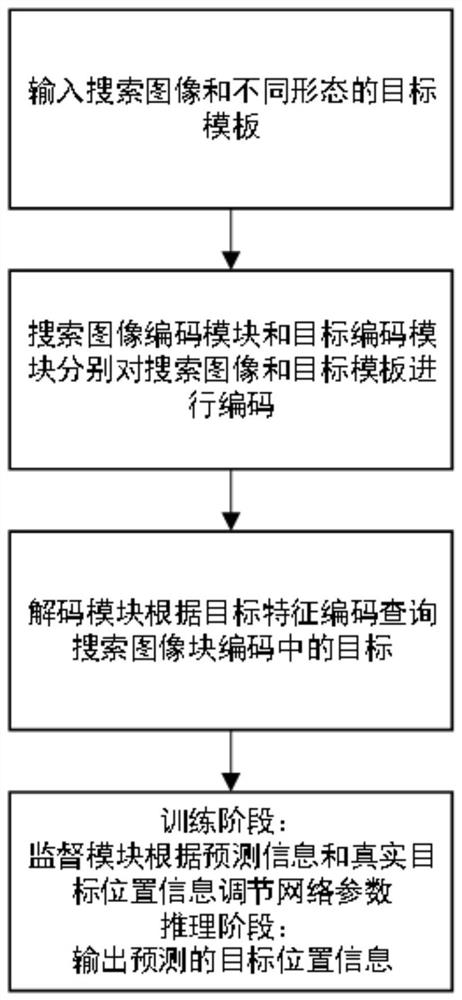

[0028] The present invention is described in further detail below with reference to the accompanying drawings and a specific embodiment:

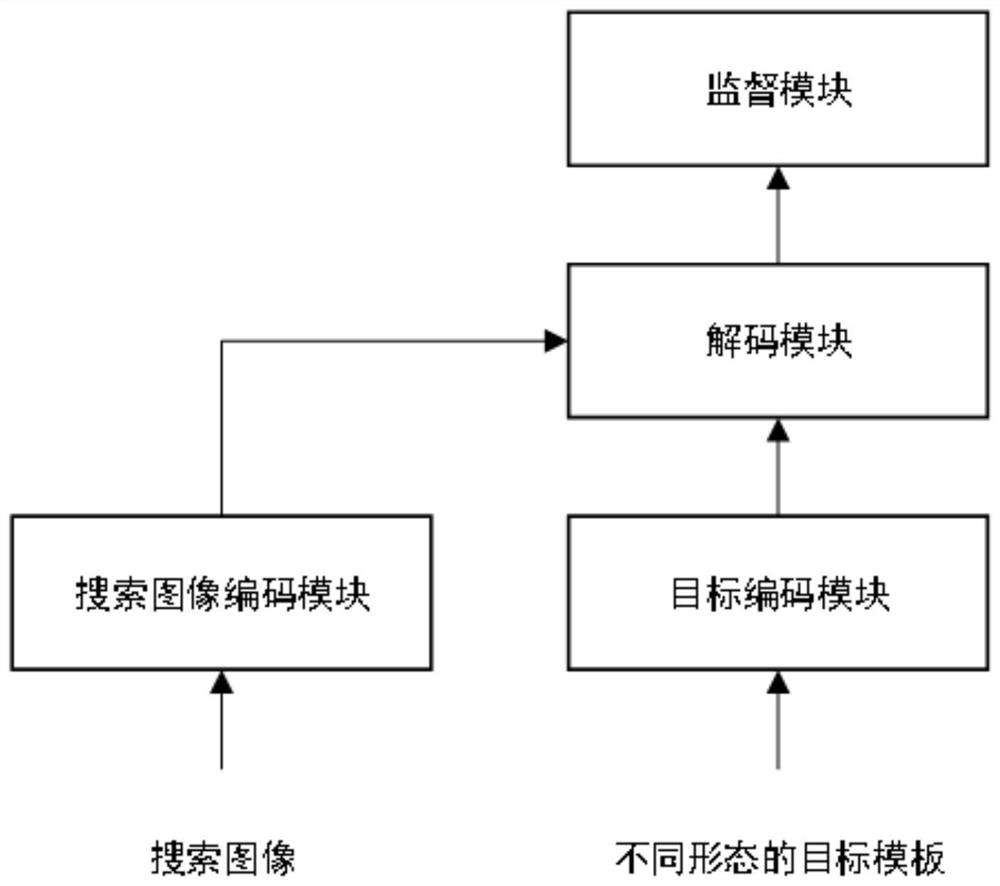

[0029] Referring to Figure 1, the present invention discloses a target tracking method based on a self-attention transformation network. The method is implemented with a search image encoding module, a target encoding module, a decoding module, and a supervision module.

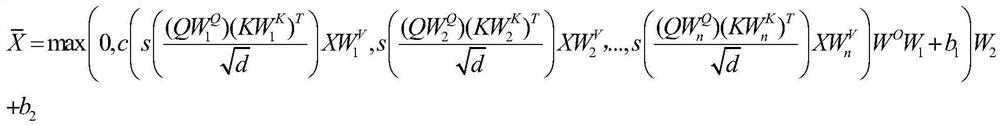

[0030] The search image encoding module consists of a multi-head self-attention network and a feed-forward network connected in series, and the multi-head self-attention network is formed by n self-attention networks connected in parallel. When a search image block is input into the search image encoding module, the module computes the search image block encoding through the multi-head self-attention network and the feed-forward network. The search image block encoding is calculated as:
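The formula in [0031] is not reproduced on this page, so the following is only a minimal sketch of the described structure under standard Transformer assumptions: each of the n parallel heads is taken to be scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V; head outputs are assumed to be concatenated and passed through an output projection; and the feed-forward network is assumed to be a two-layer ReLU network applied in series after attention. Residual connections and layer normalization are omitted because the text does not mention them. All function names, parameter shapes, and the random initialization are hypothetical and not taken from the patent.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax, shifted by the row max for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """One head (assumed scaled dot-product): softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(wq[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


def multi_head_self_attention(x: Matrix, heads, wo: Matrix) -> Matrix:
    """n heads run in parallel on the same input; outputs are concatenated, then projected by wo."""
    outputs = [self_attention(x, *h) for h in heads]
    concat = [sum((o[i] for o in outputs), []) for i in range(len(x))]
    return matmul(concat, wo)


def feed_forward(x: Matrix, w1: Matrix, b1, w2: Matrix, b2) -> Matrix:
    """Assumed position-wise FFN: ReLU(x W1 + b1) W2 + b2."""
    hidden = [[max(0.0, v + b) for v, b in zip(row, b1)] for row in matmul(x, w1)]
    return [[v + b for v, b in zip(row, b2)] for row in matmul(hidden, w2)]


def encode_search_image(x: Matrix, params) -> Matrix:
    """Hypothetical search-image encoder: multi-head self-attention, then the FFN in series."""
    attended = multi_head_self_attention(x, params["heads"], params["wo"])
    return feed_forward(attended, *params["ffn"])


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def make_params(d_model: int, n_heads: int, d_ff: int, rng: random.Random):
    """Hypothetical random weights: (Wq, Wk, Wv) per head, output projection, FFN weights."""
    d_k = d_model // n_heads
    heads = [tuple(random_matrix(d_model, d_k, rng) for _ in range(3)) for _ in range(n_heads)]
    return {
        "heads": heads,
        "wo": random_matrix(n_heads * d_k, d_model, rng),
        "ffn": (random_matrix(d_model, d_ff, rng), [0.0] * d_ff,
                random_matrix(d_ff, d_model, rng), [0.0] * d_model),
    }


if __name__ == "__main__":
    rng = random.Random(0)
    tokens = random_matrix(16, 8, rng)  # e.g. 16 search-image patches, 8 features each
    params = make_params(d_model=8, n_heads=2, d_ff=32, rng=rng)
    encoded = encode_search_image(tokens, params)
    print(len(encoded), len(encoded[0]))  # 16 8
```

Because every head sees the same input and their outputs are only combined after concatenation, the heads can be computed independently, which matches the "parallel connection of n self-attention networks" described above; the encoding keeps one output row per input patch.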

[0031]

[0032] Wherein X is the search imag...
