Gesture detection method and system based on space-time sequence diagram

A gesture detection technology based on a spatio-temporal sequence graph, applied in the field of computer vision recognition. It addresses problems of existing approaches, such as poor results and ignoring the connection between the two hands, that degrade detection and recognition, and it achieves a good clustering effect.


Examples

Embodiment 1

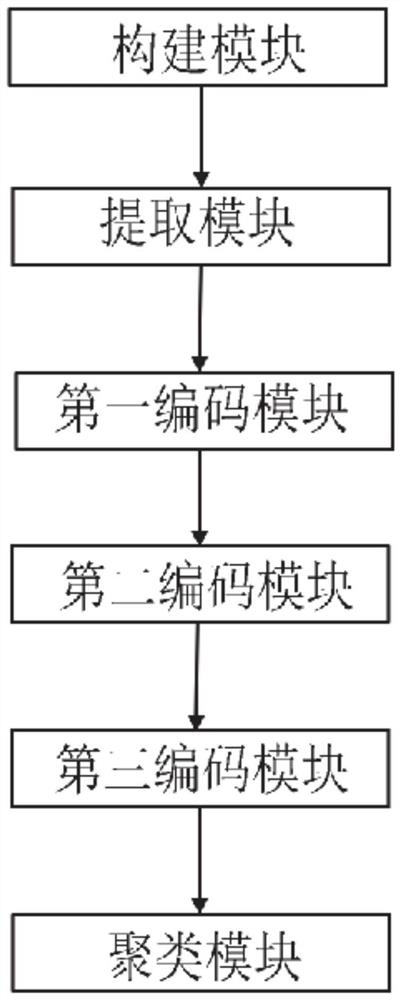

[0042] As shown in Figure 1, Embodiment 1 of the present invention provides a gesture detection system based on a spatio-temporal sequence graph. The system includes:

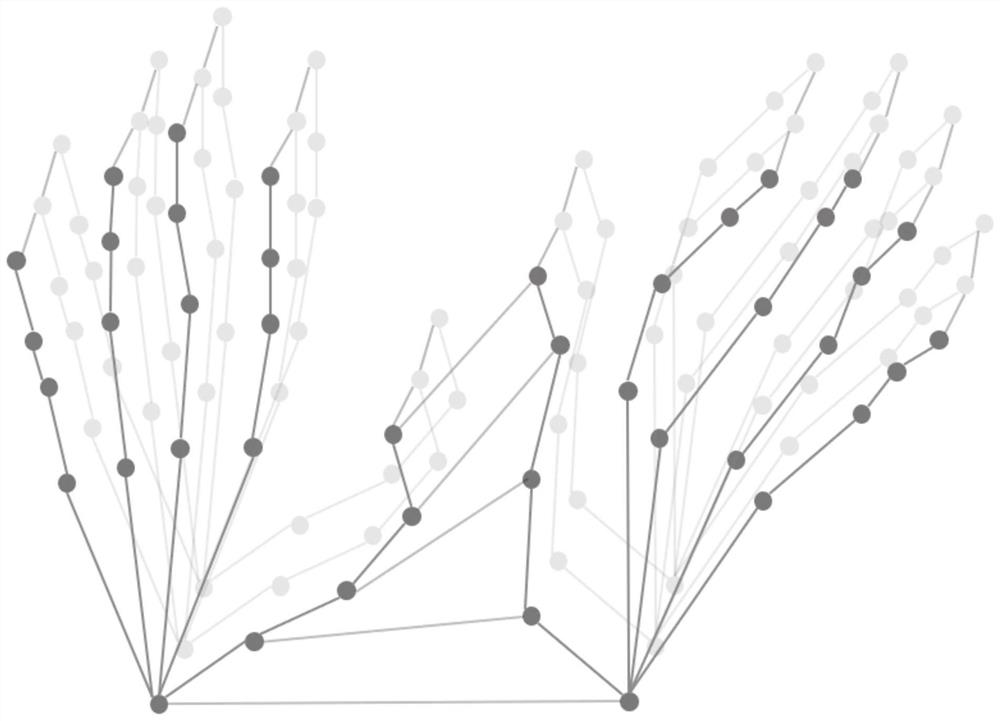

[0043] A construction module is used to construct a spatio-temporal sequence graph of the hand joint points;

[0044] An extraction module is used to extract the feature relationship between each joint point and its adjacent joint points;

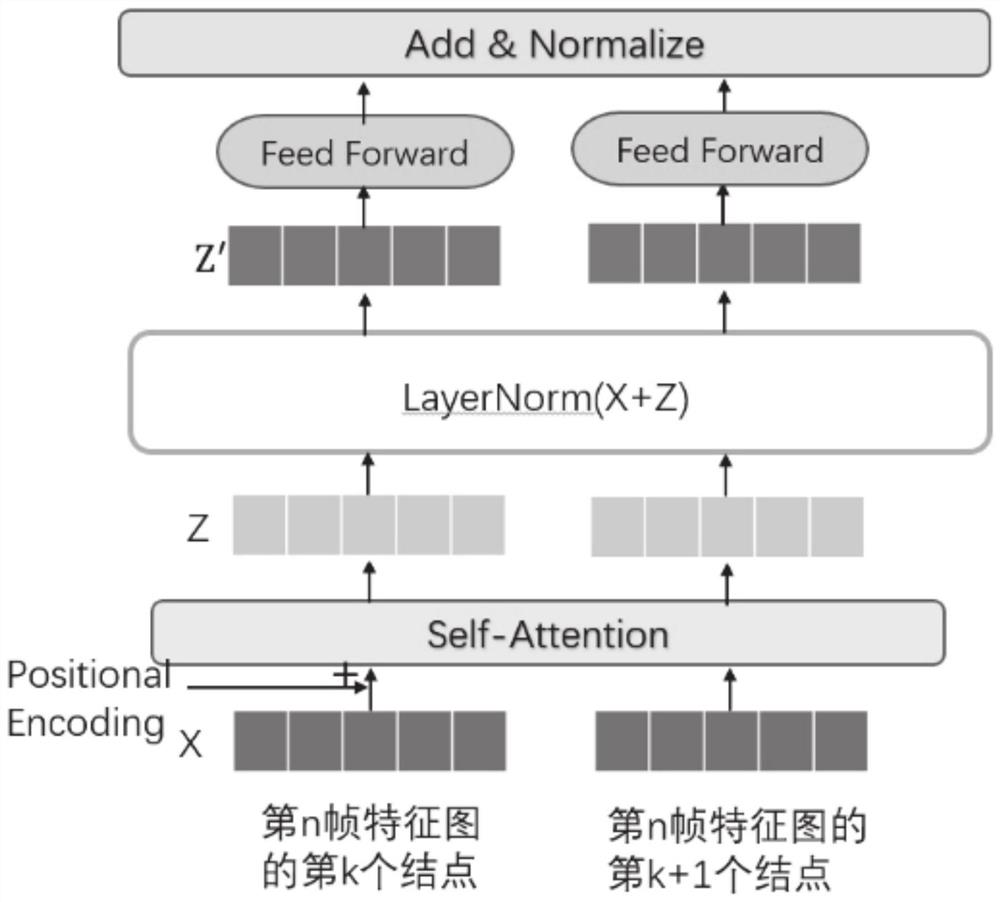

[0045] The first encoding module is used to perform a position encoding operation on the feature relationship to obtain a position encoding vector;

[0046] The second encoding module is used to combine the position encoding vector with the feature relationship and encode the result to obtain the action vector;

[0047] The third encoding module is used to perform time-series encoding on the action vector to obtain the space-time relationship vector between each joint point and the other joint points;

[0048] The clustering module is used for performing cluster analysis on the action vector and the spa...
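The construction and extraction modules in [0043] and [0044] can be illustrated with a minimal sketch. The text shown here does not specify the joint layout, the edge set, or the form of the feature relationship, so all of the following are assumptions: a common 21-keypoint hand layout (wrist plus four joints per finger), spatial edges along the bones, a hypothetical wrist-to-wrist edge linking the two hands (reflecting the summary's concern with the connection between the two hands), temporal edges joining each joint to itself in adjacent frames, and the relative 3D displacement to each same-frame neighbor standing in for the feature relationship. The names `build_st_graph` and `neighbor_relations` are illustrative only.

```python
from collections import defaultdict

# ASSUMPTION: common 21-keypoint hand layout, 0 = wrist, 4 joints per finger.
NUM_JOINTS = 21
HAND_BONES = [
    (0, 1), (1, 2), (2, 3), (3, 4),          # thumb
    (0, 5), (5, 6), (6, 7), (7, 8),          # index finger
    (0, 9), (9, 10), (10, 11), (11, 12),     # middle finger
    (0, 13), (13, 14), (14, 15), (15, 16),   # ring finger
    (0, 17), (17, 18), (18, 19), (19, 20),   # little finger
]


def build_st_graph(num_frames, num_hands=2):
    """Hypothetical spatio-temporal graph over nodes (frame, hand, joint).

    Returns an undirected adjacency dict: node -> set of neighbor nodes.
    """
    adj = defaultdict(set)

    def link(a, b):
        adj[a].add(b)
        adj[b].add(a)

    for t in range(num_frames):
        for h in range(num_hands):
            # Spatial edges: bones within one hand in one frame.
            for i, j in HAND_BONES:
                link((t, h, i), (t, h, j))
            # Temporal edges: the same joint in consecutive frames.
            if t > 0:
                for j in range(NUM_JOINTS):
                    link((t - 1, h, j), (t, h, j))
        # ASSUMPTION: one wrist-to-wrist edge models the two-hand connection.
        if num_hands == 2:
            link((t, 0, 0), (t, 1, 0))
    return dict(adj)


def neighbor_relations(adj, coords):
    """Feature relationship per node: displacement to each same-frame neighbor.

    coords maps (frame, hand, joint) -> (x, y, z).
    ASSUMPTION: relative displacement stands in for the feature relationship.
    """
    relations = {}
    for node, nbrs in adj.items():
        origin = coords[node]
        relations[node] = {
            nbr: tuple(b - a for a, b in zip(origin, coords[nbr]))
            for nbr in nbrs
            if nbr[0] == node[0]  # keep spatial neighbors, skip temporal ones
        }
    return relations
```

For example, `build_st_graph(3)` yields 126 nodes (3 frames, 2 hands, 21 joints) and 207 undirected edges: 120 bone edges, 3 wrist-to-wrist edges, and 84 temporal edges.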

Embodiment 2

[0074] Embodiment 2 of the present invention provides a non-transitory computer-readable storage medium that includes instructions for executing a gesture detection method based on a spatio-temporal sequence graph. The method includes:

[0075] Constructing the spatio-temporal sequence graph of the hand joint points and extracting the feature relationship between each joint point and its adjacent joint points; performing a position encoding operation on the feature relationship to obtain the position encoding vector;

[0076] Combining the position encoding vector with the feature relationship and encoding the result to obtain the action vector; performing time-series encoding on the action vector to obtain the space-time relationship vector between each joint point and the other joint points;

[0077] Performing cluster analysis on the action vector and the space-time relationship vector to classify and recognize gest...
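For the cluster analysis in [0077], the excerpt does not name the clustering algorithm. The sketch below assumes that each gesture sample is represented by concatenating its action vector and space-time relationship vector, and it uses plain k-means (Lloyd's algorithm) as a swapped-in clustering method; a new sample is then recognized by its nearest centroid. `fuse`, `kmeans`, and `predict` are hypothetical names, not part of the patent.

```python
import math
import random


def fuse(action_vec, st_vec):
    """ASSUMPTION: represent a sample by concatenating its two vectors."""
    return list(action_vec) + list(st_vec)


def kmeans(points, k, iters=100, seed=0):
    """Plain Lloyd's k-means (a swapped-in choice); returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, labels


def predict(centroids, sample):
    """Recognize a new sample by the index of its nearest centroid."""
    return min(range(len(centroids)), key=lambda c: math.dist(sample, centroids[c]))
```

Mapping cluster indices to gesture names would still require labeled examples, for instance by taking the majority label within each cluster.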

Embodiment 3

[0079] Embodiment 3 of the present invention provides an electronic device that includes a non-transitory computer-readable storage medium and one or more processors capable of executing the instructions stored on it. The non-transitory computer-readable storage medium includes instructions for performing a gesture detection method based on a spatio-temporal sequence graph. The method comprises:

[0080] Constructing the spatio-temporal sequence graph of the hand joint points and extracting the feature relationship between each joint point and its adjacent joint points; performing a position encoding operation on the feature relationship to obtain the position encoding vector;

[0081] Combining the position encoding vector with the feature relationship and encoding the result to obtain the action vector; performing time-series encoding on the action vector to obtain the space-time relationship vector between each joint point and ...
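The encoding steps in [0080] and [0081] (position encoding, combination into an action vector, and time-series encoding) are sketched below under stated assumptions, since the excerpt does not describe the encoders. A Transformer-style sinusoidal position encoding over joint indices, element-wise addition as the combination, an exponential moving average over frames as the time-series encoder, and cosine similarity as the joint-to-joint relationship are all stand-ins chosen for illustration, not the patented method; the function names are hypothetical.

```python
import math


def positional_encoding(pos, dim):
    """ASSUMPTION: Transformer-style sinusoidal encoding of joint index pos."""
    return [
        math.sin(pos / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(pos / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]


def action_vectors(features):
    """features[t][j]: feature-relationship vector of joint j at frame t.

    ASSUMPTION: the combination is element-wise addition of the encoding.
    """
    return [
        [
            [f + p for f, p in zip(feat, positional_encoding(j, len(feat)))]
            for j, feat in enumerate(frame)
        ]
        for frame in features
    ]


def temporal_encode(actions, alpha=0.5):
    """ASSUMPTION: exponential moving average over frames as the time-series encoder.

    Returns one summary vector per joint.
    """
    state = [list(vec) for vec in actions[0]]
    for frame in actions[1:]:
        state = [
            [alpha * x + (1 - alpha) * s for x, s in zip(vec, prev)]
            for vec, prev in zip(frame, state)
        ]
    return state


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def space_time_relations(encoded):
    """ASSUMPTION: relation of joint i to each other joint j = cosine similarity."""
    return [
        [cosine(encoded[i], encoded[j]) for j in range(len(encoded)) if j != i]
        for i in range(len(encoded))
    ]
```

The sketch only illustrates the data flow from feature relationships to action vectors to per-joint space-time relationship vectors; the encoders actually used in the patent may differ.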
