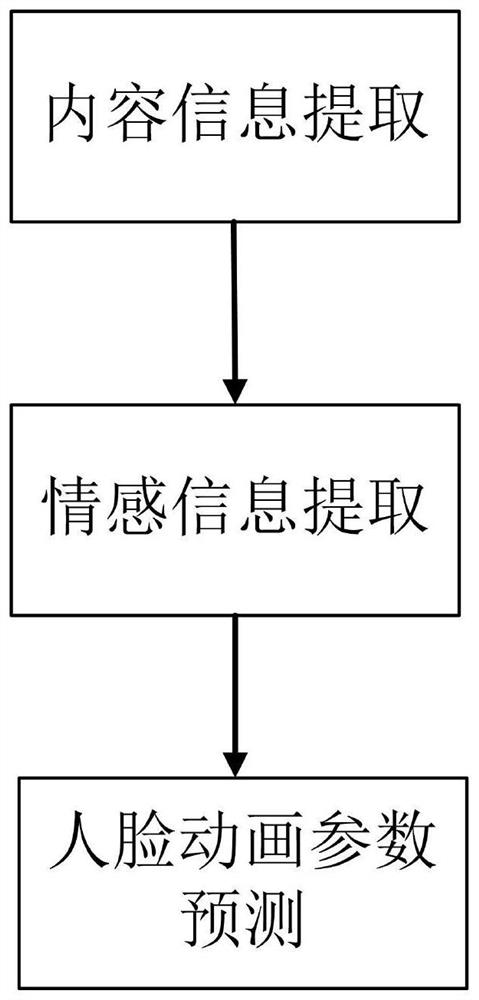

Virtual human animation synthesis method and system based on global emotion coding

An animation synthesis and virtual human technology, applied in voice analysis, voice recognition, instruments, and related fields. It addresses problems such as the inability to realize virtual human animation, the inability to automatically extract emotion from the input voice, and insufficient emotional control over the generated animation.

Embodiment Construction

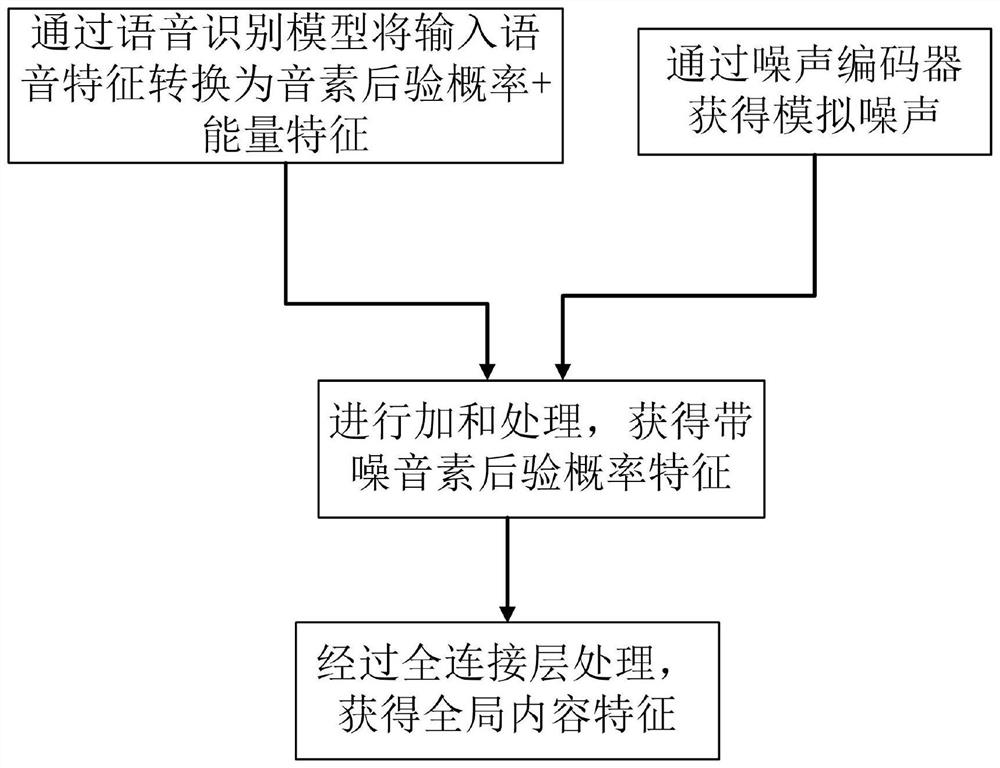

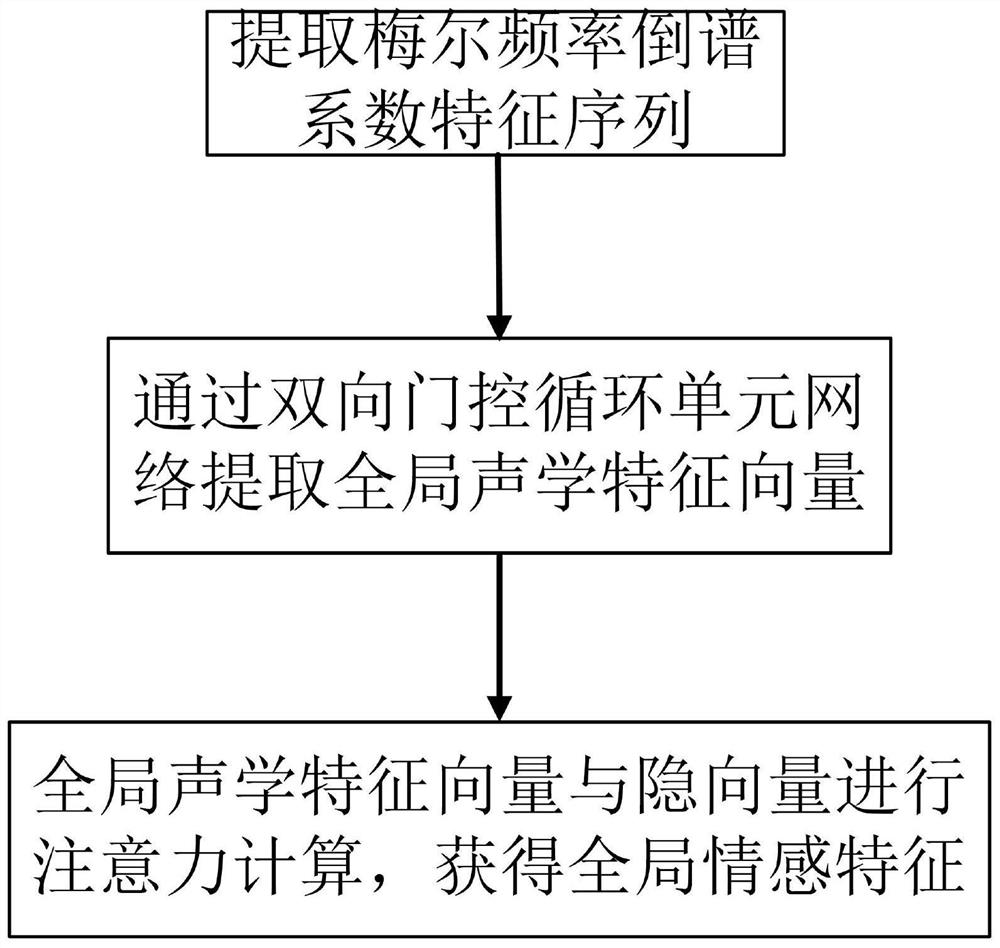

[0038] For a clearer understanding of the technical features, purposes, and effects of the present invention, specific embodiments of the invention are now described with reference to the accompanying drawings.

[0039] The description uses several acronyms for key terms, which are explained in advance here:

[0040] LSTM: Long Short-Term Memory, a long short-term memory network, which is a type of recurrent neural network (RNN);
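To make the recurrence concrete, the following is a minimal NumPy sketch of one standard LSTM time step. It is a textbook formulation, not the network described in this patent; the dimensions `D`, `H`, `T`, the gate ordering, and the random weights are assumptions chosen only for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) bias.
    Gate order in the stacked matrices (an assumption of this sketch):
    input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate
    f = sigmoid(z[H:2 * H])        # forget gate
    o = sigmoid(z[2 * H:3 * H])    # output gate
    g = np.tanh(z[3 * H:4 * H])    # candidate cell state
    c = f * c_prev + i * g         # new cell state (the long-term memory)
    h = o * np.tanh(c)             # new hidden state (the short-term output)
    return h, c

# Hypothetical sizes: 13-dimensional input frames, 8 hidden units, 5 time steps.
rng = np.random.default_rng(0)
D, H, T = 13, 8, 5
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h = np.zeros(H)
c = np.zeros(H)
for x in rng.normal(size=(T, D)):
    h, c = lstm_step(x, h, c, W, U, b)
```

The cell state `c` is carried forward additively, which is what lets the network retain information across many frames.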

[0041] MFCC: Mel Frequency Cepstral Coefficient, a feature commonly used in speech processing that mainly captures frequency-domain information of the speech signal;
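As an illustration of how such features are typically obtained, here is a minimal NumPy sketch of a generic MFCC pipeline (framing, windowing, power spectrum, mel filterbank, logarithm, DCT-II). The frame length, hop size, filter count, and the 440 Hz test tone are assumptions for the example, not values taken from this patent, and pre-emphasis and liftering are omitted for brevity.

```python
import numpy as np

def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters whose centres are evenly spaced on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    bank = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, centre, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, centre):      # rising edge of the triangle
            bank[m - 1, k] = (k - left) / (centre - left)
        for k in range(centre, right):     # falling edge of the triangle
            bank[m - 1, k] = (right - k) / (right - centre)
    return bank

def mfcc(signal, sample_rate=16000, frame_len=400, hop=160,
         n_fft=512, n_filters=26, n_coeffs=13):
    """Return an array of shape (n_frames, n_coeffs)."""
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    bank = mel_filterbank(n_filters, n_fft, sample_rate)
    log_mel = np.log(power @ bank.T + 1e-10)   # small offset avoids log(0)
    # DCT-II basis decorrelates the log mel energies into cepstral coefficients.
    n = np.arange(n_filters)
    basis = np.cos(np.pi * np.arange(n_coeffs)[:, None] * (2 * n + 1) / (2 * n_filters))
    return log_mel @ basis.T

# Hypothetical input: one second of a 440 Hz tone sampled at 16 kHz.
t = np.arange(16000) / 16000.0
features = mfcc(np.sin(2 * np.pi * 440.0 * t))
```

Each row of `features` describes one 25 ms frame, so a sequence of rows can be fed frame by frame to a recurrent model.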

[0042] PPG: Phonetic Posteriorgram, that is, the posterior probabilities of the phonemes; it is an intermediate representation of a speech recognition result, giving for each frame of speech the posterior probability that the frame belongs to each phoneme;
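The shape of a PPG can be shown with a small NumPy sketch: a matrix with one row per speech frame and one column per phoneme, where each row is a probability distribution. The phoneme inventory and the per-frame scores below are hypothetical; in practice the scores would come from the acoustic model of a speech recognizer.

```python
import numpy as np

def frame_posteriors(logits):
    """Turn per-frame phoneme scores (frames x phonemes) into a PPG via softmax."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # stabilise the exponentials
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

# Hypothetical phoneme set and scores for 4 frames (illustrative values only).
PHONEMES = ["a", "s", "sil"]
logits = np.array([[ 2.0, 0.1, -1.0],
                   [ 1.5, 1.4, -0.5],
                   [ 0.0, 3.0,  0.2],
                   [-1.0, 0.0,  2.5]])

ppg = frame_posteriors(logits)                        # shape: (frames, phonemes)
best = [PHONEMES[i] for i in ppg.argmax(axis=1)]      # most likely phoneme per frame
```

Keeping the full distribution, rather than only the most likely phoneme, preserves the recognizer's uncertainty for each frame.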

[0043] GRU: Gated Recurrent Unit, a ...
