259 results about "Cepstrum coefficients" patented technology

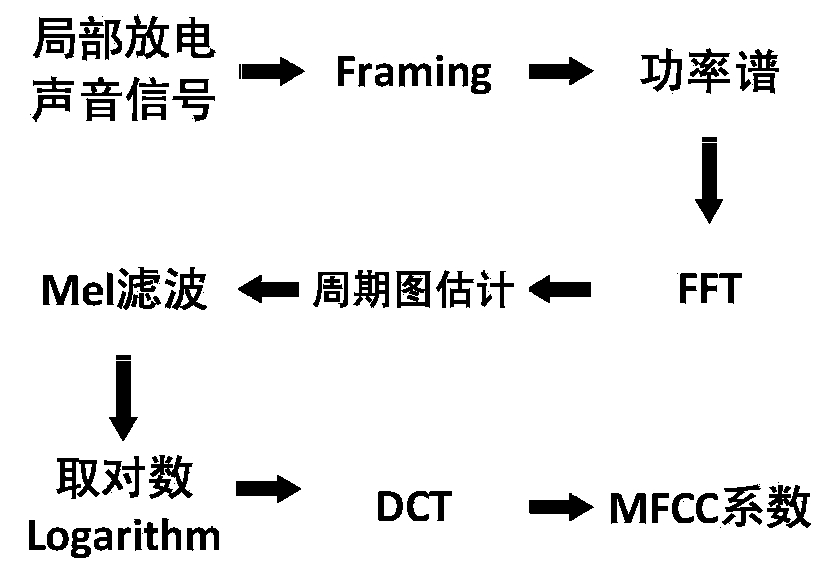

The cepstral coefficients are the coefficients of the Fourier transform representation of the logarithm magnitude spectrum.
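As a minimal sketch of this definition (not taken from any of the listed patents), the real cepstrum of one frame can be computed with NumPy. The function name `real_cepstrum` and the `eps` floor are illustrative assumptions. The inverse FFT is used here; for a real input frame the log magnitude spectrum is real and even, so the forward transform would differ only by a scale factor.

```python
import numpy as np

def real_cepstrum(frame, eps=1e-12):
    """Real cepstrum: inverse FFT of the log magnitude spectrum of one frame."""
    spectrum = np.fft.fft(frame)
    # eps is an assumed floor that avoids log(0) for empty frequency bins
    log_magnitude = np.log(np.abs(spectrum) + eps)
    return np.fft.ifft(log_magnitude).real

# Illustrative input: a Hamming-windowed frame of white noise
rng = np.random.default_rng(0)
frame = rng.standard_normal(256) * np.hamming(256)
cepstrum = real_cepstrum(frame)
```

The low-index coefficients describe the smooth spectral envelope, which is why many of the methods listed here keep only the first handful of coefficients as features.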

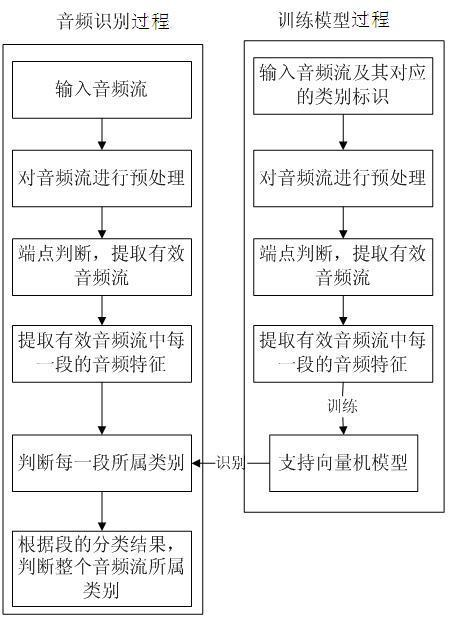

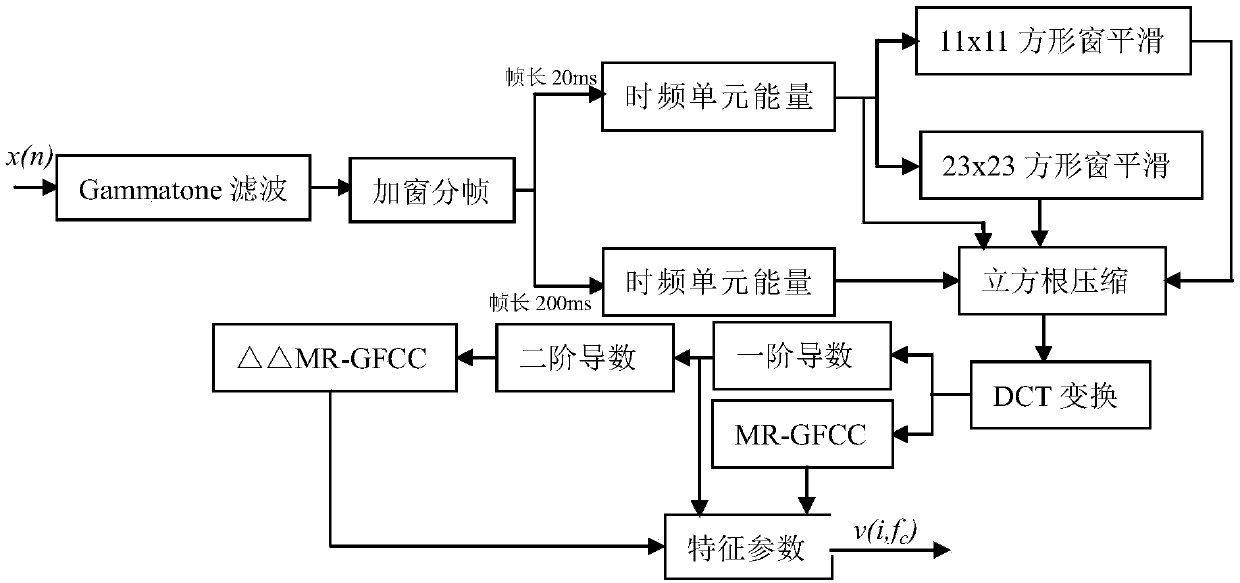

Method for identifying local discharge signals of switchboard based on support vector machine model

Inactive | CN102426835A | Guaranteed reliability | Ensure safety | Speech recognition | Zero-crossing rate | Feature parameter

The invention discloses a method for identifying partial discharge signals of a switchboard based on a support vector machine (SVM) model. The method comprises a model training process and an audio identification process, with the following steps: preprocessing the audio signals; extracting the effective audio according to short-time energy and zero-crossing rate; segmenting the effective audio and extracting characteristic parameters from each segment, such as Mel cepstrum coefficients, first-order difference Mel cepstrum coefficients and high zero-crossing rate; training on a sample set with a support vector machine tool to establish the corresponding SVM model; after preprocessing the audio signals to be identified and extracting and segmenting the effective audio, classifying the segment-feature-based test samples with the SVM model; and post-processing the classification results to judge whether partial discharge signals exist. The method accurately identifies the presence of partial discharge signals in the switchboard, helps prevent major electrical accidents, reduces economic losses caused by insulation failures, and improves power distribution reliability.

Owner:SOUTH CHINA UNIV OF TECH
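The effective-audio extraction step in the abstract above relies on short-time energy and zero-crossing rate. The sketch below shows one common way to compute both per frame; it is not the patented procedure. The helper names, the frame length, and the energy threshold (10% of the maximum) are illustrative assumptions.

```python
import numpy as np

def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into frames (one frame per row)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]

def short_time_energy(frames):
    """Sum of squared samples in each frame."""
    return np.sum(frames ** 2, axis=1)

def zero_crossing_rate(frames):
    """Fraction of adjacent sample pairs whose signs differ, per frame."""
    signs = np.sign(frames)
    signs[signs == 0] = 1  # treat exact zeros as positive
    return np.mean(signs[:, 1:] != signs[:, :-1], axis=1)

# Synthetic test signal: 400 samples of silence followed by a sine tone
t = np.arange(800)
x = np.where(t >= 400, np.sin(2 * np.pi * 0.05 * t), 0.0)

frames = frame_signal(x, frame_len=100, hop=100)
energy = short_time_energy(frames)
zcr = zero_crossing_rate(frames)

# Assumed rule: a frame is "effective" if its energy exceeds 10% of the maximum
active = energy > 0.1 * energy.max()
```

In practice the energy and zero-crossing thresholds would be tuned on recorded data, and the two measures are usually combined rather than using energy alone.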

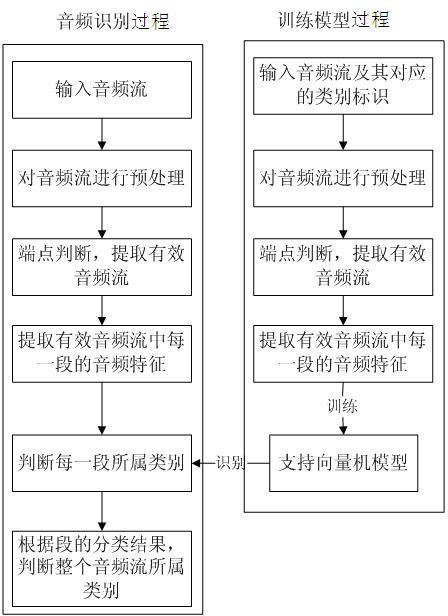

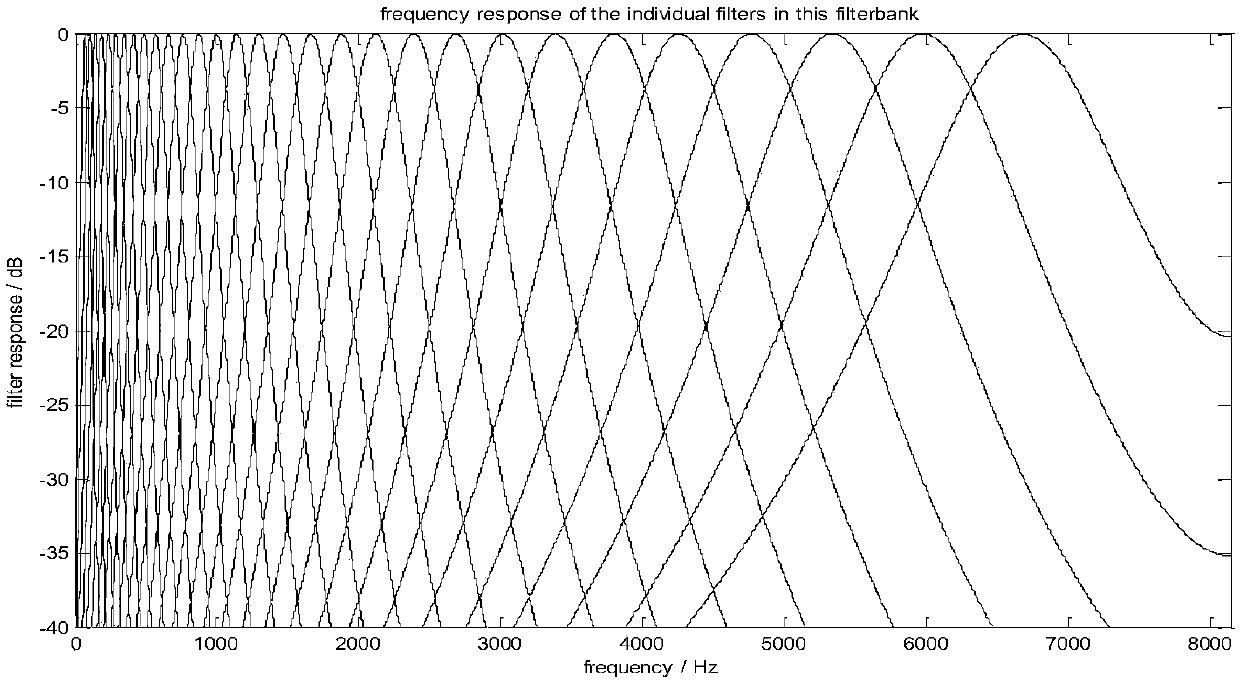

Voice enhancing method based on multiresolution auditory cepstrum coefficient and deep convolutional neural network

Active | CN107845389A | Reduce complexity | Compatible with auditory perception characteristics | Speech recognition | Masking threshold | Human ear

The invention discloses a voice enhancing method based on multiresolution auditory cepstrum coefficients and a deep convolutional neural network. The method comprises the following steps: first, establishing new characteristic parameters, namely multiresolution auditory cepstrum coefficients (MR-GFCC), capable of distinguishing voice from noise; second, establishing a self-adaptive masking threshold based on the ideal ratio mask (IRM, a soft mask) and the ideal binary mask (IBM) according to noise variations; third, training an established seven-layer neural network, using the newly extracted characteristic parameters and their first and second derivatives as the input and the self-adaptive masking threshold as the output of the deep convolutional neural network (DCNN); and finally, enhancing the noisy voice by using the self-adaptive masking threshold estimated by the DCNN. The method makes full use of the working mechanism of the human ear and adopts voice characteristic parameters that simulate a physiological model of human hearing, so that a relatively large amount of voice information is retained while the extraction process remains simple and feasible.

Owner:BEIJING UNIV OF TECH
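The two masks named in the abstract above have widely used textbook definitions, sketched below for a small time-frequency grid. How the patent combines them into a self-adaptive threshold is not stated in the abstract, so that step is not shown. The function names, the exponent `beta=0.5`, and the 0 dB local criterion are assumptions.

```python
import numpy as np

def ideal_ratio_mask(speech_power, noise_power, beta=0.5, eps=1e-12):
    """Soft mask in [0, 1]: (S / (S + N)) ** beta per time-frequency unit."""
    return (speech_power / (speech_power + noise_power + eps)) ** beta

def ideal_binary_mask(speech_power, noise_power, lc_db=0.0, eps=1e-12):
    """Hard mask: 1 where the local SNR exceeds lc_db, else 0."""
    snr_db = 10.0 * np.log10((speech_power + eps) / (noise_power + eps))
    return (snr_db > lc_db).astype(float)

# Toy 2x2 grid of speech and noise power (time x frequency)
speech_power = np.array([[4.0, 1.0], [0.25, 9.0]])
noise_power = np.ones((2, 2))

irm = ideal_ratio_mask(speech_power, noise_power)
ibm = ideal_binary_mask(speech_power, noise_power)
```

Both masks require the clean speech and noise to be known separately, so they serve as training targets; at test time the network has to estimate the mask from the noisy features alone.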

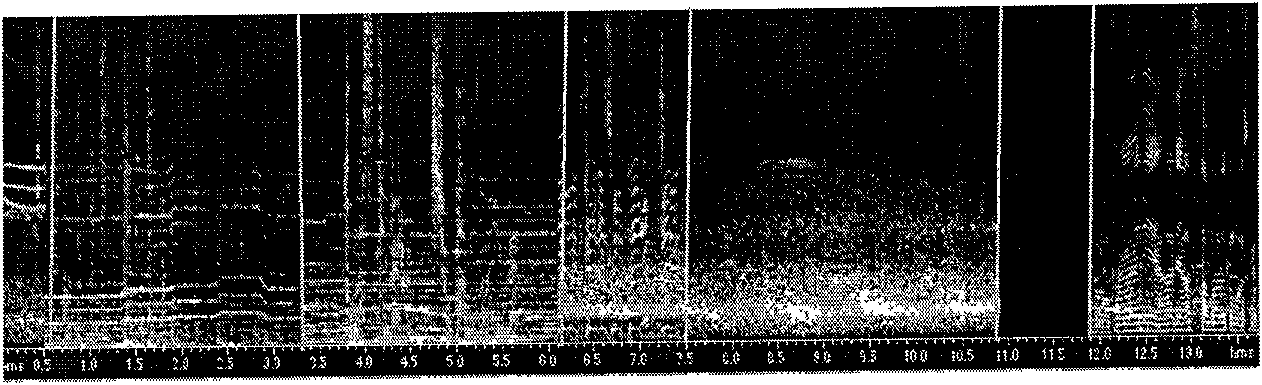

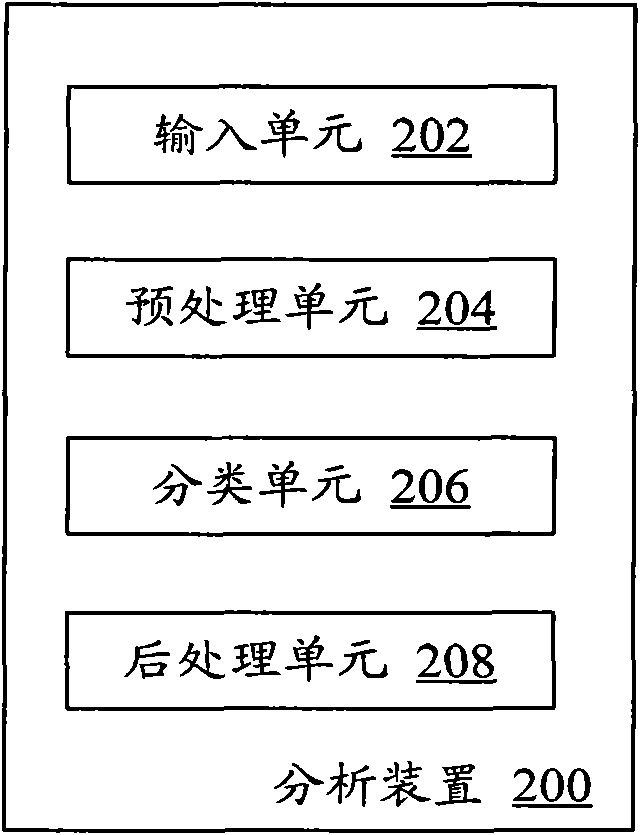

Device and method for analyzing audio data

Inactive | CN101685446A | Quick search | Shorten the time | Speech analysis | Special data processing applications | Tone Frequency | Zero-crossing rate

The invention provides a device for analyzing audio data by using an SVM method. The device comprises an input unit, a preprocessing unit, a classifying unit and a post-processing unit, wherein the input unit is used for inputting an audio stream; the preprocessing unit preprocesses the audio stream to obtain the characteristic parameters of each frame; the classifying unit determines the category to which each frame belongs according to the characteristic parameters; and the post-processing unit post-processes the classification result of the classifying unit to obtain the final segmentation result. The characteristic parameters comprise short-time average energy, subband energy, zero-crossing rate, Mel frequency cepstrum coefficients, delta Mel frequency cepstrum coefficients, spectrum flux and fundamental (pitch) frequency. The invention enables quick retrieval of highlight content, saving viewers' time and meeting their viewing demands.

Owner:SONY CHINA
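Delta (first-order difference) cepstrum coefficients, listed among the features above, are commonly computed with a regression over neighbouring frames. The sketch below uses that standard formula; the abstract does not say which variant the device uses. The function name `delta`, the window half-width of 2, and the edge padding are assumptions.

```python
import numpy as np

def delta(features, width=2):
    """Regression-based delta over frames.

    features: array of shape (n_frames, n_coeffs).
    d[t] = sum_n n * (c[t+n] - c[t-n]) / (2 * sum_n n^2), n = 1..width
    """
    n_frames = features.shape[0]
    # Repeat the first and last frames so every frame has neighbours
    padded = np.pad(features, ((width, width), (0, 0)), mode="edge")
    denom = 2 * sum(n * n for n in range(1, width + 1))
    out = np.zeros(features.shape, dtype=float)
    for n in range(1, width + 1):
        ahead = padded[width + n : width + n + n_frames]
        behind = padded[width - n : width - n + n_frames]
        out += n * (ahead - behind)
    return out / denom

# Toy "MFCC" matrix whose columns grow linearly with slopes 1 and 2
mfcc = np.arange(6.0)[:, None] * np.array([1.0, 2.0])
d_mfcc = delta(mfcc)
```

For frames far enough from the edges, a linearly increasing coefficient yields a delta equal to its slope, which is a quick sanity check on the implementation.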

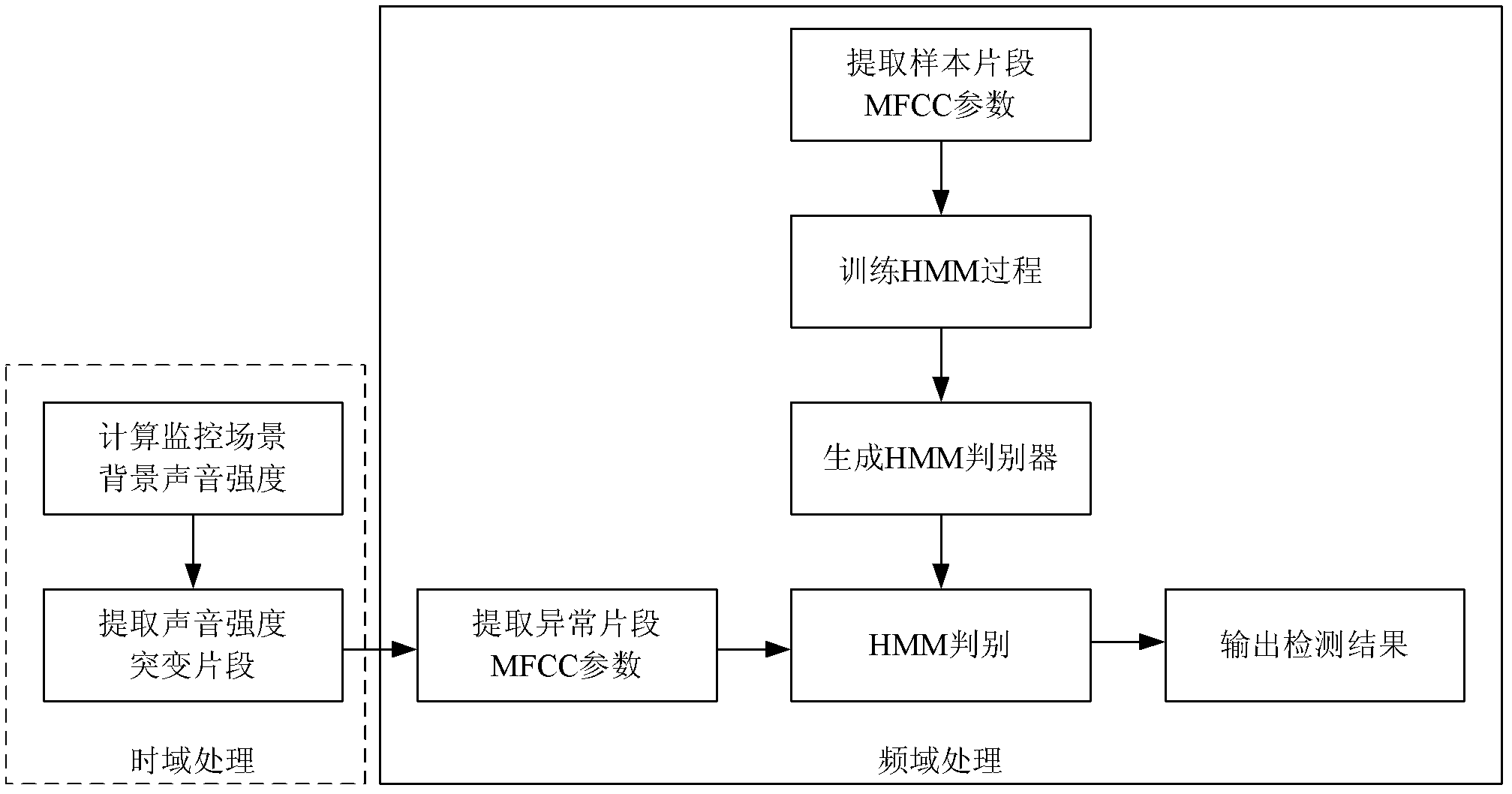

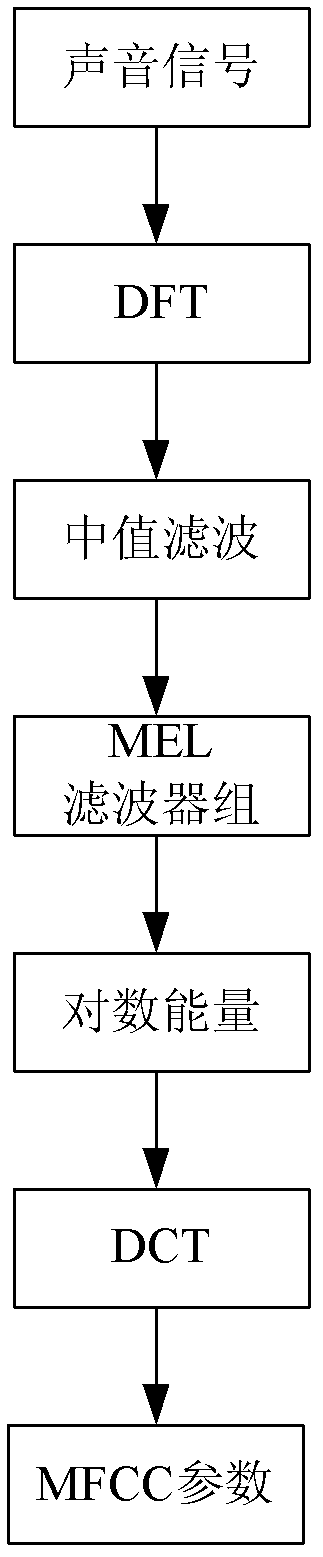

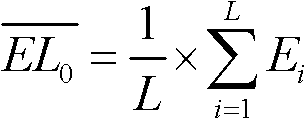

Abnormal voice detecting method based on time-domain and frequency-domain analysis

Active | CN102664006A | Increase flexibility | Improve noise immunity | Speech recognition | Time domain | Mel-frequency cepstrum

The invention relates to an abnormal voice detecting method based on time-domain and frequency-domain analysis. The method first computes the background sound intensity of a monitored scene, updated in real time, and detects and extracts the fragments in which the sound intensity changes suddenly; it then extracts uniform-filter Mel frequency cepstrum coefficients from those fragments; finally, it uses the extracted Mel frequency cepstrum coefficients of the abnormal fragments as observation sequences, feeds them into a trained modified hidden Markov model, and determines whether the abnormal fragments are abnormal voice according to the frequency characteristics of voice. Adding the hidden Markov model improves time-sequence correlation. By combining time-domain extraction of frames with sudden energy changes with verification in the frequency domain, the method detects abnormal voice effectively, with good real-time performance, high noise resistance and good robustness.

Owner:NAT UNIV OF DEFENSE TECH

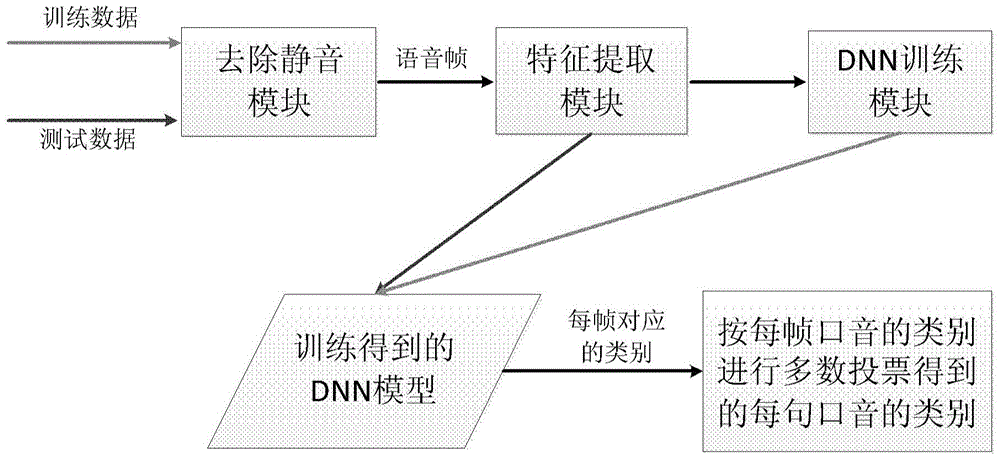

Deep-learning-technology-based automatic accent classification method and apparatus

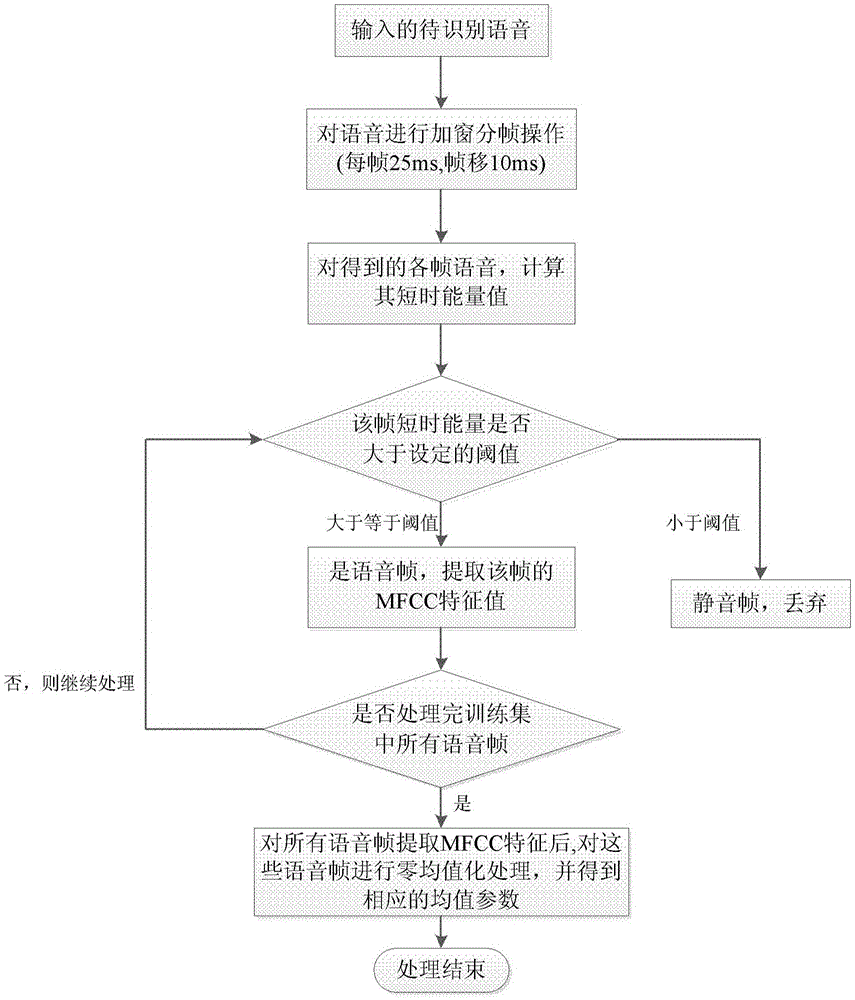

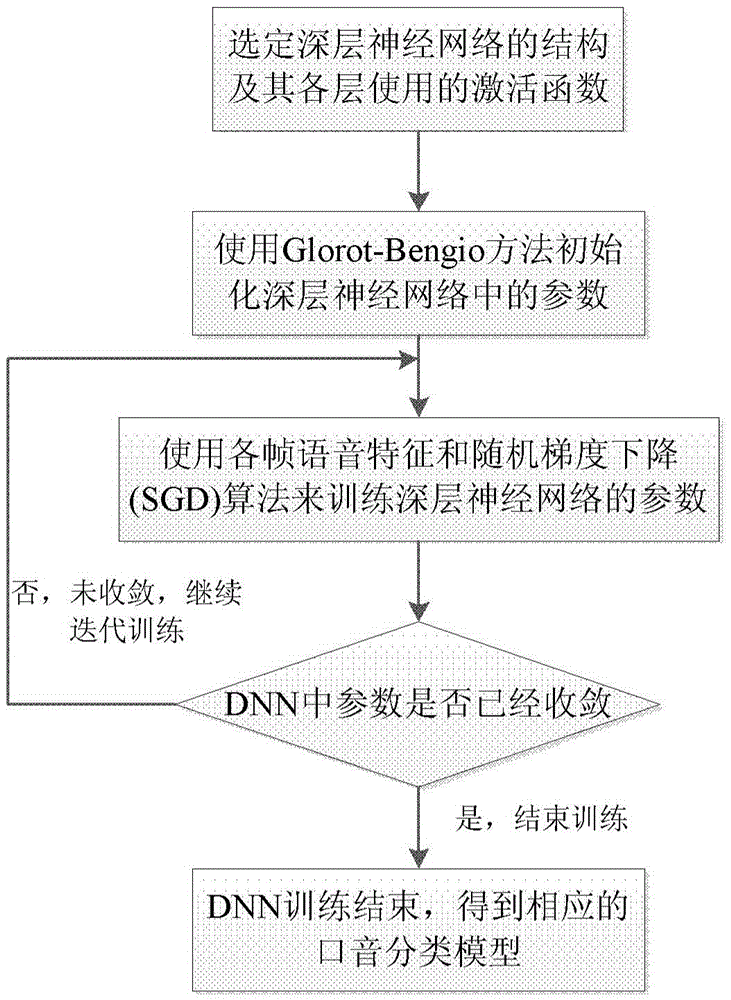

Inactive | CN105632501A | Improve performance | Improve classification effect | Speech recognition | Hidden layer | Feature extraction

The invention discloses a deep-learning-technology-based automatic accent classification method and apparatus. The method comprises: removing silence from all accent voices in a training set and extracting mel-frequency cepstrum coefficient (MFCC) features; training deep neural networks on the extracted MFCC features to describe the acoustic characteristics of the various accent voices, wherein the deep neural networks are feed-forward artificial neural networks with at least two hidden layers; calculating the probability scores of every voice frame of a to-be-identified voice for all accent classes in the deep neural networks, and assigning each voice frame the accent class with the largest probability score; and carrying out majority voting over the accent classes of the voice frames to obtain the accent class of the to-be-identified voice. According to the invention, context information can be utilized effectively, and a better classification effect than that of a traditional shallow model can thus be provided.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +2
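The frame-level decision and majority vote described in the abstract above can be sketched in a few lines. The score matrix here is made up for illustration and the function name `classify_utterance` is an assumption; in the patented method the scores would come from the trained deep neural networks.

```python
import numpy as np

def classify_utterance(frame_scores):
    """Pick the best class per frame, then majority-vote over frames.

    frame_scores: array of shape (n_frames, n_classes) of probability scores.
    Returns (utterance_label, frame_labels).
    """
    frame_labels = np.argmax(frame_scores, axis=1)
    votes = np.bincount(frame_labels, minlength=frame_scores.shape[1])
    return int(np.argmax(votes)), frame_labels

# Hypothetical scores for 5 frames over 3 accent classes
frame_scores = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.6, 0.3],
    [0.5, 0.4, 0.1],
    [0.2, 0.3, 0.5],
    [0.6, 0.3, 0.1],
])
utterance_label, frame_labels = classify_utterance(frame_scores)
```

Note that `np.argmax` breaks ties in favour of the lowest class index; a real system might instead sum log-probabilities across frames to avoid ties.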

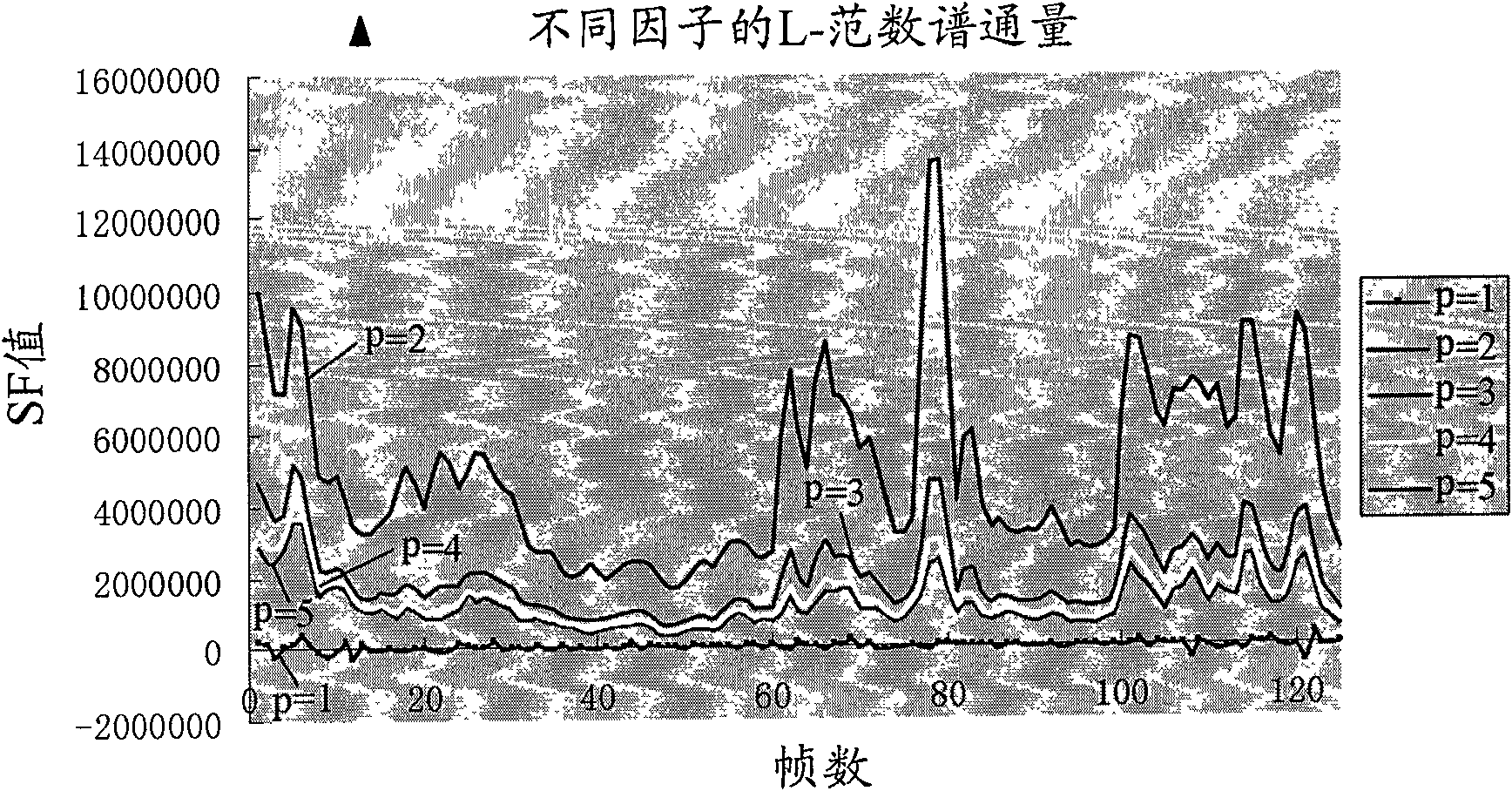

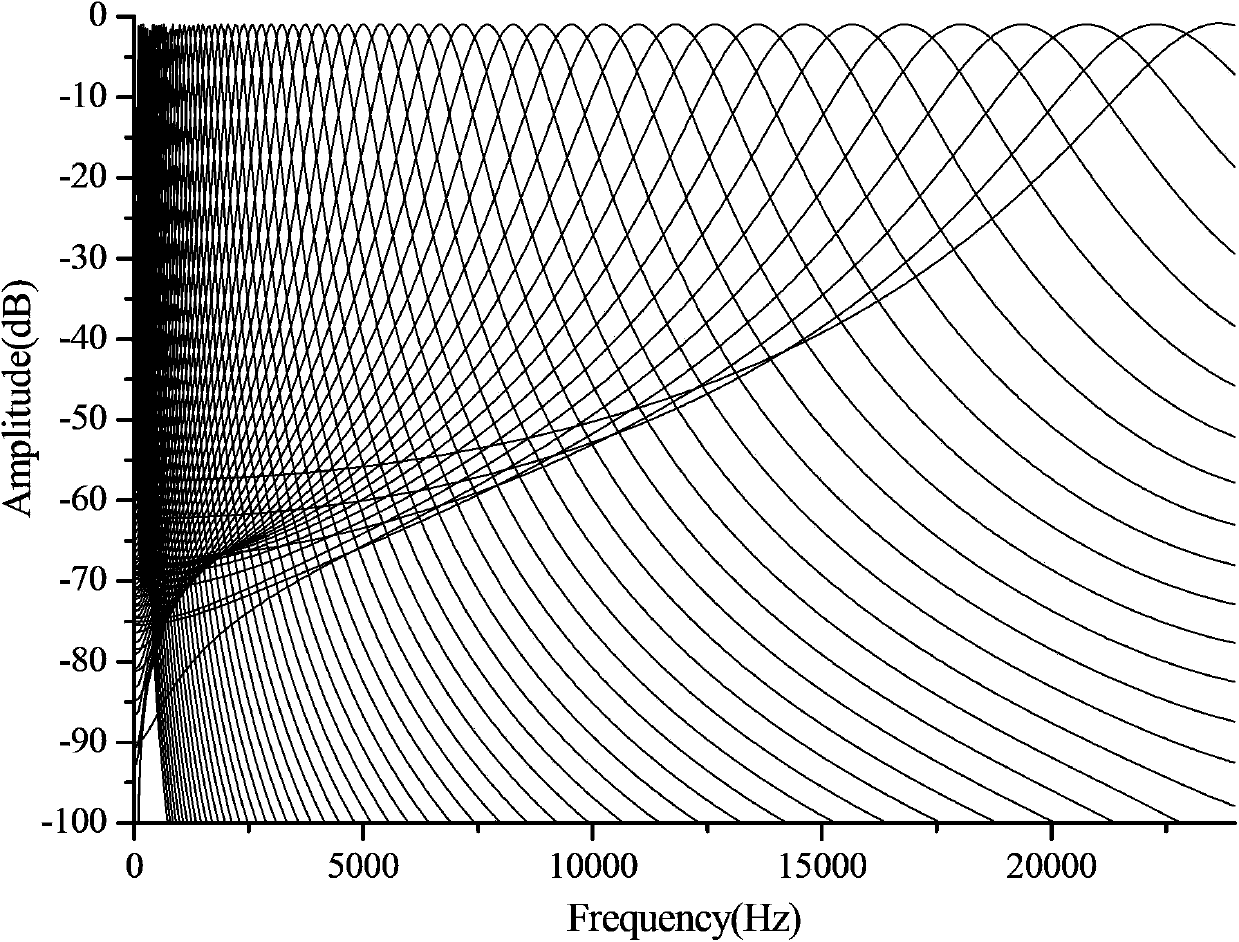

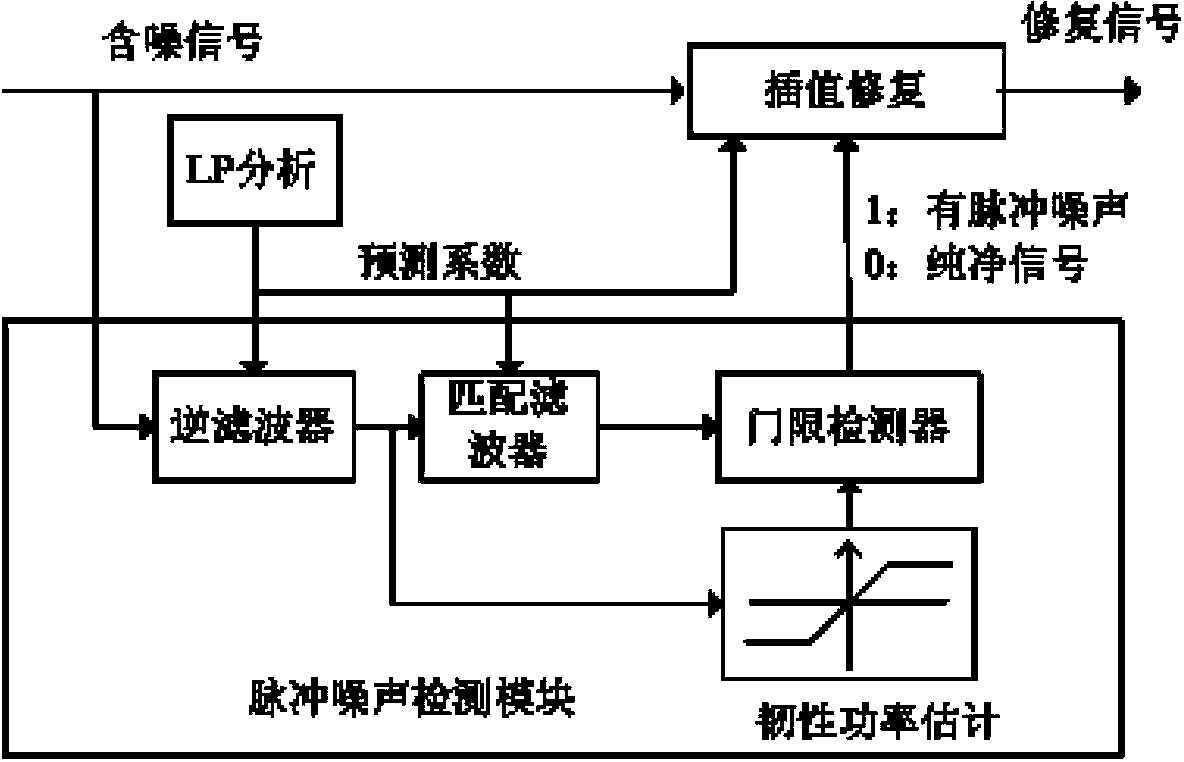

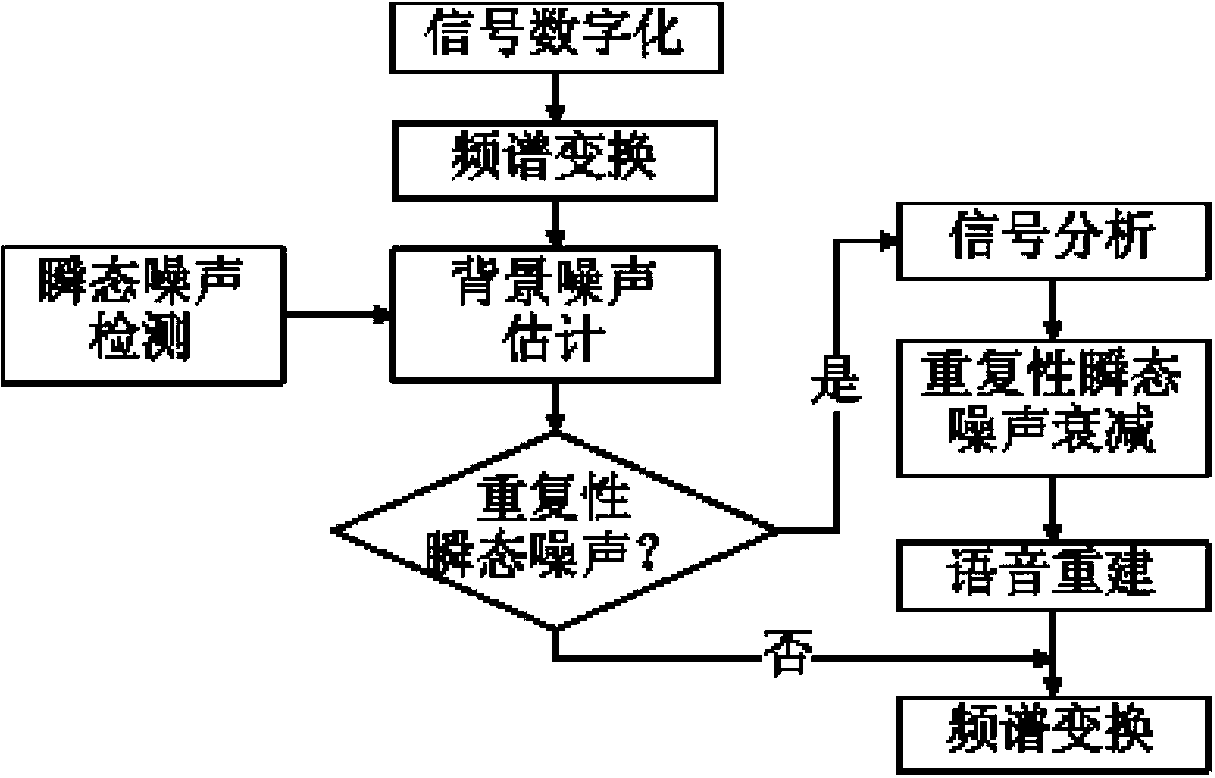

Method for suppressing transient noise in voice

The invention discloses a method for suppressing transient noise in voice, and belongs to the technical field of signal processing. The method is characterized by comprising a gammatone frequency cepstrum coefficient extraction module, a transient noise detection module and a voice signal reconstruction module, wherein the input end of the gammatone frequency cepstrum coefficient extraction module receives a voice signal containing noise, the output end of the gammatone frequency cepstrum coefficient extraction module is connected with the input end of the transient noise detection module, the output end of the transient noise detection module is connected with the input end of the voice signal reconstruction module, the input end of the voice signal reconstruction module also receives the voice signal containing noise, and the voice signal reconstruction module outputs the voice with the noise removed.

Owner:DALIAN UNIV OF TECH

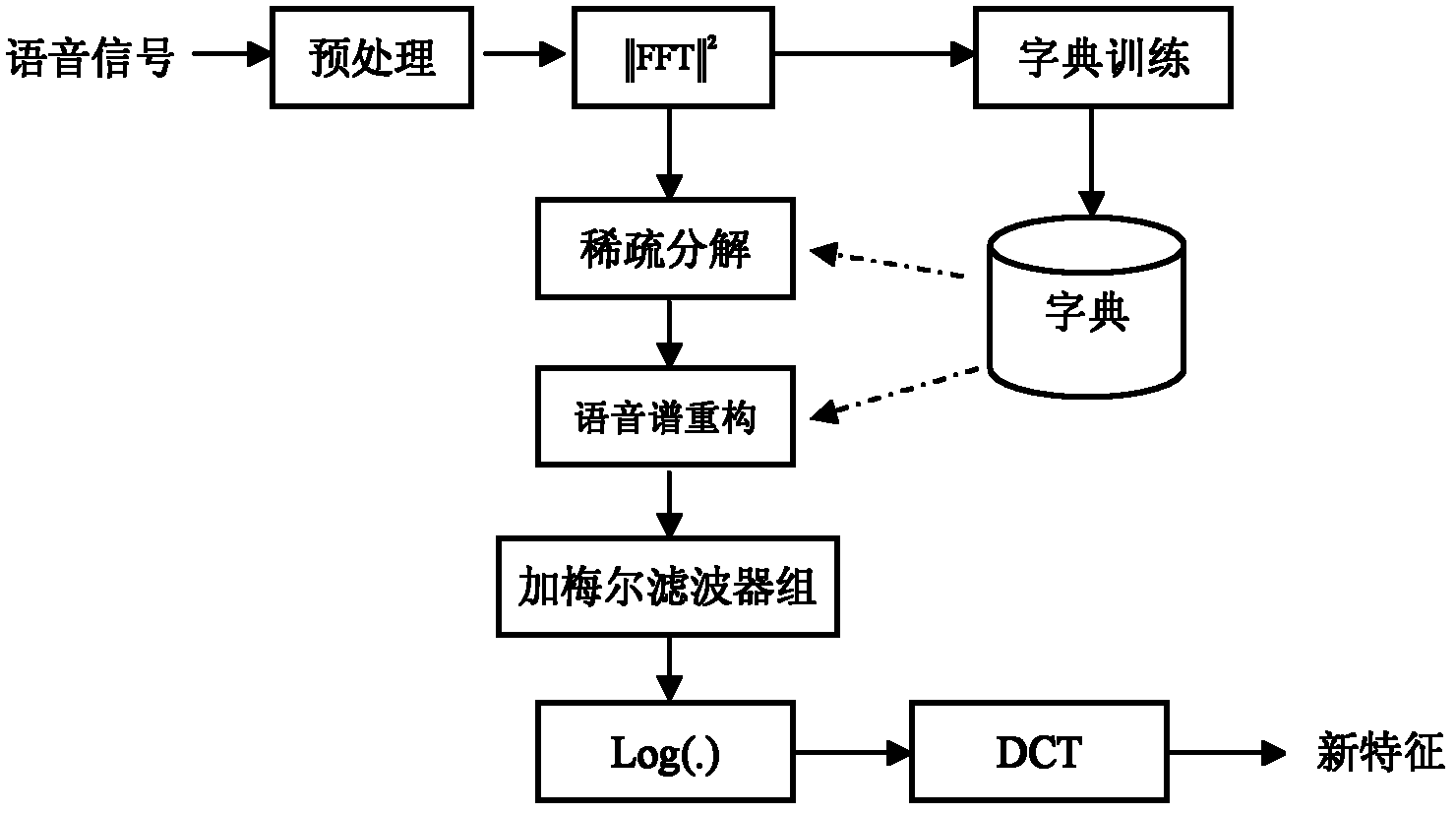

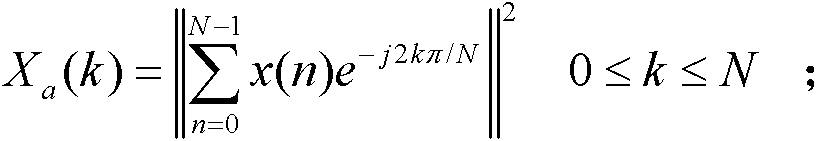

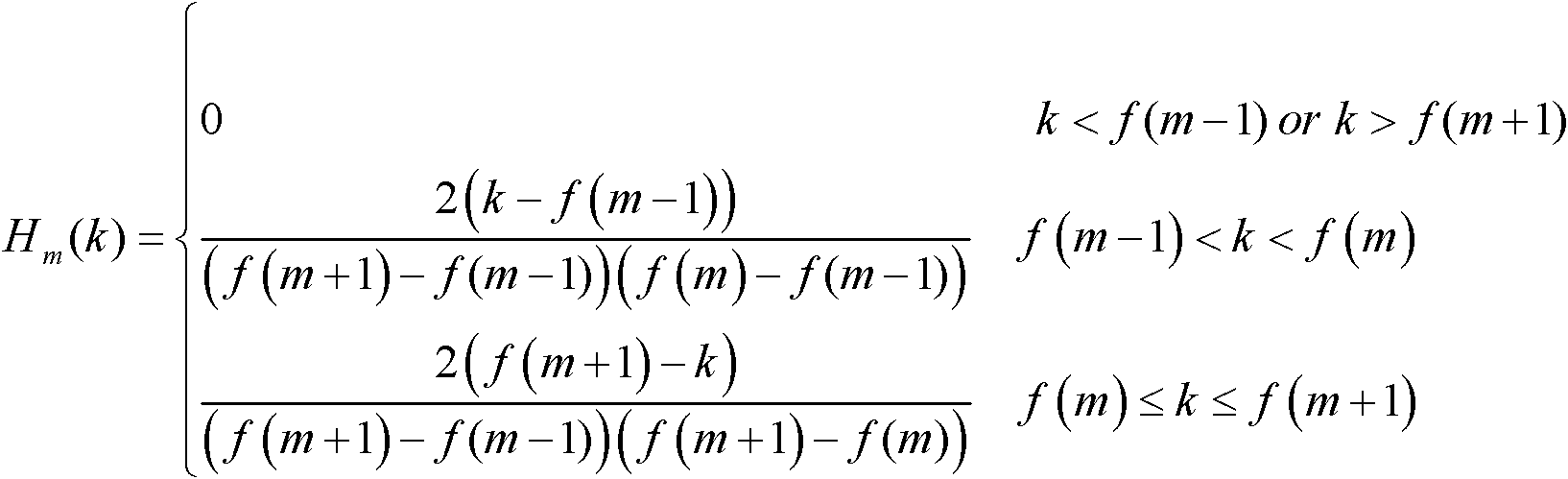

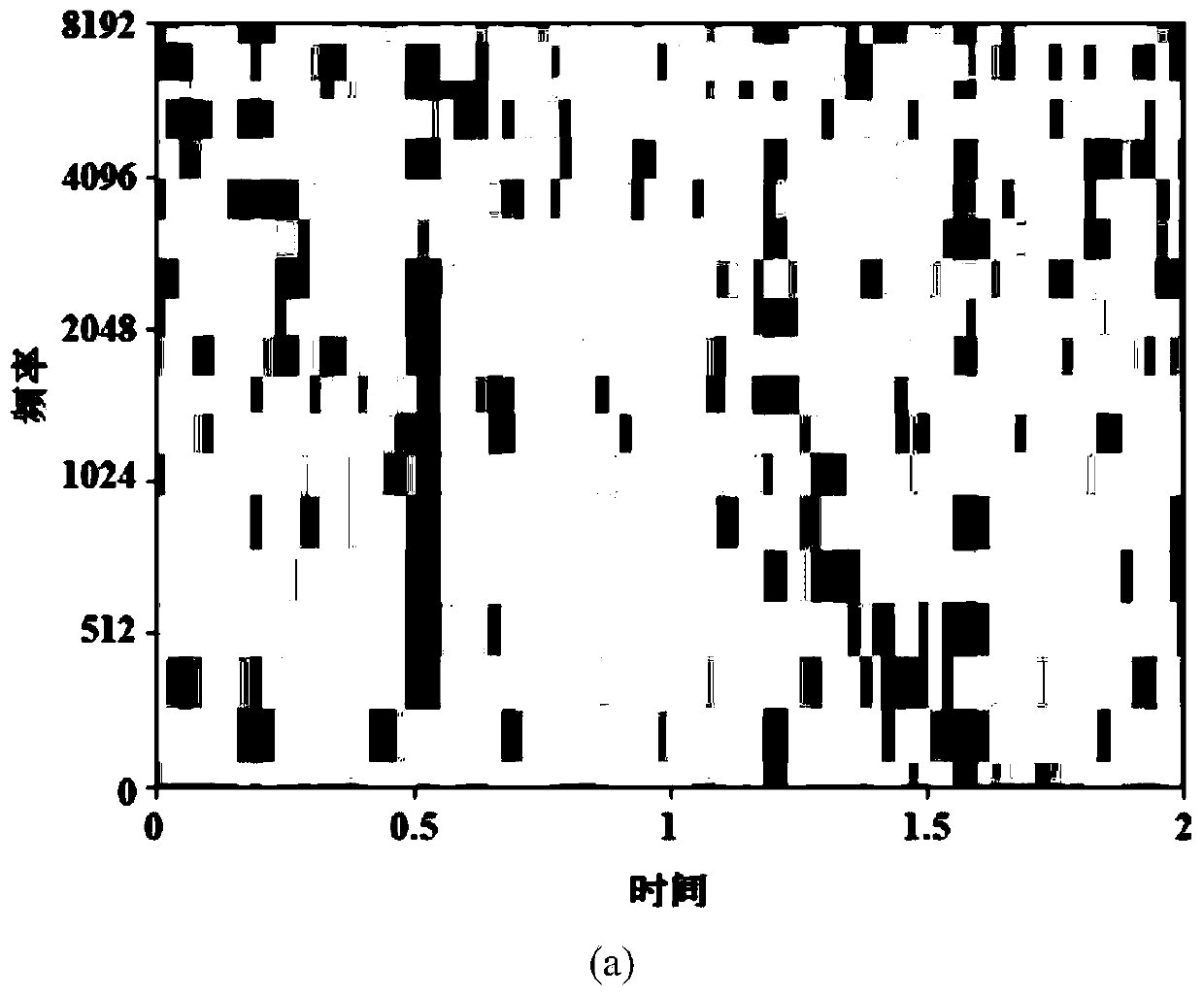

A Robust Speech Feature Extraction Method Based on Sparse Decomposition and Reconstruction

The invention discloses a robust speech feature extraction method based on sparse decomposition and reconstruction. The method addresses the following problems: 1, selecting an atom dictionary has high time complexity, and the resulting dictionary rarely satisfies sparsity after signal projection; 2, sparse decomposition of signals gives little consideration to the temporal correlation of speech signals and noise signals; and 3, signal reconstruction ignores the prior probability of the atoms and the transitions among them. The method comprises the following steps: step 1, preprocessing; step 2, conducting a discrete Fourier transform and computing the power spectrum; step 3, training and storing the atom dictionary; step 4, conducting sparse decomposition; step 5, reconstructing the speech spectrum; step 6, applying a Mel triangular filter bank and taking the logarithm; and step 7, obtaining a sparse splicing of the Mel cepstrum coefficients and the Mel cepstrum to form the robust feature. The method is used in the field of multimedia information processing.

Owner:哈尔滨工业大学高新技术开发总公司

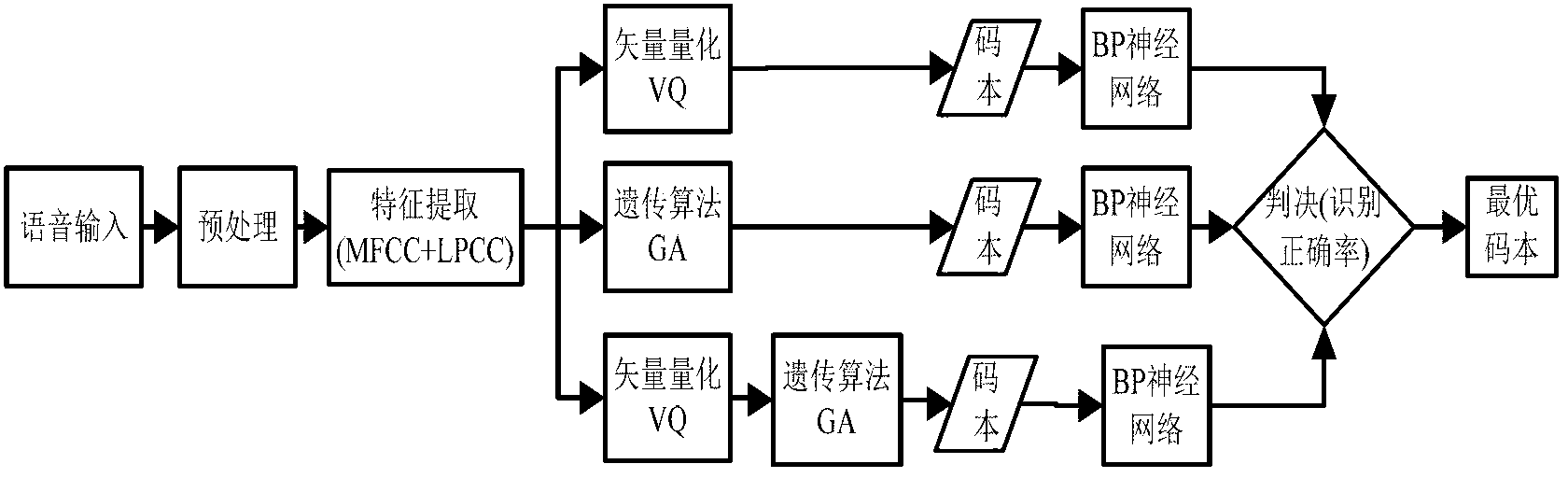

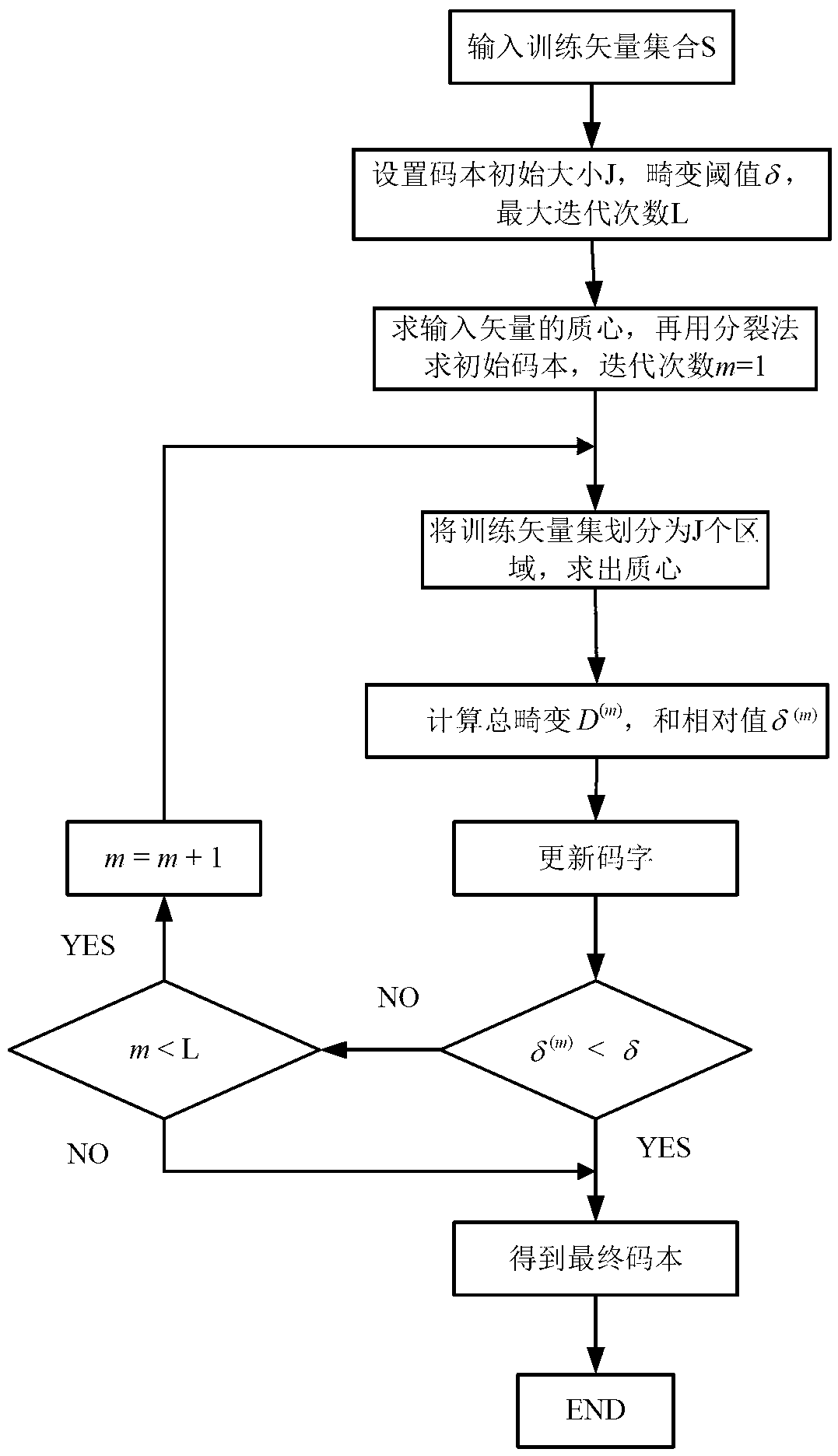

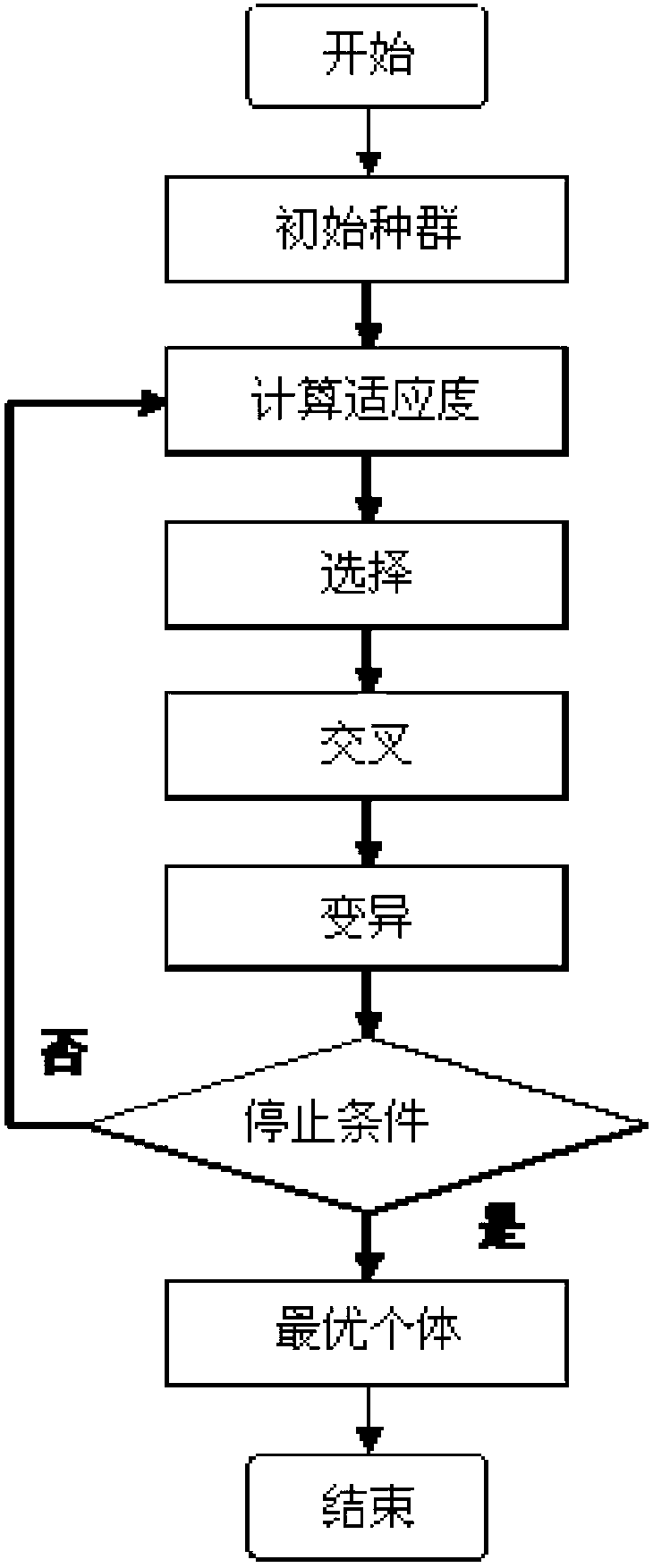

Optimal codebook design method for voiceprint recognition system based on nerve network

Inactive | CN102800316A | Improve adaptability | Improve stability | Speech recognition | Nerve network | Parallel algorithm

The invention relates to an optimal codebook design method for a voiceprint recognition system based on a neural network. The method comprises the following five steps: voice signal input, voice signal preprocessing, voice signal characteristic parameter extraction, three-way initial codebook generation, and neural network training with optimal codebook selection. MFCC (Mel Frequency Cepstrum Coefficient) and LPCC (Linear Prediction Cepstrum Coefficient) parameters are extracted at the same time after preprocessing; a locally optimal vector quantization (VQ) method and a globally optimal genetic algorithm (GA) are then applied to the hybrid phonetic feature parameter matrix to generate initial codebooks through three parallel algorithms, based on VQ, on GA, and on VQ combined with GA; and the optimal codebook is selected by comparing the neural network recognition accuracy obtained with each of the three codebooks. With the optimal codebook, the voiceprint recognition system obtains a higher recognition rate and higher stability, and the adaptivity of the system is improved; compared with pattern recognition based on a single codebook, the performance of the voiceprint recognition system is improved markedly.

Owner:CHONGQING UNIV

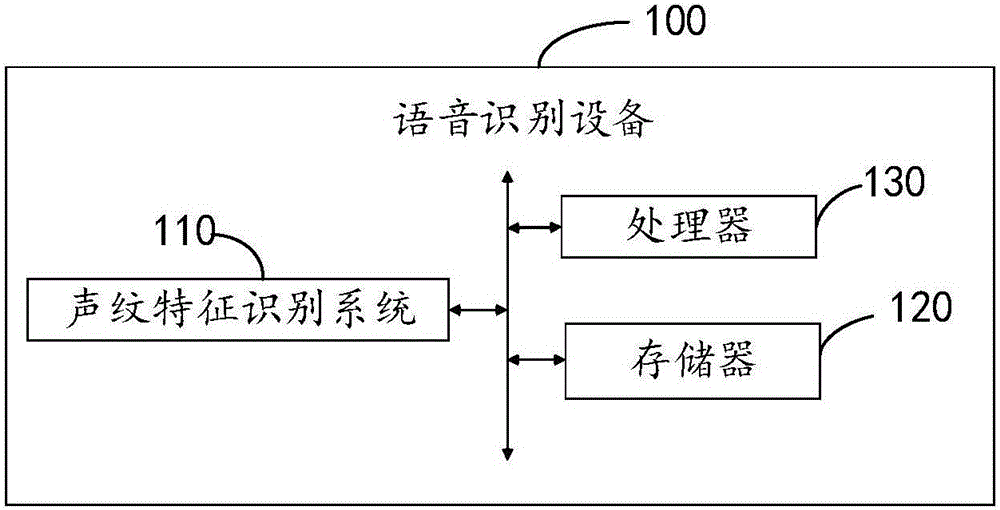

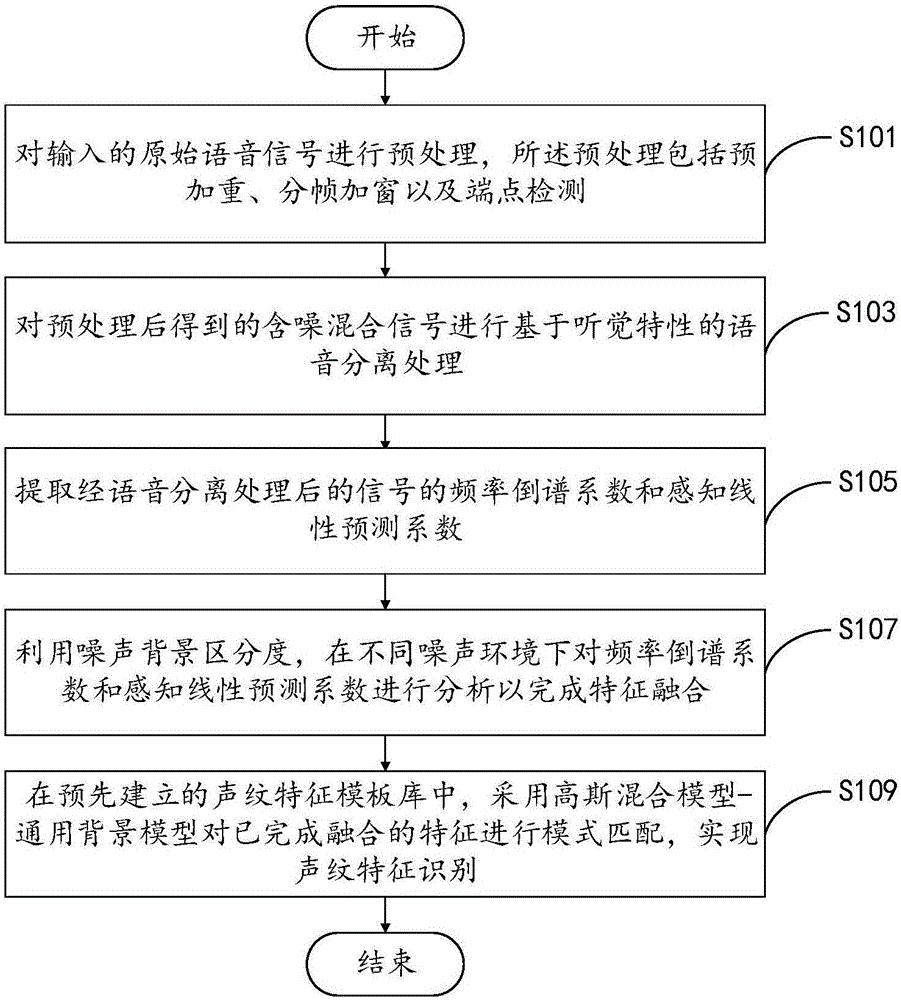

Vocal print feature recognition method and system

Inactive | CN106782565A | Solve the problem of lower voiceprint recognition rate | Improve accuracy | Speech analysis | Mixed noise | Human auditory system

The embodiment of the invention provides a voiceprint feature recognition method and a voiceprint feature recognition system. The method comprises the following steps: applying speech separation based on hearing characteristics to the preprocessed noise-containing mixed signal; extracting the frequency cepstrum coefficients and the perceptual linear prediction coefficients of the signal; using the background discrimination of the noise to analyze the frequency cepstrum coefficients and the perceptual linear prediction coefficients under different noise environments, so that feature fusion is completed; and finally, in a pre-established voiceprint feature template library, carrying out pattern matching on the fused features by adopting a Gaussian mixture model-universal background model (GMM-UBM). The method combines the characteristics of the human auditory system with the traditional voiceprint recognition method, addresses the low voiceprint recognition rate under noise from the point of view of bionics, and effectively improves voiceprint recognition accuracy and system robustness in noisy environments.

Owner:重庆重智机器人研究院有限公司

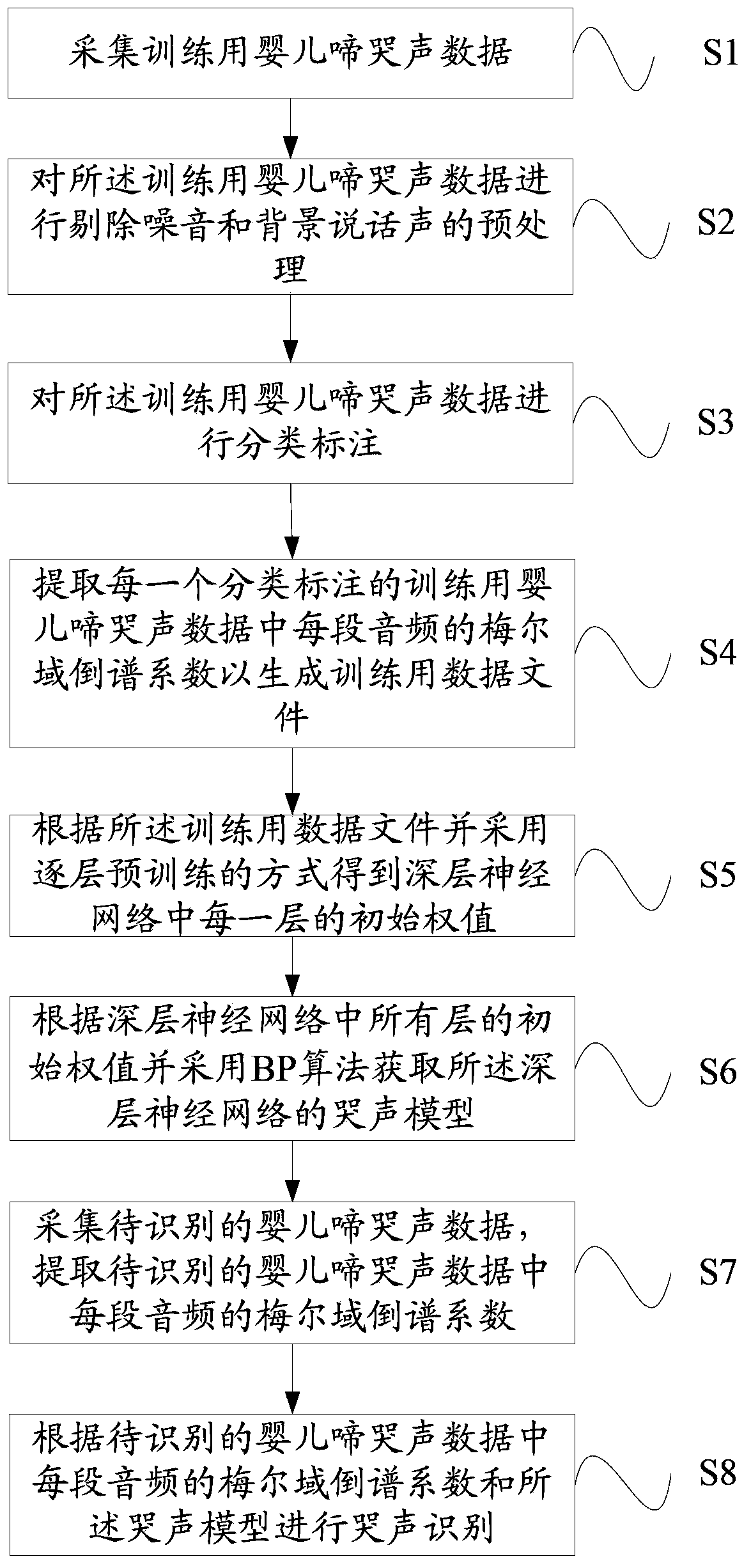

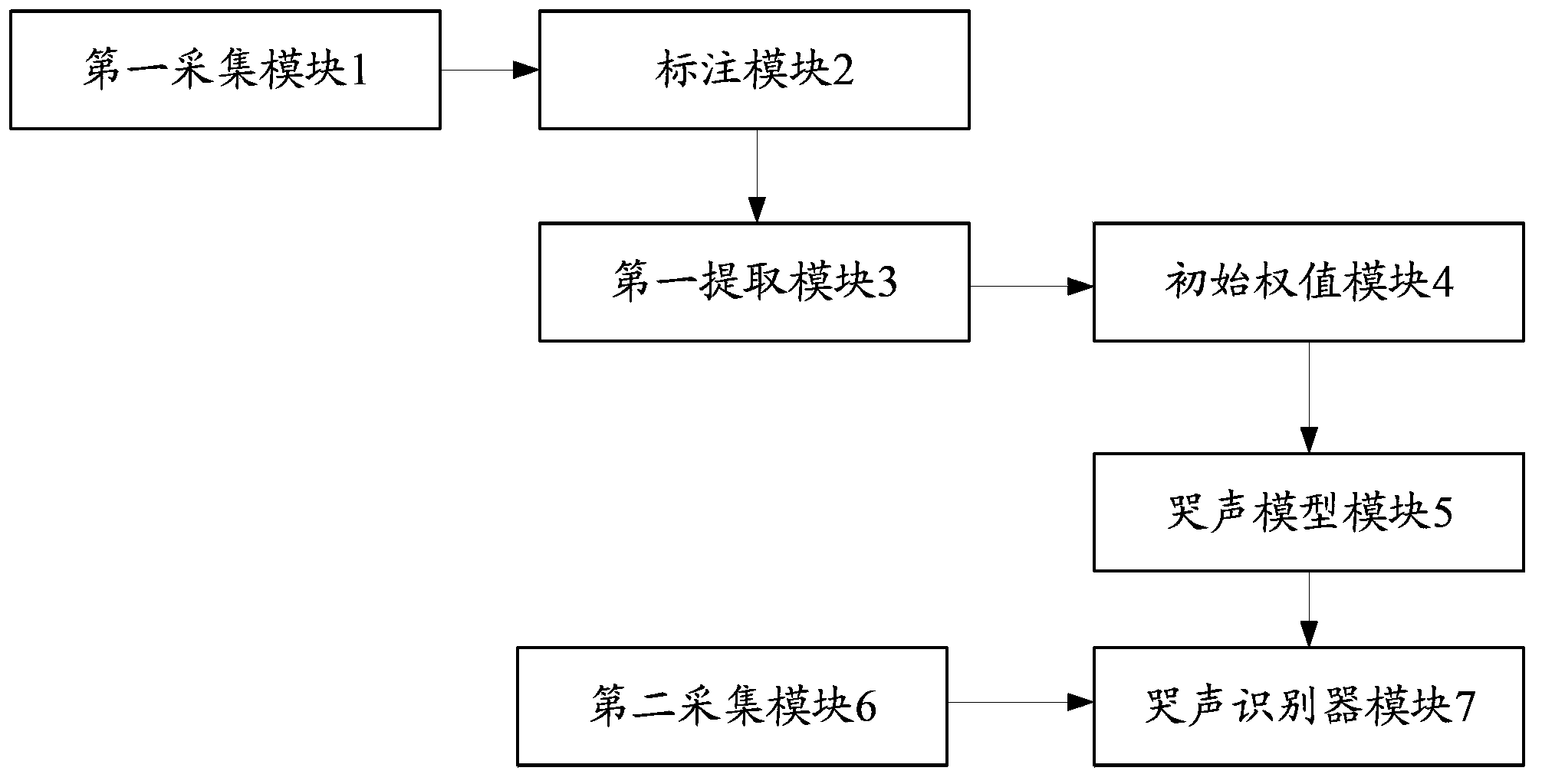

Deep neural network-based baby cry identification method and system

The invention provides a deep neural network-based baby cry identification method and a deep neural network-based baby cry identification system. The method comprises the following steps of acquiring baby cry data for training; performing classification and labeling on the baby cry data for training; extracting a Mel-domain cepstrum coefficient of each segment of audio in each piece of classified and labeled baby cry data for training to generate a data file for training; obtaining an initial weight of each layer in a deep neural network in a layer-wise pre-training way according to the data file for training; acquiring a deep neural network-based cry model according to the initial weights of all the layers in the deep neural network by virtue of a BP (back-propagation) algorithm; acquiring baby cry data to be identified, and extracting a Mel-domain cepstrum coefficient of each segment of audio in the baby cry data to be identified; performing cry identification according to the Mel-domain cepstrum coefficient of each segment of audio in the baby cry data to be identified and the cry model. According to the method and the system, the baby cry identification rate can be increased.

Owner:SHANGHAI ZHANGMEN TECH

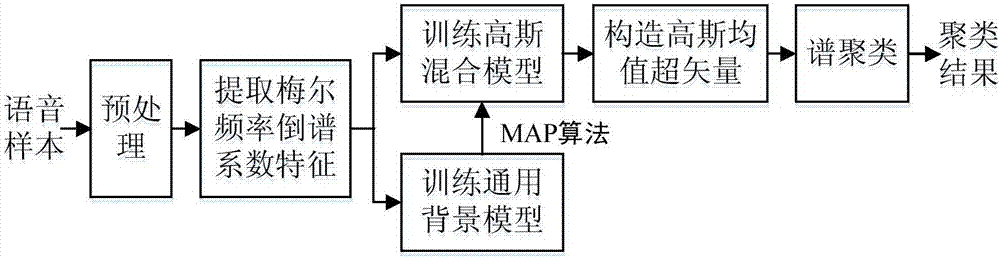

Recording device clustering method based on Gaussian mean super vectors and spectral clustering

Inactive | CN106952643A | Effectively describe the difference in characteristics | Speech recognition | Special data processing applications | Device type | Mean vector

The invention provides a recording device clustering method based on Gaussian mean supervectors and spectral clustering. The method comprises the following steps: Mel frequency cepstrum coefficient (MFCC) features, which characterize the recording device, are extracted from each speech sample; the MFCC features of all speech samples are used as input to train a universal background model (UBM) through the expectation-maximization (EM) algorithm; the MFCC features of each speech sample are used as input to update the UBM parameters through the maximum a posteriori (MAP) algorithm, yielding a Gaussian mixture model (GMM) for each speech sample; the mean vectors of all Gaussian components of each GMM are concatenated in turn to form a Gaussian mean supervector; a spectral clustering algorithm is used to cluster the Gaussian mean supervectors of all speech samples; the number of recording devices is estimated; and the speech samples of the same recording device are merged. According to the invention, the speech samples collected by the same recording device can be found without prior knowledge of the type or number of the recording devices, so the method is widely applicable.

Owner:SOUTH CHINA UNIV OF TECH

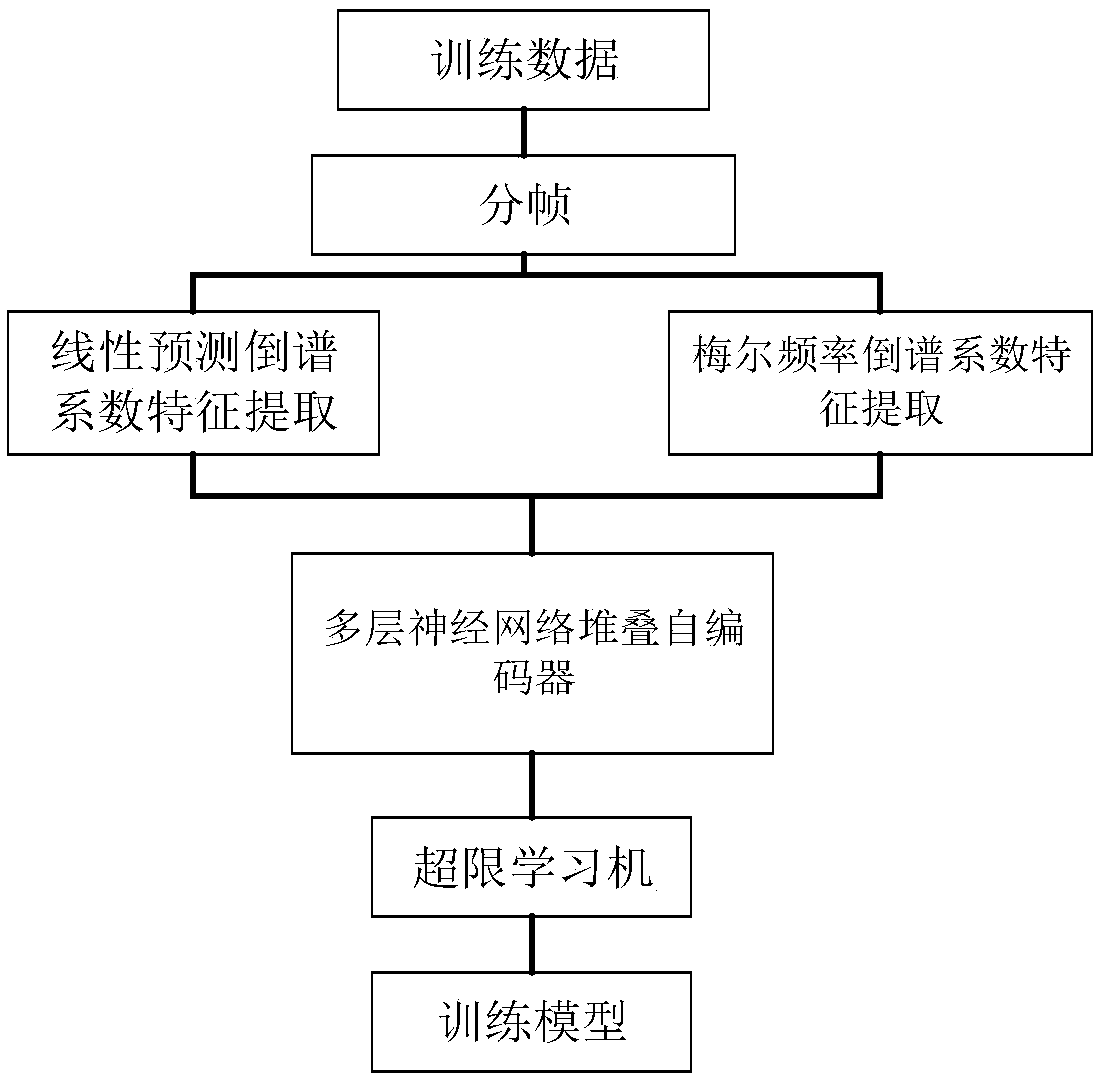

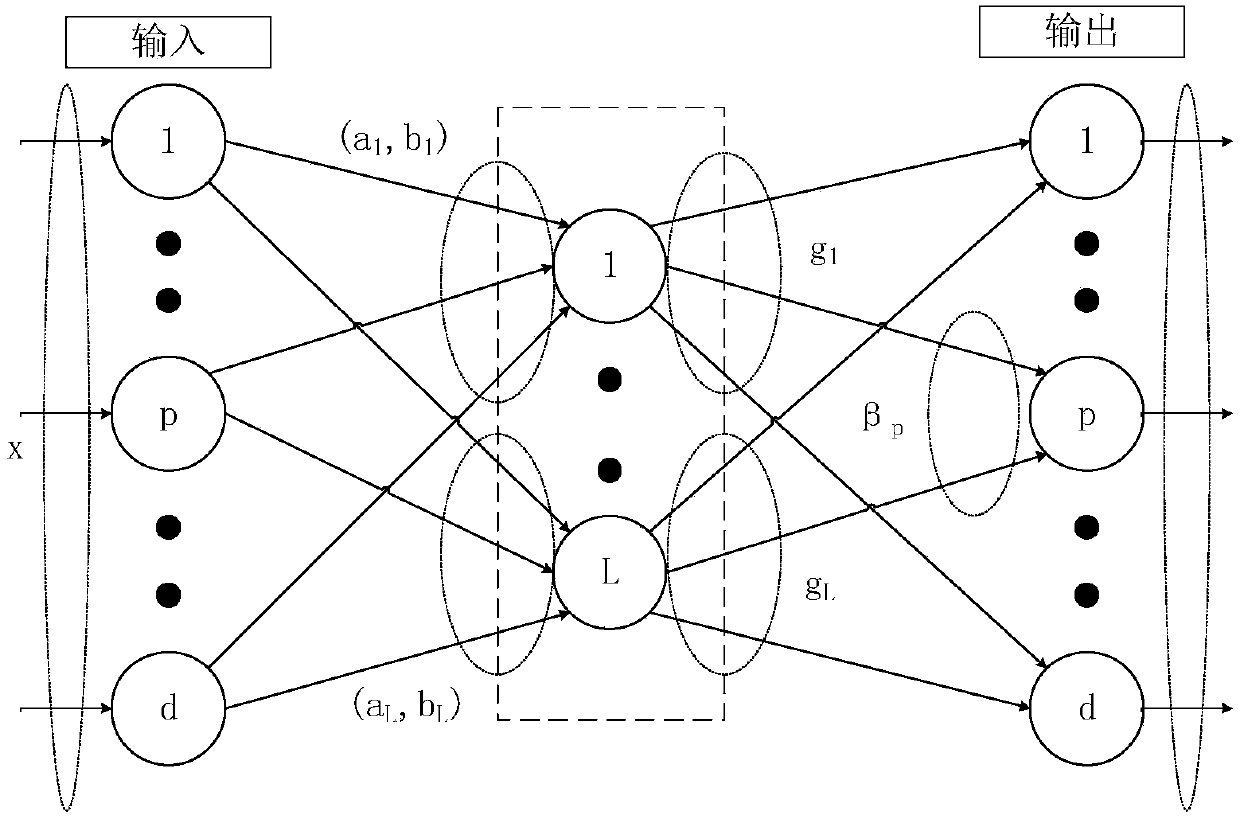

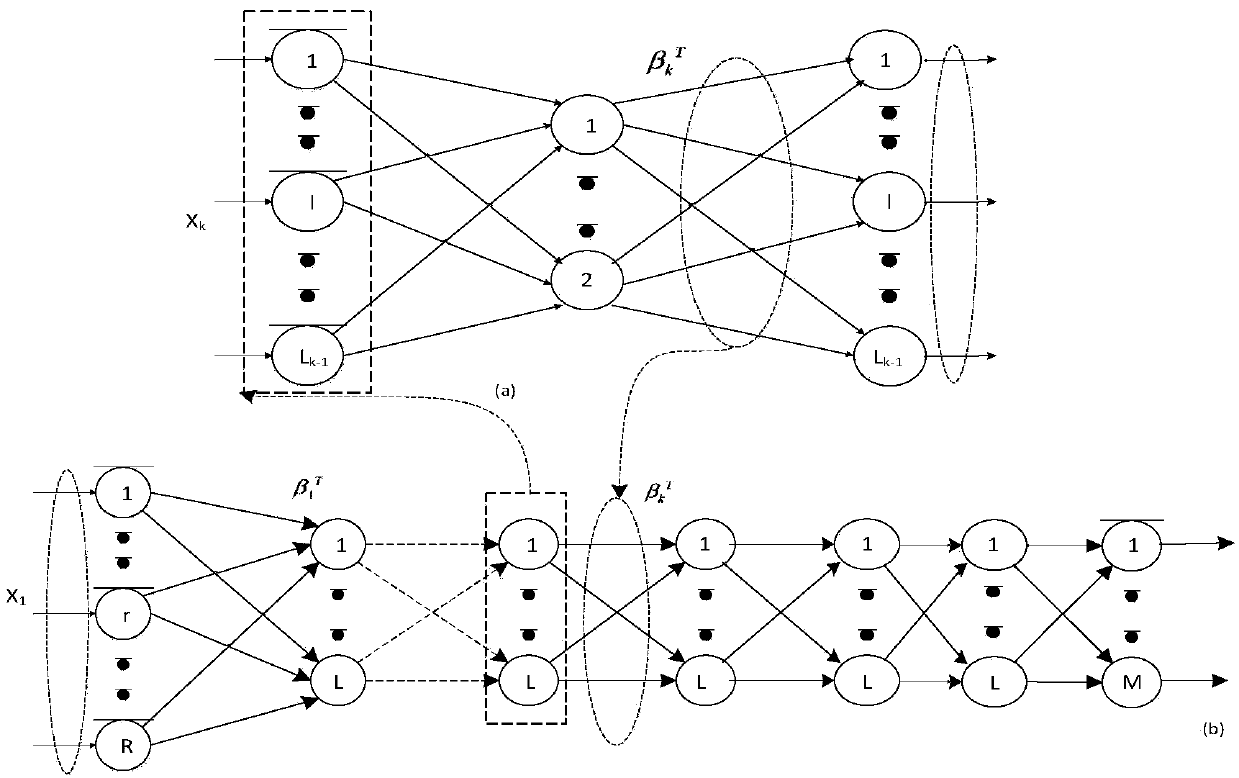

Speech recognition method based on neural network stacking autoencoder multi-feature fusion

Active | CN107610692A | Easy to identify | Improve computing efficiency | Speech recognition | Neural learning methods | Learning machine | Time domain

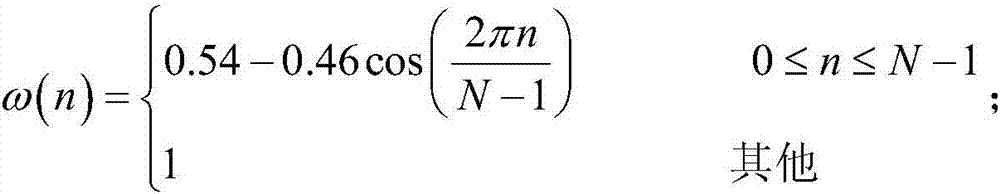

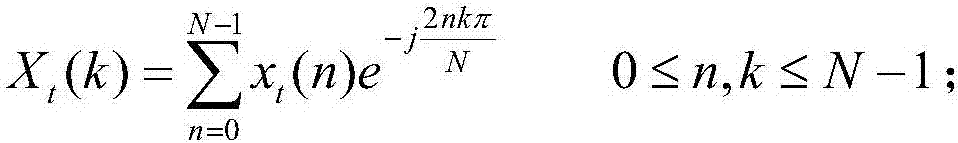

The invention relates to a speech recognition method based on multi-feature fusion with a neural network stacked autoencoder. First, the original sound data is framed and windowed, and the typical time-domain linear predictive cepstrum coefficient feature and the frequency-domain Mel frequency cepstrum coefficient feature are each extracted from the framed and windowed data; the extracted features are then spliced to construct the initial feature representation vector of the acoustic signal and to create the training feature library; a multi-layer neural network stacked autoencoder is then used for feature fusion and learning, with the multi-layer autoencoder trained by the extreme learning machine algorithm; finally, the extracted features are used to train a classifier model with the extreme learning machine algorithm, and the constructed model is used to classify and identify test samples. By adopting multi-feature fusion based on an extreme-learning-machine multi-layer stacked autoencoder, the method achieves higher recognition accuracy than the traditional single-feature extraction method.

Owner:HANGZHOU DIANZI UNIV

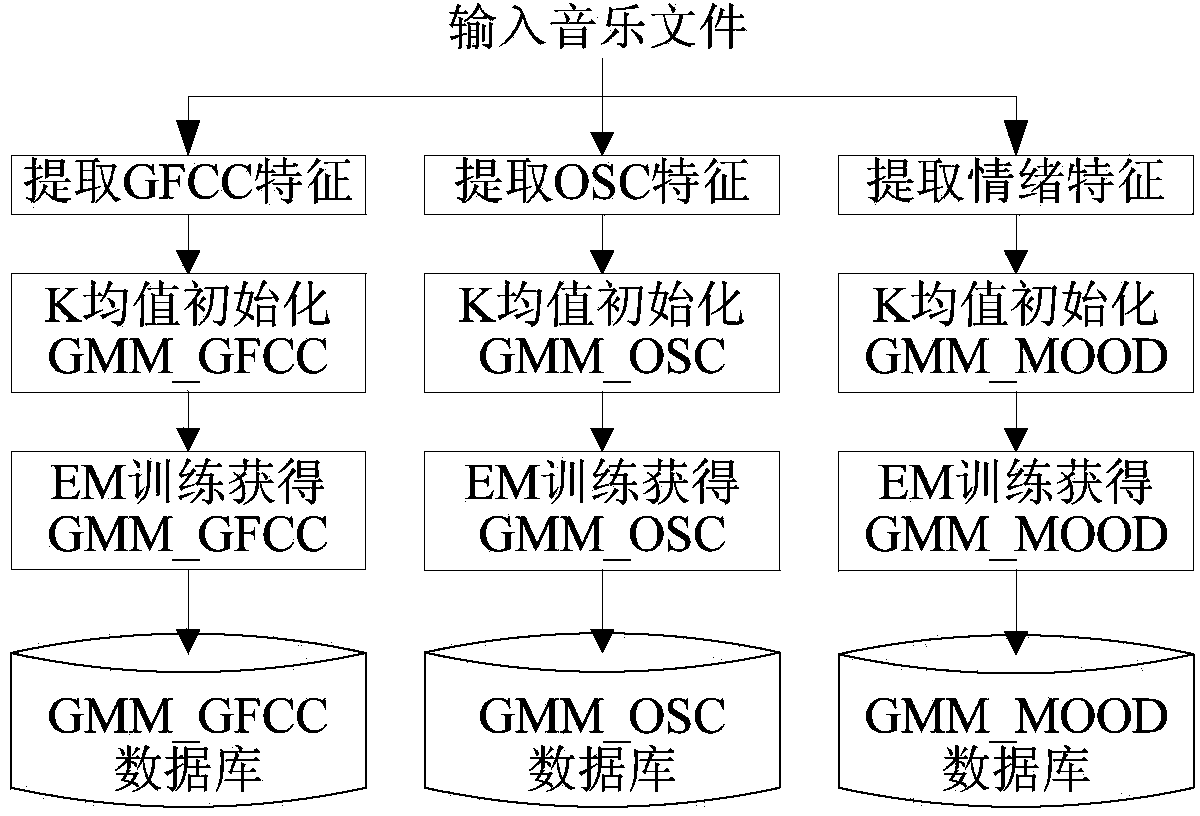

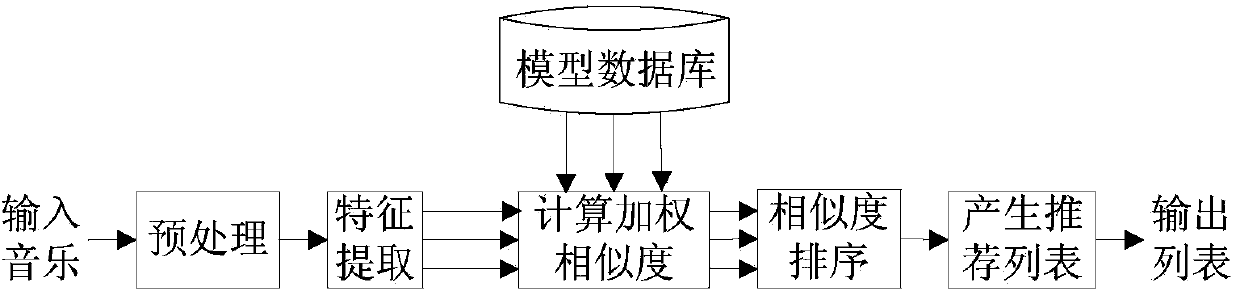

Music recommendation method based on similarities

Inactive | CN103440873A | Get full and deep | Improve accuracy | Speech analysis | Expectation–maximization algorithm | Frequency spectrum

The invention discloses a music similarity detection method based on mixed characteristics and a Gaussian mixture model. The basic idea is as follows: gammatone cepstrum coefficients are used for similarity detection, and the weighted similarities of the various characteristics are used as the final detection result; a modulation spectrum characteristic based on the frame axis is provided to represent the long-time characteristic of the music, and the combination of the long-time characteristic and the short-time characteristic is used as the input of the subsequent modeling step; the Gaussian mixture model is used to model the music characteristics, where the model is first initialized with a dynamic K-means method, then trained with an expectation-maximization algorithm to obtain accurate model parameters, and finally a log-likelihood ratio algorithm is used to obtain the similarities between pieces of music. According to the method, the music characteristics are captured more fully and thoroughly, the accuracy of music recommendation is improved, the dimensionality of the characteristic vector can be reduced, and the amount of information stored in the music database is reduced.

Owner:DALIAN UNIV OF TECH

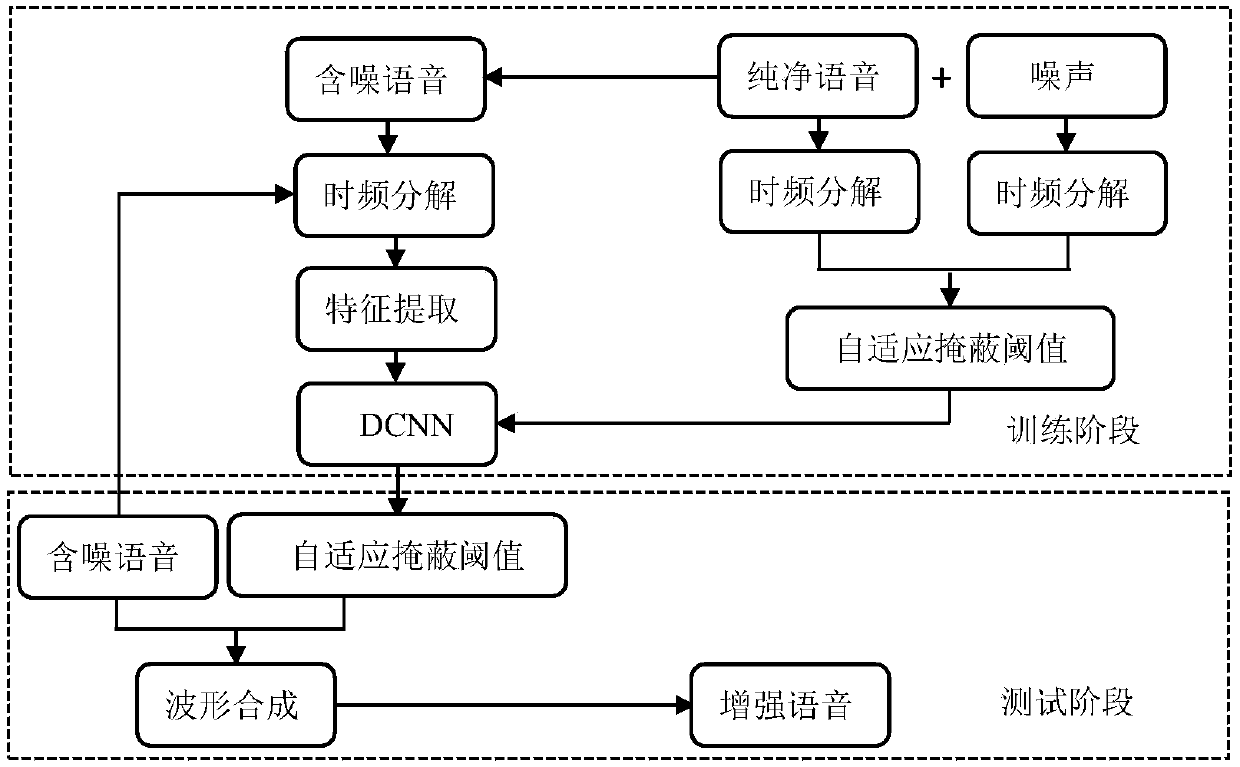

Voice signal characteristics extracting method based on tensor decomposition

Inactive | CN103117059A | Enhanced Representational Capabilities | Good effect | Speech recognition | Frequency spectrum | Feature extraction

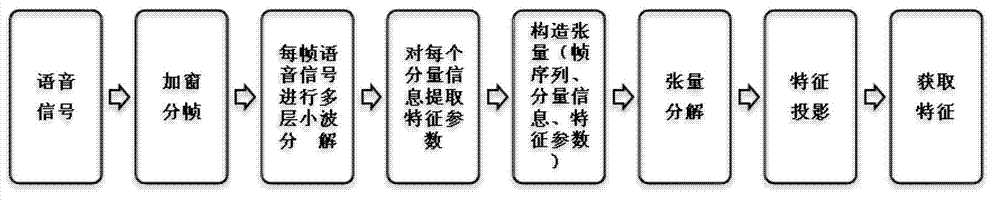

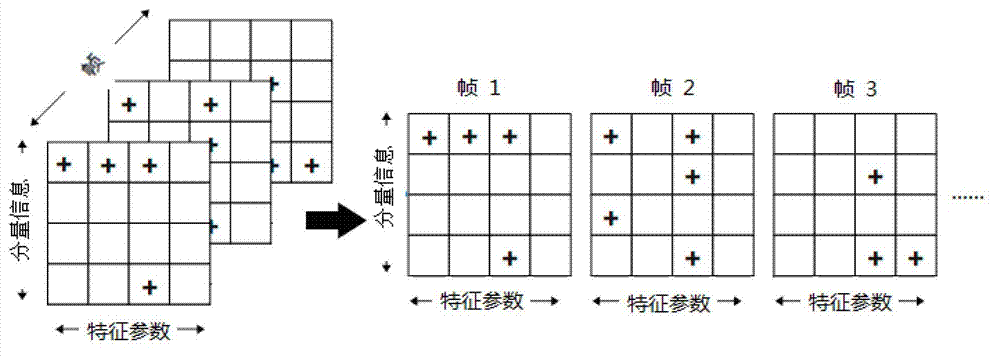

The invention discloses a voice signal characteristics extracting method based on tensor decomposition and belongs to the technical field of voice signal processing. The method comprises the following steps: applying multi-layer wavelet decomposition to the voice signals after framing; extracting the MR frequency cepstrum coefficients and the corresponding first-order and second-order difference coefficients from each of the components produced by the wavelet decomposition to form a characteristic parameter vector; establishing a third-order voice tensor and applying tensor decomposition to it; and calculating the projections onto the component-information order and the characteristic-parameter order. The matricized results are the characteristics carried by each frame of the voice signals. Compared with traditional characteristic parameters, the method enhances the ability to represent voice signals, acquires characteristics that carry more comprehensive voice information, and improves the performance of voice signal processing systems such as voice recognition and speaker recognition systems.

Owner:INNER MONGOLIA UNIV OF SCI & TECH +1

Output-based objective voice quality evaluation method

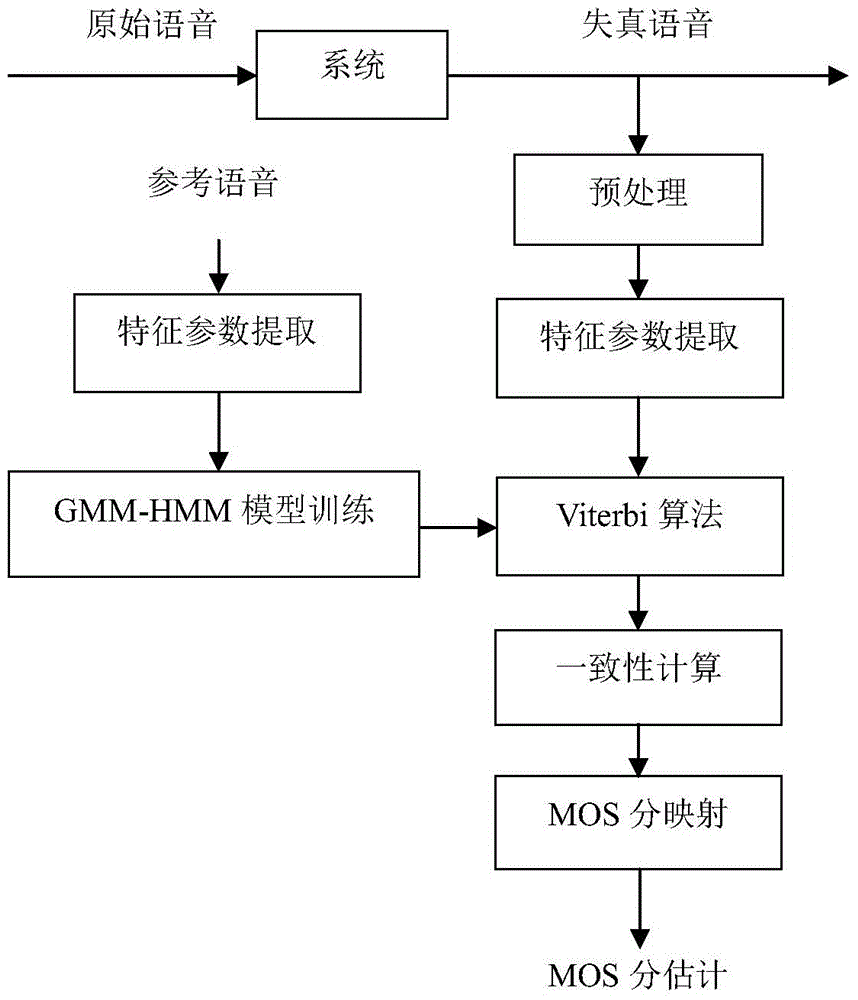

The invention provides an output-based objective voice quality evaluation method. The method includes the following steps that firstly, inhomogeneous linear prediction cepstrum coefficients of clean voice are extracted and used for training a GMM-HMM model, and a reference model is built for the clean voice through training; then the consistency measure between the reference model and the inhomogeneous linear prediction cepstrum coefficient vector of the distortion voice can be obtained through the reference model and the inhomogeneous linear prediction cepstrum coefficient vector of the distortion voice; finally, the mapping relation between the subjective MOS and the consistency measure is built through a multivariate nonlinear regression model, an objective prediction model of the MOS can be obtained, and objective evaluation is conducted on the voice quality through the objective prediction model. According to the output-based objective voice quality evaluation method, the mapping relation between the subjective MOS and the objective measure is built, and a subjective MOS prediction model is obtained, so that the score is closer to the subjective quality.

Owner:HUNAN INST OF METROLOGY & TEST +1

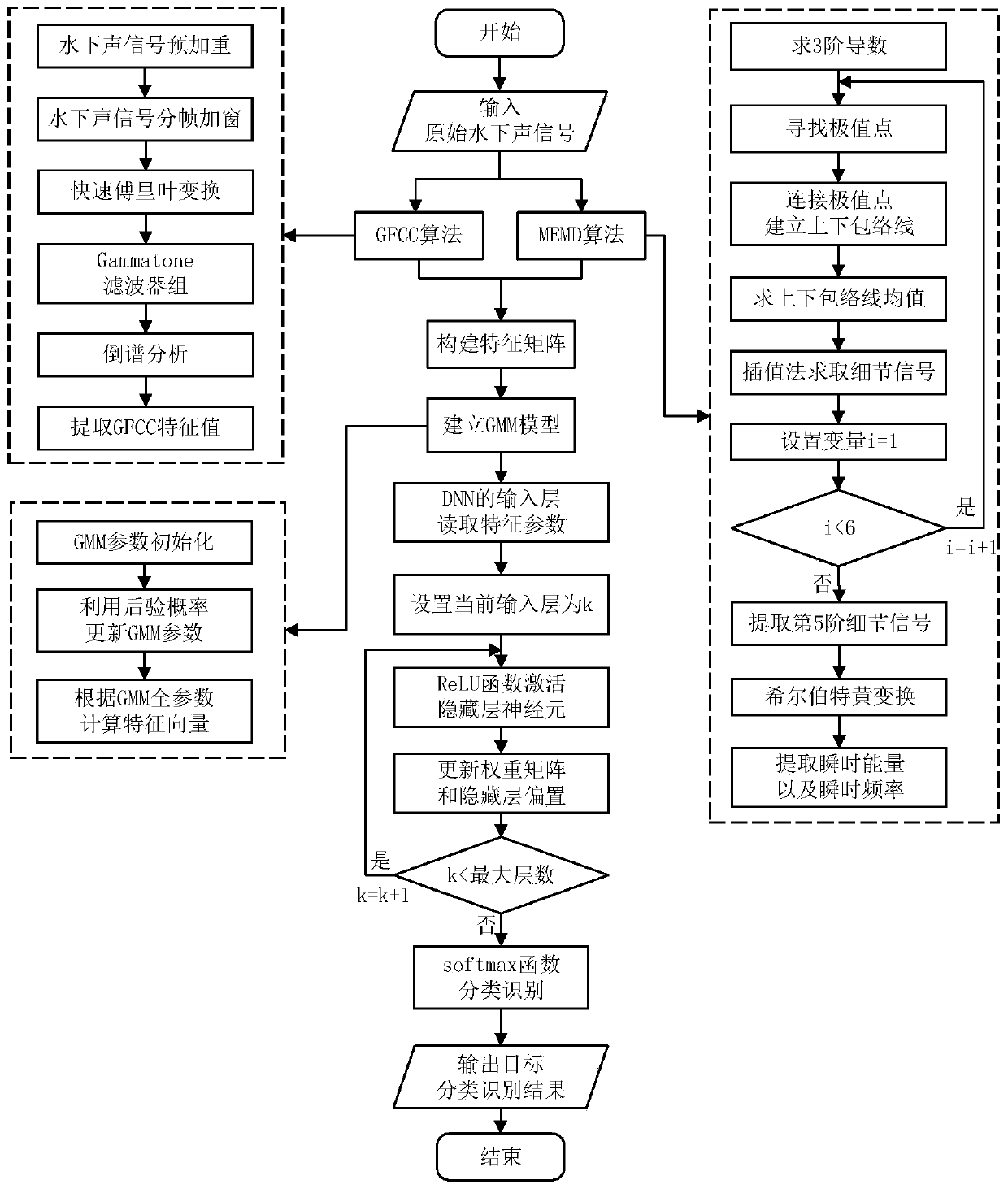

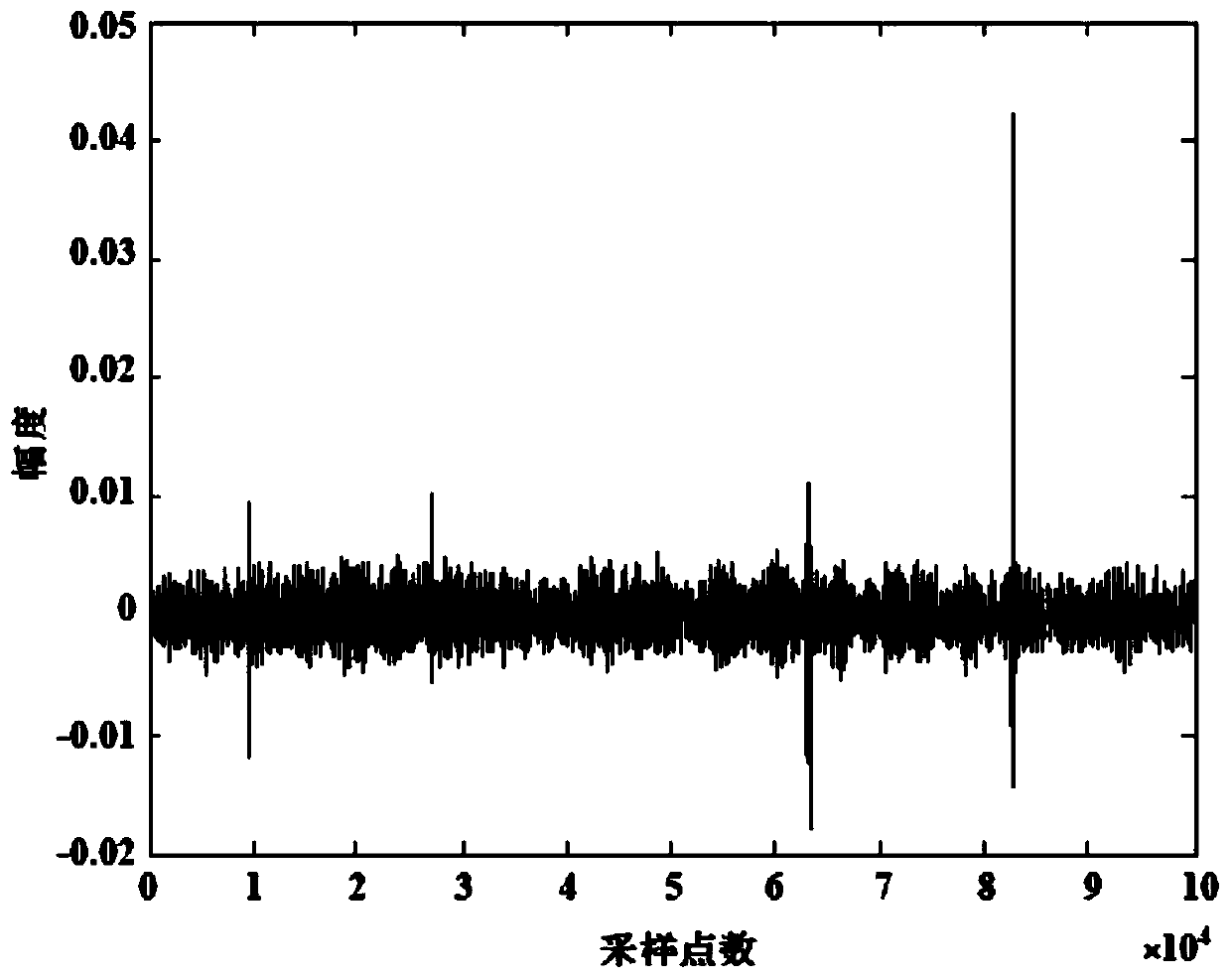

Underwater acoustic signal target classification and recognition method based on deep learning

Active | CN109800700A | Effective Voiceprint Features | Improve robustness | Biological neural network models | Character and pattern recognition | Feature extraction | Decomposition

The invention belongs to the technical field of underwater acoustic signal processing, and particularly relates to an underwater acoustic signal target classification and recognition method based on deep learning. The method comprises the following steps: (1) carrying out feature extraction on the original underwater acoustic signal through a Gammatone filtering cepstrum coefficient (GFCC) algorithm; (2) extracting instantaneous energy and instantaneous frequency by utilizing an improved empirical mode decomposition (MEMD) algorithm, fusing them with the characteristic values extracted by the GFCC algorithm, and constructing a characteristic matrix; (3) establishing a Gaussian mixture model (GMM) that preserves the individual characteristics of the underwater acoustic signal target; and (4) completing underwater target classification and recognition by using a deep neural network (DNN). The method addresses the limited feature extraction and poor noise resistance of traditional underwater acoustic signal target classification and recognition methods, effectively improves classification and recognition accuracy, and retains a degree of adaptability under conditions such as weak target signals and long distances.

Owner:HARBIN ENG UNIV

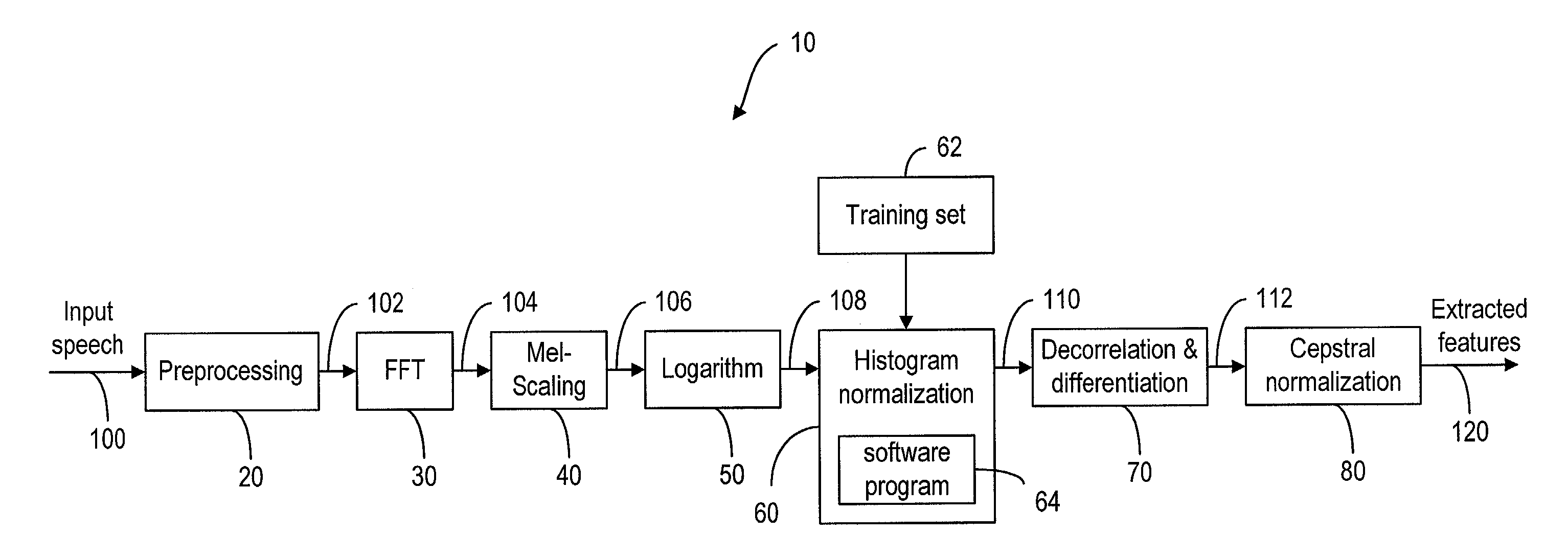

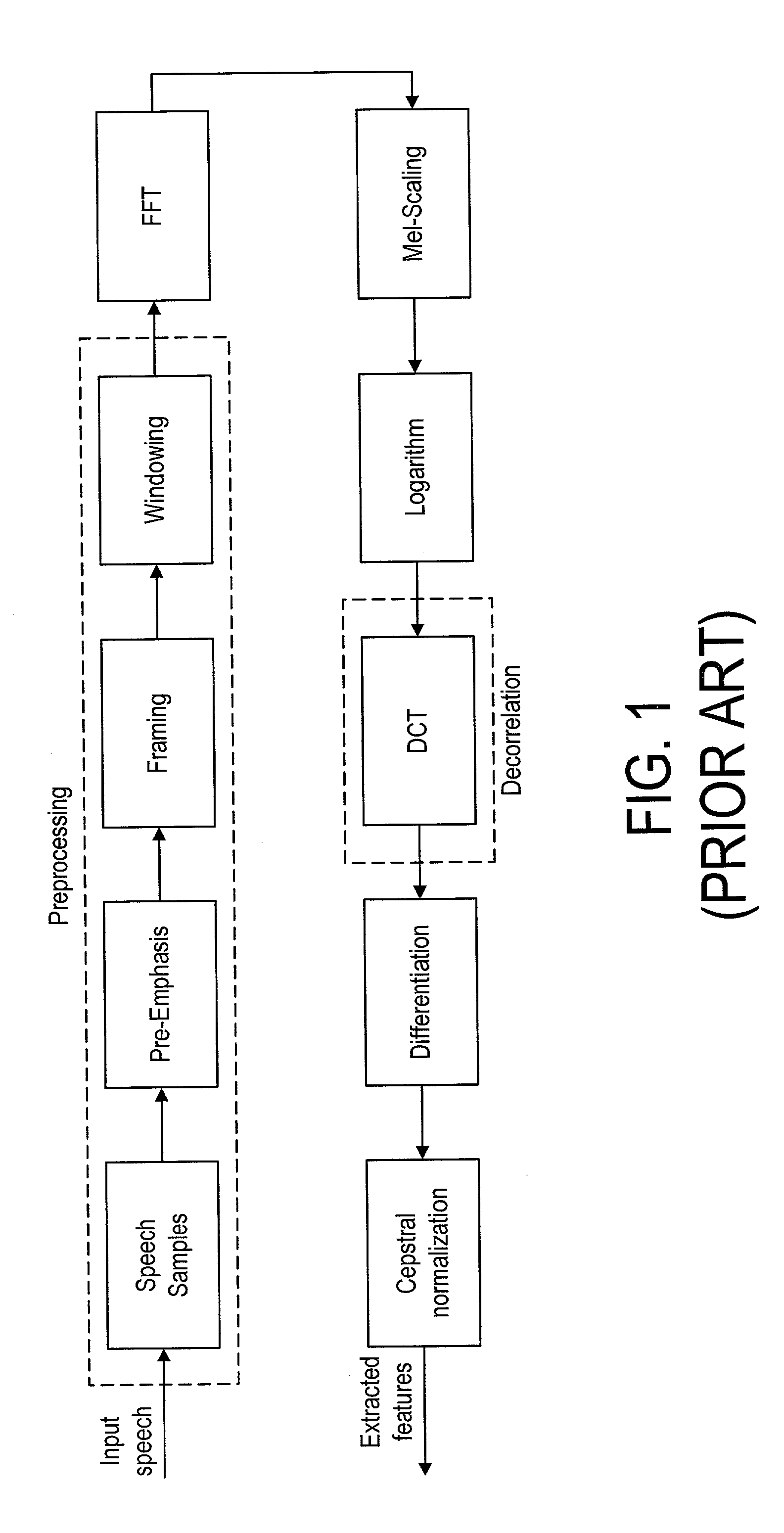

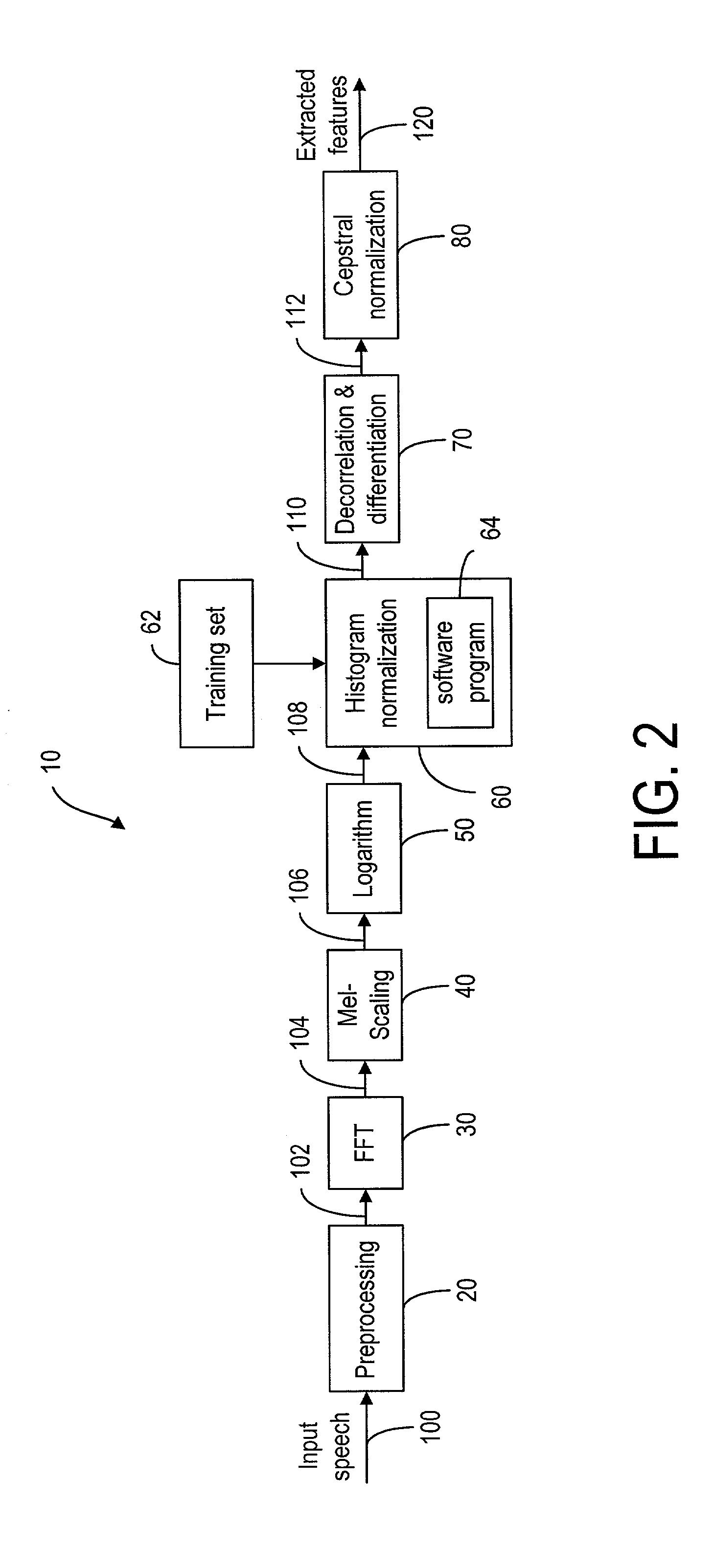

On-line parametric histogram normalization for noise robust speech recognition

A method for improving noise robustness in speech recognition, wherein a front-end is used for extracting speech features from input speech and for providing a plurality of scaled spectral coefficients. The histogram of the scaled spectral coefficients is normalized to the histogram of a training set using Gaussian approximations. The normalized spectral coefficients are then converted into a set of cepstrum coefficients by a decorrelation module and further subjected to cepstral-domain feature-vector normalization.

Owner:RPX CORP
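When both histograms are approximated by Gaussians, as in the abstract above, matching one to the other reduces to an affine map that aligns the mean and standard deviation. The sketch below shows that idea in a simple batch form; the patent describes an on-line variant whose update rule is not given in the abstract. The function name and the synthetic statistics are assumptions.

```python
import numpy as np

def gaussian_histogram_normalize(x, train_mean, train_std, eps=1e-12):
    """Map each coefficient so its mean and std match the training set.

    x: array of shape (n_frames, n_coeffs) of spectral coefficients.
    """
    mu = x.mean(axis=0)
    sigma = x.std(axis=0)
    # Standardize with the observed statistics, then rescale to the target
    return (x - mu) / (sigma + eps) * train_std + train_mean

# Synthetic "noisy" coefficients whose statistics differ from training
rng = np.random.default_rng(0)
noisy = rng.normal(loc=5.0, scale=3.0, size=(500, 4))
train_mean = np.zeros(4)
train_std = np.ones(4)

normalized = gaussian_histogram_normalize(noisy, train_mean, train_std)
```

An on-line system would replace the batch `mean` and `std` with running estimates that are updated frame by frame.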

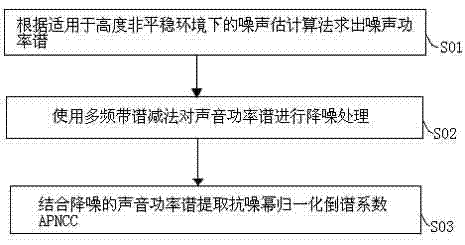

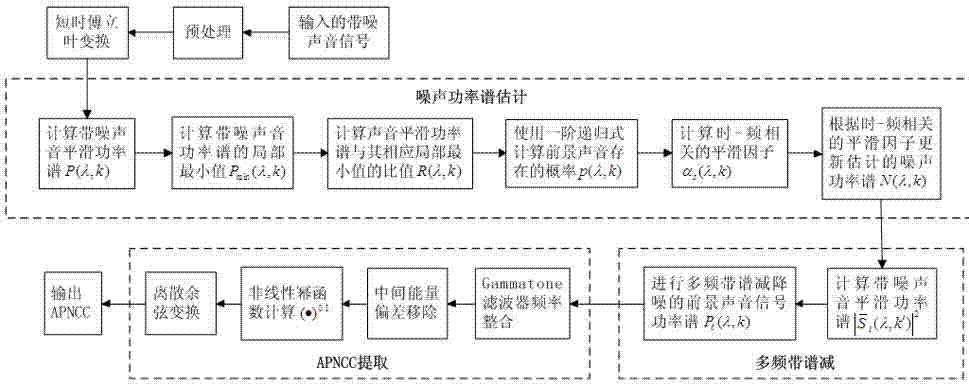

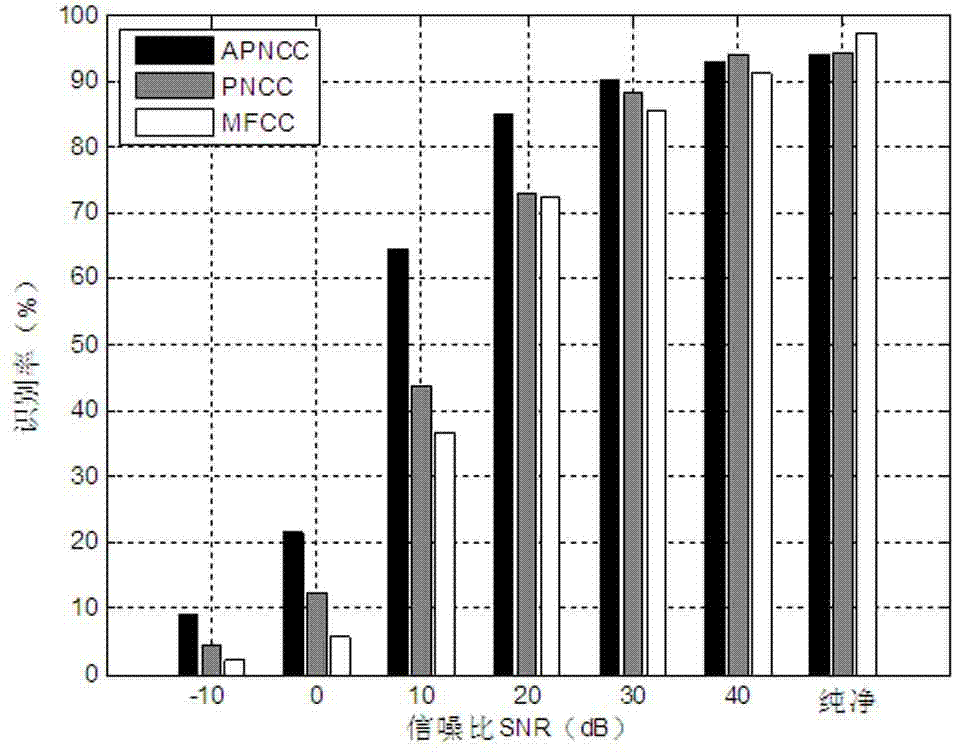

Bird voice recognition method using anti-noise power normalization cepstrum coefficients (APNCC)

Inactive | CN102930870A | The average recognition effect is good | Noise robustness | Speech recognition | Multi band | Noise power spectrum

The invention provides a bird voice recognition technology based on novel noise-proof feature extraction by aiming at the problem of bird voice recognition in various kinds of background noise in ecological environment. The bird voice recognition technology comprises the following steps of firstly, obtaining noise power spectrums by a noise estimation algorithm suitable for highly nonstationary environment; secondly, performing the noise reduction on the voice power spectrums by a multi-band spectral subtraction method; thirdly, extracting anti-noise power normalization cepstrum coefficients (APNCC) by combining the voice power spectrums for noise reduction; and finally, performing contrast experiments under the conditions of different environments and signal to noise ratios (SNR) on the voice of 34 species of birds by means of extracted APNCC, power normalization cepstrum coefficient (PNCC) and Mel frequency cepstrum coefficients (MFCC) by a support vector machine (SVM). The experiments show that the extracted APNCC have a better average recognition effect and higher noise robustness and are more suitable for bird voice recognition in the environment with less than 30 dB of SNR.

Owner:FUZHOU UNIV

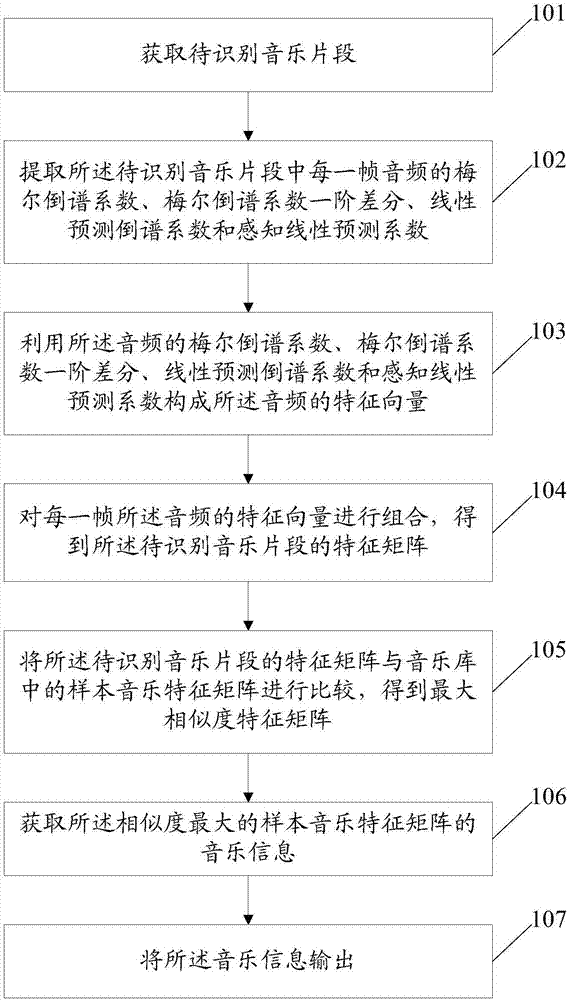

Music identifying method and system

Active | CN106919662A | Improve recognition results | Improve noise immunity | Special data processing applications | Feature vector | Perceptual linear prediction

The invention discloses a music identifying method and system. The method comprises the steps of: acquiring a to-be-identified music clip; extracting, for each frame of the clip, the mel-frequency cepstrum coefficients, the first-order difference of the mel-frequency cepstrum coefficients, the linear prediction cepstrum coefficients and the perceptual linear prediction coefficients; forming an audio feature vector for each frame from these coefficients; combining the feature vectors of all frames into a feature matrix of the to-be-identified music clip; comparing this feature matrix with the feature matrices of the sample music in a music library to find the maximum-similarity feature matrix, which belongs to the sample music most similar to the to-be-identified music; acquiring the music information of that sample music; and outputting the music information. The music identifying method and system offer strong noise resistance, high identifying efficiency and a good identifying effect.

Owner:FUDAN UNIV

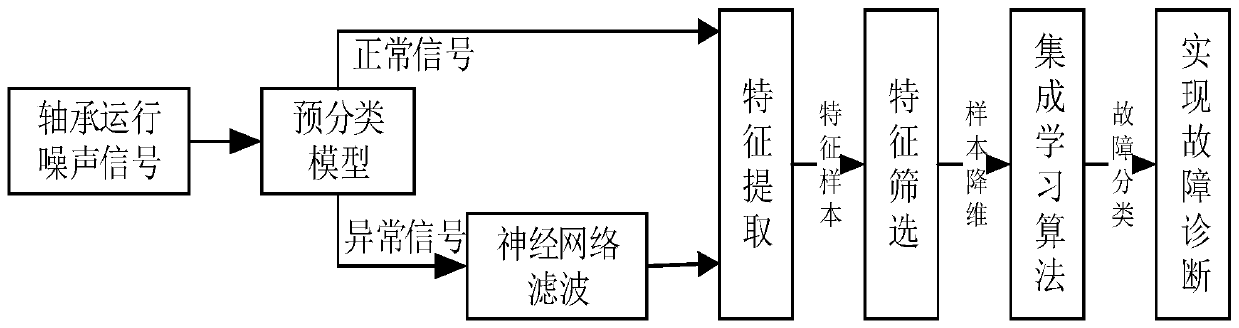

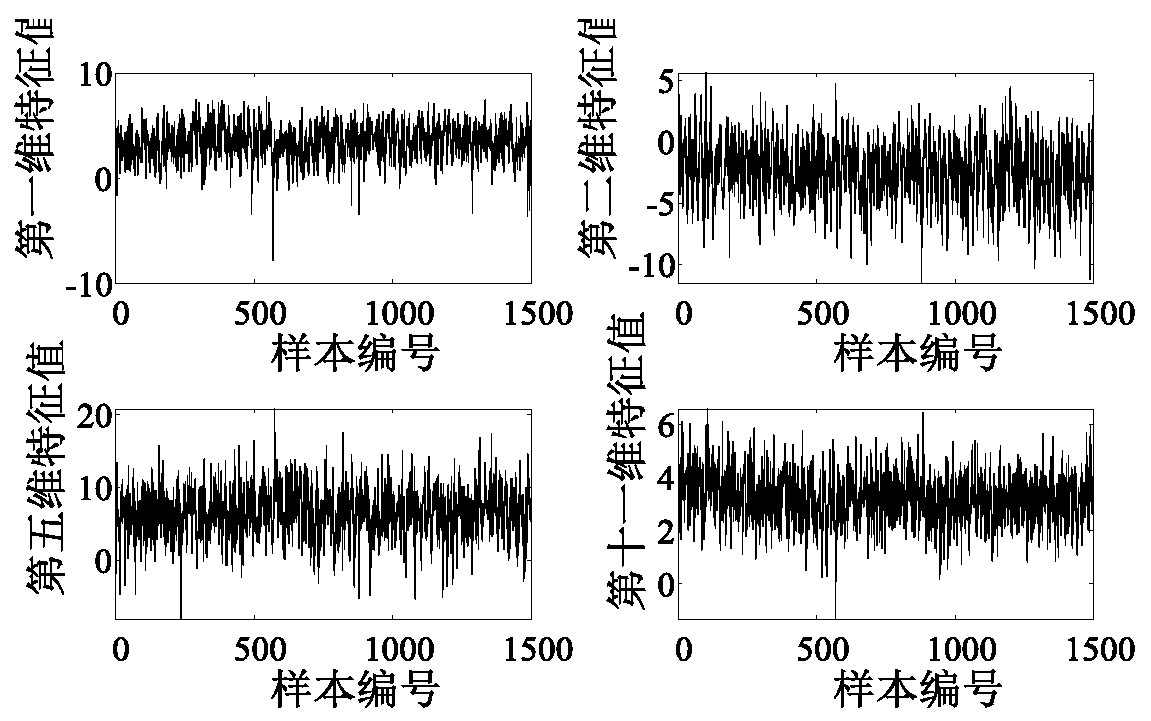

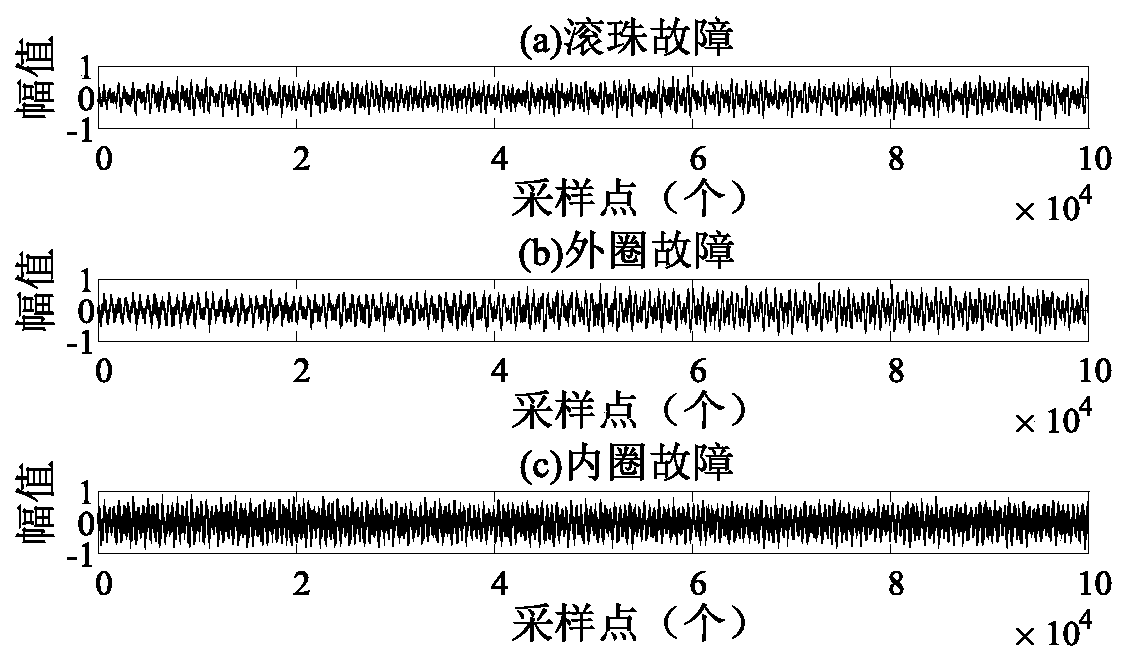

Noise diagnosis algorithm for rolling bearing faults of rotary equipment

Active | CN110132598A | Effective diagnosis | Efficient detection | Machine part testing | Character and pattern recognition | Principal component analysis | Engineering

The invention discloses a noise diagnosis algorithm for rolling bearing faults of rotary equipment. First, a sound pick-up device collects the running noise signals of a rolling bearing, and the signals undergo preliminary fault judgment through a normal/abnormal pre-classification model based on an anomaly detection algorithm. Second, according to the pre-judgment result, abnormal signals (a fault has occurred) pass through a neural network filter that removes the normal components of the bearing signal, and the resulting net abnormal signals are fed to the subsequent feature extraction module, while normal signals (no fault has occurred) are fed to the feature extraction module directly. The feature extraction module extracts the Mel-frequency cepstrum coefficients (MFCC) of the signals as eigenvectors, feature reconstruction is carried out by utilizing a gradient boosted decision tree (GBDT) to form composite eigenvectors, and principal component analysis (PCA) is used to reduce the dimensionality of the features. Finally, the features are input into an improved two-stage support vector machine (SVM) ensemble classifier for training and testing, achieving high-accuracy fault type diagnosis. According to the algorithm, bearing faults can be effectively detected with relatively high fault identification accuracy, and the algorithm is relatively effective and robust for the detection and classification of bearing faults.

Owner:CHINA UNIV OF MINING & TECH

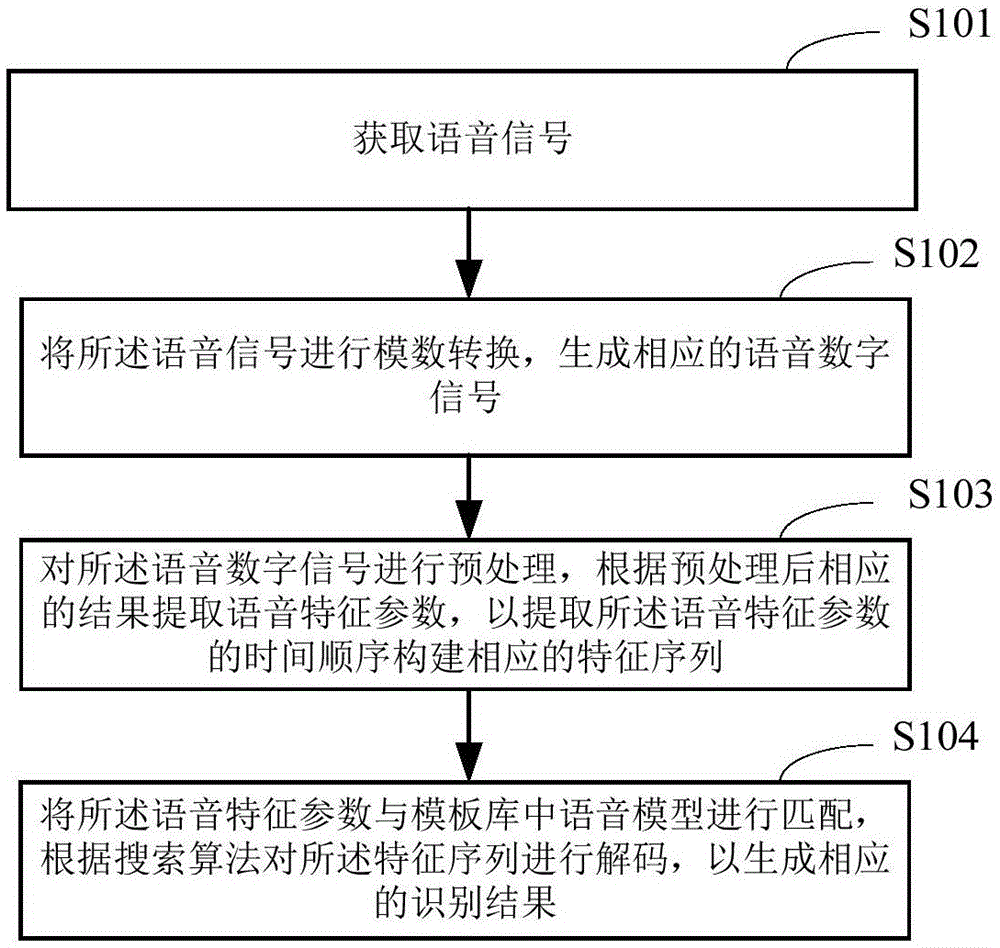

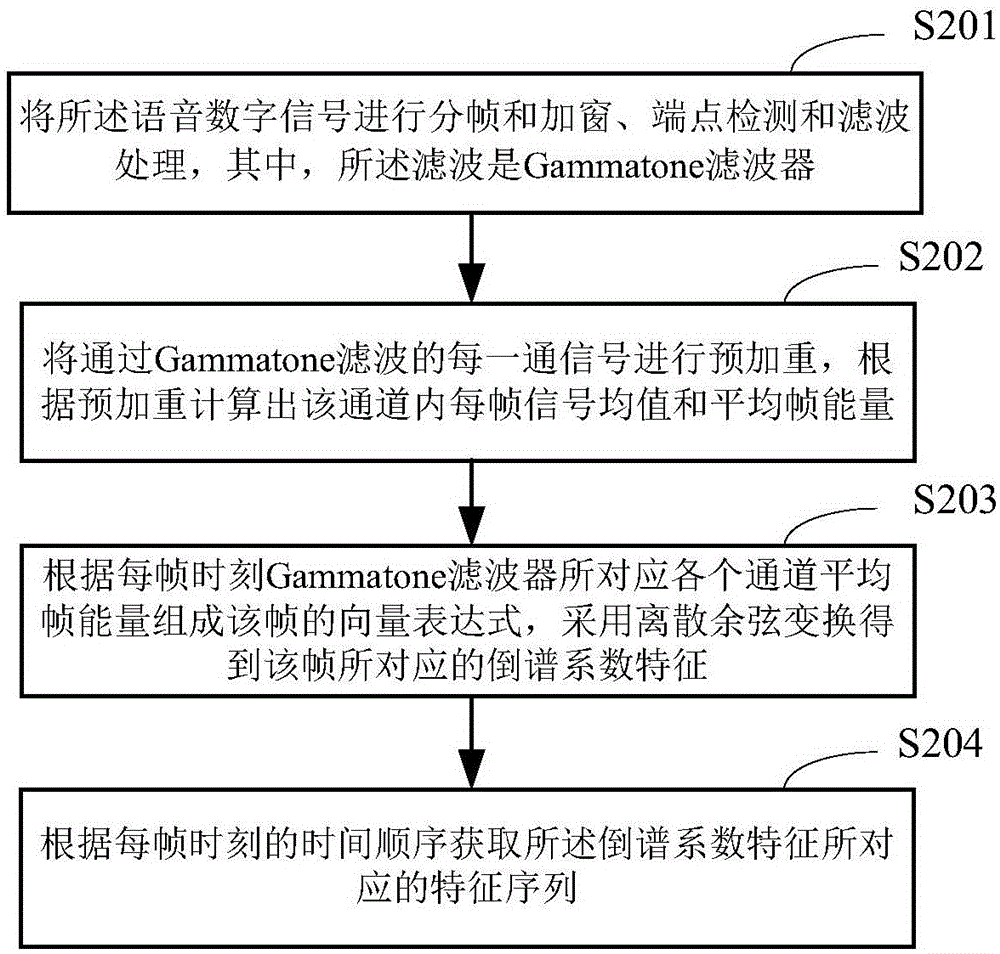

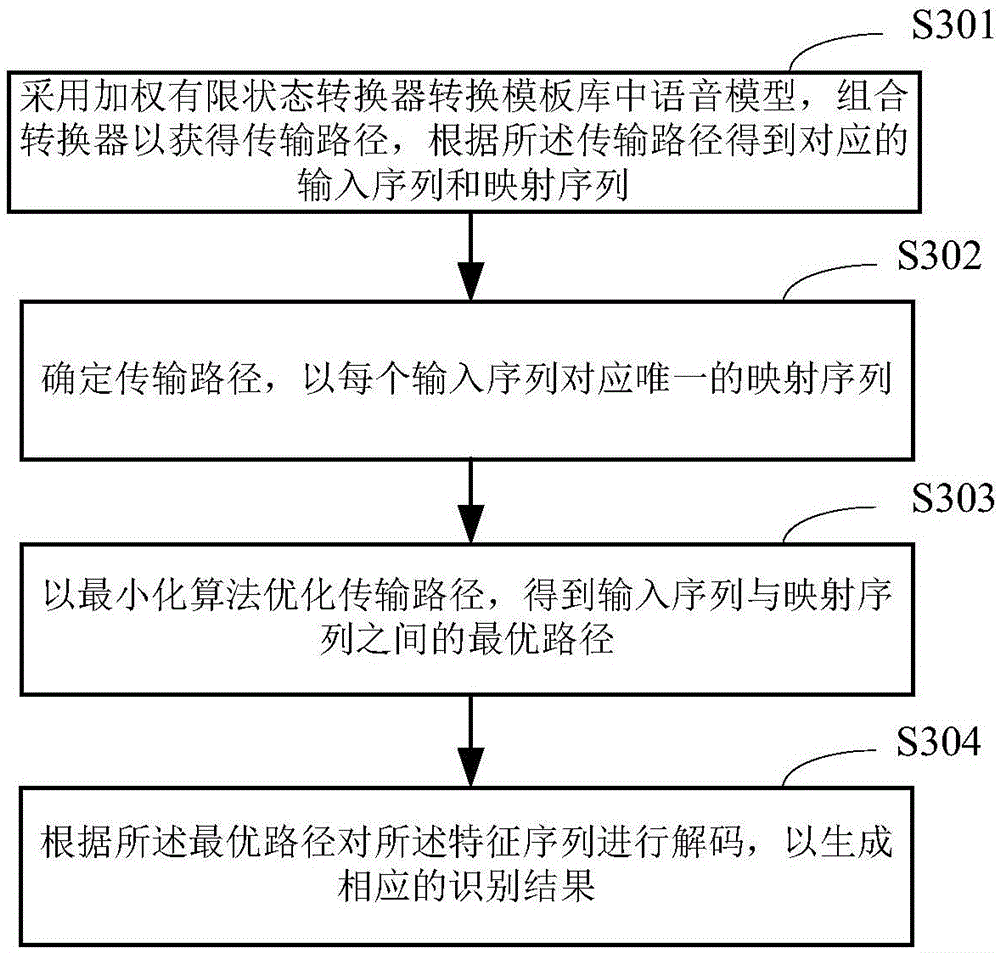

Speech recognition method and system

Inactive | CN105118501A | Improve Noise Robustness | Small amount of calculation | Speech recognition | Chronological time | Speech identification

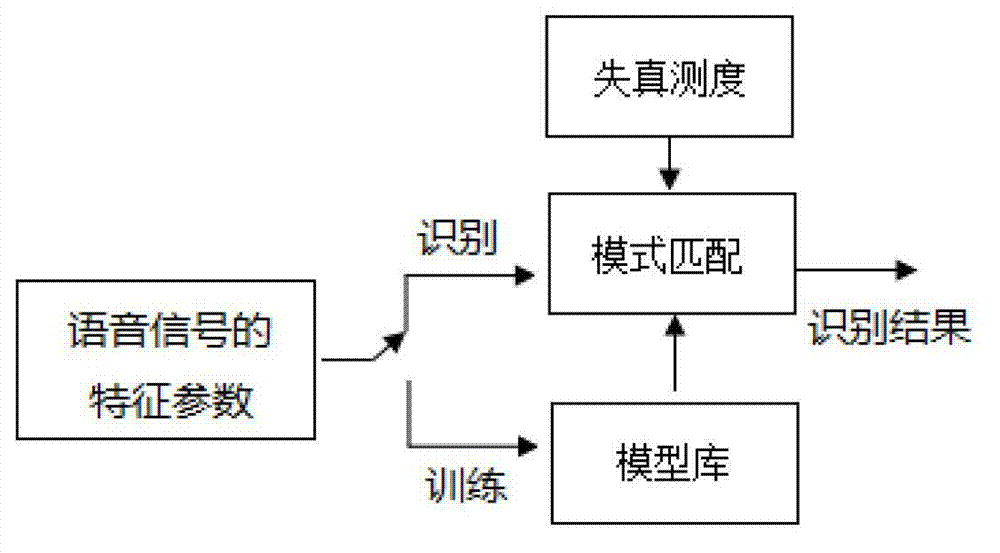

The invention belongs to the technical field of speech recognition and relates to a speech recognition method and system. The method includes the following steps: speech signals are acquired; analog-to-digital conversion is performed on the speech signals to generate the corresponding digital speech signals; the digital speech signals are preprocessed, speech feature parameters are extracted from the preprocessing results, and the time order in which the speech feature parameters are extracted is used to construct the corresponding feature sequence; the speech feature parameters are matched with the speech models in a template library, and the feature sequence is decoded according to a search algorithm to generate the recognition result. According to the speech recognition method and system of the invention, time-domain GFCC (gammatone frequency cepstrum coefficient) features are extracted to replace frequency-domain MFCC (mel frequency cepstrum coefficient) features, and the DCT is adopted, so that the amount of computation is reduced and computation speed and robustness are improved; the decoding model is constructed with weighted finite-state transducers, and smoothing and compression of the model are additionally applied, so that decoding speed is increased.

Owner:徐洋

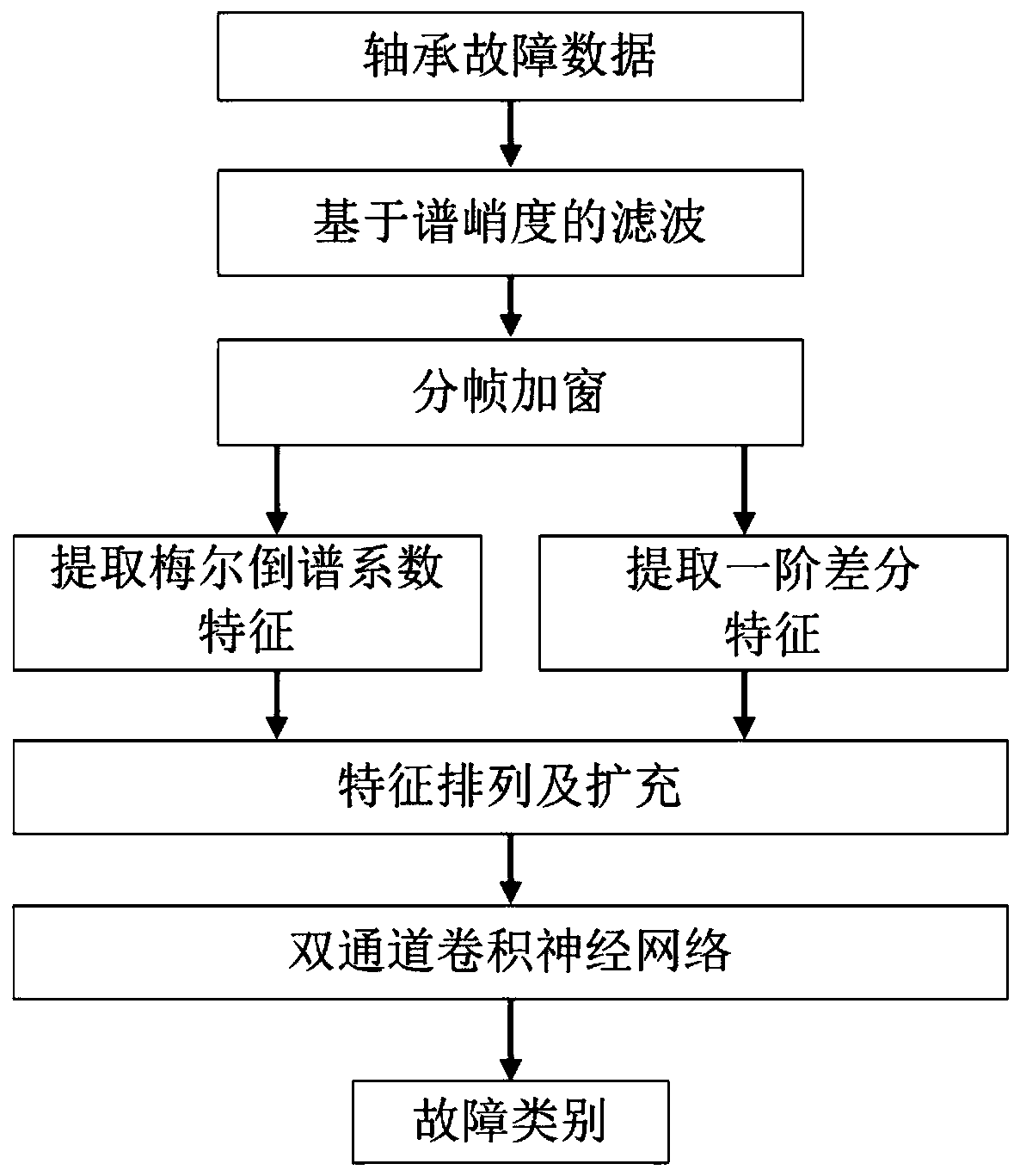

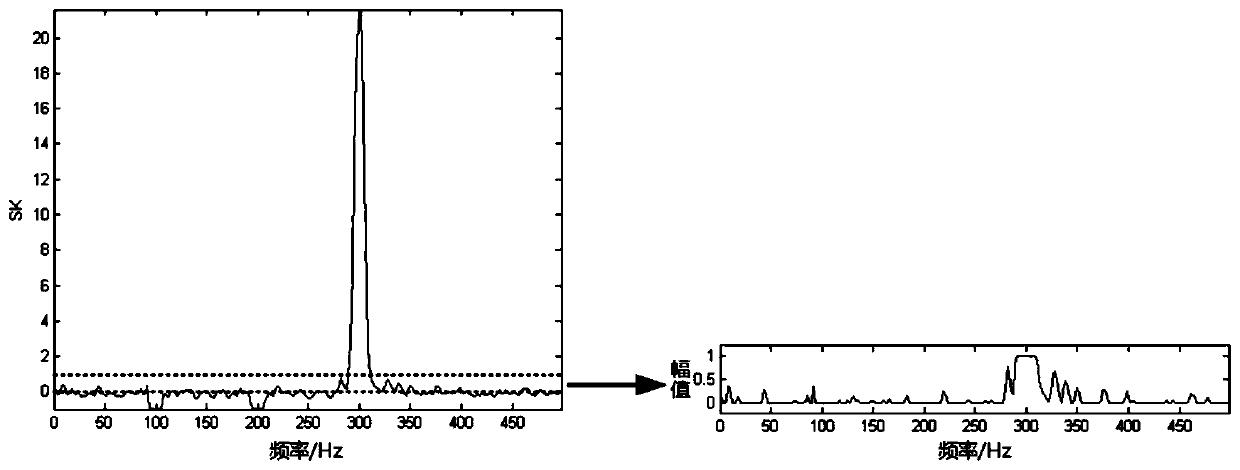

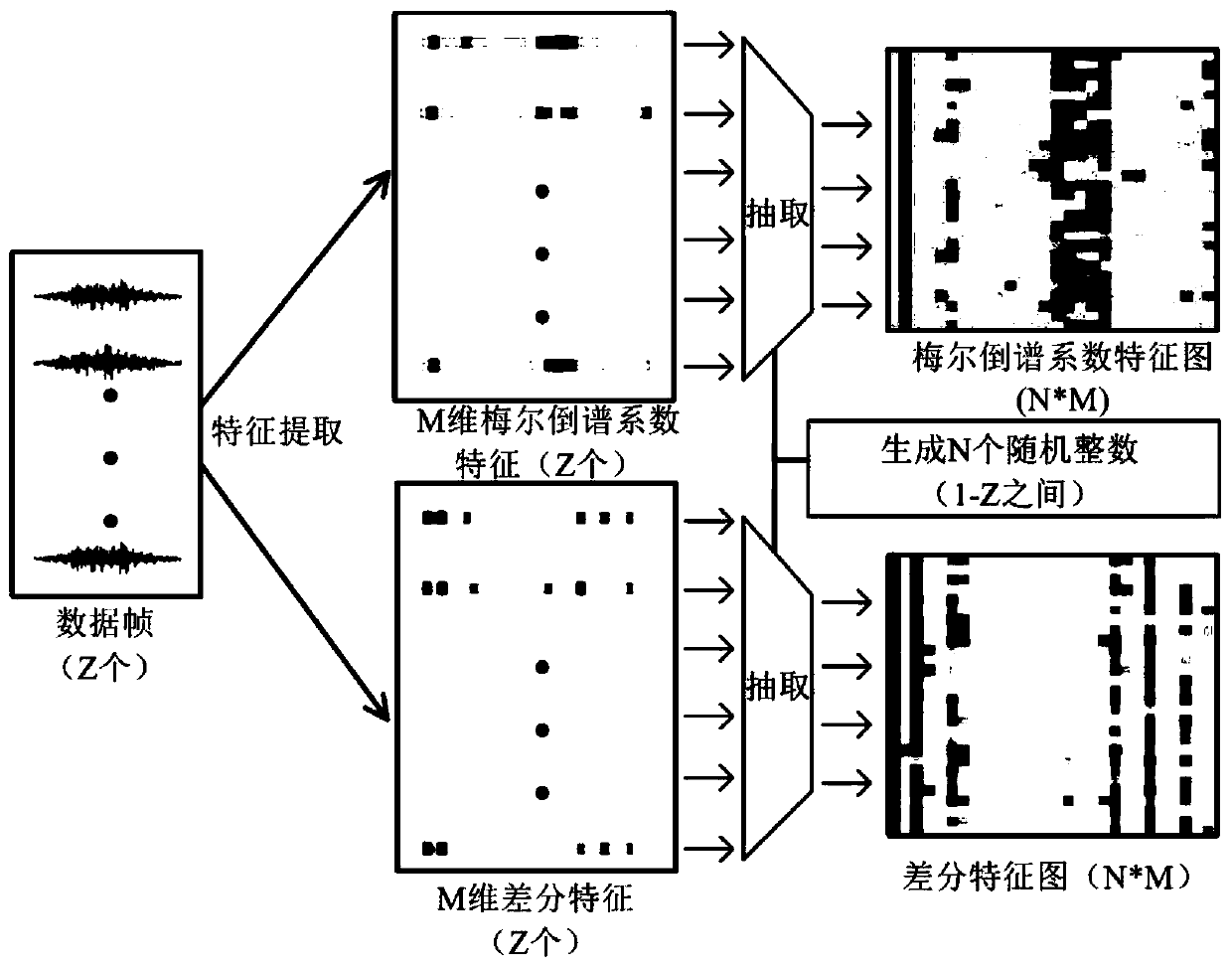

Rolling bearing fault classification method and system based on spectral kurtosis and neural network

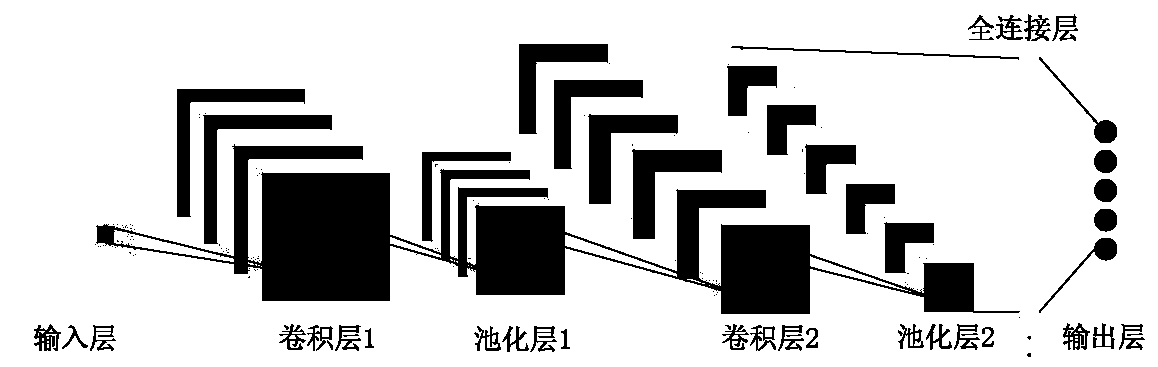

Active | CN110017991A | Filter out noise interference | Extract fault signal components | Machine part testing | Classification methods | Convolutional neural network

The invention provides a rolling bearing fault classification method and system based on spectral kurtosis and a neural network. The method comprises the following steps: filtering the bearing fault signals based on spectral kurtosis; extracting Mel cepstrum coefficient features and differential features from the filtered bearing fault signals to obtain a Mel cepstrum coefficient feature set and a differential feature set; randomly extracting a plurality of features from each of the two sets and arranging them in the order of extraction to form a Mel cepstrum coefficient feature map and a differential feature map, each represented by a two-dimensional matrix of preset size, so as to form a training set; inputting the Mel cepstrum coefficient feature map and the differential feature map in the training set into the corresponding channels of a dual-channel convolutional neural network for training to obtain a rolling bearing fault classification model; and classifying bearing fault signals received in real time with the rolling bearing fault classification model.

Owner:SHANDONG UNIV
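The "differential features" in the abstract above are commonly computed as frame-to-frame differences of the cepstral coefficients. The following is a minimal sketch under that assumption; the function name `delta_features` and the zero-padding of the first frame are illustrative choices, not details taken from the patent.

```python
def delta_features(frames):
    """First-order difference between consecutive frames of coefficients.

    `frames` is a list of equal-length coefficient vectors, one per time frame.
    The first frame has no predecessor, so its delta is set to zeros
    (an assumption made here to keep the output the same length as the input).
    """
    if not frames:
        return []
    deltas = [[0.0] * len(frames[0])]
    for prev, cur in zip(frames, frames[1:]):
        deltas.append([c - p for p, c in zip(prev, cur)])
    return deltas


# Three frames of two coefficients each (made-up numbers).
mfcc_frames = [[1.0, 2.0], [3.0, 5.0], [2.0, 5.0]]
print(delta_features(mfcc_frames))
```

The coefficient frames and their deltas could then each be stacked into a two-dimensional matrix, one per network channel, as the abstract describes.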

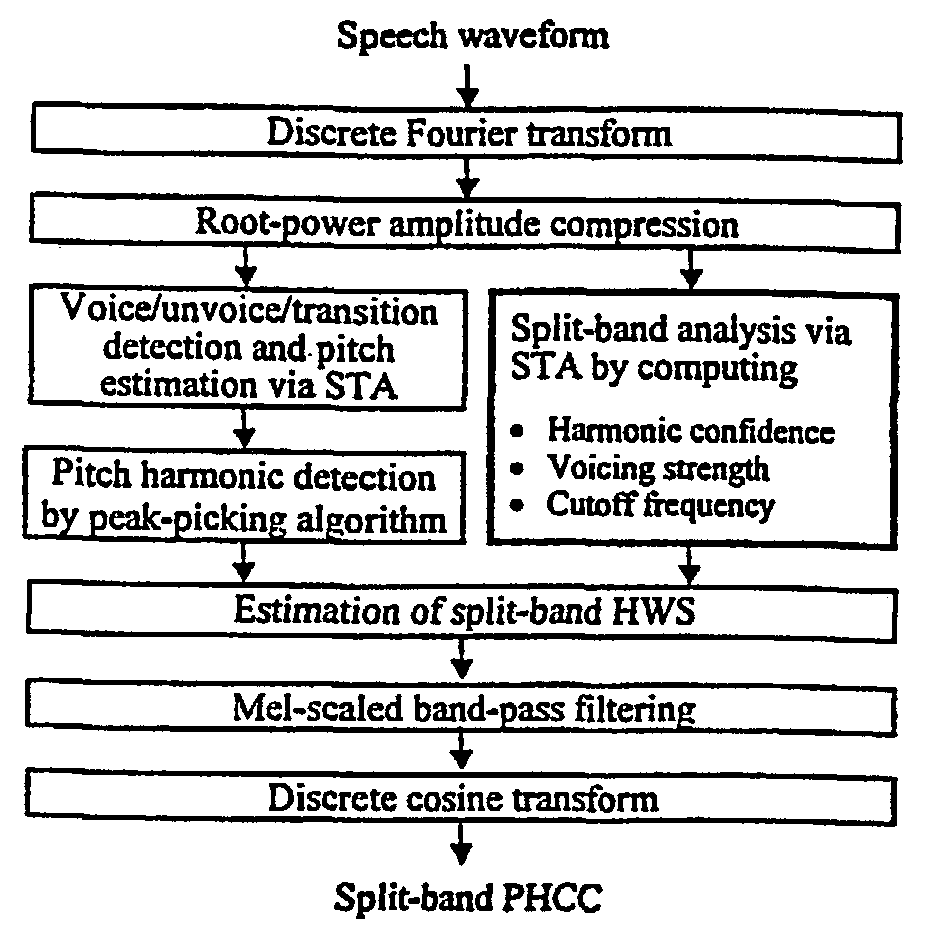

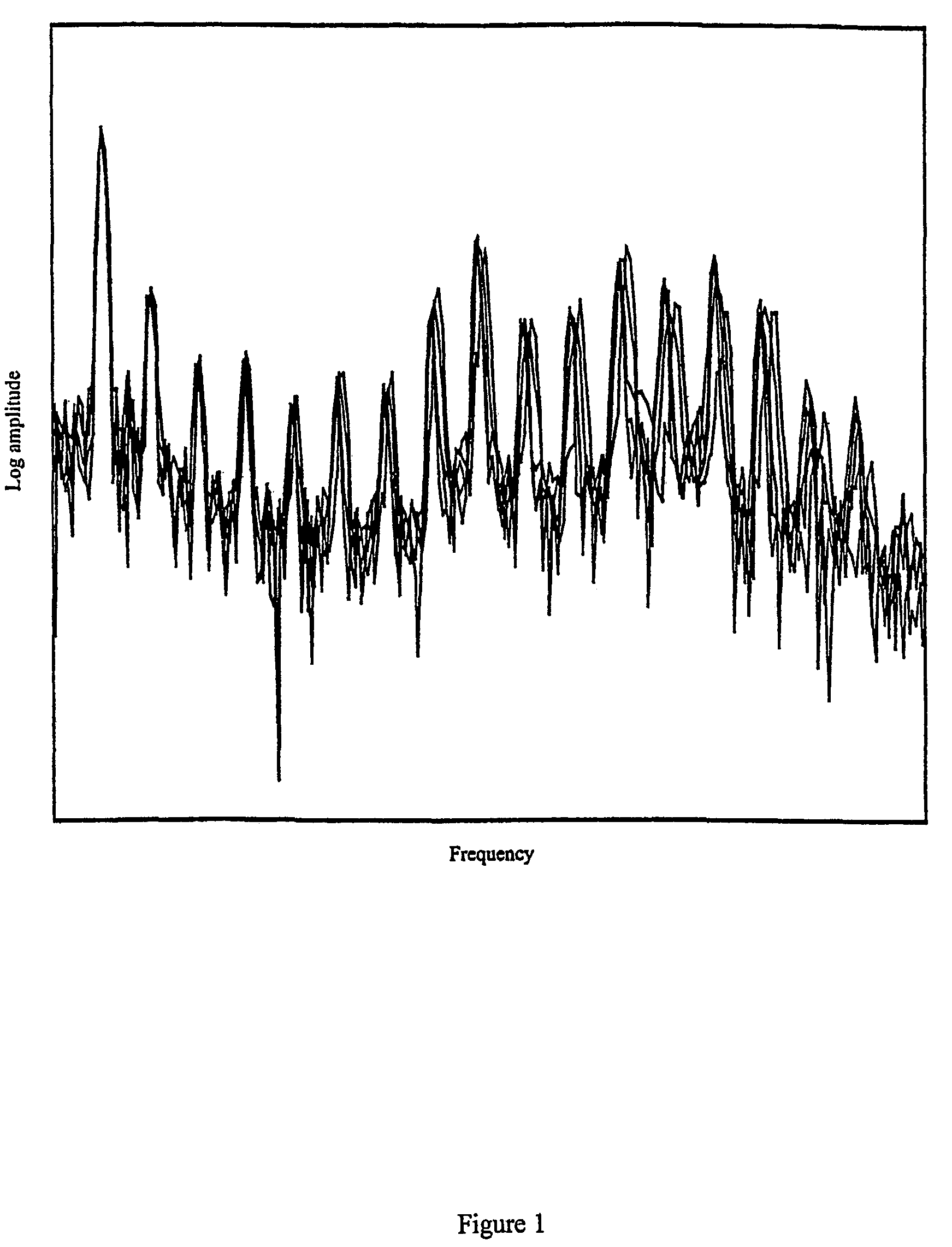

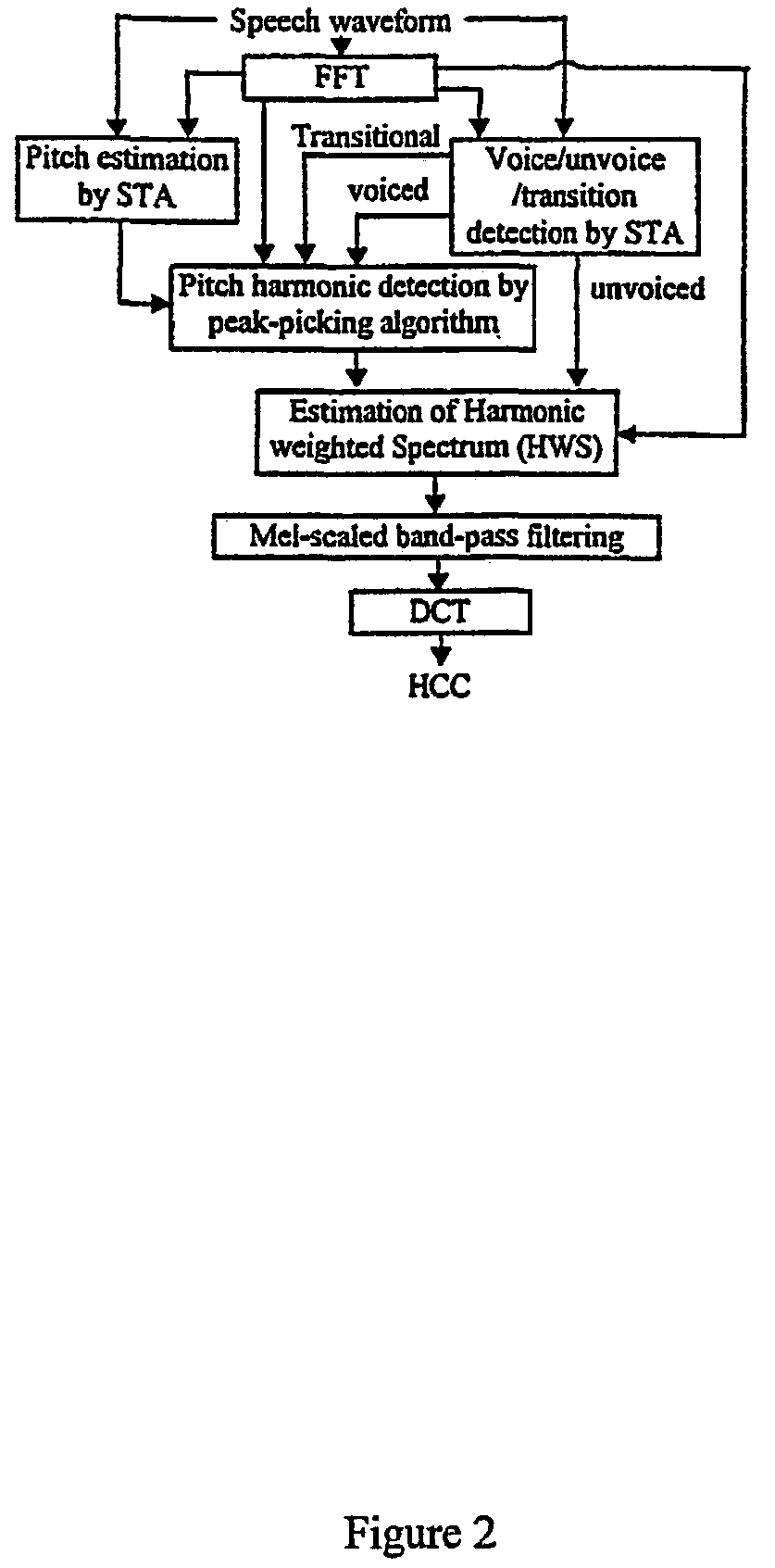

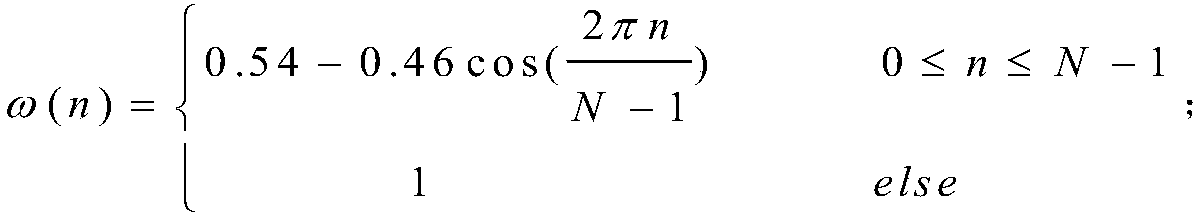

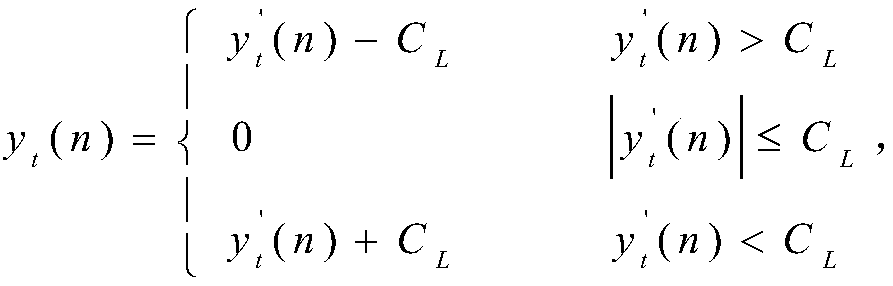

Perceptual harmonic cepstral coefficients as the front-end for speech recognition

Active | US7337107B2 | Accurate and robust pitch estimation | Reduce errors | Speech recognition | Bandpass filtering | Harmonic

Pitch estimation and classification into voiced, unvoiced and transitional speech were performed by a spectro-temporal auto-correlation technique. A peak picking formula was then employed. A weighting function was then applied to the power spectrum. The harmonics weighted power spectrum underwent mel-scaled band-pass filtering, and the log-energy of the filter's output was discrete cosine transformed to produce cepstral coefficients. A within-filter cubic-root amplitude compression was applied to reduce amplitude variation without compromise of the gain invariance properties.

Owner:RGT UNIV OF CALIFORNIA
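The last stage described in the abstract above, taking the log-energy of each filter output and applying a discrete cosine transform, can be sketched as follows. This is only an illustration of that final step: the pitch estimation, harmonic weighting, and cubic-root compression are not reproduced, the filter-bank energies are assumed to be given, and the names `dct_ii` and `cepstral_coefficients` and the energy floor are hypothetical.

```python
import math


def dct_ii(values, num_coeffs):
    """Unnormalized DCT-II of `values`, returning the first `num_coeffs` terms."""
    n = len(values)
    return [
        sum(v * math.cos(math.pi * k * (i + 0.5) / n) for i, v in enumerate(values))
        for k in range(num_coeffs)
    ]


def cepstral_coefficients(filter_energies, num_coeffs):
    """Log-compress filter-bank energies, then decorrelate them with a DCT."""
    floor = 1e-10  # assumed floor to avoid log(0) on empty bands
    log_energies = [math.log(max(e, floor)) for e in filter_energies]
    return dct_ii(log_energies, num_coeffs)


# Four filter-bank energies (made-up numbers), two output coefficients.
print(cepstral_coefficients([1.0, 2.0, 4.0, 8.0], 2))
```

A flat set of energies yields a nonzero zeroth coefficient and zeros elsewhere, which is why the higher coefficients are read as describing the shape of the spectral envelope.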

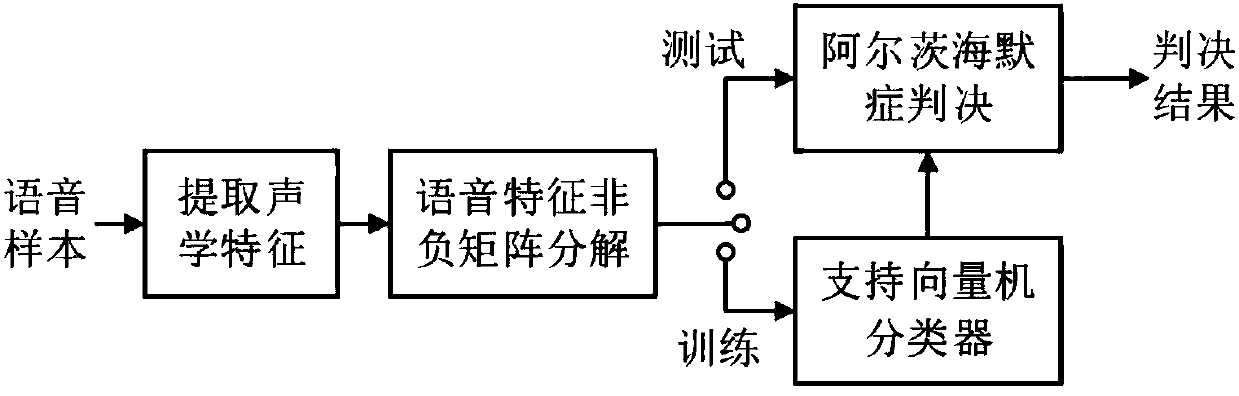

Alzheimer's disease preliminary screening method based on speech feature non-negative matrix decomposition

Inactive | CN108198576A | Characterize the difference in characteristics | The result is valid | Speech analysis | Support vector machine classifier | Screening method

The invention discloses an Alzheimer's disease preliminary screening method based on speech feature non-negative matrix decomposition. The method includes the following steps: extracting acoustic features, including fundamental frequency, energy, harmonic-to-noise ratios, formants, glottal waves, linear prediction coefficients, and constant-Q cepstrum coefficients, from speech samples of Alzheimer's patients and healthy subjects, and splicing the features into a feature matrix; decomposing the feature matrix with the non-negative matrix decomposition algorithm to obtain a dimensionality-reduced feature matrix; training a support vector machine classifier with the dimensionality-reduced feature matrix as input; and feeding the dimensionality-reduced feature matrix of a test speech sample into the trained support vector machine classifier to determine whether the test speech comes from a healthy subject or an Alzheimer's patient. The invention uses non-negative matrix decomposition to reduce the dimensionality of the high-dimensional acoustic features; the dimensionality-reduced feature matrix is more discriminative, so the method achieves better results in preliminary screening for Alzheimer's disease.

Owner:SOUTH CHINA UNIV OF TECH

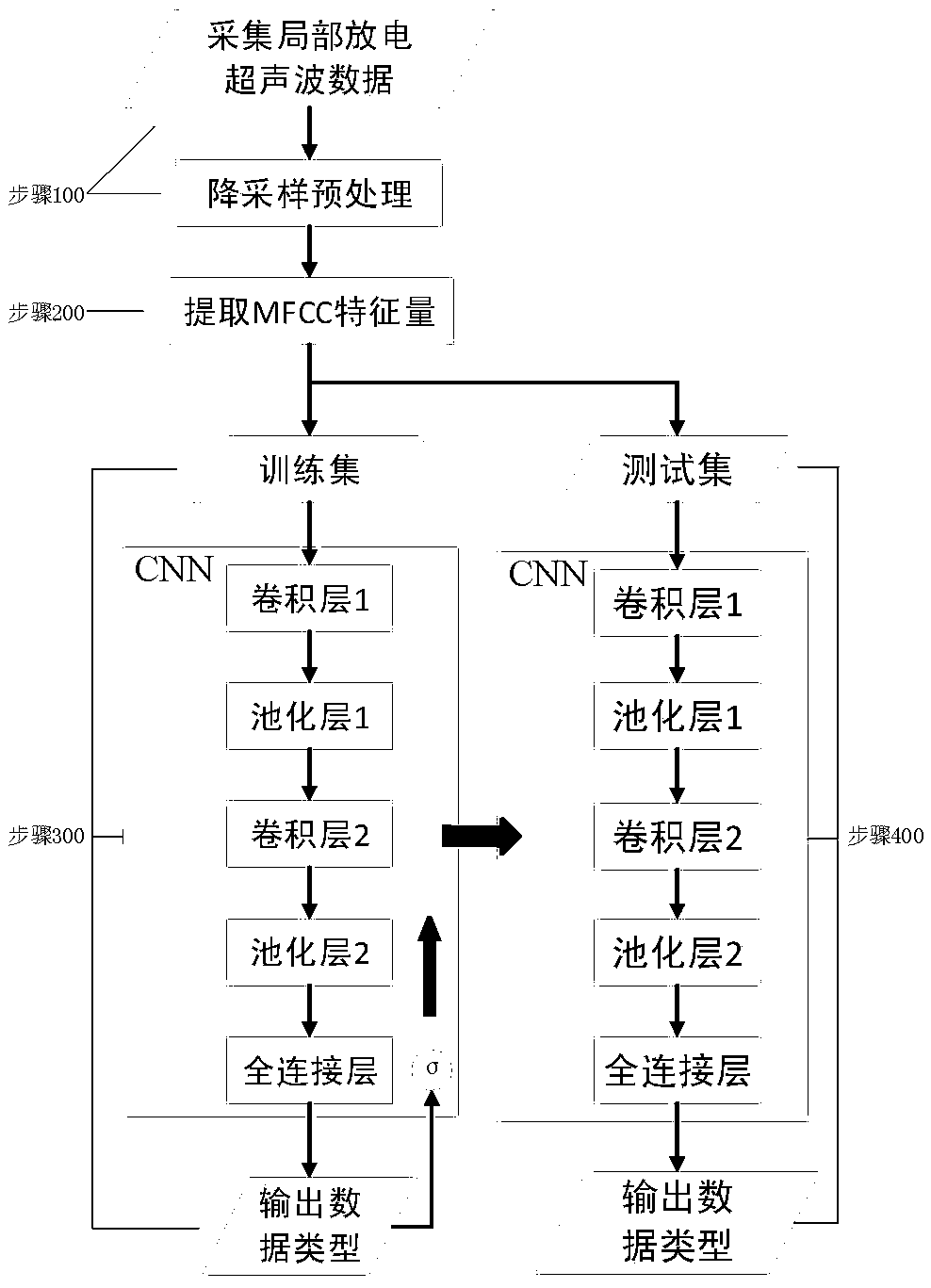

Judgment method of ultra-high voltage equipment local discharge detection data

Active | CN109856517A | Improve accuracy | Reduce or avoid manual intervention | Testing dielectric strength | Pattern recognition | Ultra high voltage

The invention discloses a judgment method of ultra-high voltage equipment local discharge detection data. The judgment method comprises the following steps: sampling a continuous ultrasonic-frequency signal and reducing it to a continuous sound-wave signal within the range audible to the human ear; continuously cutting frames of a set time length from the sound-wave signal; extracting the Mel frequency cepstrum coefficients of each frame as the fault discharge feature to be identified; and sending the extracted feature into a convolutional neural network (CNN), whose output classification layer contains a fault classifier formed in advance by learning known fault discharge features. The CNN analyzes the feature to be identified, identifies it with the fault classifier, and outputs the result. Because the CNN is used directly to learn and identify the fault type, identification accuracy is improved and manual intervention is reduced or avoided.

Owner:STATE GRID CORP OF CHINA +1
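The framing step in the abstract above, cutting the signal into frames of a set time length, can be sketched as below. The function name `split_into_frames`, the use of non-overlapping frames, and the dropping of a trailing partial frame are assumptions made for illustration; the patent abstract does not specify them.

```python
def split_into_frames(samples, sample_rate, frame_seconds):
    """Cut `samples` into consecutive, non-overlapping frames of fixed duration.

    A trailing partial frame is dropped (an assumption of this sketch).
    """
    frame_len = int(round(sample_rate * frame_seconds))
    if frame_len <= 0:
        raise ValueError("frame length must be at least one sample")
    return [
        samples[i:i + frame_len]
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


# Ten samples at 4 samples per second, cut into one-second frames.
print(split_into_frames(list(range(10)), sample_rate=4, frame_seconds=1.0))
```

Each frame would then be passed to a cepstral feature extractor before classification.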

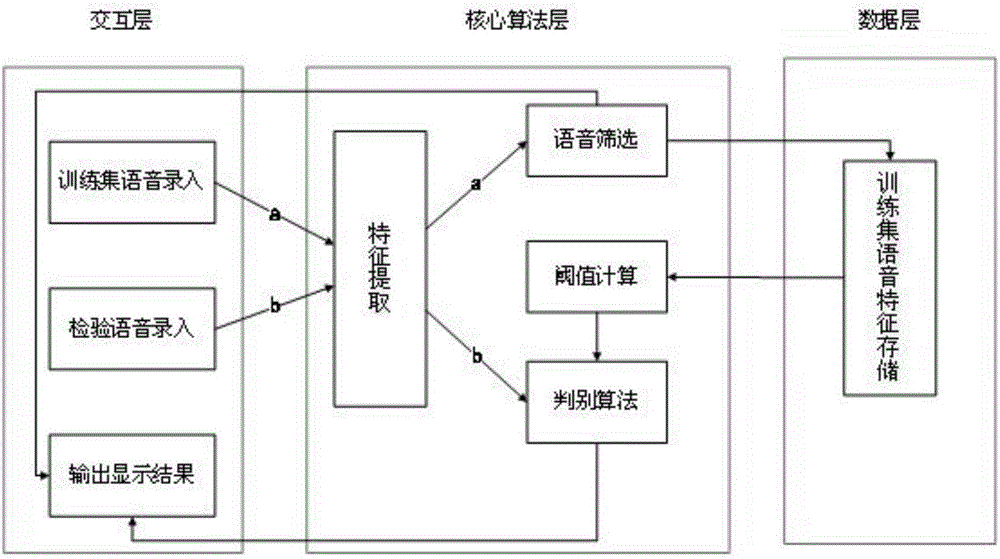

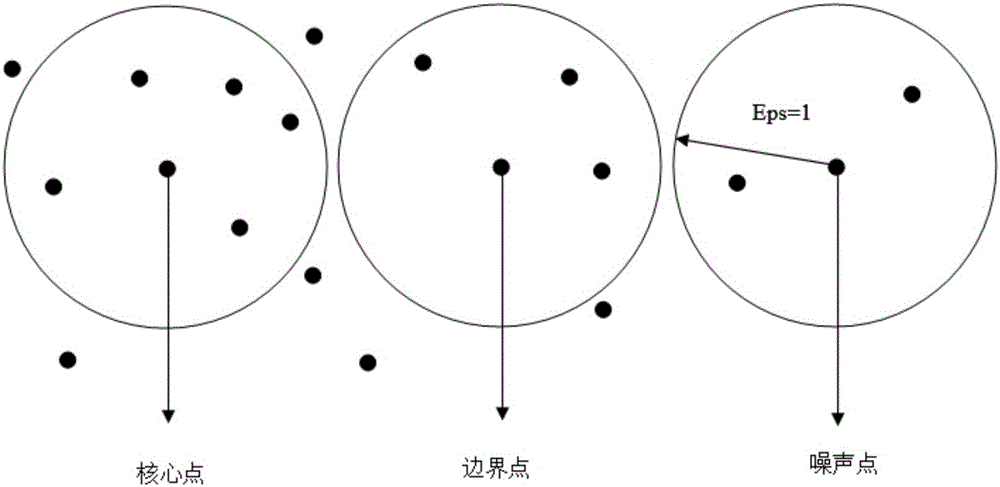

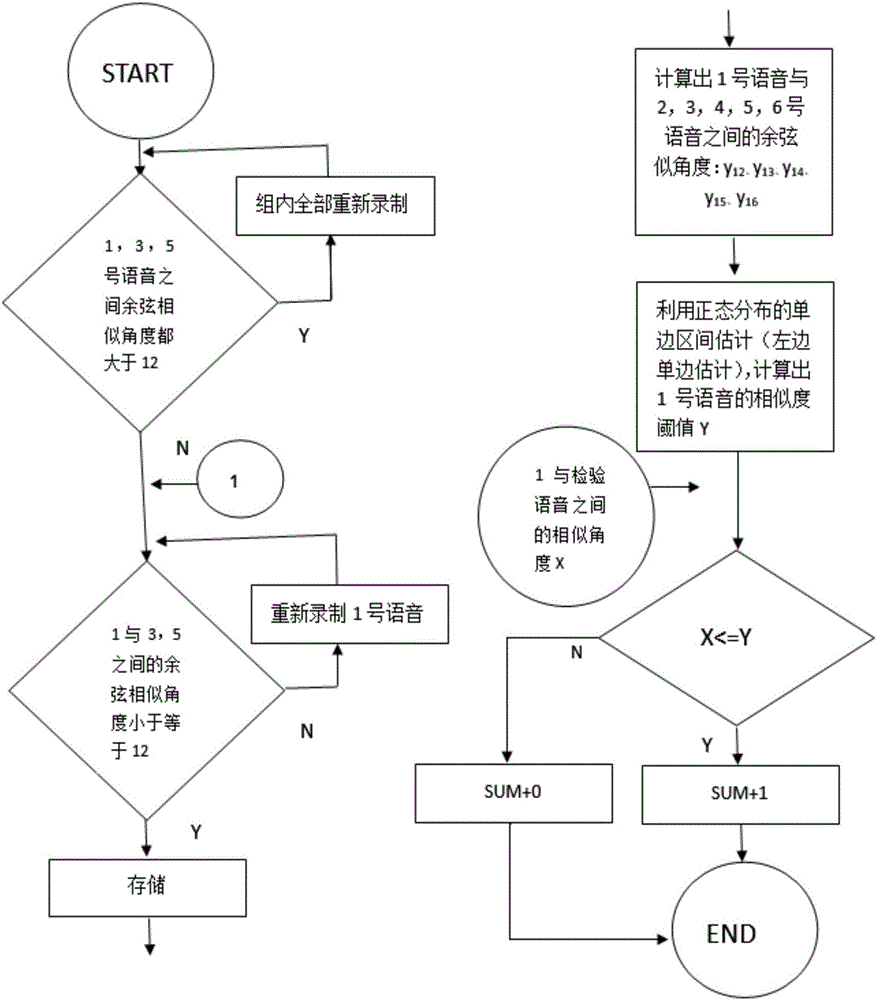

Voiceprint identification method based on DBSCAN algorithm

The invention discloses a voiceprint identification method based on the DBSCAN algorithm. The method comprises the steps of voice feature extraction, voice fragment similarity evaluation, training set voice screening, and determination of the examined voice. Voice feature extraction uses Mel cepstrum coefficients. Voice fragment similarity evaluation uses cosine similarity. Training set voice screening uses a fixed threshold. Determination of the examined voice uses an improved DBSCAN algorithm. The method does not require a large training set: it needs only a few screened training voices and uses the distribution of those training voices to judge the examined voice. The user experience is good and the identification rate is high.

Owner:HOHAI UNIV
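The similarity evaluation and fixed-threshold screening described above can be sketched as follows. This is an illustrative reading, not the patented procedure: comparing each candidate against a single reference vector, and the names `cosine_similarity` and `screen_training_set`, are assumptions, and the improved DBSCAN step is not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def screen_training_set(reference, candidates, threshold):
    """Keep the candidate vectors whose similarity to `reference` meets a fixed threshold."""
    return [c for c in candidates if cosine_similarity(reference, c) >= threshold]


# Made-up two-dimensional feature vectors; only the first is close to the reference.
print(screen_training_set([1.0, 0.0], [[2.0, 0.0], [0.0, 3.0], [1.0, 1.0]], 0.9))
```

Cosine similarity ignores vector length, so two fragments recorded at different loudness can still score as similar if their feature directions agree.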

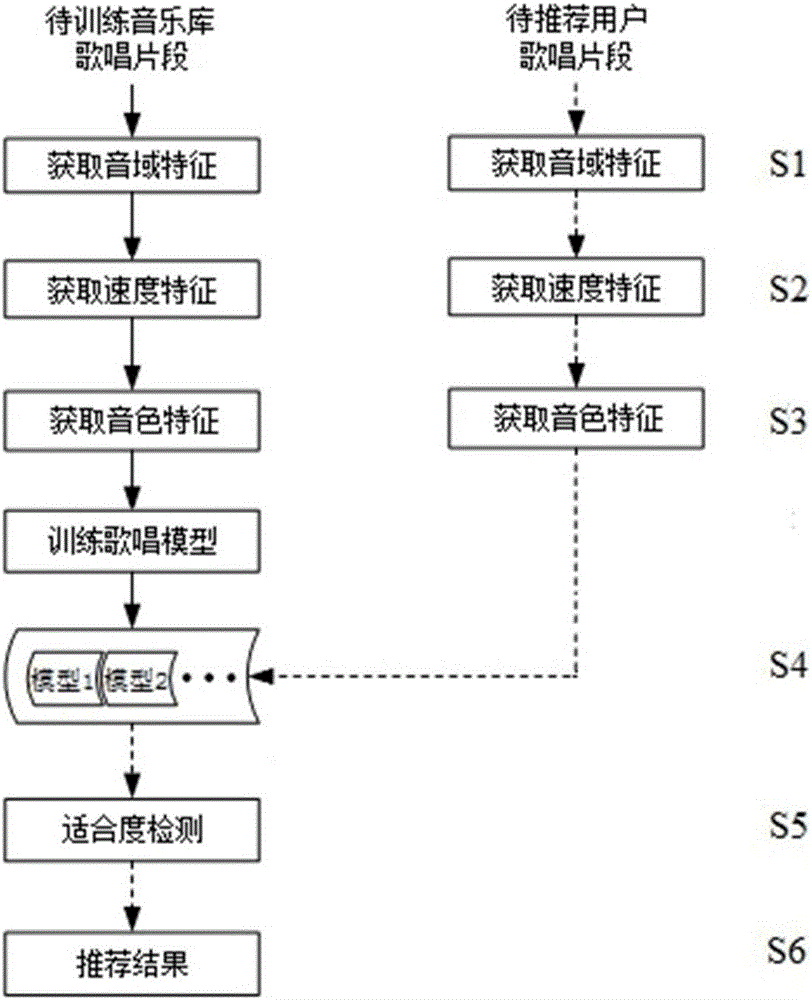

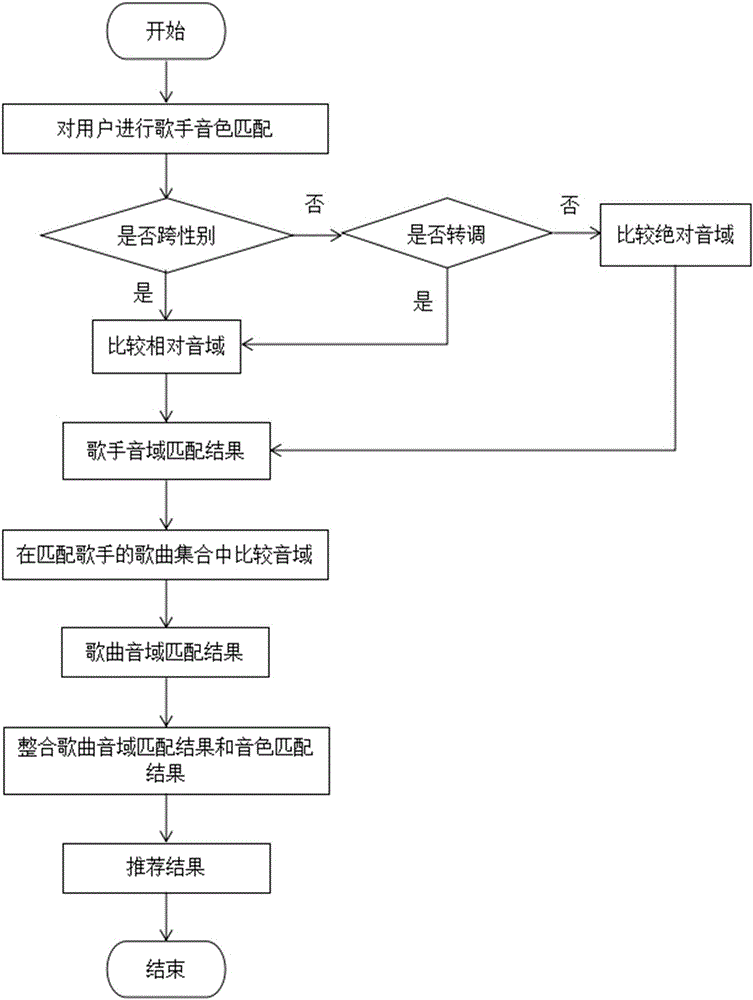

Individualized song recommending system based on vocal music characteristics

Active | CN106095925A | Verify accuracy | Verify feasibility | Special data processing applications | Mel-frequency cepstrum | Personalization

An embodiment of the invention discloses an individualized song recommending system based on vocal music characteristics. The method includes the following steps. Feature extraction: the voice register, speed, and tone features of the singing data are extracted, where the voice register features include absolute and relative voice register, the speed features include the number of beats per minute, and the tone features include a Gaussian mixture model trained on Mel frequency cepstrum coefficients. Song recommendation: the corresponding song in a music library is found through a key sound matching algorithm from a snippet sung by the user, and voice register conformity detection, song conformity detection, and singer conformity detection are conducted. Singers and songs are then recommended according to the extracted user features. The method can evaluate whether the current song is suitable for the user to sing, and can further recommend singers matched to the user's vocal ability and songs suitable for the user to sing. By approaching recommendation from the perspective of the user's own singing, it expands the scope of traditional music recommendation and offers higher practical value.

Owner:BEIJING UNIV OF POSTS & TELECOMM

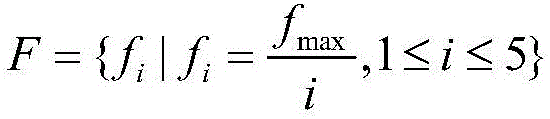

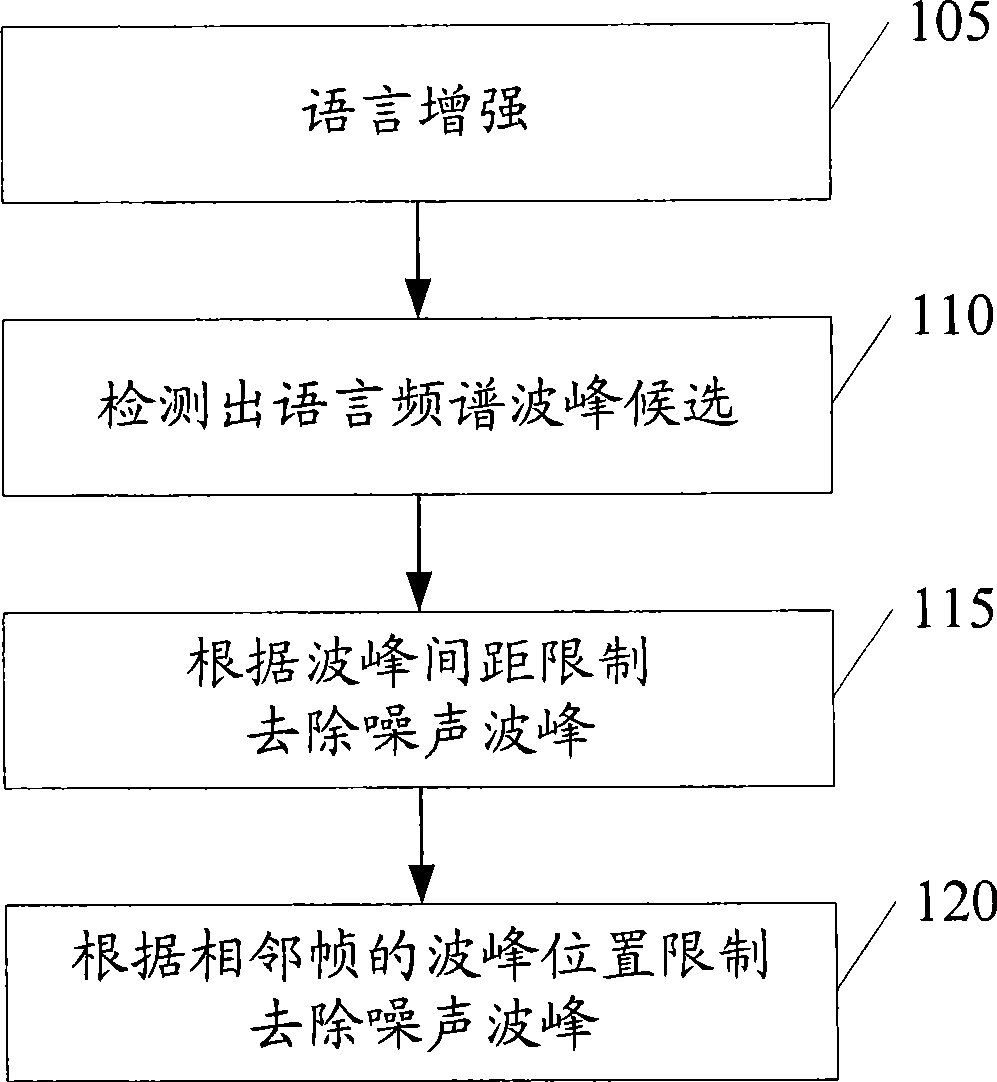

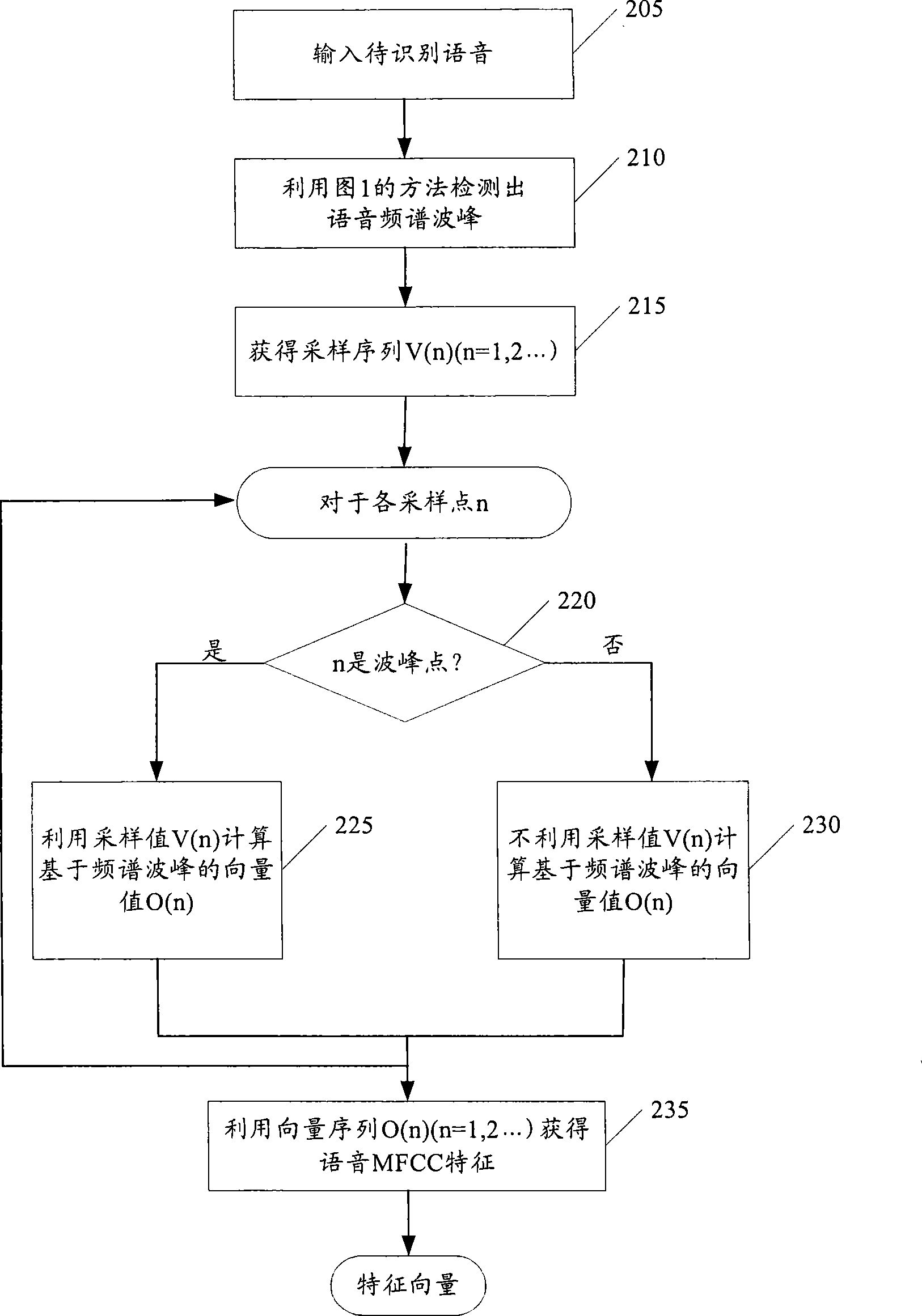

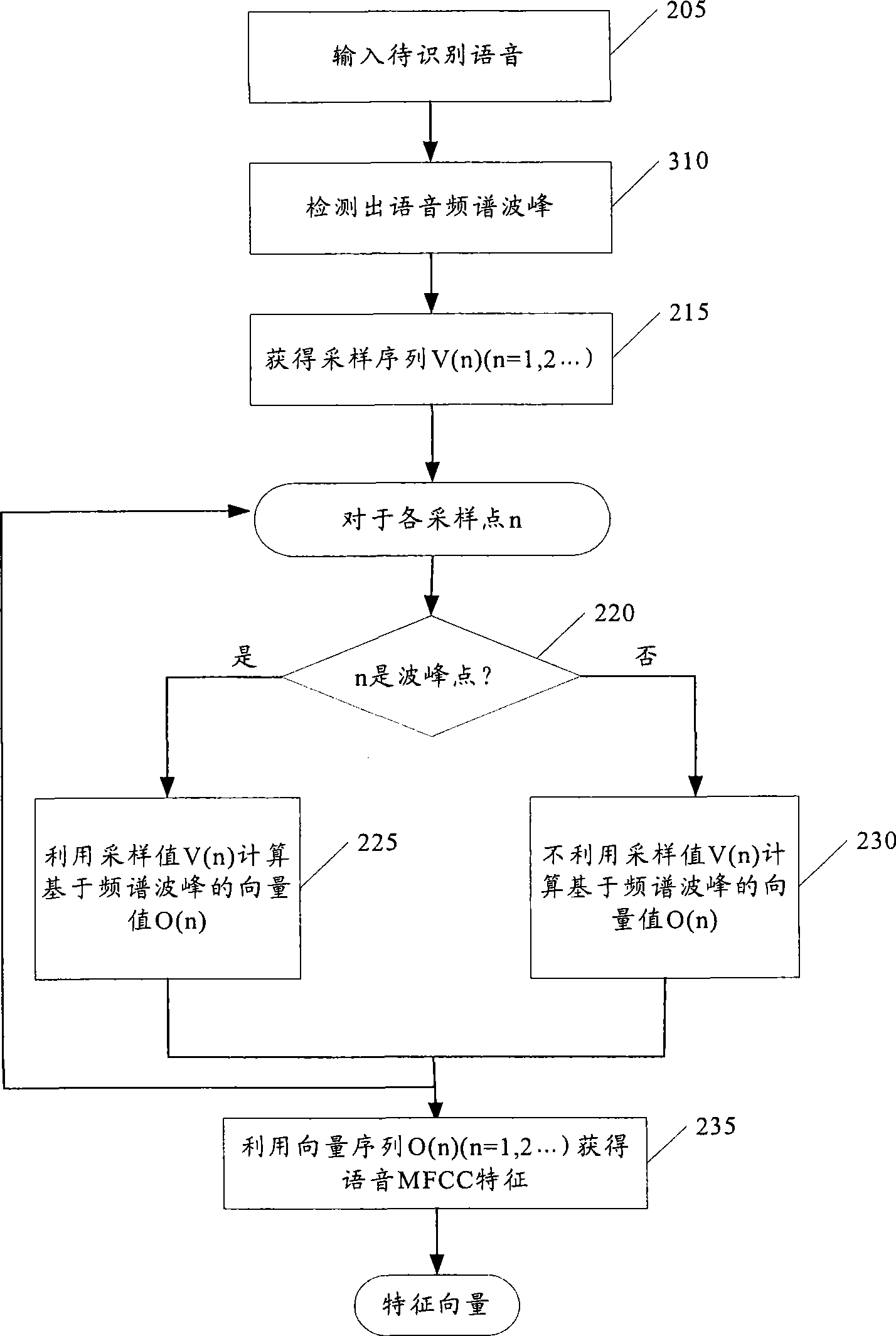

Method and system for detecting phonetic frequency spectrum wave crest and phonetic identification

Inactive | CN101465122A | Improved robustness against noise | Speech recognition | Frequency spectrum | Speech identification

The invention provides a method and a device for detecting voice frequency spectrum wave crests, as well as a voice recognition method and system. The method for detecting voice frequency spectrum wave crests comprises the following steps: detecting wave crest candidates from the power spectrum of the voice; eliminating noise wave crests from the candidates according to the spacing between wave crests and the positions of adjacent wave crests; and thereby detecting the voice frequency spectrum wave crests. During detection, constraints on the spacing between wave crests and on adjacent wave crest positions are used to remove noise wave crests. Furthermore, the power values at the detected wave crests replace the entire power spectrum when the Mel cepstrum coefficient features of the voice are extracted, which increases the noise robustness of voice recognition without increasing the dimension of the voice features.

Owner:KK TOSHIBA
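One simple way to combine candidate detection with a spacing constraint, in the spirit of the abstract above, is sketched below. The specific rule used here (keep the strongest peaks first and drop any candidate closer than `min_spacing` bins to a peak already kept) is an assumption for illustration; the abstract does not state the patent's exact elimination rule, and `find_peaks` is a hypothetical name.

```python
def find_peaks(power, min_spacing):
    """Return indices of spectral peaks at least `min_spacing` bins apart.

    Candidates are local maxima of the power spectrum. They are visited from
    strongest to weakest, and a candidate is discarded when it lies closer
    than `min_spacing` bins to a peak that has already been kept.
    """
    candidates = [
        i for i in range(1, len(power) - 1)
        if power[i] > power[i - 1] and power[i] >= power[i + 1]
    ]
    kept = []
    for i in sorted(candidates, key=lambda idx: power[idx], reverse=True):
        if all(abs(i - j) >= min_spacing for j in kept):
            kept.append(i)
    return sorted(kept)


# Made-up power spectrum: the weak peak at bin 3 sits too close to bin 1.
spectrum = [0, 5, 1, 4, 0, 0, 9, 0]
print(find_peaks(spectrum, min_spacing=3))
```

The power values at the surviving indices could then stand in for the full spectrum when cepstral features are computed.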

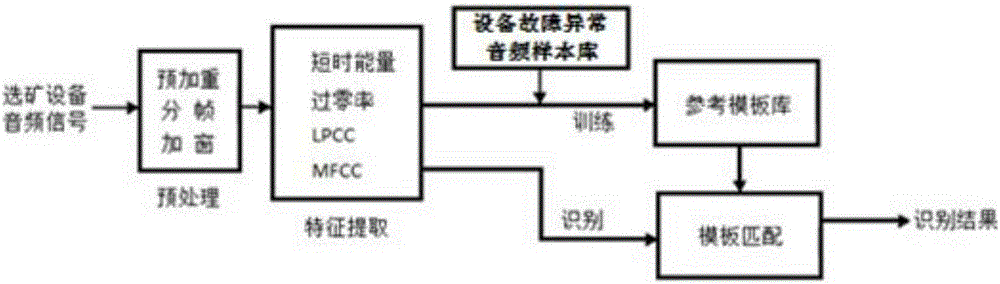

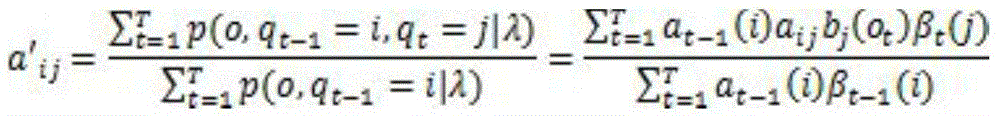

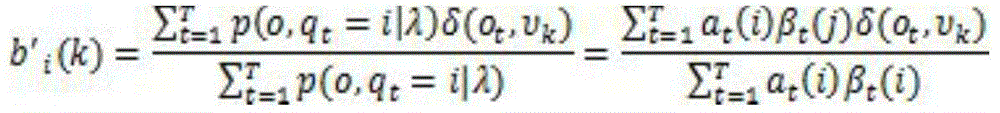

Ore dressing equipment fault abnormity audio analyzing and identifying method based on HMM

Inactive | CN105244038A | Improve fault recognition rate | Speech analysis | Transfer probability | Hidden Markov model

The invention provides an ore dressing equipment fault abnormity audio analyzing and identifying method based on an HMM (Hidden Markov Model), and relates to the technical field of digital audio processing. The method includes the following steps: inputting an ore dressing equipment audio signal in WAV format; preprocessing the collected audio sample; extracting linear prediction cepstrum coefficients (LPCC), Mel frequency cepstrum coefficients (MFCC), and other features as the feature parameters; training with the Baum-Welch algorithm to obtain a state transition probability matrix; and identifying with the Viterbi algorithm, where the maximum probability of the unknown audio signal along the state transition process is calculated and the signal is assigned to the model that yields that maximum probability. The method can effectively detect abnormal sound in an audio signal and thereby identify fault abnormities of ore dressing equipment.

Owner:JINLING INST OF TECH
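The identification step described above, scoring an observation sequence against each trained model with the Viterbi algorithm and picking the best-scoring model, can be sketched as follows. The sketch assumes discrete observation symbols and already-trained parameters (Baum-Welch training is not shown); the names `viterbi` and `classify` and the toy models are hypothetical.

```python
import math


def _log(p):
    """Natural log that maps zero probability to negative infinity."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(observations, start_p, trans_p, emit_p):
    """Return (log-probability, state path) of the most likely state sequence."""
    n_states = len(start_p)
    scores = [_log(start_p[s]) + _log(emit_p[s][observations[0]]) for s in range(n_states)]
    back = []
    for obs in observations[1:]:
        new_scores, pointers = [], []
        for s in range(n_states):
            # Best predecessor state for landing in state s at this step.
            best_prev = max(range(n_states), key=lambda r: scores[r] + _log(trans_p[r][s]))
            pointers.append(best_prev)
            new_scores.append(scores[best_prev] + _log(trans_p[best_prev][s]) + _log(emit_p[s][obs]))
        scores = new_scores
        back.append(pointers)
    last = max(range(n_states), key=lambda s: scores[s])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    path.reverse()
    return scores[last], path


def classify(observations, models):
    """Pick the model name whose best path gives the highest log-probability."""
    return max(models, key=lambda name: viterbi(observations, *models[name])[0])


# Two toy two-state models over symbols {0, 1}; all numbers are made up.
models = {
    "normal": ([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]),
    "fault": ([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]),
}
print(classify([0, 0, 1], models))
```

Working in log-probabilities avoids numerical underflow on long sequences. Continuous features such as MFCCs would need either vector quantization into symbols or continuous emission densities, neither of which is covered here.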

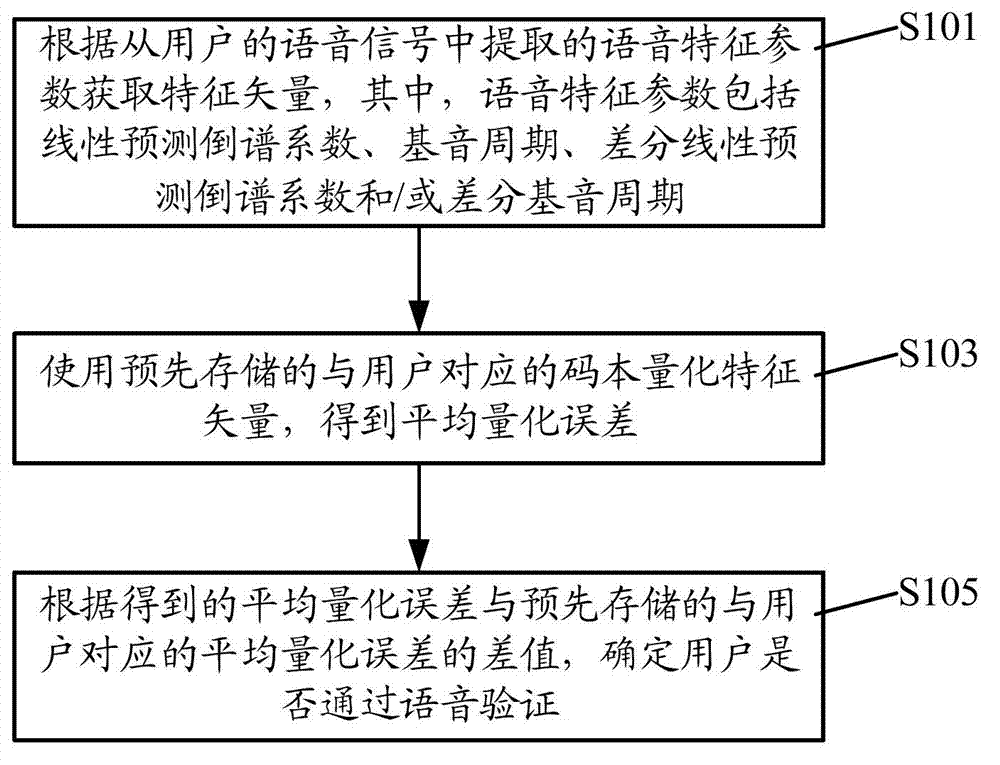

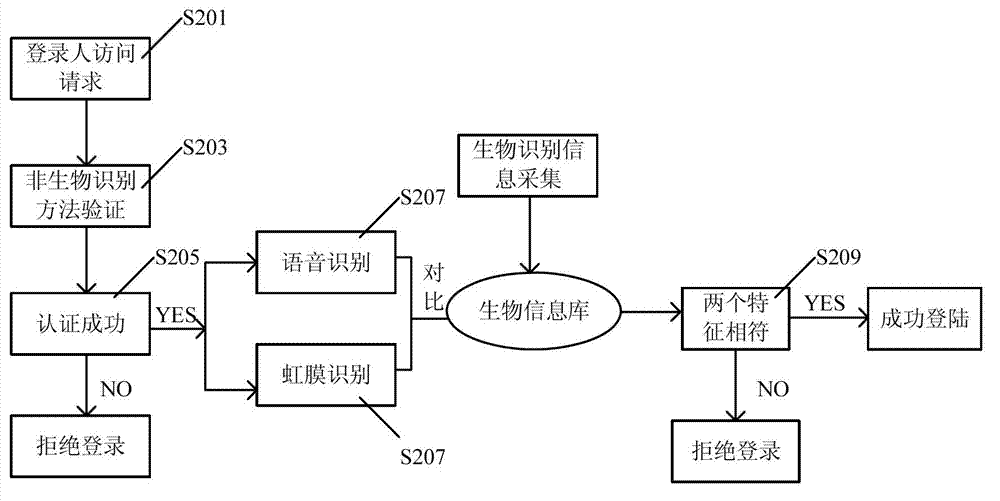

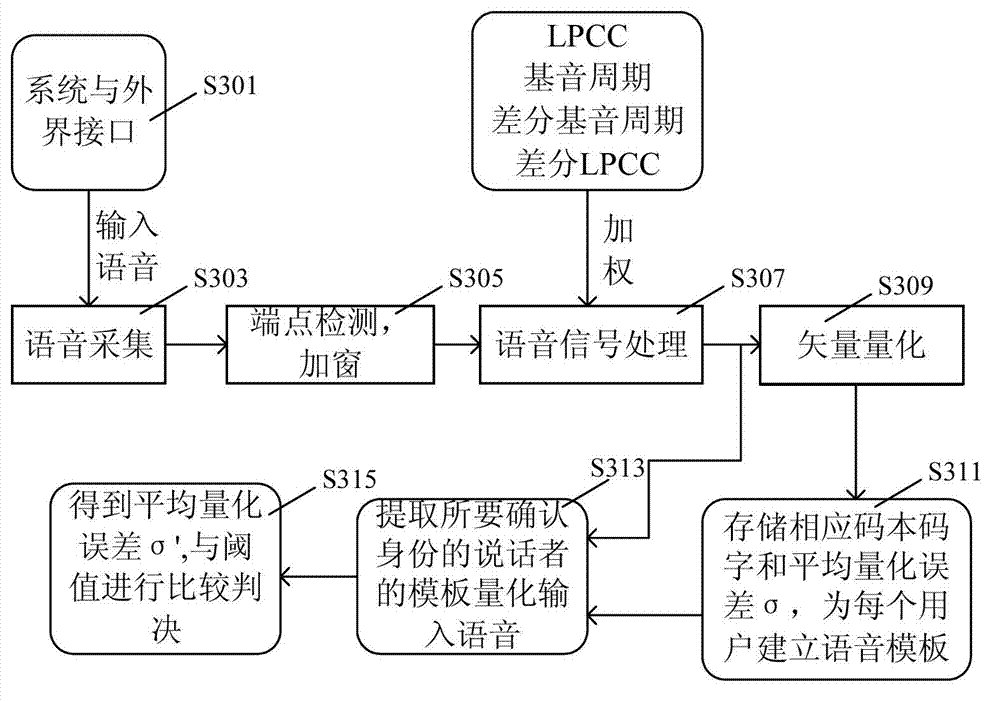

User verification method and device

Inactive | CN103207961A | Quick verification | Verified and reliable | Digital data authentication | Speech recognition | User verification | Speech verification

The invention discloses a user verification method and device. The method comprises the following steps: acquiring feature vectors from voice feature parameters extracted from the voice signals of a user, where the voice feature parameters comprise linear prediction cepstrum coefficients, pitch periods, differential linear prediction cepstrum coefficients, and/or differential pitch periods; quantizing the feature vectors with a pre-stored codebook corresponding to the user and calculating the average quantization error; and determining whether the user passes voice verification according to the difference between this average quantization error and the pre-stored average quantization error corresponding to the user. By processing the voice feature parameters extracted from the user's voice signals and comparing the result with pre-stored information, the method verifies the user's identity quickly and reliably, thereby improving system security at the login step.

Owner:DAWNING INFORMATION IND BEIJING
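The quantization-error comparison described above can be sketched as follows. The sketch assumes Euclidean distance to the nearest codeword as the quantization error and a simple absolute tolerance on the difference from the enrolled error; neither detail is stated in the abstract, and the names `average_quantization_error` and `verify` are hypothetical.

```python
import math


def average_quantization_error(vectors, codebook):
    """Mean distance from each feature vector to its nearest codeword."""
    total = 0.0
    for v in vectors:
        total += min(math.dist(v, c) for c in codebook)
    return total / len(vectors)


def verify(vectors, codebook, enrolled_error, tolerance):
    """Accept when the error differs from the enrolled error by at most `tolerance`."""
    error = average_quantization_error(vectors, codebook)
    return abs(error - enrolled_error) <= tolerance


# Made-up codebook and feature vectors.
codebook = [[0.0, 0.0], [10.0, 10.0]]
features = [[0.0, 1.0], [10.0, 13.0]]
print(average_quantization_error(features, codebook))
print(verify(features, codebook, enrolled_error=1.5, tolerance=1.0))
```

A speaker whose features fall far from every codeword in the enrolled codebook produces a much larger average error, which is what the threshold comparison is meant to catch.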
