Pre-training framework with two-stage decoder for language understanding and generation

A decoder and pre-training technology for pre-training frameworks used in language understanding and generation. It addresses problems such as insufficient text generation ability, disfluent generated text, and weak continuation (subsequent generation) ability, and achieves convenient access to context information and good pre-training quality.


Embodiment approach

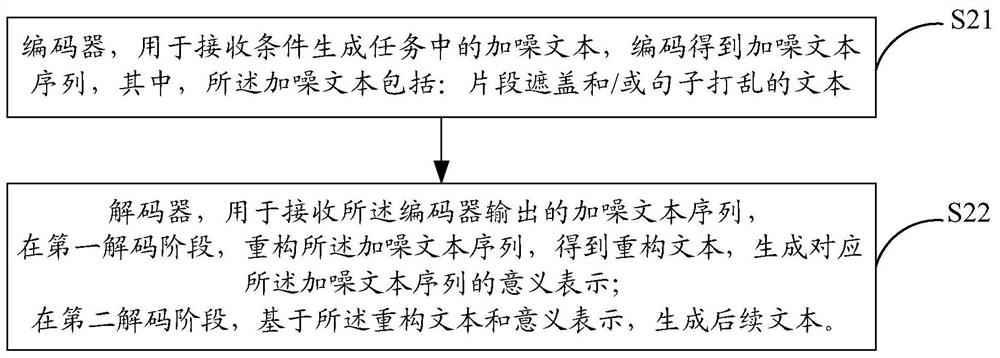

[0056] In one implementation, the conditional generation task includes a text summarization task, and the framework comprises:

[0057] an encoder, configured to receive the text of the text summarization task and encode it to obtain a text sequence;

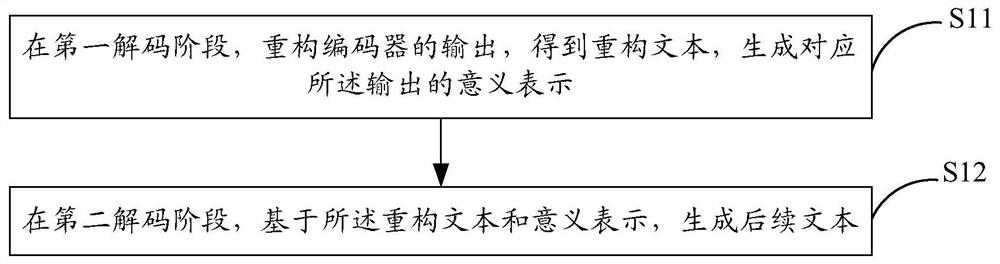

[0058] a decoder, configured to receive the text sequence output by the encoder, wherein:

[0059] in the first decoding stage, the text sequence is reconstructed into a coherent, intelligible text sequence, and a meaning representation of the text sequence is generated;

[0060] in the second decoding stage, a text summary is generated based on the coherent text sequence and the meaning representation.
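The data flow of paragraphs [0057] to [0060] (encoder, then decoding stage 1, then decoding stage 2) can be sketched as below. This is a minimal, hypothetical Python sketch, not the patented model: the neural components are replaced by toy stand-ins (whitespace tokenization as the "encoder", removal of immediately repeated tokens as "reconstruction", token counts as the "meaning representation", and frequency-based token selection as "summary generation"). The names `TwoStageSummarizer`, `toy_encode`, `toy_stage1`, `toy_stage2` and the parameter `k` are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List, Tuple

Tokens = List[str]
Meaning = Counter  # hypothetical stand-in for a learned meaning representation


@dataclass
class TwoStageSummarizer:
    """Hypothetical wiring: encoder -> decoder stage 1 -> decoder stage 2."""
    encode: Callable[[str], Tokens]
    stage1: Callable[[Tokens], Tuple[Tokens, Meaning]]
    stage2: Callable[[Tokens, Meaning], str]

    def __call__(self, text: str) -> str:
        seq = self.encode(text)                # encoder: text -> text sequence
        coherent, meaning = self.stage1(seq)   # stage 1: reconstruct + meaning
        return self.stage2(coherent, meaning)  # stage 2: summary from both


def toy_encode(text: str) -> Tokens:
    # Stand-in encoder: lowercase whitespace tokenization.
    return text.lower().split()


def toy_stage1(tokens: Tokens) -> Tuple[Tokens, Meaning]:
    # Stand-in "reconstruction": drop immediately repeated tokens.
    coherent = [t for i, t in enumerate(tokens) if i == 0 or t != tokens[i - 1]]
    # Stand-in "meaning representation": token counts of the coherent sequence.
    return coherent, Counter(coherent)


def toy_stage2(coherent: Tokens, meaning: Meaning, k: int = 2) -> str:
    # Stand-in generation: keep the k most frequent tokens, in first-seen order.
    top = {tok for tok, _ in meaning.most_common(k)}
    summary: Tokens = []
    for tok in coherent:
        if tok in top and tok not in summary:
            summary.append(tok)
    return " ".join(summary)


summarize = TwoStageSummarizer(toy_encode, toy_stage1, toy_stage2)
print(summarize("Decoder decoder reconstructs text and the decoder summarizes text"))
# prints "decoder text"
```

The point of the structure is that stage 2 receives both outputs of stage 1, the reconstructed sequence and the meaning representation, rather than the raw encoder output alone; any real encoder or decoder could be dropped into the three callables.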

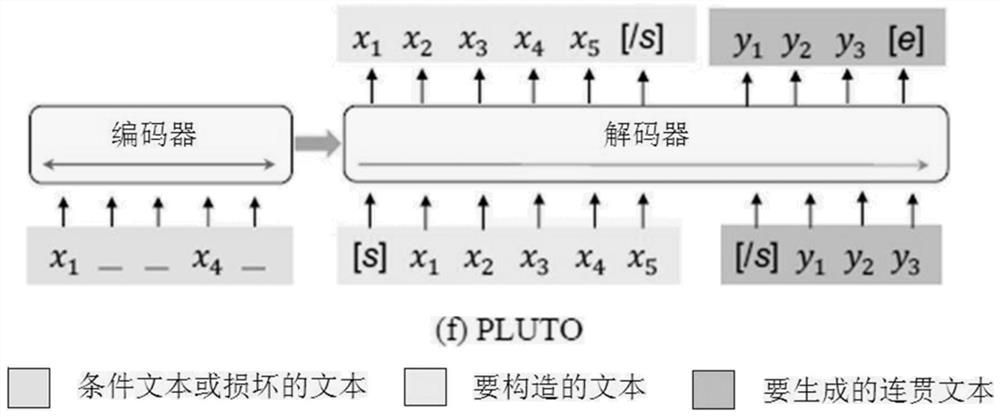

[0061] In this embodiment, in the summarization task shown in Figure 4(b), x represents the question (in a text summarization task, the question refers to the entire input text). After being encoded by the encoder, x is input to the decoder for two-stage decoding, which yields a reconstructed, coherent and intelligible text sequence, as well as t...

