Parameter-sharing-based training and decoding method for non-autoregressive speech recognition, and system thereof

A speech recognition training and decoding technique, applied in speech recognition, speech analysis, instruments, and related fields. It addresses problems such as the difficulty of training non-autoregressive models, and achieves improved decoding accuracy, faster training, and low latency.

Example Embodiment

[0051] DETAILED DESCRIPTION OF THE PREFERRED EMBODIMENTS: It should be understood that the specific embodiments described herein are intended to illustrate and explain the present invention and are not intended to limit it.

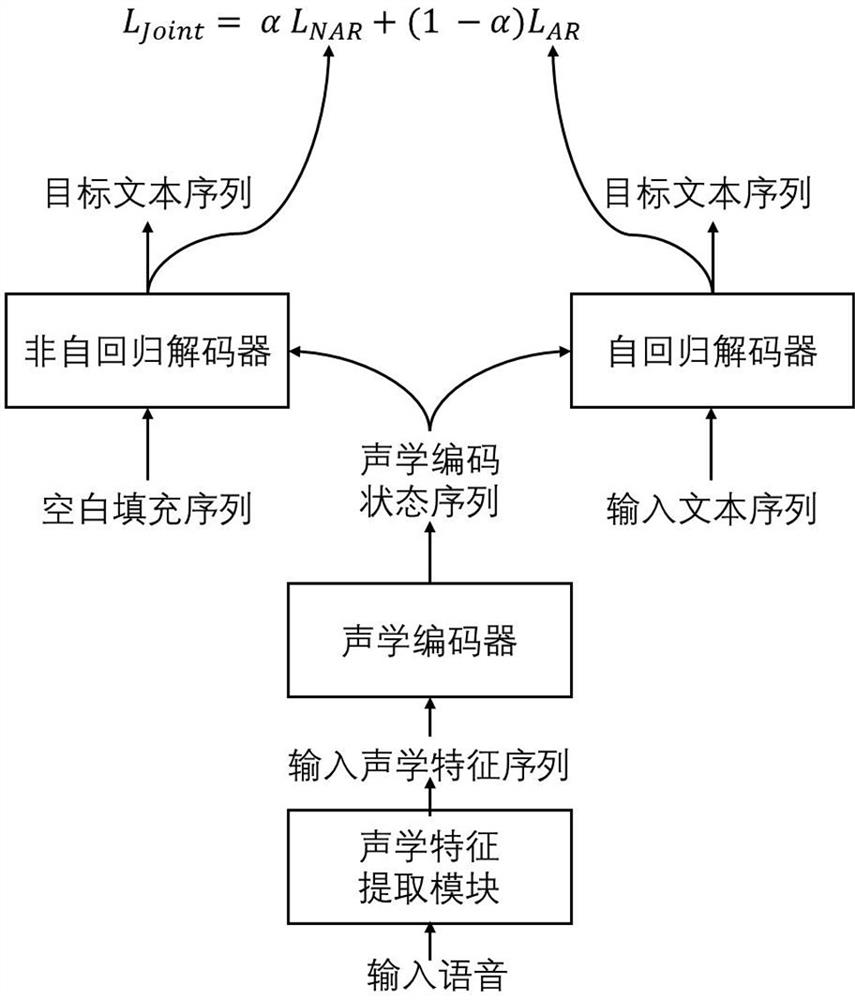

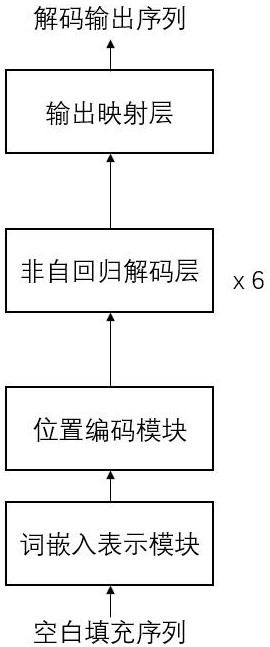

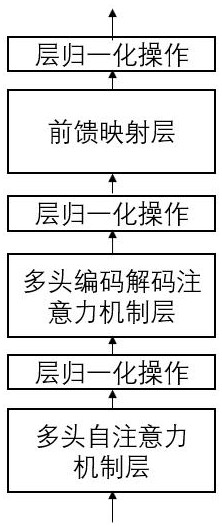

[0052] In the non-autoregressive model and training method based on two-step decoding with shared parameters, the model is built on a self-attention Transformer network and comprises a self-attention-based acoustic encoder and a self-attention-based decoder. As shown in Figure 1, the method includes the following steps:

[0053] Step 1: obtain speech training data and the corresponding text annotation training data, and extract a series of features from the speech training data to form a speech feature sequence;

[0054] The goal of speech recognition is to convert a continuous speech signal into a text sequence. During recognition, the coefficients of specific frequency components are extracted by the discrete Fourier transform by adding the waveform signa...
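The feature extraction mentioned in Step 1 can be illustrated with a minimal sketch, given here only as an assumption about a typical front end and not as the patent's actual procedure. It splits a waveform into overlapping frames, applies a window to each frame, and takes the discrete Fourier transform to obtain per-frame frequency coefficients. The function names `frame_signal` and `spectral_features`, the Hamming window, the 25 ms frame length, the 10 ms hop, and the log-power output are all hypothetical choices that do not come from the source text.

```python
import numpy as np


def frame_signal(waveform, frame_len, hop_len):
    """Split a 1-D waveform into overlapping frames, one frame per row."""
    if len(waveform) < frame_len:
        raise ValueError("waveform is shorter than one frame")
    n_frames = 1 + (len(waveform) - frame_len) // hop_len
    # Row i holds samples [i * hop_len, i * hop_len + frame_len).
    idx = np.arange(frame_len)[None, :] + hop_len * np.arange(n_frames)[:, None]
    return waveform[idx]


def spectral_features(waveform, sample_rate=16000, frame_ms=25.0, hop_ms=10.0):
    """Return a (n_frames, n_bins) sequence of log-power spectra.

    Frame length, hop, and window type are illustrative assumptions.
    """
    waveform = np.asarray(waveform, dtype=np.float64)
    frame_len = int(round(sample_rate * frame_ms / 1000))
    hop_len = int(round(sample_rate * hop_ms / 1000))
    frames = frame_signal(waveform, frame_len, hop_len)
    window = np.hamming(frame_len)  # assumed window; the source is truncated here
    spectrum = np.fft.rfft(frames * window, axis=1)  # DFT of each windowed frame
    power = np.abs(spectrum) ** 2
    return np.log(power + 1e-10)  # small constant avoids log(0)


if __name__ == "__main__":
    # One second of a 1 kHz sine tone sampled at 16 kHz.
    t = np.arange(16000) / 16000.0
    tone = np.sin(2 * np.pi * 1000.0 * t)
    feats = spectral_features(tone)
    print(feats.shape)
```

Each row of the returned array is one element of a speech feature sequence in the sense of Step 1. A real system would often go on to apply mel filter banks or similar processing, which this sketch leaves out.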
