Method for recognizing and retrieving action semantics in video

A technique for recognizing and retrieving action semantics in video, applicable to character and pattern recognition, instruments, computer components, and related fields. It addresses problems such as a large amount of calculation, improving accuracy while reducing the amount of calculation.

Embodiment Construction

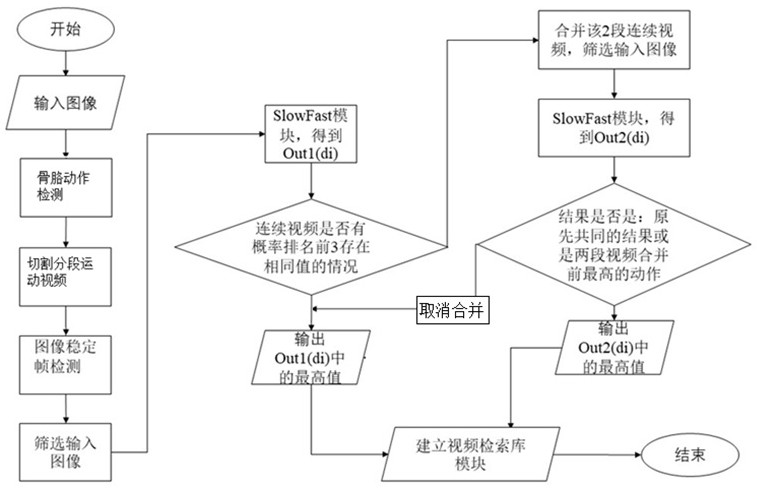

[0048] Building on the original SlowFast algorithm, the present invention makes two changes. First, the input images of the slow channel are selected according to an image stability index, which improves the detection accuracy of the slow module. Second, fast detection of skeletal motion determines which video segments are fed to the fast channel, which reduces the amount of calculation of the fast channel hybrid algorithm.
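The two selection ideas in [0048] can be sketched as follows. This excerpt does not define the image stability index or the skeletal motion detector, so both measures here are placeholder assumptions: `stability_index` (the inverse of the mean absolute pixel difference to a neighbouring frame) and `motion_score` (the mean keypoint displacement between consecutive poses) are hypothetical, as are the function names, `stride`, and `threshold`. The sketch only illustrates the control flow of choosing stable frames for the slow channel and moving segments for the fast channel; it is not the patented computation.

```python
import math


def stability_index(neighbor_frame, frame):
    """Hypothetical stability index: closer to 1.0 when two frames differ less.

    Frames are flat lists of grayscale pixel values (an assumption).
    """
    mean_abs_diff = sum(abs(a - b) for a, b in zip(neighbor_frame, frame)) / len(frame)
    return 1.0 / (1.0 + mean_abs_diff)


def pick_slow_frames(frames, stride):
    """From each window of `stride` frames, keep the most stable frame.

    Returns 1-based frame numbers, matching f_i in the text.
    """
    count = len(frames)
    chosen = []
    for start in range(0, count, stride):
        window = range(start, min(start + stride, count))

        def score(i):
            # Compare with the previous frame; the first frame uses the next one.
            neighbor = i - 1 if i > 0 else min(1, count - 1)
            return stability_index(frames[neighbor], frames[i])

        chosen.append(max(window, key=score) + 1)
    return chosen


def motion_score(prev_pose, pose):
    """Hypothetical skeletal motion score: mean keypoint displacement.

    Poses are lists of (x, y, z) keypoint tuples (an assumption).
    """
    return sum(math.dist(p, q) for p, q in zip(prev_pose, pose)) / len(pose)


def pick_fast_segments(poses, threshold):
    """Return 1-based inclusive (start, end) frame ranges with motion above threshold."""
    segments = []
    start = None
    for i in range(1, len(poses)):
        moving = motion_score(poses[i - 1], poses[i]) > threshold
        if moving and start is None:
            start = i                      # segment opens at frame i (0-based)
        elif not moving and start is not None:
            segments.append((start + 1, i))  # closes at frame i-1 (0-based)
            start = None
    if start is not None:
        segments.append((start + 1, len(poses)))
    return segments
```

Under these assumptions, only the frames returned by `pick_slow_frames` would be passed to the slow channel, and only the ranges returned by `pick_fast_segments` would be passed to the fast channel, so static stretches of the video are skipped.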

[0049] In the method for semantic recognition and retrieval of actions in a video according to the present invention, the video is denoted $V = \{Im(f_i)\}$, where $Im$ is an image and $f_i$ is the frame number of the image, ranging from 1 to $F_{imax}$, the maximum number of frames of video $V$. That is, $Im(f_i)$ denotes the $f_i$-th image in $V$. As shown in Figure 1, the method includes the following steps:

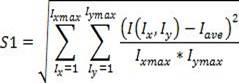

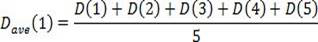

[0050] Step 1: use the OpenPose toolbox to extract the key points of the human skeleton from the video images, obtaining the three-dimensional coo...
