Image marking method and system

An image labeling method and system, applied in the field of deep learning, that addresses poor labeling quality and low labeling efficiency, and improves both labeling speed and labeling accuracy.

Examples

Embodiment 1

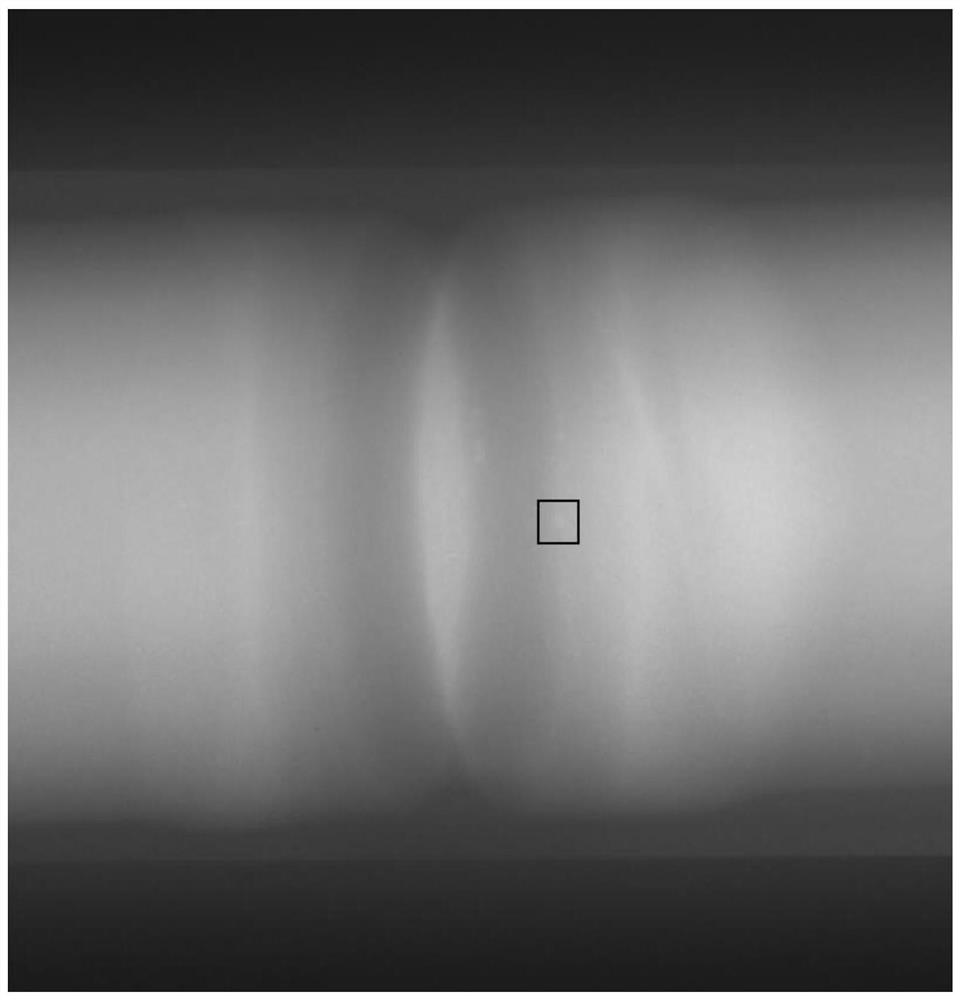

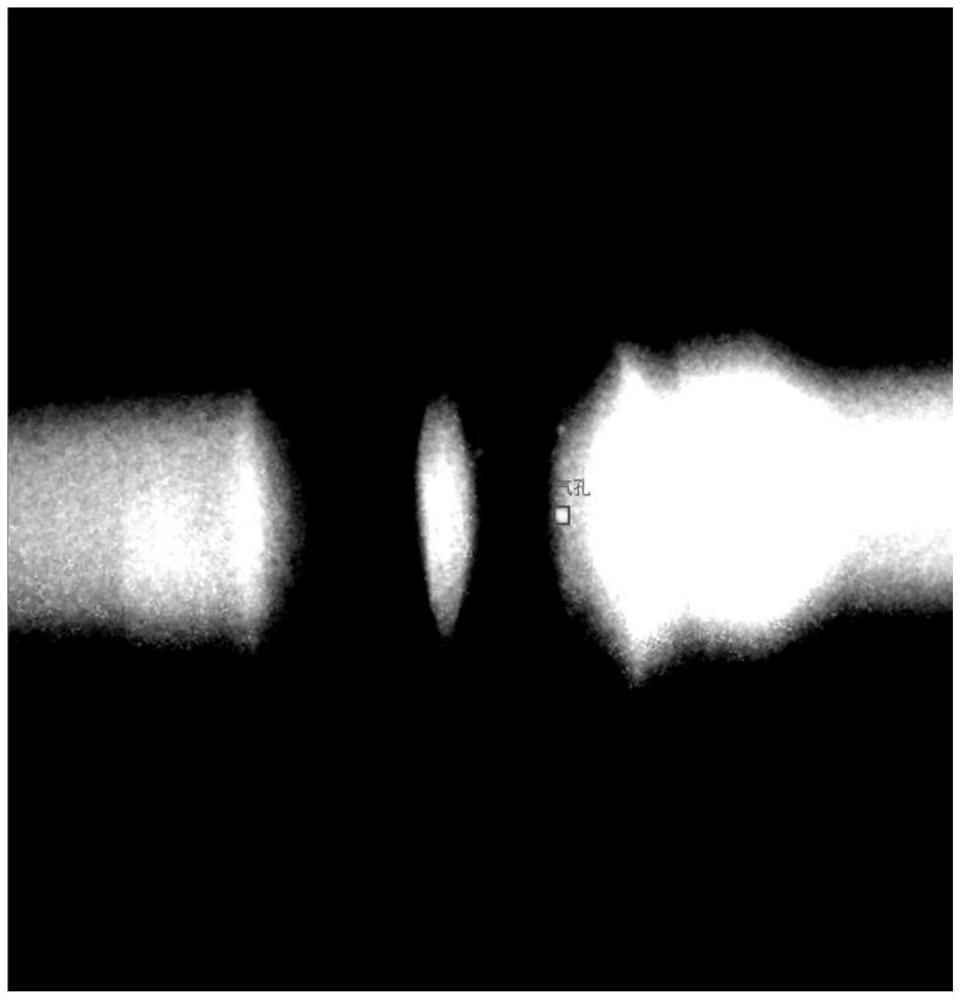

[0035] Figure 1 shows a radiographic image (black and white) of a weld seam, in which the object to be marked is an air hole (outlined by a black frame). The contrast between the air hole and the background is low, so the air hole cannot be marked effectively. This solution provides an image annotation method that highlights the object to be annotated through local contrast adjustment. The annotated image is shown in Figure 2: the air hole is clearly highlighted, which improves labeling accuracy.

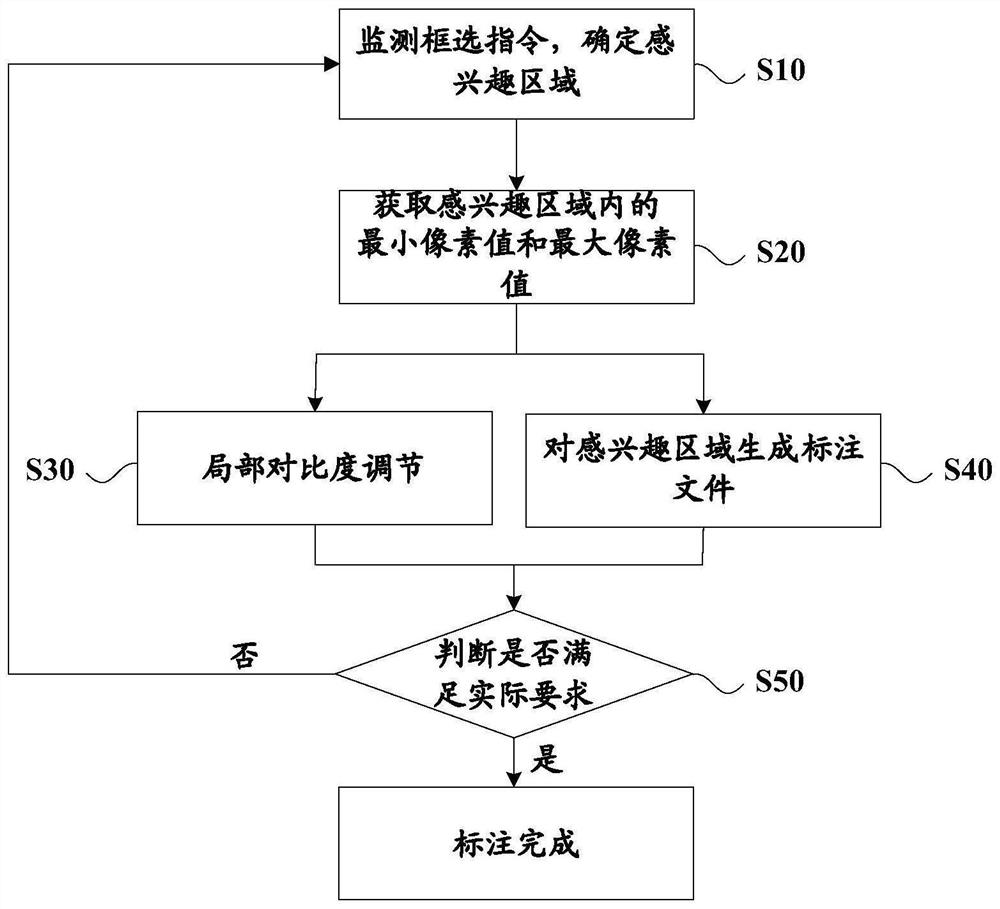

[0036] Referring to Figure 3, this solution mainly includes the following steps:

[0037] S10. Monitor the frame selection instruction to determine the region of interest;

[0038] S20. Obtain the minimum pixel value and the maximum pixel value in the region of interest;

[0039] S30. Perform local contrast adjustment;

[0040] S40. Generate an annotation file for the region of interest;

[0041] S50. Judge whether the actual requirements...
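Steps S20 and S30 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function names `roi_min_max` and `stretch_contrast`, the end-exclusive `(x0, y0, x1, y1)` rectangle, and the 8-bit default depth are hypothetical. The linear mapping for pixels between the minimum and the maximum is also an assumption, since the text available here does not give the intermediate formula. The adjustment is applied to the whole image.

```python
def roi_min_max(image, roi):
    """S20: return (min, max) pixel values inside roi = (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = roi  # end-exclusive corners (assumed convention)
    values = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    return min(values), max(values)


def stretch_contrast(image, lo, hi, bit_depth=8):
    """S30: values <= lo become 0, values >= hi become 2**bit_depth - 1."""
    top = (1 << bit_depth) - 1
    if hi <= lo:
        # Flat ROI: nothing to stretch, return an unchanged copy (assumed behaviour).
        return [row[:] for row in image]
    out = []
    for row in image:
        new_row = []
        for v in row:
            if v <= lo:
                new_row.append(0)
            elif v >= hi:
                new_row.append(top)
            else:
                # Assumed linear interpolation between lo and hi.
                new_row.append((v - lo) * top // (hi - lo))
        out.append(new_row)
    return out


# Toy 3x4 grayscale image; the ROI covers the two middle columns.
image = [
    [90, 100, 110, 130],
    [95, 104, 108, 125],
    [92, 102, 106, 128],
]
lo, hi = roi_min_max(image, (1, 0, 3, 3))
adjusted = stretch_contrast(image, lo, hi)
```

In this toy case the region of interest spans only the values 100 to 110, so after the adjustment that narrow band is spread over the full 0 to 255 range, while everything darker or brighter saturates.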

Embodiment 2

[0051] The above embodiment processes black-and-white images. Building on it, this embodiment provides an image labeling method for color images. Figure 4 shows a multi-channel color traffic image in which the object to be marked is a car (outlined by a black box). The contrast between the car and the background is low, and the color of the car cannot be seen clearly. This solution highlights the object to be marked through local contrast adjustment, so that the color of the car becomes clearly visible and the marking accuracy is improved. The marked image is shown in Figure 5.

[0052] Referring to Figures 4 and 5, determine the minimum pixel values Rmin=149, Gmin=148, Bmin=146 and the maximum pixel values Rmax=175, Gmax=173, Bmax=185 of each channel inside the rectangular box. Then compare the R-channel value of every pixel in the whole picture with 149 and 175. If it is less than or equal to 149, it is set to ...
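The per-channel comparison can be illustrated with the limits quoted above. The sketch below is an assumption-laden example rather than the patent's procedure: it assumes 8 bits per channel, assumes that values at or below the channel minimum map to 0 and values at or above the channel maximum map to 255, and assumes a linear mapping in between. The names `LIMITS`, `stretch_value`, and `stretch_pixel` are hypothetical.

```python
# Per-channel limits of the rectangular box, as quoted in the text.
LIMITS = {"R": (149, 175), "G": (148, 173), "B": (146, 185)}
TOP = 2 ** 8 - 1  # assumes 8 bits per channel


def stretch_value(value, lo, hi, top=TOP):
    """Stretch one channel value against that channel's (lo, hi) limits."""
    if value <= lo:
        return 0
    if value >= hi:
        return top
    return (value - lo) * top // (hi - lo)  # assumed linear mapping


def stretch_pixel(rgb):
    """Apply the stretch to each channel of an (R, G, B) pixel independently."""
    r, g, b = rgb
    return (
        stretch_value(r, *LIMITS["R"]),
        stretch_value(g, *LIMITS["G"]),
        stretch_value(b, *LIMITS["B"]),
    )


print(stretch_pixel((162, 160, 170)))  # prints (127, 122, 156)
```

Each channel uses its own limits, so a pixel such as `(162, 160, 170)`, which looks nearly gray before the adjustment, ends up with clearly separated channel values afterwards.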

Embodiment 3

[0055] Building on the first and second embodiments above, this embodiment provides an image labeling system that implements the steps of the image labeling method described in the first or second embodiment. Referring to Figure 6, the system comprises:

[0056] A monitoring module 61, configured to monitor the frame selection instruction of the image to be marked, so as to determine the region of interest;

[0057] An acquisition module 62, configured to acquire the minimum pixel value and the maximum pixel value in the region of interest;

[0058] A contrast adjustment module 63, configured to compare the pixel value of each pixel in the set area with the minimum pixel value min and the maximum pixel value max: for a pixel less than or equal to the minimum pixel value min, set its pixel value to 0; for a pixel greater than or equal to the maximum pixel value max, set its pixel value to 2^n - 1, where n is the bit depth of a single channel; for pixels between the minimum pixel val...
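One way the three modules listed above might fit together is sketched below. The class names, method names, and the end-exclusive rectangle convention are hypothetical, a real monitoring module would listen for user interface events rather than receive coordinates directly, and the linear mapping for intermediate pixels is an assumption because the description is cut off at that point.

```python
from dataclasses import dataclass


@dataclass
class MonitoringModule:
    """Stands in for module 61: records the rectangle of a frame selection."""
    roi: tuple = None

    def on_frame_selected(self, x0, y0, x1, y1):
        self.roi = (x0, y0, x1, y1)  # end-exclusive corners (assumed)
        return self.roi


class AcquisitionModule:
    """Stands in for module 62: min and max pixel values inside the ROI."""

    def acquire(self, image, roi):
        x0, y0, x1, y1 = roi
        values = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
        return min(values), max(values)


@dataclass
class ContrastAdjustmentModule:
    """Stands in for module 63; n is the bit depth of a single channel."""
    n: int = 8

    def adjust(self, image, lo, hi):
        top = 2 ** self.n - 1
        span = max(hi - lo, 1)  # guard against a flat ROI (assumption)
        return [
            [0 if v <= lo else top if v >= hi else (v - lo) * top // span
             for v in row]
            for row in image
        ]


# Usage with a toy 16-bit single-channel image.
image = [[1000, 1200, 1400], [1100, 1300, 1500]]
monitor = MonitoringModule()
roi = monitor.on_frame_selected(0, 0, 2, 2)
lo, hi = AcquisitionModule().acquire(image, roi)
result = ContrastAdjustmentModule(n=16).adjust(image, lo, hi)
```

Keeping the bit depth `n` as a parameter of the adjustment module lets the same code serve 8-bit photographs and higher-depth radiographic images, with the upper limit always computed as 2^n - 1.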
