Fully Connected Layer Compression Method and Device, Electronic Equipment, Accelerator, and Storage Medium

A fully connected layer compression technology, applied in the field of neural networks, that improves real-time recognition and reduces storage overhead.

Embodiment Construction

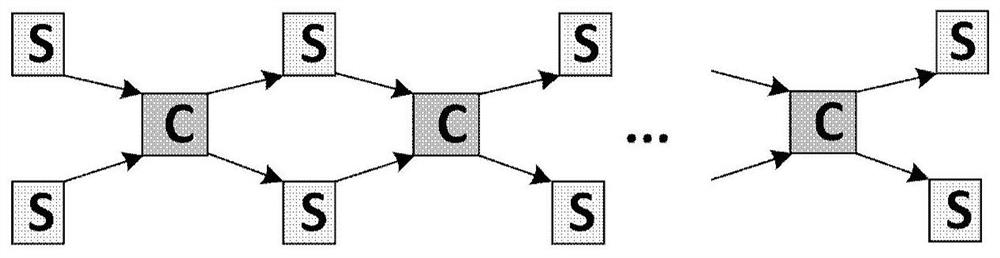

[0042] Because Field Programmable Gate Arrays (FPGAs) offer flexible programmability and an outstanding performance-to-power ratio, most current mainstream CNN forward-inference accelerators adopt FPGA-based acceleration schemes. The pipelined architecture is a common overall accelerator architecture: it customizes the computing structure of each layer to match that layer's computing needs exactly, which helps make full use of the chip's computing potential. To ensure good pipeline performance, and in view of the data supply-and-demand relationship and data flow pattern of each CNN layer, the data between layers is usually stored using the ping-pong storage scheme shown in Figure 1, where part C denotes the computing part and part S denotes the ping-pong storage part. According to the Roofline theory, if the chip bandwidth cannot meet the data needs of the computation, the FPGA'...
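The two ideas in this paragraph, ping-pong storage between pipeline stages and the Roofline bandwidth limit, can be illustrated with a small software model. The sketch below is an illustrative assumption, not the patent's implementation: `PingPongBuffer`, `run_two_stage_pipeline`, `roofline_attainable`, and `fc_layer_intensity` are hypothetical names, the pipeline is simulated sequentially (in hardware both stages would run concurrently), and the fully connected layer model counts only weight bytes as off-chip traffic.

```python
from typing import Any, Callable, List, Optional


class PingPongBuffer:
    """Hypothetical two-bank (ping-pong) buffer S between two compute parts C.

    The producer writes one bank while the consumer reads the other;
    the roles swap after every step.
    """

    def __init__(self) -> None:
        self.banks: List[Optional[Any]] = [None, None]
        self.write_idx = 0  # bank currently owned by the producer

    def write(self, tile: Any) -> None:
        self.banks[self.write_idx] = tile

    def read(self) -> Optional[Any]:
        # The consumer always reads the bank the producer is NOT writing.
        return self.banks[1 - self.write_idx]

    def swap(self) -> None:
        self.write_idx = 1 - self.write_idx


def run_two_stage_pipeline(tiles: List[Any],
                           produce: Callable[[Any], Any],
                           consume: Callable[[Any], Any]) -> List[Any]:
    """Sequential model of stage C1 -> ping-pong buffer S -> stage C2.

    In step t, C1 fills the write bank with tile t while C2 reads tile t-1
    from the other bank, so the two stages overlap in a real pipeline.
    """
    buf = PingPongBuffer()
    outputs = []
    for t in range(len(tiles) + 1):  # one extra step drains the last tile
        if t < len(tiles):
            buf.write(produce(tiles[t]))
        if t > 0:
            outputs.append(consume(buf.read()))
        buf.swap()
    return outputs


def roofline_attainable(peak_ops: float, bandwidth_bytes: float,
                        intensity_ops_per_byte: float) -> float:
    """Roofline model: attainable = min(compute roof, bandwidth * intensity)."""
    return min(peak_ops, bandwidth_bytes * intensity_ops_per_byte)


def fc_layer_intensity(n_in: int, n_out: int, bytes_per_weight: int,
                       batch: int = 1) -> float:
    """Operational intensity (ops per byte) of an n_in x n_out fully connected layer.

    Assumes one multiply and one add per weight per input vector, and that
    only the weights are fetched from off-chip memory.
    """
    ops = 2 * n_in * n_out * batch
    weight_bytes = n_in * n_out * bytes_per_weight
    return ops / weight_bytes


if __name__ == "__main__":
    print(run_two_stage_pipeline([1, 2, 3], lambda x: x * 10, lambda y: y + 1))
    # Hypothetical chip: 1e12 ops/s peak, 1e10 bytes/s off-chip bandwidth.
    i = fc_layer_intensity(4096, 4096, bytes_per_weight=2)
    print(i, roofline_attainable(1e12, 1e10, i))
```

With these made-up figures, a 4096 x 4096 layer with 16-bit weights at batch size 1 has an intensity of 1.0 op/byte, so the attainable throughput is 1e10 ops/s, only 1% of the compute roof. The layer is bandwidth-bound. Halving the bytes per weight doubles the intensity, which is why weight storage size matters for fully connected layers under the Roofline model.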
