Occlusion handling for computer vision

A computer vision and object technology, applied in image analysis, image enhancement, instruments, etc., that addresses the problem of system failure and achieves the effect of avoiding system failure.

Embodiment Construction

[0026] The word “exemplary” or “example” is used herein to mean “serving as an example, instance, or illustration.” Any aspect or embodiment described herein as “exemplary” or as an “example” is not necessarily to be construed as preferred or advantageous over other aspects or embodiments.

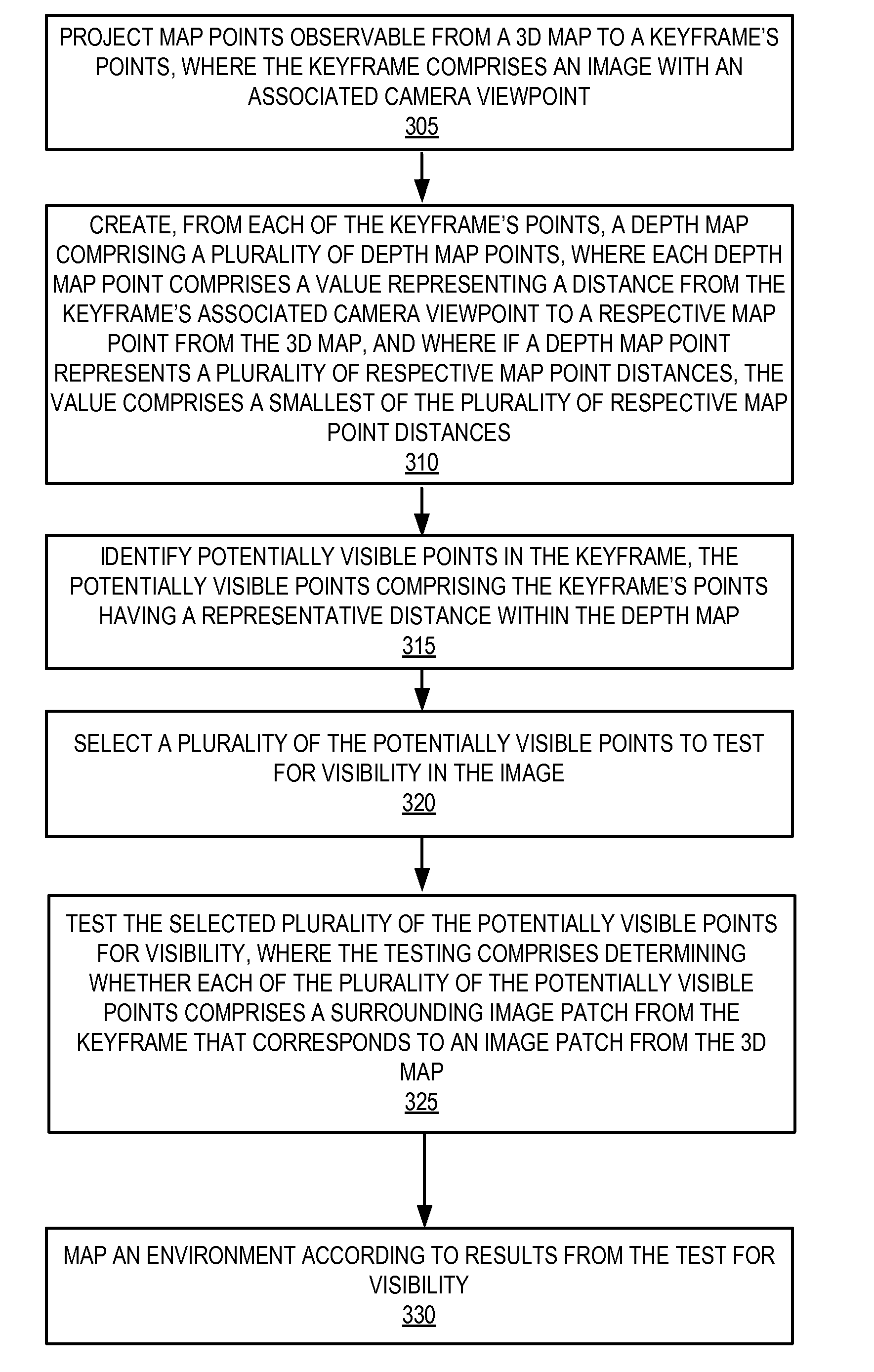

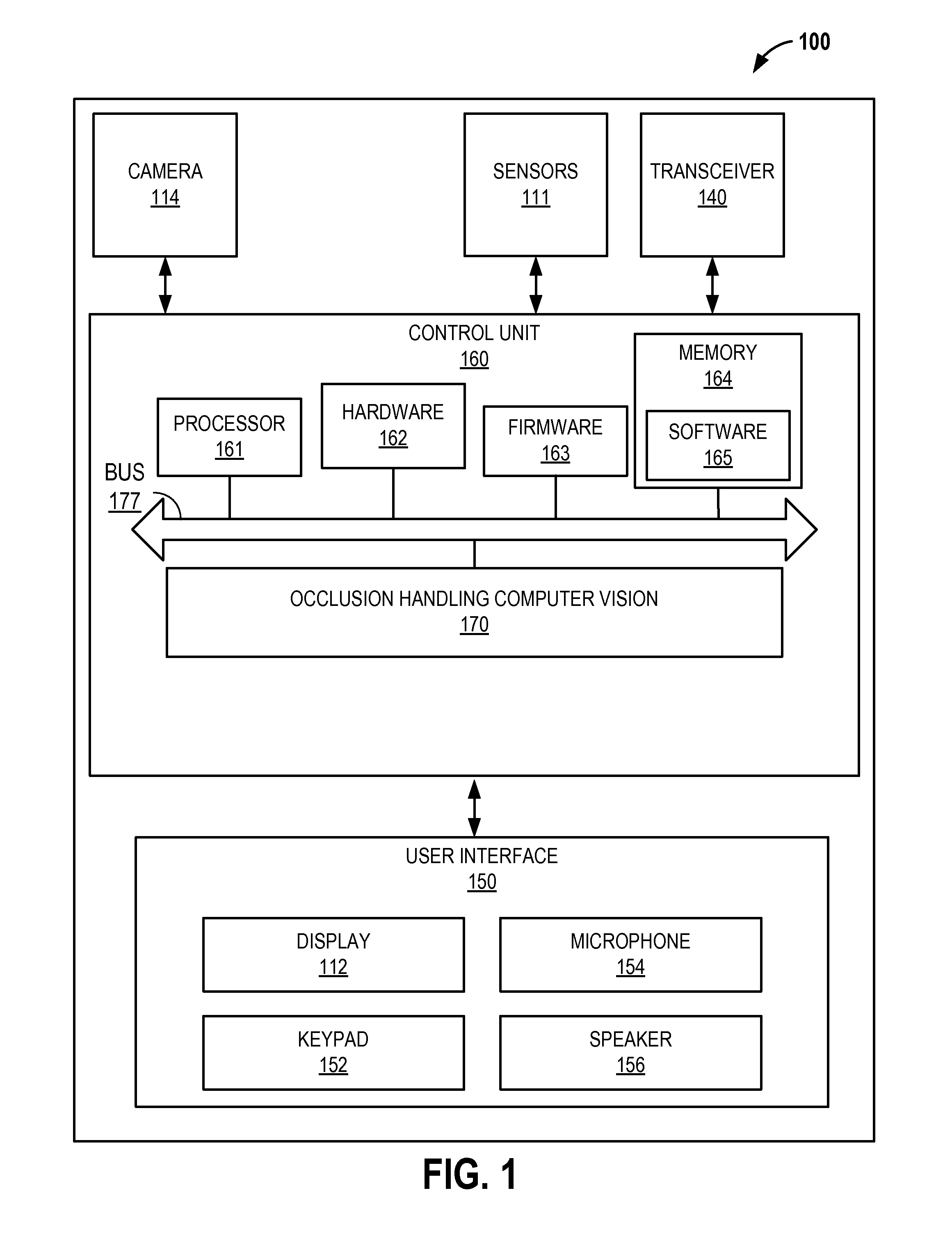

[0027] In one embodiment, Occlusion Handling for Computer Vision (described herein as “OHCV”) filters occluded map points with a depth map and determines the visibility of a subset of points. 3D points in a 3D map have a known depth value (e.g., as a result of Simultaneous Localization and Mapping (SLAM) or another mapping method). In one embodiment, OHCV compares the depth of 3D points to the depths of equivalent points (e.g., points occupying the same position relative to the camera viewpoint) in a depth mask/map. In response to comparing two or more equivalent points, points with a greater depth (e.g., points farther away from the camera position) are classified as occluded. Points without a corresponding depth ...
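The depth comparison described in this paragraph can be illustrated with a minimal sketch. It is not the patented implementation: the pinhole projection, the intrinsics `focal`, `cx`, `cy`, the `tolerance` margin, and the function names `project` and `classify_points` are all assumptions introduced for illustration. Because the source text is cut off before it says how points without a corresponding depth are treated, the sketch leaves such points unclassified (`None`) rather than guessing.

```python
from typing import Dict, List, Optional, Tuple

Pixel = Tuple[int, int]
Point3D = Tuple[float, float, float]  # (x, y, z) in camera coordinates; z is depth


def project(point: Point3D, focal: float, cx: float, cy: float) -> Optional[Pixel]:
    """Pinhole projection to an integer pixel (assumed camera model).

    Returns None when the point is not in front of the camera.
    """
    x, y, z = point
    if z <= 0.0:
        return None
    return (int(round(focal * x / z + cx)), int(round(focal * y / z + cy)))


def classify_points(
    points: List[Point3D],
    depth_map: Dict[Pixel, float],
    focal: float,
    cx: float,
    cy: float,
    tolerance: float = 0.05,  # hypothetical margin against depth noise
) -> List[Optional[bool]]:
    """Label each point: True = occluded, False = visible, None = undecided.

    A point is treated as occluded when its depth exceeds the depth-map
    value at the pixel it projects to by more than `tolerance`.
    """
    labels: List[Optional[bool]] = []
    for point in points:
        pixel = project(point, focal, cx, cy)
        if pixel is None or pixel not in depth_map:
            # No corresponding depth entry: left undecided in this sketch.
            labels.append(None)
            continue
        labels.append(point[2] > depth_map[pixel] + tolerance)
    return labels


# Two points share the same pixel; the farther one is classified as occluded.
depth_map = {(320, 240): 1.0}
points = [(0.0, 0.0, 1.0), (0.0, 0.0, 3.0), (1.0, 0.0, 2.0)]
labels = classify_points(points, depth_map, focal=500.0, cx=320.0, cy=240.0)
print(labels)  # [False, True, None]
```

Here the depth map is a sparse dictionary keyed by pixel so that missing entries are explicit; a dense array with a sentinel value for "no depth" would serve the same purpose.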
