63 results about "Simultaneous localisation and mapping" patented technology

Robust sensor fusion for mapping and localization in a simultaneous localization and mapping (SLAM) system

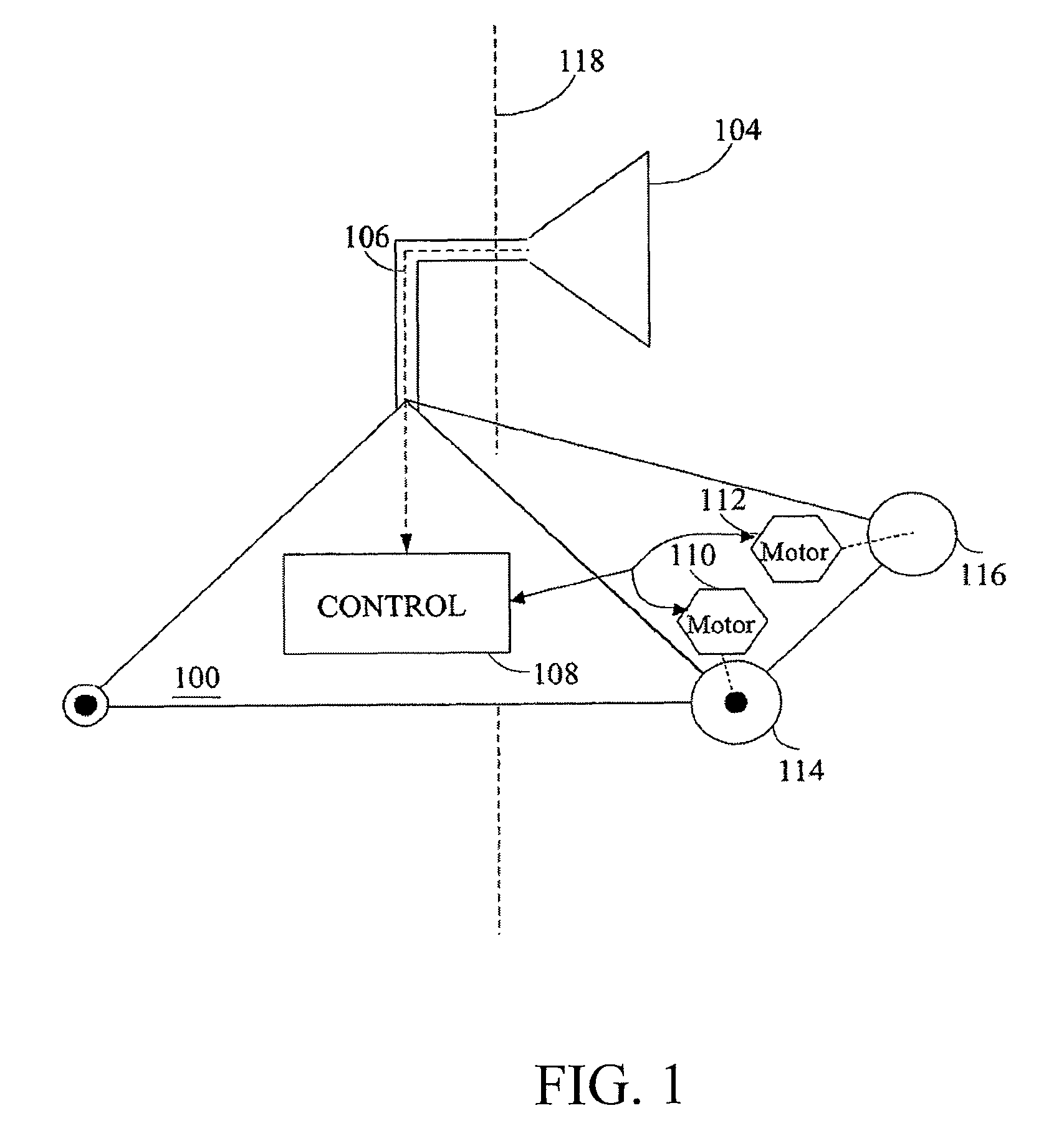

This invention is generally related to methods and apparatus that permit the measurements from a plurality of sensors to be combined or fused in a robust manner. For example, the sensors can correspond to sensors used by a mobile, device, such as a robot, for localization and / or mapping. The measurements can be fused for estimation of a measurement, such as an estimation of a pose of a robot.

Owner:IROBOT CORP
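
As a rough illustration of fusing measurements robustly, the sketch below combines several scalar readings of one quantity (for example one coordinate of a robot pose) by inverse-variance weighting, after discarding readings that lie far from the median. The abstract does not say which fusion or outlier-rejection rule the invention uses, so the function name `fuse_robust`, the median gate, and the `gate` parameter are all assumptions made for this example.

```python
from statistics import median


def fuse_robust(measurements, gate=3.0):
    """Fuse (value, variance) pairs into one estimate.

    Hypothetical helper: readings further than `gate` standard deviations
    from the median are dropped, then the rest are combined with
    inverse-variance weights.
    """
    center = median(value for value, _ in measurements)
    kept = [(value, var) for value, var in measurements
            if abs(value - center) <= gate * var ** 0.5]
    if not kept:
        raise ValueError("all measurements were rejected by the gate")
    weights = [1.0 / var for _, var in kept]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, kept)) / total
    # The fused variance is the inverse of the summed weights.
    return fused, 1.0 / total


if __name__ == "__main__":
    # Two consistent readings and one outlier, all with variance 0.04.
    print(fuse_robust([(1.0, 0.04), (1.2, 0.04), (5.0, 0.04)]))
```

With equal variances the result is simply the mean of the readings that survive the gate, and the reported variance shrinks as more readings are kept.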

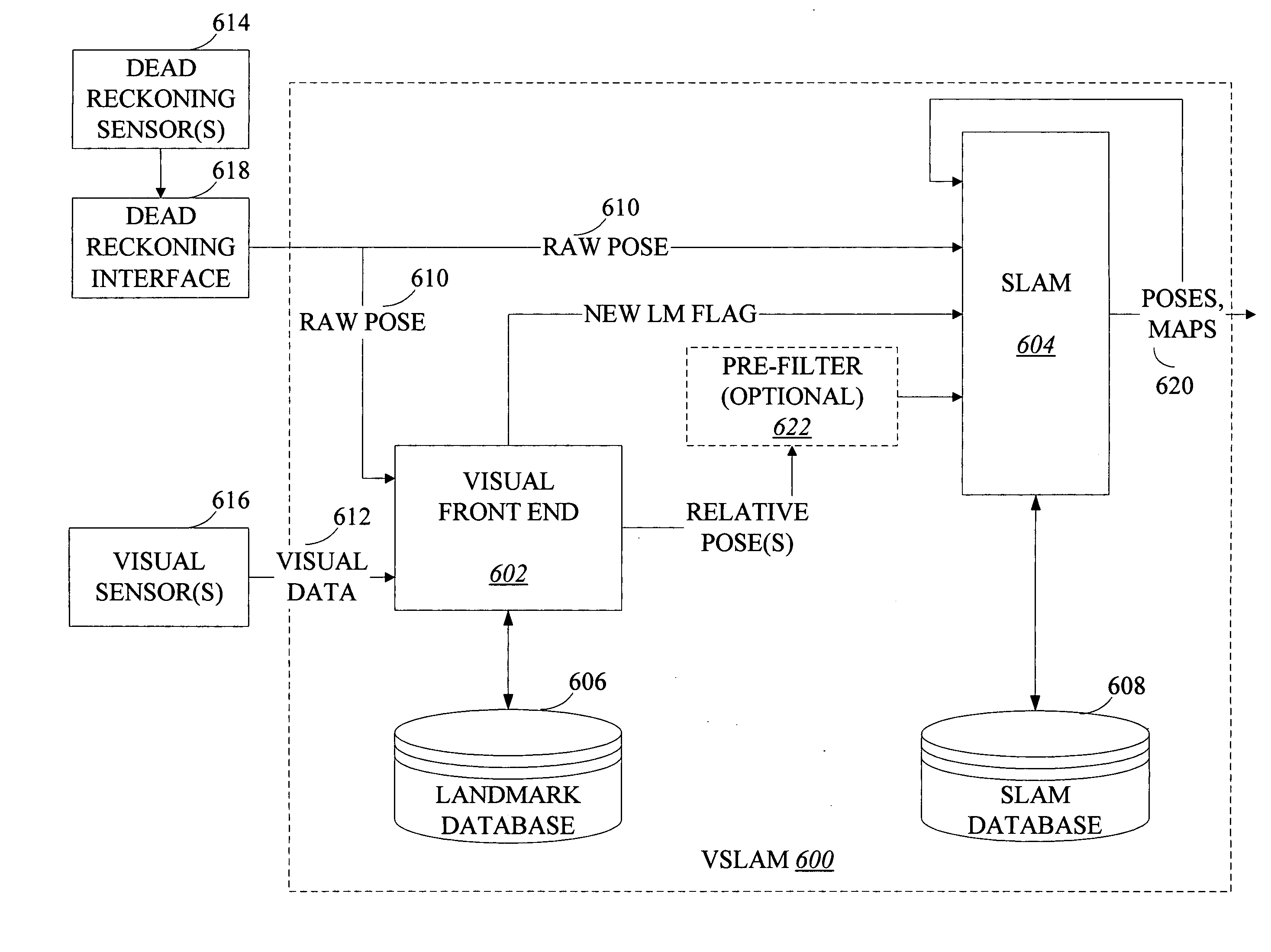

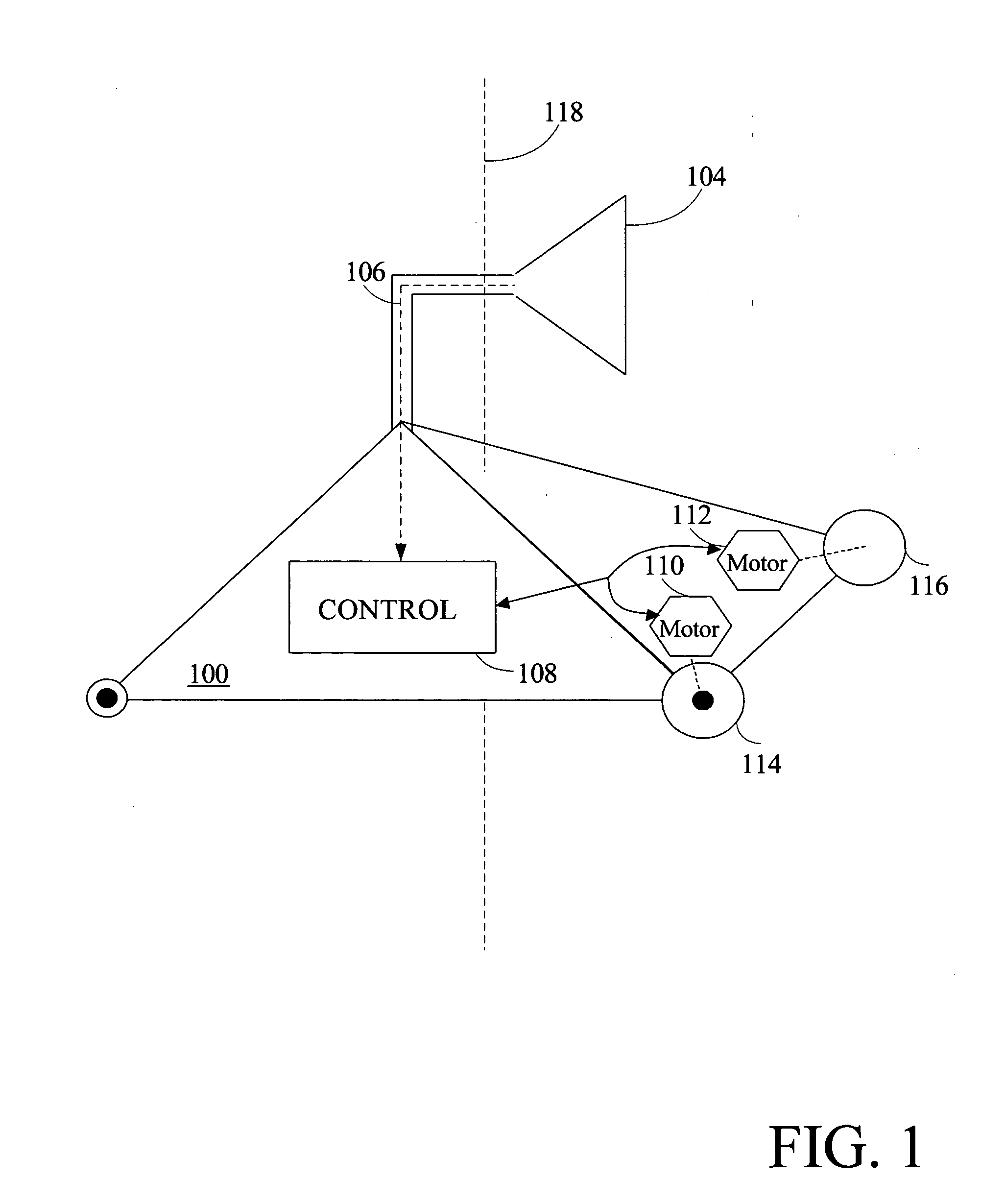

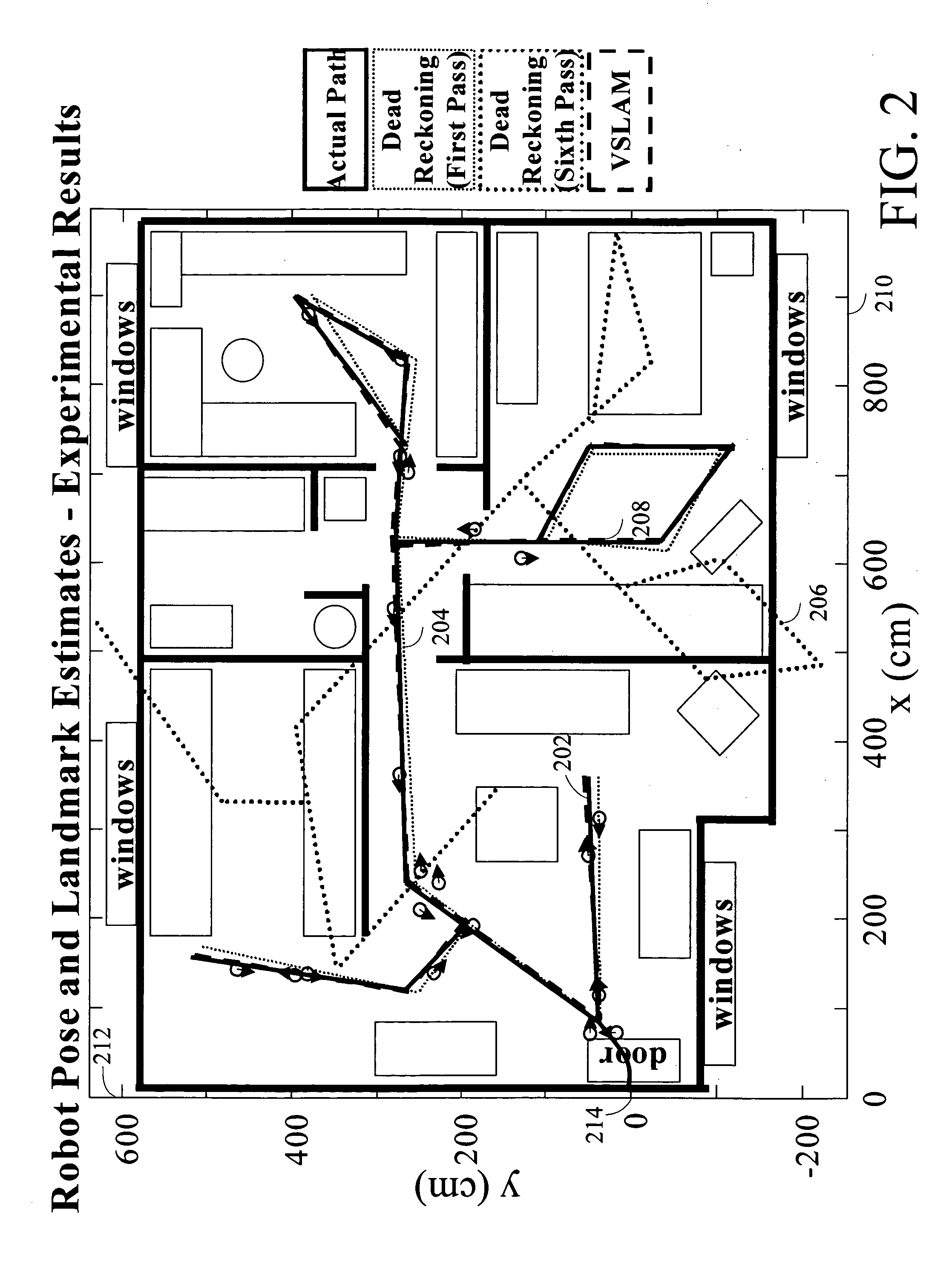

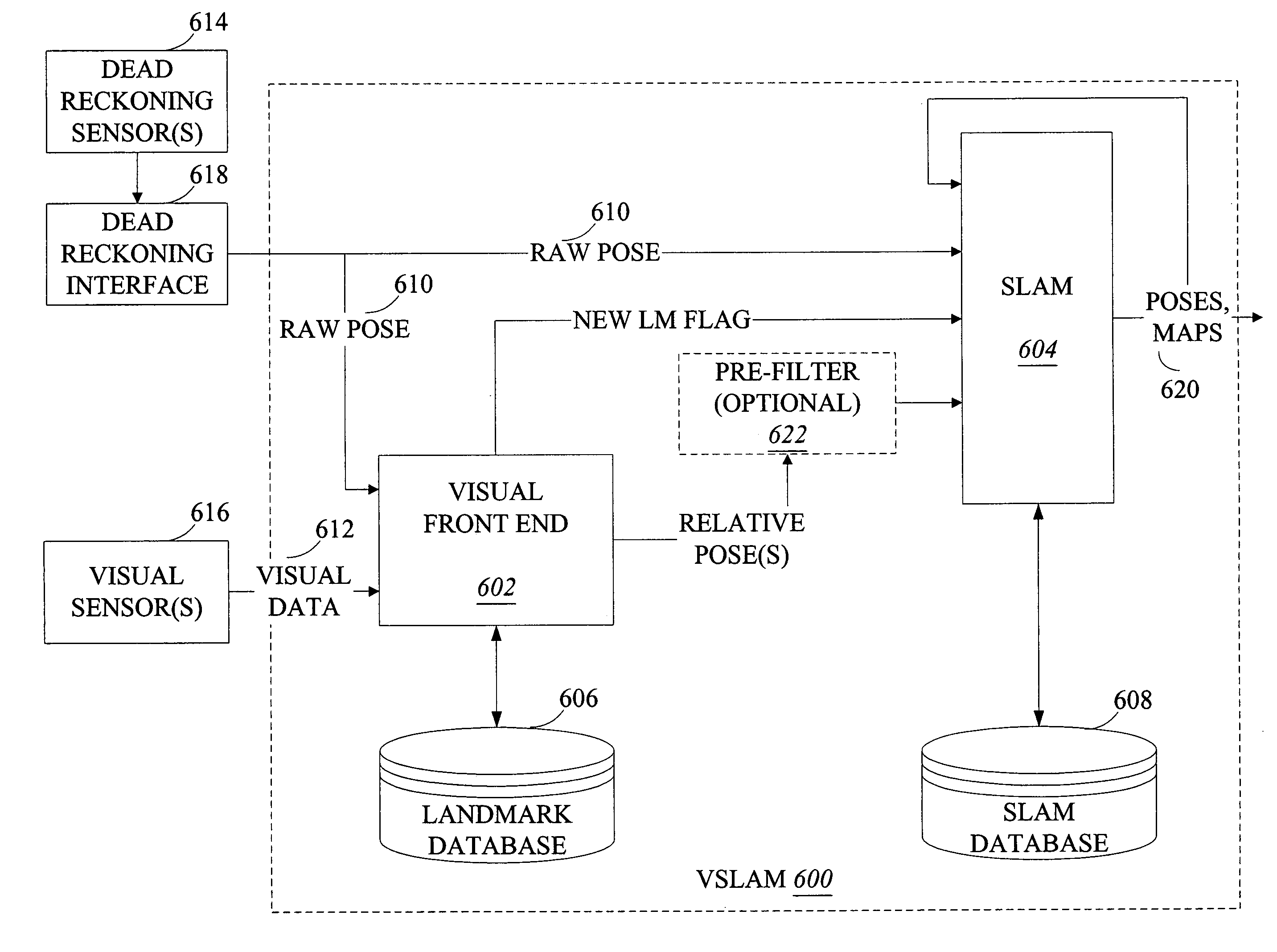

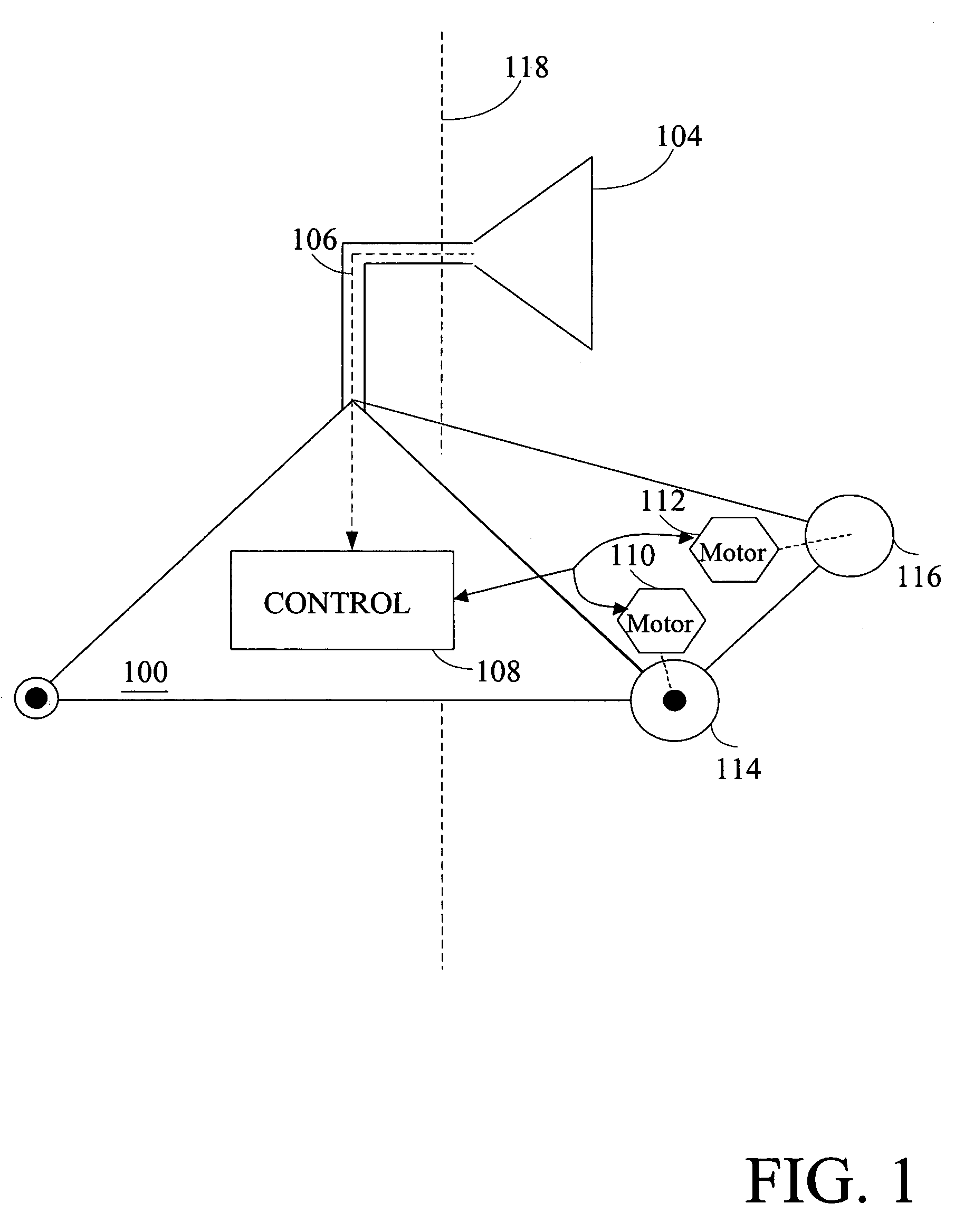

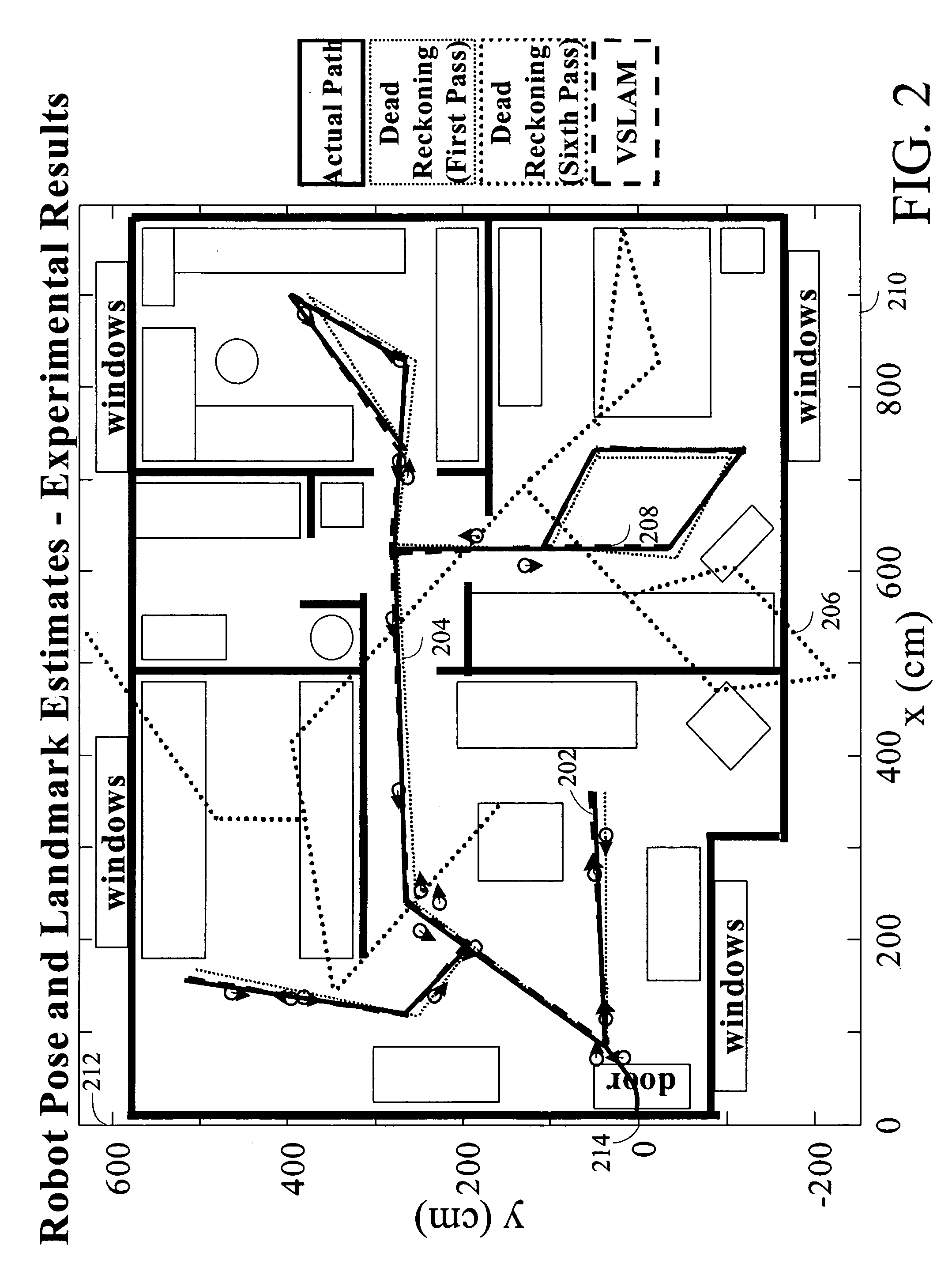

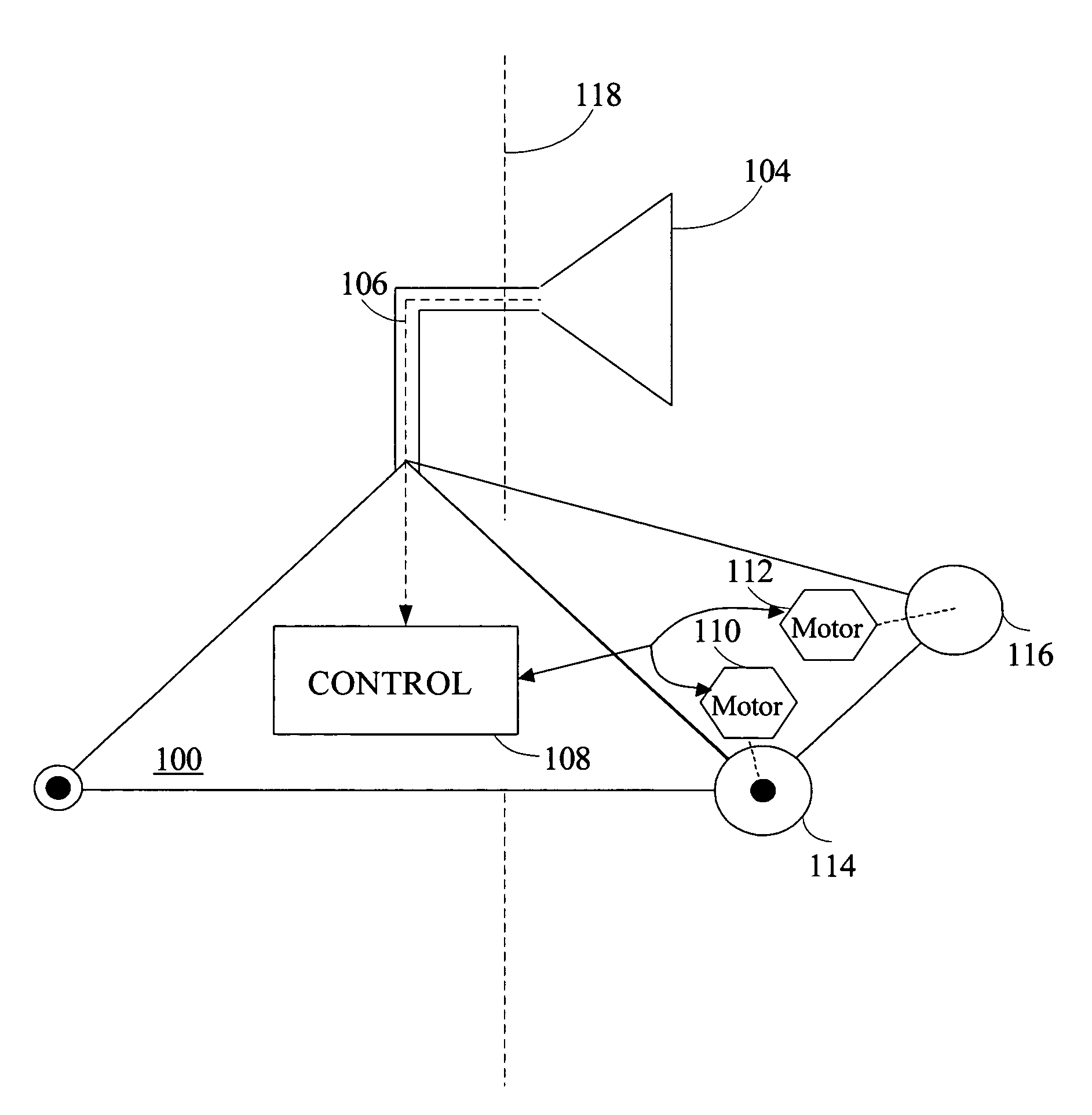

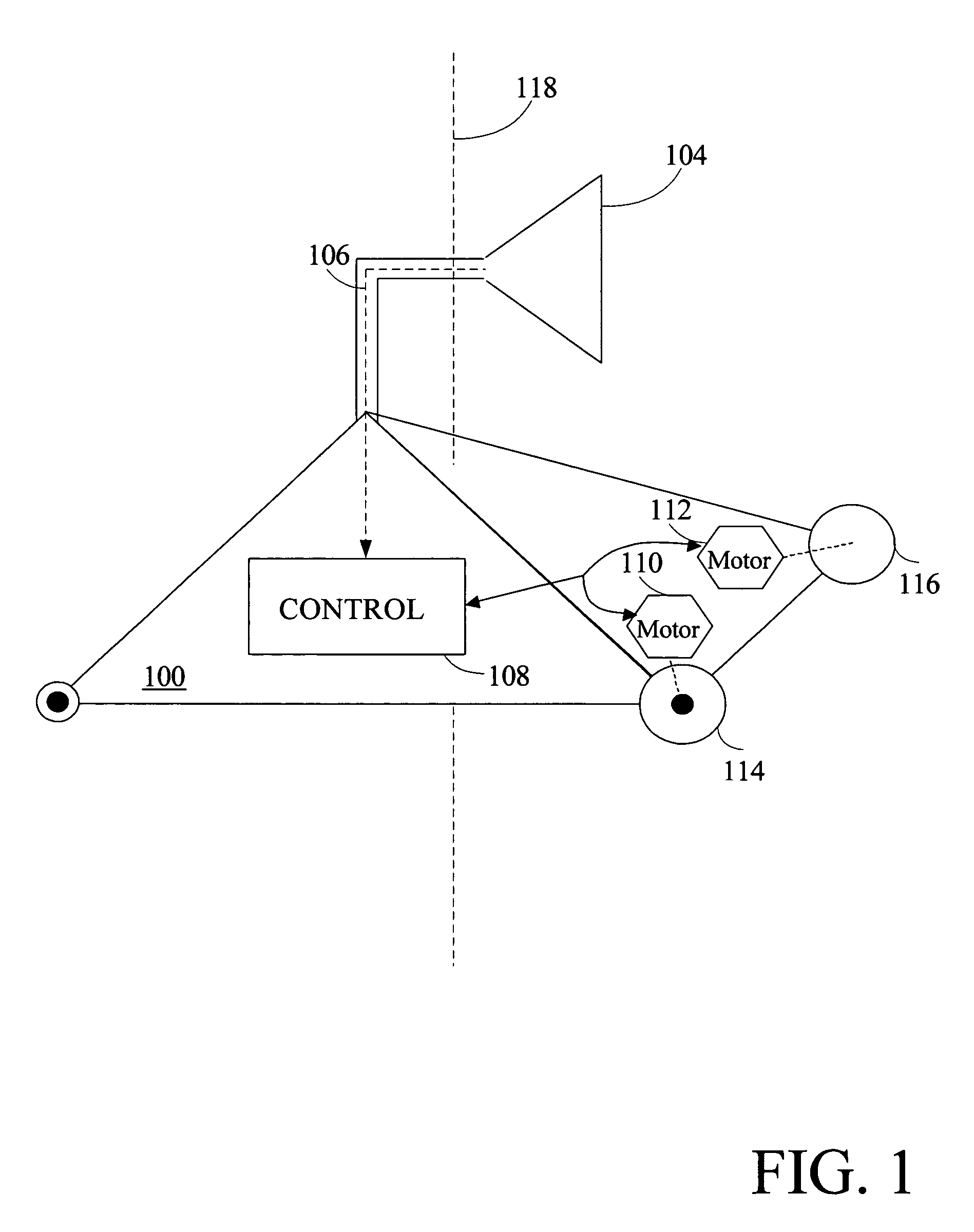

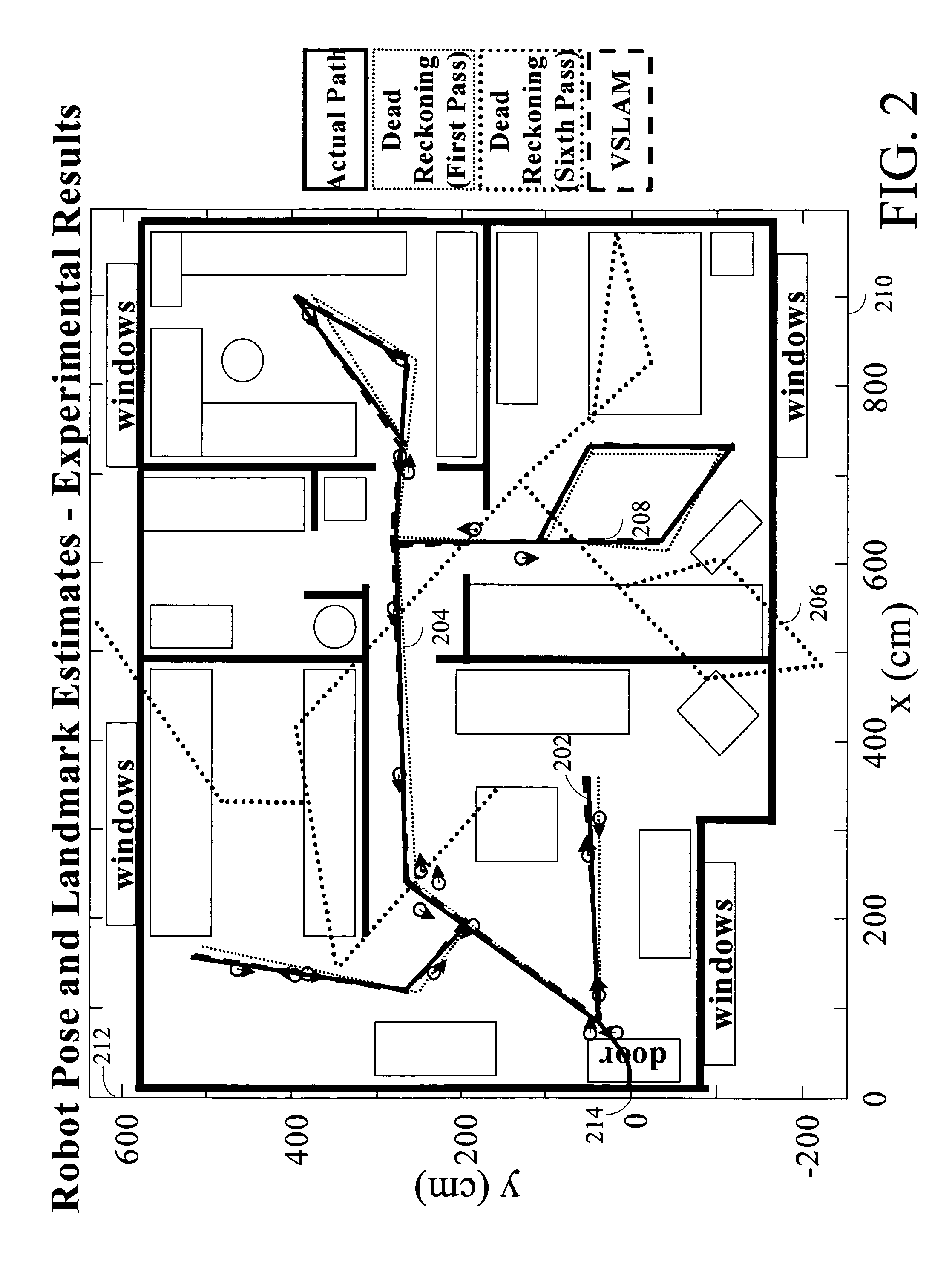

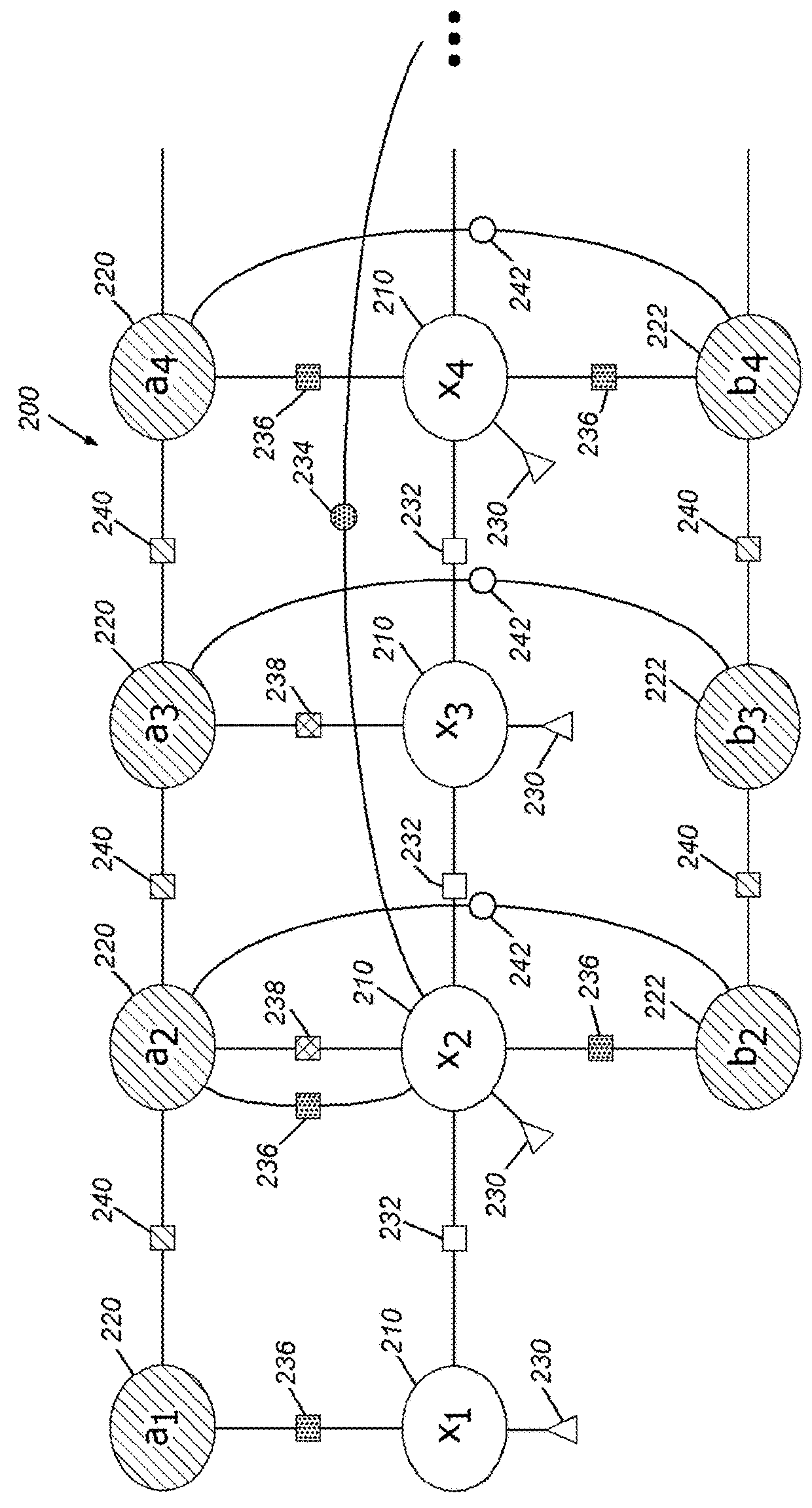

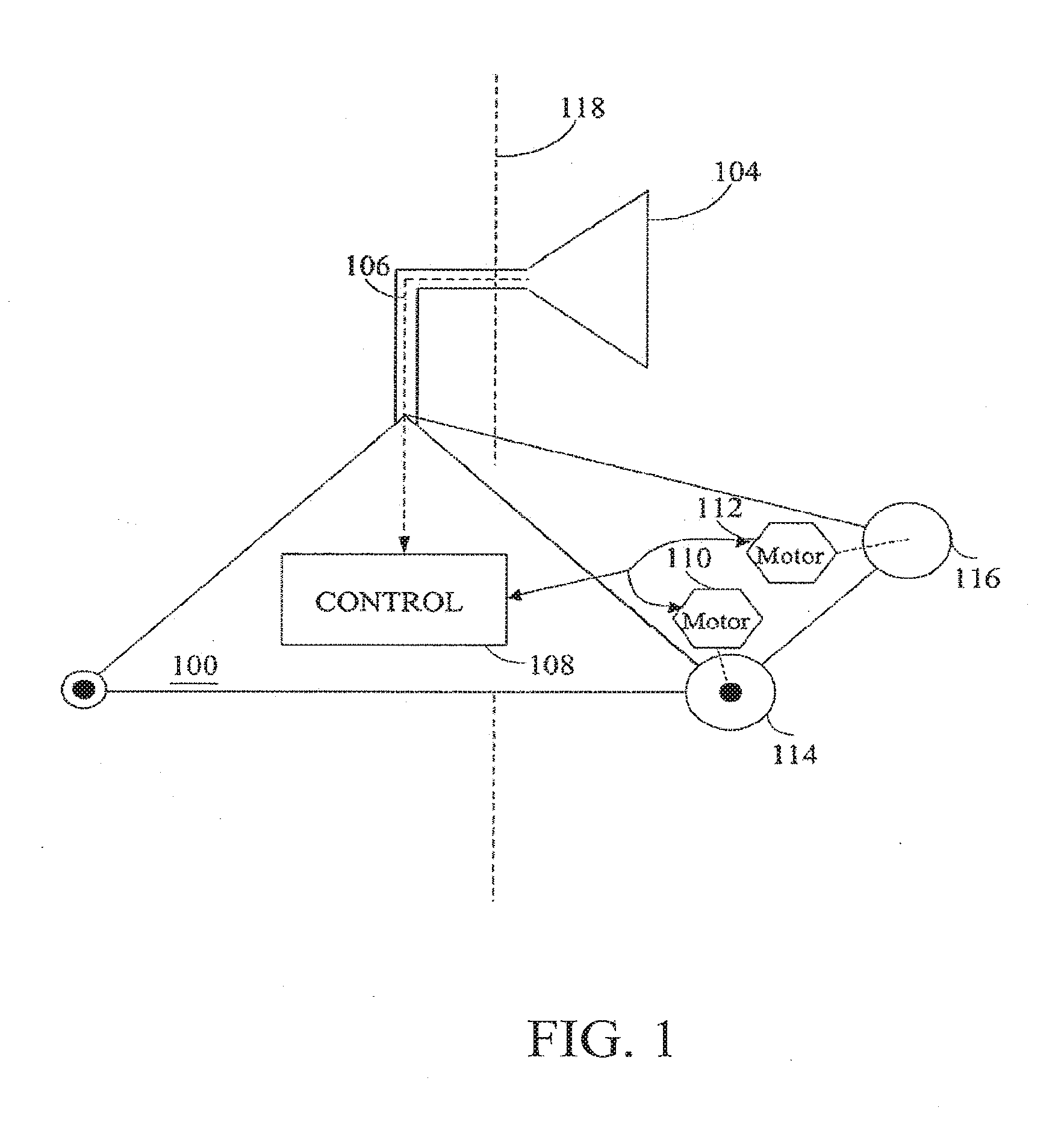

Systems and methods for incrementally updating a pose of a mobile device calculated by visual simultaneous localization and mapping techniques

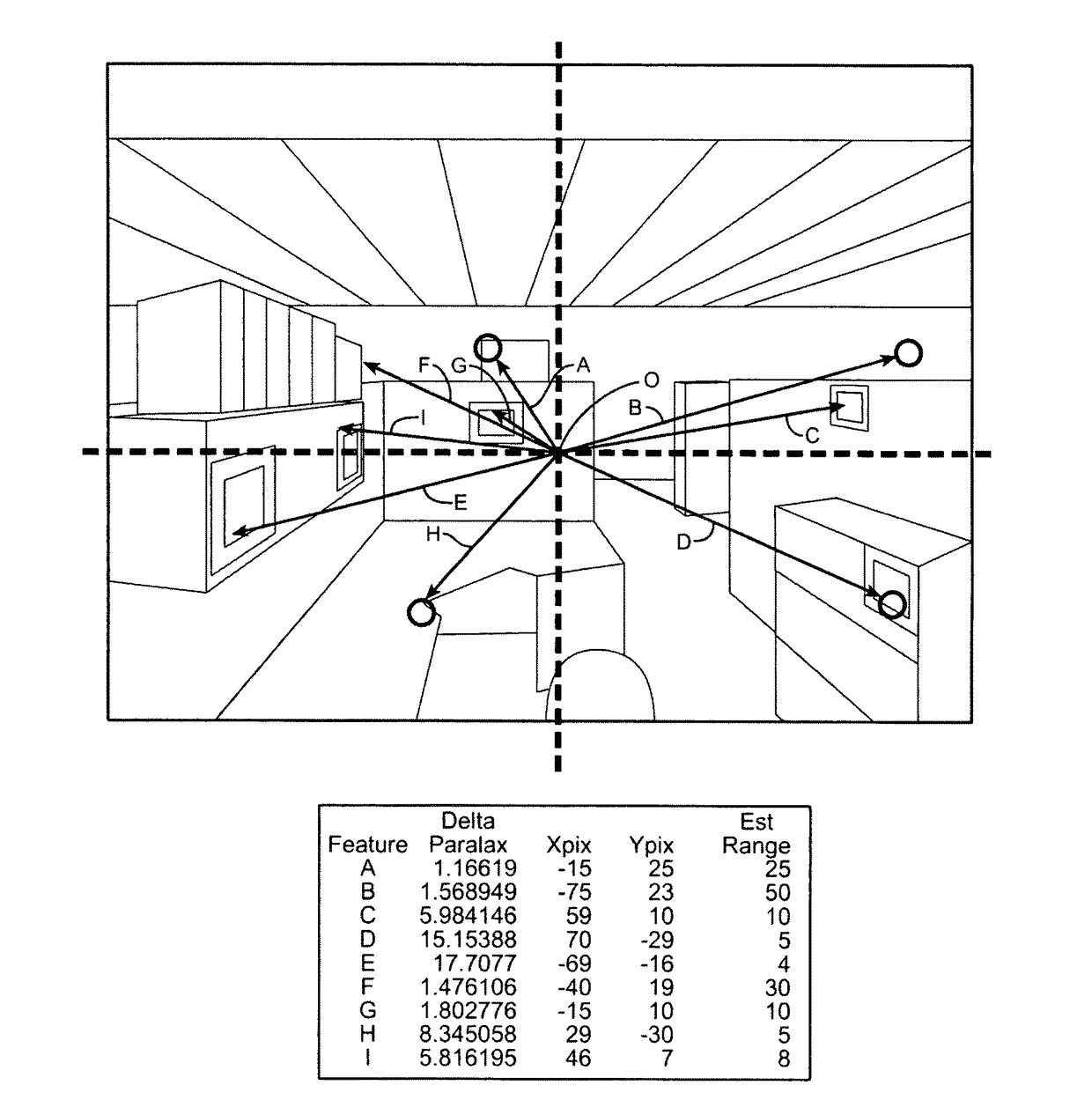

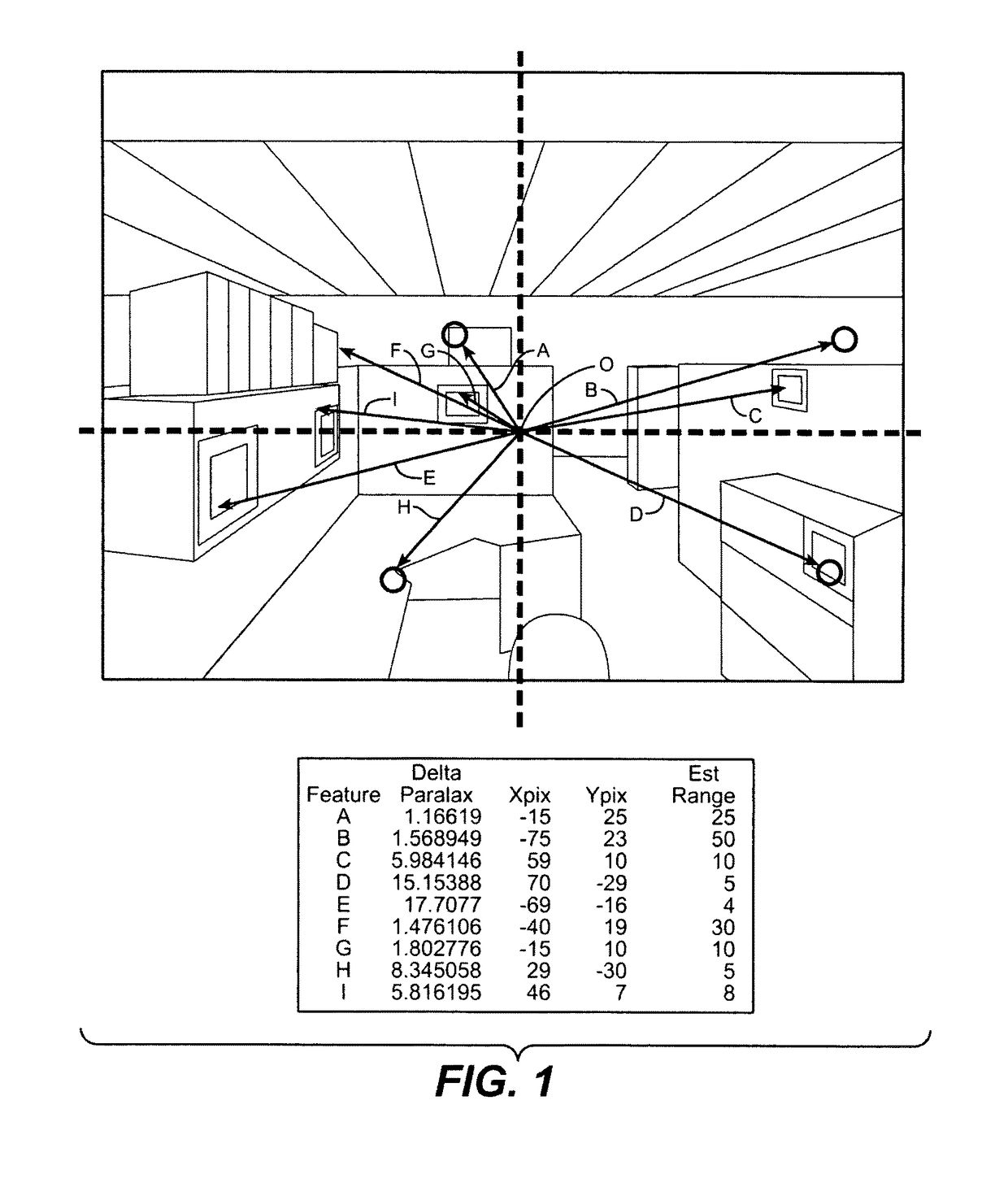

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments, such as environments in which people move. One embodiment further advantageously uses multiple particles to maintain multiple hypotheses with respect to localization and mapping. Further advantageously, one embodiment maintains the particles in a relatively computationally-efficient manner, thereby permitting the SLAM processes to be performed in software using relatively inexpensive microprocessor-based computer system.

Owner:IROBOT CORP

Robust sensor fusion for mapping and localization in a simultaneous localization and mapping (SLAM) system

This invention is generally related to methods and apparatus that permit the measurements from a plurality of sensors to be combined or fused in a robust manner. For example, the sensors can correspond to sensors used by a mobile device, such as a robot, for localization and / or mapping. The measurements can be fused for estimation of a measurement, such as an estimation of a pose of a robot.

Owner:IROBOT CORP

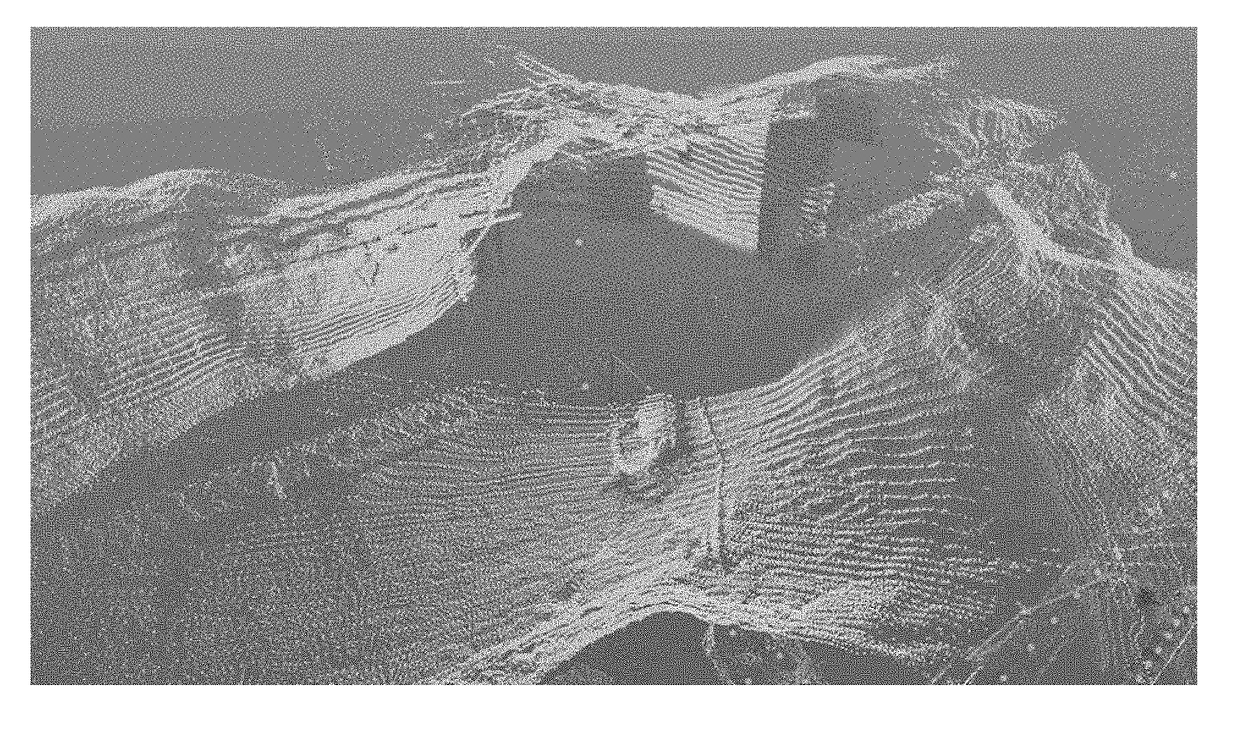

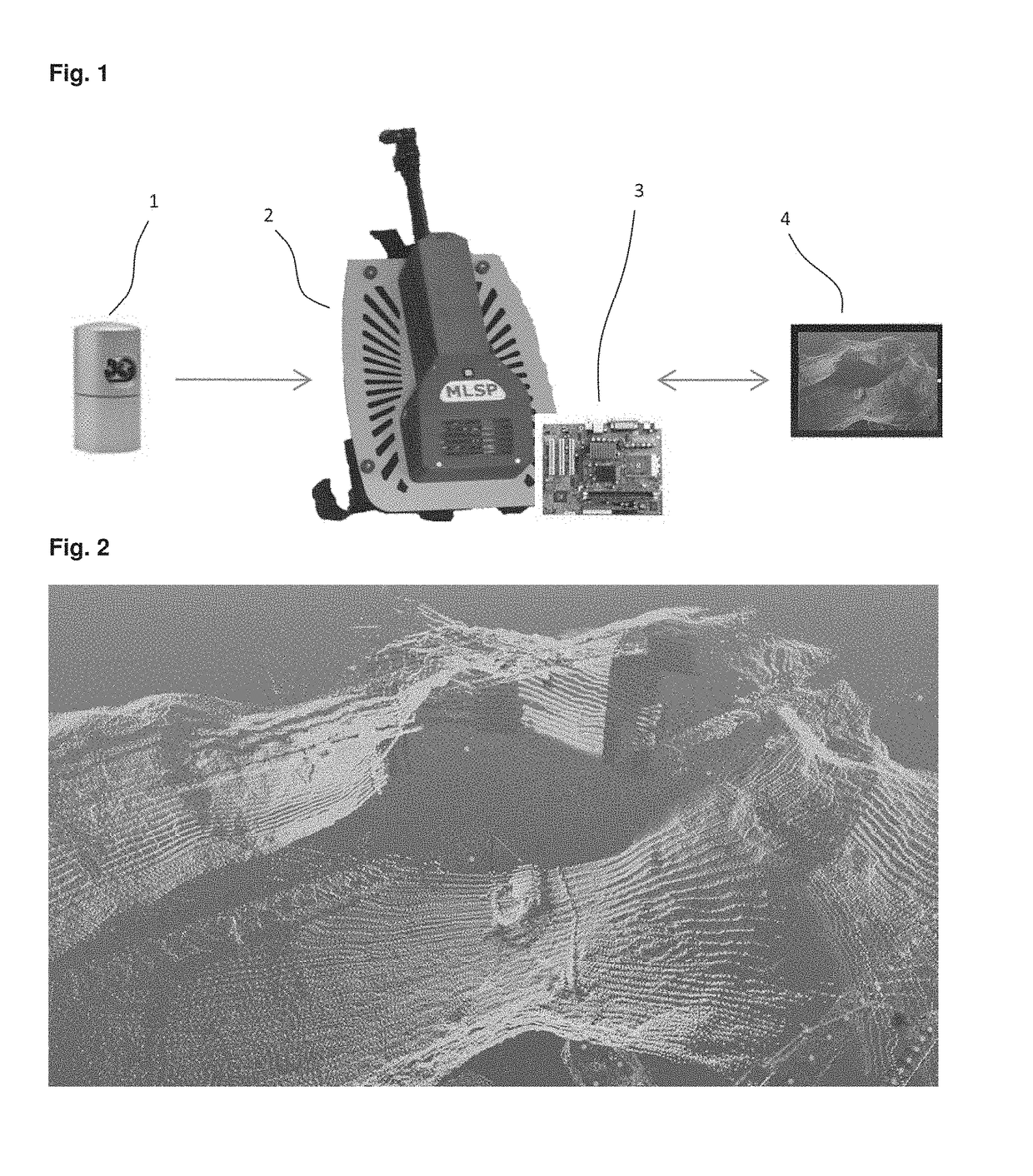

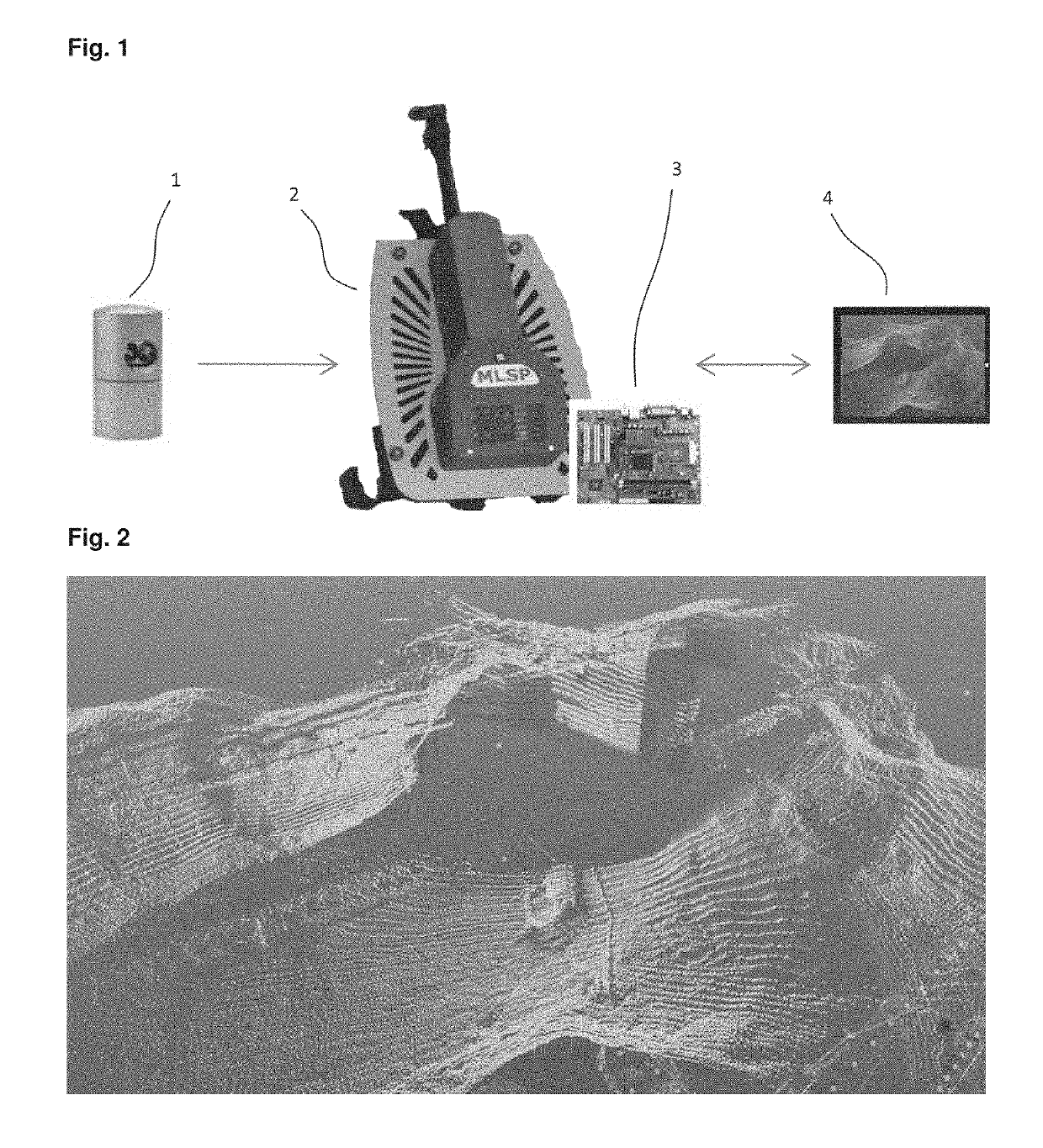

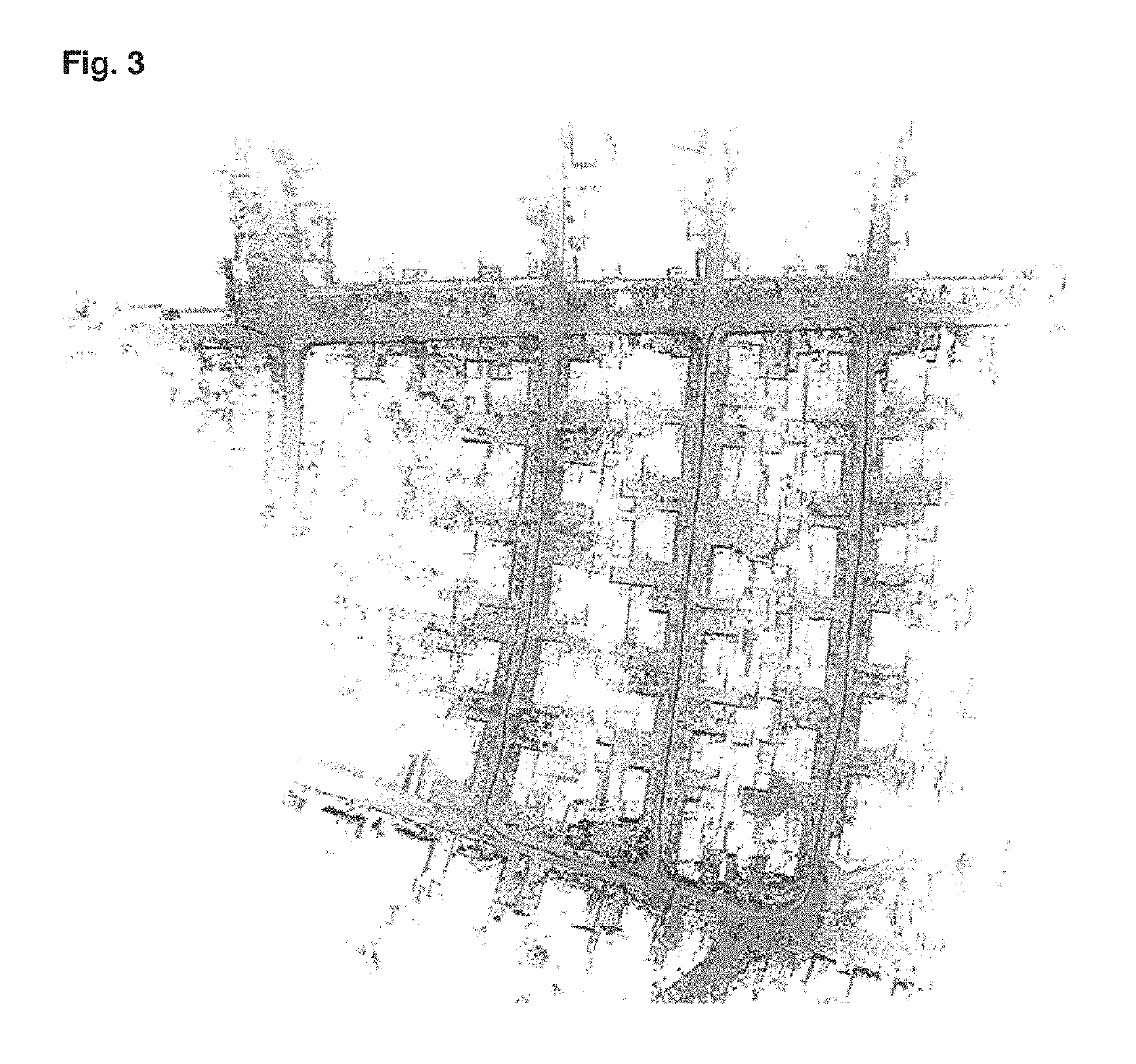

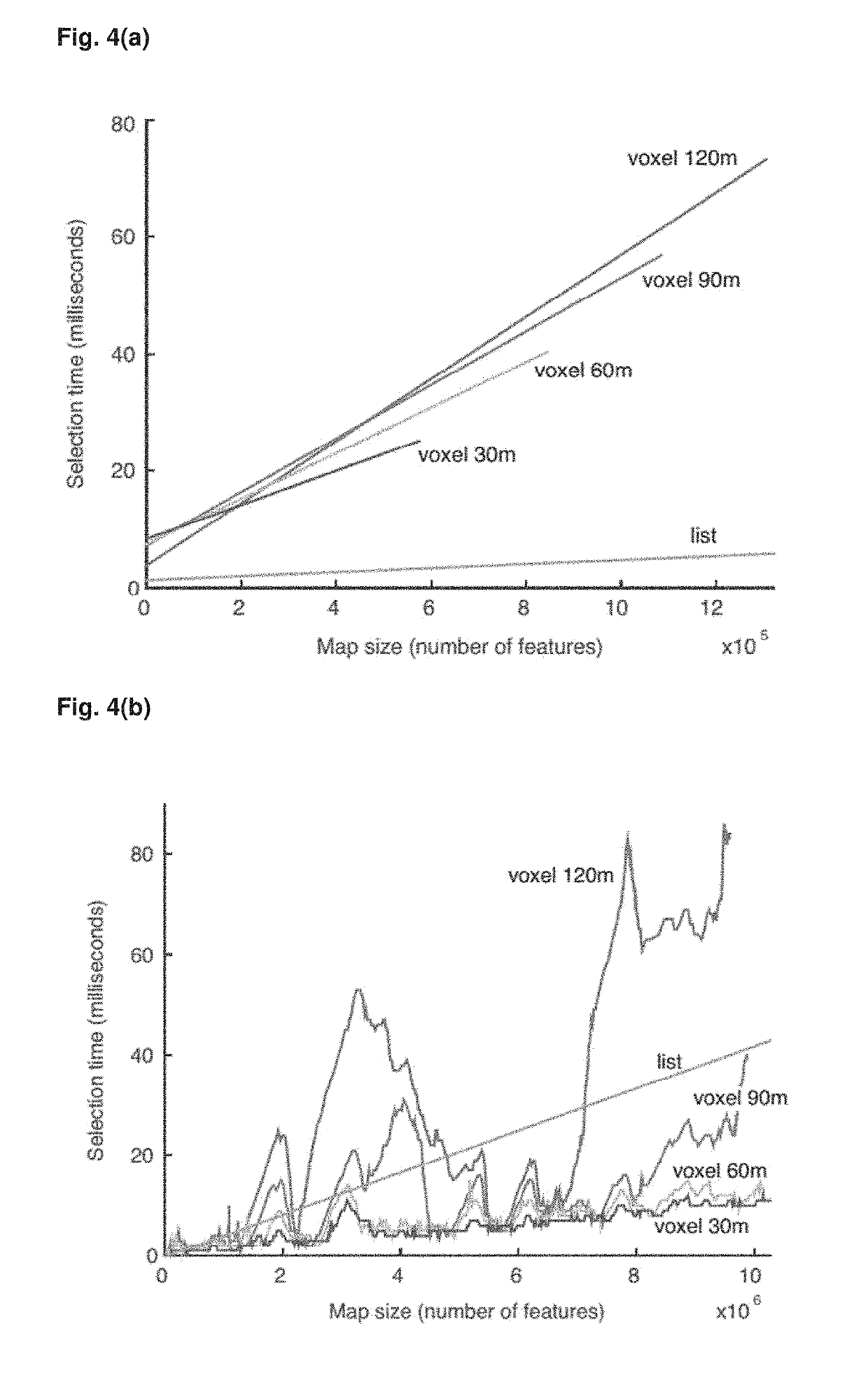

Method and device for real-time mapping and localization

Active | US20180075643A1 | Efficient storage; Improve system robustness; Image enhancement; Image analysis; Reference map; Laser ranging

A method for constructing a 3D reference map useable in real-time mapping, localization and/or change analysis, wherein the 3D reference map is built using a 3D SLAM (Simultaneous Localization And Mapping) framework based on a mobile laser range scanner. A method for real-time mapping, localization and change analysis, in particular in GPS-denied environments, as well as a mobile laser scanning device for implementing said methods.

Owner:EURATOM

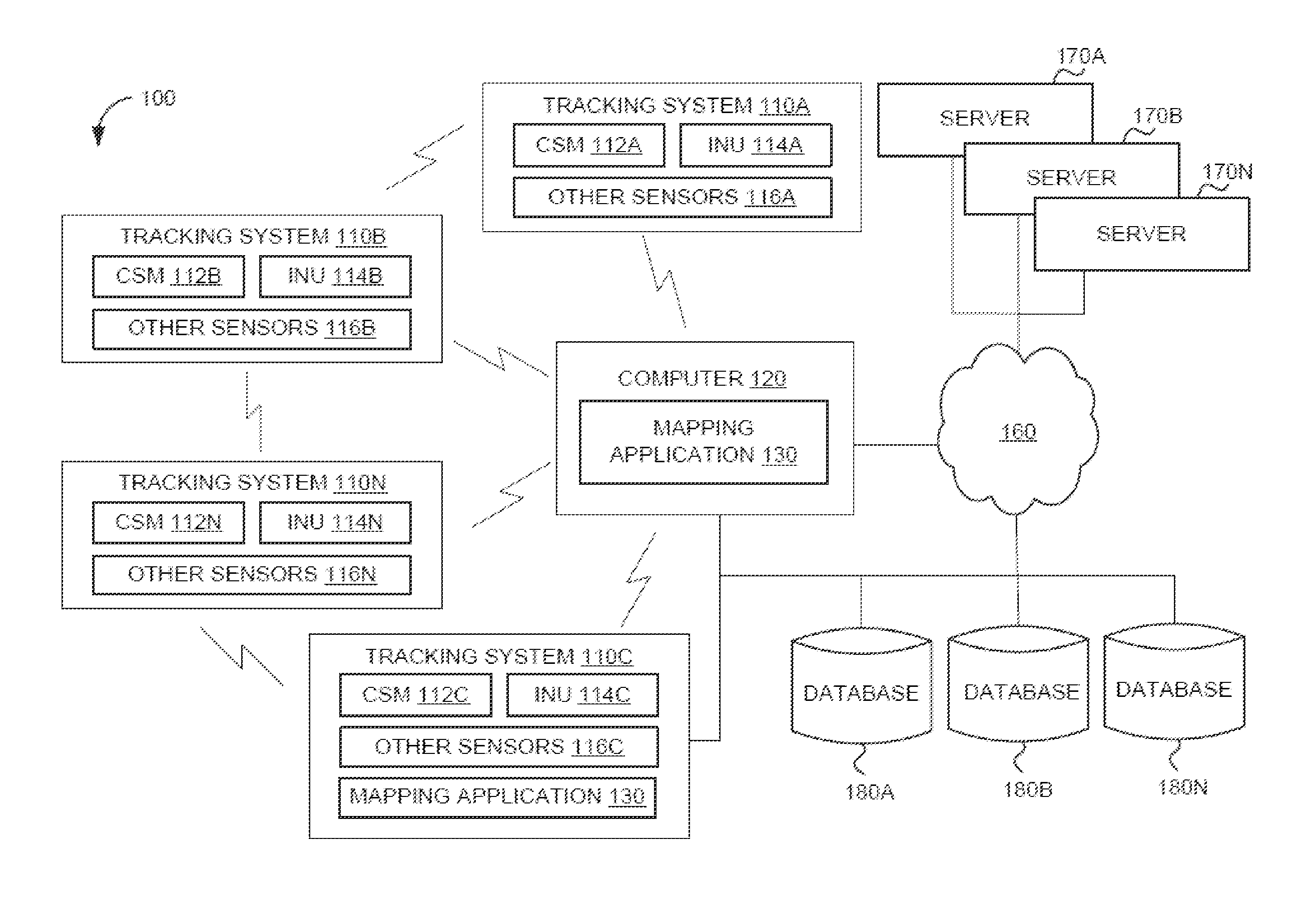

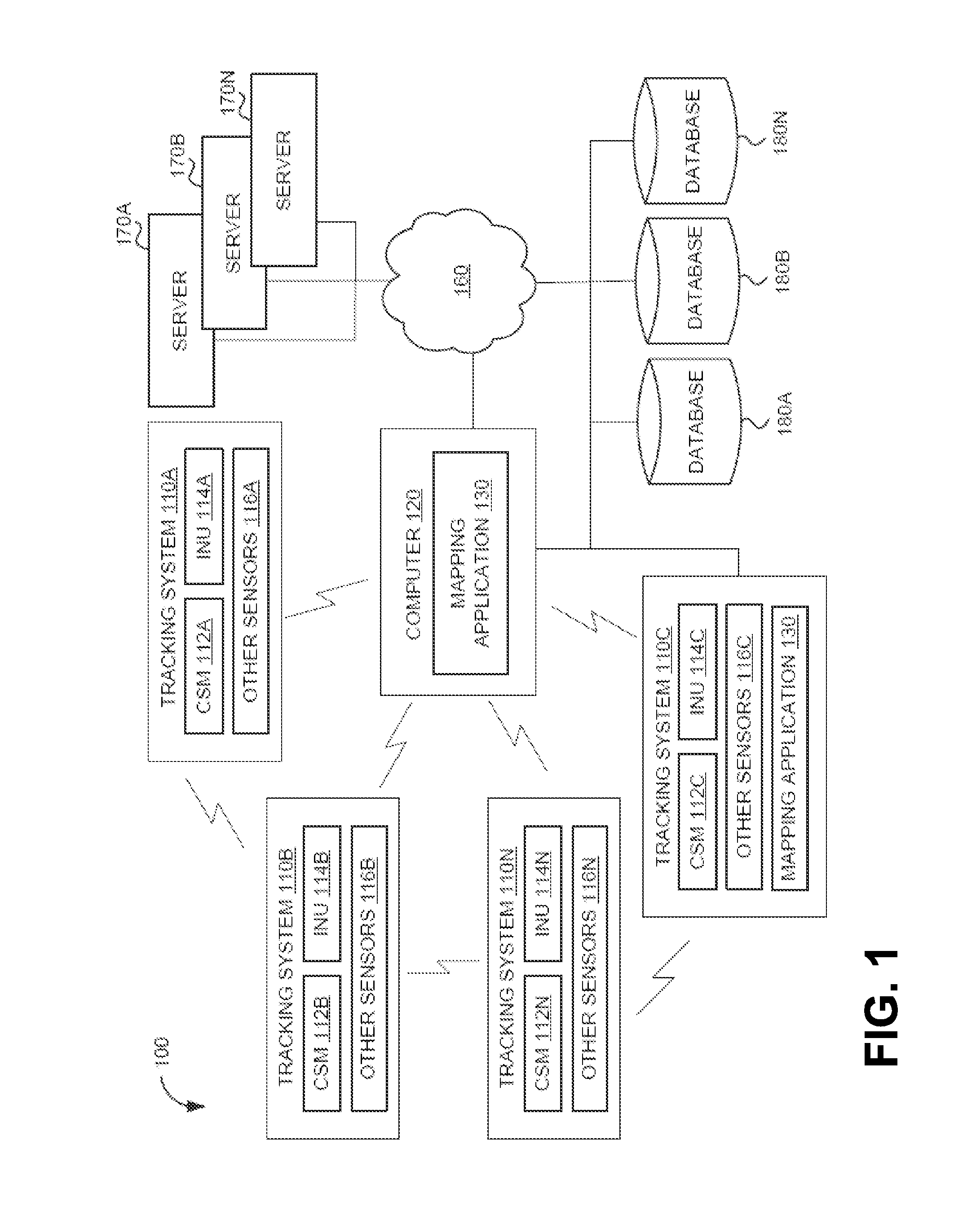

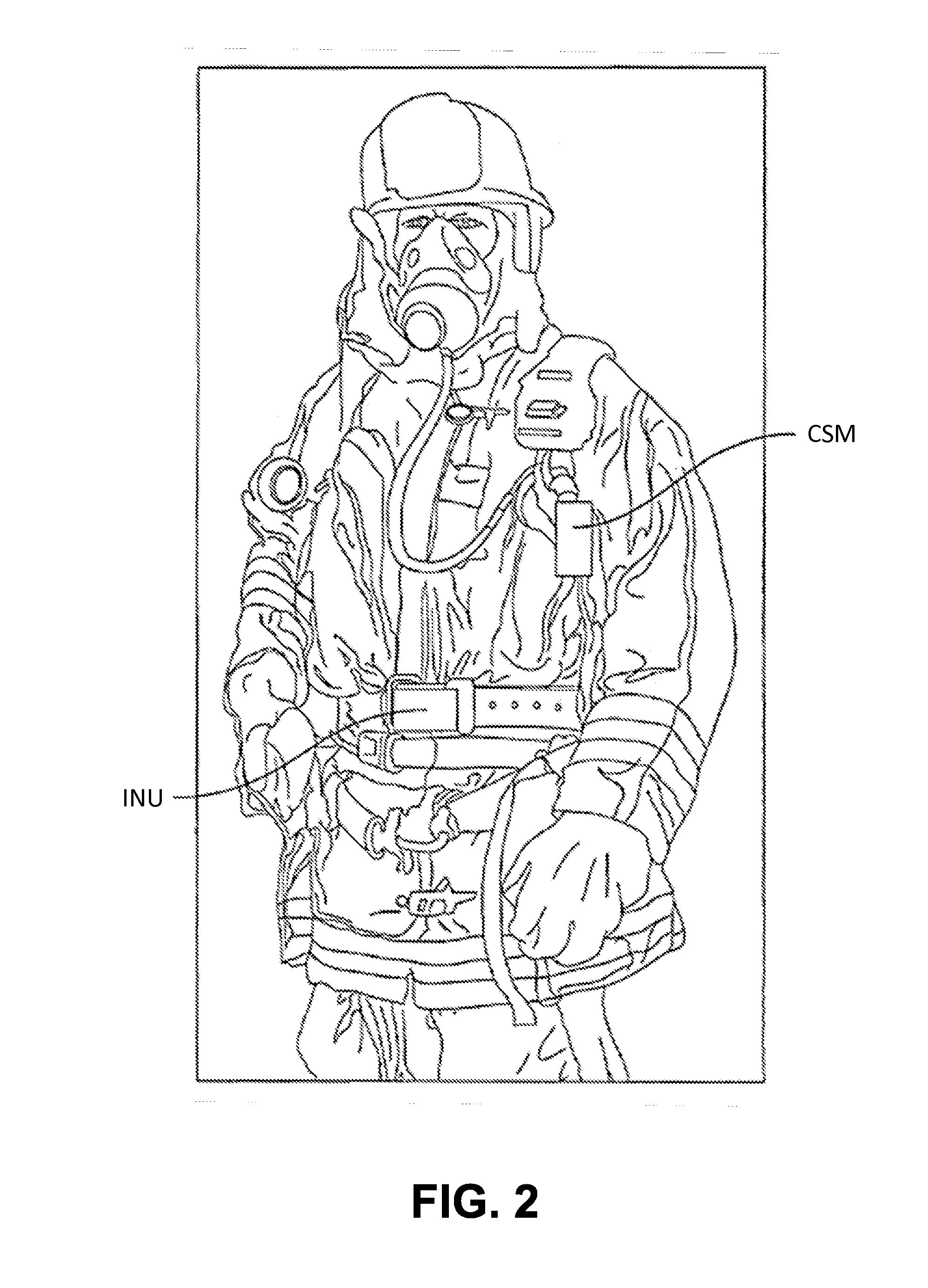

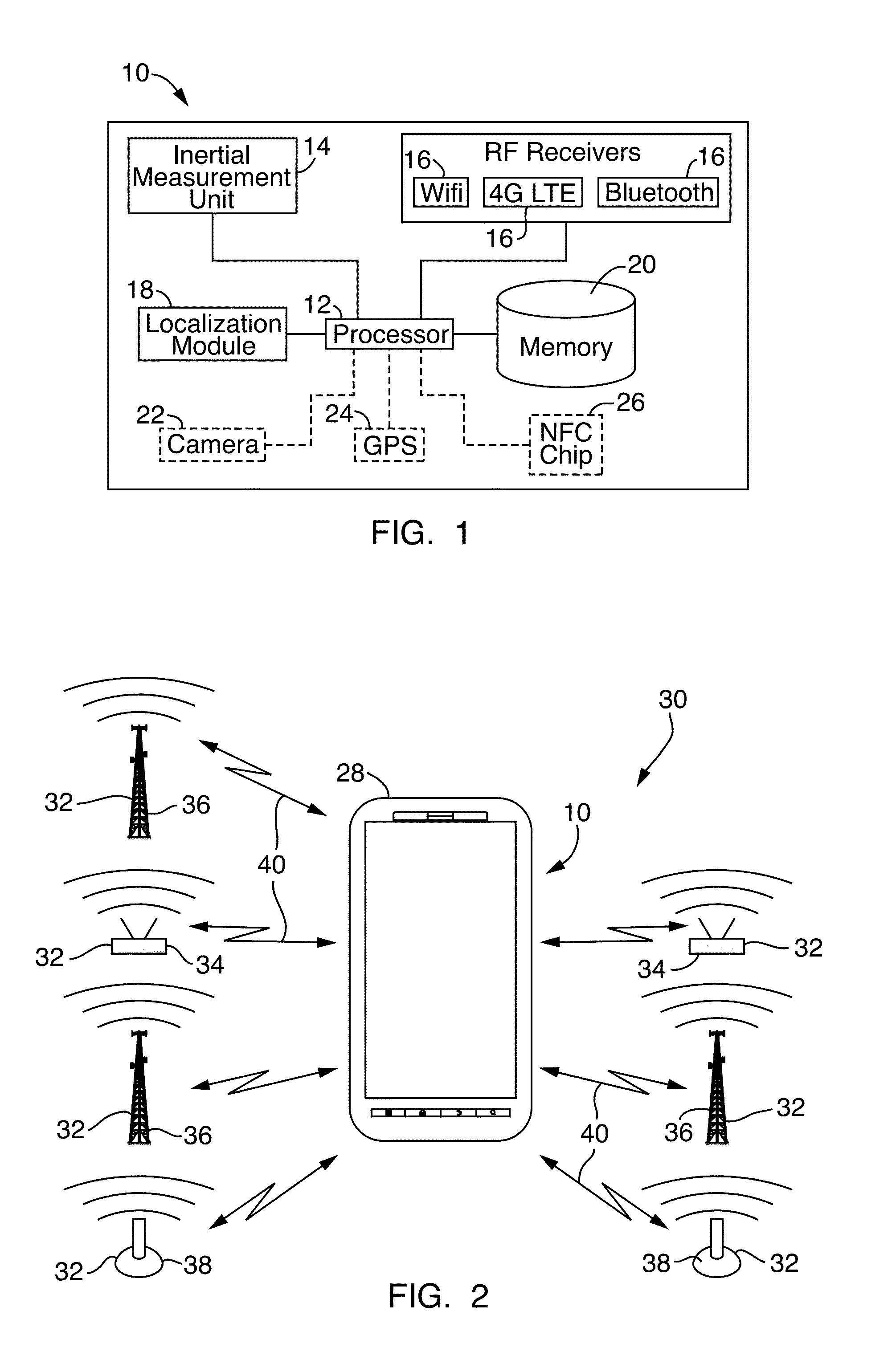

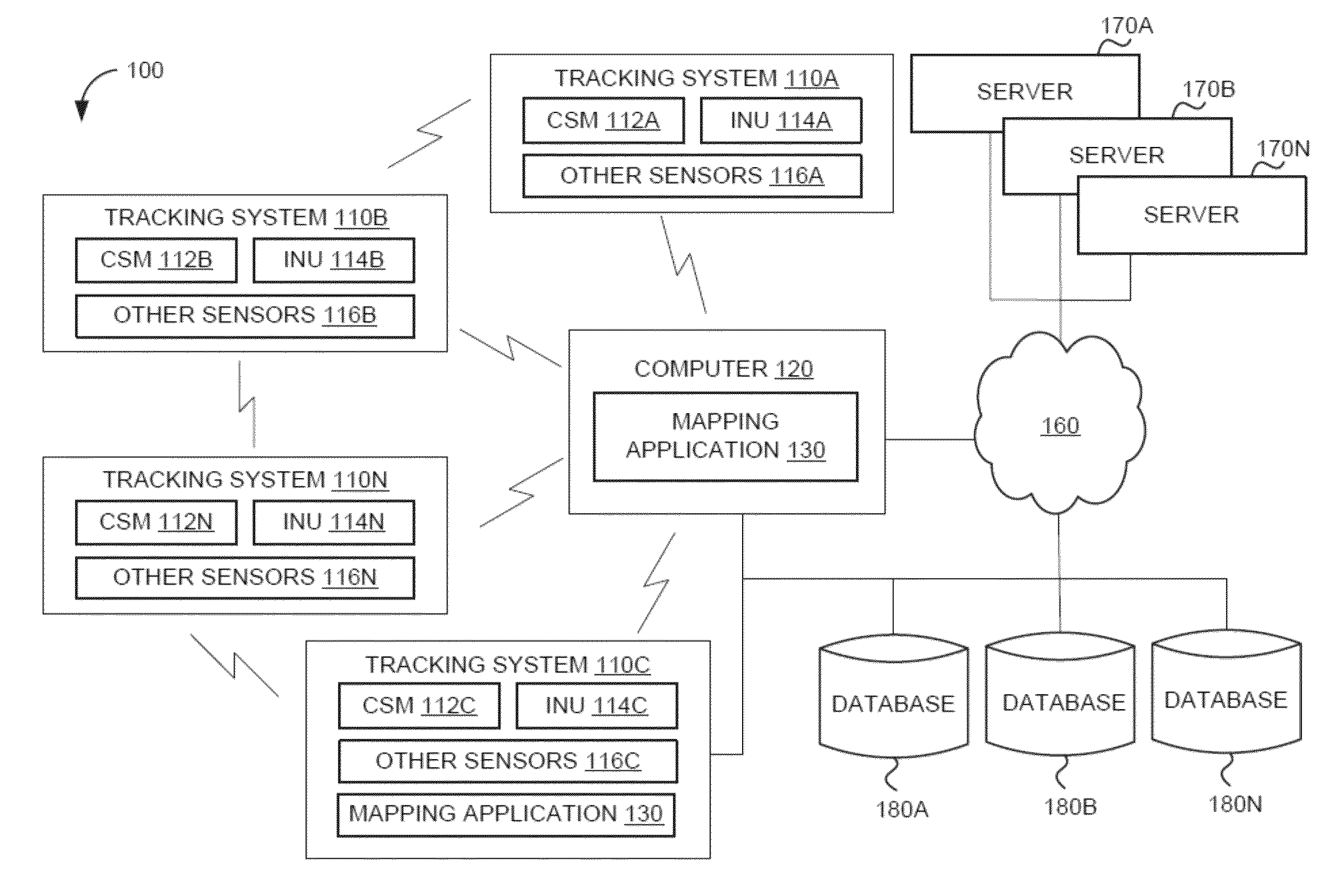

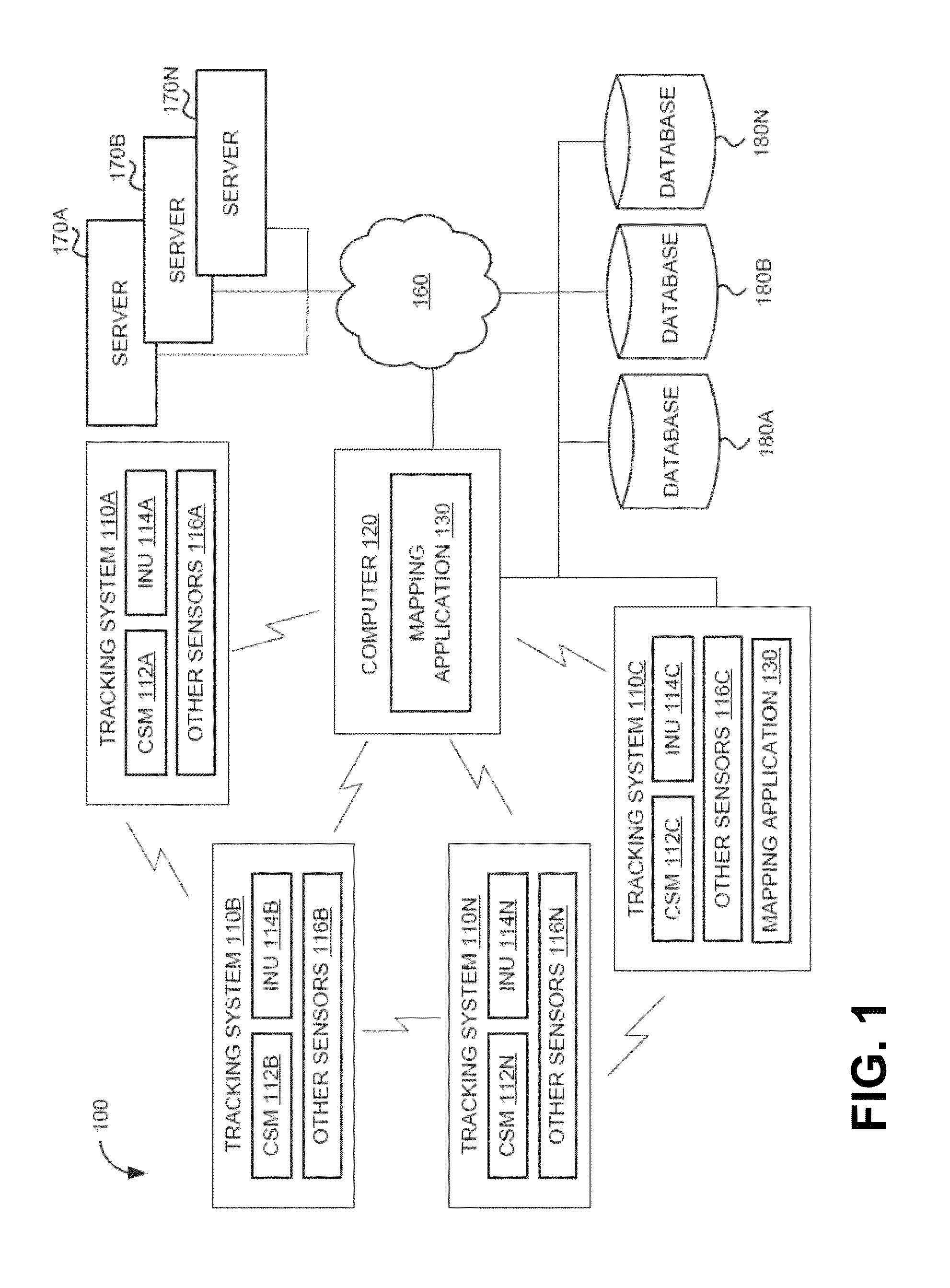

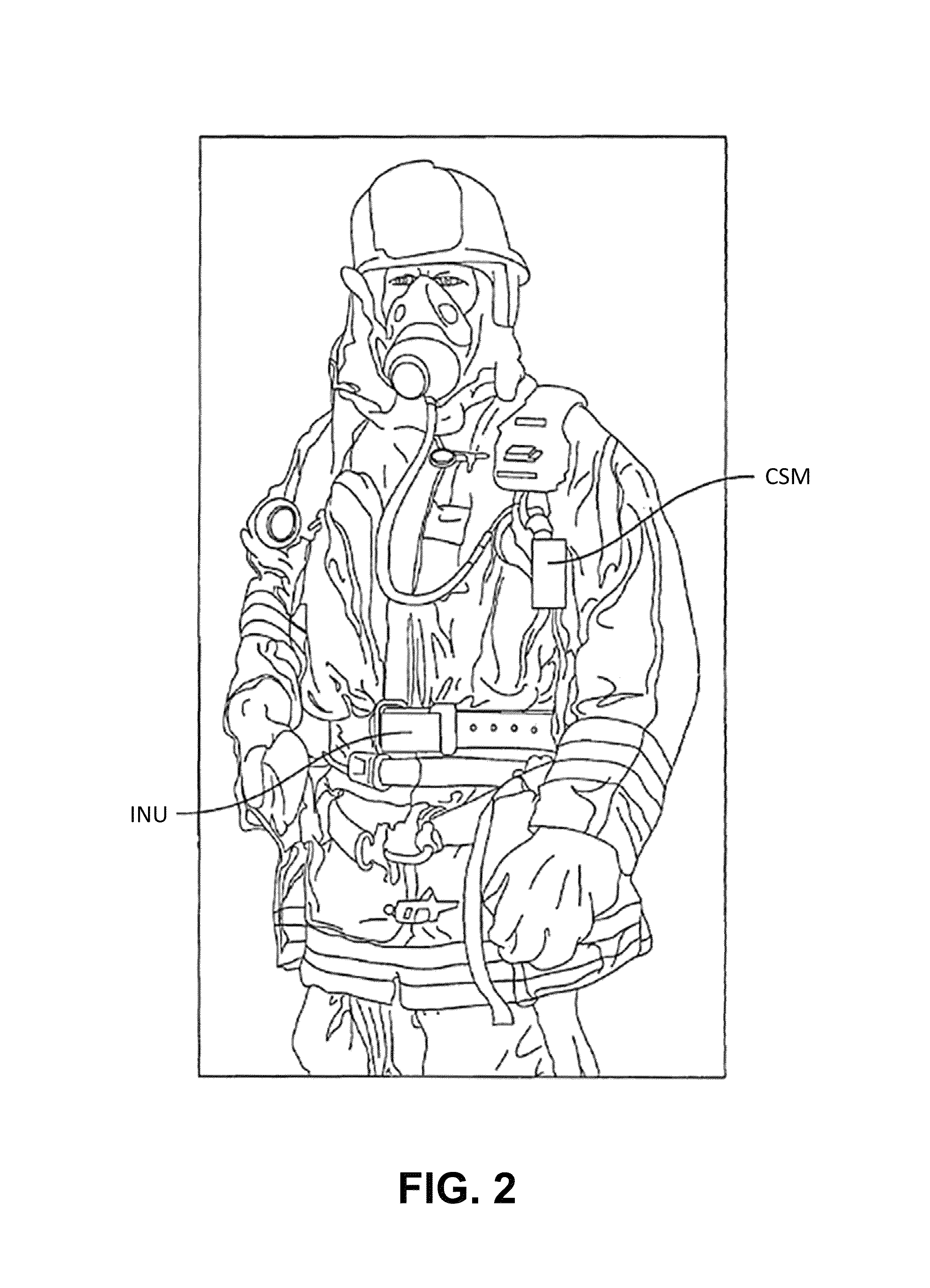

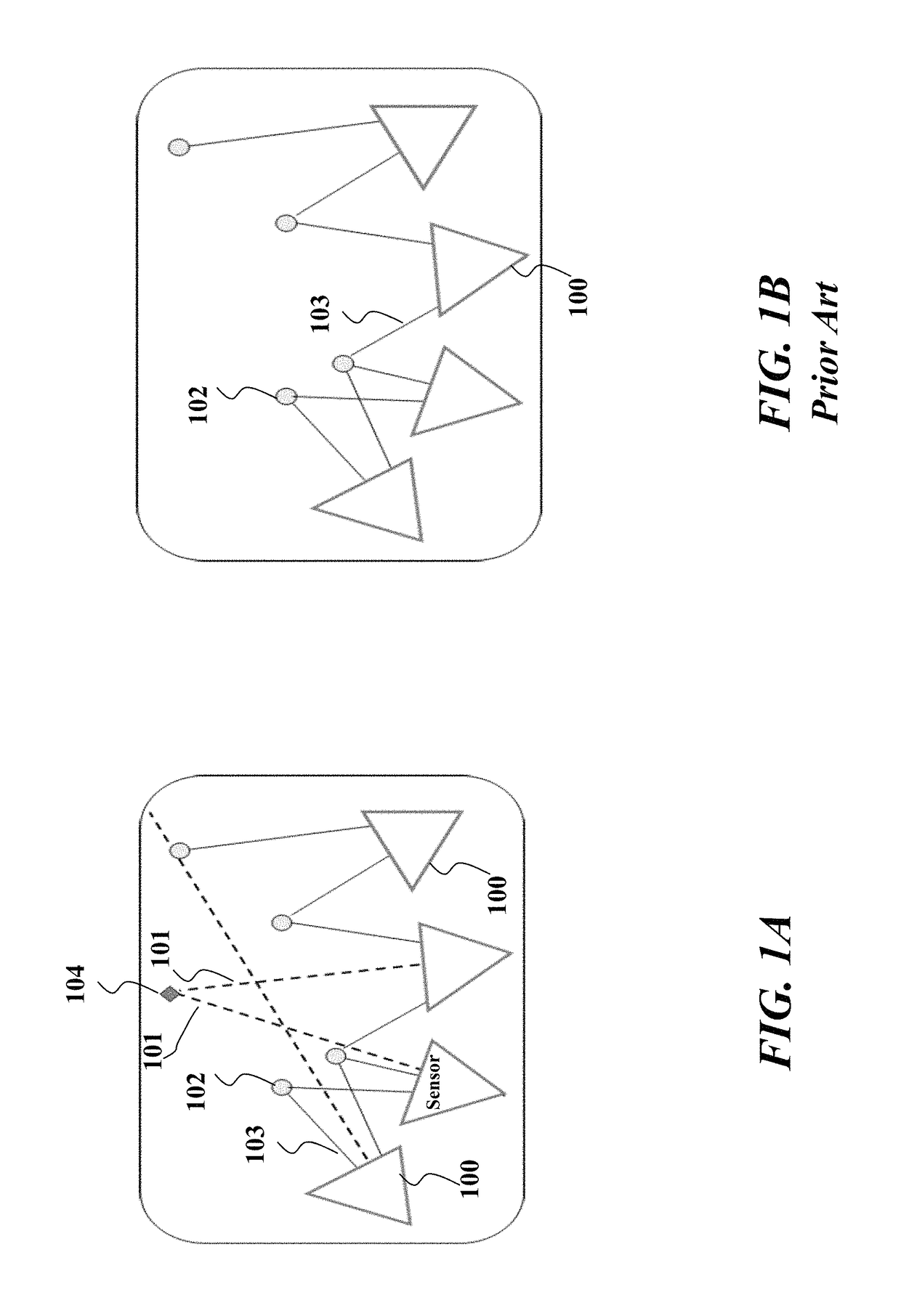

System and method for localizing a trackee at a location and mapping the location using inertial sensor information

Active | US20130332064A1 | Long duration; Navigational calculation instruments; Road vehicles traffic control; Position dependent; Network communication

A system and method for recognizing features for location correction in Simultaneous Localization And Mapping operations, thus facilitating longer duration navigation, is provided. The system may detect features from magnetic, inertial, GPS, light sensors, and / or other sensors that can be associated with a location and recognized when revisited. Feature detection may be implemented on a generally portable tracking system, which may facilitate the use of higher sample rate data for more precise localization of features, improved tracking when network communications are unavailable, and improved ability of the tracking system to act as a smart standalone positioning system to provide rich input to higher level navigation algorithms / systems. The system may detect a transition from structured (such as indoors, in caves, etc.) to unstructured (such as outdoor) environments and from pedestrian motion to travel in a vehicle. The system may include an integrated self-tracking unit that can localize and self-correct such localizations.

Owner:TRX SYST

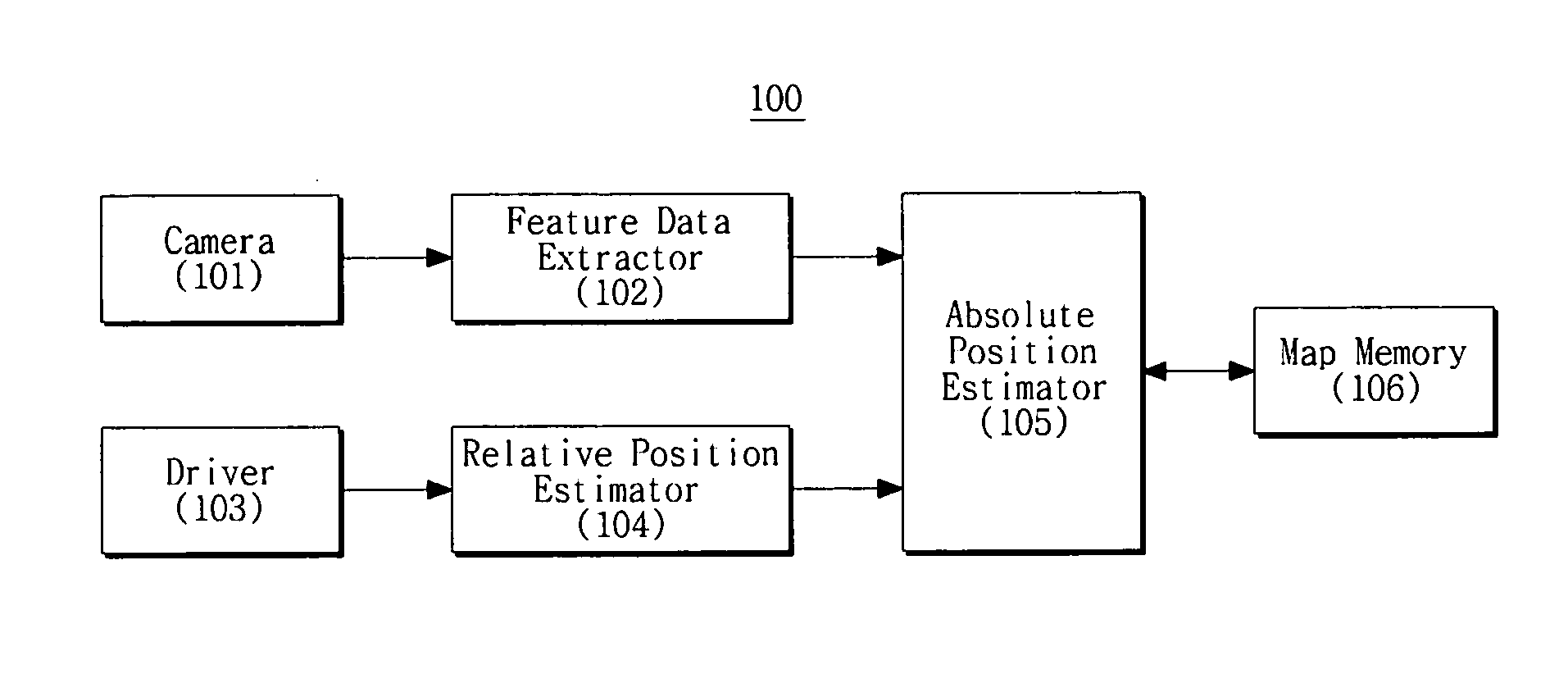

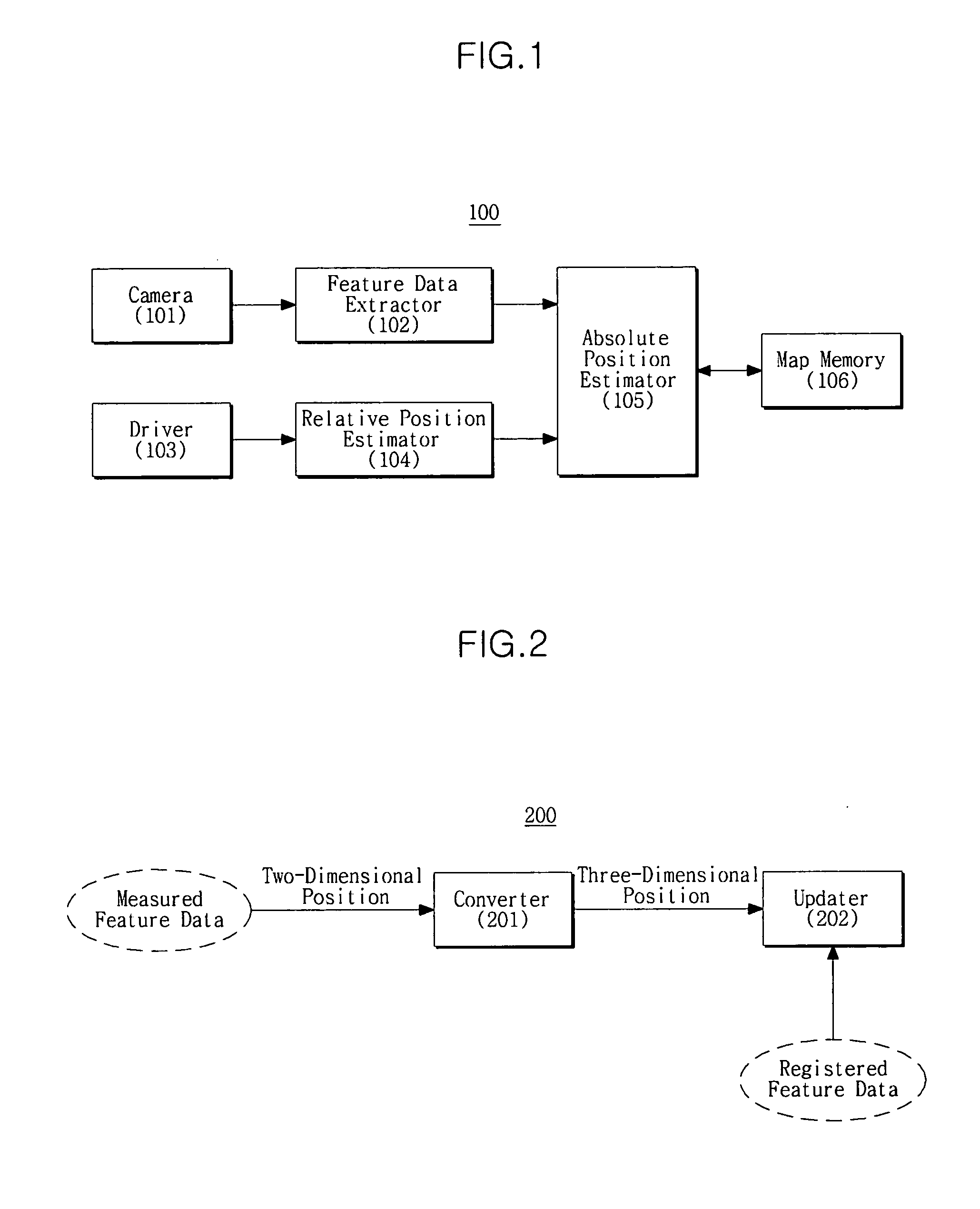

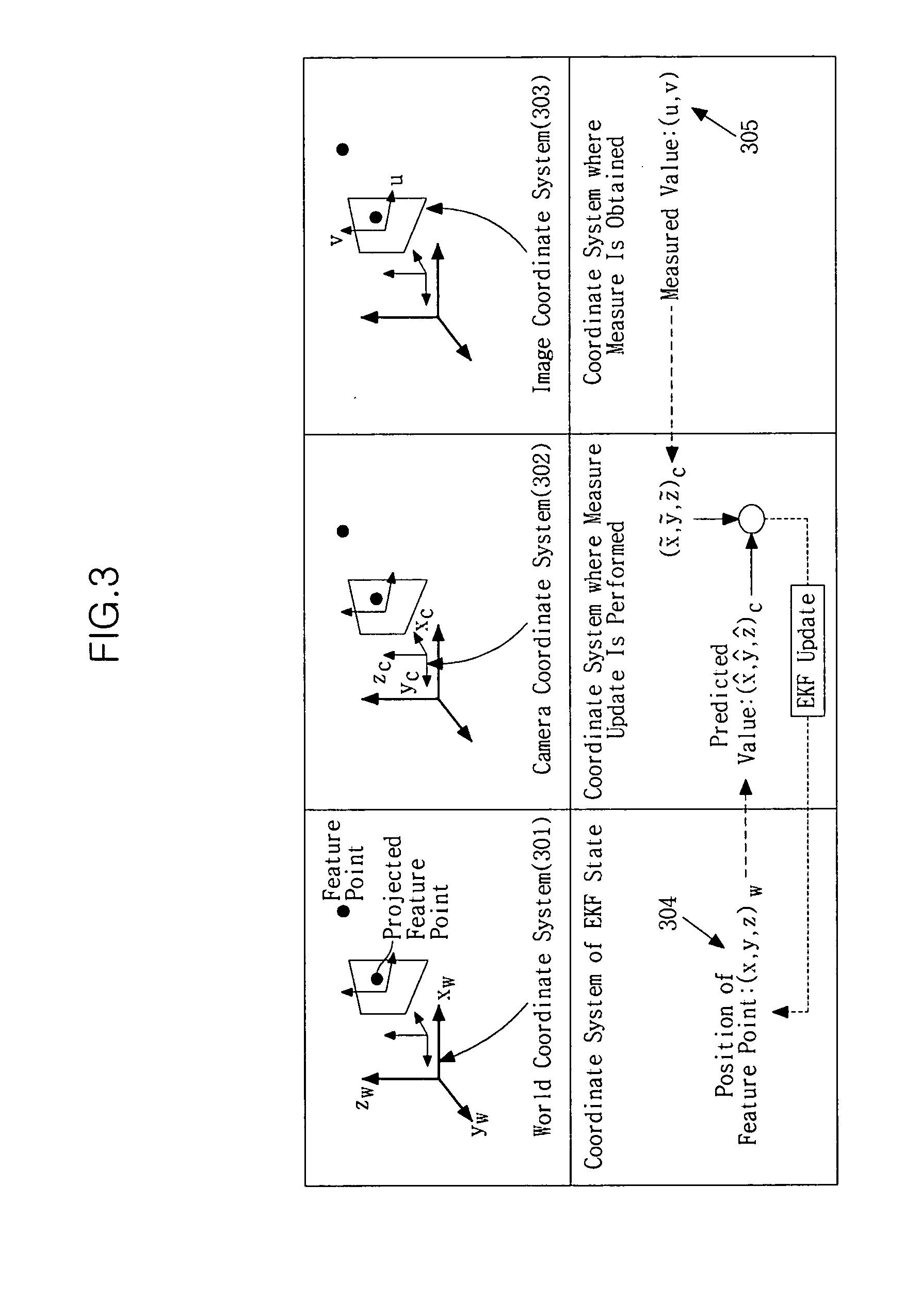

Method and apparatus for simultaneous localization and mapping of robot

A SLAM method for a robot is provided. The position of a robot and the position of feature data may be estimated by acquiring an image of the robot's surroundings, extracting feature data from the image, and matching the extracted feature data with registered feature data. Furthermore, the measurement update is performed in a camera coordinate system and an appropriate assumption is added upon coordinate conversion, thereby reducing non-linear components and thus improving the SLAM performance.

Owner:SAMSUNG ELECTRONICS CO LTD

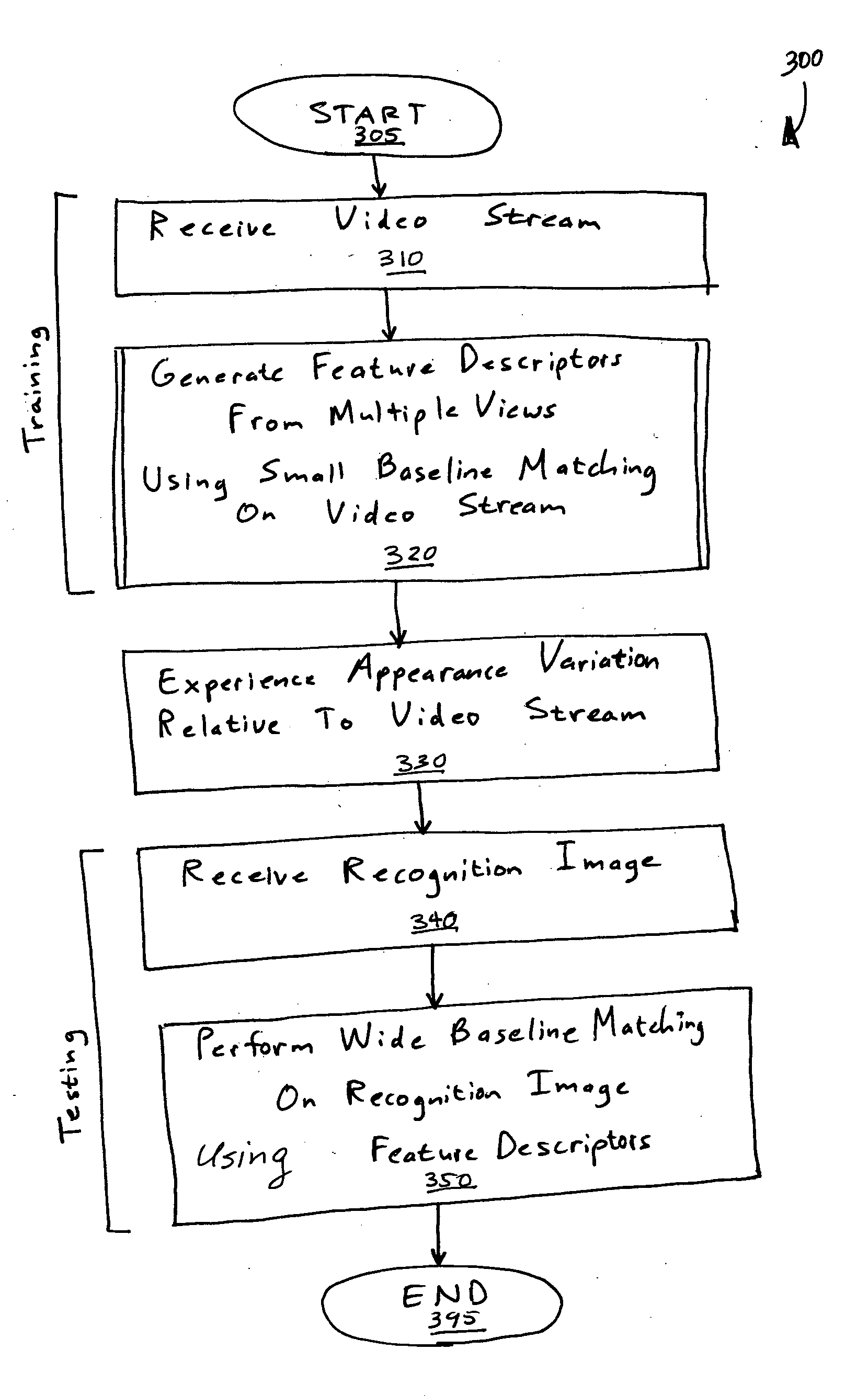

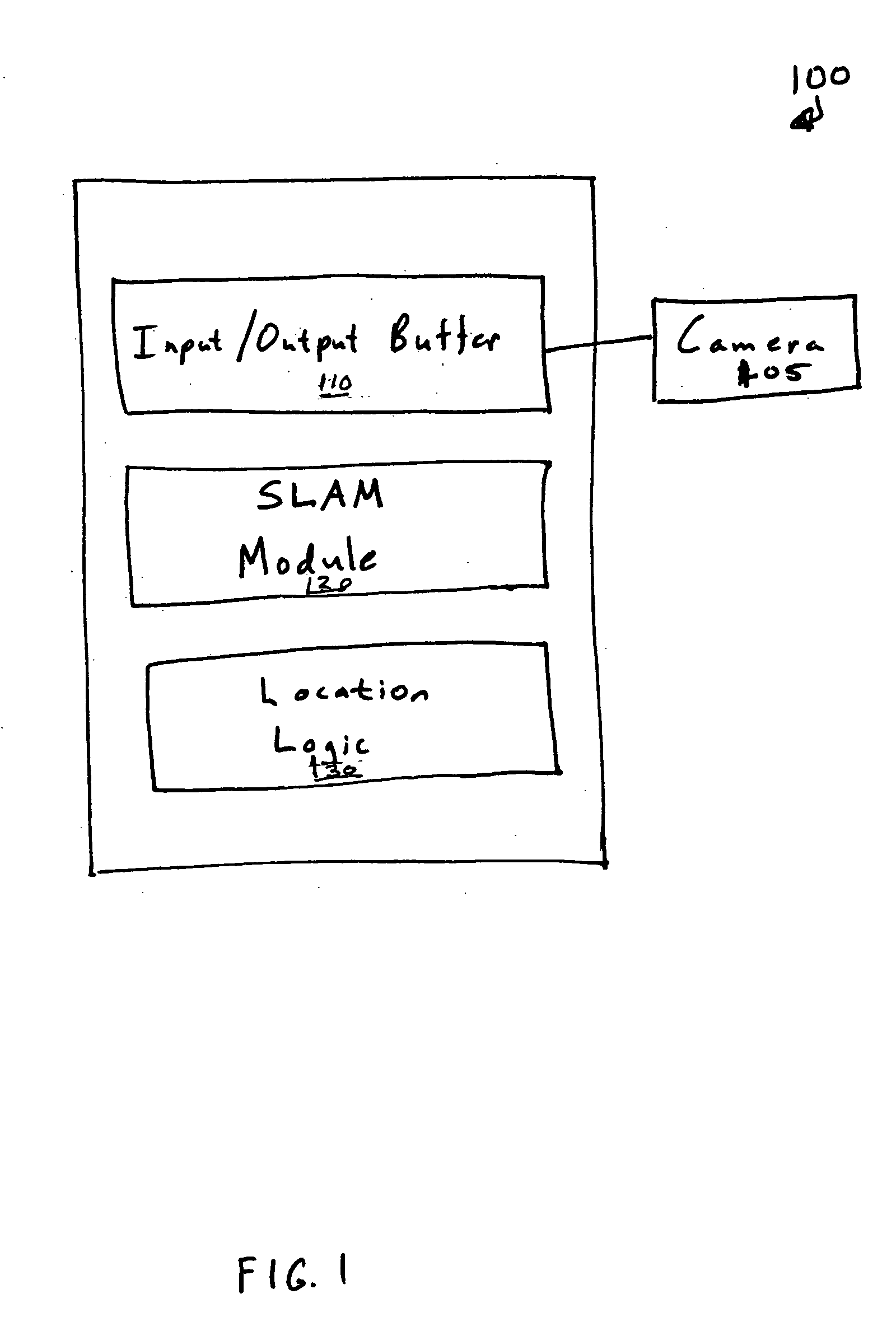

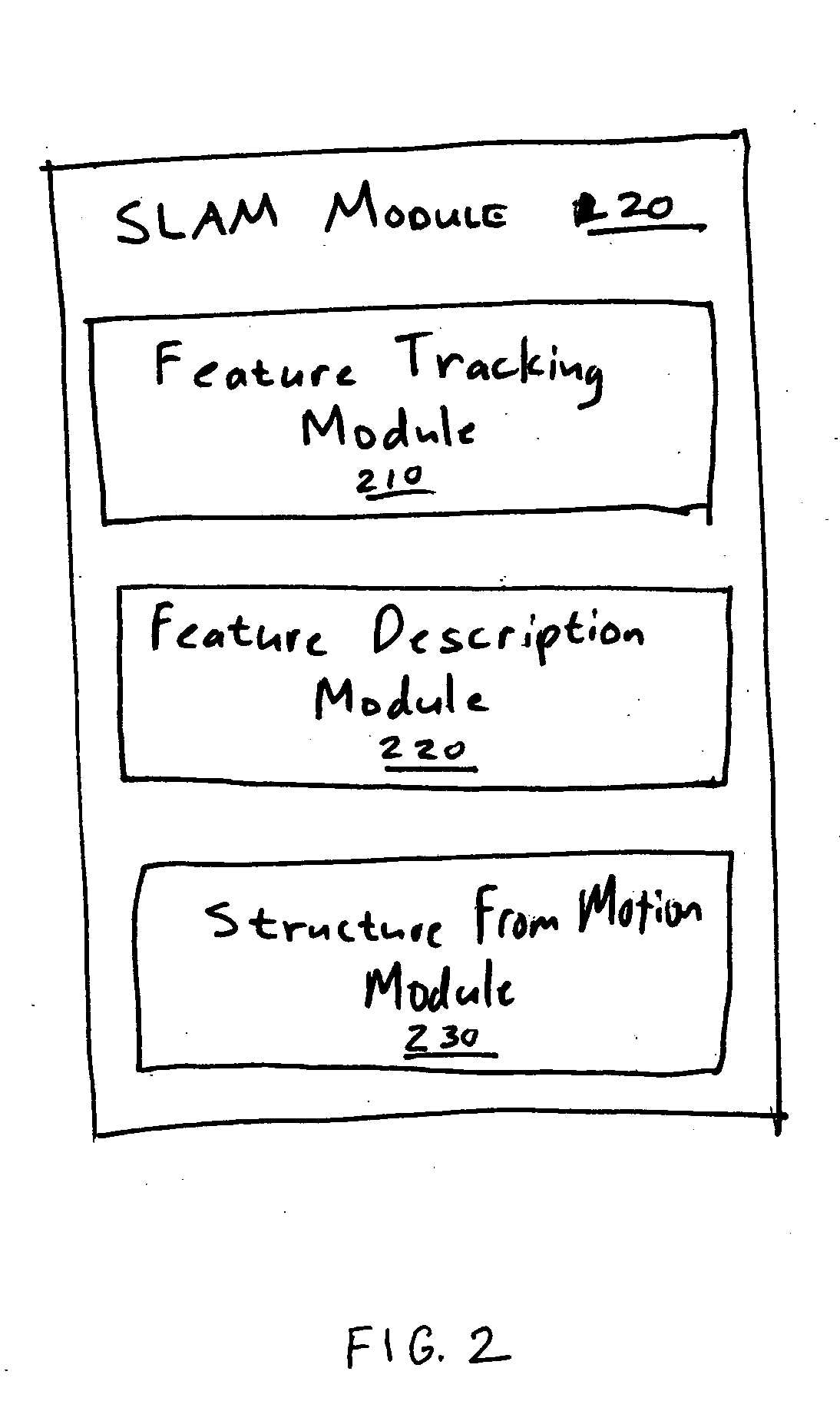

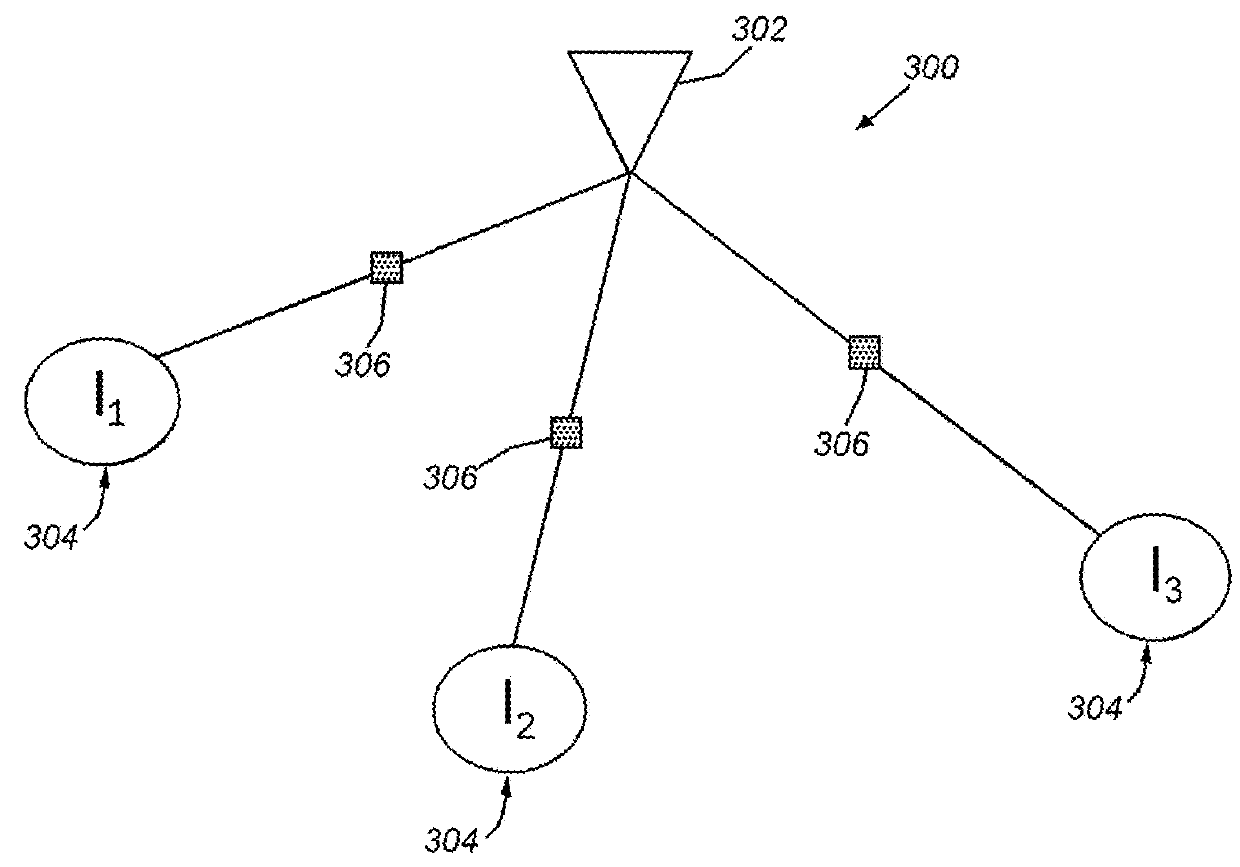

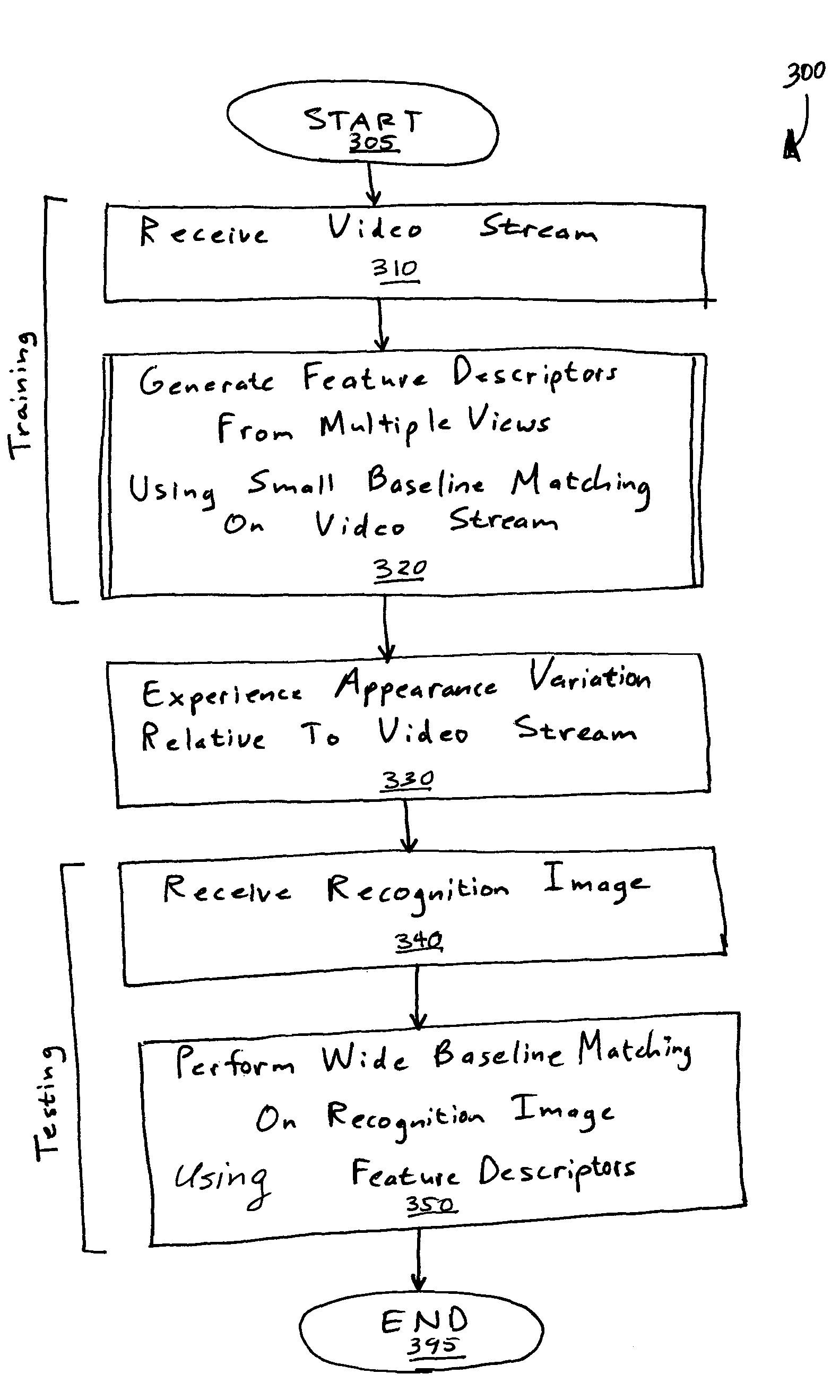

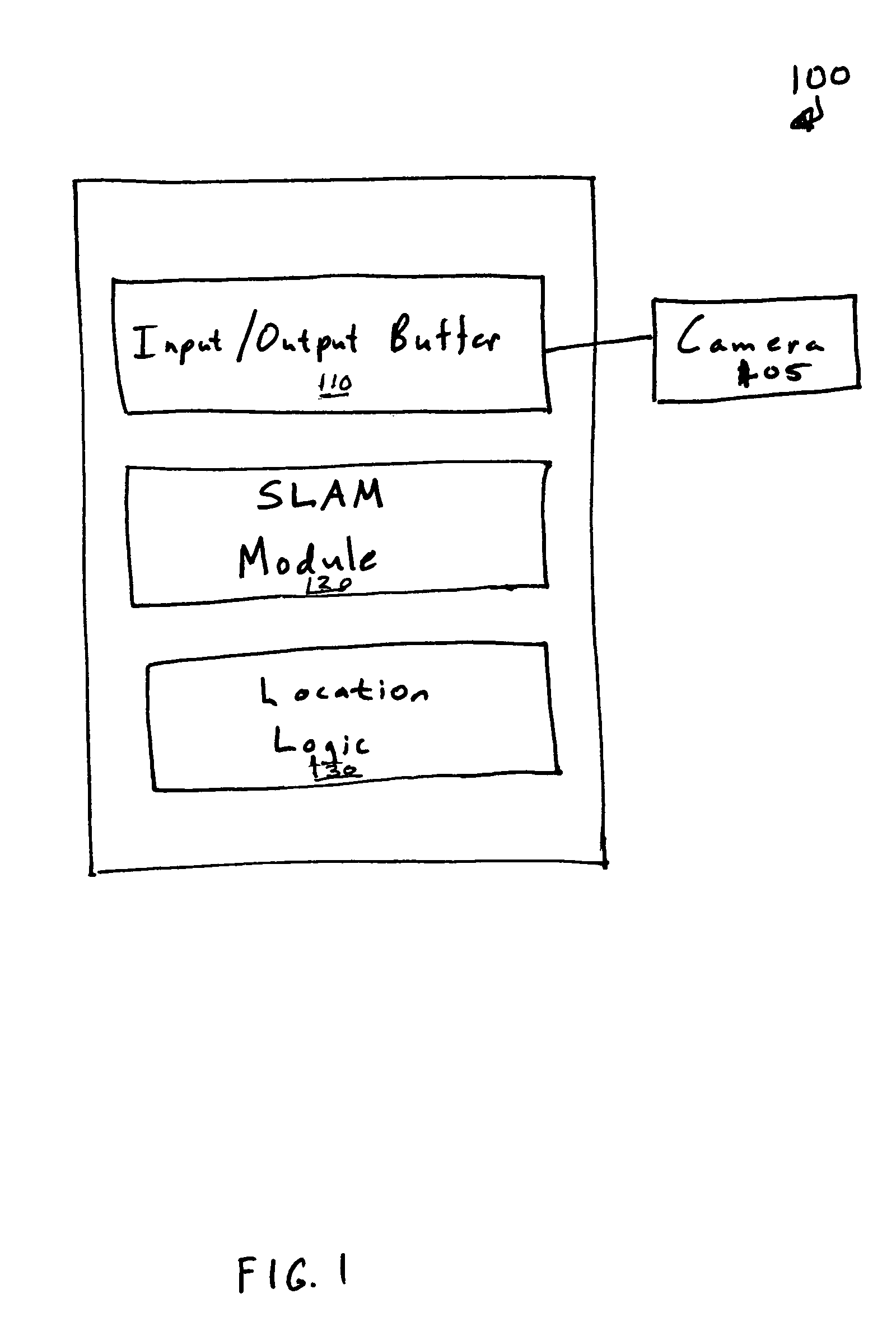

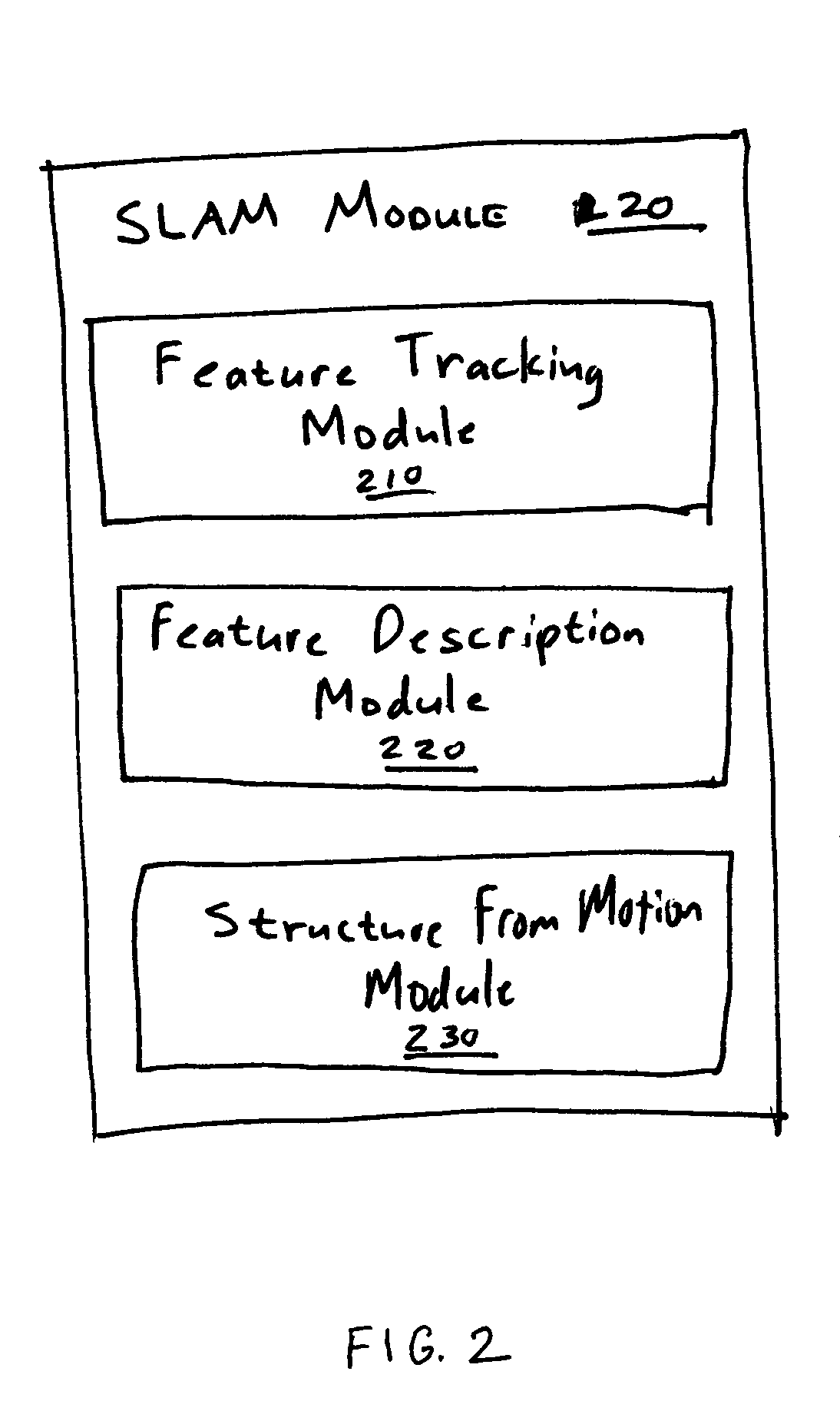

Simultaneous localization and mapping using multiple view feature descriptors

Inactive | US20050238200A1 | Efficiently build necessary feature descriptor; Reliable correspondence; Three-dimensional object recognition; Kalman filter; Kernel principal component analysis

Simultaneous localization and mapping (SLAM) utilizes multiple view feature descriptors to robustly determine location despite appearance changes that would stifle conventional systems. A SLAM algorithm generates a feature descriptor for a scene from different perspectives using kernel principal component analysis (KPCA). When the SLAM module subsequently receives a recognition image after a wide baseline change, it can refer to correspondences from the feature descriptor to continue map building and / or determine location. Appearance variations can result from, for example, a change in illumination, partial occlusion, a change in scale, a change in orientation, change in distance, warping, and the like. After an appearance variation, a structure-from-motion module uses feature descriptors to reorient itself and continue map building using an extended Kalman Filter. Through the use of a database of comprehensive feature descriptors, the SLAM module is also able to refine a position estimation despite appearance variations.

Owner:HONDA MOTOR CO LTD

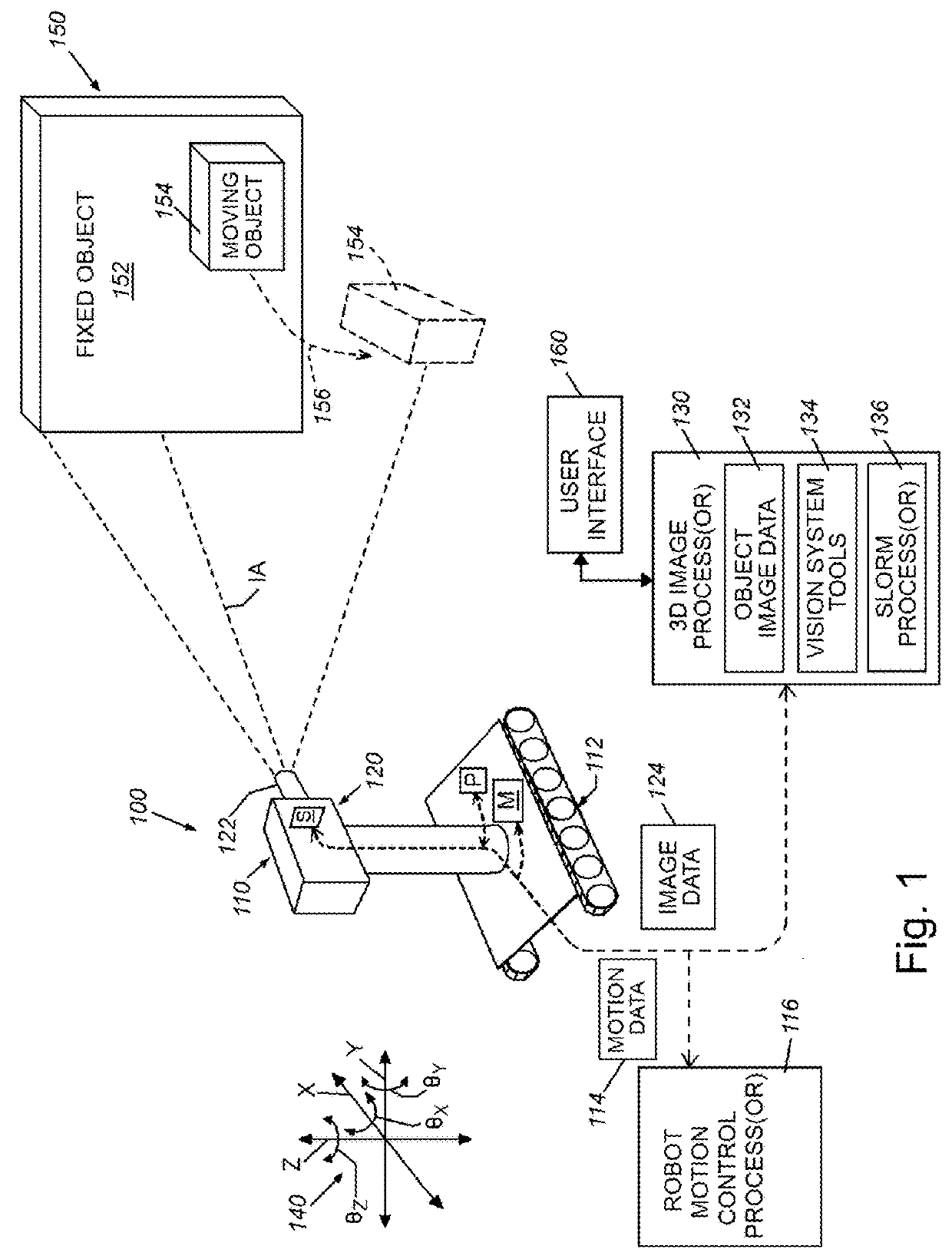

System and method for semantic simultaneous localization and mapping of static and dynamic objects

Inactive | US20180161986A1 | Enhanced freedom in manipulation; Overcome disadvantages; Programme-controlled manipulator; Image analysis; Semantic property; Object registration

A system for Semantic Simultaneous Tracking, Object Registration, and 3D Mapping (STORM) can maintain a world map made of static and dynamic objects rather than 3D clouds of points, and can learn in real time semantic properties of objects, such as their mobility in a certain environment. This semantic information can be used by a robot to improve its navigation and localization capabilities by relying more on static objects than on movable objects for estimating location and orientation.

Owner:CHARLES STARK DRAPER LABORATORY

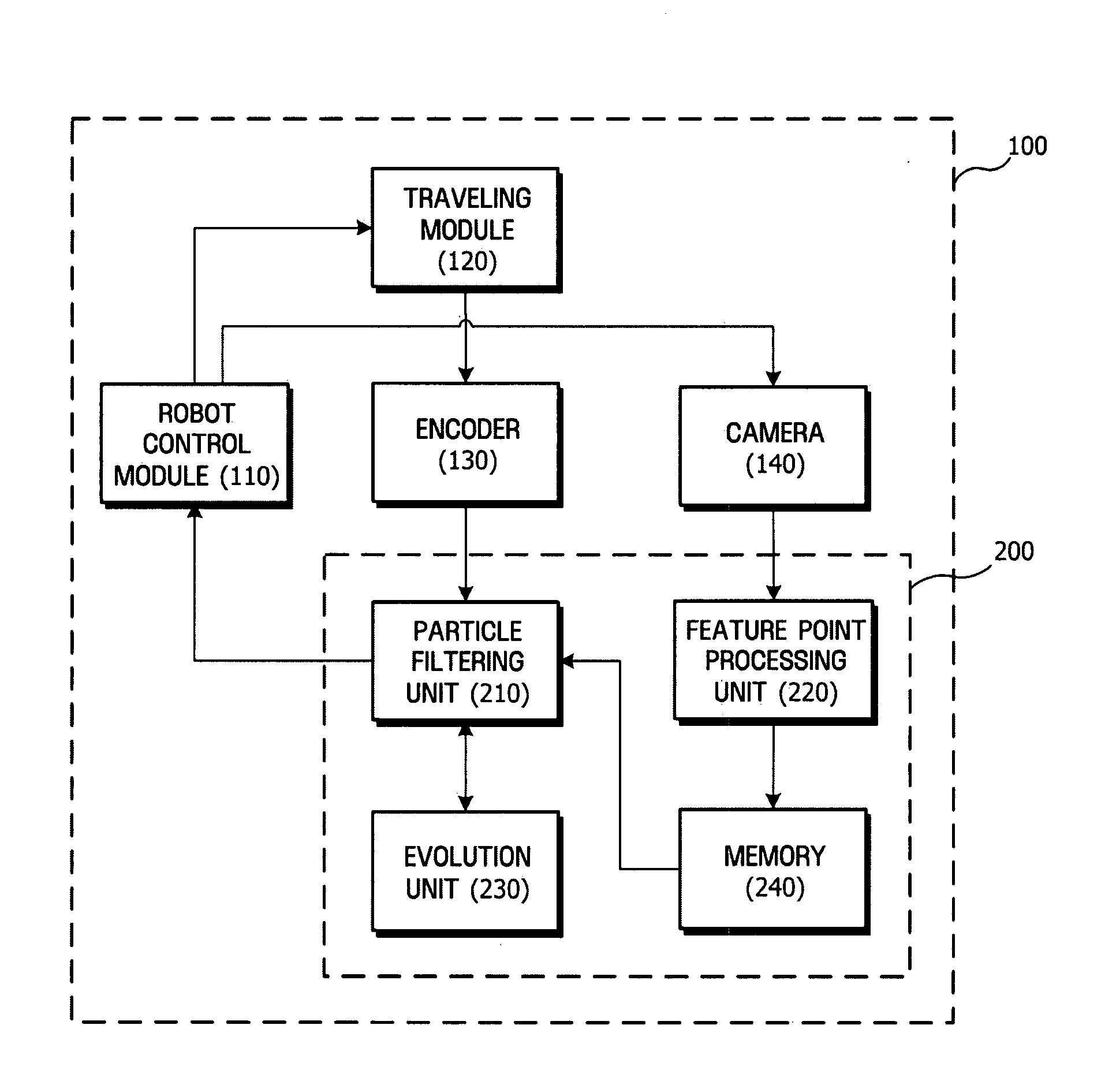

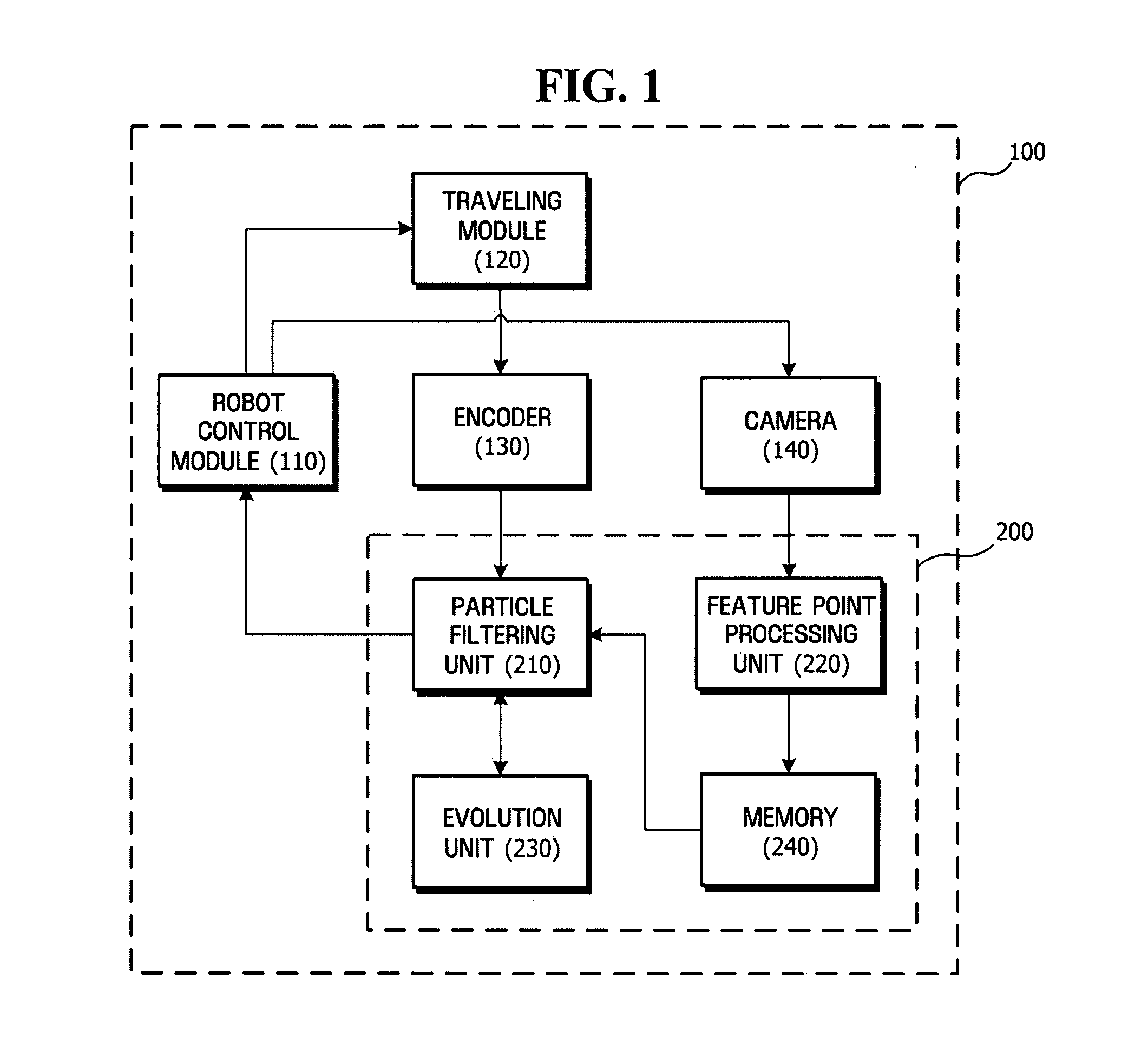

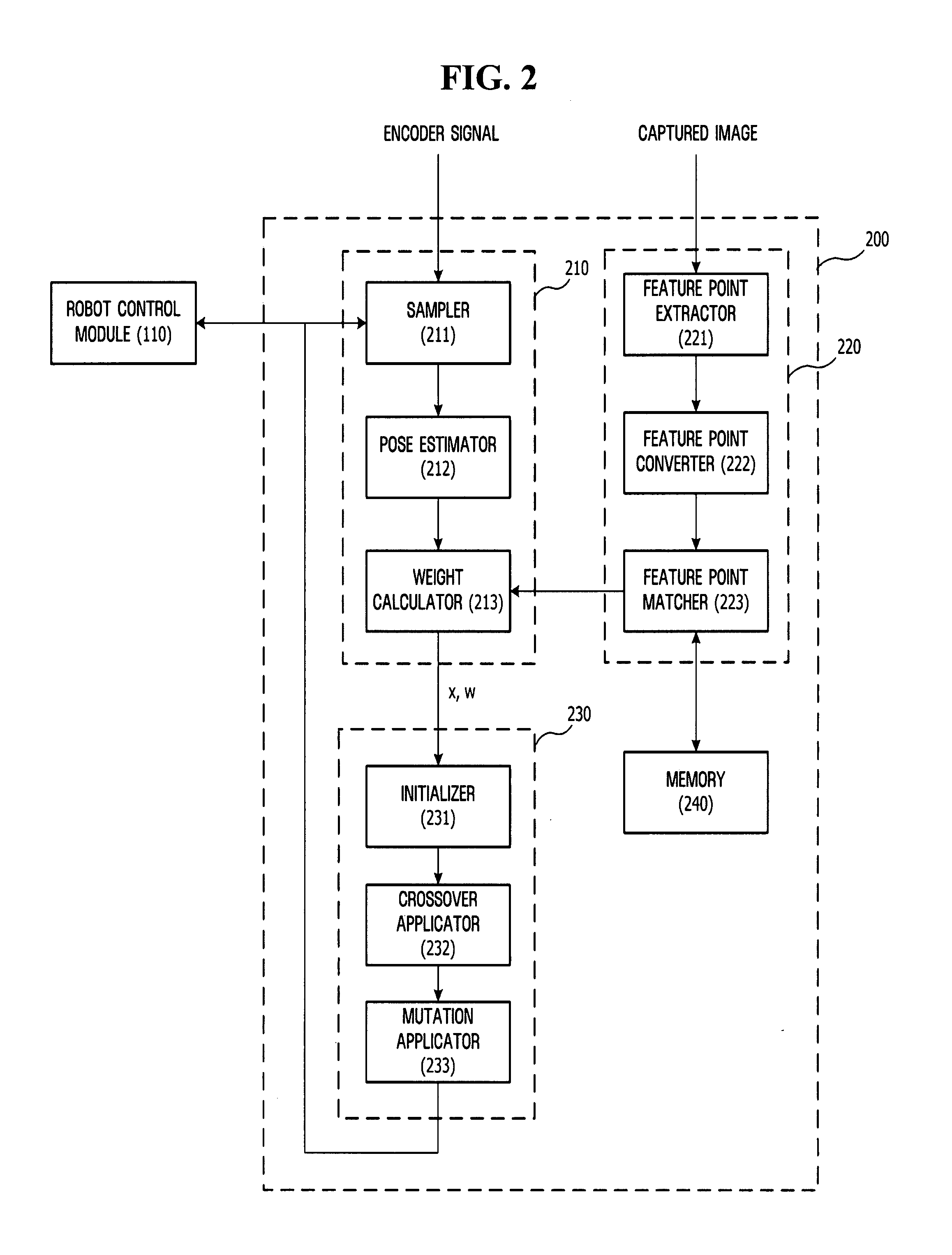

Method, medium, and system estimating pose of mobile robots

Active | US20080065267A1 | Reduce computational complexity; Computational complexity is reduced; Programme-controlled manipulator; Closed circuit television systems; Computation complexity; Simultaneous localisation and mapping

A method, medium, and system reducing the computational complexity of a Simultaneous Localization And Mapping (SLAM) algorithm that can be applied to mobile robots. A system estimating the pose of a mobile robot with the aid of a particle filter using a plurality of particles includes a sensor which detects a variation in the pose of a mobile robot, a particle filter unit which determines the poses and weights of current particles by applying the detected pose variation to previous particles, and an evolution unit which updates the poses and weights of the current particles by applying an evolution algorithm to the poses and weights of the current particles.

Owner:HARBIN INST OF TECH +1
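
The particle filter step described in this abstract (applying a detected pose variation to the previous particles, then determining their weights) can be sketched as follows. This is only a minimal sketch under stated assumptions: the function names `predict` and `reweight`, the Gaussian motion noise, and the use of a hypothetical position fix to score particles are illustrative choices, and the evolution algorithm applied by the evolution unit is not shown.

```python
import math
import random


def predict(particles, delta, noise_std, rng):
    """Apply a sensed pose change (dx, dy, dtheta), given in the robot
    frame, to every particle (x, y, theta), adding Gaussian position noise."""
    dx, dy, dtheta = delta
    moved = []
    for x, y, theta in particles:
        # Rotate the robot-frame displacement into the world frame.
        nx = x + dx * math.cos(theta) - dy * math.sin(theta)
        ny = y + dx * math.sin(theta) + dy * math.cos(theta)
        moved.append((nx + rng.gauss(0.0, noise_std),
                      ny + rng.gauss(0.0, noise_std),
                      theta + dtheta))
    return moved


def reweight(particles, weights, observed_xy, meas_std):
    """Scale each weight by the Gaussian likelihood of an assumed
    position observation, then normalise so the weights sum to 1."""
    ox, oy = observed_xy
    scored = []
    for (x, y, _), w in zip(particles, weights):
        sq_dist = (x - ox) ** 2 + (y - oy) ** 2
        scored.append(w * math.exp(-sq_dist / (2.0 * meas_std ** 2)))
    total = sum(scored)
    if total == 0.0:
        # Every likelihood underflowed: fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [s / total for s in scored]


if __name__ == "__main__":
    rng = random.Random(0)
    particles = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (5.0, 5.0, 0.0)]
    particles = predict(particles, (1.0, 0.0, 0.0), 0.05, rng)
    print(reweight(particles, [1 / 3] * 3, (1.0, 0.0), 0.5))
```

Particles whose predicted position agrees with the observation end up with most of the weight, which is the information an evolution or resampling step would then act on.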

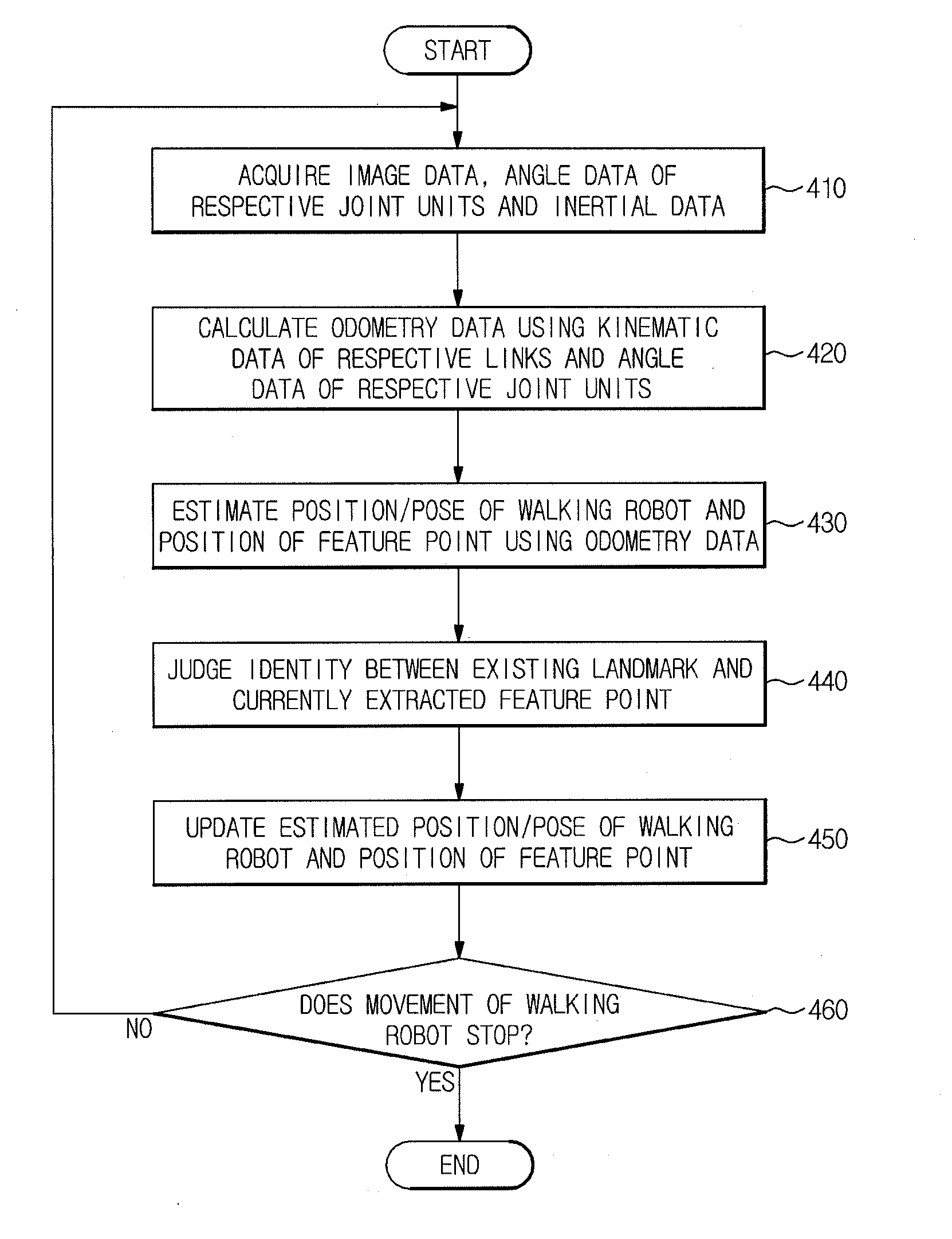

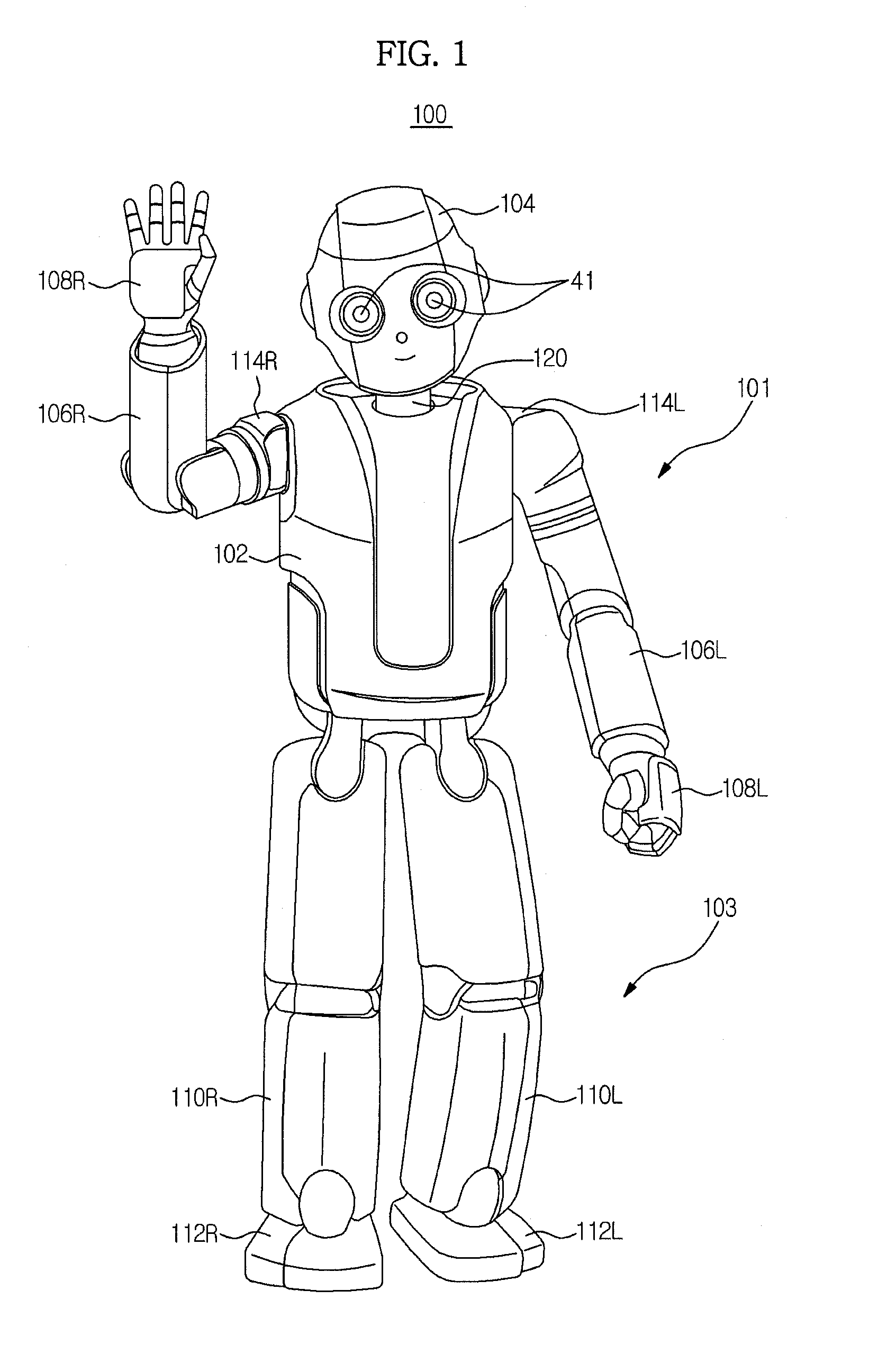

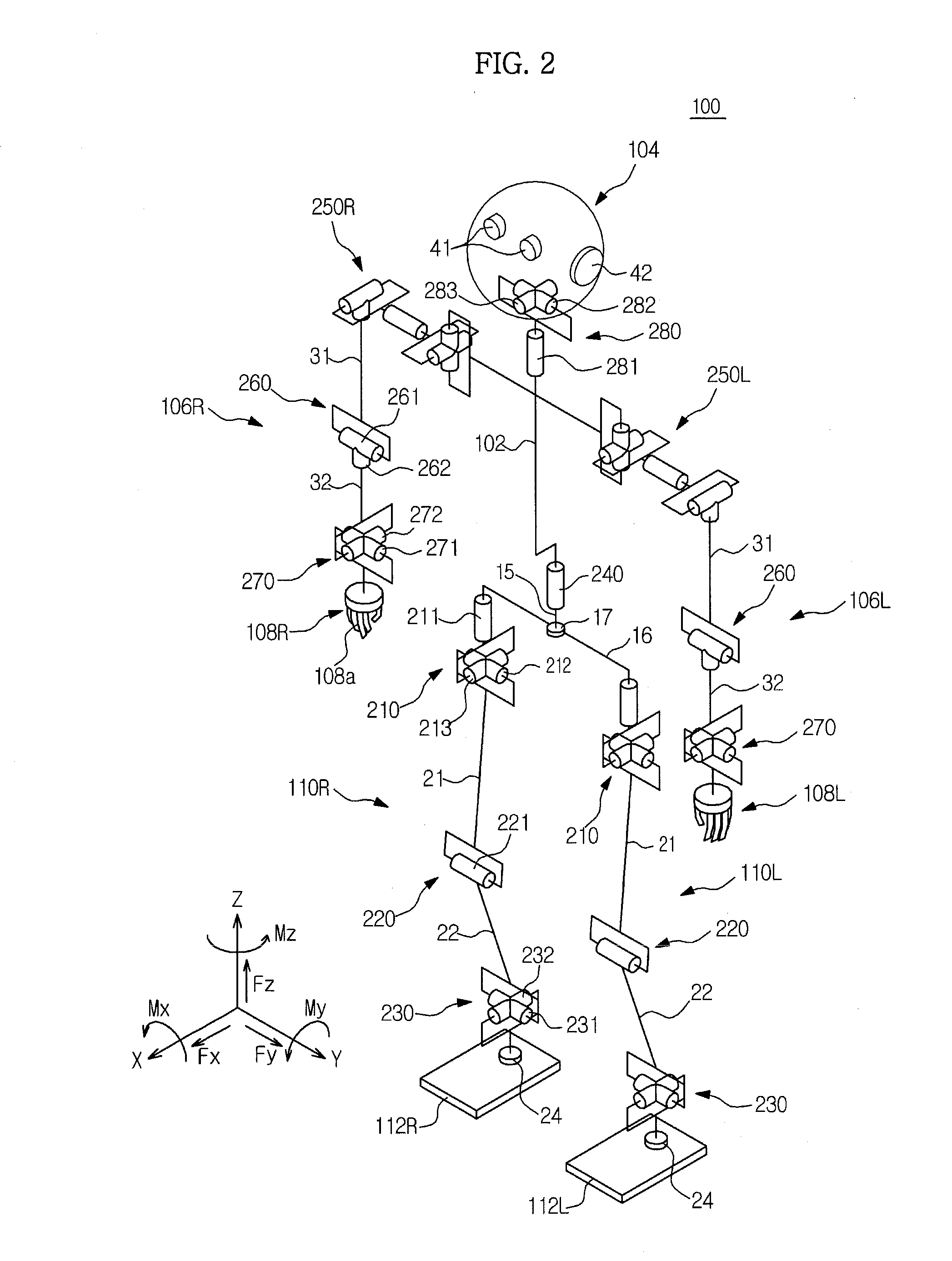

Walking robot and simultaneous localization and mapping method thereof

Active | US20120155775A1 | Improve accuracy; Fast convergence; Programme-controlled manipulator; Image analysis; Odometry; Computer science

A walking robot and a simultaneous localization and mapping method thereof in which odometry data acquired during movement of the walking robot are applied to image-based SLAM technology so as to improve accuracy and convergence of localization of the walking robot. The simultaneous localization and mapping method includes acquiring image data of a space about which the walking robot walks and rotational angle data of rotary joints relating to walking of the walking robot, calculating odometry data using kinematic data of respective links constituting the walking robot and the rotational angle data, and localizing the walking robot and mapping the space about which the walking robot walks using the image data and the odometry data.

Owner:SAMSUNG ELECTRONICS CO LTD

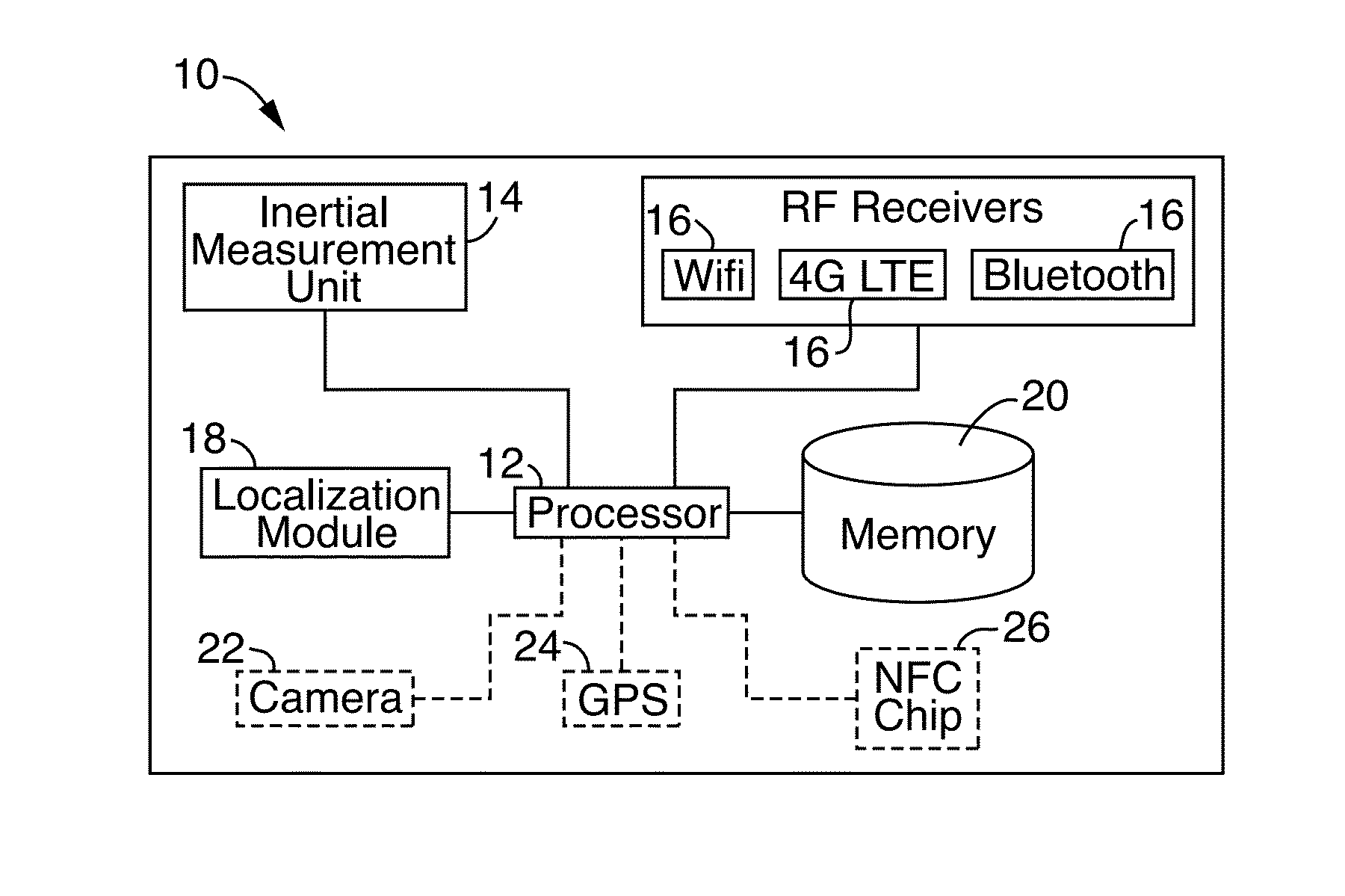

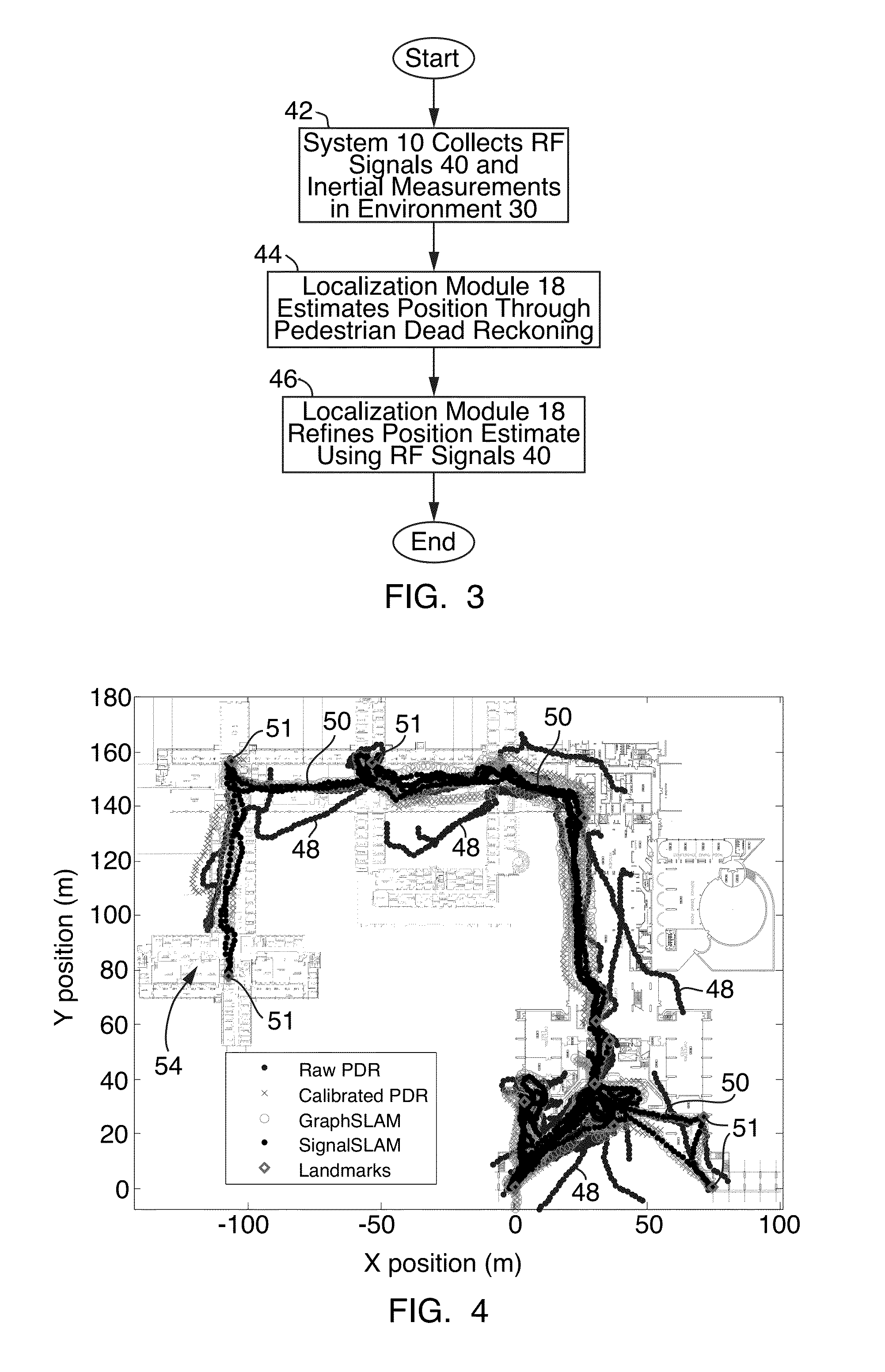

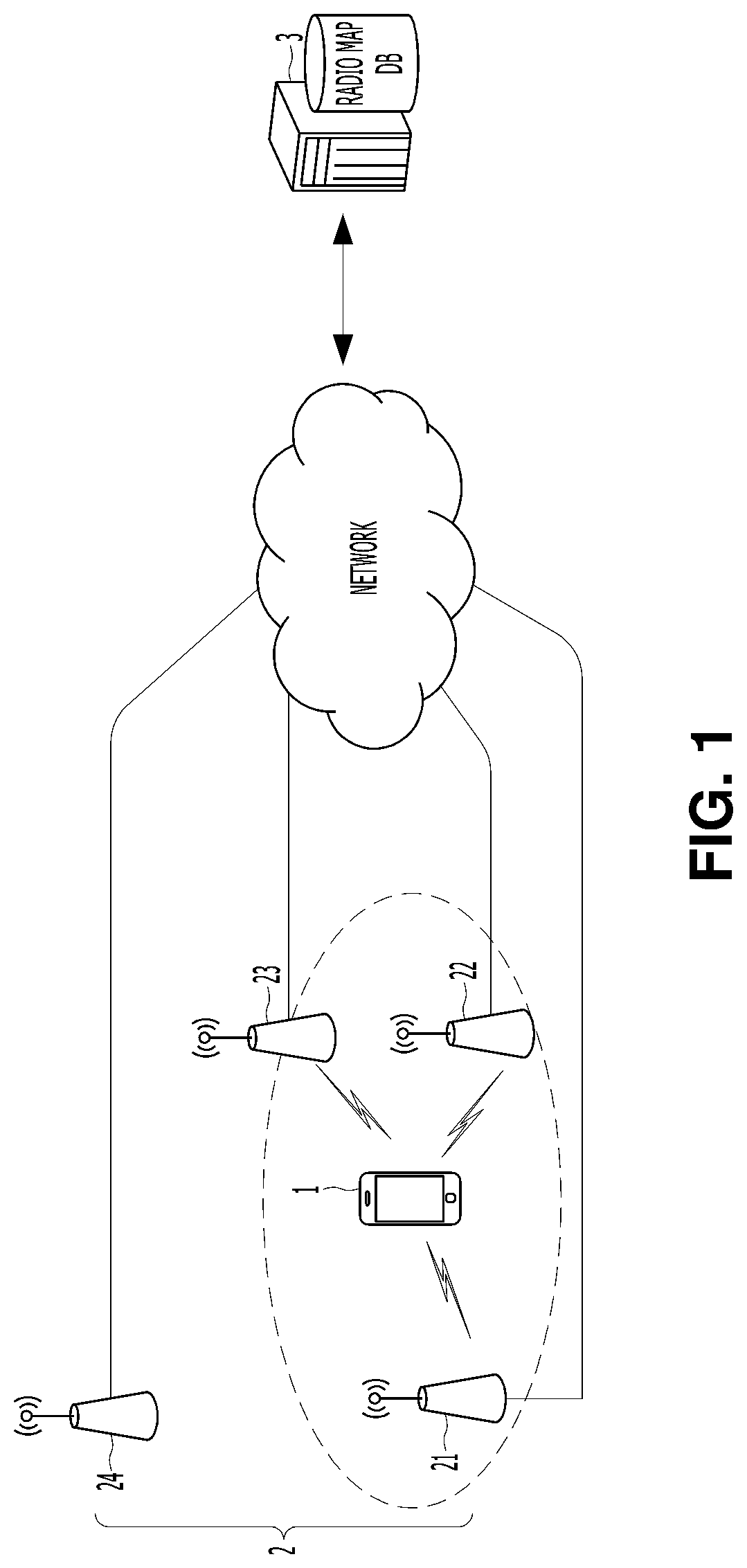

Simultaneous localization and mapping systems and methods

Inactive | US20150119086A1 | Navigation by speed/acceleration measurements; Position fixation; Computer module; Radio frequency

A system and method for providing localization and mapping within an environment includes at least one mobile device adapted to be moved within the environment. The at least one mobile device includes an inertial measurement unit and at least one radio frequency receiver. The at least one mobile device detects inertial measurements through the inertial measurement unit and values of signal strength through the at least one radio frequency receiver. The system and method also includes a localization module that provides simultaneous localization and mapping of the at least one mobile device within the environment based at least on the inertial measurements detected by the inertial measurement unit and the values of signal strength detected by the at least one radio frequency receiver.

Owner:WSOU INVESTMENTS LLC

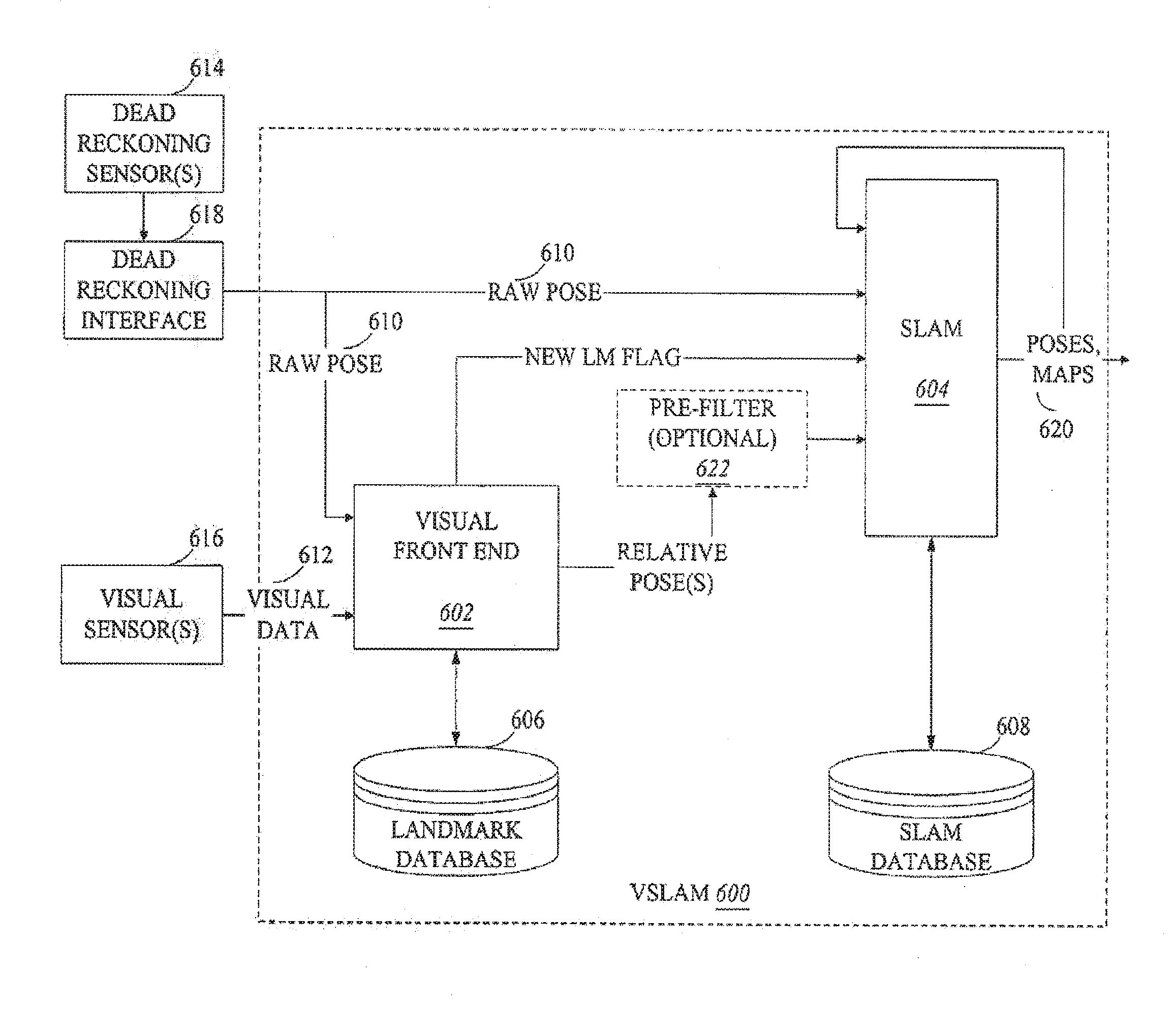

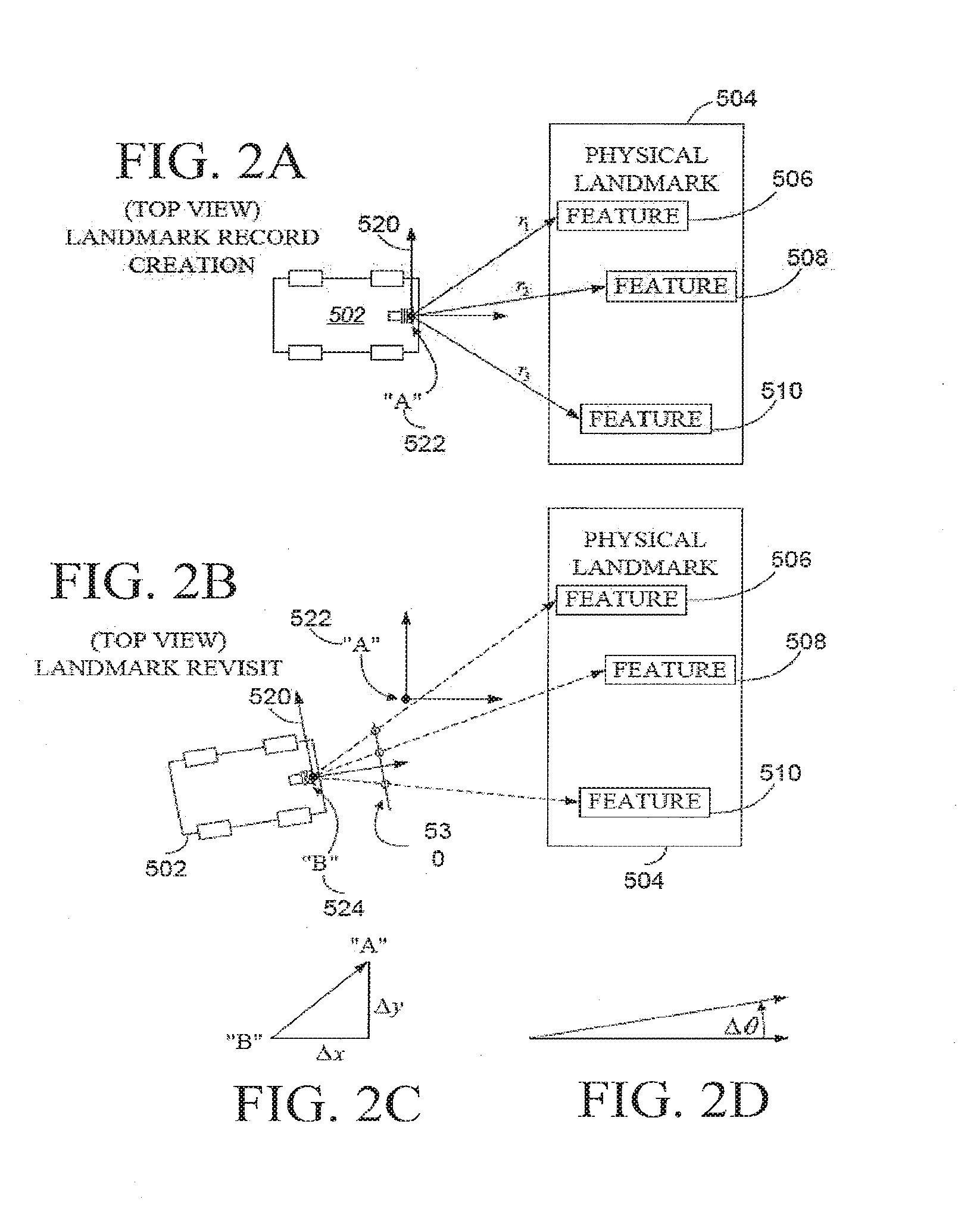

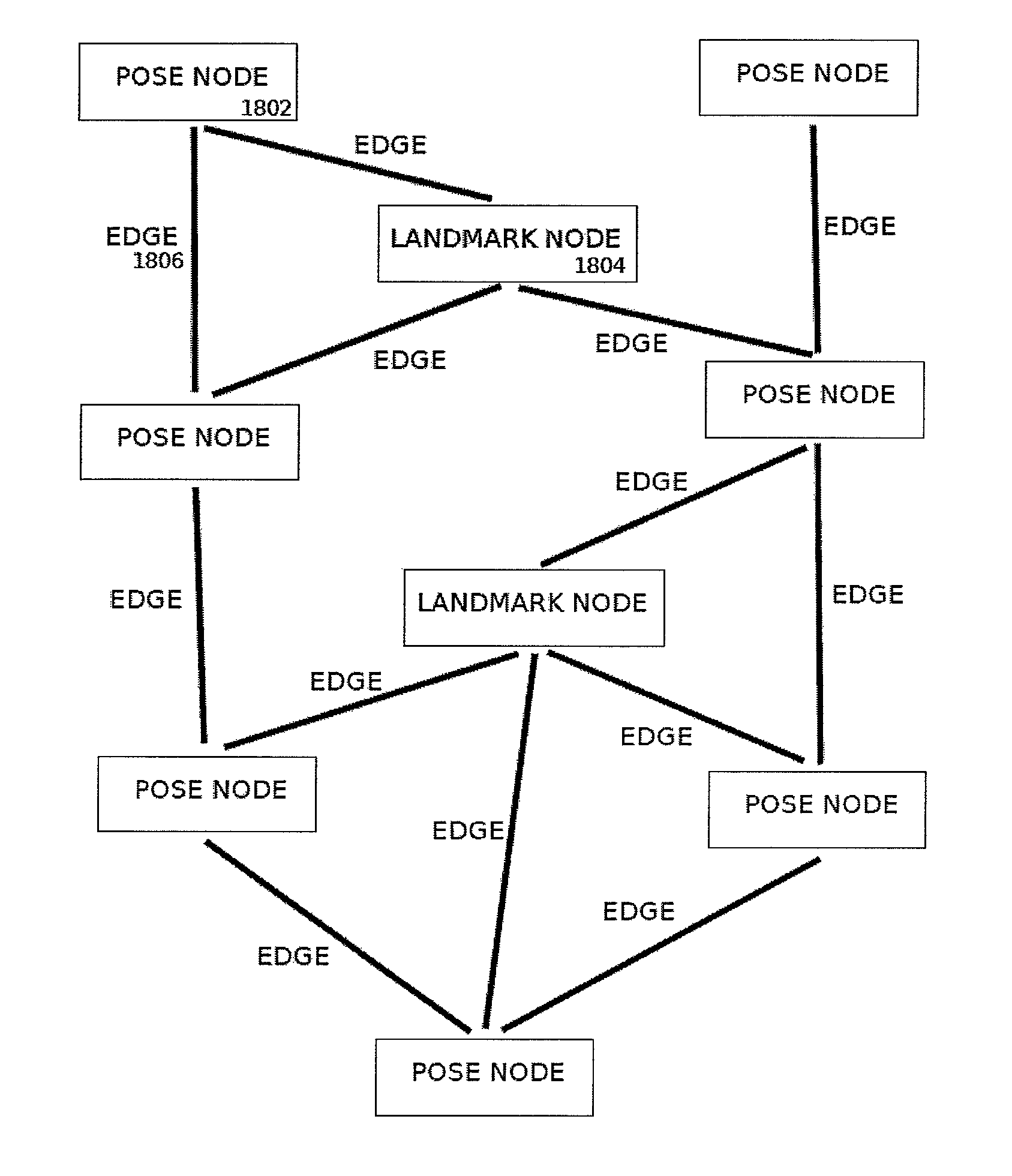

Systems and methods for vslam optimization

ActiveUS20160154408A1Database queryingCharacter and pattern recognitionLandmark matchingVisual technology

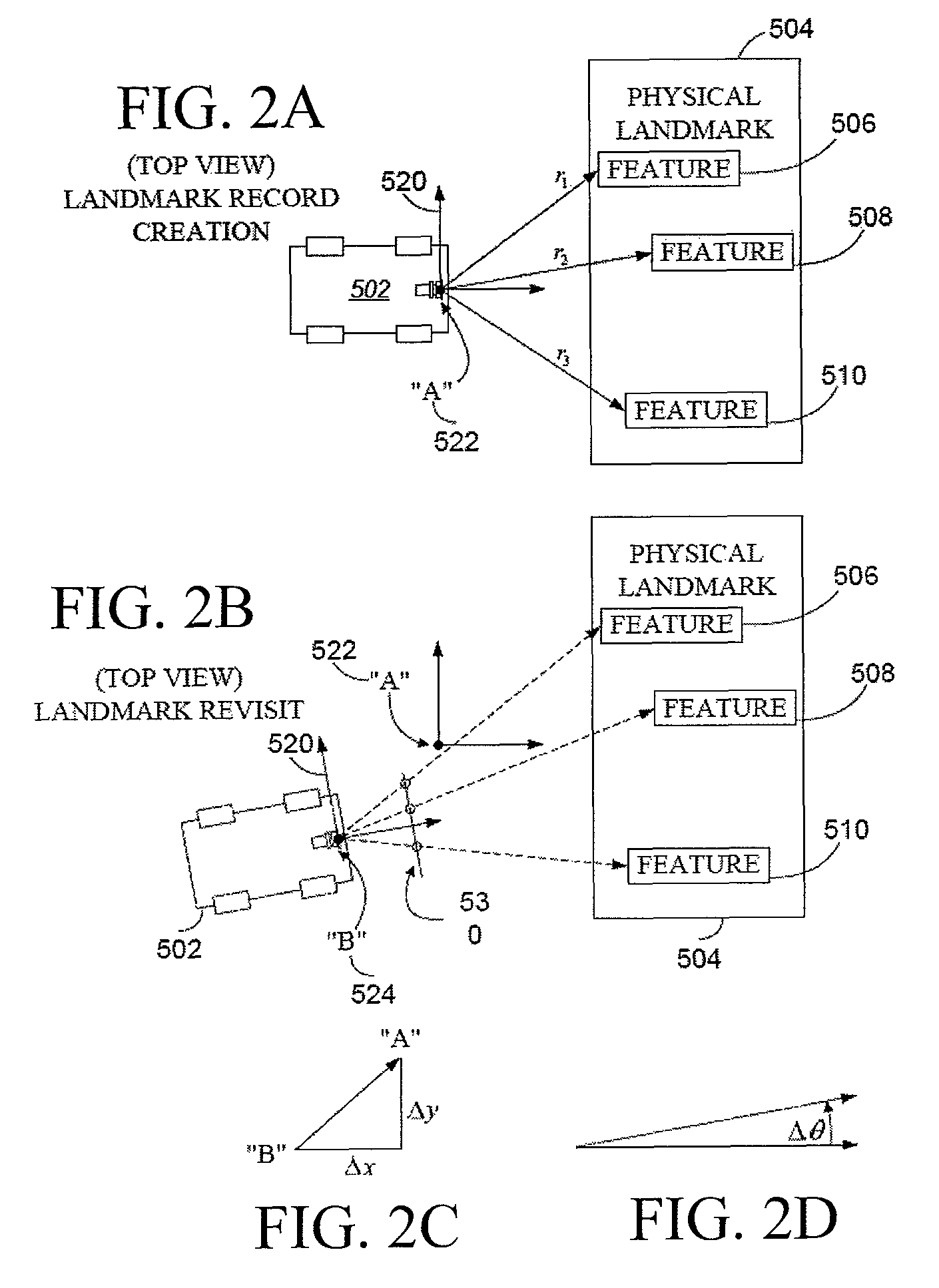

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments, such as environments in which people move. Certain embodiments contemplate improvements to the front-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate a novel landmark matching process. Certain of these embodiments also contemplate a novel landmark creation process. Certain embodiments contemplate improvements to the back-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate algorithms for modifying the SLAM graph in real-time to achieve a more efficient structure.

Owner:IROBOT CORP

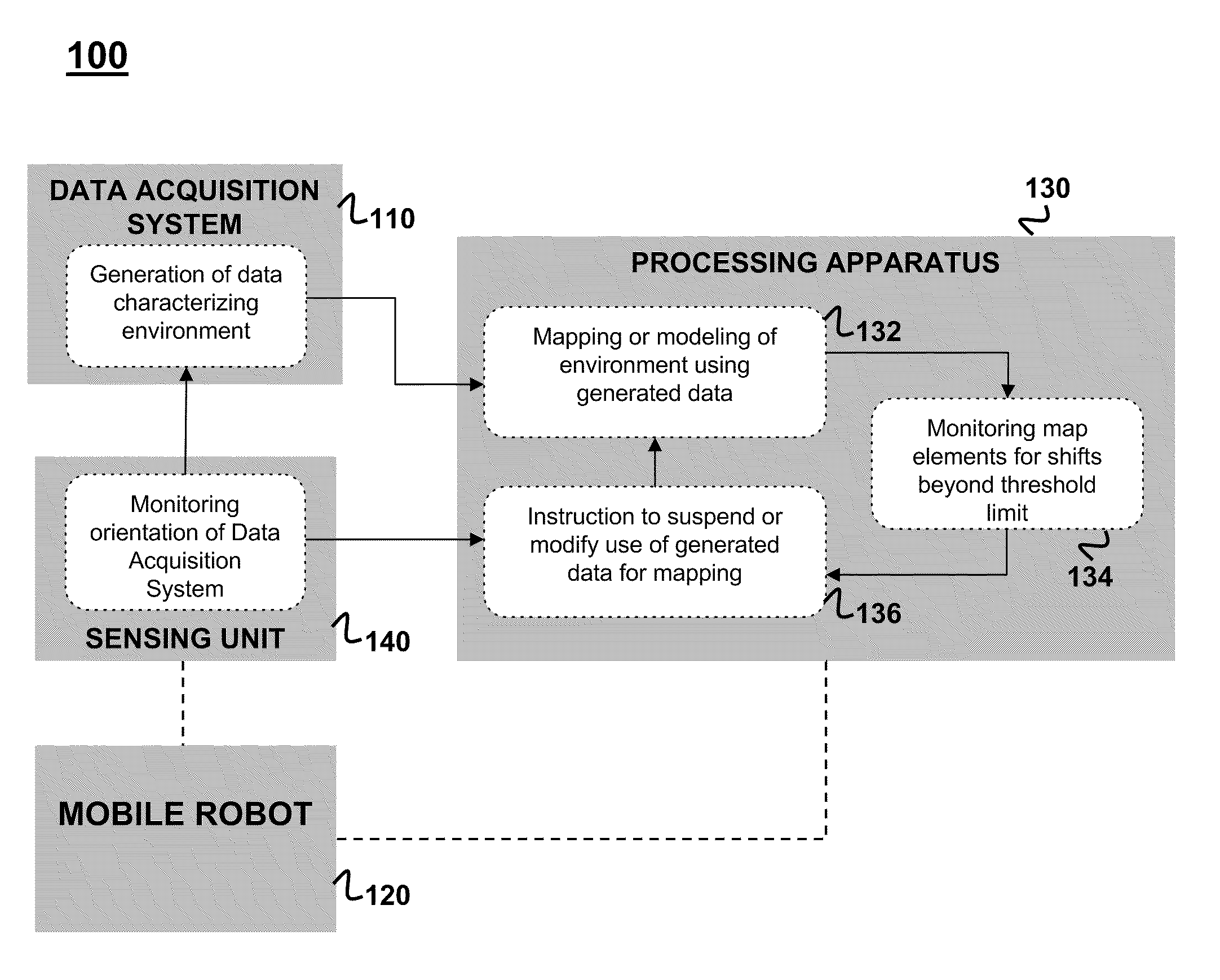

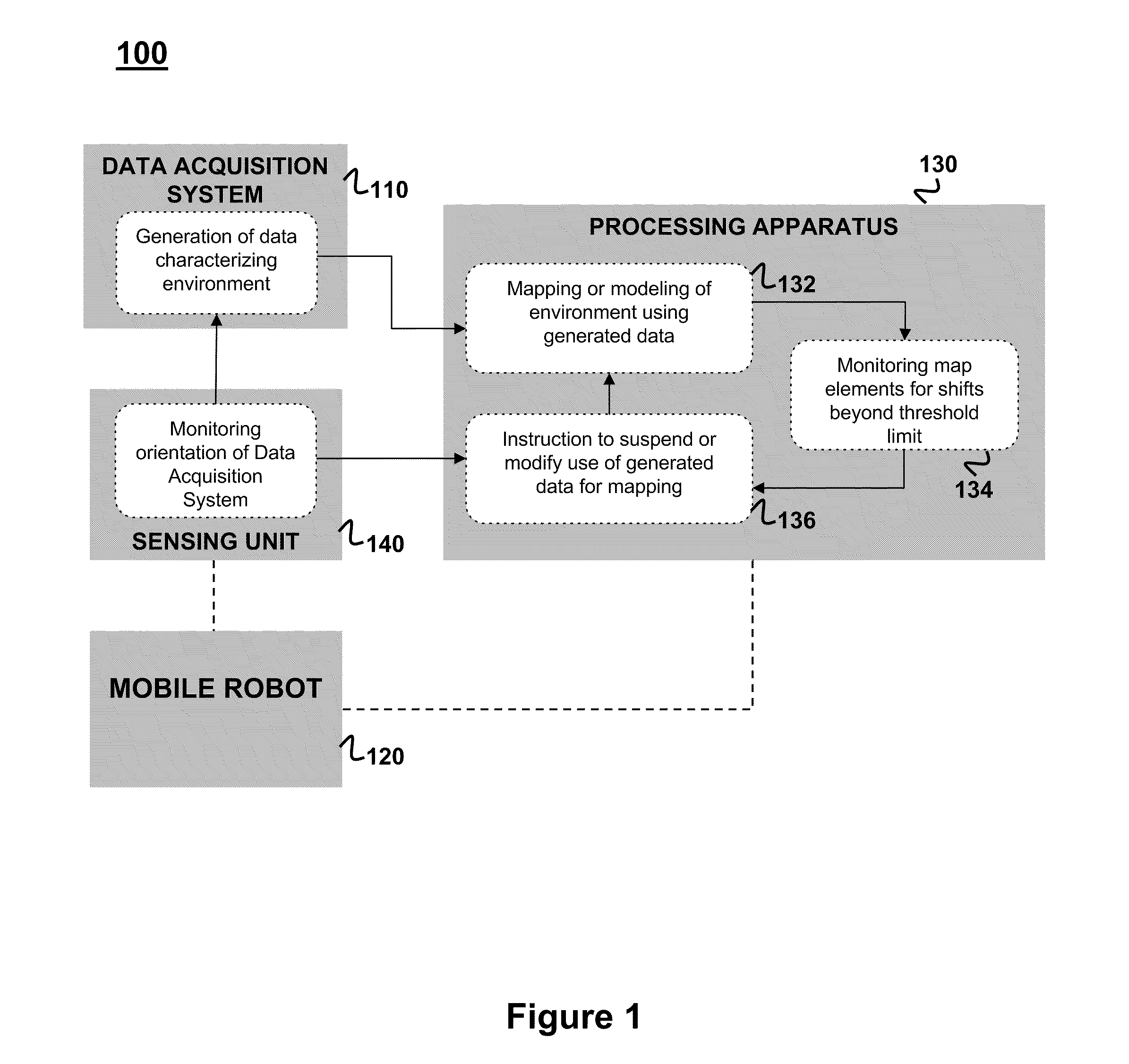

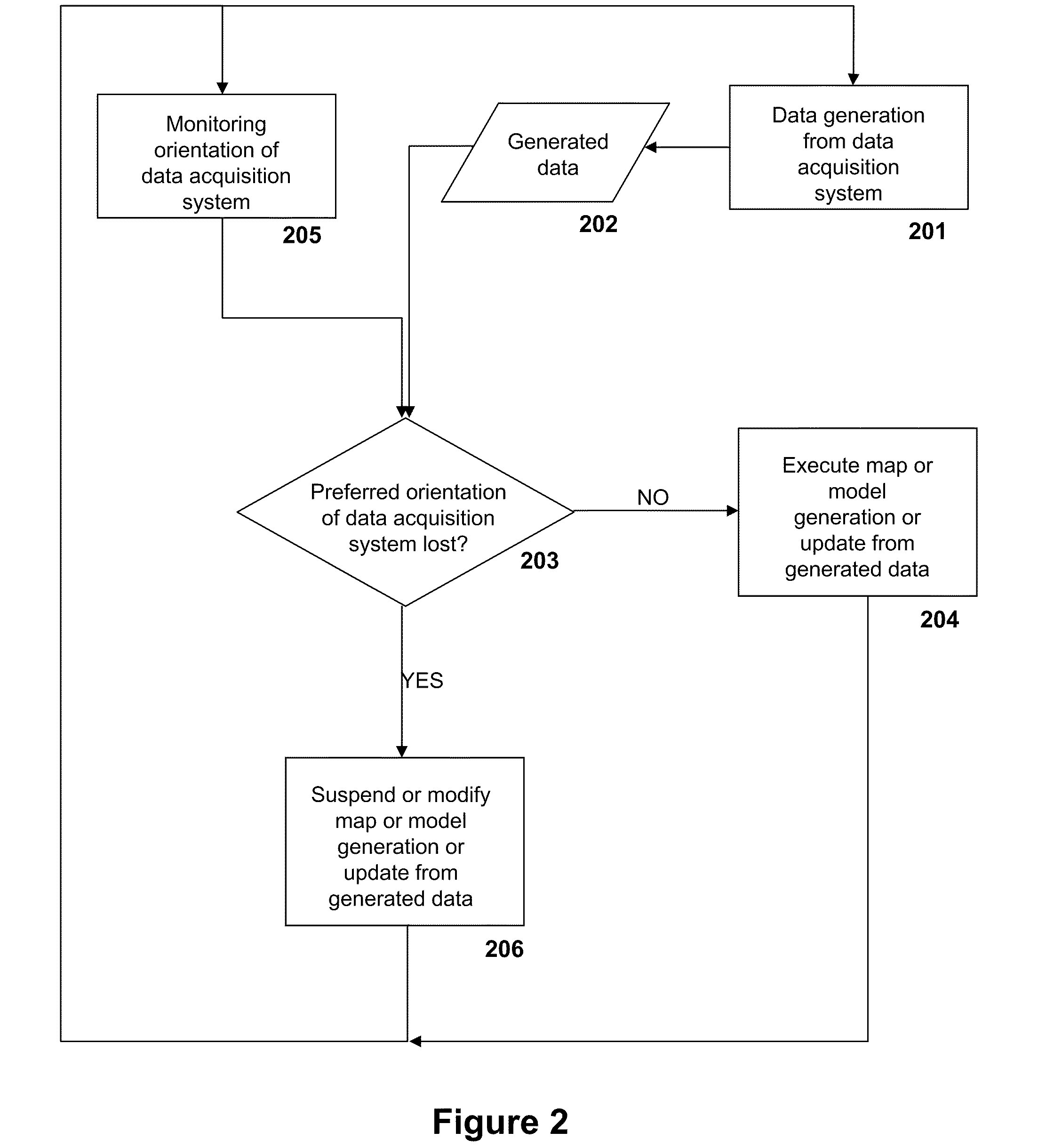

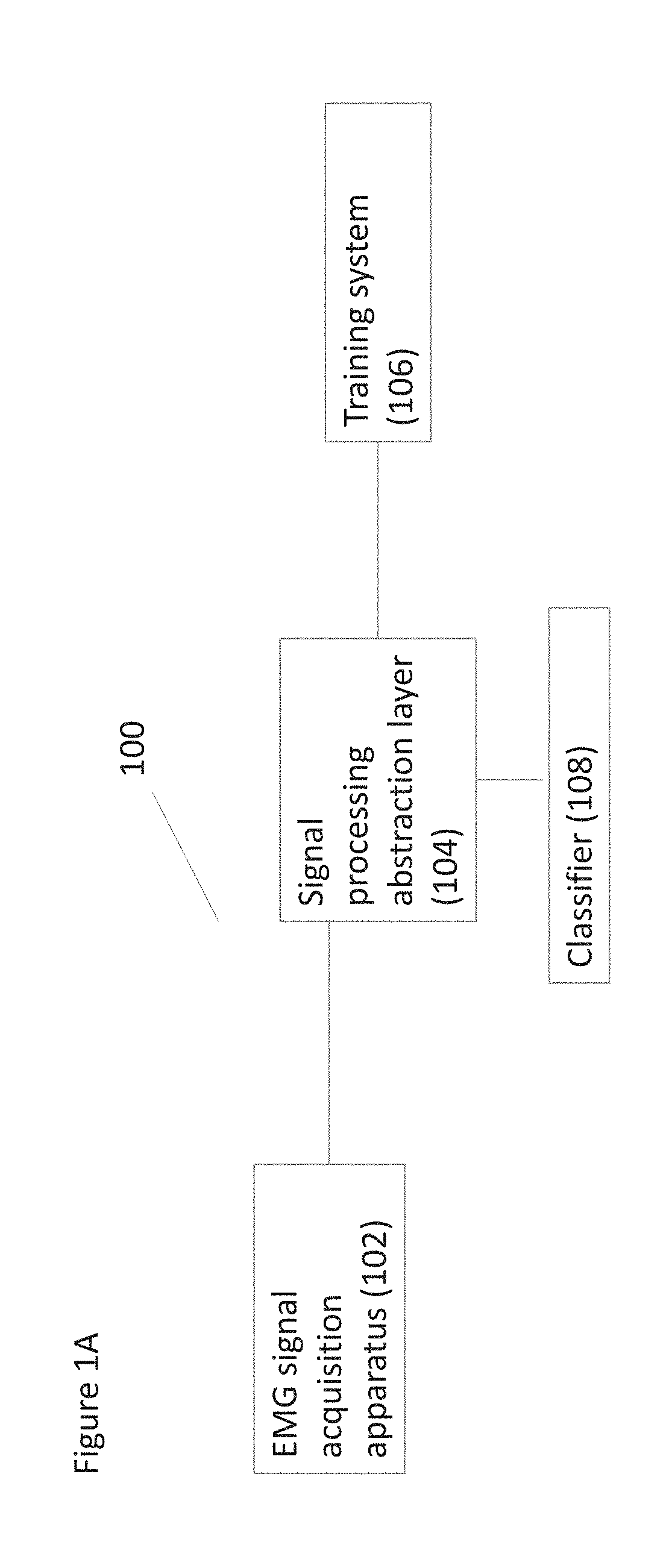

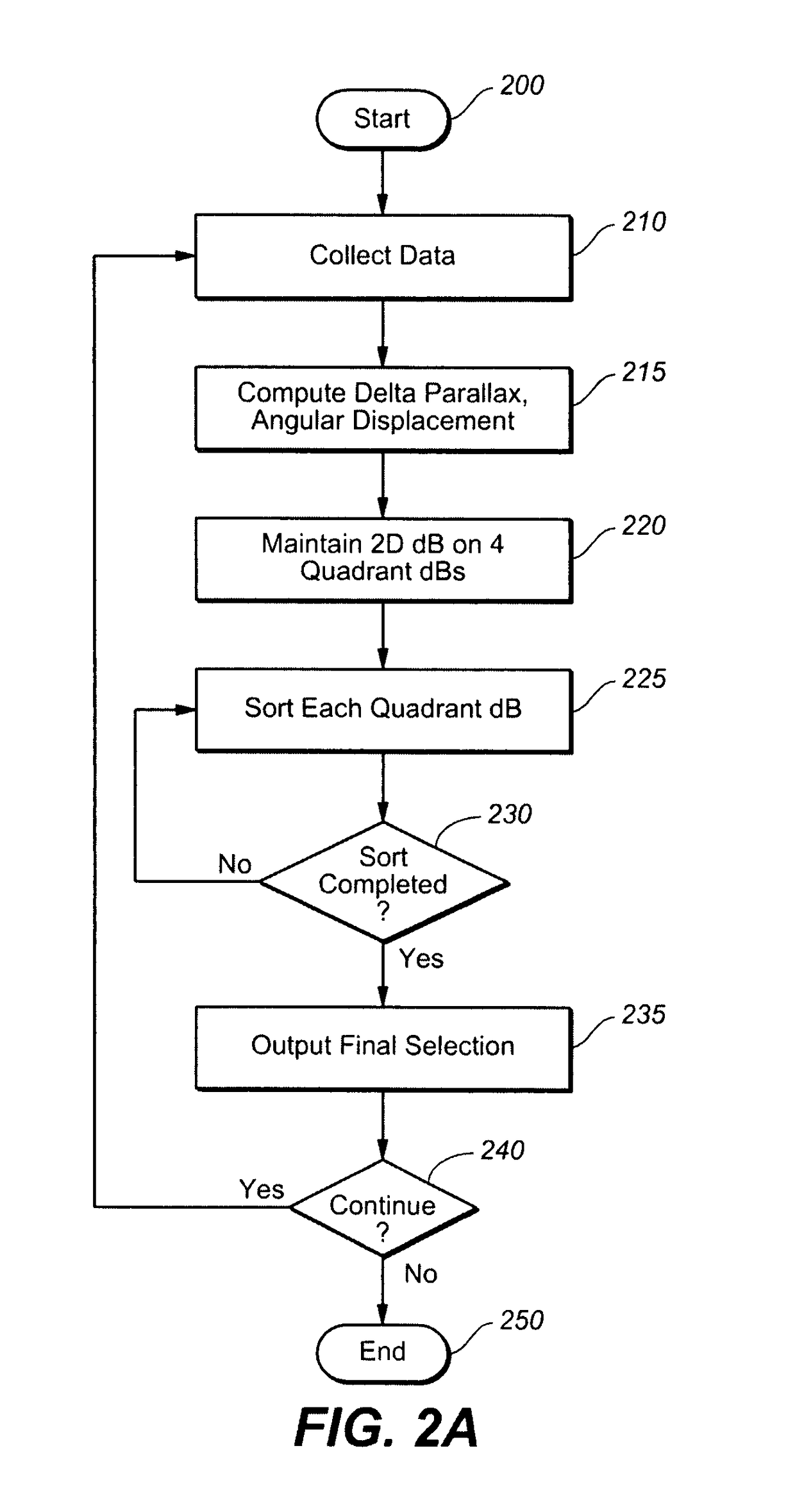

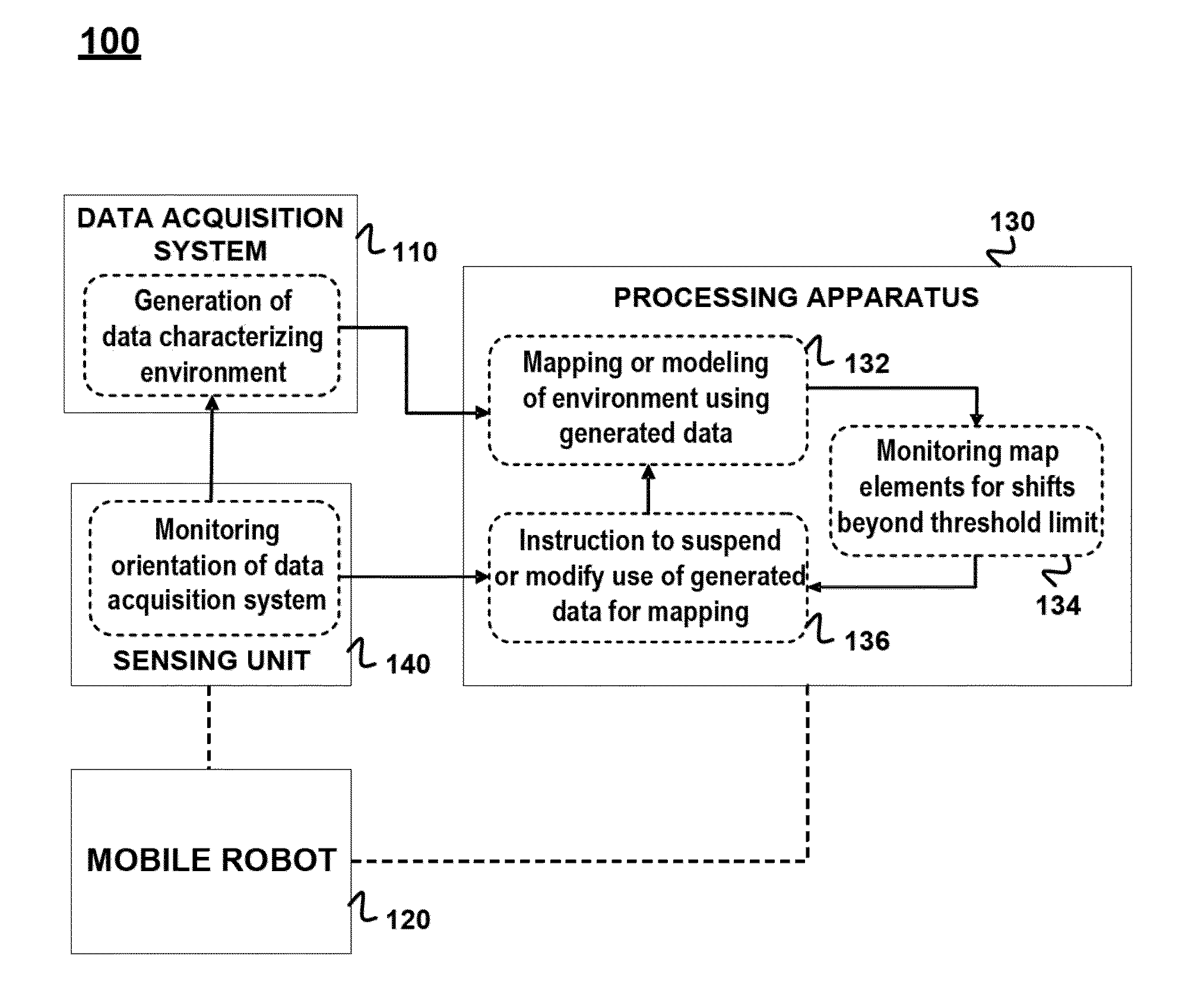

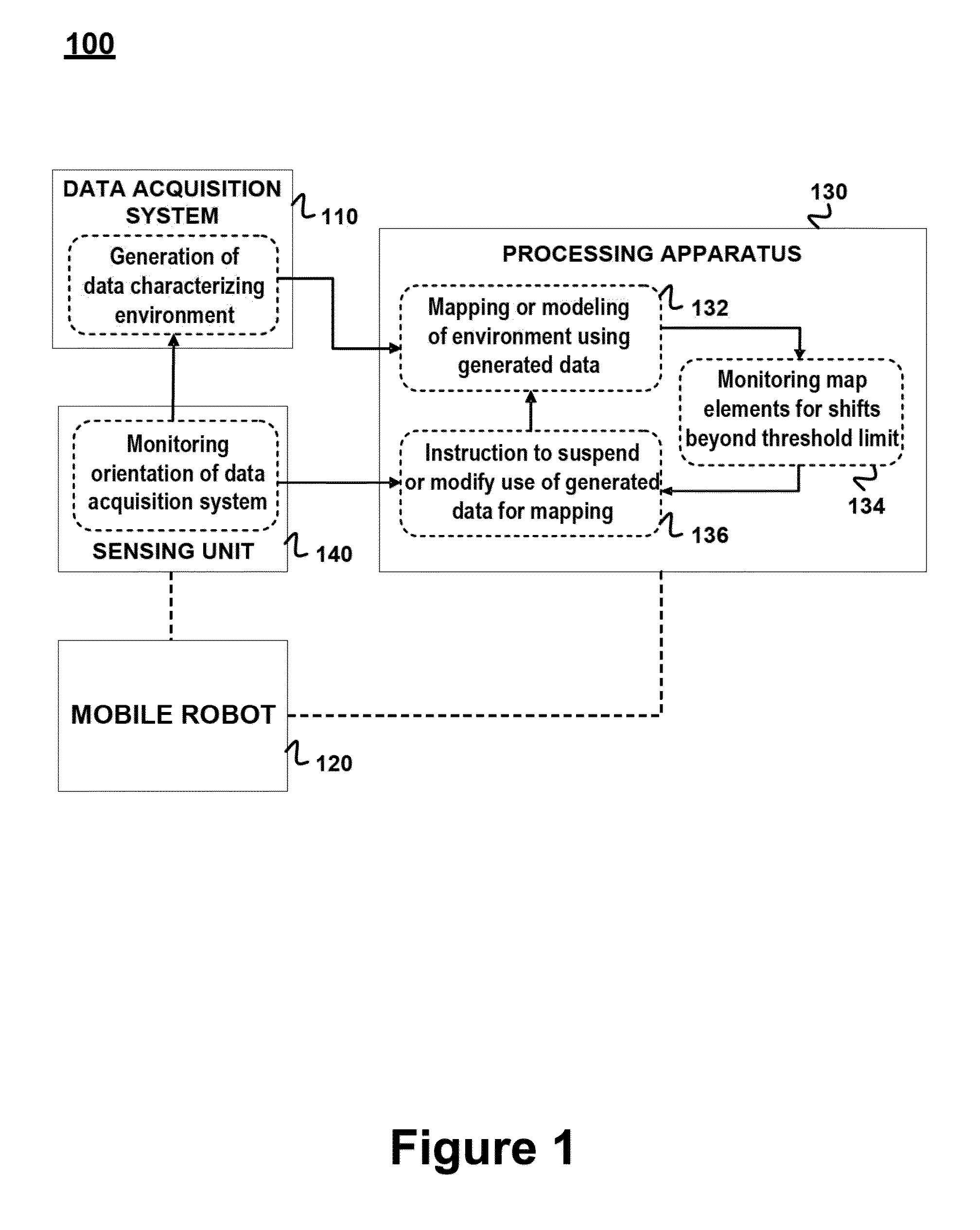

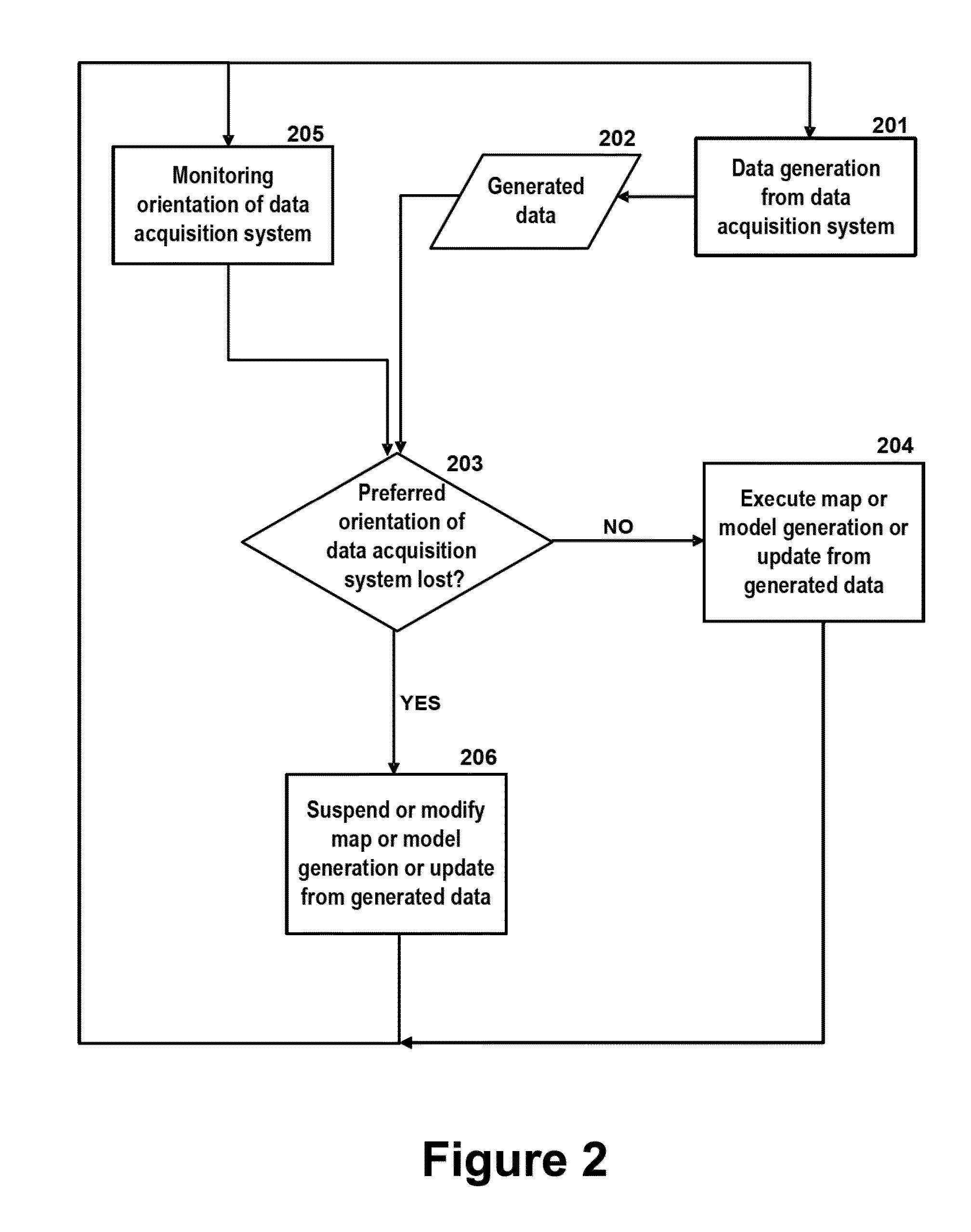

Method and apparatus for simultaneous localization and mapping of mobile robot environment

Inactive | US20110082585A1 | Maintain efficiency; Programme-controlled manipulator; Navigation instruments; Cell based; Mobile device

Techniques that optimize performance of simultaneous localization and mapping (SLAM) processes for mobile devices, typically a mobile robot. In one embodiment, erroneous particles are introduced to the particle filtering process of localization. Monitoring the weights of the erroneous particles relative to the particles maintained for SLAM provides a verification that the robot is localized and detection that it is no longer localized. In another embodiment, cell-based grid mapping of a mobile robot's environment also monitors cells for changes in their probability of occupancy. Cells with a changing occupancy probability are marked as dynamic and updating of such cells to the map is suspended or modified until their individual occupancy probabilities have stabilized. In another embodiment, mapping is suspended when it is determined that the device is acquiring data regarding its physical environment in such a way that use of the data for mapping will incorporate distortions into the map, as for example when the robotic device is tilted.

Owner:NEATO ROBOTICS
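
The cell-monitoring idea in this abstract (cells whose occupancy probability keeps changing are marked dynamic, and updates to the map are suspended until the probability stabilises) can be sketched with a standard log-odds occupancy cell. The class name `TrackedCell`, the log-odds increments, the window length, and the spread threshold are all assumptions for illustration; the abstract does not give the actual criteria.

```python
import math
from collections import deque


class TrackedCell:
    """One grid cell with a running occupancy belief and a committed map value."""

    def __init__(self, window=4, threshold=0.15):
        self.log_odds = 0.0                 # 0.0 corresponds to p = 0.5
        self.recent = deque(maxlen=window)  # last few occupancy probabilities
        self.threshold = threshold          # allowed spread before "dynamic"
        self.map_value = 0.5                # value currently written to the map

    def is_dynamic(self):
        """A cell counts as dynamic while its recent probabilities vary widely."""
        if not self.recent:
            return False
        return max(self.recent) - min(self.recent) > self.threshold

    def observe(self, hit, l_hit=0.85, l_miss=-0.85):
        """Fold in one sensor reading; commit to the map only when stable."""
        self.log_odds += l_hit if hit else l_miss
        p = 1.0 / (1.0 + math.exp(-self.log_odds))
        self.recent.append(p)
        if not self.is_dynamic():
            self.map_value = p
        return p


if __name__ == "__main__":
    door = TrackedCell()
    for step in range(6):
        door.observe(hit=(step % 2 == 0))   # alternately occupied and free
    print(door.is_dynamic(), round(door.map_value, 3))
```

In this sketch a cell that is repeatedly seen as occupied settles and keeps updating the map, while a cell that alternates between occupied and free stays flagged as dynamic and keeps its last committed value.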

Simultaneous localization and mapping using multiple view feature descriptors

Inactive | US7831094B2 | Efficiently build necessary feature descriptor; Reliable correspondence; Three-dimensional object recognition; Kalman filter; Kernel principal component analysis

Owner:HONDA MOTOR CO LTD

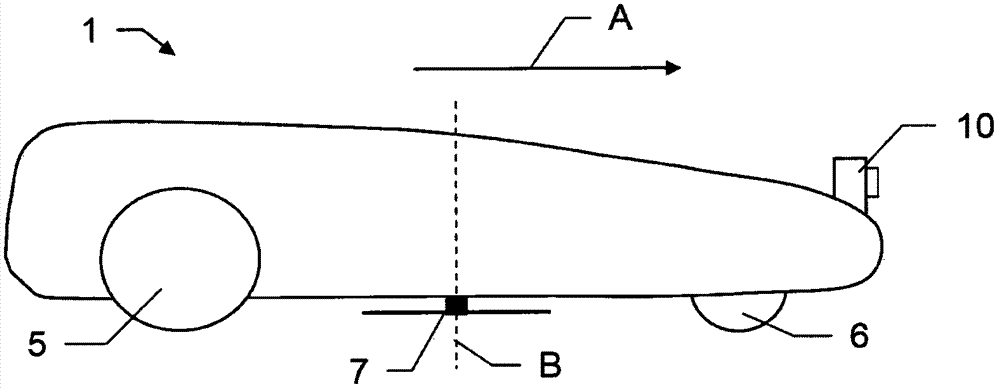

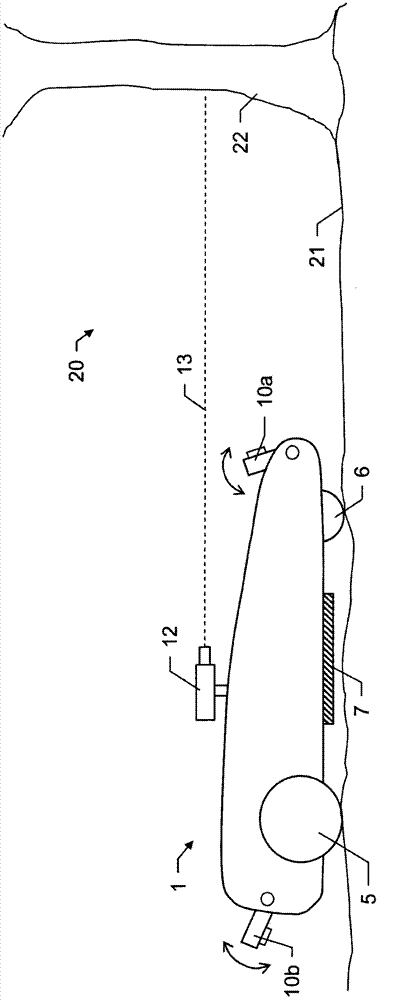

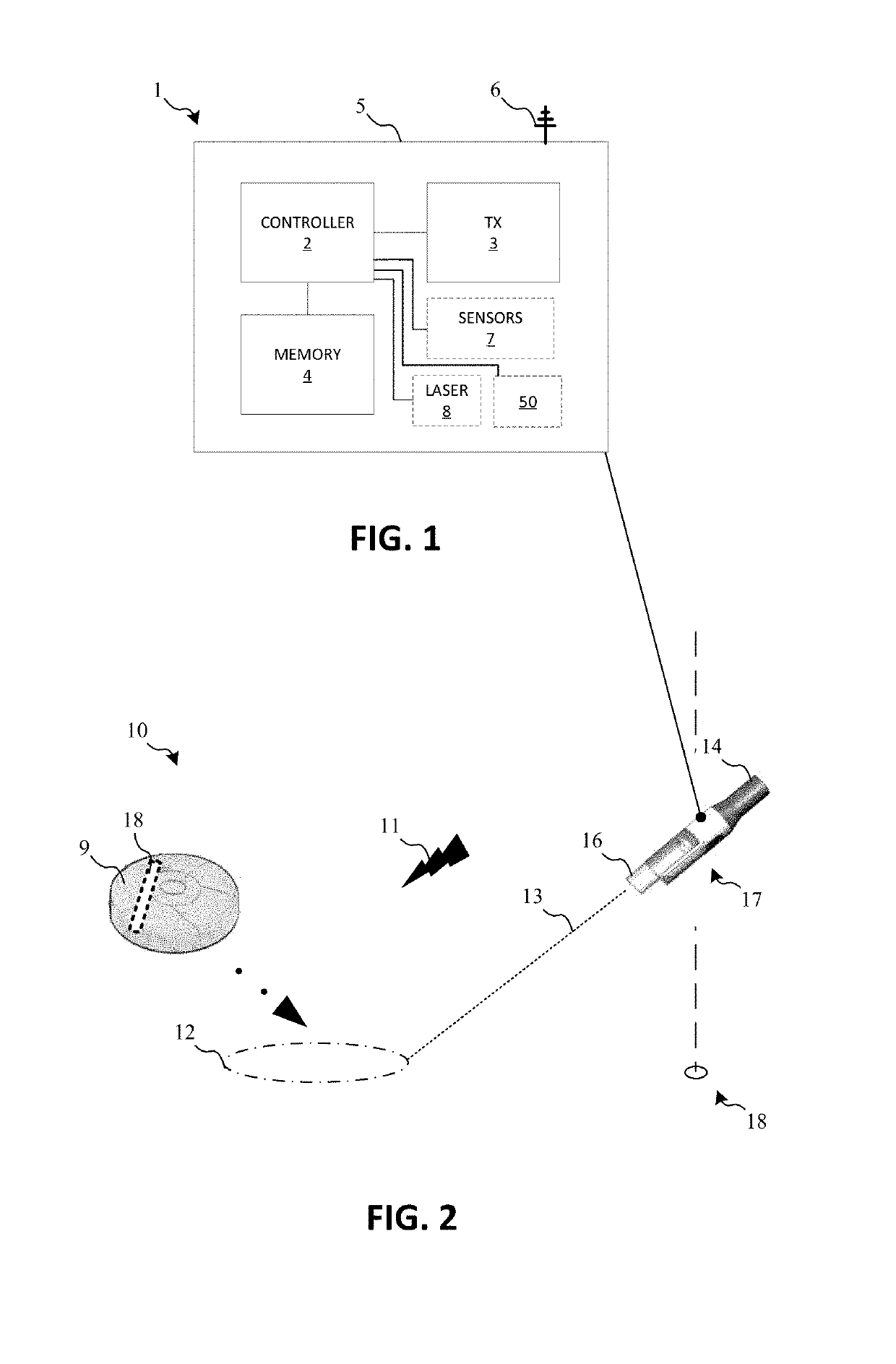

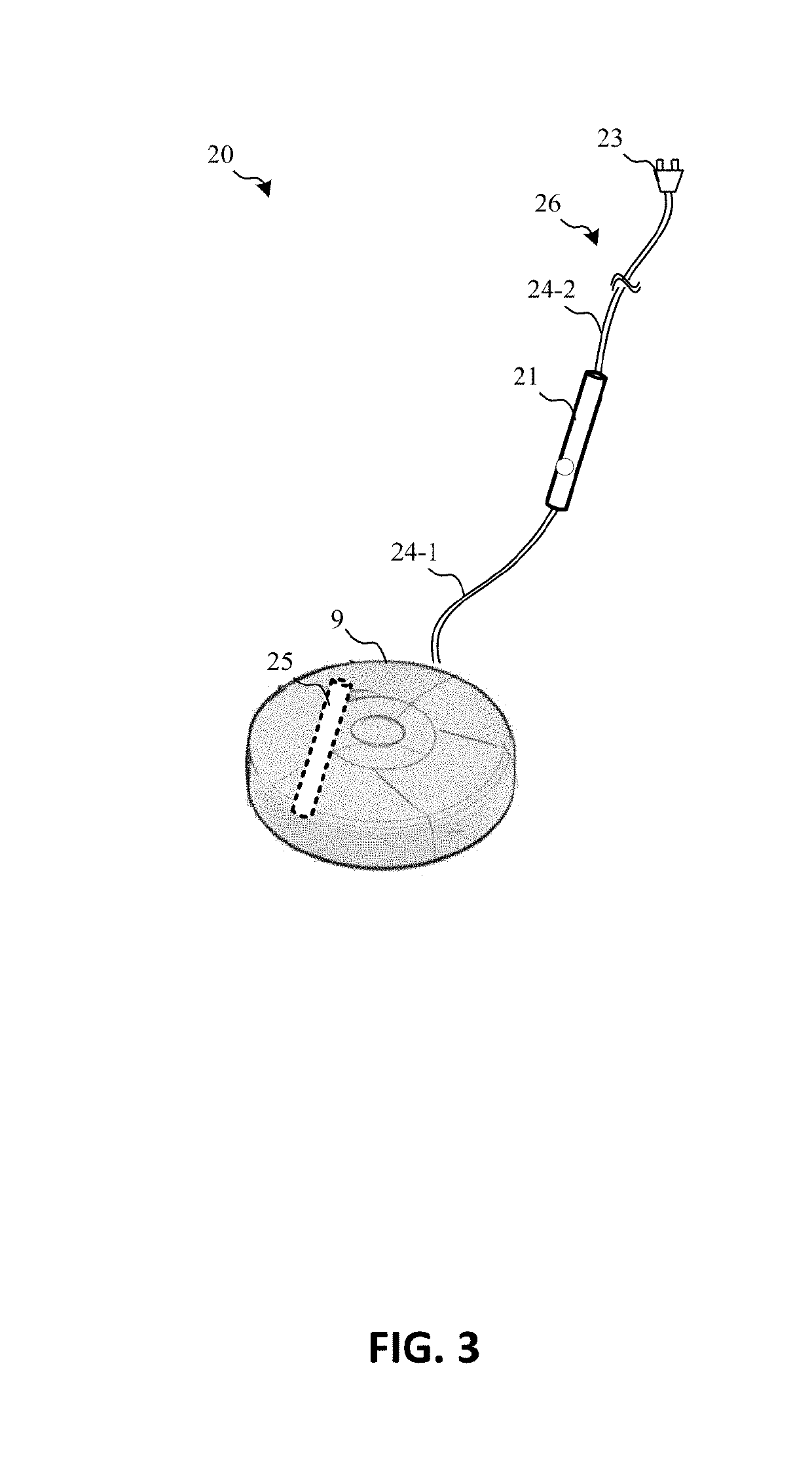

Autonomous gardening vehicle with camera

Method for generating scaled terrain information with an unmanned autonomous gardening vehicle (1), the gardening vehicle (1) comprising a driving unit comprising a set of at least one drive wheel (5) and a motor connected to the at least one drive wheel for providing movability of the gardening vehicle (1), a gardening-tool (7) and a camera (10a-b) for capturing images of a terrain, the camera (10a-b) being positioned and aligned in a known manner relative to the gardening vehicle (1). In the context of the method, the gardening vehicle (1) is moved in the terrain whilst concurrently generating a set of image data by capturing an image series of terrain sections so that at least two (successive) images of the image series cover a number of identical points in the terrain, wherein the terrain sections are defined by a viewing area of the camera (10a-b) at respective positions of the camera while moving. Furthermore, a simultaneous localisation and mapping (SLAM) algorithm is applied to the set of image data and thereby terrain data is derived, the terrain data comprising a point cloud representing the captured terrain and position data relating to a relative position of the gardening vehicle (1) in the terrain. Additionally, the point cloud is scaled by applying absolute scale information to the terrain data, particularly wherein the position data is scaled.

Owner:HEXAGON TECH CENT GMBH

Scanner vis

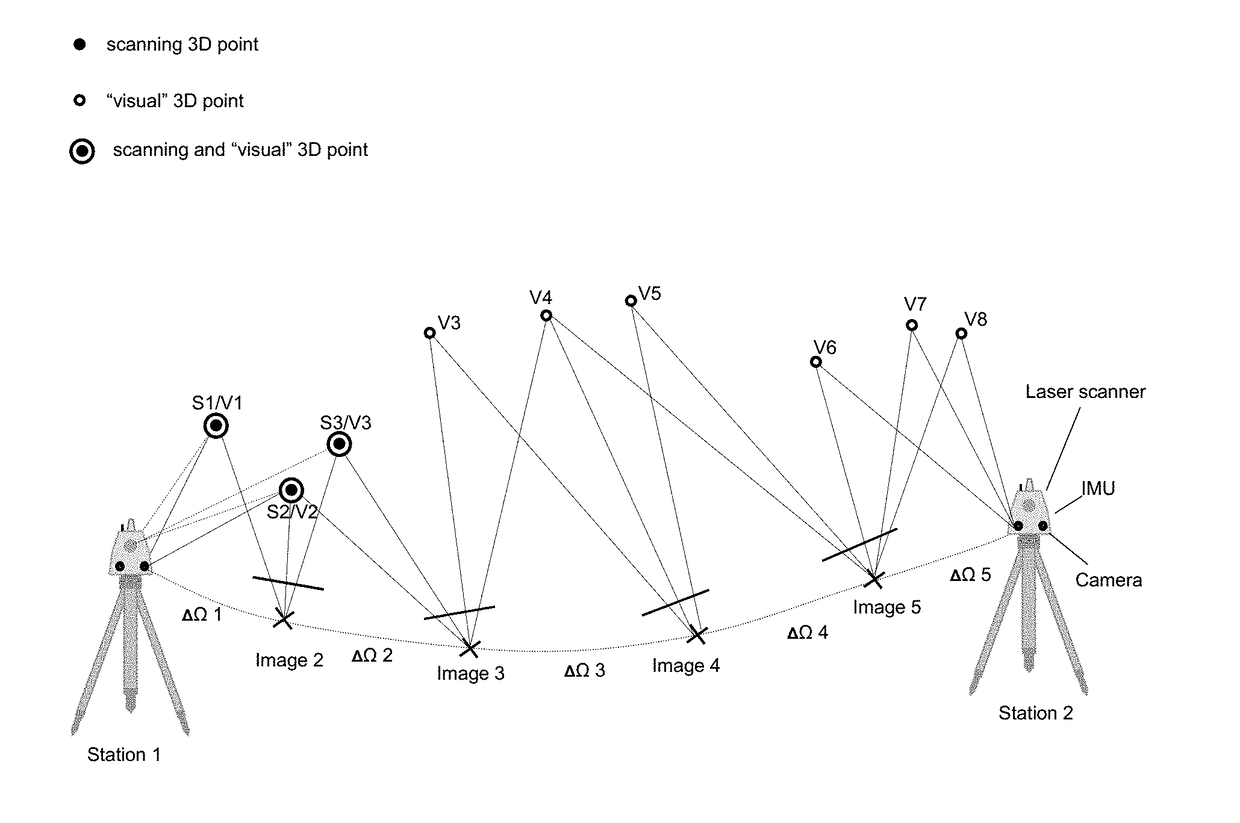

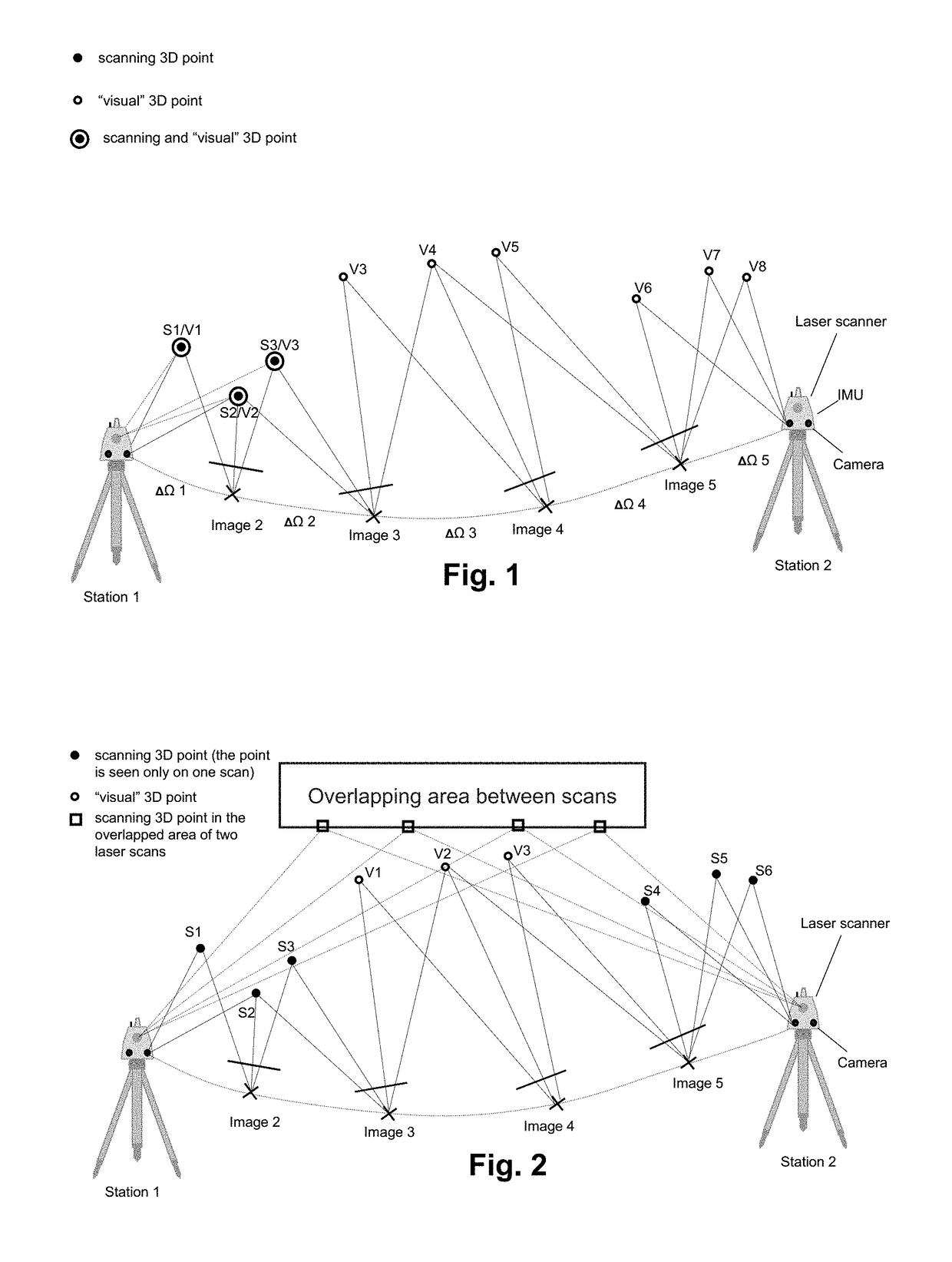

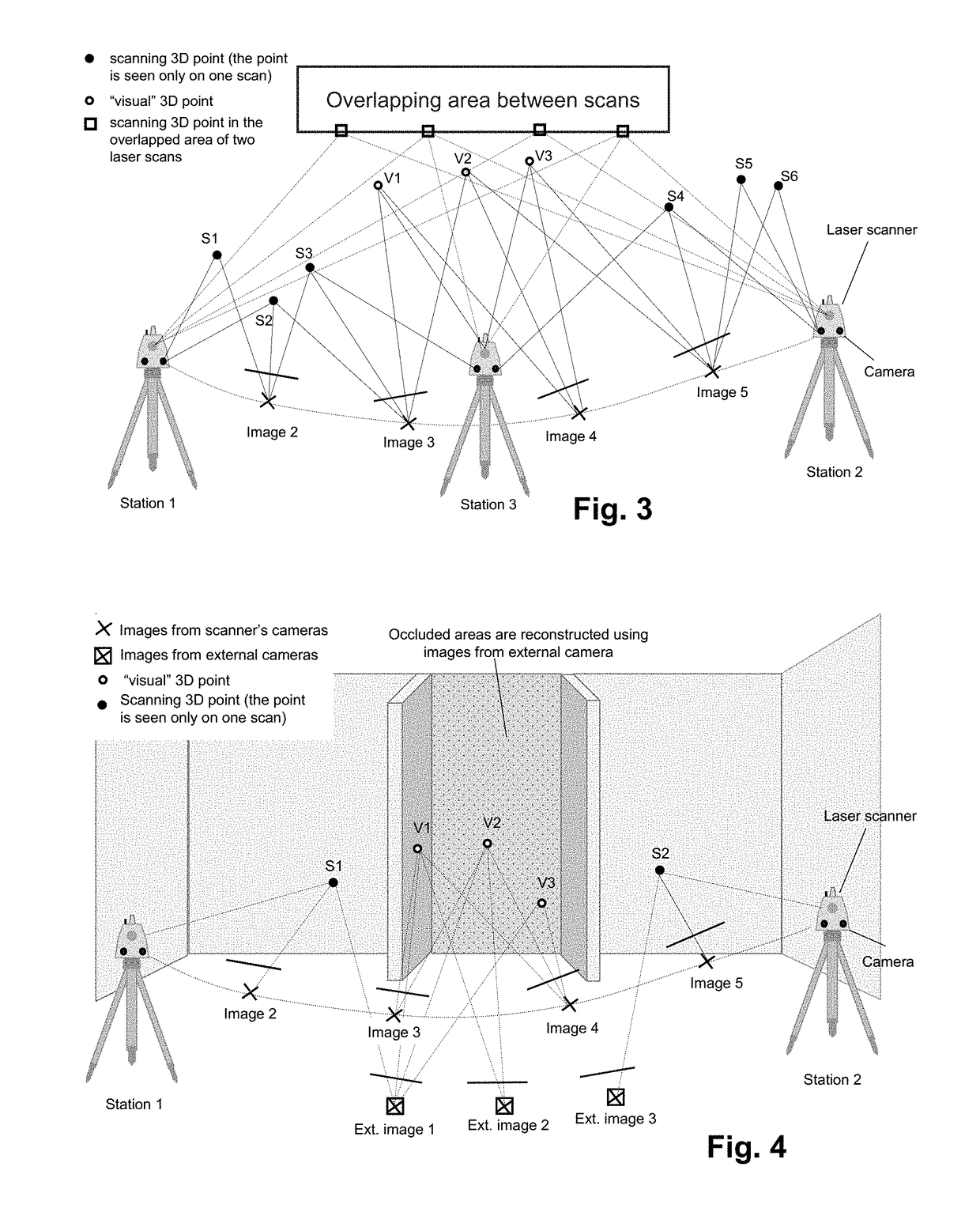

ActiveUS20180158200A1Distance minimizationReduce driftImage enhancementTelevision system detailsPoint cloudSurvey instrument

A method for registering two or more three-dimensional (3D) point clouds. The method includes, with a surveying instrument, obtaining a first 3D point cloud of a first setting at a first position, initiating a first Simultaneous Localisation and Mapping (SLAM) process by capturing first initial image data at the first position with a camera unit comprised by the surveying instrument, wherein the first initial image data and the first 3D point cloud share a first overlap, finalising the first SLAM process at the second position by capturing first final image data with the camera unit, wherein the first final image data are comprised by the first image data, with the surveying instrument, obtaining a second 3D point cloud of a second setting at the second position, and based on the first SLAM process, registering the first 3D point cloud and the second 3D point cloud relative to each other.

Owner:HEXAGON TECH CENT GMBH
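
Once a SLAM process has tracked the instrument from the first position to the second, the two point clouds can be brought into a common frame by applying the estimated relative pose. The sketch below shows only that final step and assumes, for simplicity, that the relative pose is a rotation about the vertical axis plus a translation; the function name `to_first_frame` and this yaw-only pose model are illustrative assumptions, not details taken from the abstract.

```python
import math


def to_first_frame(points, yaw, translation):
    """Express points measured at the second position in the first
    position's frame, given the second pose (yaw, translation) relative
    to the first. Assumes rotation about the z axis only."""
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    registered = []
    for x, y, z in points:
        registered.append((c * x - s * y + tx,
                           s * x + c * y + ty,
                           z + tz))
    return registered


if __name__ == "__main__":
    second_cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.5)]
    # Hypothetical SLAM result: 90 degree turn and a (1, 2, 3) offset.
    print(to_first_frame(second_cloud, math.pi / 2, (1.0, 2.0, 3.0)))
```

A full implementation would use a general 3D rotation and would typically refine the alignment further, but the frame change itself has this form.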

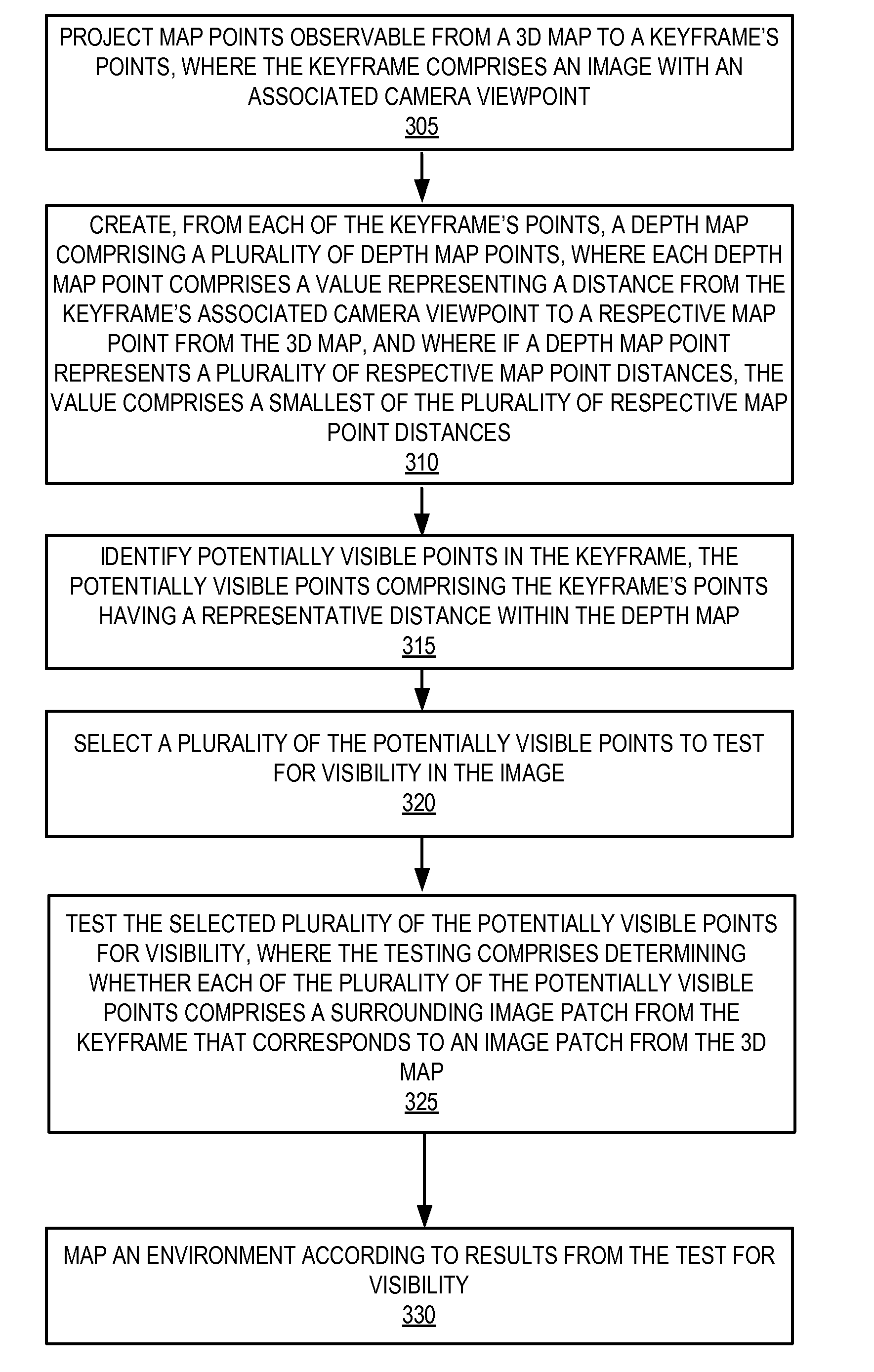

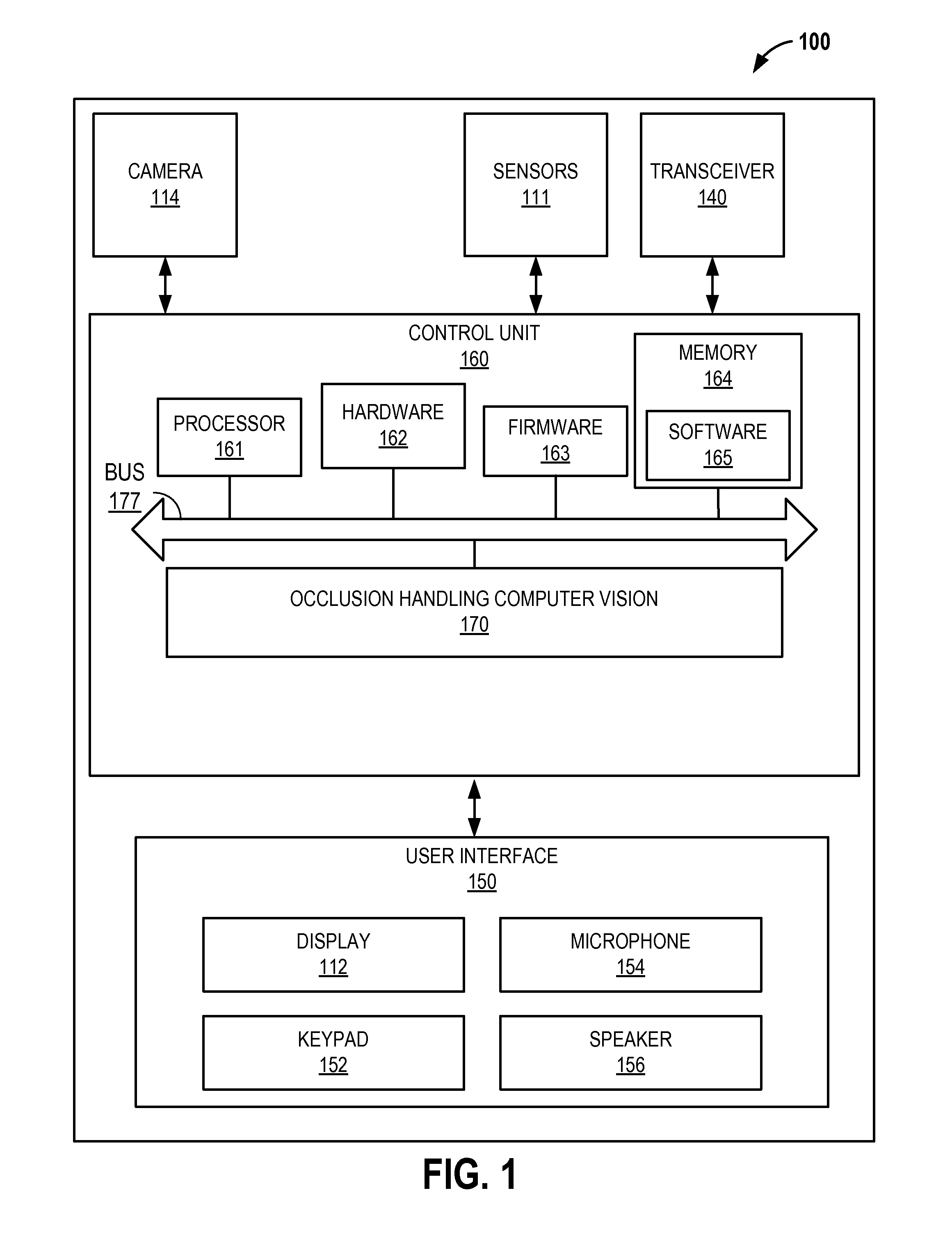

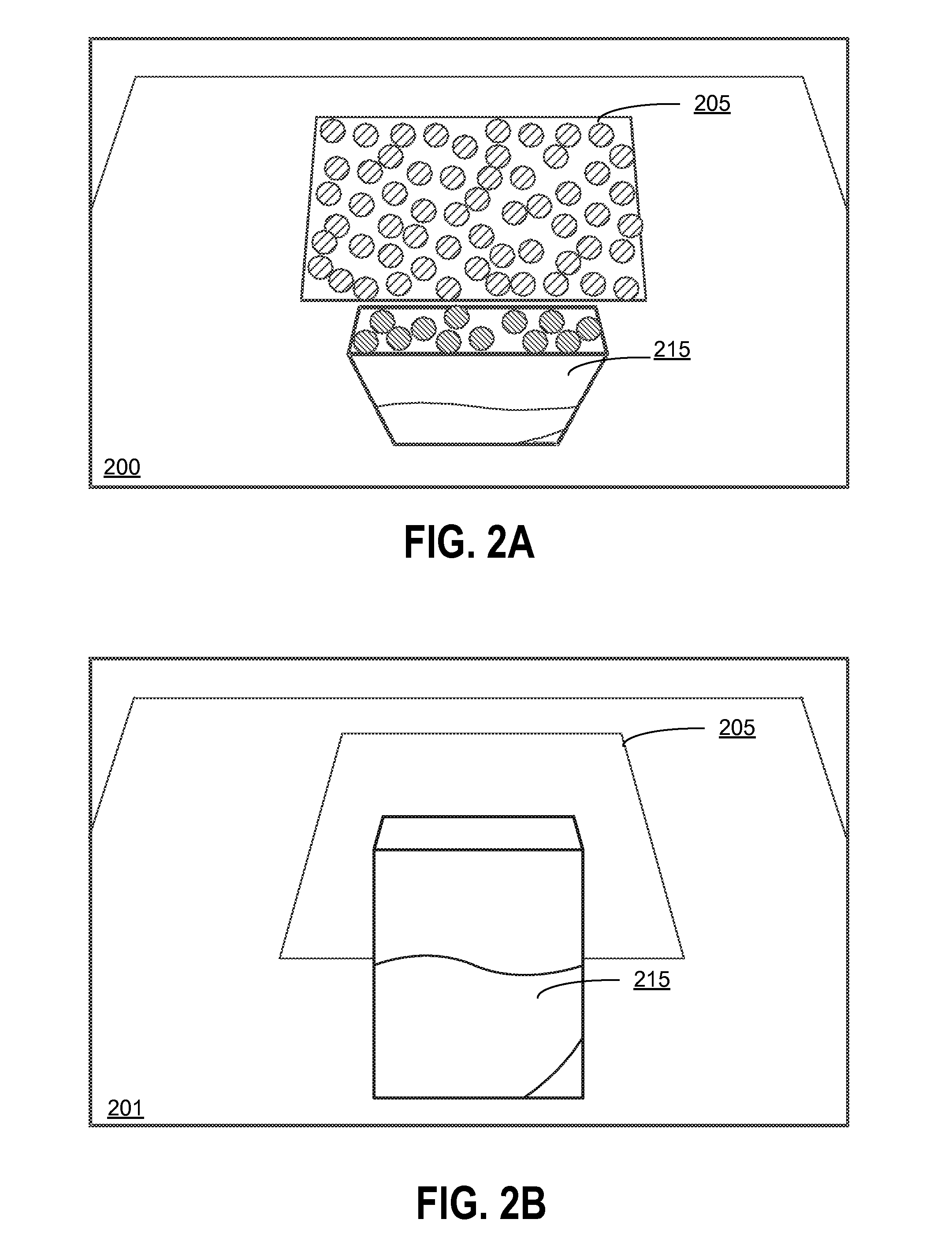

Occlusion handling for computer vision

Disclosed are a system, apparatus, and method for performing occlusion handling for simultaneous localization and mapping. Occluded map points may be detected according to a depth-mask created according to an image keyframe. Dividing a scene into sections may optimize the depth-mask. Size of depth-mask points may be adjusted according to intensity. Visibility may be verified with an optimized subset of possible map points. Visibility may be propagated to nearby points in response to determining an initial visibility of a first point's surrounding image patch. Visibility may also be organized and optimized according to a grid.

Owner:QUALCOMM INC

Systems and methods for VSLAM optimization

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments, such as environments in which people move. Certain embodiments contemplate improvements to the front-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate a novel landmark matching process. Certain of these embodiments also contemplate a novel landmark creation process. Certain embodiments contemplate improvements to the back-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate algorithms for modifying the SLAM graph in real-time to achieve a more efficient structure.

Owner:IROBOT CORP

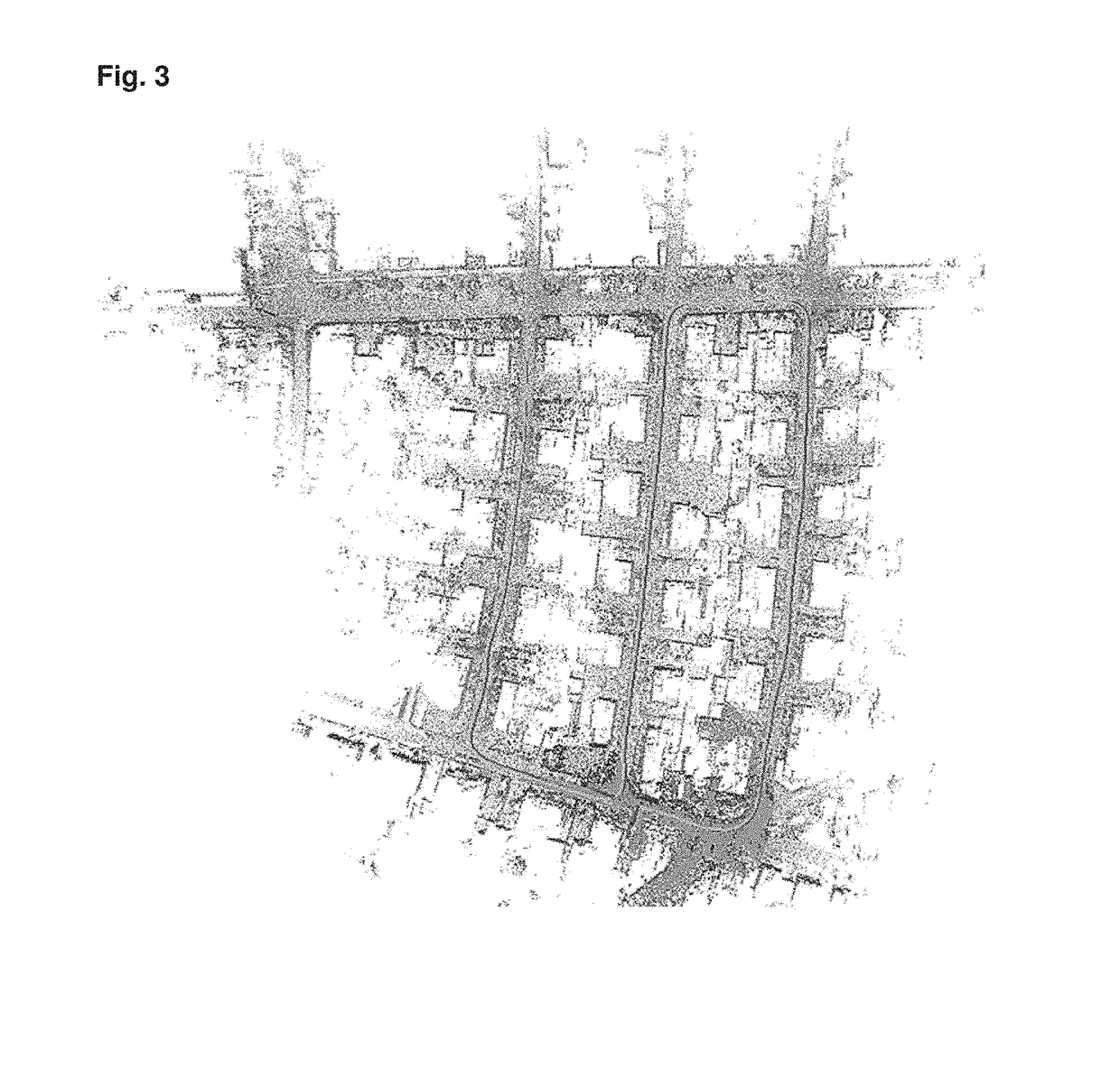

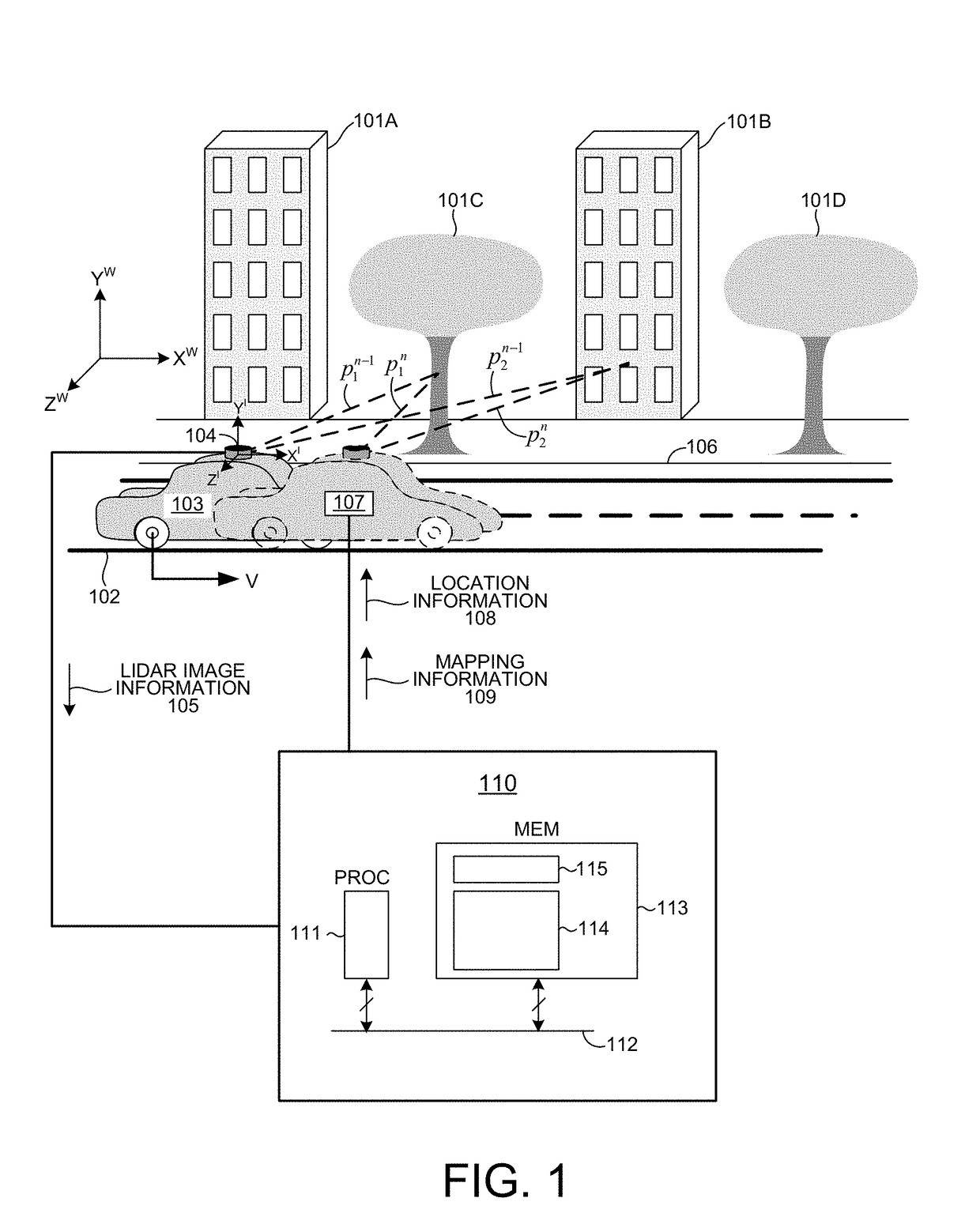

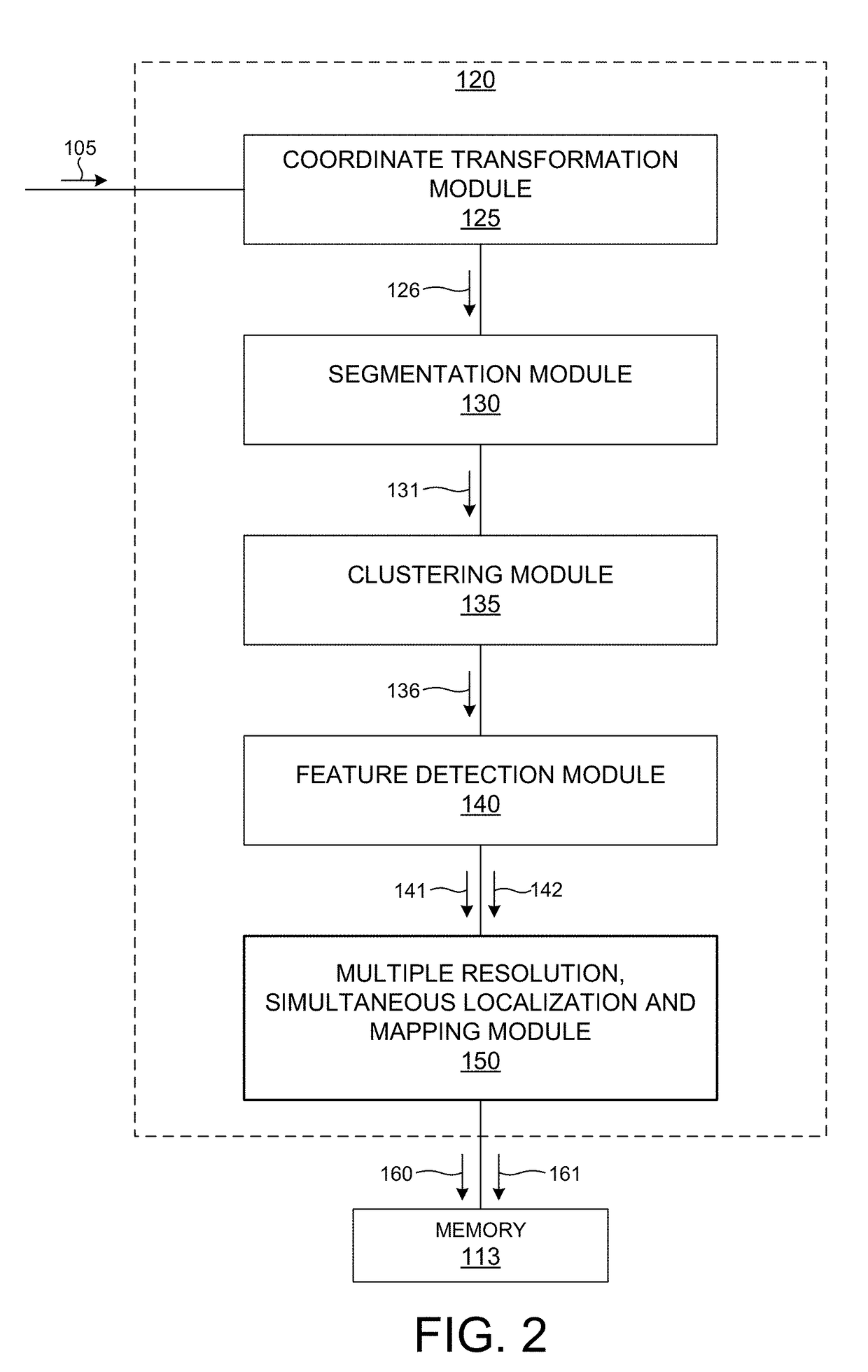

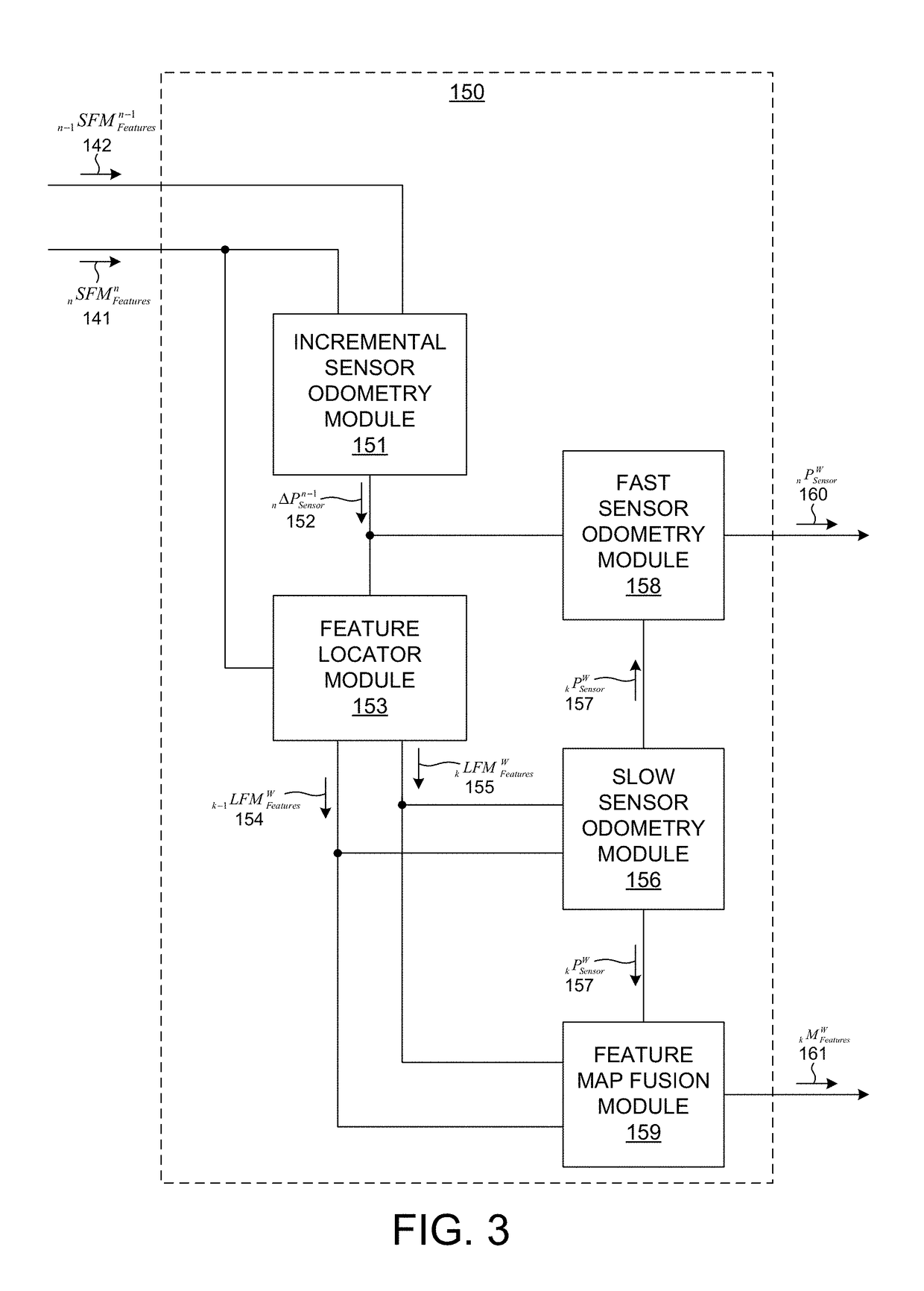

Multiple Resolution, Simultaneous Localization and Mapping Based On 3-D LIDAR Measurements

Pending | US20190079193A1 | Improve computing efficiency; Accuracy; Electromagnetic wave reradiation; Feature extraction; Optical property

Methods and systems for improved simultaneous localization and mapping based on 3-D LIDAR image data are presented herein. In one aspect, LIDAR image frames are segmented and clustered before feature detection to improve computational efficiency while maintaining both mapping and localization accuracy. Segmentation involves removing redundant data before feature extraction. Clustering involves grouping pixels associated with similar objects together before feature extraction. In another aspect, features are extracted from LIDAR image frames based on a measured optical property associated with each measured point. The pools of feature points comprise a low resolution feature map associated with each image frame. Low resolution feature maps are aggregated over time to generate high resolution feature maps. In another aspect, the location of a LIDAR measurement system in a three dimensional environment is slowly updated based on the high resolution feature maps and quickly updated based on the low resolution feature maps.

Owner:VELODYNE LIDAR USA INC

System and method for localizing a trackee at a location and mapping the location using inertial sensor information

Active | US8751151B2 | Long duration; Road vehicles traffic control; Position fixation; Position dependent; Network communication

Owner:TRX SYST

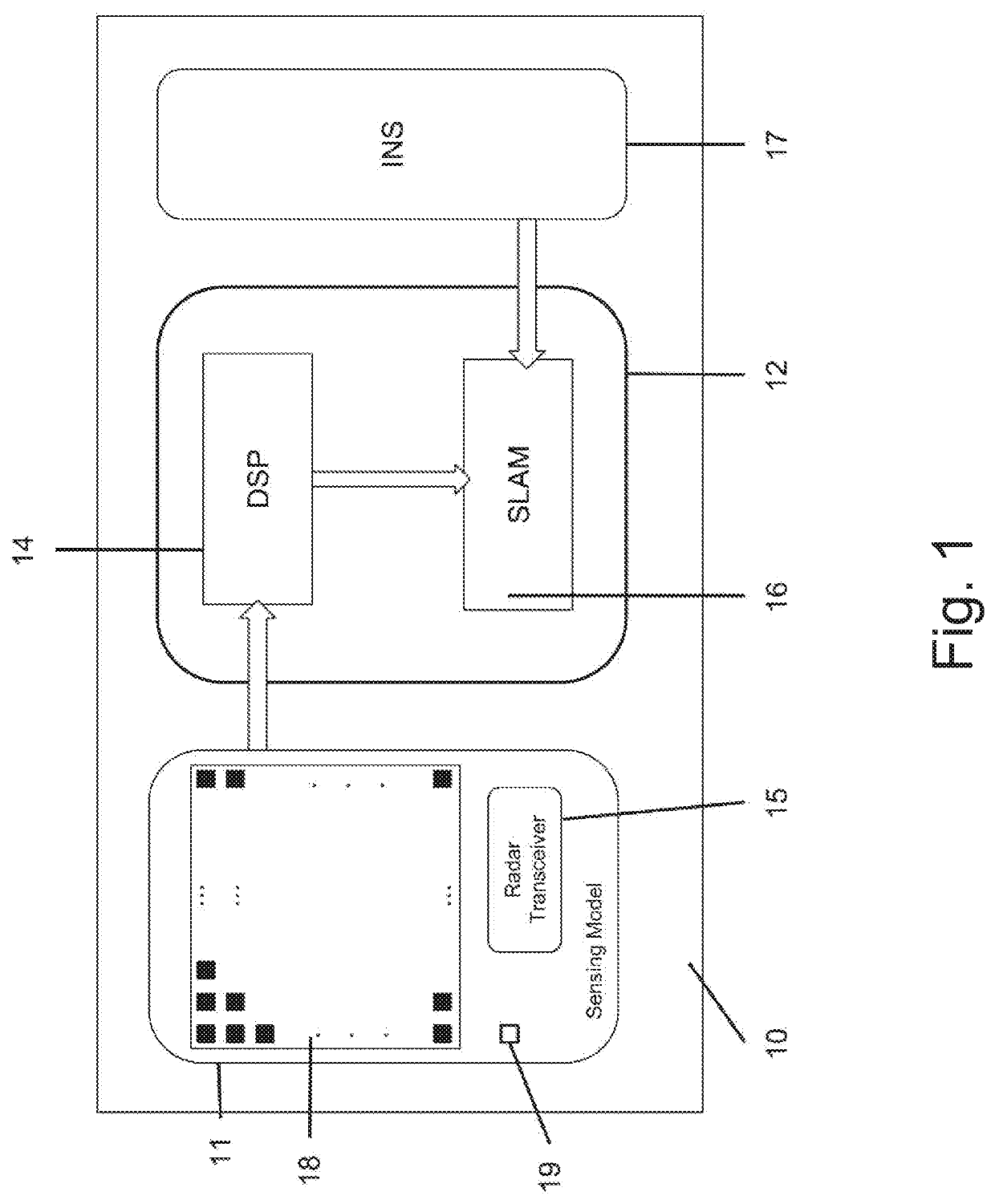

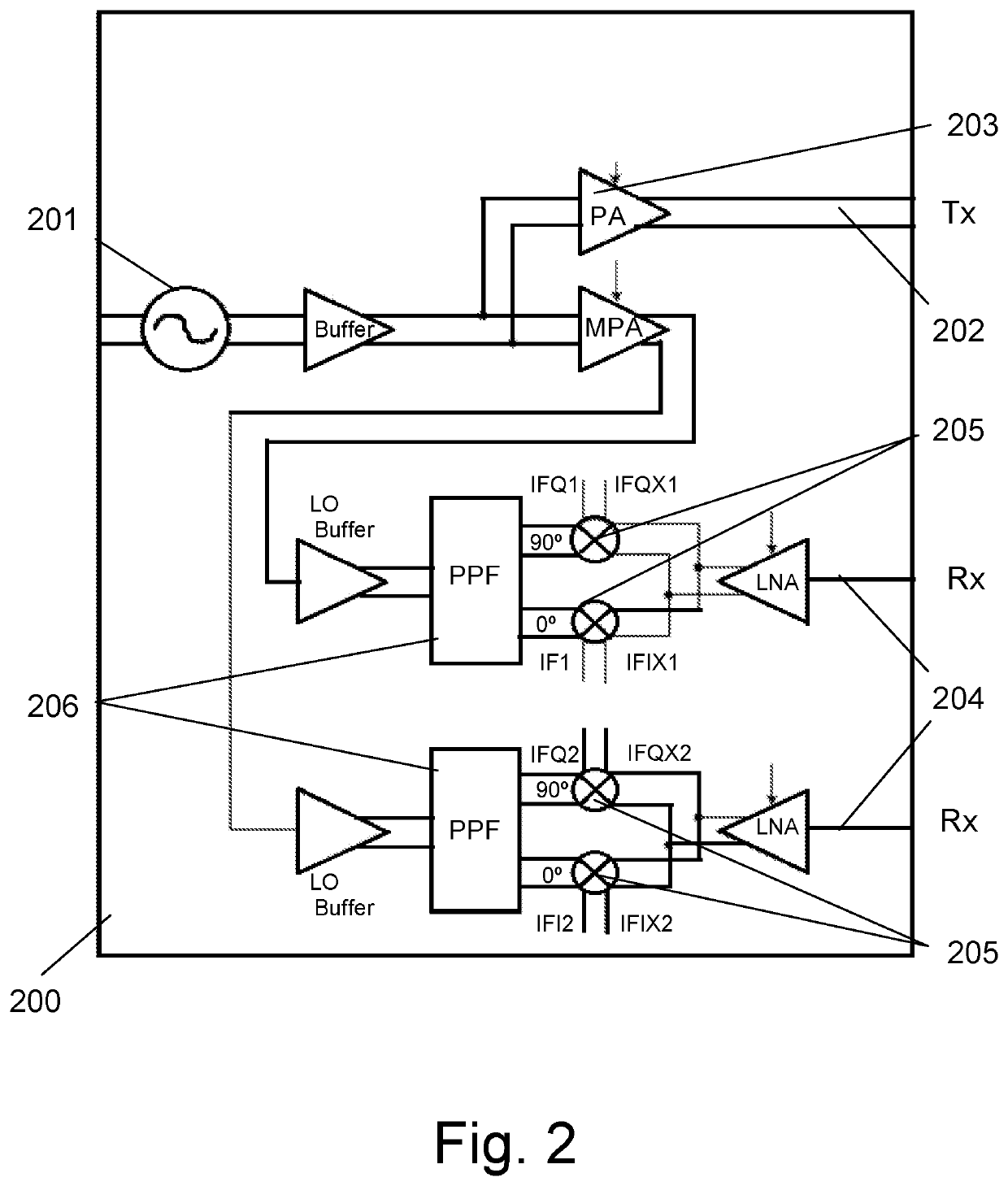

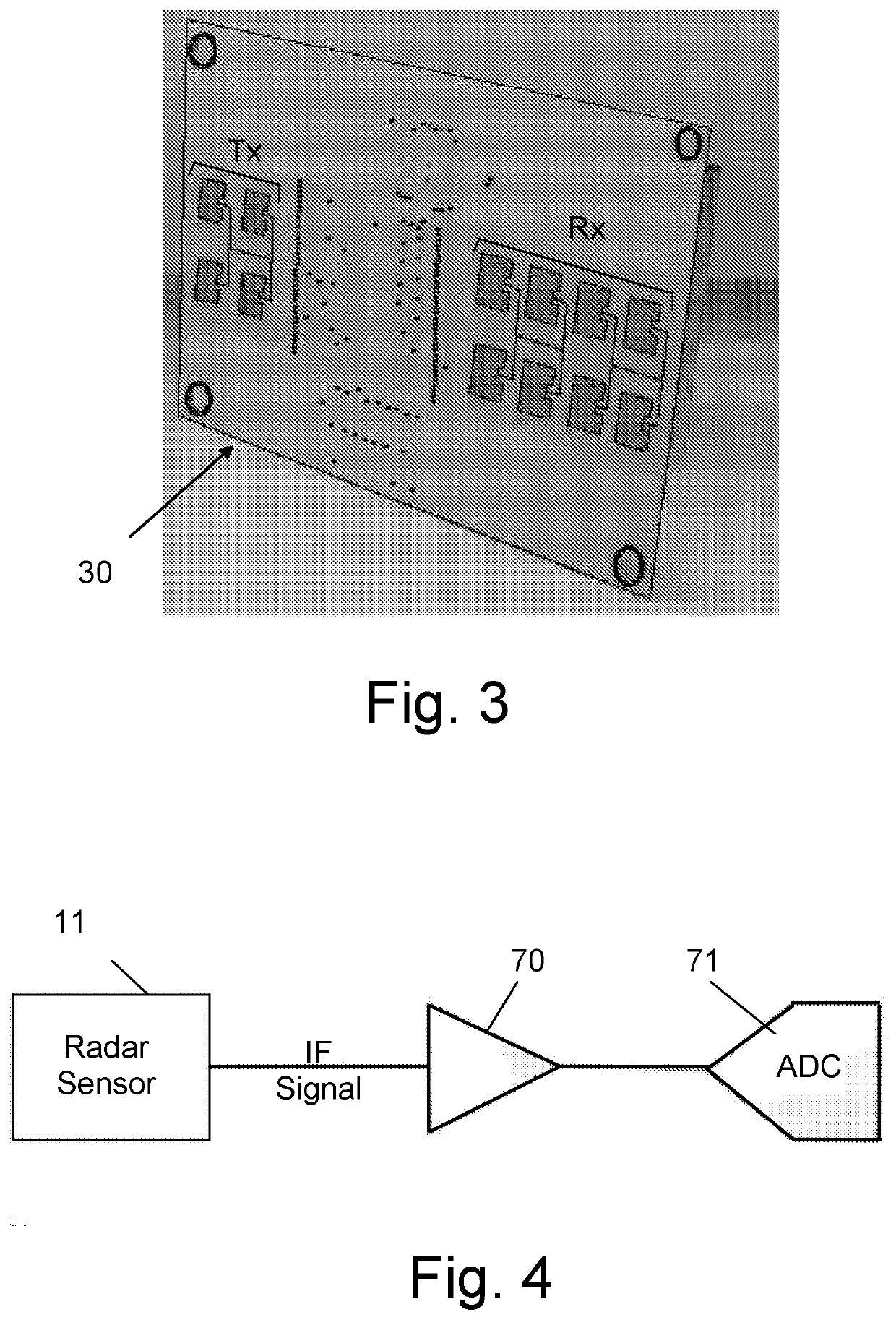

Radar-based system and method for real-time simultaneous localization and mapping

Active | US20190384318A1 | Position/course control in two dimensions; Radio wave reradiation/reflection; MEMS sensors; Normal-Wishart distribution

A method for performing Simultaneous Localization And Mapping (SLAM) of the surroundings of an autonomously controlled moving platform (such as a UAV or a vehicle), using radar signals, comprising the following steps:

- receiving samples of the received IF radar signals from the DSP;
- receiving the previous map from memory;
- receiving data regarding motion parameters of the moving platform from an Inertial Navigation System (INS) module, containing MEMS sensor data;
- grouping points to bodies using a clustering process;
- merging bodies that are marked by the clustering process as separate bodies, using prior knowledge;
- for each body, creating a local grid map around the body with a mass function per entry of the grid map;
- matching between bodies from the previous map and the new bodies;
- calculating the assumed new location of the moving platform for each of the mL particles using previous frame results and new INS data;
- for each calculated new location of the mL particles with normal distribution, sampling N assumed locations;
- for each body and each body particle from the previous map and for each location particle, calculating the velocity vector and orientation of the body between the previous and current map, with respect to the environment, using the body velocity calculated in the previous step and sampling N2 particles of the body, or using image registration to create one particle, where each particle contains the location and orientation of the body in the new map;
- for each particle from the collection of particles of the body:
  - propagating the body grid according to the chosen particle;
  - calculating the Conflict of the new observed body and the propagated body grid, using a fusion function on the parts of the grid that are matched;
  - calculating the Extra Conflict as a sum of the mass of occupied new and old grid cells that do not have a match between the grids;
  - calculating the particle weight as an inverse weight of the combination between Conflict and Extra Conflict;
- for each N1 location particle, calculating the weight as the sum of the best weight per body for that particle;
- resampling mL particles for locations according to the location weight;
- for each body, choosing mB particles from the particles with location in one of the mL particles for the chosen location, and according to the particle weights;
- for each body and each one of the mB particles, calculating the body velocity using a motion model; and
- creating the map for the next step, with all the chosen particles and the mass function of the grid around each body, for each body particle according to the fusion function.

Owner:ARBE ROBOTICS LTD

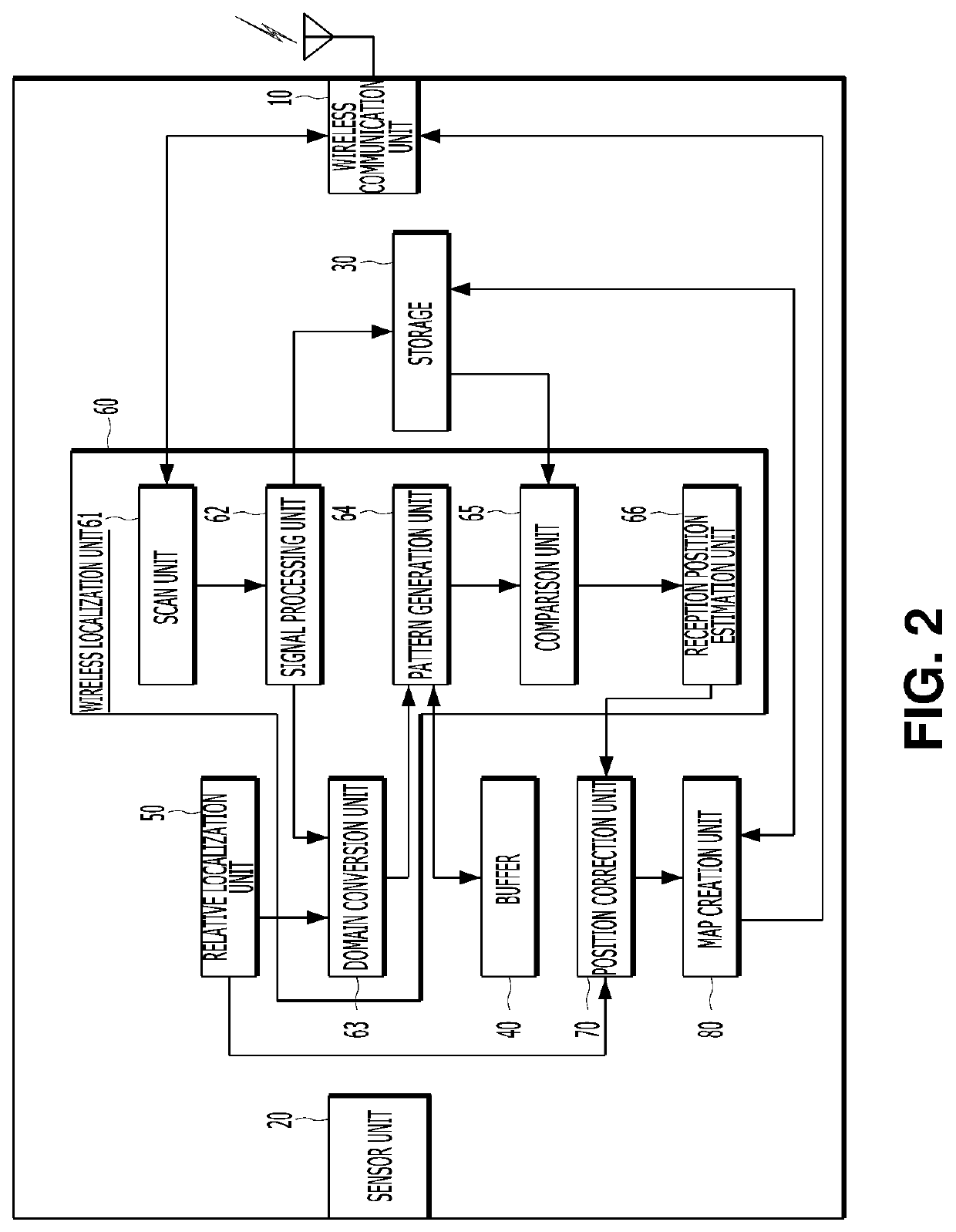

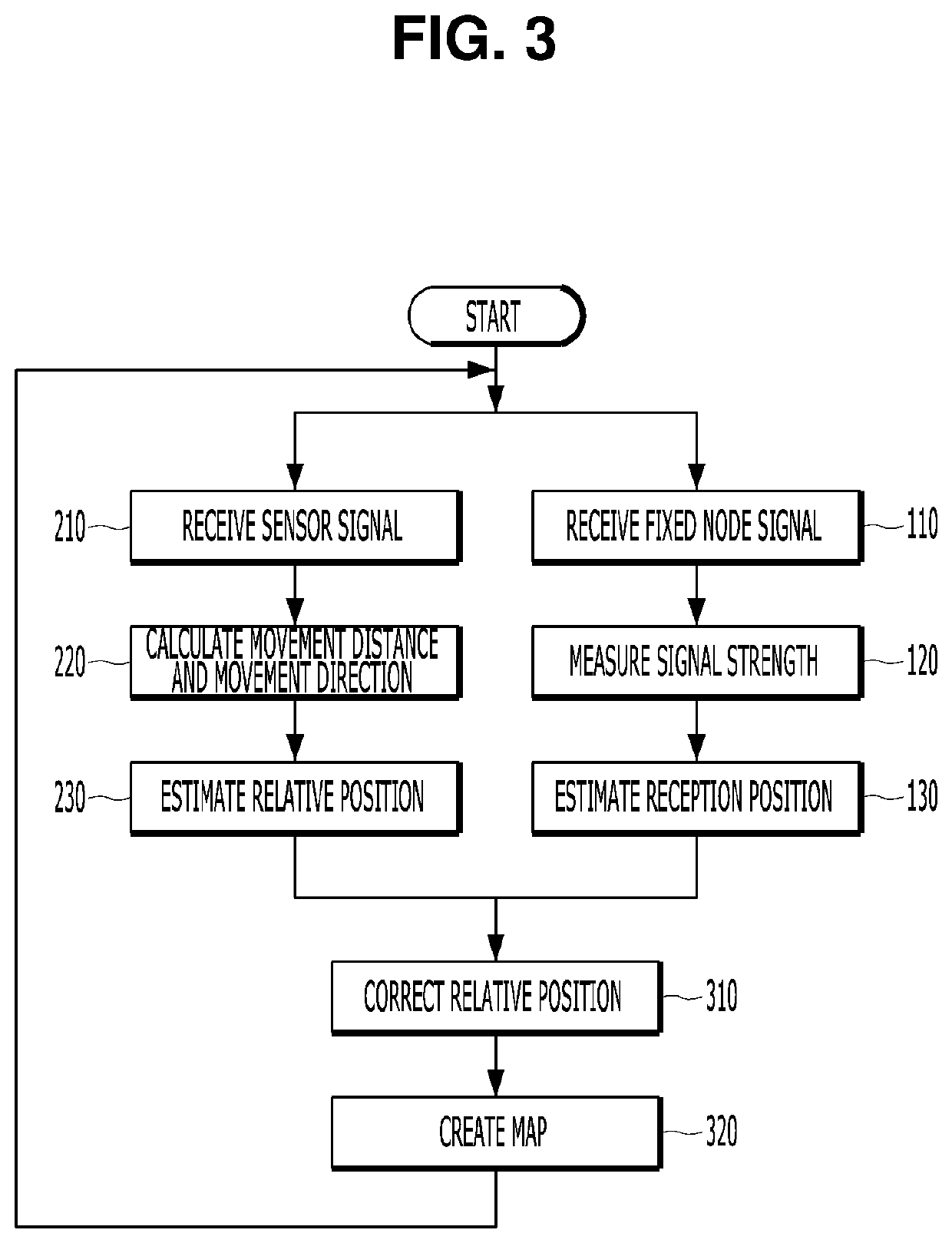

Slam method and apparatus robust to wireless environment change

ActiveUS20200033463A1Accurately estimate positionLow costPosition fixationUsing reradiationMotion sensingSignal strength

The present invention relates to SLAM (simultaneous localization and mapping) method and apparatus robust to a wireless environment change. A relative position of a moving node is estimated based on motion sensing of the moving node, the relative position of the moving node is corrected based on a comparison between a change pattern of at least one signal strength received over a plurality of time points and a signal strength distribution in a region in which the moving node is located, a route of the region is represented by using the relative position corrected as described above, and thereby, it is possible accurately estimate a position of the moving node and to create a map in which very accurate route information is recorded throughout the entire region at the same time, even if a wireless environment change such as signal interference between communication channels, expansion of an access point, and occurrence of a failure or an obstacle is made or a poor wireless environment such as lack of the number of access points occurs.

Owner:KOREA INST OF SCI & TECH

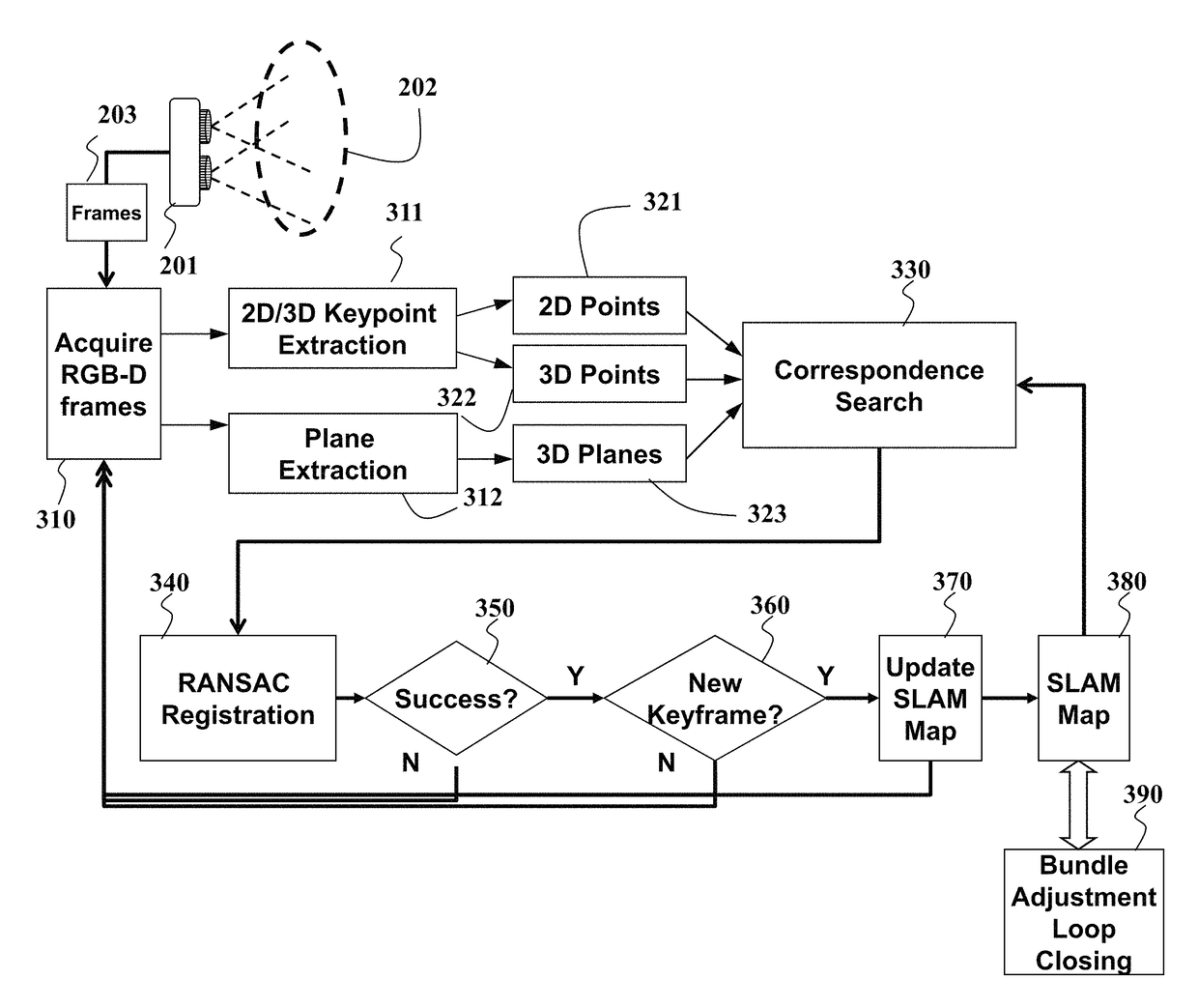

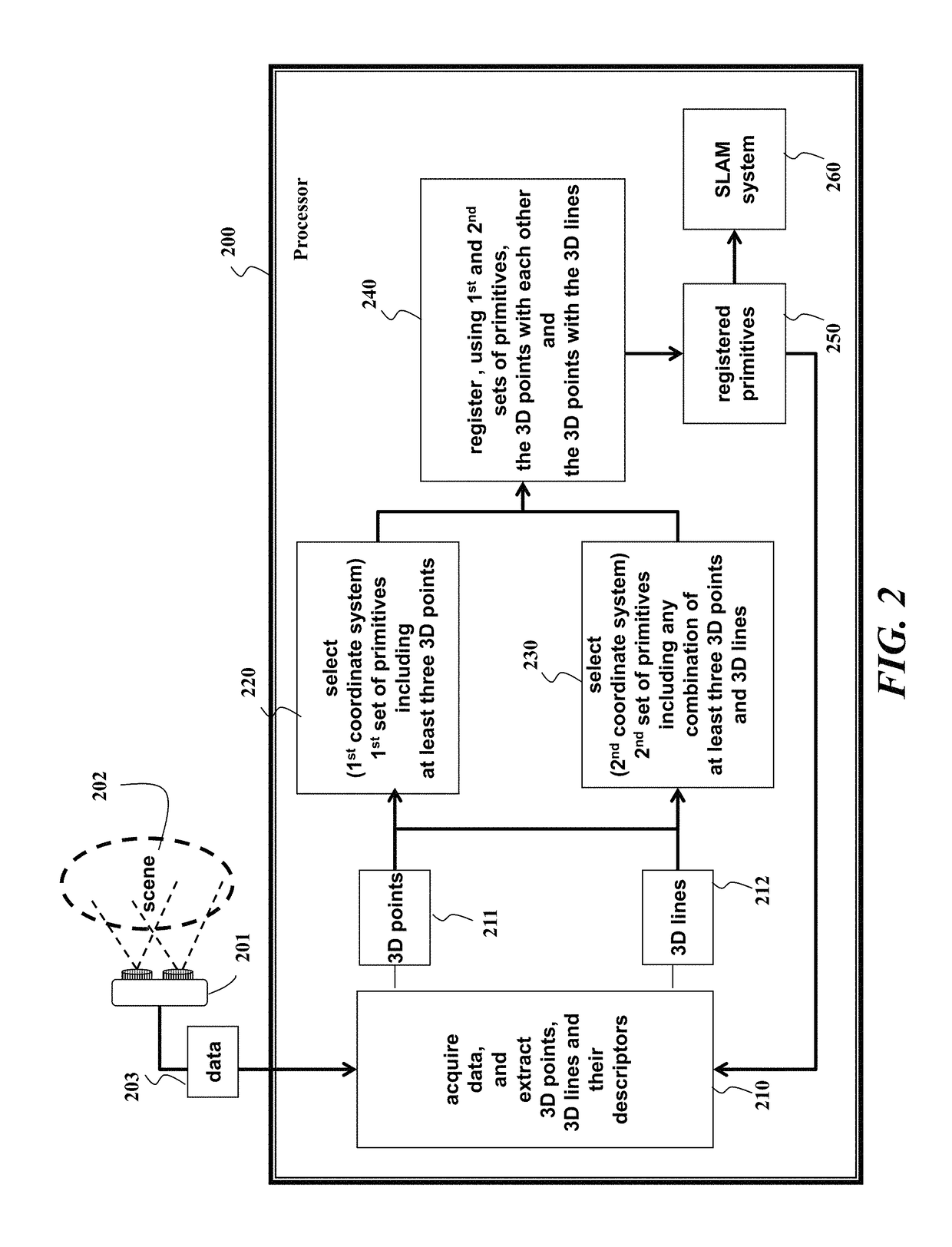

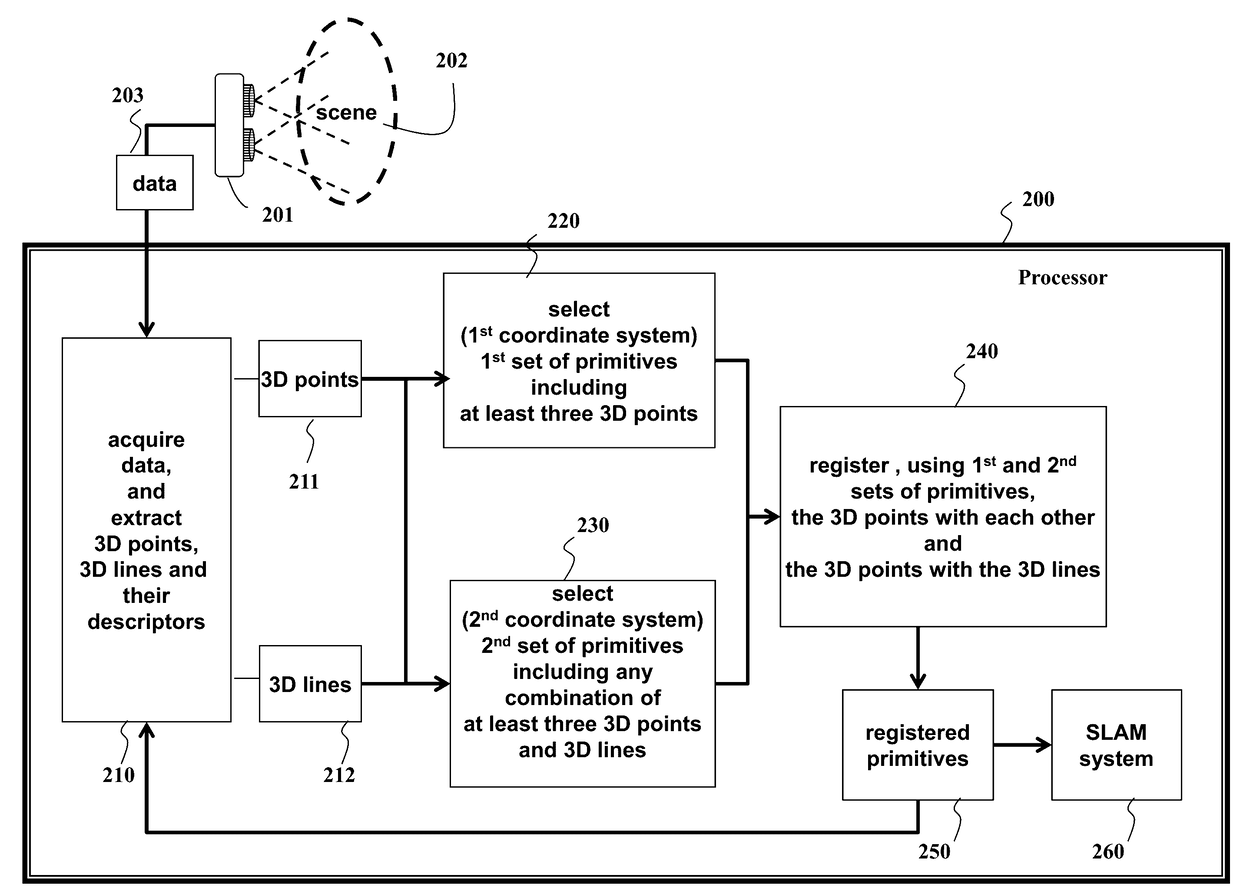

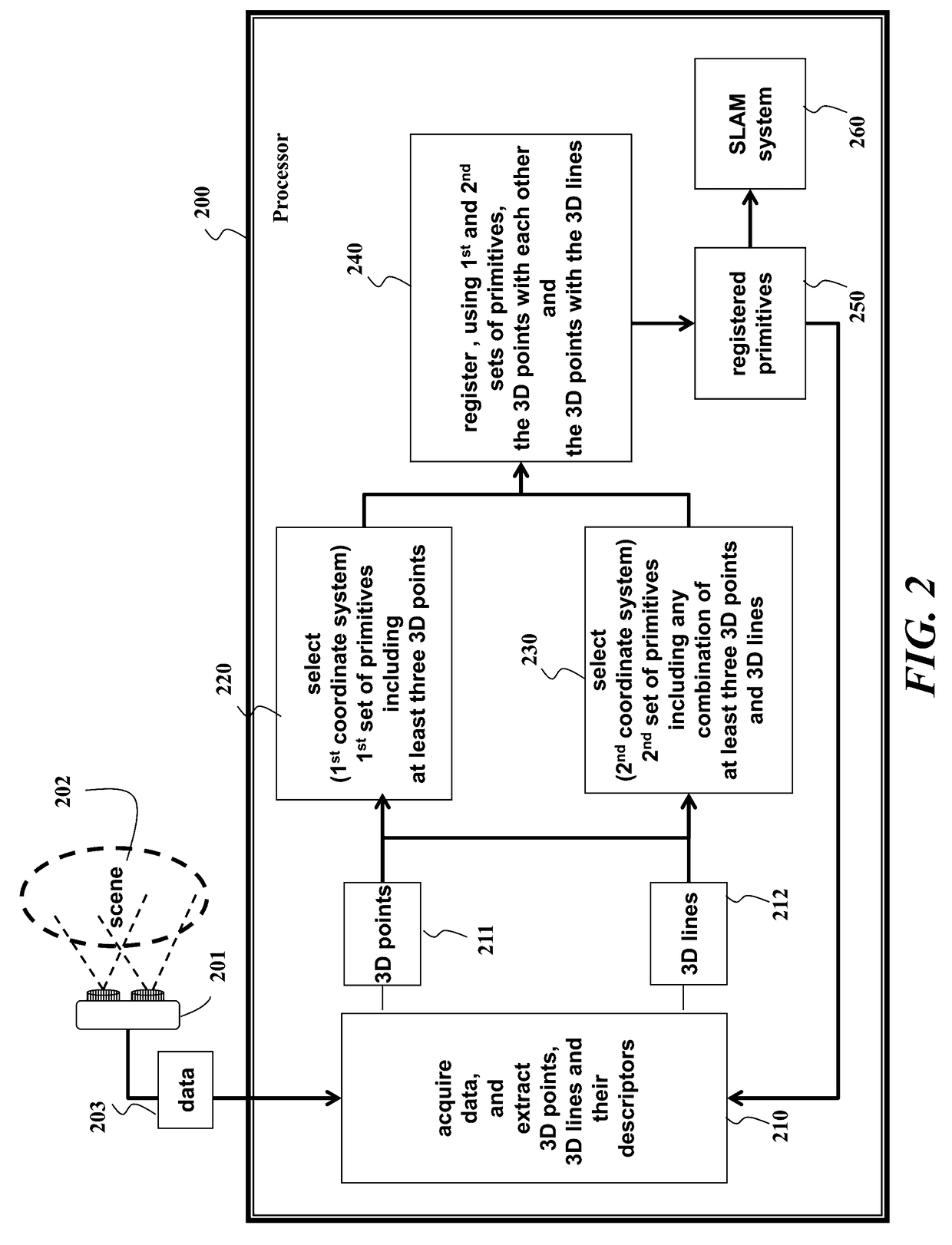

System and method for hybrid simultaneous localization and mapping of 2D and 3D data acquired by sensors from a 3D scene

Active | US9807365B2 | Improve registration accuracy; More on processing speed; Image enhancement; Image analysis; Viewpoints; Computer vision

A method and system for registering data by first acquiring the data from a scene by a sensor at different viewpoints, and extracting, from the data, three-dimensional (3D) points and 3D lines, and descriptors associated with the 3D points and the 3D lines. A first set of primitives represented in a first coordinate system of the sensor is selected, wherein the first set of primitives includes at least three 3D points. A second set of primitives represented in a second coordinate system is selected, wherein the second set of primitives includes any combination of 3D points and 3D lines to obtain at least three primitives. Then, using the first set of primitives and the second set of primitives, the 3D points are registered with each other, and the 3D points with the 3D lines to obtain registered primitives, wherein the registered primitives are used in a simultaneous localization and mapping (SLAM) system.

Owner:MITSUBISHI ELECTRIC RES LAB INC

System and Method for Hybrid Simultaneous Localization and Mapping of 2D and 3D Data Acquired by Sensors from a 3D Scene

Active | US20170161901A1 | Improve registration accuracy; Improve accuracy; Image enhancement; Image analysis; Viewpoints; Computer vision

A method and system for registering data by first acquiring the data from a scene by a sensor at different viewpoints, and extracting, from the data, three-dimensional (3D) points and 3D lines, and descriptors associated with the 3D points and the 3D lines. A first set of primitives represented in a first coordinate system of the sensor is selected, wherein the first set of primitives includes at least three 3D points. A second set of primitives represented in a second coordinate system is selected, wherein the second set of primitives includes any combination of 3D points and 3D lines to obtain at least three primitives. Then, using the first set of primitives and the second set of primitives, the 3D points are registered with each other, and the 3D points with the 3D lines to obtain registered primitives, wherein the registered primitives are used in a simultaneous localization and mapping (SLAM) system.

Owner:MITSUBISHI ELECTRIC RES LAB INC

Method and device for real-time mapping and localization

Active | US10304237B2 | Efficient storage; Improve system robustness; Image enhancement; Image analysis; Reference map; Laser ranging

A method for constructing a 3D reference map useable in real-time mapping, localization and/or change analysis, wherein the 3D reference map is built using a 3D SLAM (Simultaneous Localization And Mapping) framework based on a mobile laser range scanner. A method for real-time mapping, localization and change analysis, in particular in GPS-denied environments, as well as a mobile laser scanning device for implementing said methods.

Owner:EURO ATOMIC ENERGY COMMUNITY (EURATOM)

Systems, methods, apparatuses and devices for detecting facial expression and for tracking movement and location in at least one of a virtual and augmented reality system

Active | US20190155386A1 | Rapid and efficient mechanism; Improve accuracy; Input/output for user-computer interaction; Recognisation of pattern in signals; Facial expression; Augmented reality systems

Systems, methods, apparatuses and devices for detecting facial expressions according to EMG signals for a virtual and / or augmented reality (VR / AR) environment, in combination with a system for simultaneous location and mapping (SLAM), are presented herein.

Owner:MINDMAZE GRP SA

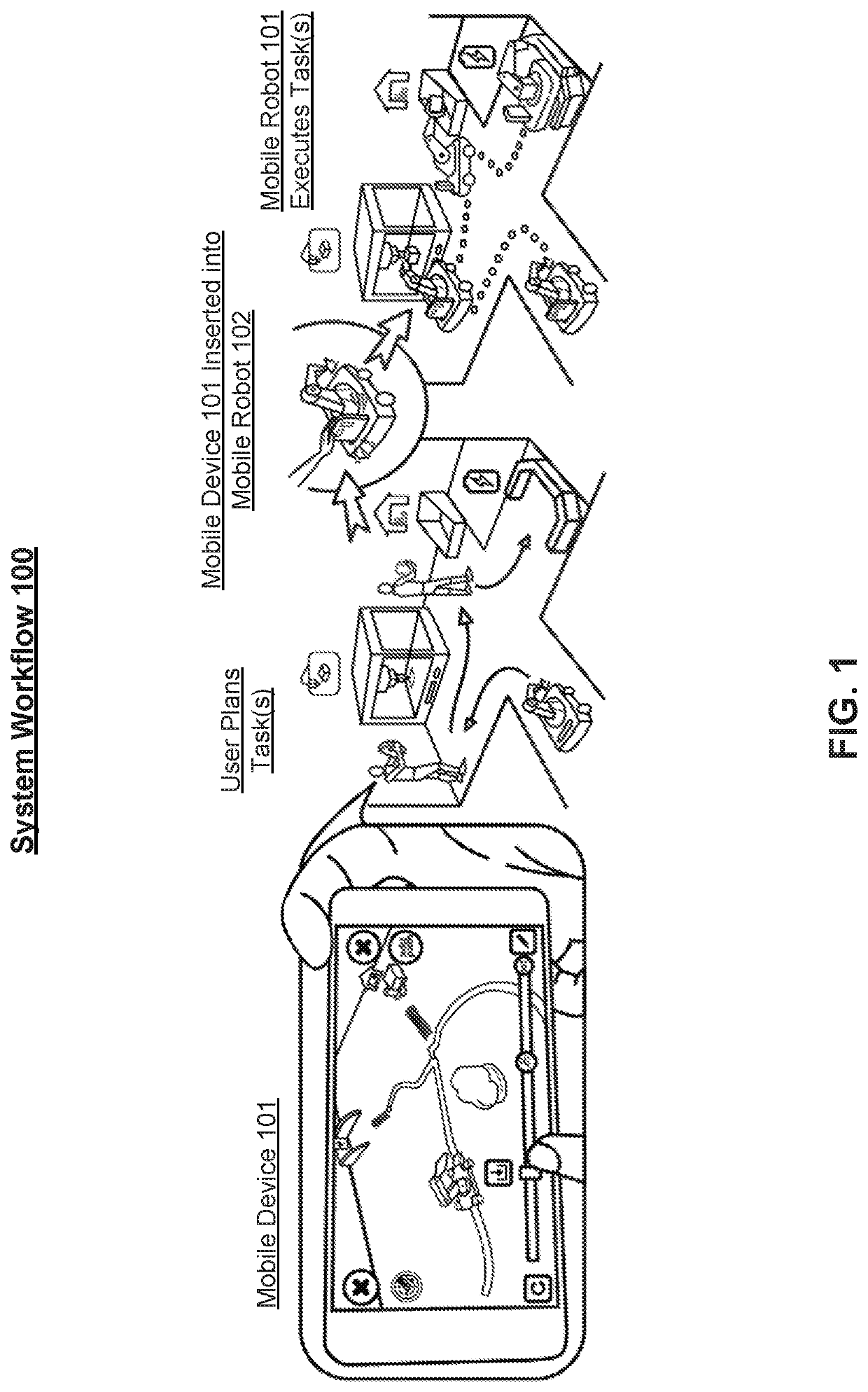

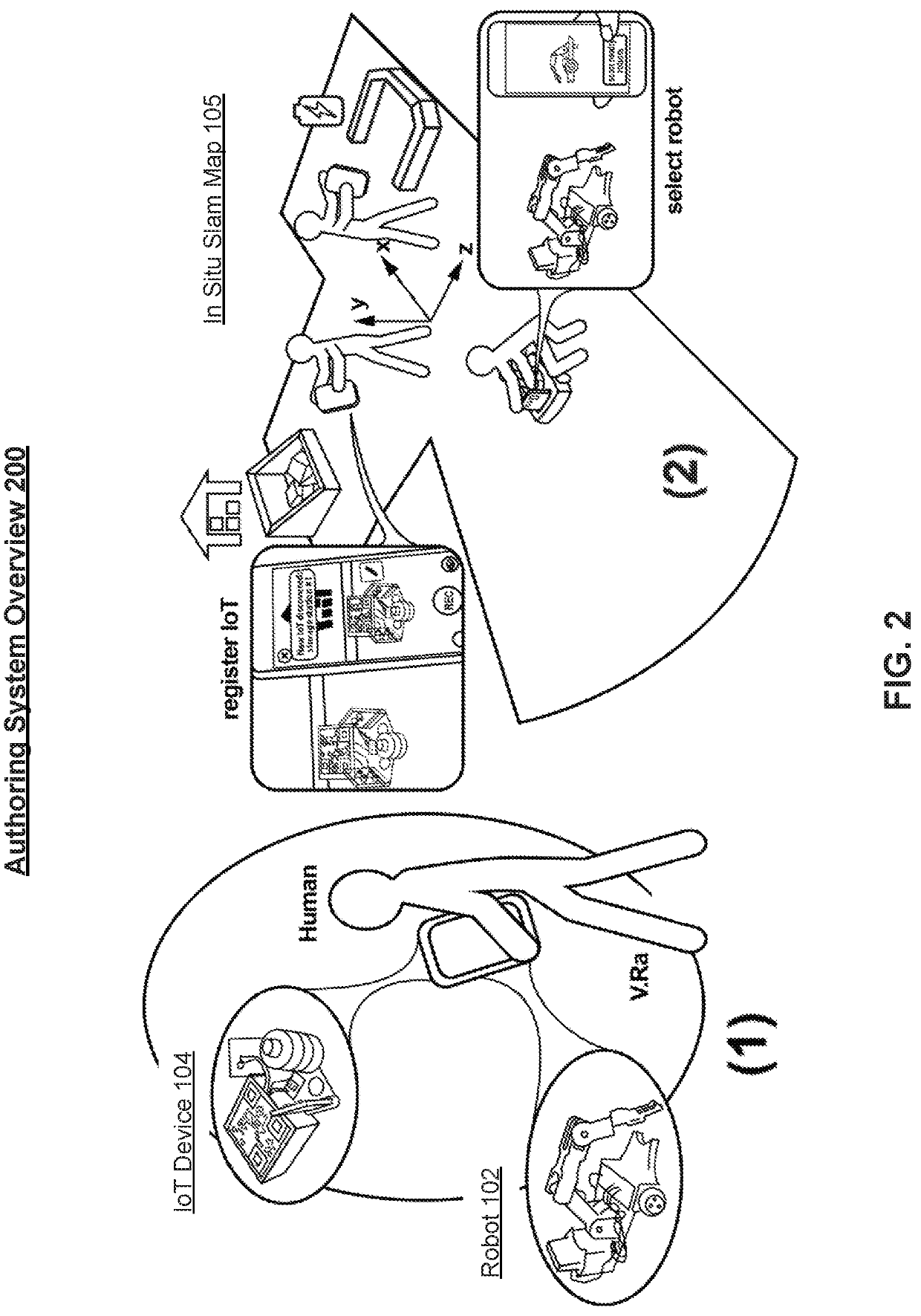

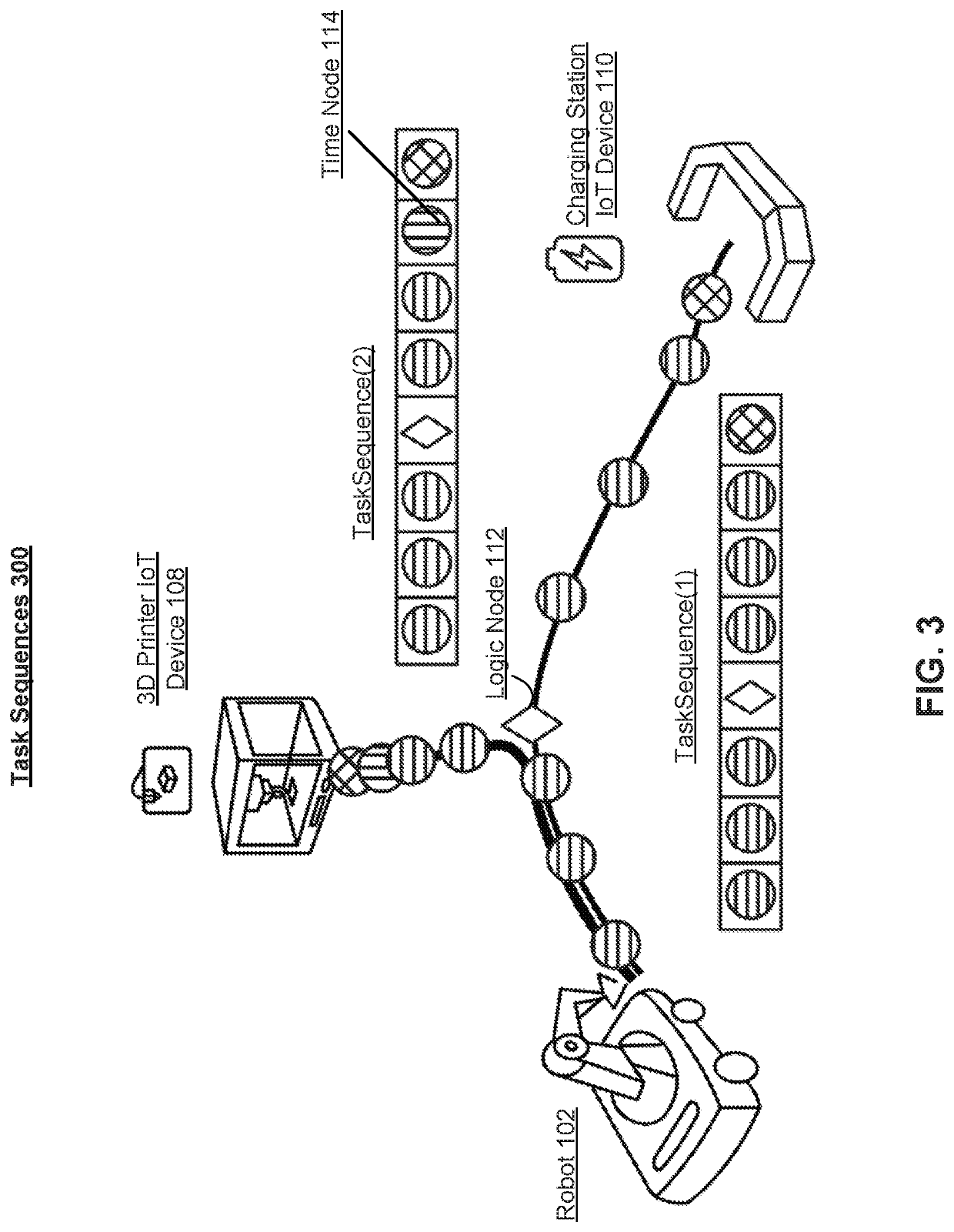

Robot navigation and robot-iot interactive task planning using augmented reality

Disclosed is a visual and spatial programming system for robot navigation and robot-IoT task authoring. Programmable mobile robots serve as binding agents to link stationary IoT devices and perform collaborative tasks. Three key elements of robot task planning (human-robot-IoT) are coherently connected with one single smartphone device. Users can perform visual task authoring in an analogous manner to the real tasks that they would like the robot to perform with using an augmented reality interface. The mobile device mediates interactions between the user, robot(s), and IoT device-oriented tasks, guiding the path planning execution with Simultaneous Localization and Mapping (SLAM) to enable robust room-scale navigation and interactive task authoring.

Owner:PURDUE RES FOUND INC

System and method for improved simultaneous localization and mapping

Active | US9651388B1 | Reduce in quantity; Image enhancement; Instruments for road network navigation; Computer science; Landmark

A system and method for simultaneous localization and mapping (SLAM), comprising an improved Geometric Dilution of Precision (GDOP) calculation, a reduced set of feature landmarks, the use of Inertial Measurement Units (IMU) to detect measurement motion, and the use of one-time use features and absolute reference landmarks.

Owner:ROCKWELL COLLINS INC
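
For readers unfamiliar with dilution of precision, the sketch below computes the textbook position-only form in 2D: stack the unit line-of-sight vectors to each landmark into a matrix H and take the square root of the trace of (HᵀH)⁻¹. The abstract does not explain how its improved GDOP calculation differs from this, so the function name `dilution_of_precision`, the 2D setting, and the omission of the clock term used in satellite navigation are assumptions of this example.

```python
import math


def dilution_of_precision(position, landmarks):
    """Return sqrt(trace((H^T H)^-1)) for unit line-of-sight rows H,
    or infinity when the landmark geometry is degenerate."""
    px, py = position
    a = b = d = 0.0  # H^T H = [[a, b], [b, d]]
    for lx, ly in landmarks:
        dx, dy = lx - px, ly - py
        rng = math.hypot(dx, dy)
        if rng == 0.0:
            raise ValueError("landmark coincides with the position")
        ux, uy = dx / rng, dy / rng
        a += ux * ux
        b += ux * uy
        d += uy * uy
    det = a * d - b * b
    if det <= 1e-12:
        return math.inf  # e.g. all landmarks along one line of sight
    # For a 2x2 matrix, trace(inverse) = (a + d) / det.
    return math.sqrt((a + d) / det)


if __name__ == "__main__":
    print(dilution_of_precision((0.0, 0.0), [(1.0, 0.0), (0.0, 1.0)]))
    print(dilution_of_precision((0.0, 0.0), [(1.0, 0.0), (2.0, 0.0)]))
```

Lower values indicate landmark geometry that constrains the position well, which is one way a reduced set of feature landmarks could be chosen.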

Method and apparatus for simultaneous localization and mapping of mobile robot environment

Active | US20140058610A1 | Programme-controlled manipulator; Road vehicles traffic control; Mobile device; Cell based

Techniques that optimize performance of simultaneous localization and mapping (SLAM) processes for mobile devices, typically a mobile robot. In one embodiment, erroneous particles are introduced to the particle filtering process of localization. Monitoring the weights of the erroneous particles relative to the particles maintained for SLAM provides a verification that the robot is localized and detection that it is no longer localized. In another embodiment, cell-based grid mapping of a mobile robot's environment also monitors cells for changes in their probability of occupancy. Cells with a changing occupancy probability are marked as dynamic and updating of such cells to the map is suspended or modified until their individual occupancy probabilities have stabilized. In another embodiment, mapping is suspended when it is determined that the device is acquiring data regarding its physical environment in such a way that use of the data for mapping will incorporate distortions into the map, as for example when the robotic device is tilted.

Owner:VORWERK & CO INTERHOLDING GMBH

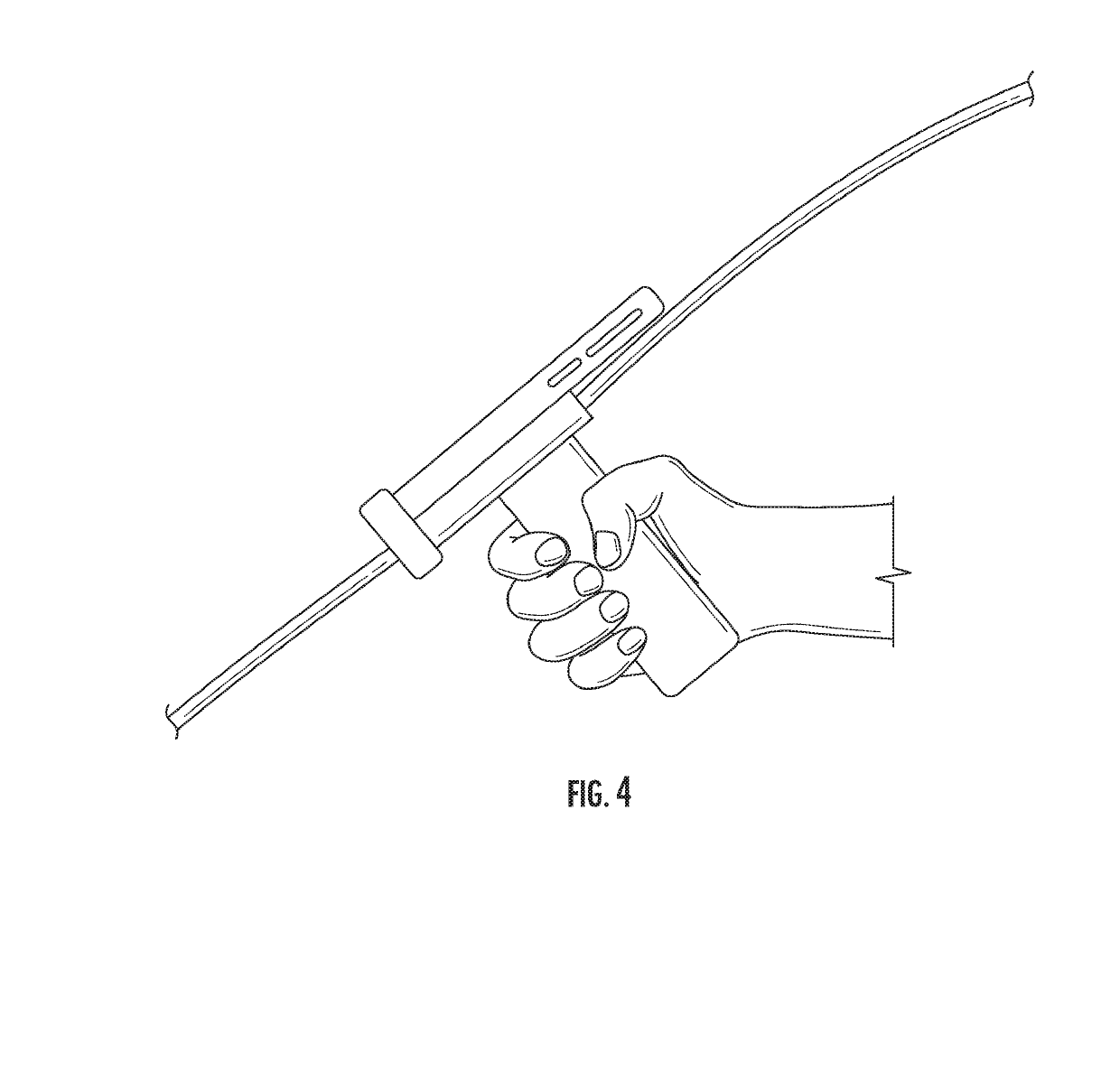

Techniques for bounding cleaning operations of a robotic surface cleaning device within a region of interest

ActiveUS20190320866A1Programme-controlled manipulatorAutomatic obstacle detectionMap LocationSurface cleaning

In general, the present disclosure is directed to a hand-held surface cleaning device that includes circuitry to communicate with a robotic surface cleaning device to cause the same to target an area / region of interest for cleaning. Thus, a user may utilize the hand-held surface cleaning device to perform targeted cleaning and conveniently direct a robotic surface cleaning device to focus on a region of interest. Moreover, the hand-held surface cleaning device may include sensory such as a camera device for extracting three-dimensional information from a field of view. This information may be utilized to map locations of walls, stairs, obstacles, changes in surface types, and other features in a given location. Thus, a robotic surface cleaning device may utilize the mapping information from the hand-held surface cleaning device as an input in a real-time control loop, e.g., as an input to a Simultaneous Localization and Mapping (SLAM) routine.

Owner:SHARKNINJA OPERATING LLC
