162 results about "Visual task" patented technology

Visual Task Board is a graphic-rich environment that presents navigation lists and forms in an interactive way. Users can view and update multiple tasks, add new tasks, and edit tasks directly on the board. An activity stream displays recent activity so users can see changes to tasks.

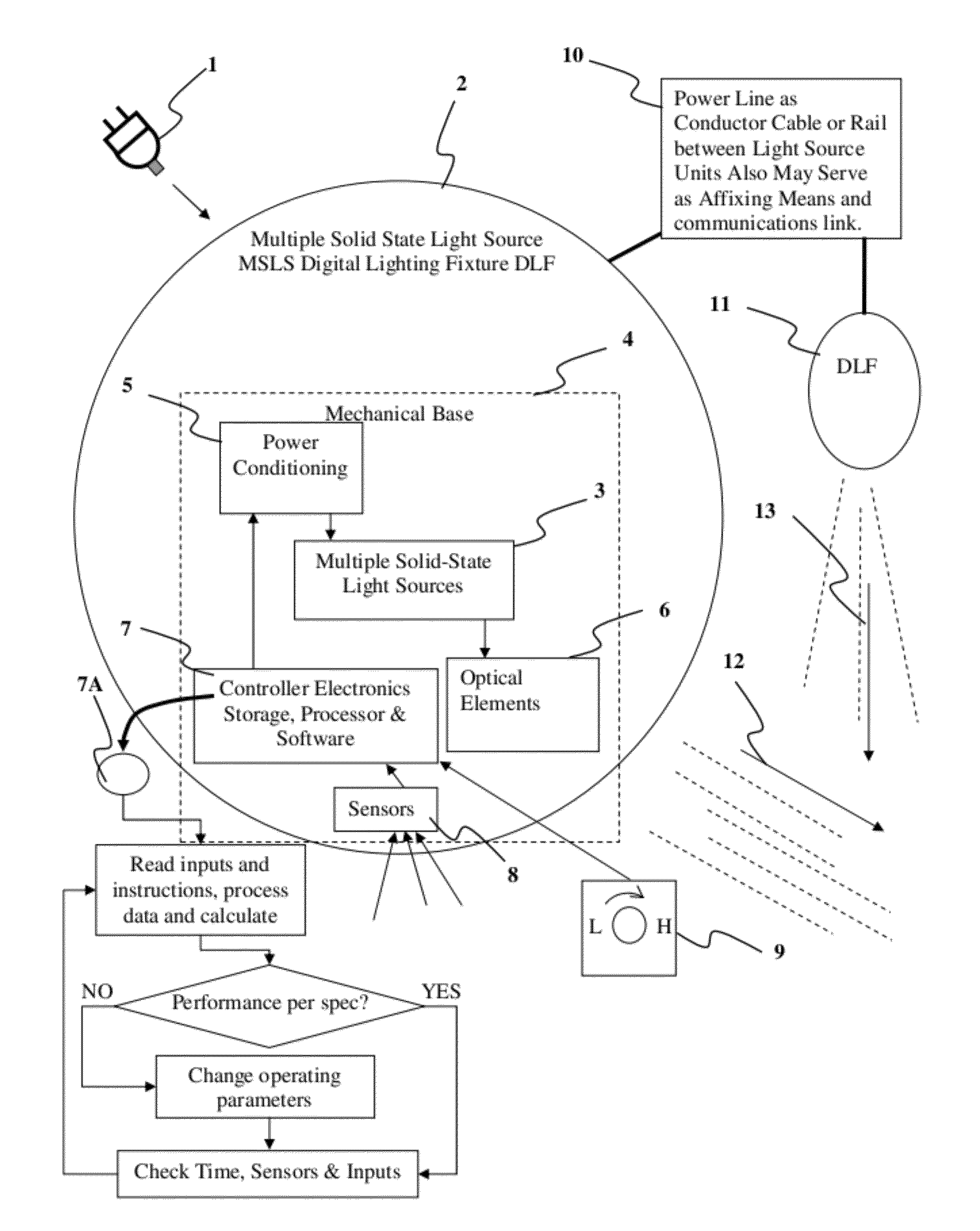

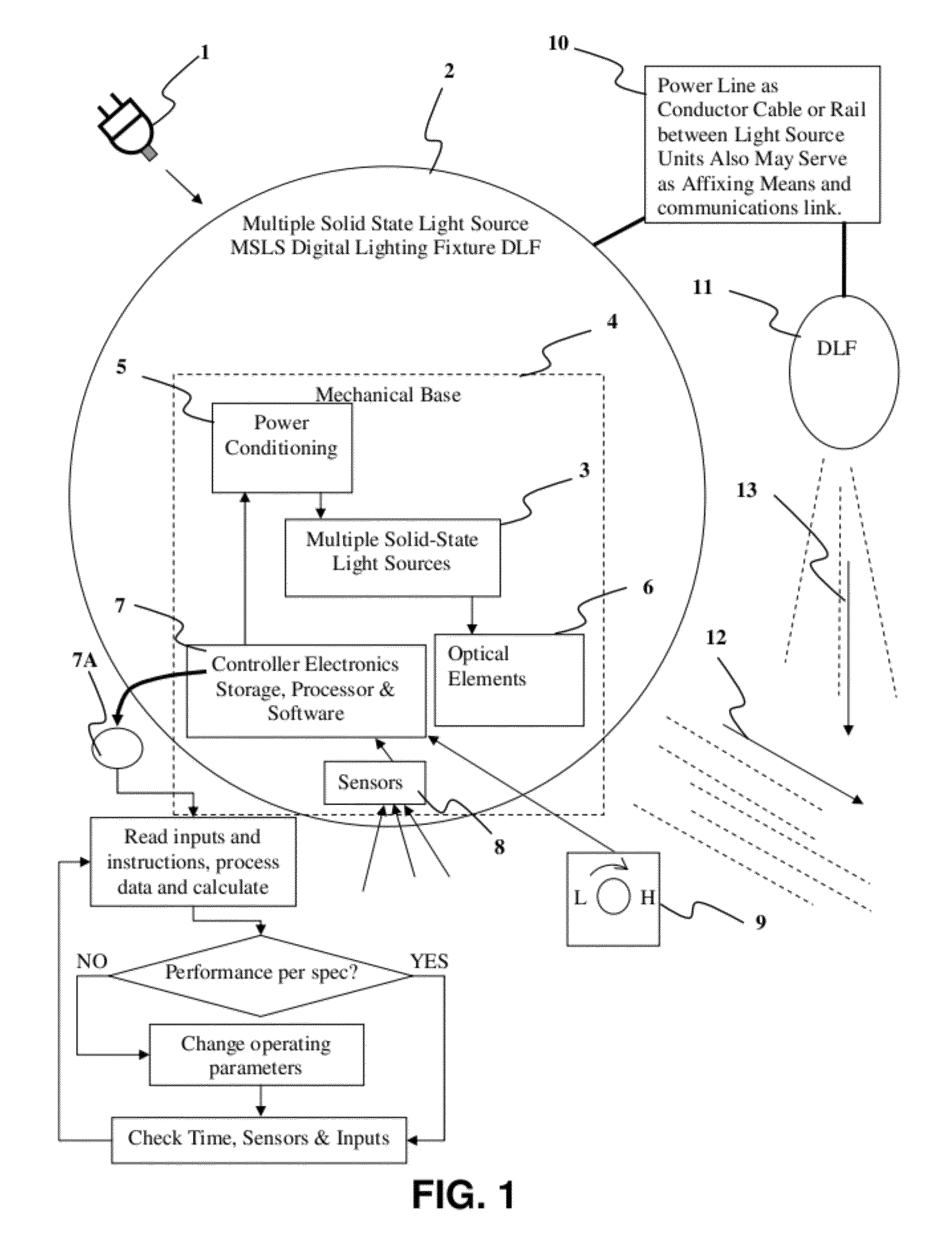

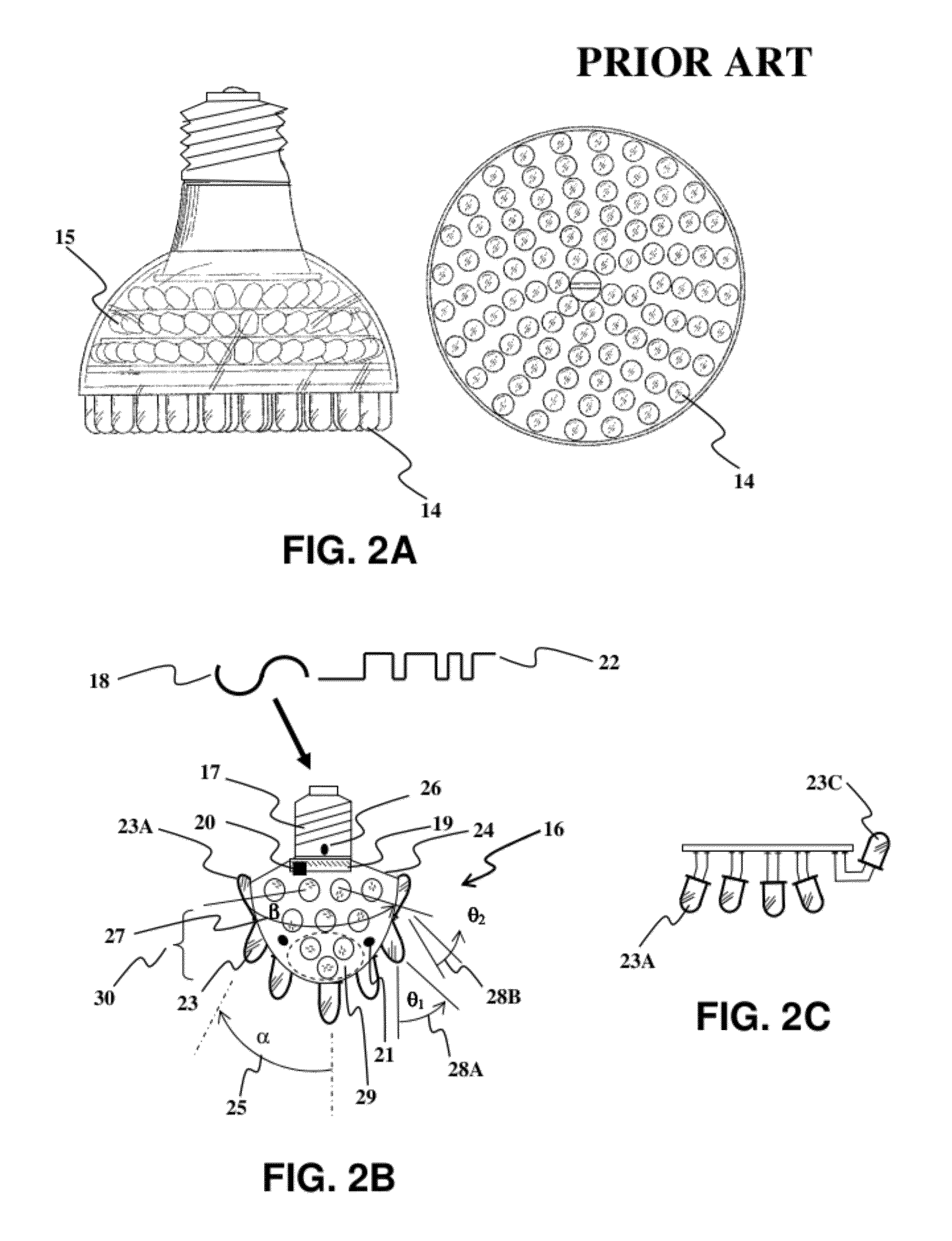

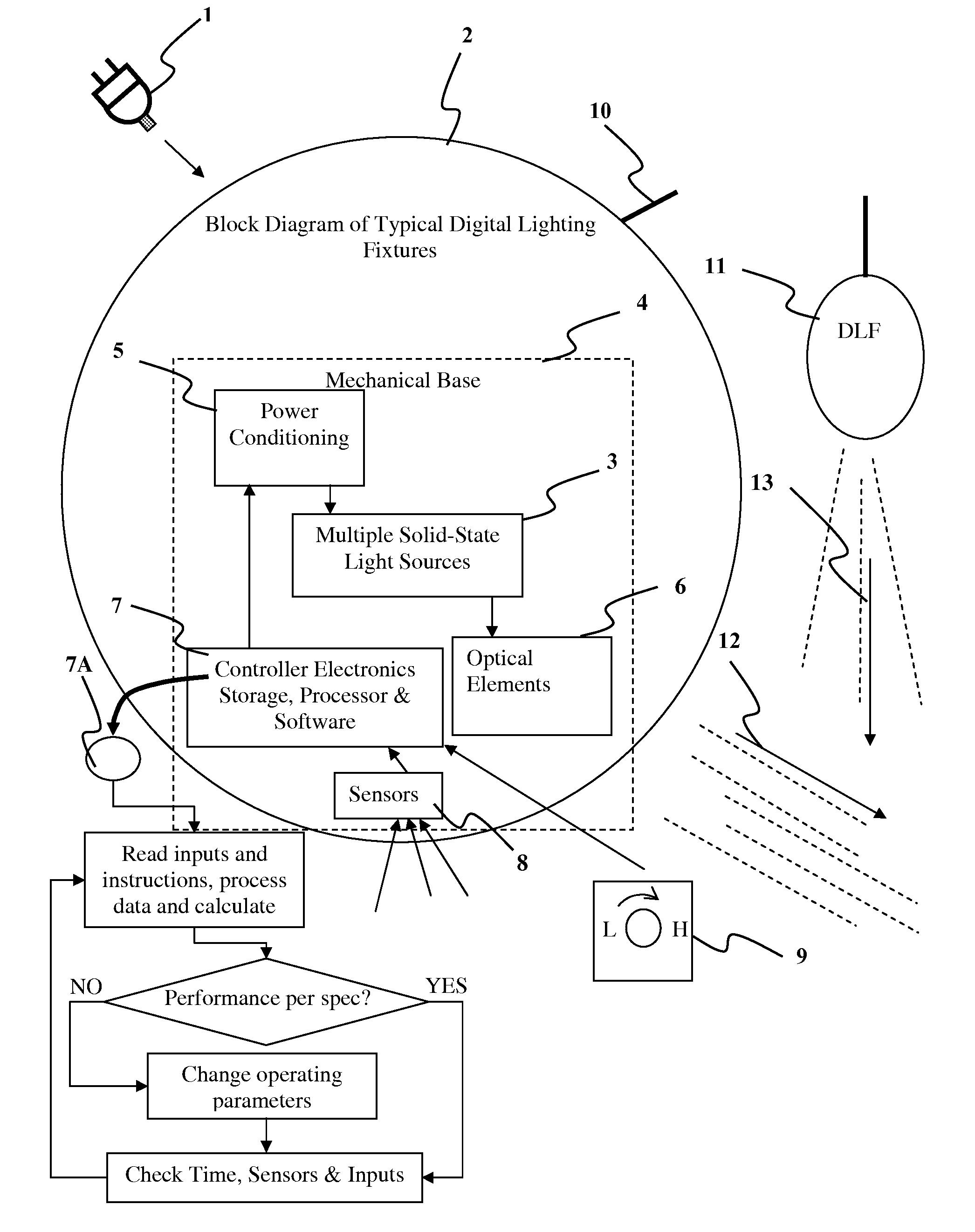

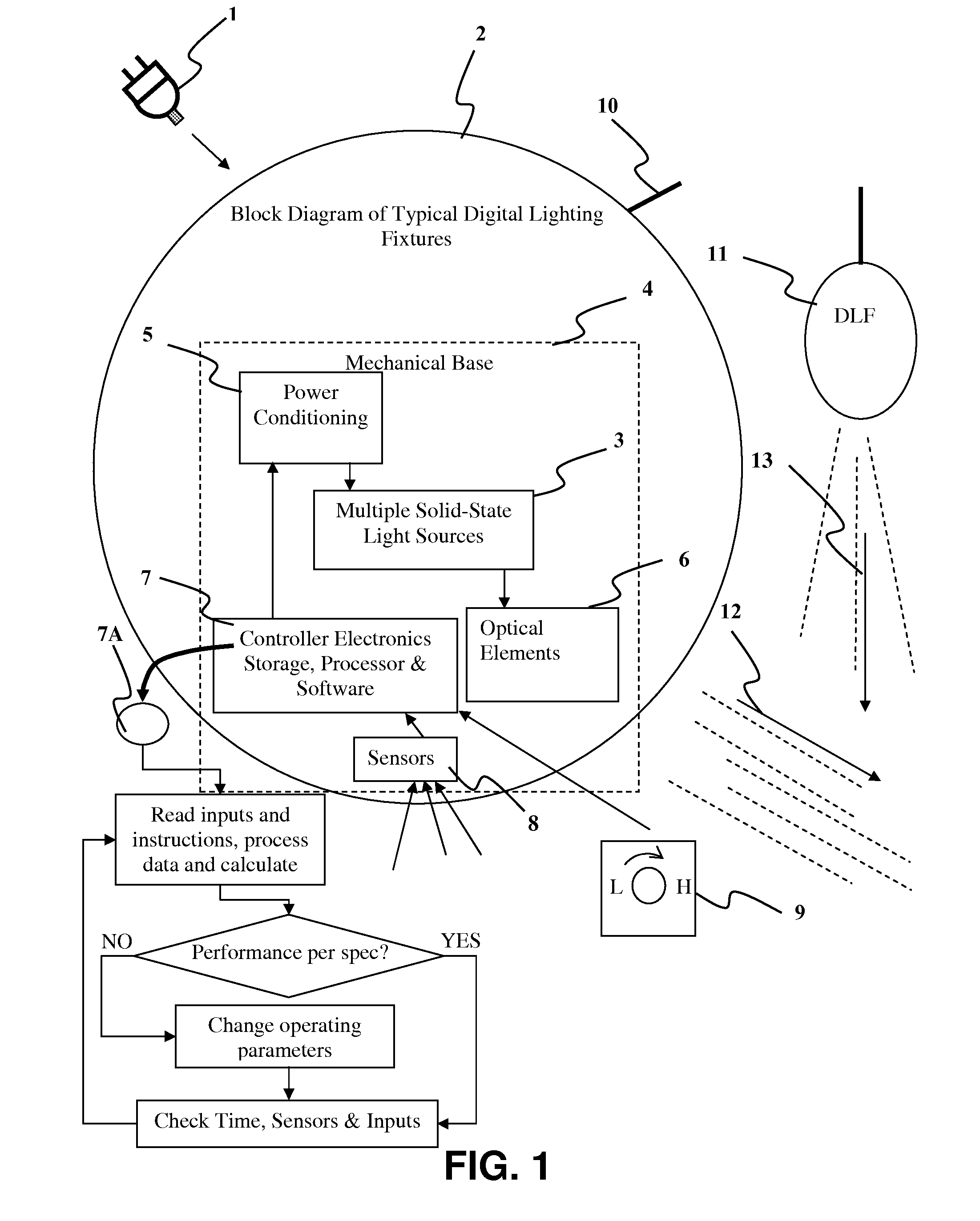

Detector Controlled Illuminating System

Active | US20120206050A1 | Correctly illuminate | Reduce electricity costs | Mechanical apparatus | Light source combinations | User needs | Cost effectiveness

An illuminating device coupled with sensors or an image acquisition device and a logical controller allows illumination intensity and spectrum to be varied according to changing user needs. The system provides illumination to areas according to the principles of correct lighting practice for the optimal performance of visual tasks in the most efficient, cost effective manner. Aspects of the invention include: lighting fixtures which adapt to ambient lighting, movement, visual tasks being performed, and environmental and personal conditions affecting illumination requirements at any given instant. Lighting fixtures having spatial distribution of spectrum and intensity, providing both “background” room lighting, and “task” lighting.

Owner:YECHEZKAL EVAN SPERO

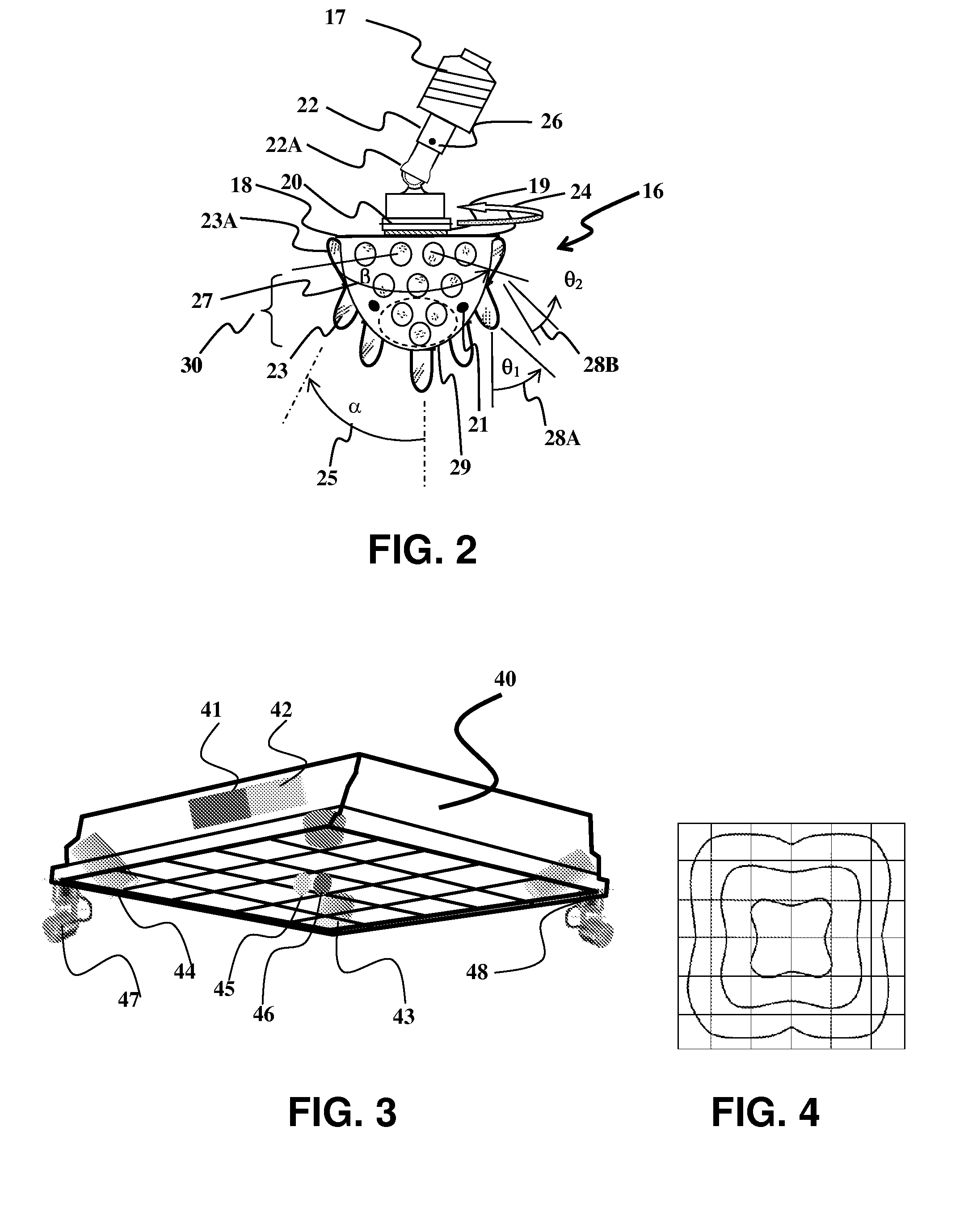

Detector controlled illuminating system

Inactive | US20150035440A1 | Save energy | Improve visual effects | Mechanical apparatus | Light source combinations | User needs | Cost effectiveness

Owner:SPERO YECHEZKAL EVAN

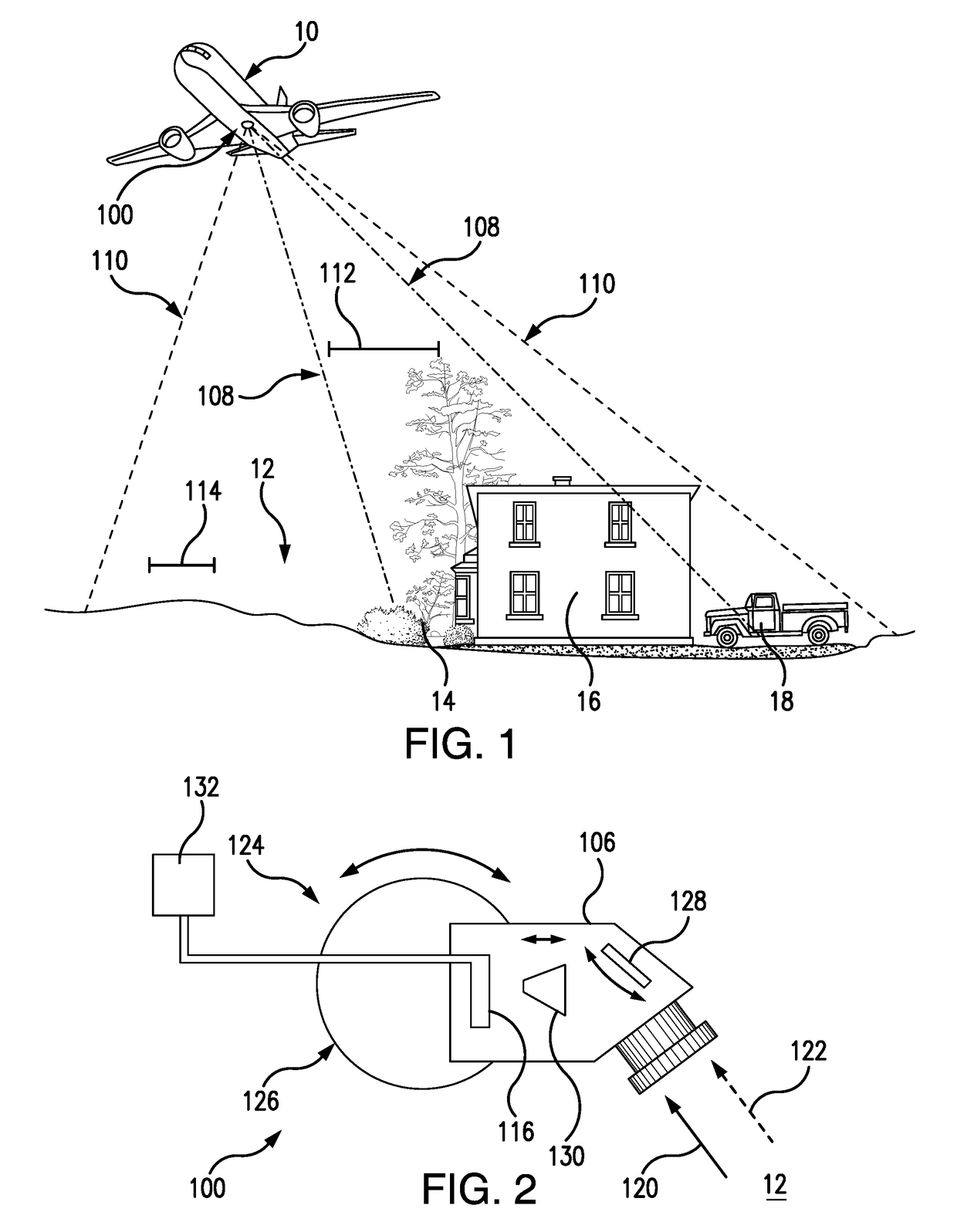

Active visual attention models for computer vision tasks

An imaging method includes obtaining an image with a first field of view and a first effective resolution and then analyzing the image with a visual attention algorithm to identify one or more areas of interest in the first field of view. A subsequent image is then obtained for each area of interest with a second field of view and a second effective resolution, the second field of view being smaller than the first field of view and the second effective resolution being greater than the first effective resolution.

Owner:THE BF GOODRICH CO

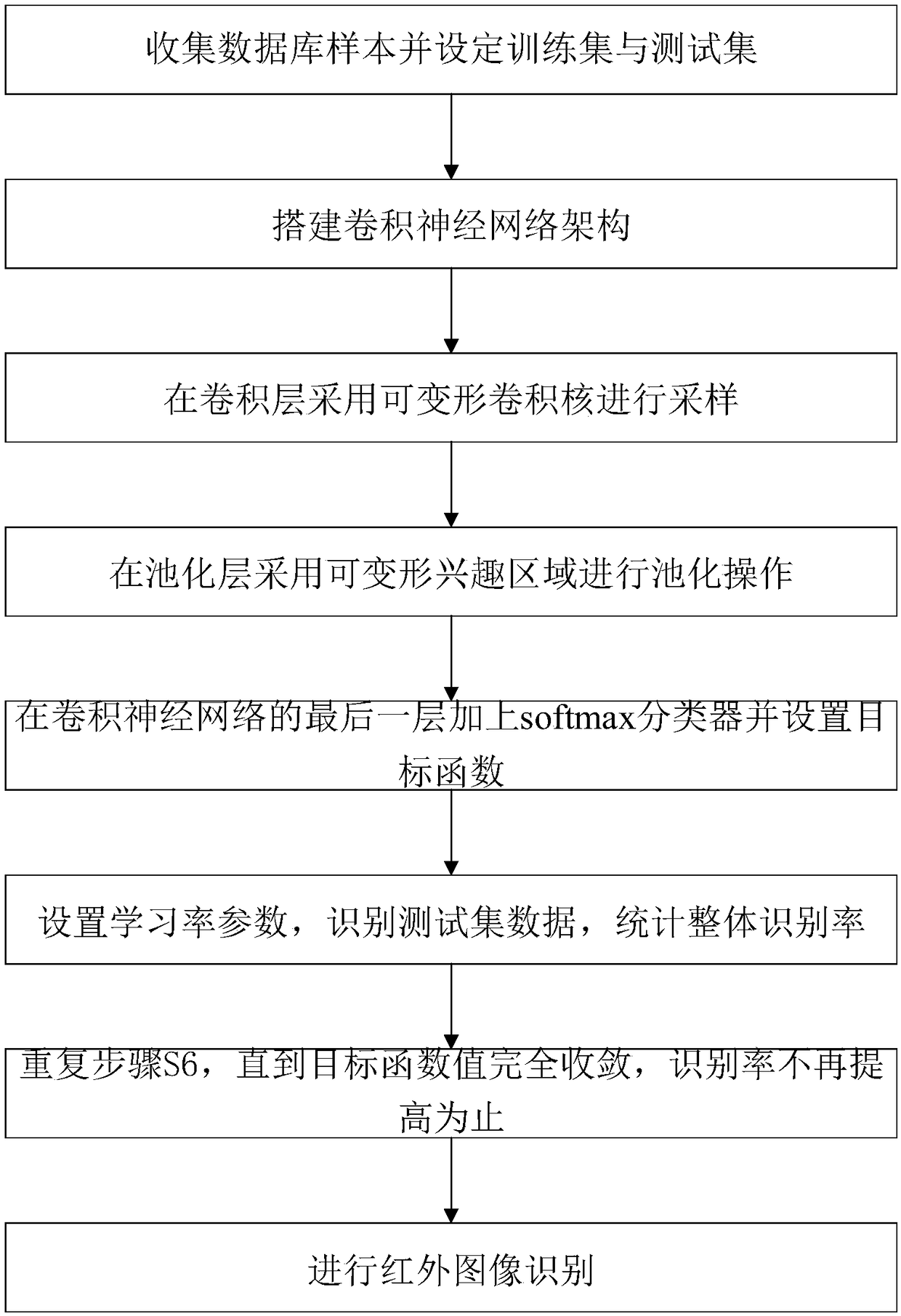

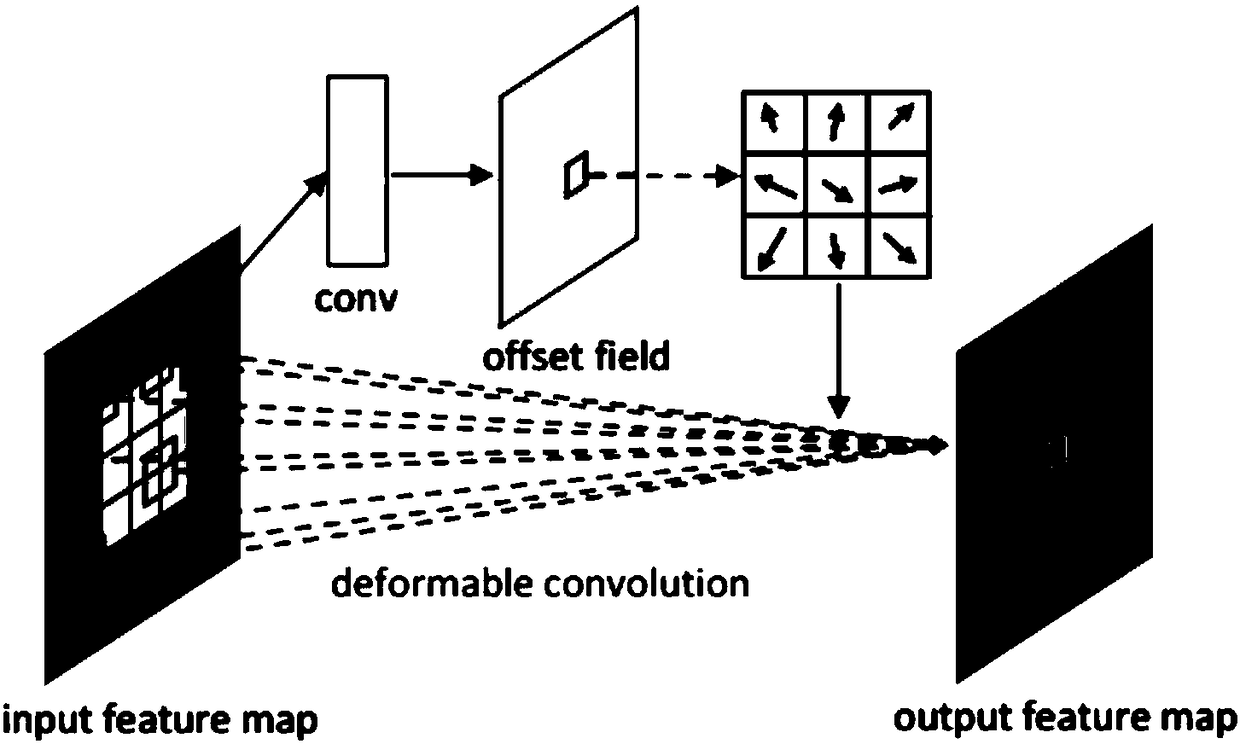

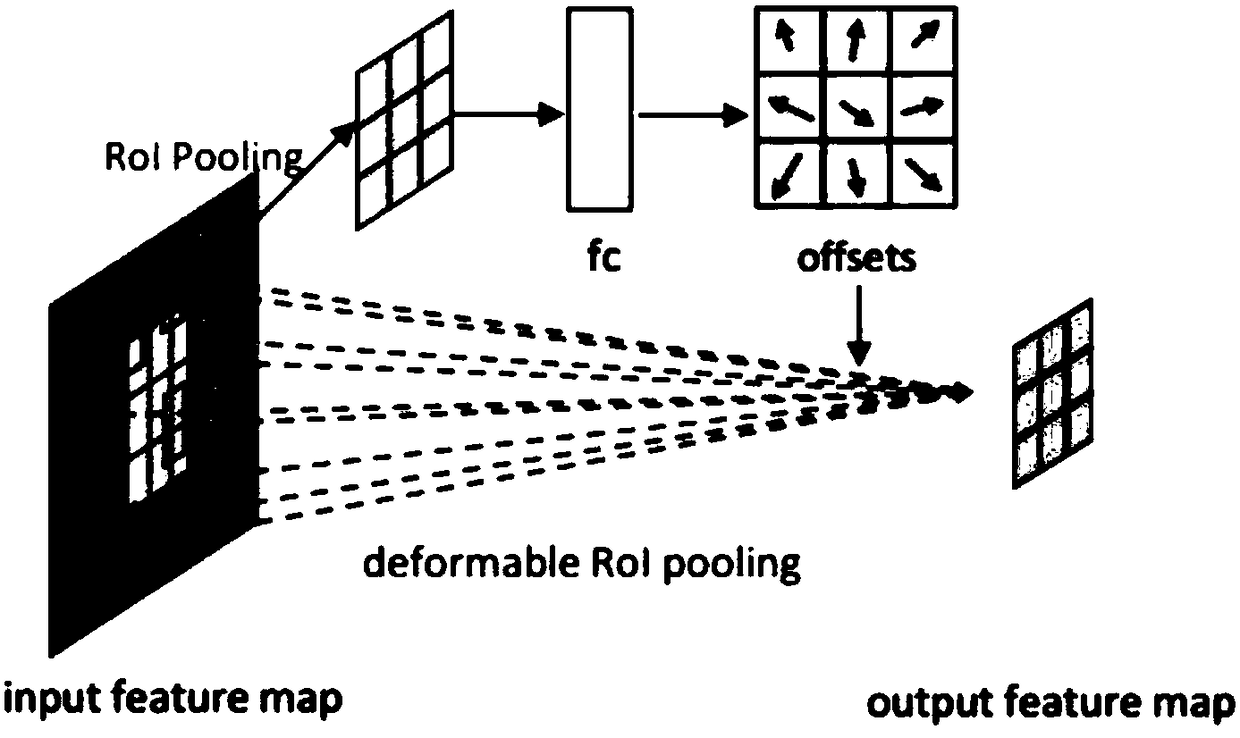

Deformable convolutional neural network-based infrared image object identification method

Inactive | CN108564025A | Easy to identify | Optimal pooling template value | Character and pattern recognition | Neural architectures | Nonlinear deformation | Rate parameter

The invention discloses a deformable convolutional neural network-based infrared image object identification method. The method comprises the steps of constructing a training set and a test set; establishing a convolutional neural network architecture; adding a softmax classifier to the last layer, and setting an objective function; performing sampling by adopting a convolution kernel of linear or nonlinear deformation; performing the pooling operation in a pooling layer by adopting a rule block sampling-based ROI pooling method which is the best in the industry at present; setting learning rate parameters according to experience; and performing standard back propagation end-to-end training, thereby obtaining a deformable convolutional neural network. An experiment shows that introducing the spatial geometric deformation learning capability into the convolutional neural network allows an identification task on an image with spatial deformation to be completed better; the geometric transformation modeling capability of the convolutional neural network and the effectiveness of target detection and visual task identification are improved; and dense geometric deformation in space is successfully learnt.

Owner:GUANGDONG POWER GRID CO LTD +1

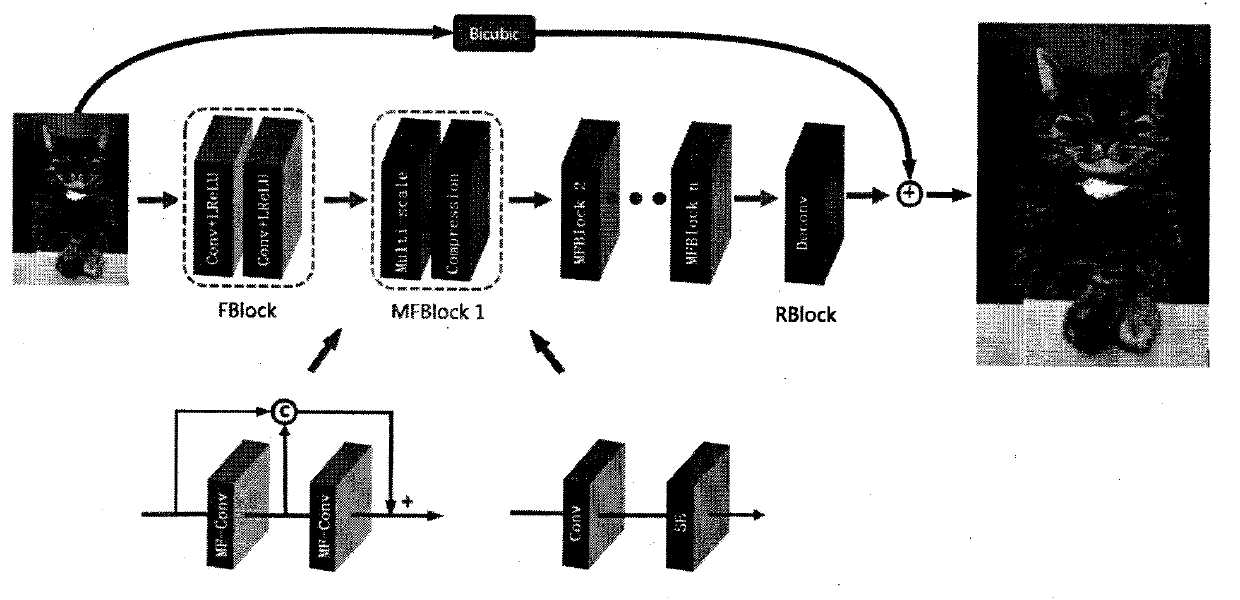

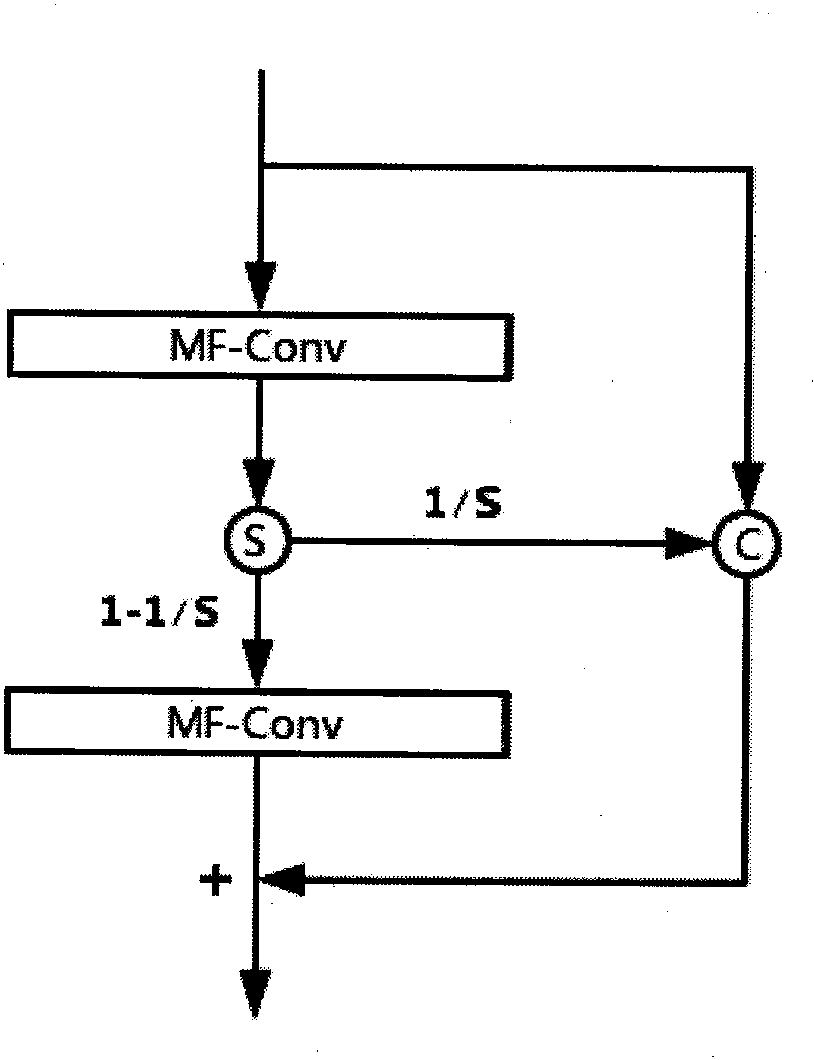

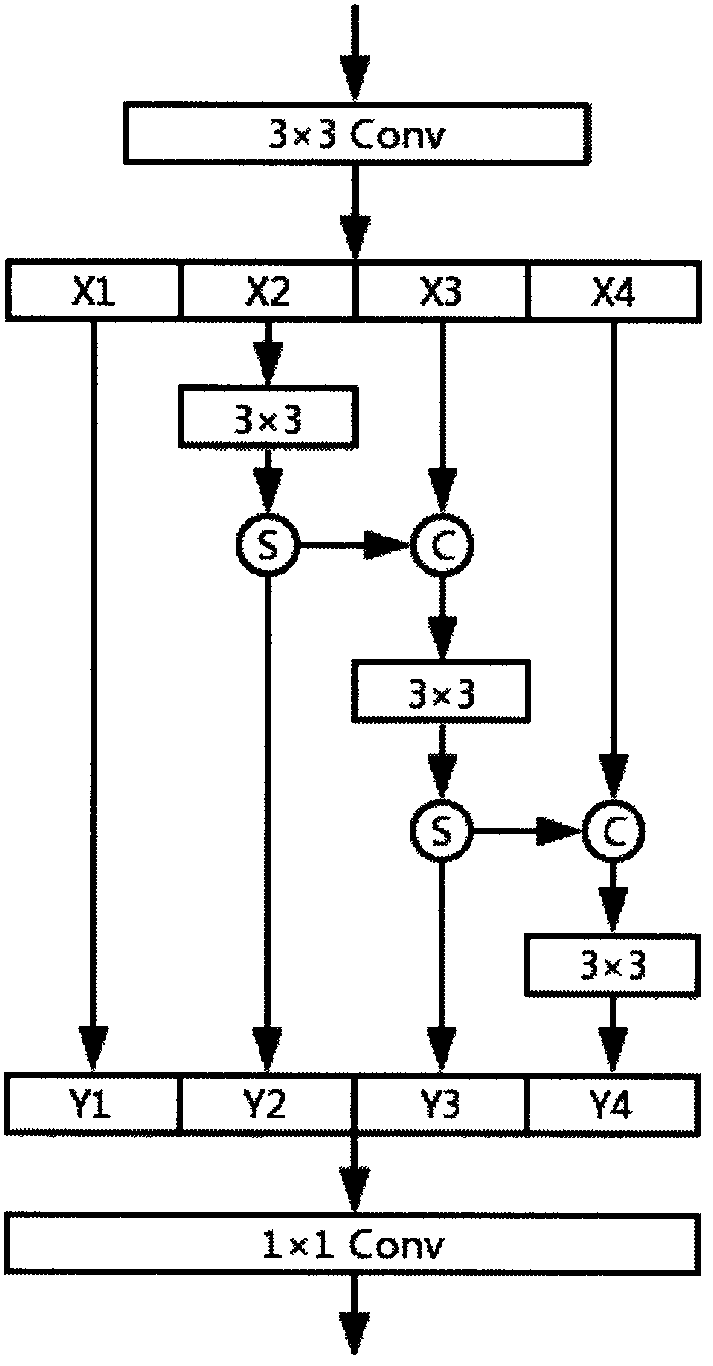

Single-image super-resolution reconstruction algorithm based on multi-scale residual error learning network

The invention relates to a single-image super-resolution reconstruction algorithm based on a multi-scale residual error learning network. In recent years, the convolutional neural network has been widely applied to many visual tasks, and particularly remarkable results have been obtained in the field of single-image super-resolution reconstruction. Similarly, multi-scale feature extraction also achieves consistent performance improvement in the field. However, in the prior art, multi-scale features are mostly extracted in a layered mode, and as the depth and width of a network increase, the computational complexity and memory consumption increase greatly. In order to solve the problem, the invention provides a compact multi-scale residual error learning network, i.e., one that represents multi-scale characteristics within a residual error block. The model is composed of a feature extraction block, a multi-scale information block and a reconstruction block. In addition, because the number of network layers is small and group convolution is used, the network has the advantage of high execution speed. Experimental results show that the method is superior to an existing method in time and performance.

Owner:TIANJIN POLYTECHNIC UNIV

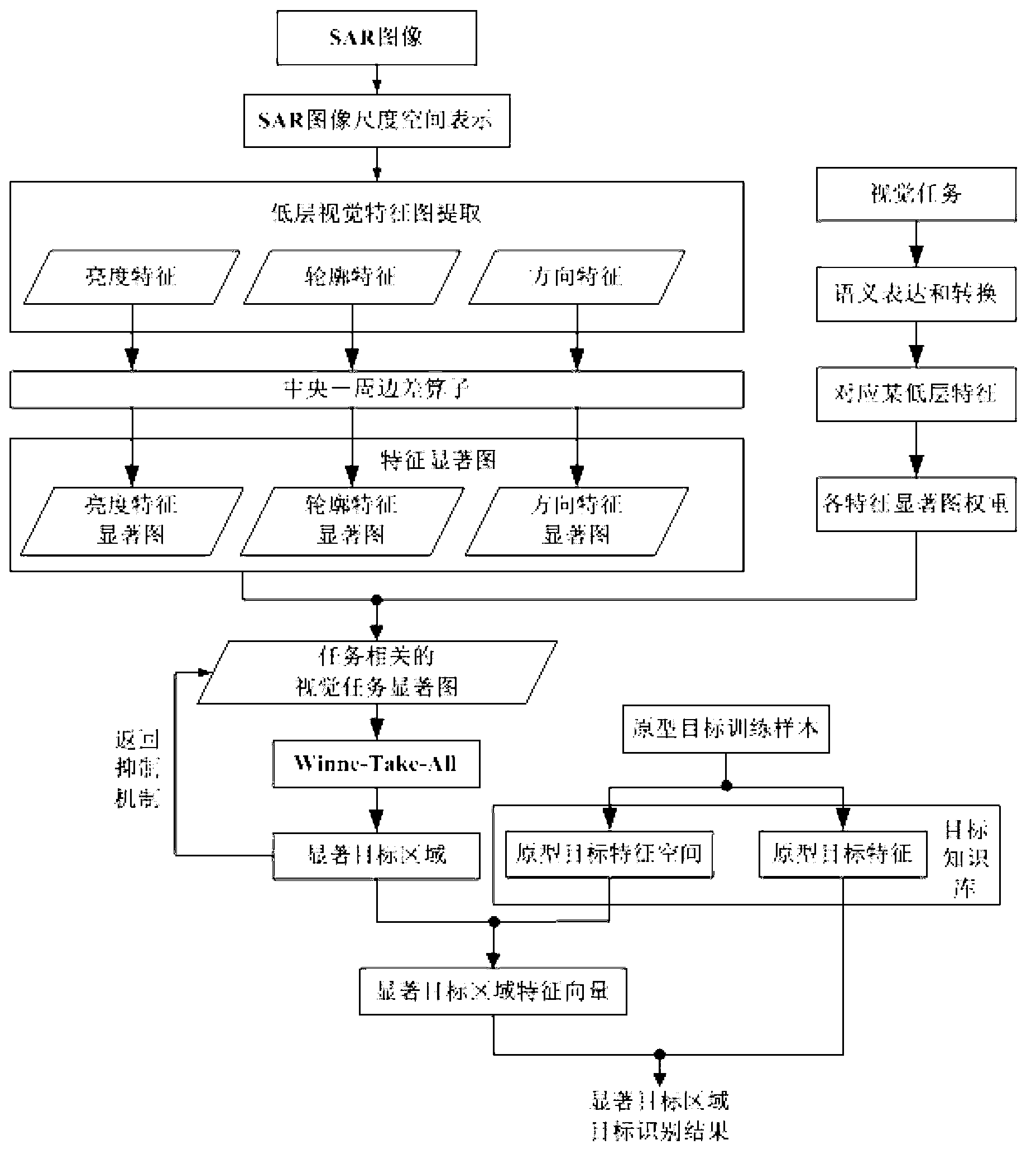

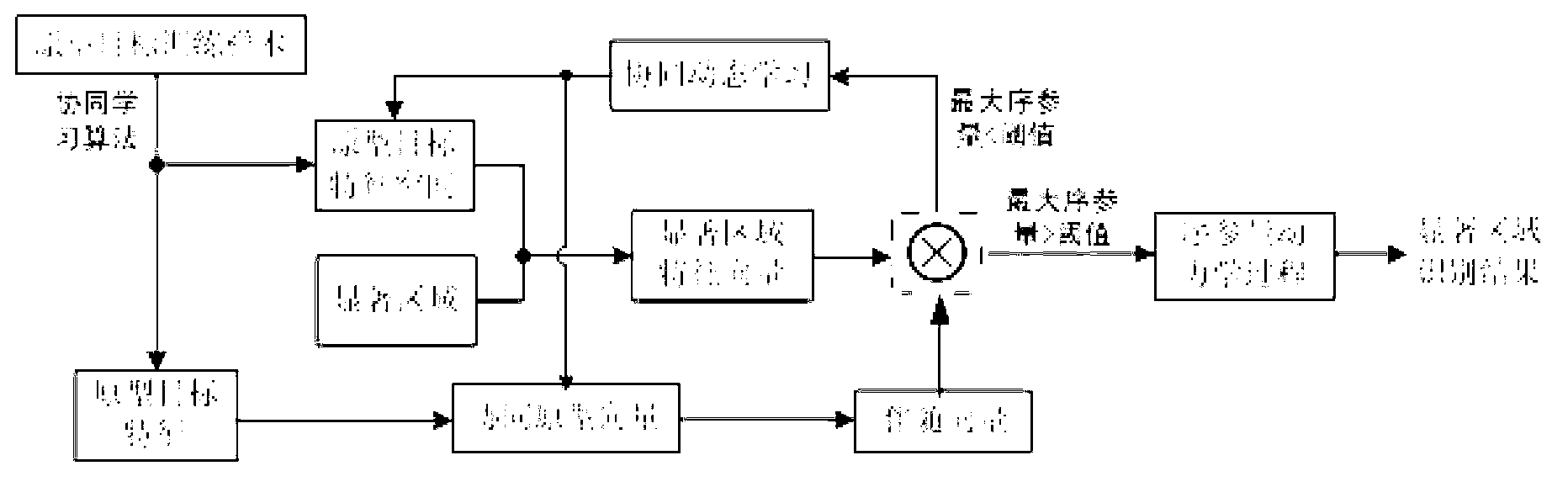

Method for recognizing collaborative target in SAR (Synthetic Aperture Radar) image based on visual attention mechanism

Inactive | CN103065136A | Ability to learn online | Quick selection | Character and pattern recognition | Pattern recognition | Synthetic aperture radar

The invention provides a method for recognizing a collaborative target in an SAR (Synthetic Aperture Radar) image based on a visual attention mechanism. The method comprises the following steps of: establishing the scale spatial presentation of an SAR image; extracting a low-layer visual feature map, carrying out semantic translation on a visual task, translating the visual task, decomposing the visual feature map, calculating the weight of each visual feature map, and generating a visual task saliency map relevant to the visual task; selecting a salient target region in the SAR image by utilizing the visual task saliency map to realize the separation of a foreground and a background of the target region in the SAR image; learning samples of various known prototype targets through a collaborative learning algorithm to obtain features and feature spaces of the known prototype targets and generate a known prototype target knowledge base; and recognizing collaboration of targets in the salient target region: recognizing the targets in the salient target region of the selected SAR image based on the known prototype target knowledge base by utilizing a collaboration pattern recognition order parameter dynamic iteration process.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

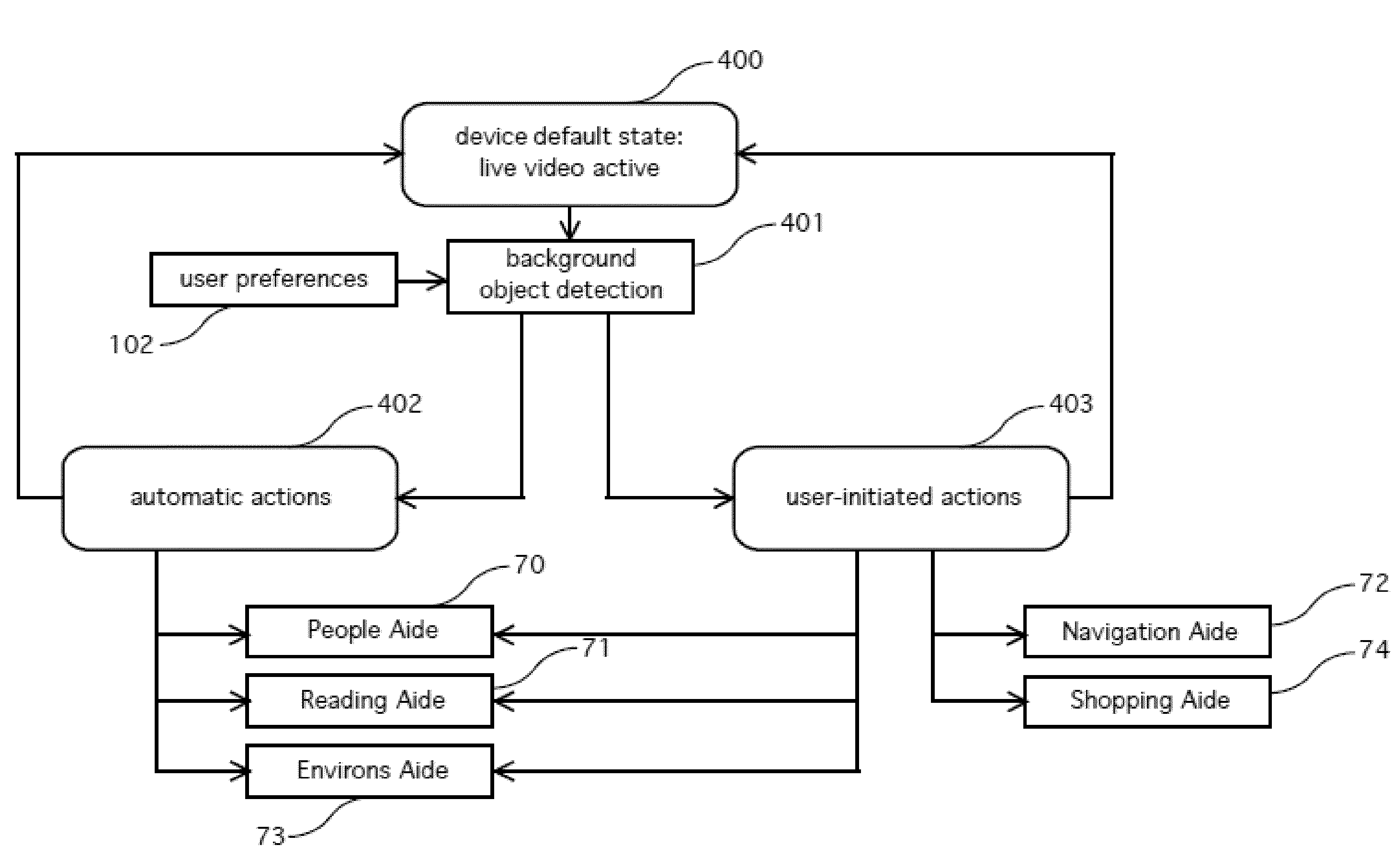

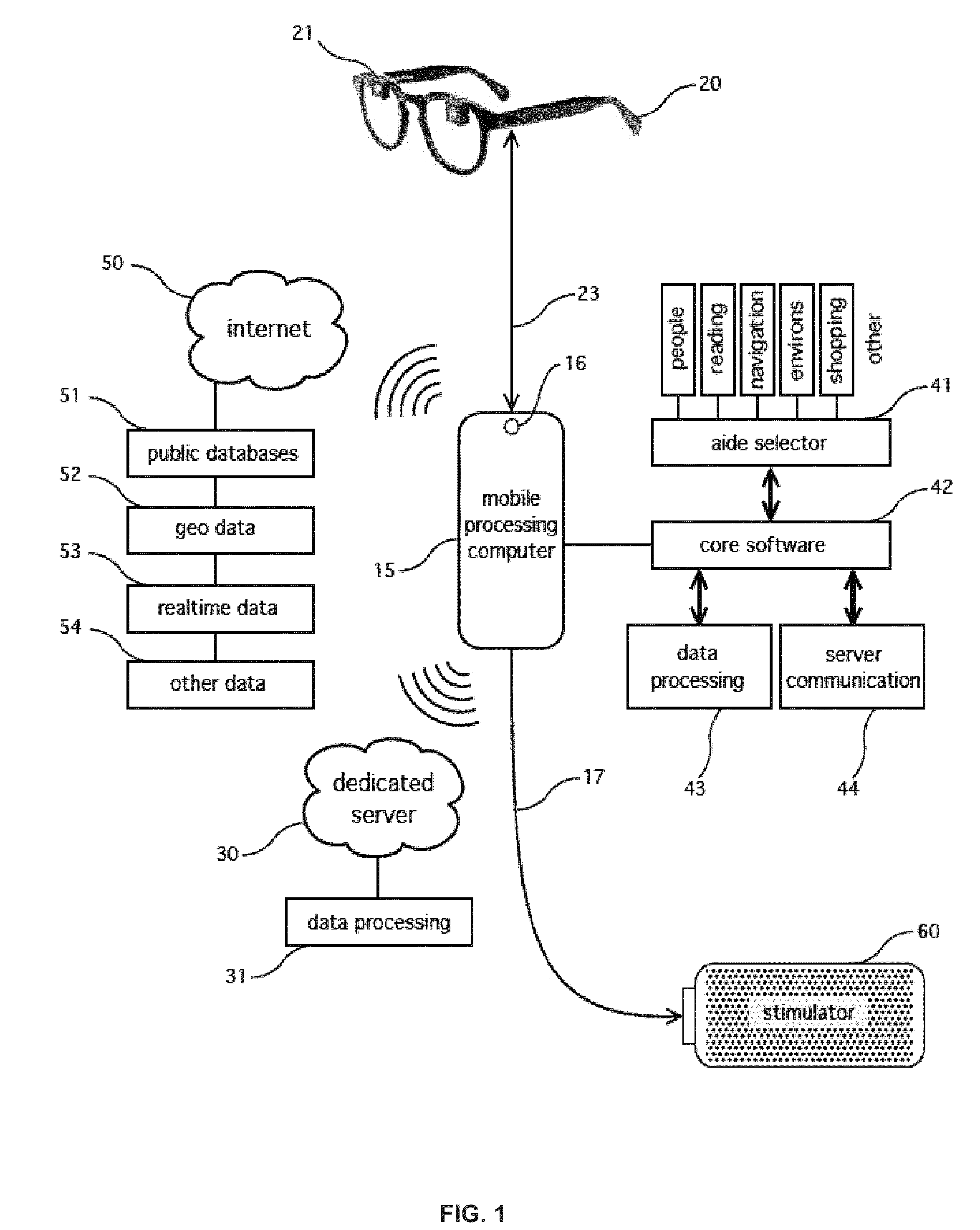

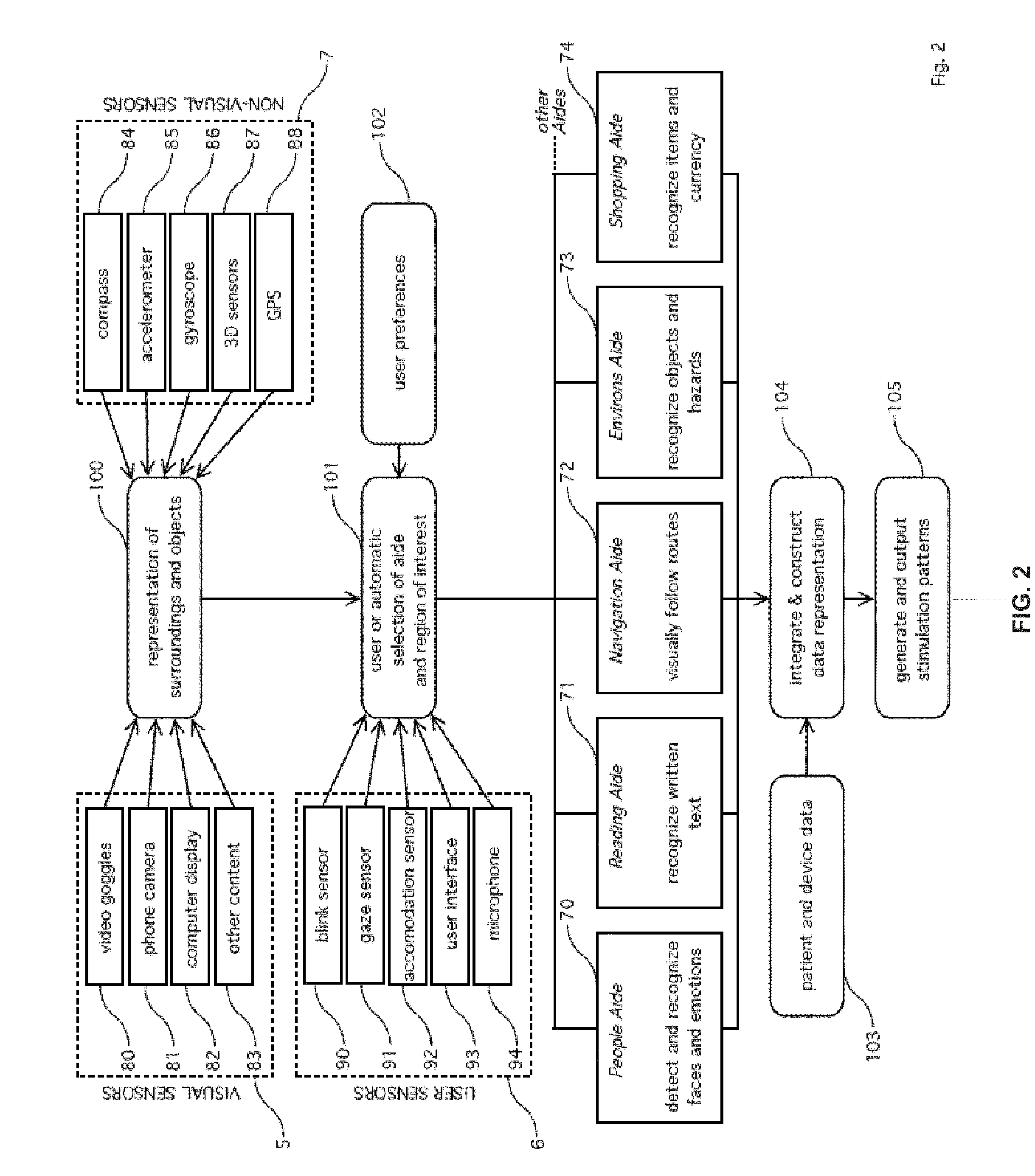

Smart prosthesis for facilitating artificial vision using scene abstraction

A method of providing artificial vision to a visually-impaired user implanted with a visual prosthesis. The method includes configuring, in response to selection information received from the user, a smart prosthesis to perform at least one function of a plurality of functions in order to facilitate performance of a visual task. The method further includes extracting, from an input image signal generated in response to optical input representative of a scene, item information relating to at least one item within the scene relevant to the visual task. The smart prosthesis then generates image data corresponding to an abstract representation of the scene wherein the abstract representation includes a representation of the at least one item. Pixel information based upon the image data is then provided to the visual prosthesis.

Owner:PIXIUM VISION SA

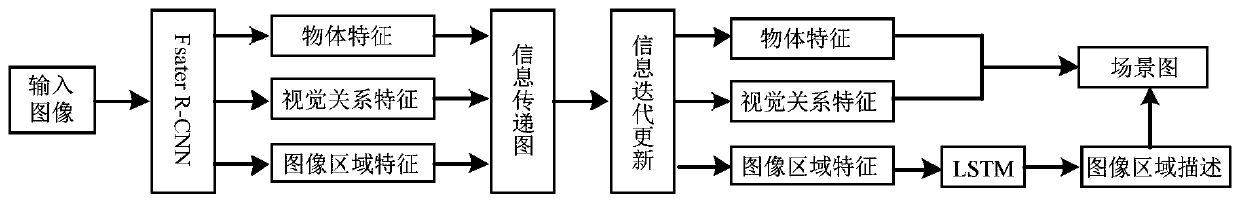

Scene map generation method

Active | CN111462282A | Improve accuracy | Generate help | Neural architectures | Neural learning methods | Semantic feature | Visual perception

The invention discloses a scene graph generation method in which three different levels of semantic tasks (object detection, visual relationship detection and image region description) are mutually connected, and the visual tasks of different semantic levels of scene understanding are jointly solved in an end-to-end mode. Firstly, an object, a visual relationship and an image region description are aligned with a feature information transfer graph according to the spatial features and semantic connections of the object, the visual relationship and the image region description, and then feature information is transferred to the three different levels of semantic tasks through the feature information transfer graph so as to achieve simultaneous iterative updating of semantic features. According to the method, object detection and visual relationship detection are realized by utilizing semantic feature connections of different levels of scene images so as to generate scene graphs corresponding to the scene images, and the main region of the scene image is described in natural language. Meanwhile, the image region description is used as a supervision method for scene graph generation so as to improve the accuracy of scene graph generation.

Owner:HARBIN ENG UNIV

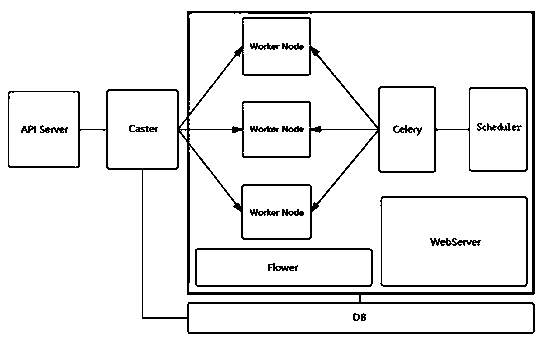

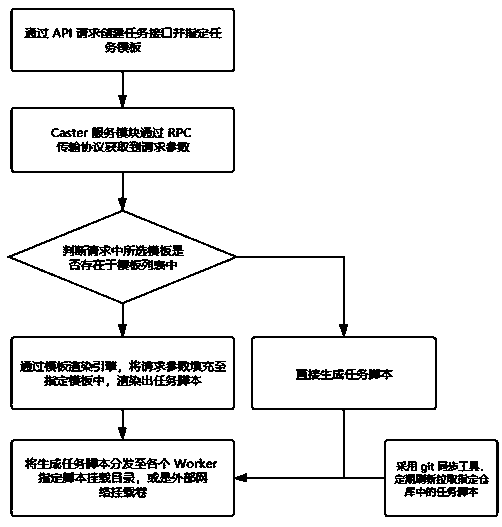

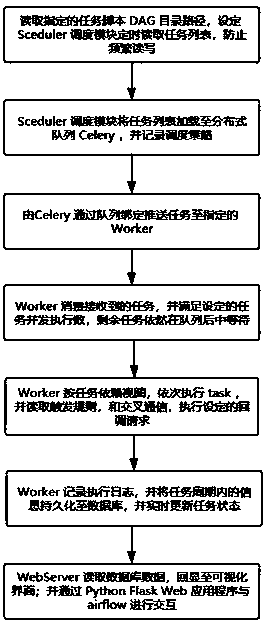

Airflow-based distributed asynchronous task construction and scheduling system and method

Active | CN111506412A | Realize rational utilization | Simplify Horizontal Scaling | Program initiation/switching | Resource allocation | Fault tolerance | Execution unit

The invention discloses a distributed asynchronous task scheduling system based on Airflow and a working method of the distributed asynchronous task scheduling system. The system comprises an interface calling module API Server, a task construction and distribution module Caster, an Airflow scheduling platform and a data storage module DB. The interface calling module is used for calling an API Server; the task construction and distribution module Caster is used for rendering a task script; the Airflow scheduling platform comprises a task scheduling module Scheduler, a task execution unit Worker Node, a task execution unit management module Flower, a distributed task queue Cell and a visual task scheduling management interface WebServer; and the data storage module DB is connected with the task construction and distribution module Caster and the Airflow scheduling platform and is used for storing data logs generated in the operation process of the task construction and distribution module Caster and the Airflow scheduling platform. According to the invention, the availability, flexibility and fault tolerance of the system can be effectively improved, and the load balance of the system is ensured.

Owner:SHANGHAI DATATOM INFORMATION TECH CO LTD

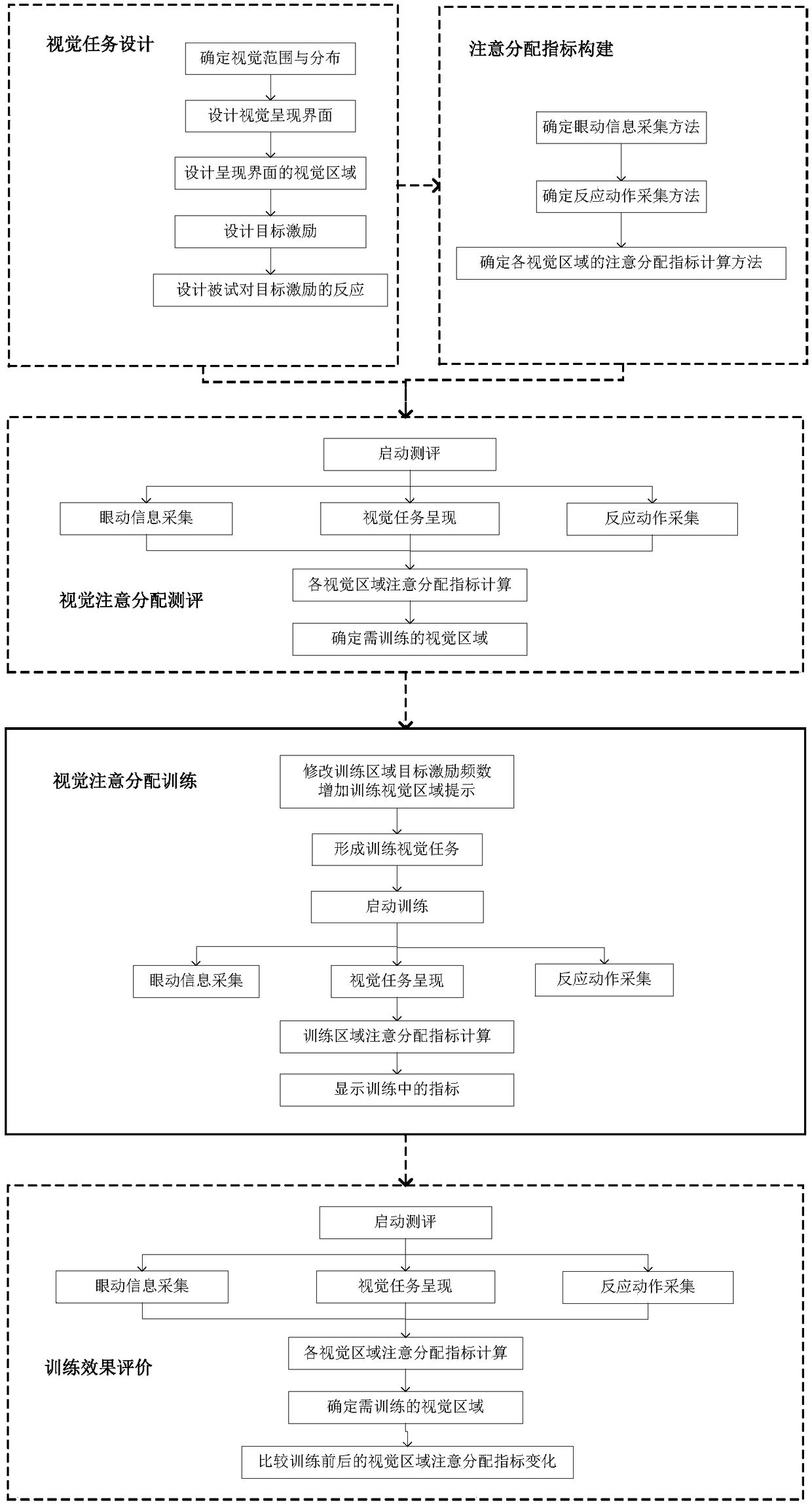

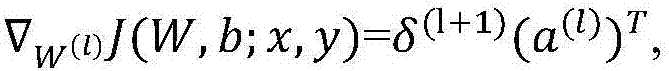

Visual attention allocation ability evaluating and training method

Active | CN108309327A | Increase credibility | Improve workability | Sensors | Psychotechnic devices | Computer science | Visual perception

The invention relates to a visual attention allocation ability evaluating and training method. The method includes the steps of setting a visual task according to the requirement of visual attention allocation needed by operations, constructing an attention allocation index according to a visual task mode, evaluating the visual attention index for a testee according to the settings in the last steps, conducting visual attention training on the testee, enhancing the attention allocation ability on visual areas with serial numbers of I1 and I2, and evaluating the visual attention allocation training effect for the testee according to the last steps. The visual attention allocation ability index can be quantitatively evaluated and trained, and the actual requirements for selecting and training complicated task operators are met.

Owner:PLA NAVY GENERAL HOSPITAL

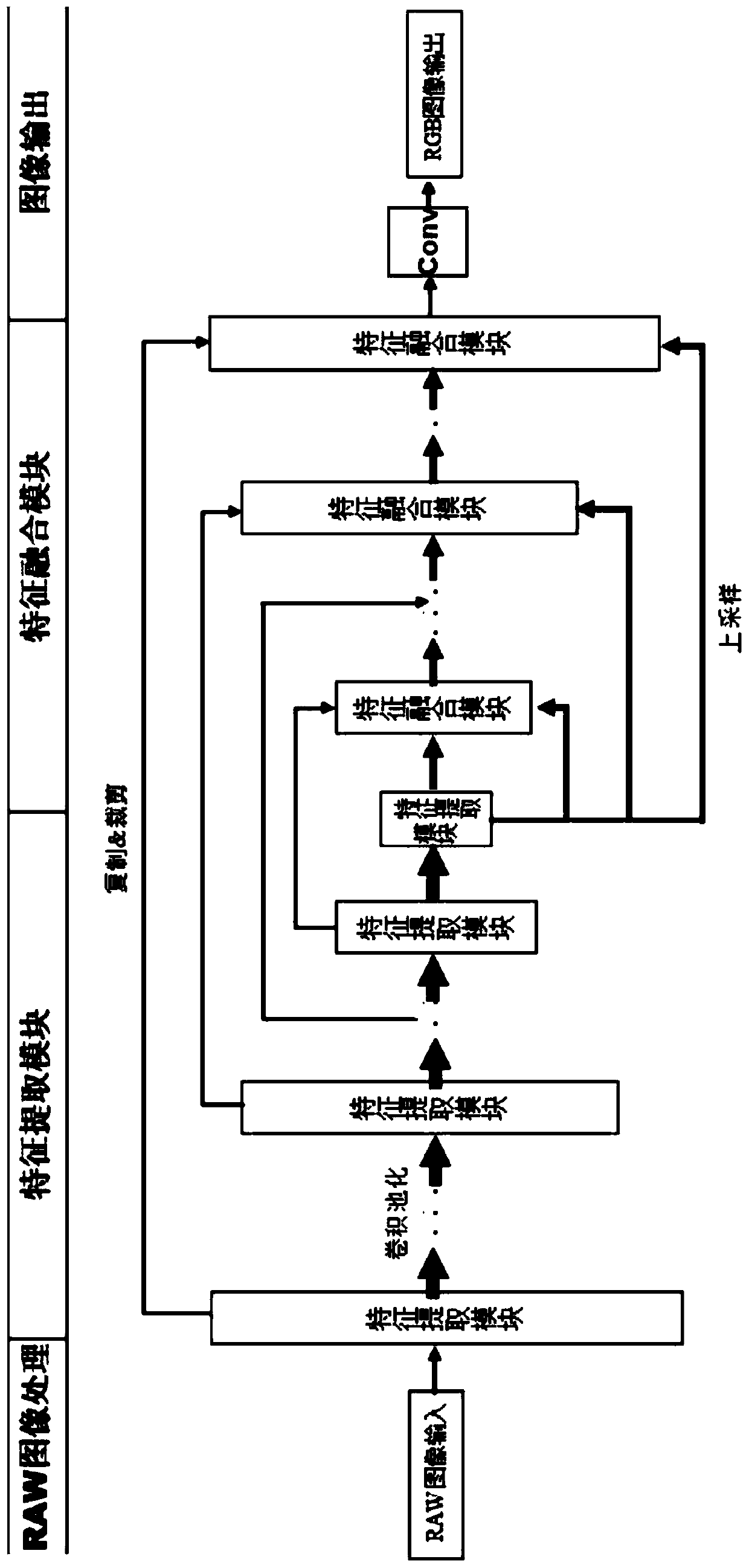

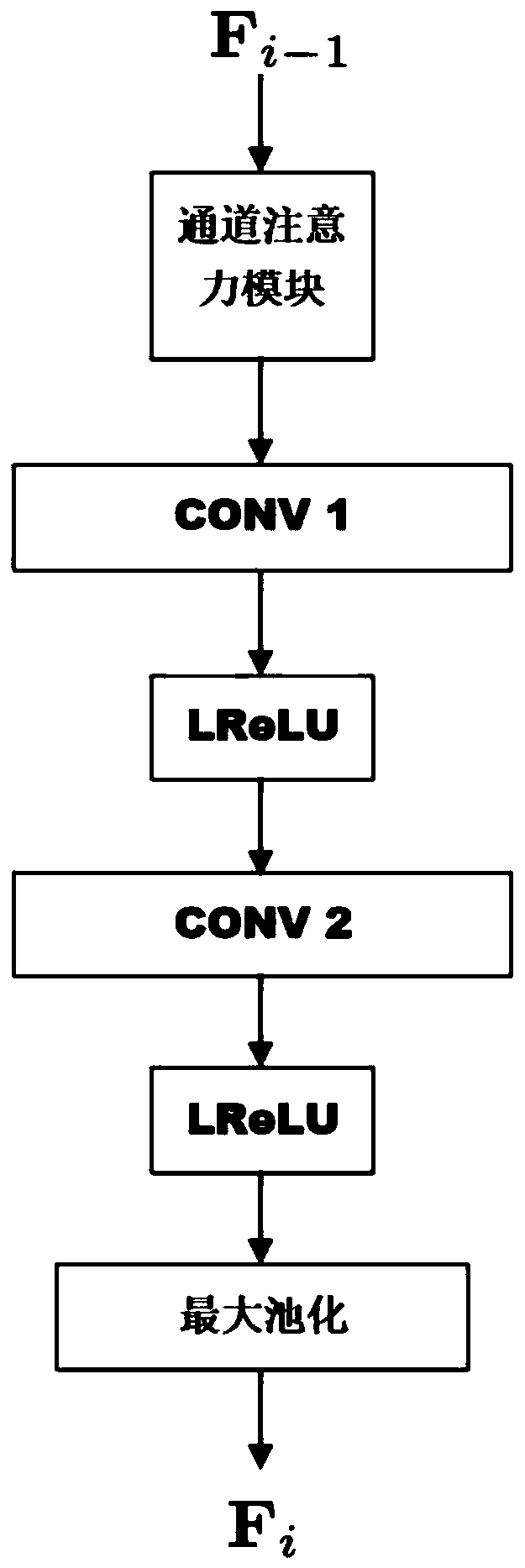

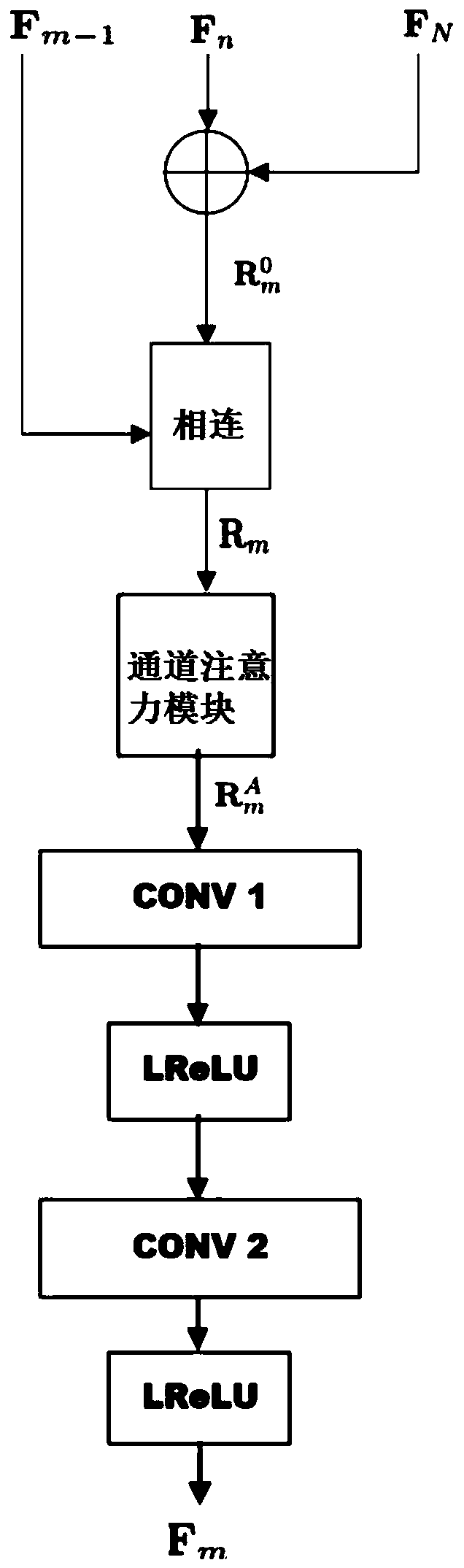

Low-illumination image enhancement method based on attention mechanism and multi-level feature fusion

Inactive | CN110210608A | Improve accuracy | Quality improvement | Character and pattern recognition | Neural architectures | Illuminance | Imaging processing

The invention relates to a low-illumination image enhancement method based on an attention mechanism and multi-level feature fusion, and the method comprises the following steps: carrying out the processing of a low-illumination image at an input end, and outputting a four-channel feature map; using a convolutional layer based on an attention mechanism as a feature extraction module and for extracting basic features as low-level features; fusing the low-level features with the corresponding high-level features and the deepest feature of the convolutional layer, and obtaining a final feature map after deconvolution; and outputting the mapping to restore the final feature map into the RGB picture. According to the invention, multi-level features of the deep convolutional neural network model are fully utilized. Different levels of features are fused, different weights are given to a feature channel through a channel attention mechanism, better feature representation is obtained, the image processing accuracy is improved, a high-quality image is obtained, and the method can be widely applied to the technical field of computer low-level visual tasks.

Owner:ACADEMY OF BROADCASTING SCI STATE ADMINISTRATION OF PRESS PUBLICATION RADIO FILM & TELEVISION +1

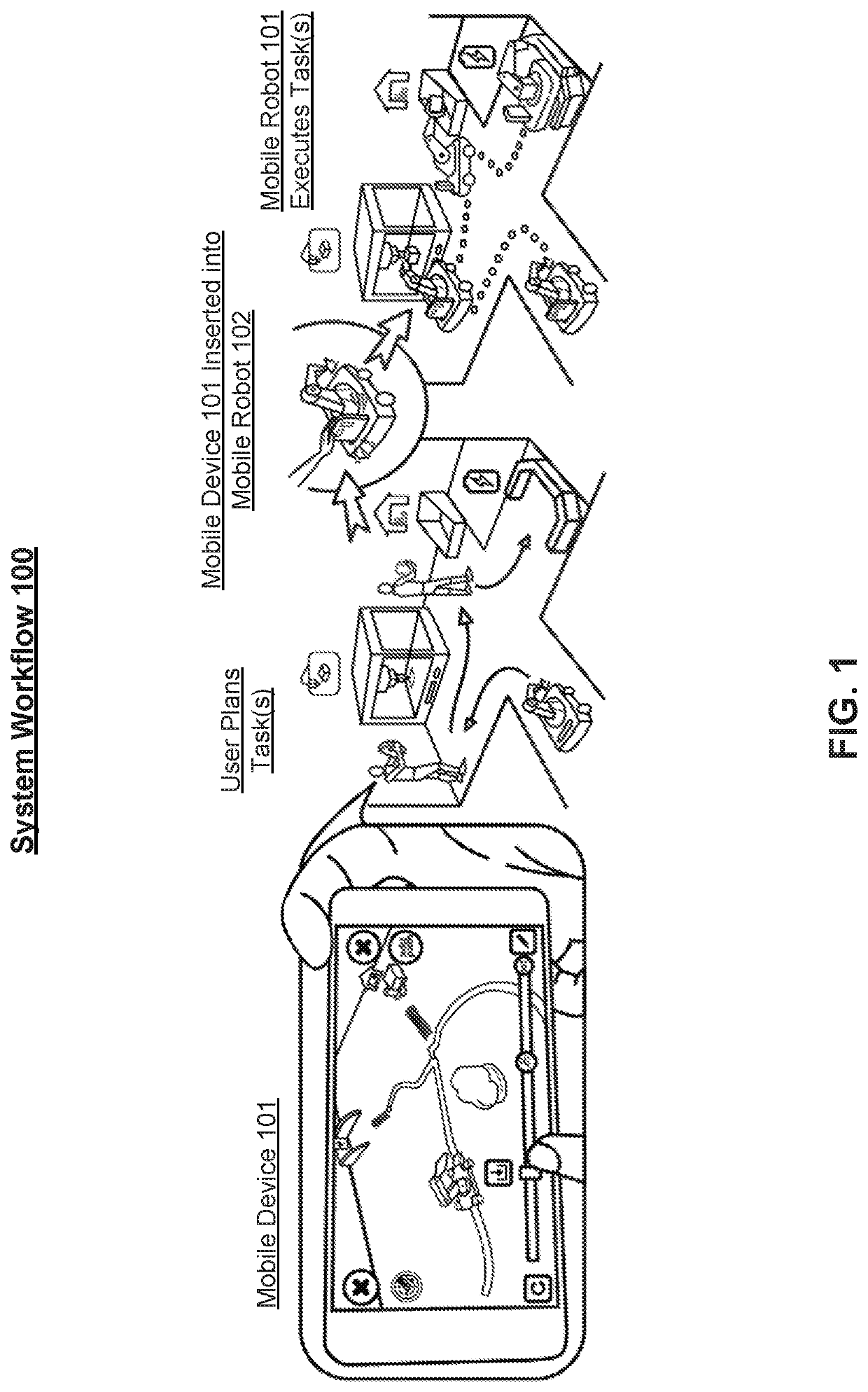

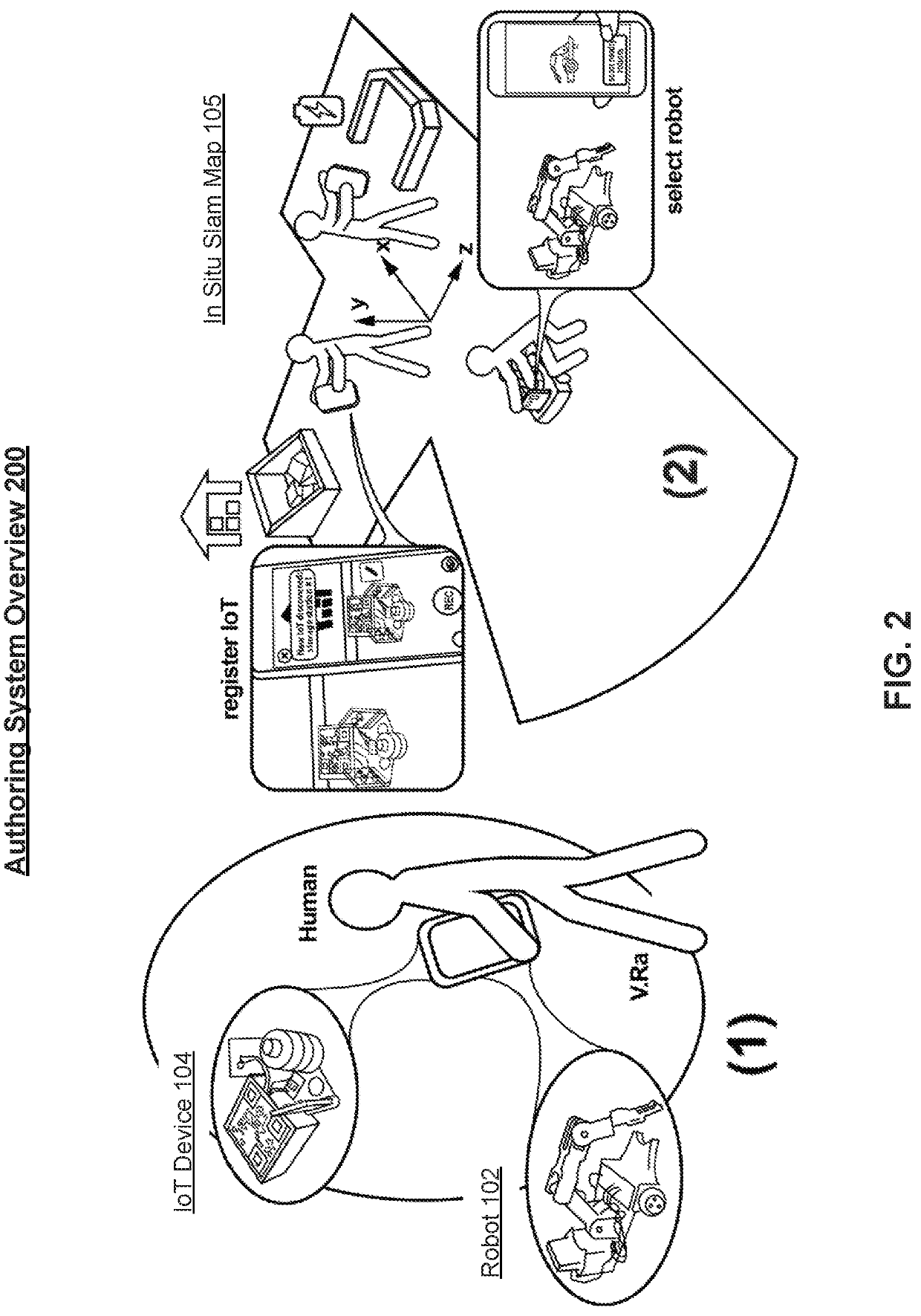

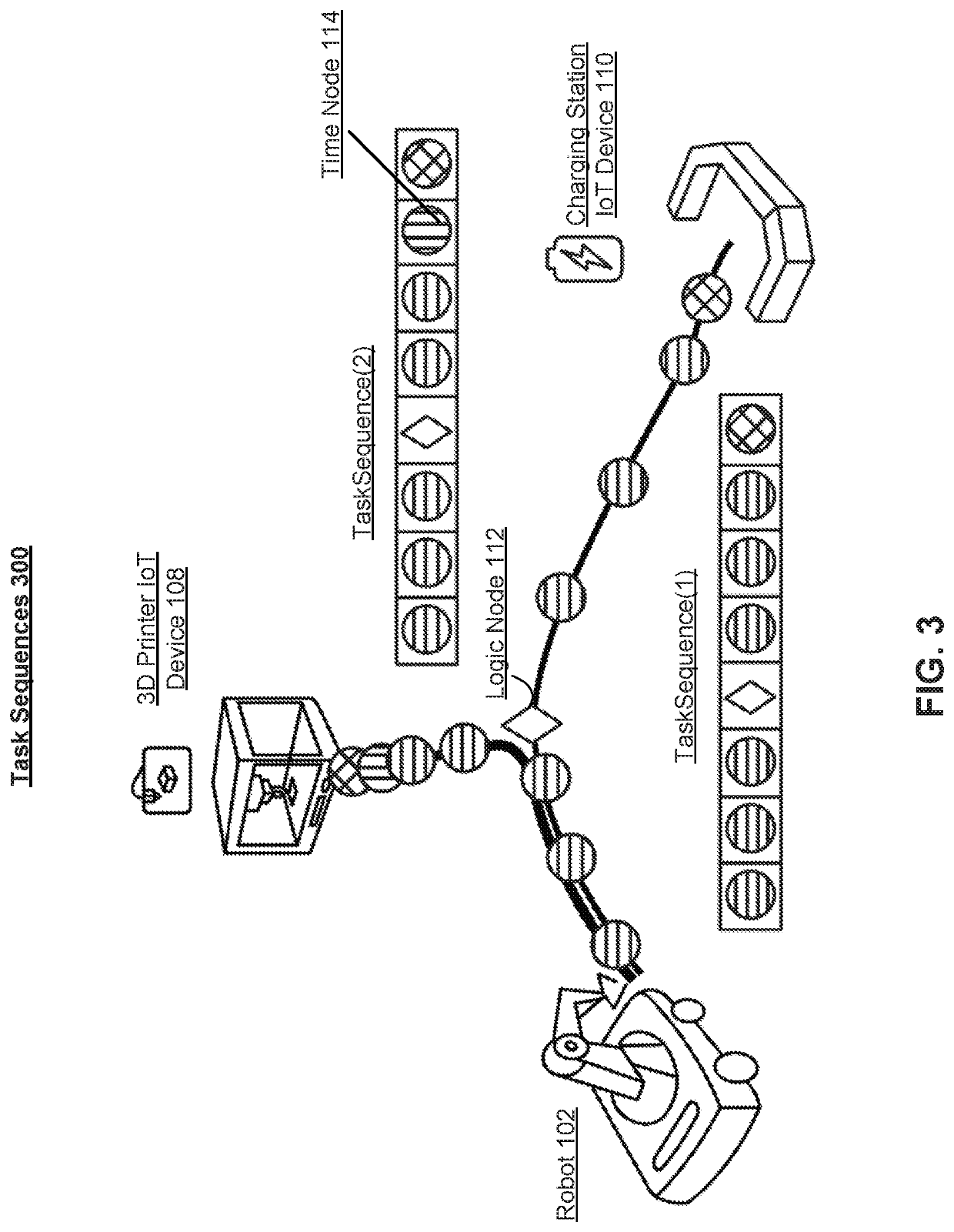

Robot navigation and robot-iot interactive task planning using augmented reality

Disclosed is a visual and spatial programming system for robot navigation and robot-IoT task authoring. Programmable mobile robots serve as binding agents to link stationary IoT devices and perform collaborative tasks. Three key elements of robot task planning (human-robot-IoT) are coherently connected with one single smartphone device. Using an augmented reality interface, users can perform visual task authoring in a manner analogous to the real tasks that they would like the robot to perform. The mobile device mediates interactions between the user, robot(s), and IoT device-oriented tasks, guiding the path planning execution with Simultaneous Localization and Mapping (SLAM) to enable robust room-scale navigation and interactive task authoring.

Owner:PURDUE RES FOUND INC

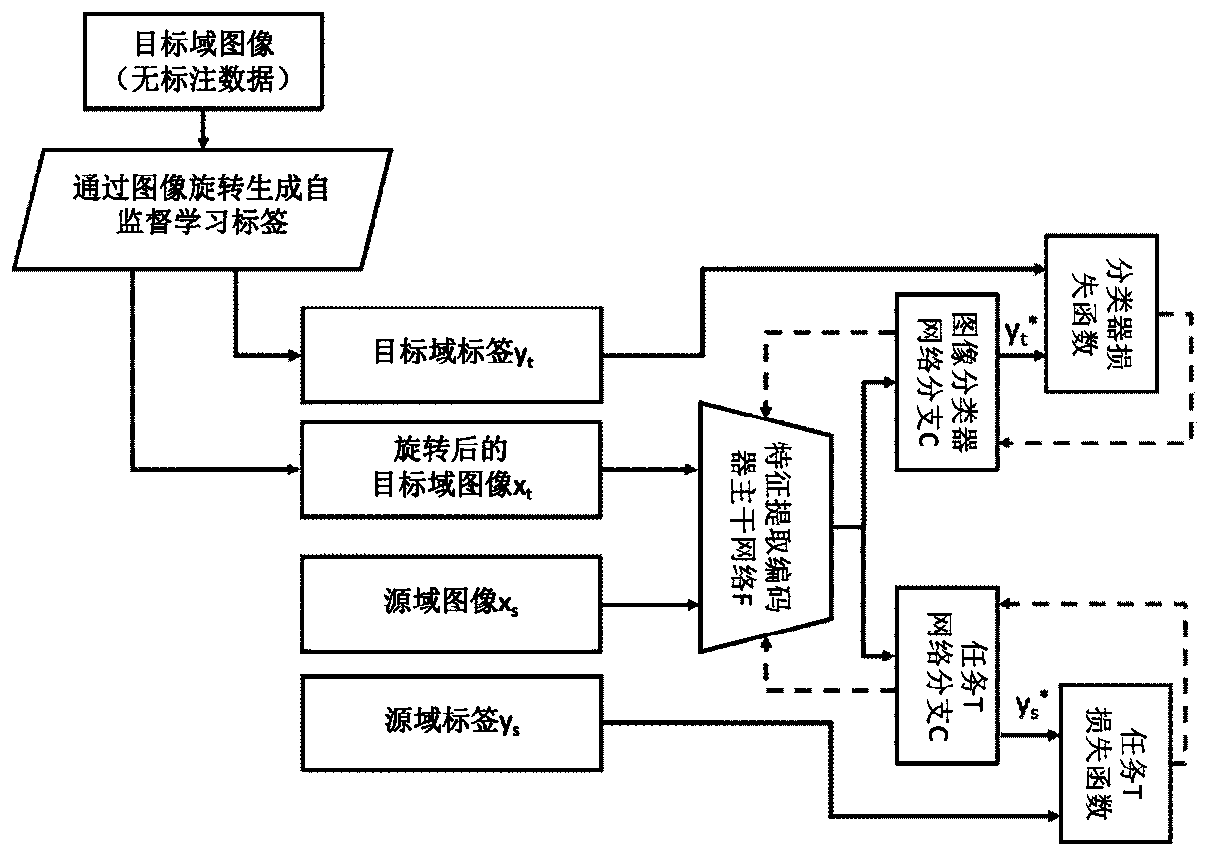

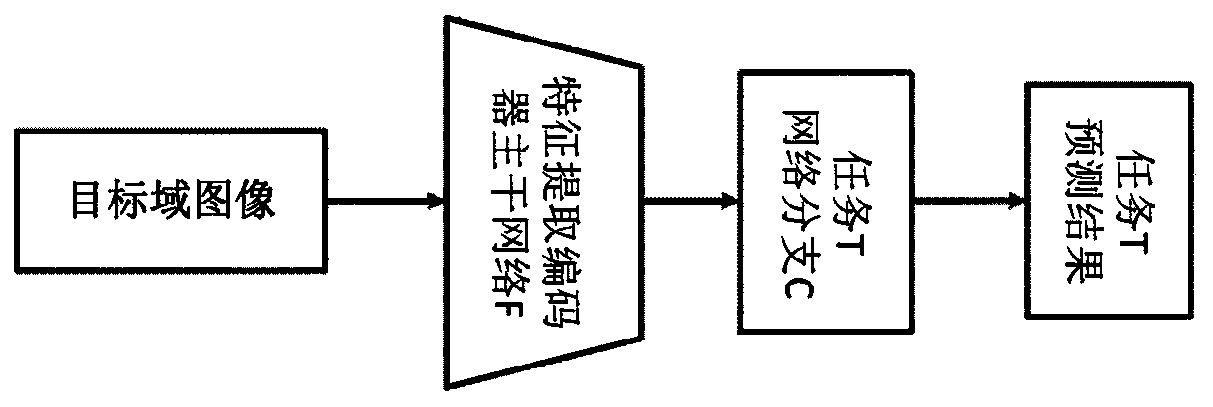

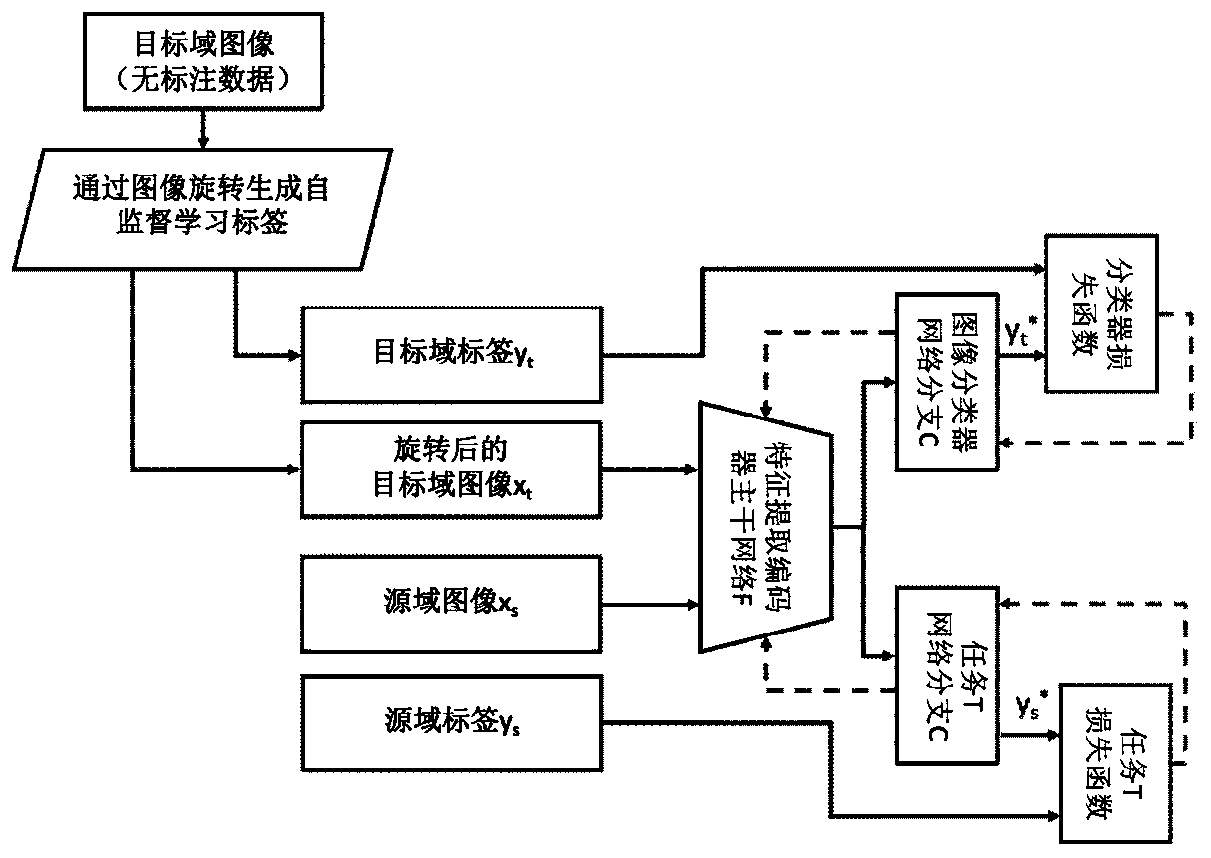

A domain adaptive deep learning method and a readable storage medium

Active | CN109919209A | Realization of domain adaptation | Give full play to efficiency | Character and pattern recognition | Neural architectures | Study methods | A domain

The invention discloses a domain self-adaptive deep learning method, which comprises the following steps: carrying out rotation transformation on a target domain image to obtain a self-supervised learning training sample set; and carrying out joint training on the converted self-supervised learning training sample set and the source domain training sample set to obtain a domain adaptive deep learning model used for a visual task on a target domain. According to the method, a target domain sample does not need to be labeled, the feature representation of the target domain can be effectively learned, and the performance of a computer vision task on the target domain is improved. The invention further discloses a domain self-adaptive deep learning readable storage medium which also has the above beneficial effects.

Owner:NAT INNOVATION INST OF DEFENSE TECH PLA ACAD OF MILITARY SCI

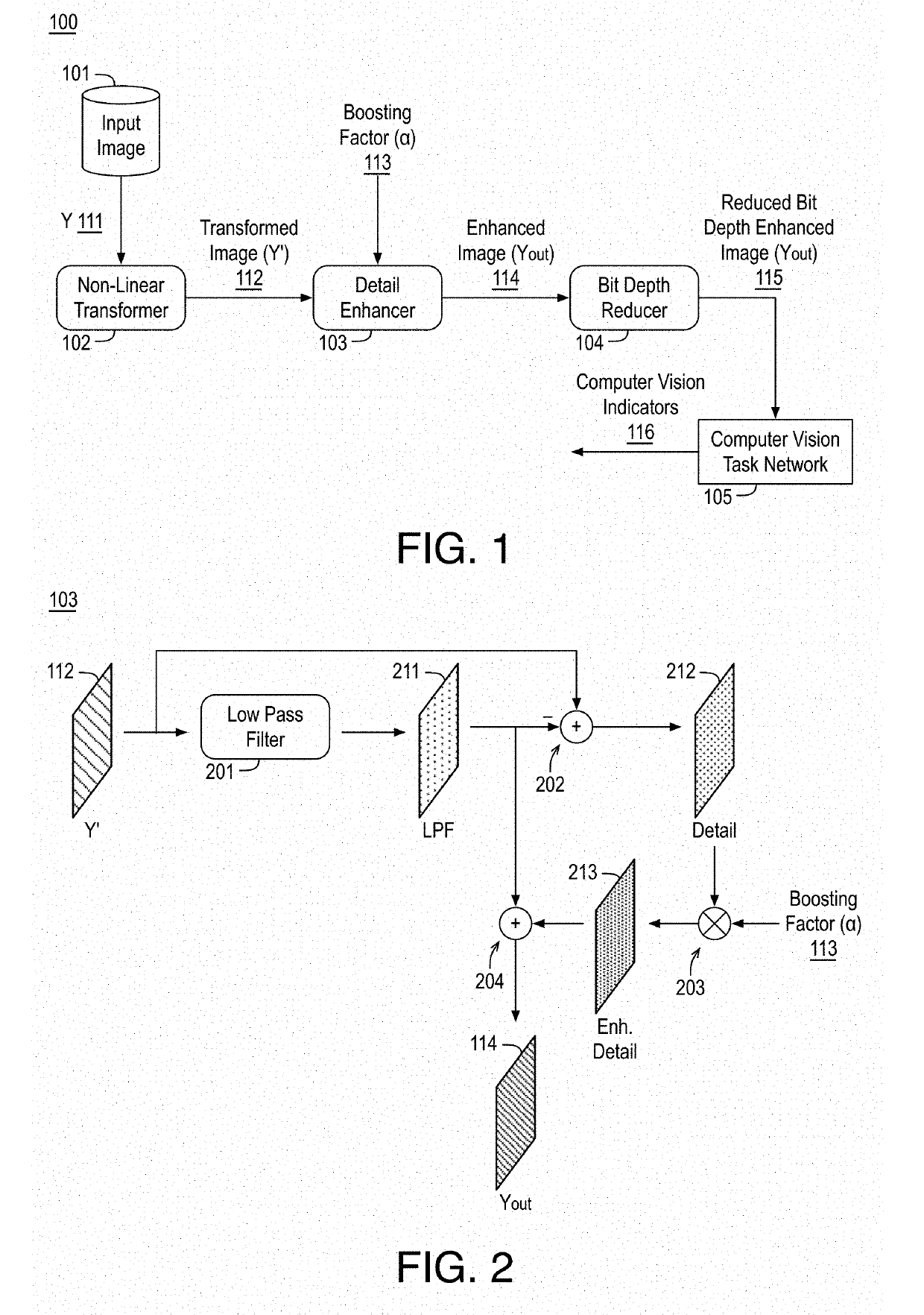

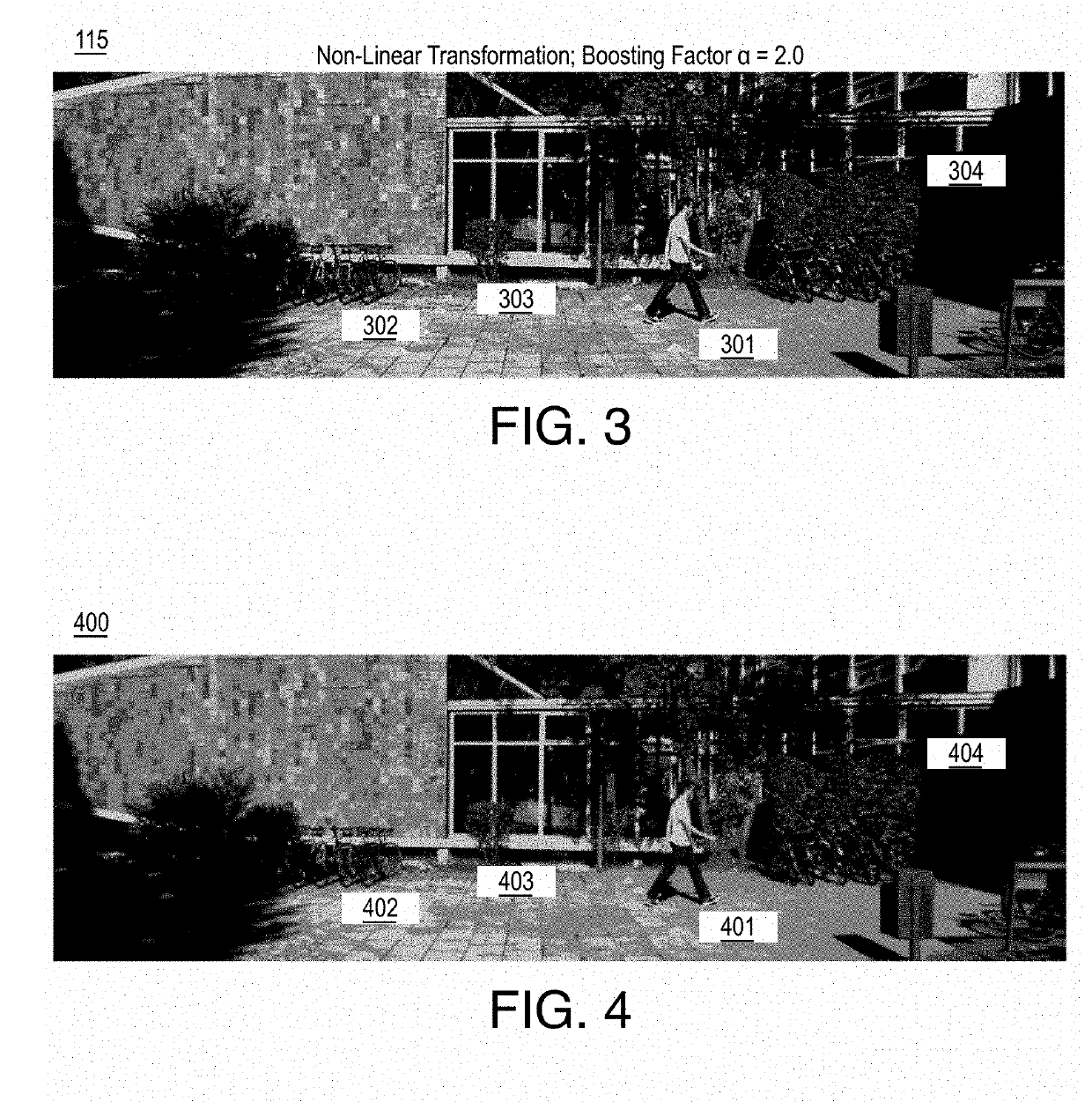

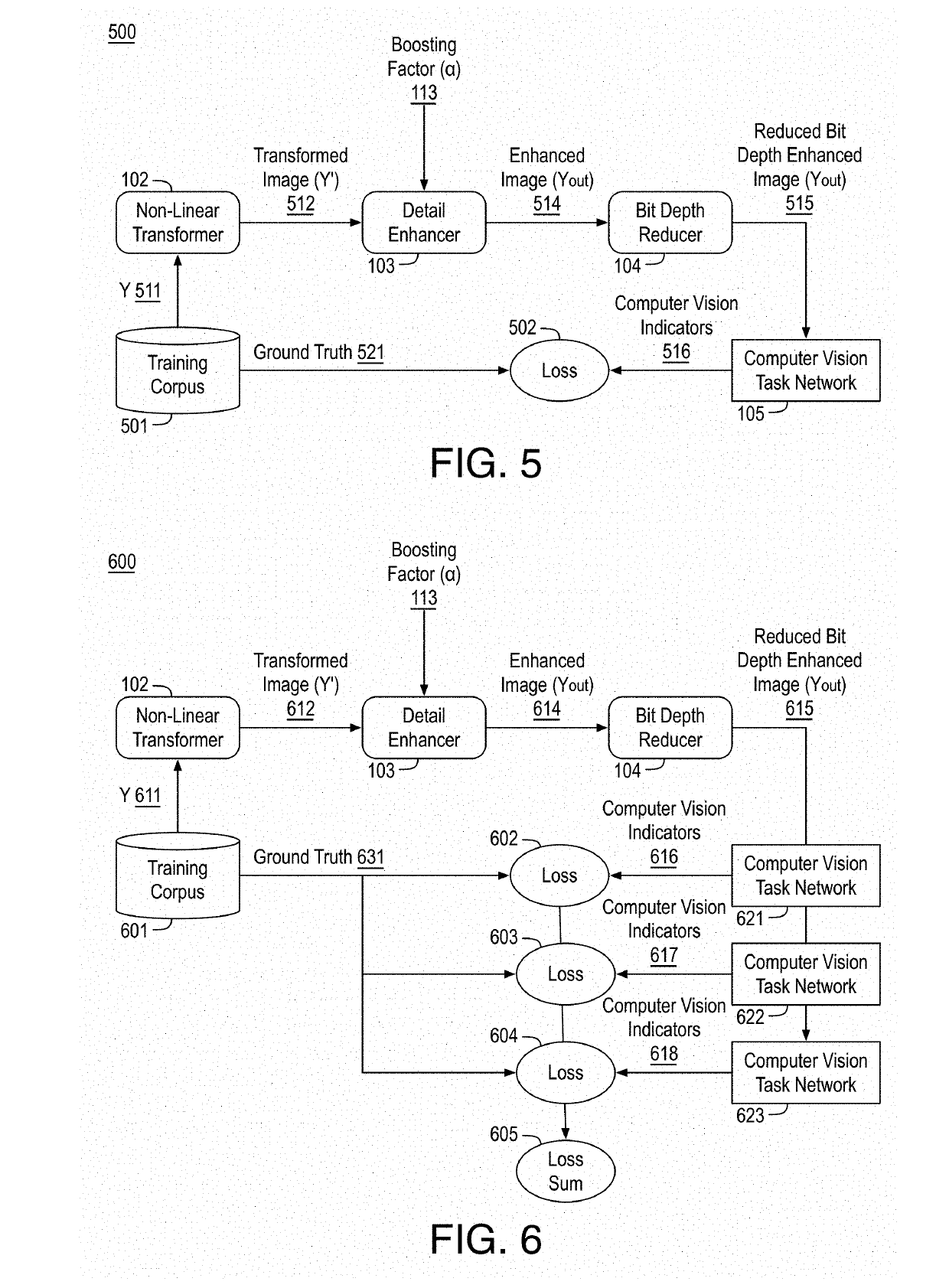
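The rotation step described in the domain adaptive deep learning abstract above can be illustrated with a minimal sketch. The abstract only says "rotation transformation"; the choice of four right-angle rotations with the rotation index as the self-supervised label, and the names `rotate90` and `build_rotation_set`, are assumptions made for illustration and are not taken from the patent.

```python
from typing import List, Tuple

Image = List[List[int]]  # a tiny grayscale image as nested lists (assumption)


def rotate90(img: Image) -> Image:
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def build_rotation_set(images: List[Image]) -> List[Tuple[Image, int]]:
    """Turn unlabeled target-domain images into (rotated image, label) pairs.

    The label k means "rotated k * 90 degrees clockwise", so no manual
    annotation of the target domain is needed.
    """
    samples: List[Tuple[Image, int]] = []
    for img in images:
        current = [list(row) for row in img]
        for k in range(4):
            samples.append((current, k))
            current = rotate90(current)
    return samples


if __name__ == "__main__":
    for rotated, label in build_rotation_set([[[1, 2], [3, 4]]]):
        print(label, rotated)
```

In a joint-training setup, these pairs would be mixed with labeled source-domain samples; how the two losses are combined is not stated in the abstract and is left out here.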

Local tone mapping to reduce bit depth of input images to high-level computer vision tasks

Techniques related to computer vision tasks are discussed. Such techniques include applying a pretrained non-linear transform and pretrained details boosting factor to generate an enhanced image from an input image and reducing the bit depth of the enhanced image prior to applying a pretrained computer vision network to perform the computer vision task.

Owner:INTEL CORP

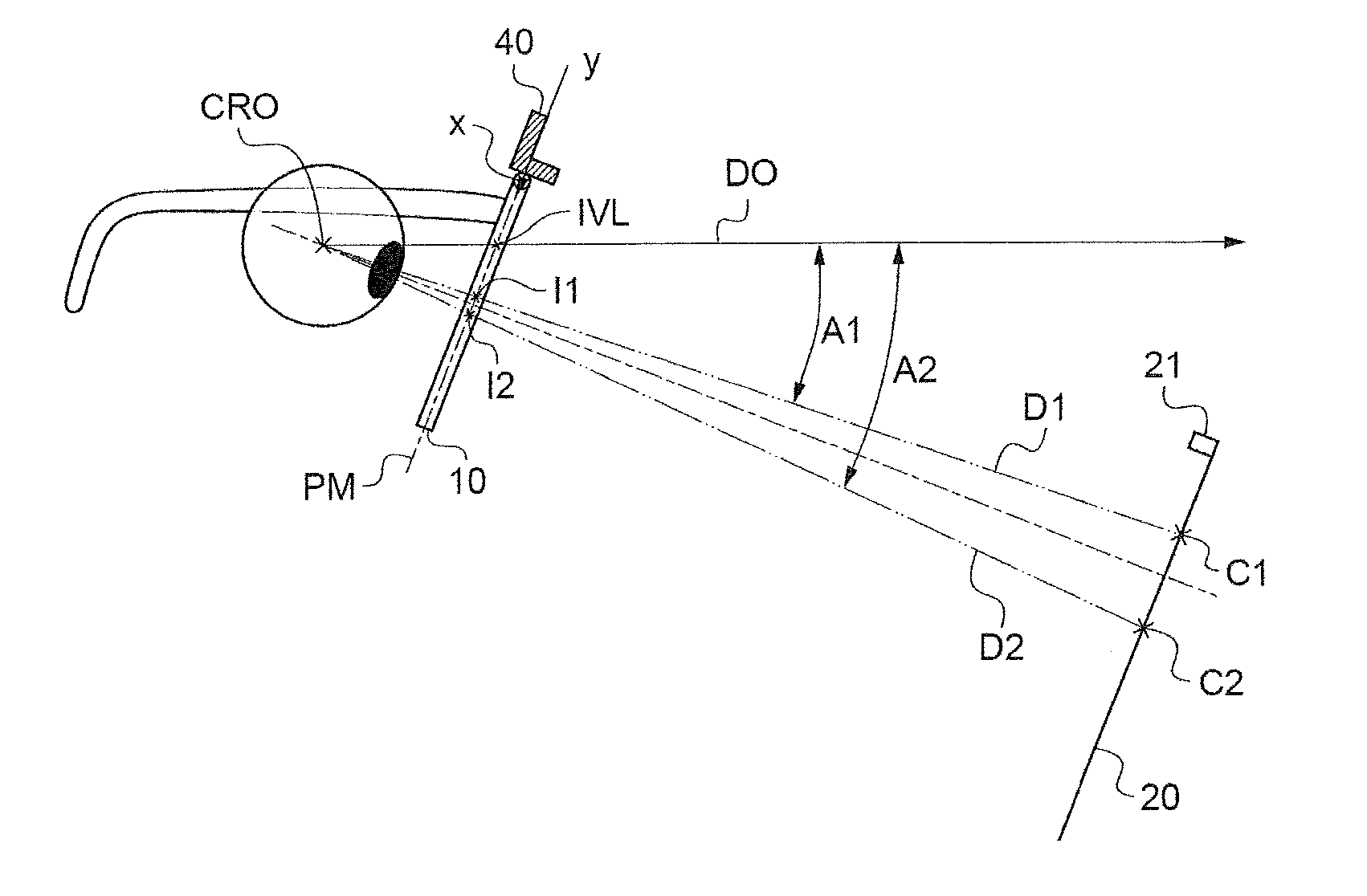

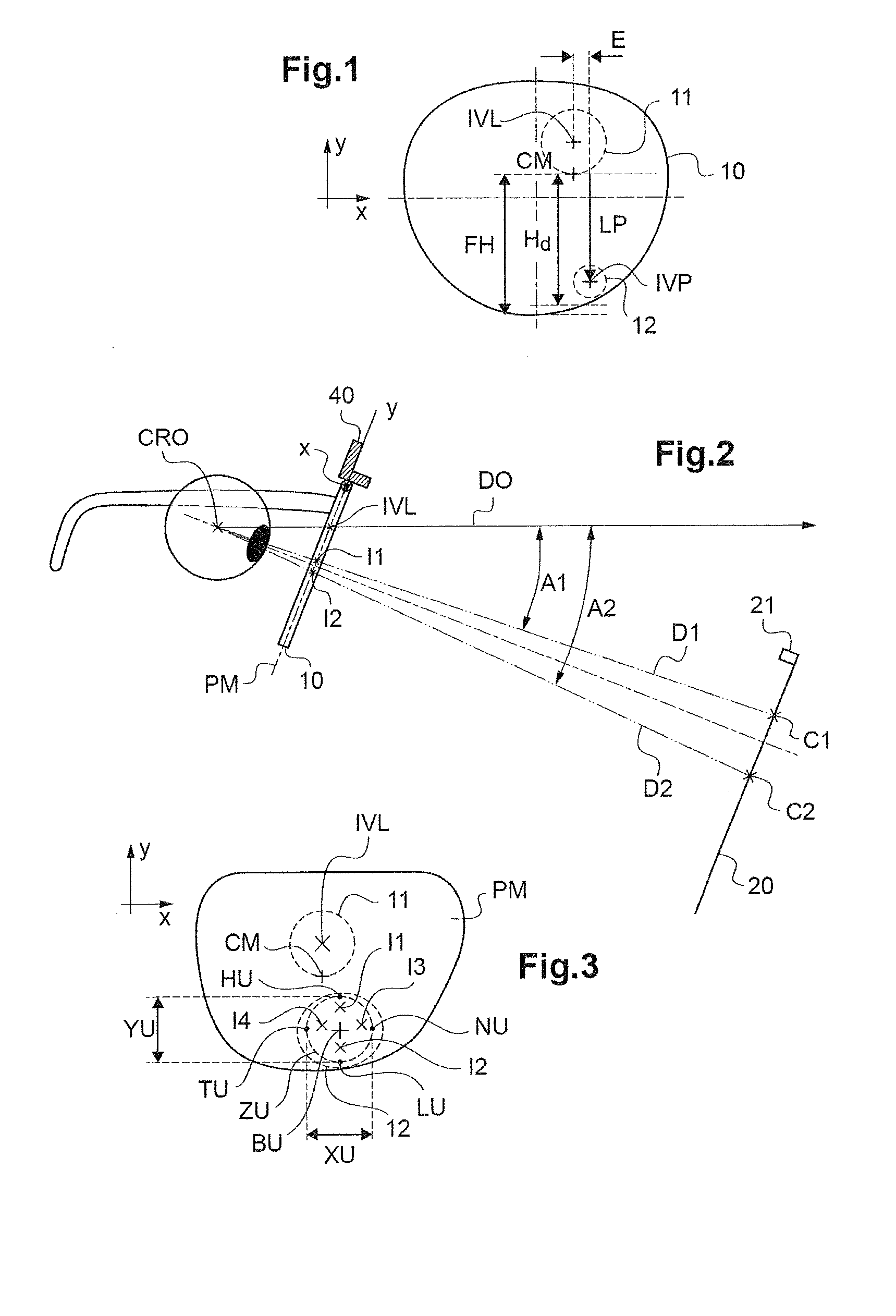

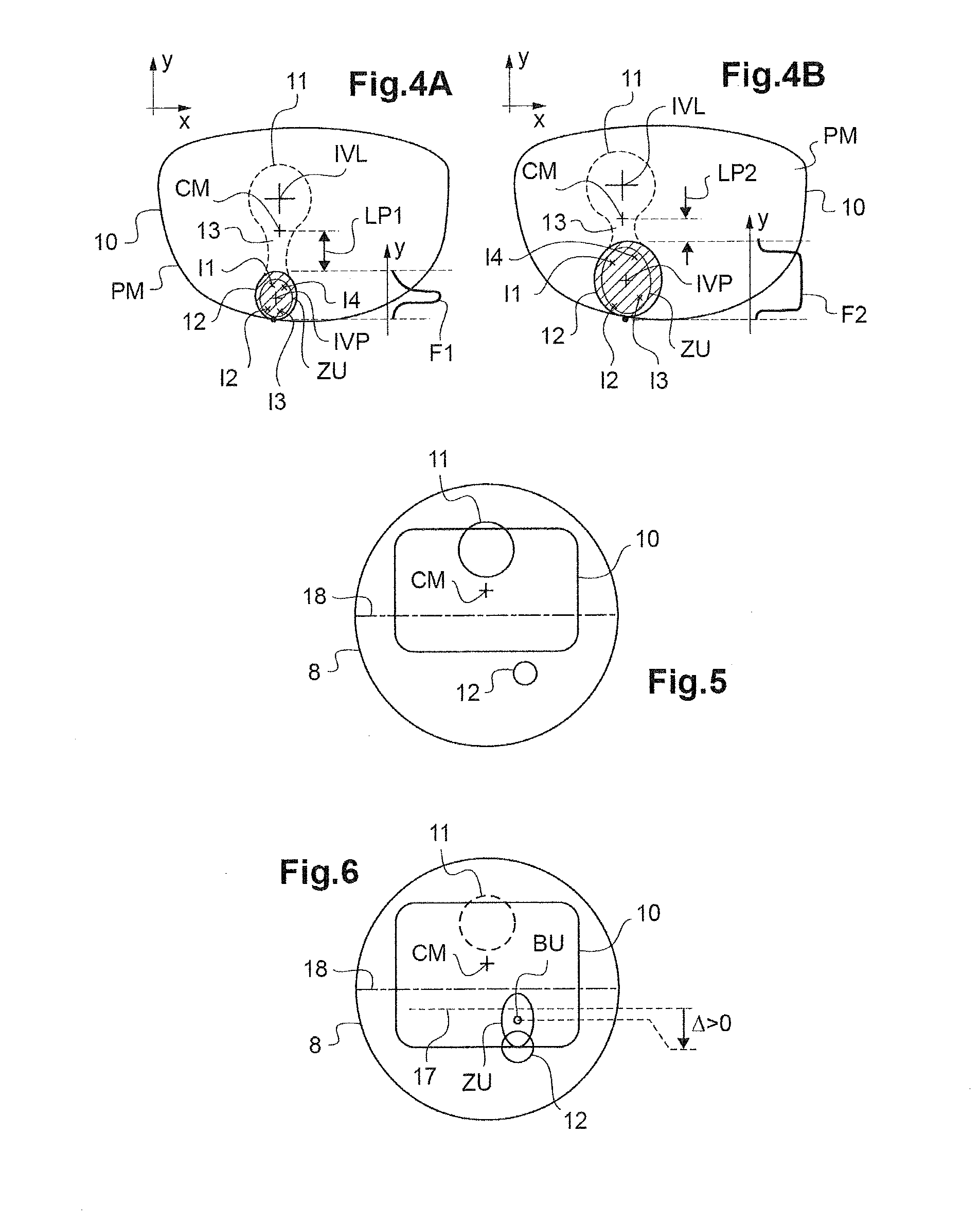
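The pipeline in the local tone mapping abstract above (non-linear transform, details boost, then bit-depth reduction before the vision network) can be sketched roughly as follows. The patent uses pretrained values; here a fixed gamma curve and a fixed boost factor stand in for them, and the 3-tap local mean, the 16-bit input and the function name `tone_map_to_8bit` are all assumptions chosen only to keep the example small.

```python
from typing import List


def tone_map_to_8bit(
    pixels: List[int],
    in_bits: int = 16,
    gamma: float = 0.5,         # stand-in for the pretrained non-linear transform
    detail_boost: float = 1.5,  # stand-in for the pretrained details boosting factor
) -> List[int]:
    """Map one row of high-bit-depth pixels to 8-bit values."""
    max_in = (1 << in_bits) - 1
    base = [(p / max_in) ** gamma for p in pixels]

    out: List[int] = []
    for i, value in enumerate(base):
        window = base[max(0, i - 1): i + 2]      # local neighbourhood
        local_mean = sum(window) / len(window)
        # Amplify the deviation from the local mean (the "details").
        enhanced = local_mean + detail_boost * (value - local_mean)
        enhanced = min(1.0, max(0.0, enhanced))  # clamp before quantizing
        out.append(round(enhanced * 255))        # reduce to 8 bits
    return out


if __name__ == "__main__":
    print(tone_map_to_8bit([0, 1000, 20000, 65535]))
```

The 8-bit output would then be passed to the computer vision network in place of the original high-bit-depth image.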

Method For Determining At Least One Optical Design Parameter For A Progressive Ophthalmic Lens

Active | US20160274383A1 | Improve visual comfort | Spectacles/goggles | Eye diagnostics | Gaze directions | Computer science

A method for determining at least one optical conception parameter for a progressive ophthalmic lens intended to equip a frame of a wearer, depending on the visual behavior of the latter. The method comprises the following steps: a) collecting a plurality of behavioral measurements relating to a plurality of gaze directions and / or positions of the wearer during a visual task; b) statistically processing said plurality of behavioral measurements in order to determine a zone of use of the area of an eyeglass fitted in said frame, said zone of use being representative of a statistical spatial distribution of said plurality of behavioral measurements; and c) determining at least one optical conception parameter for said progressive ophthalmic lens depending on a spatial extent and / or position of the zone of use.

Owner:ESSILOR INT CIE GEN DOPTIQUE

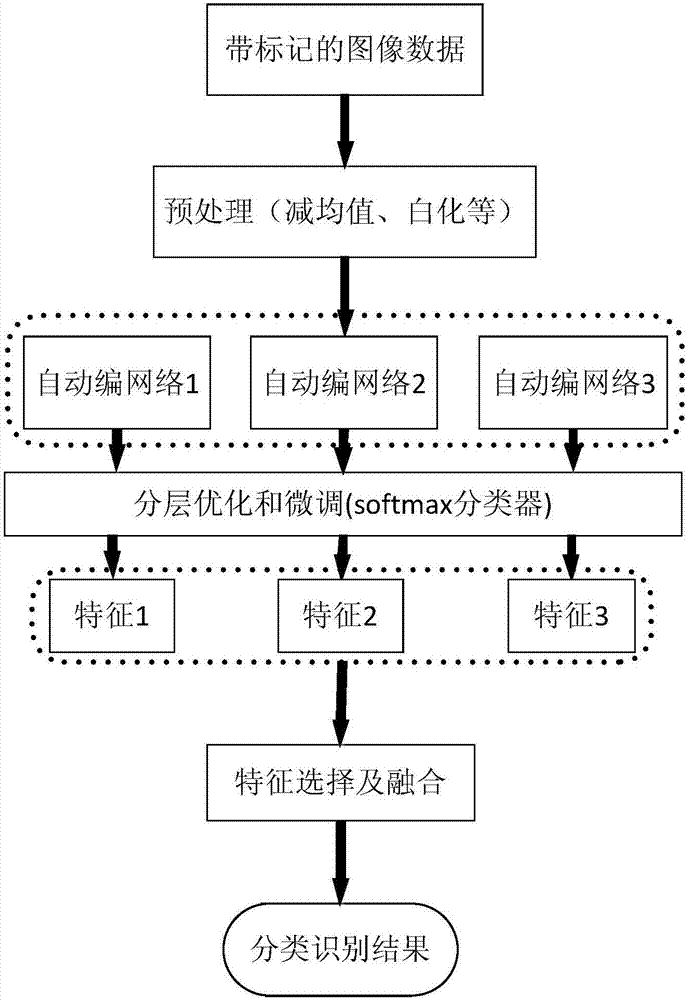

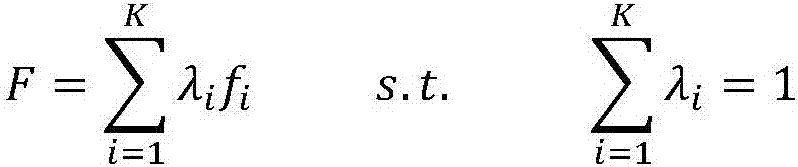
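Steps a) to c) of the progressive lens abstract above can be sketched as a small statistics exercise. The abstract does not say which statistic defines the zone of use; this sketch assumes the measurements are 2D gaze intersection points on the lens plane (in millimetres) and that the zone is the mean plus or minus `k` standard deviations on each axis. The function name `zone_of_use` and the returned fields are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) on the lens plane, in mm (assumption)


def zone_of_use(points: List[Point], k: float = 2.0) -> Dict[str, Tuple[float, float]]:
    """Summarize gaze points as a rectangular zone: mean +/- k standard deviations."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    cx, cy = mean(xs), mean(ys)
    sx, sy = pstdev(xs), pstdev(ys)
    return {
        "center": (cx, cy),
        "x_range": (cx - k * sx, cx + k * sx),
        "y_range": (cy - k * sy, cy + k * sy),
    }


if __name__ == "__main__":
    gaze = [(0.0, -2.0), (0.0, -4.0), (2.0, -2.0), (2.0, -4.0)]
    zone = zone_of_use(gaze)
    # The vertical extent and position of the zone could then feed a design
    # parameter such as progression length; that mapping is not in the abstract.
    print(zone)
```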

Depth feature representation method based on multiple stacked auto-encoding

Inactive | CN107194438A | Character and pattern recognition | Neural learning methods | Pattern recognition | Network structure

The invention relates to a depth feature representation method based on multiple stacked auto-encoding. Feature expressions of different hierarchical structures of a target object are acquired through constructing stacked auto-encoding networks of different structures. A shallow-layer (the number of hidden layers is smaller) neural network structure is constructed, a back-propagation method is adopted to train network parameters to enable a neural network to achieve the optimal structure, and outputs, namely the feature expressions, of a second layer of the network are acquired; more deeply hierarchical network structures are respectively established, network parameters are trained according to a similar manner, and thus outputs (the feature expressions) of corresponding layers are acquired; and fusion and selection are carried out on the above-mentioned obtained features according to a manner of feature combination and selection to acquire a hierarchical feature representation that characterizes a target. Therefore, corresponding visual tasks (image classification, identification and detection) are carried out.

Owner:WUHAN UNIV

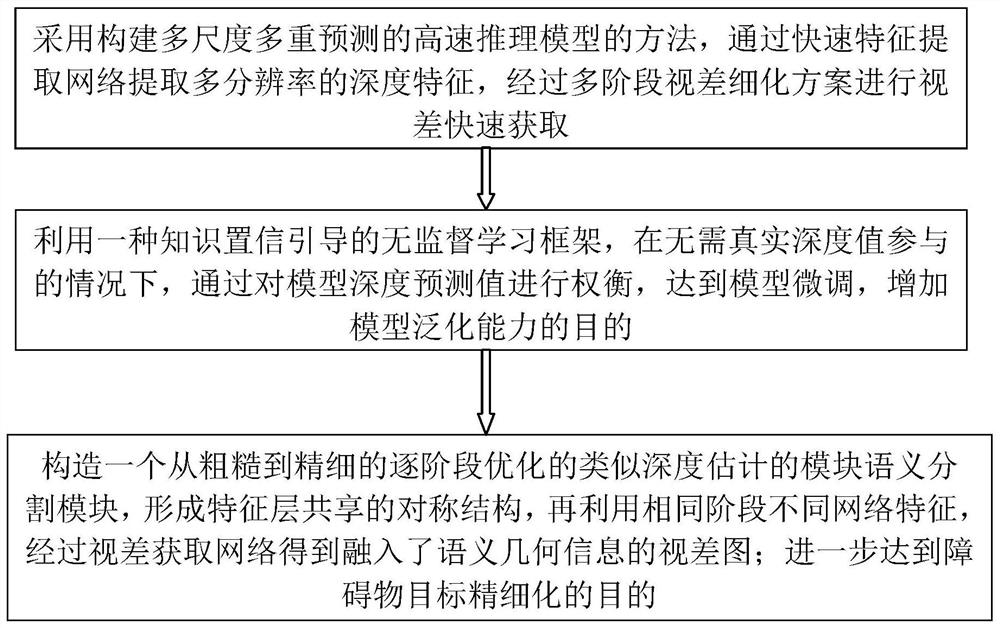

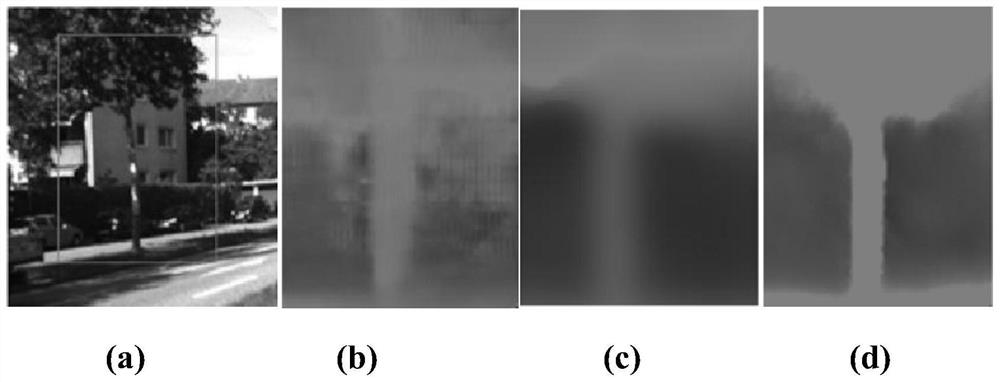

Construction method of multi-vision task collaborative depth estimation model

The invention provides a construction method of a multi-vision task collaborative depth estimation model. The construction method comprises the following specific steps: constructing a rapid scene depth estimation model under stereoscopic vision constraint; optimizing a parallax geometry and knowledge priori collaborative model; performing target depth refinement combined with semantic features: constructing a module semantic segmentation module which is optimized stage by stage from roughness to fineness and is similar to depth estimation, forming a symmetric structure shared by feature layers, and obtaining a disparity map integrated with semantic geometrical information through a disparity obtaining network by utilizing different network features in the same stage; and the purpose of obstacle target refinement is further achieved. Multi-scale knowledge prior and visual semantics are embedded into the depth estimation model, the nature of human perception is deeply approached through a multi-task collaborative sharing learning mode, and the depth estimation precision of the obstacle is improved.

Owner:HUBEI UNIV OF TECH

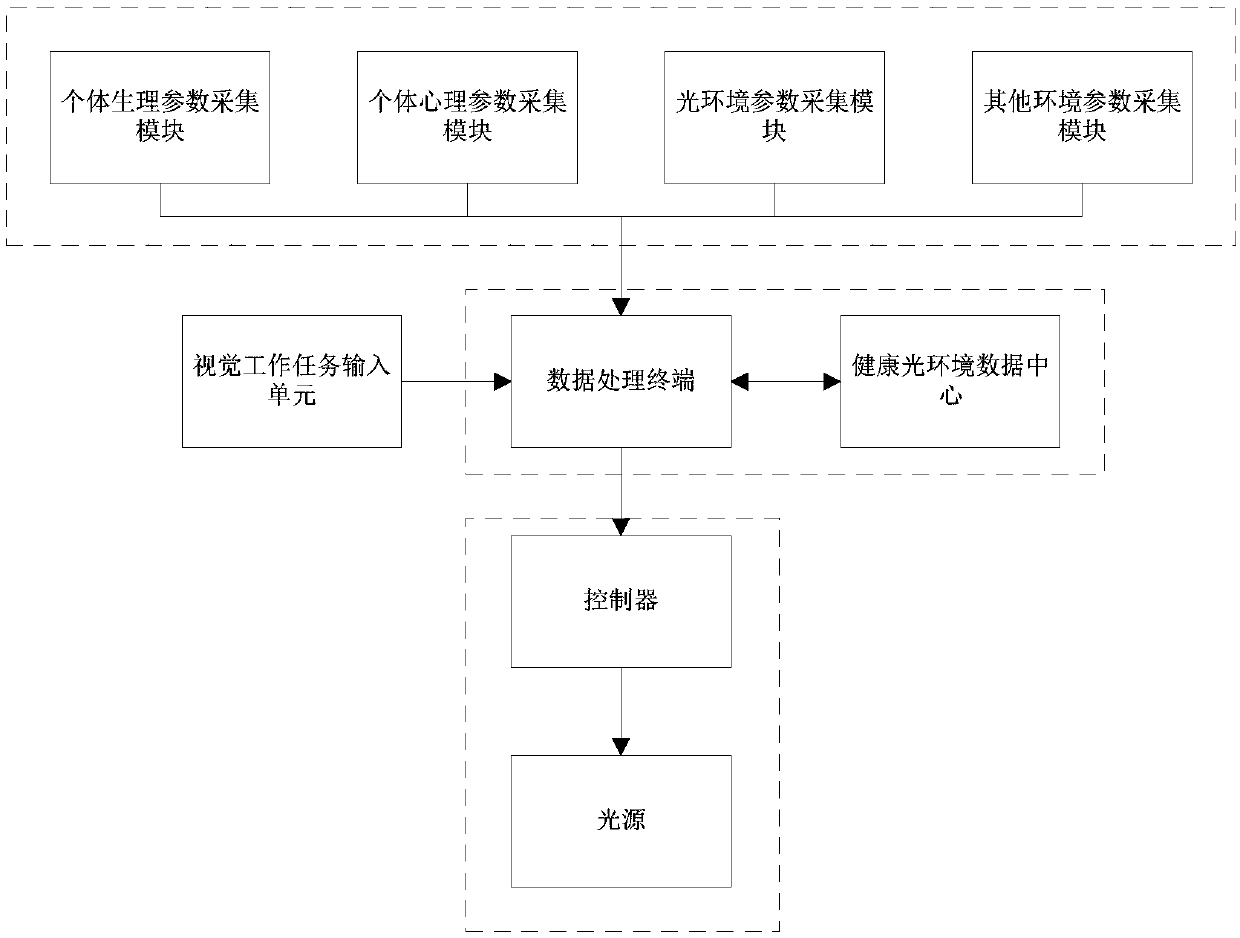

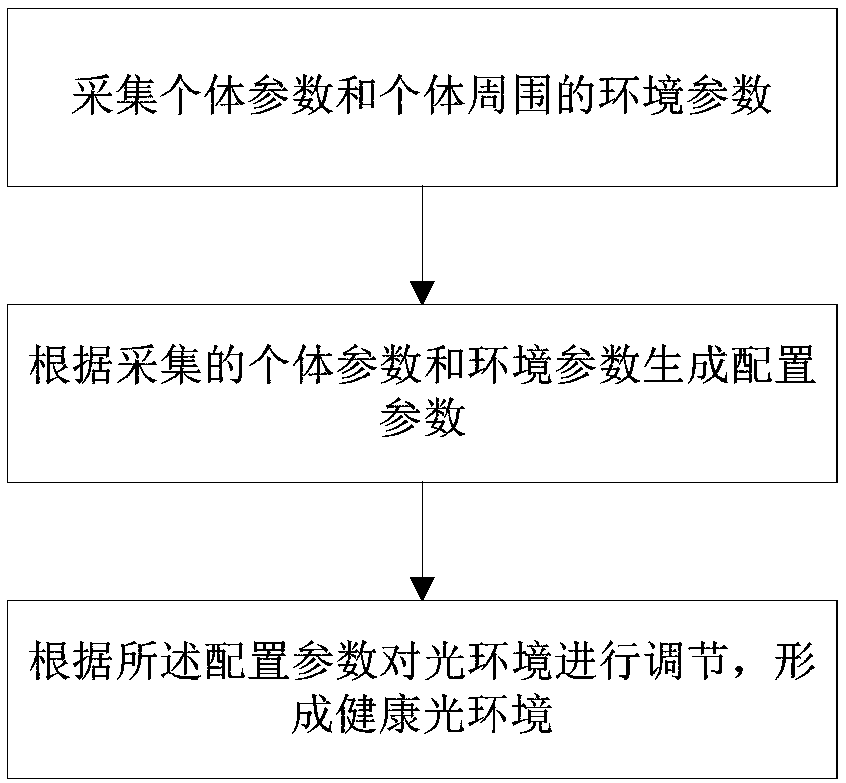

Regulating system and method for light environment

Active | CN107596533A | Good physical fitness | Fit closely | Sensors | Psychotechnic devices | Engineering | Data collecting

The invention provides a regulating system and a regulating method for light environment. The system comprises a data collecting unit, a control unit and a data processing unit, wherein the data collecting unit is used for collecting parameters of an individual and environmental parameters around the individual; the control unit is used for adjusting the light environment around the individual; the data processing unit is connected with the data collecting unit and the control unit and is used for generating and sending configuration parameters to the control unit according to the collected parameters of the individual and the environmental parameters; and the control unit adjusts the light environment according to the configuration parameters. According to the system and the method provided by the invention, the physical environment in which a light environment user is located and various representative physiological and psychological indexes of the individual are monitored in real time, then various light environment parameters of the environment are regulated in real time, so that the compatibility among the illumination, physique, visual tasks and the like is enhanced, and the real-time healthy light environment which is most suitable for individual physiological and psychological conditions of the user is obtained.

Owner:CHONGQING UNIV

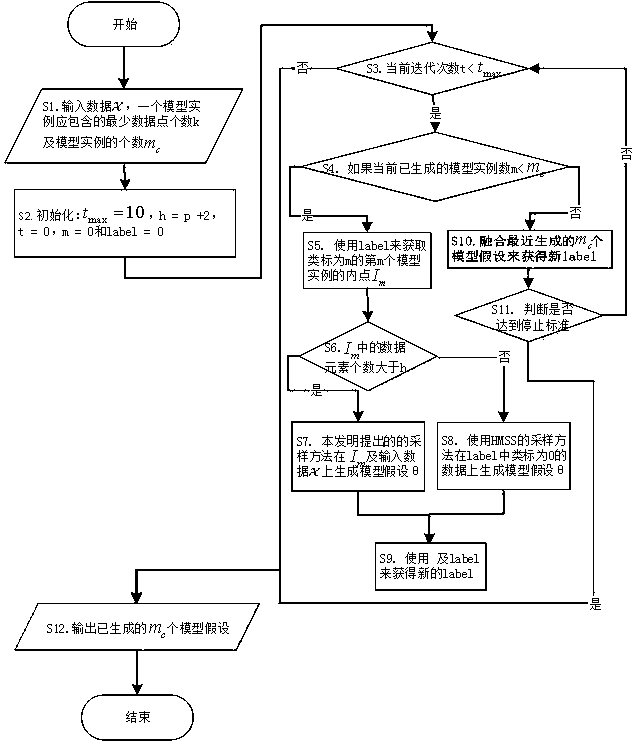

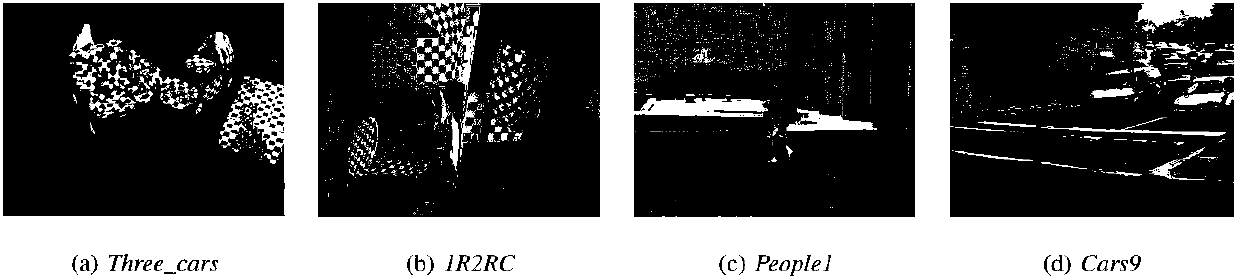

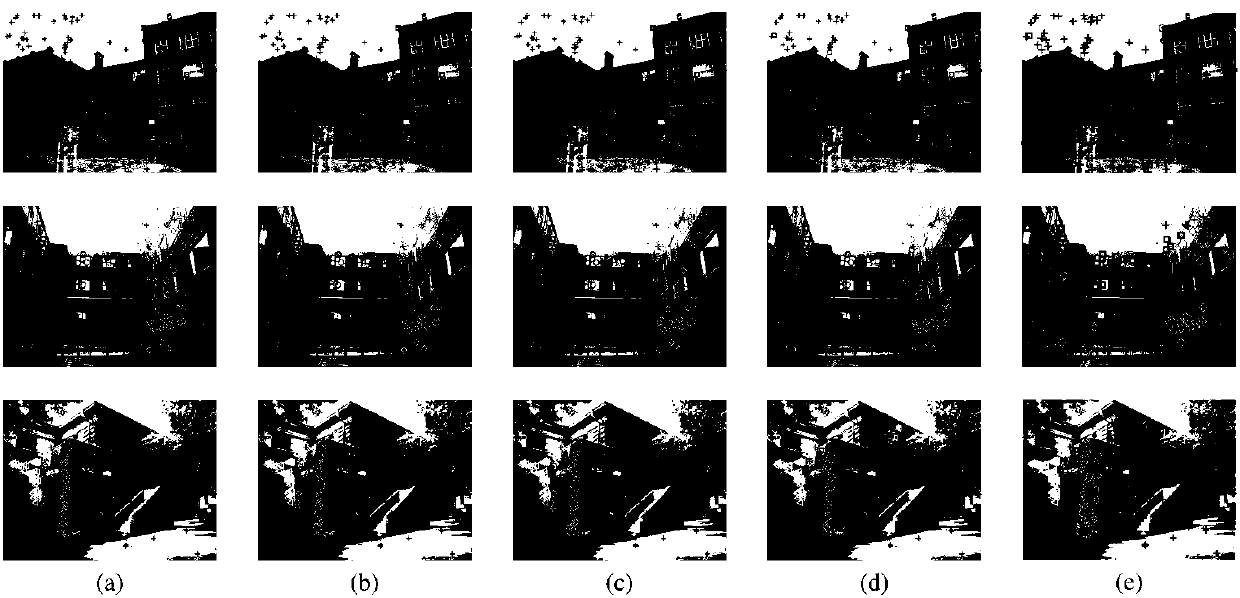

Robust model fitting method based on global greedy search

The invention discloses a robust model fitting method based on global greedy search. The robust model fitting method specifically comprises the steps that a data set is arranged, and parameters are initialized; a label is used for obtaining an inner point of an [m]th model instance of which the class label is m; according to a sampling method of the global greedy search, model hypotheses theta are generated on I<m> and input data x or the model hypotheses theta are generated on data of which the class label is 0 in the label according to a sampling method of an HMSS; a new label is obtained according to the model hypotheses theta and the label; m<c> recently generated model hypotheses are merged together to obtain an [m-tilde]<c> model hypothesis, then the [m-tilde]<c> model hypothesis is used for obtaining the new label; and the m<c> generated model hypotheses are output, and according to outputting of the m<c> generated model hypotheses, an image is segmented to complete the model fitting. The robust model fitting method selects data subsets from the inner points to generate more accurate initial model hypotheses, and can be applied to computer visual tasks such as homography matrix estimation, fundamental matrix estimation, two-view plane segmentation and motion segmentation.

Owner:FUJIAN AGRI & FORESTRY UNIV

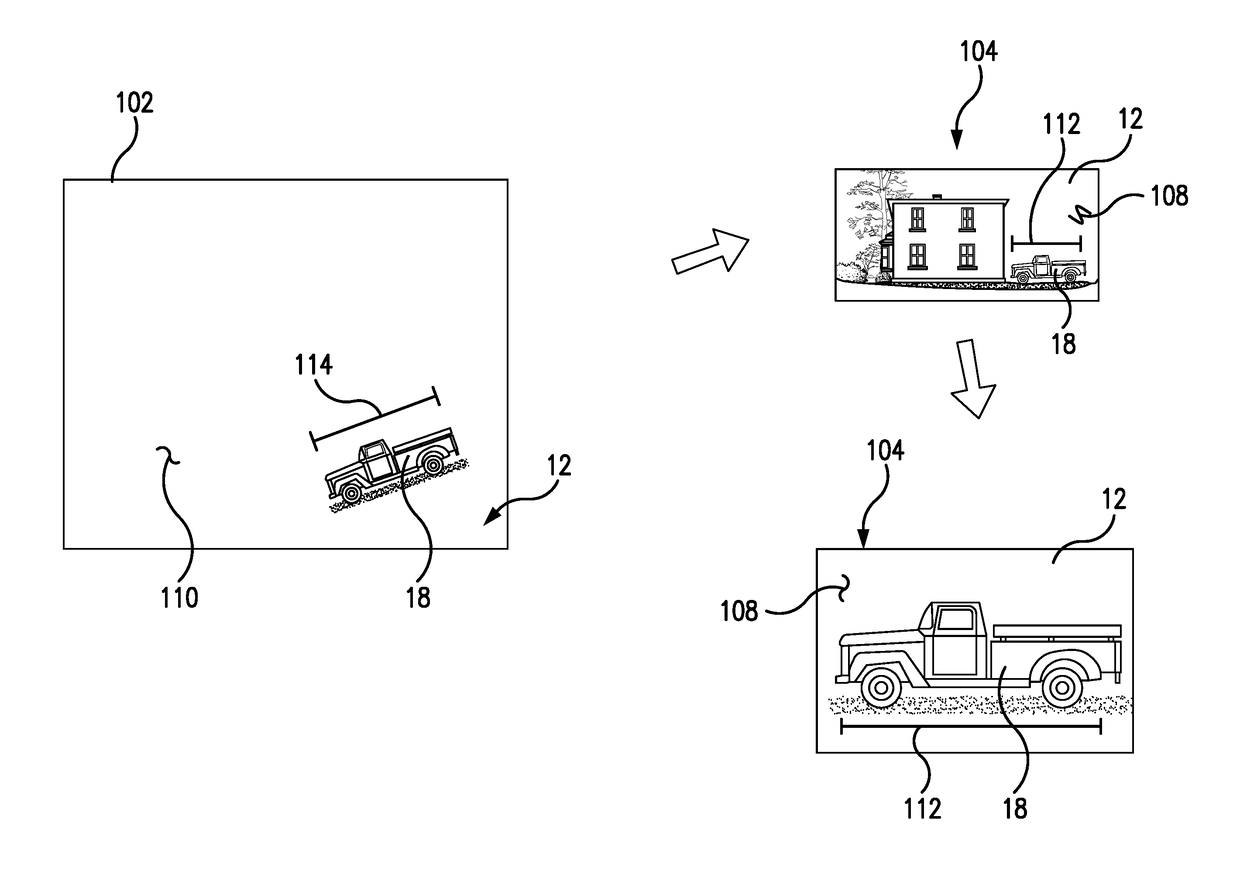

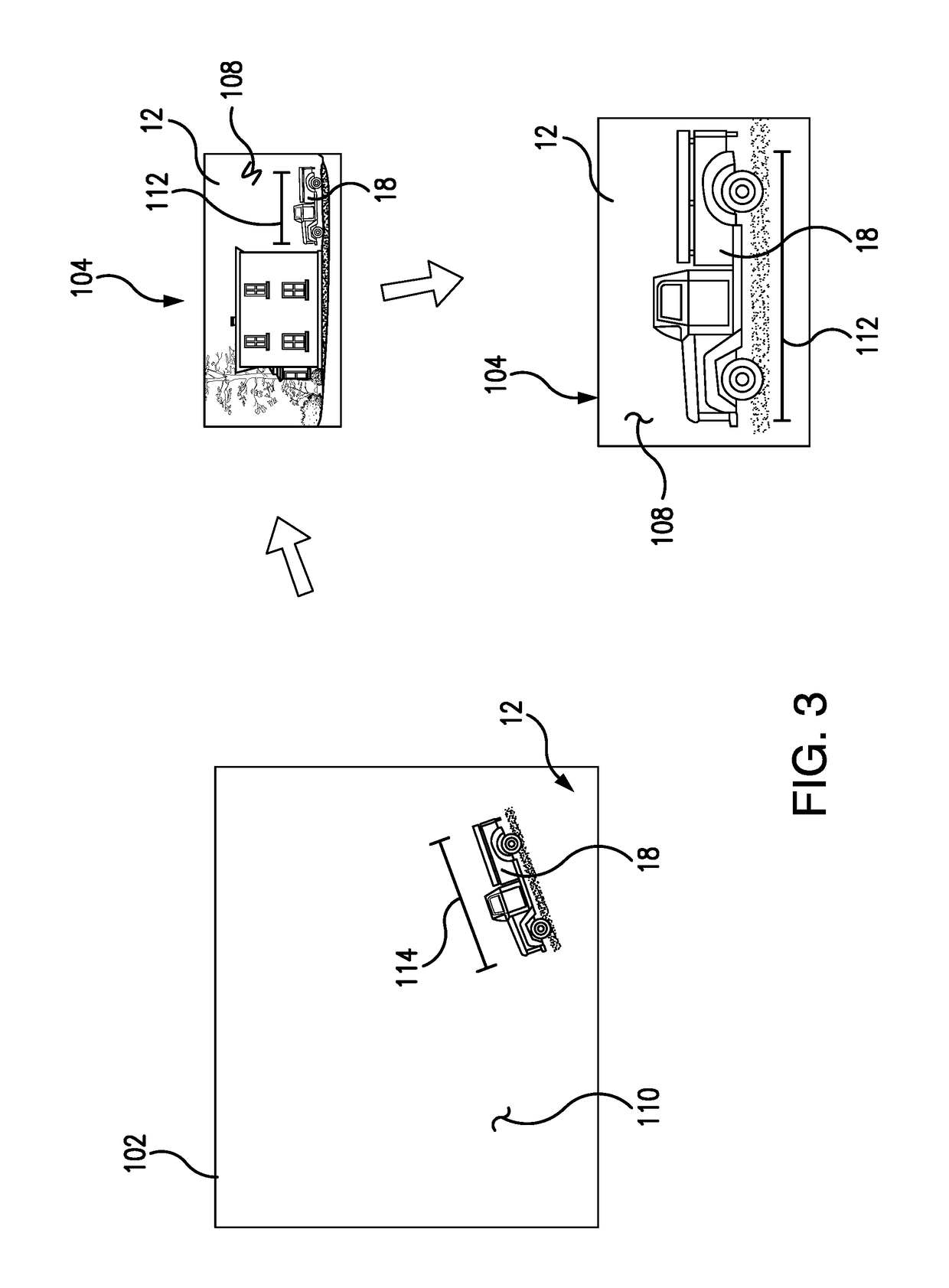

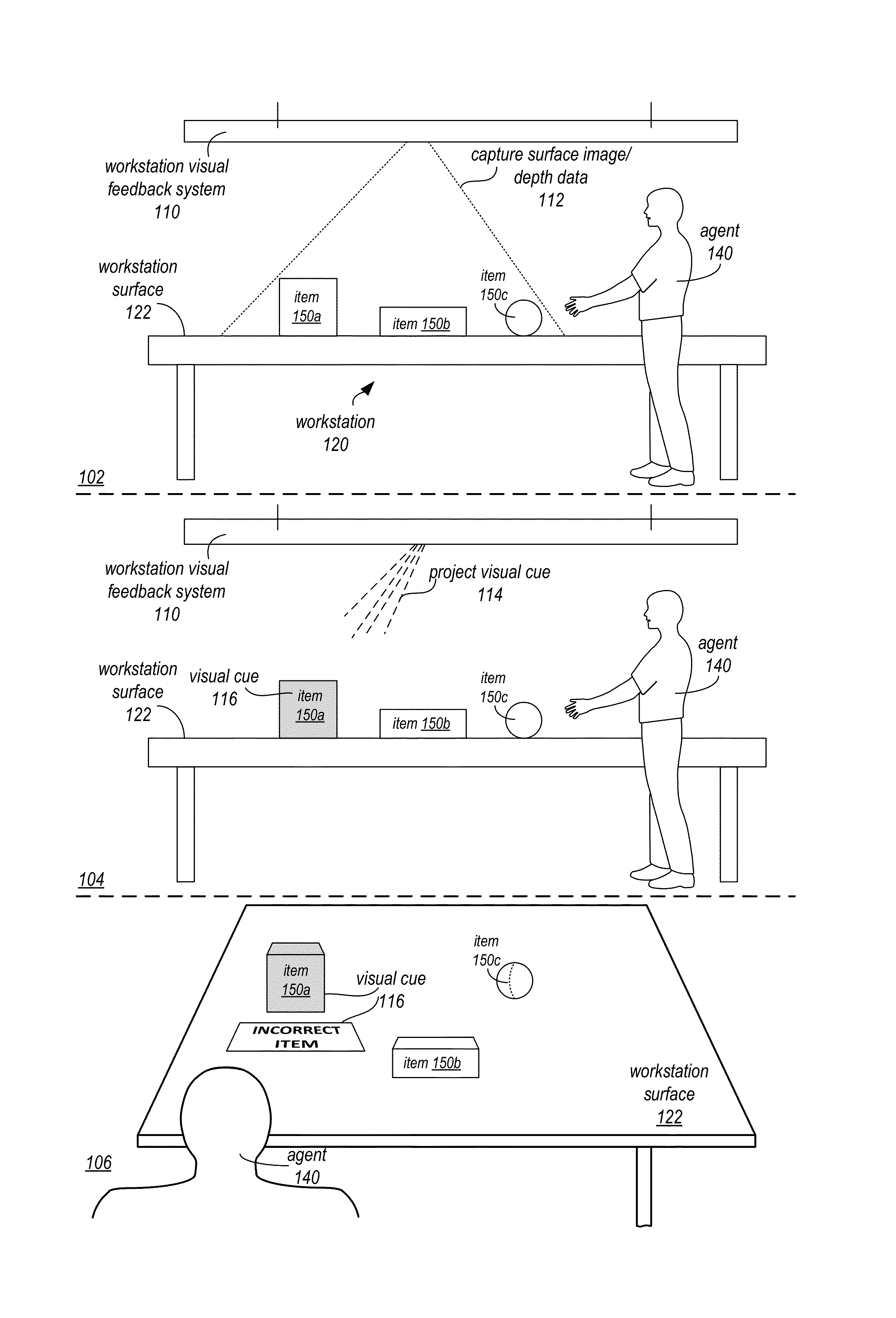

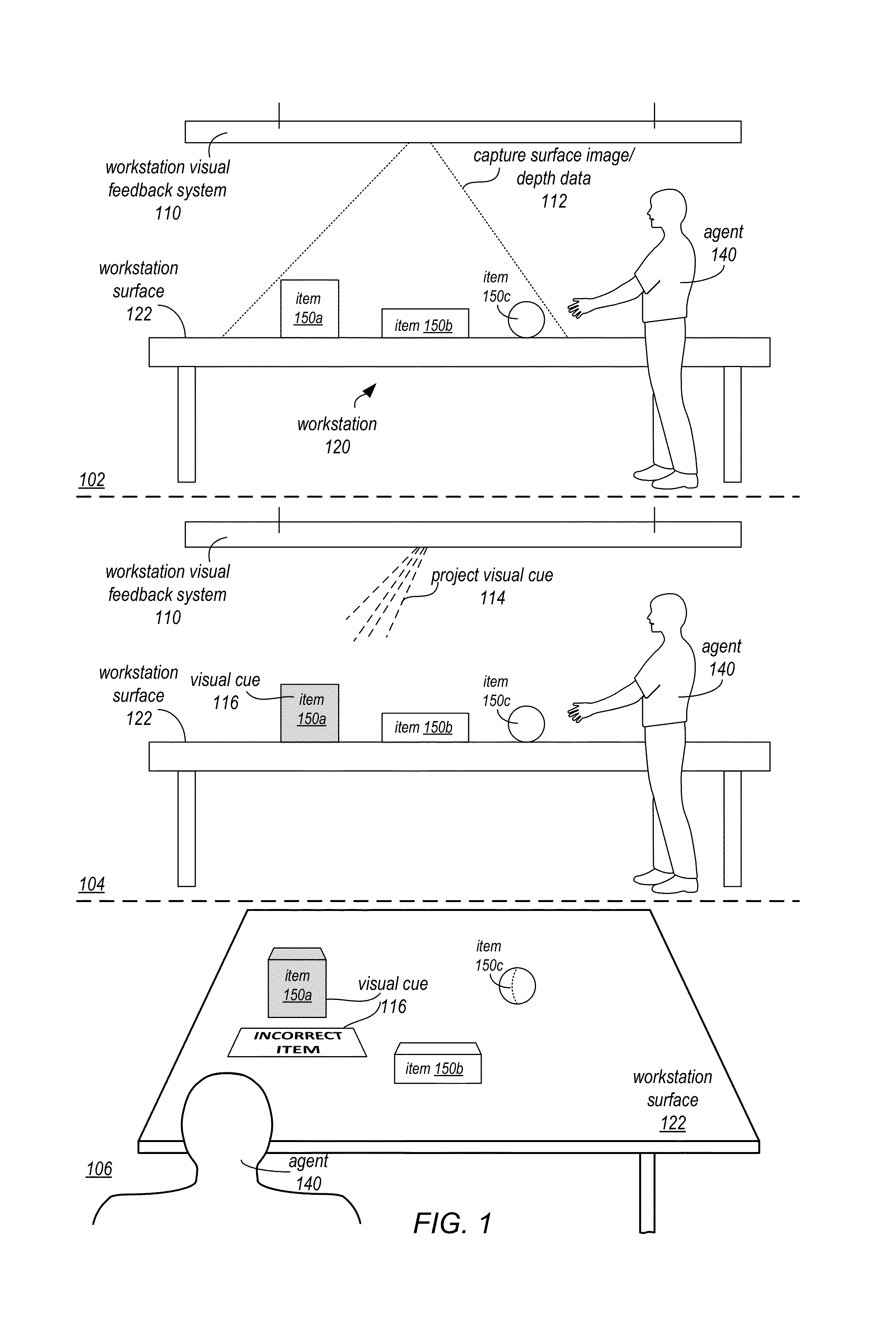

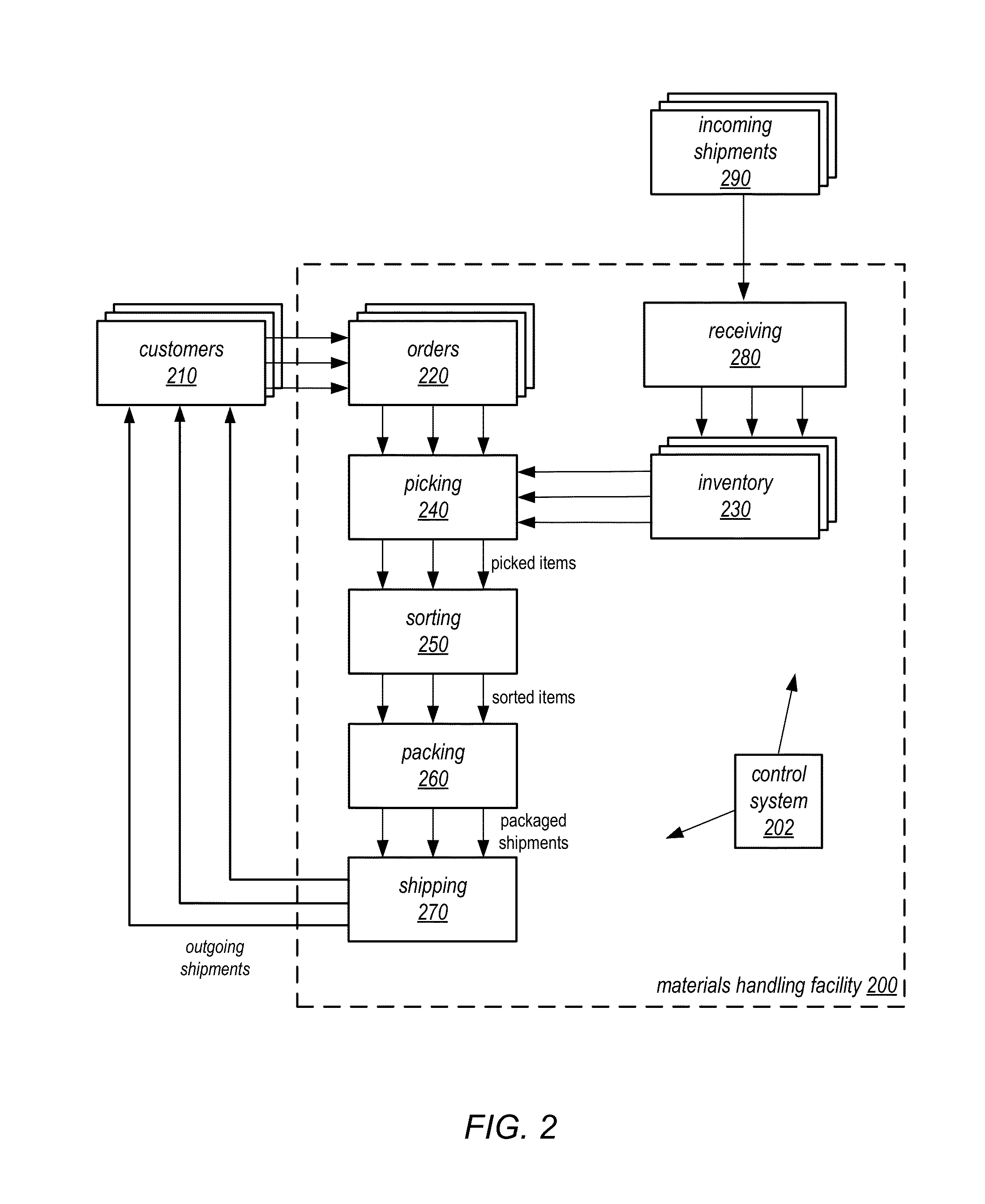

Visual task feedback for workstations in materials handling facilities

Active | US20160210738A1 | Image analysis | Character and pattern recognition | Computer graphics (images) | Workstation

Visual task feedback for workstations in a materials handling facility may be implemented. Image data of a workstation surface may be obtained from image sensors. The image data may be evaluated with regard to the performance of an item-handling task at the workstation. The evaluation of the image data may identify items located on the workstation surface, determine a current state of the item-handling task, or recognize an agent gesture at the workstation. Based, at least in part on the evaluation, one or more visual task cues may be selected to project onto the workstation surface. The projection of the selected visual task cues onto the workstation surface may then be directed.

Owner:AMAZON TECH INC

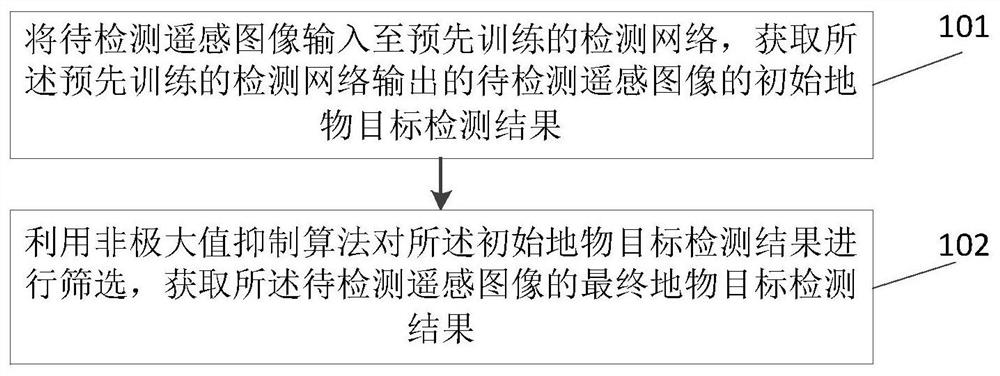

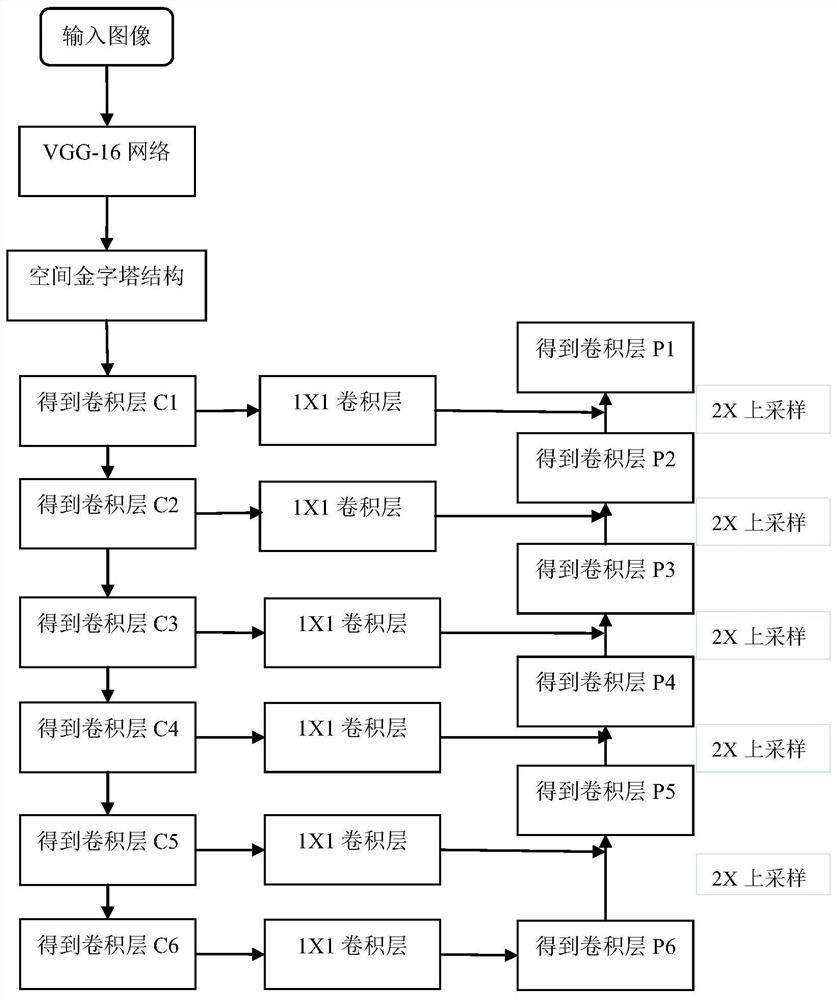

Ground object target detection method and system for remote sensing image

Active | CN111626176A | Implement automatic detection | Improving multi-scale object detection problems | Scene recognition | Neural architectures | Remote sensing | Object detection

The invention relates to a remote sensing image ground object target detection method and system, and the method comprises the steps: inputting a to-be-detected remote sensing image to a pre-trained detection network, and obtaining an initial ground object target detection result, outputted by the pre-trained detection network, of the to-be-detected remote sensing image; screening the initial ground object target detection result by using a non-maximum suppression algorithm to obtain a final ground object target detection result of the to-be-detected remote sensing image; according to the technical scheme provided by the invention, the problem of small object detection in a complex remote sensing scene is effectively solved, attention is dynamically distributed to objects of different scales, and an effective technology is provided for subsequent computer vision tasks including but not limited to remote sensing image target detection.

Owner:AEROSPACE INFORMATION RES INST CAS

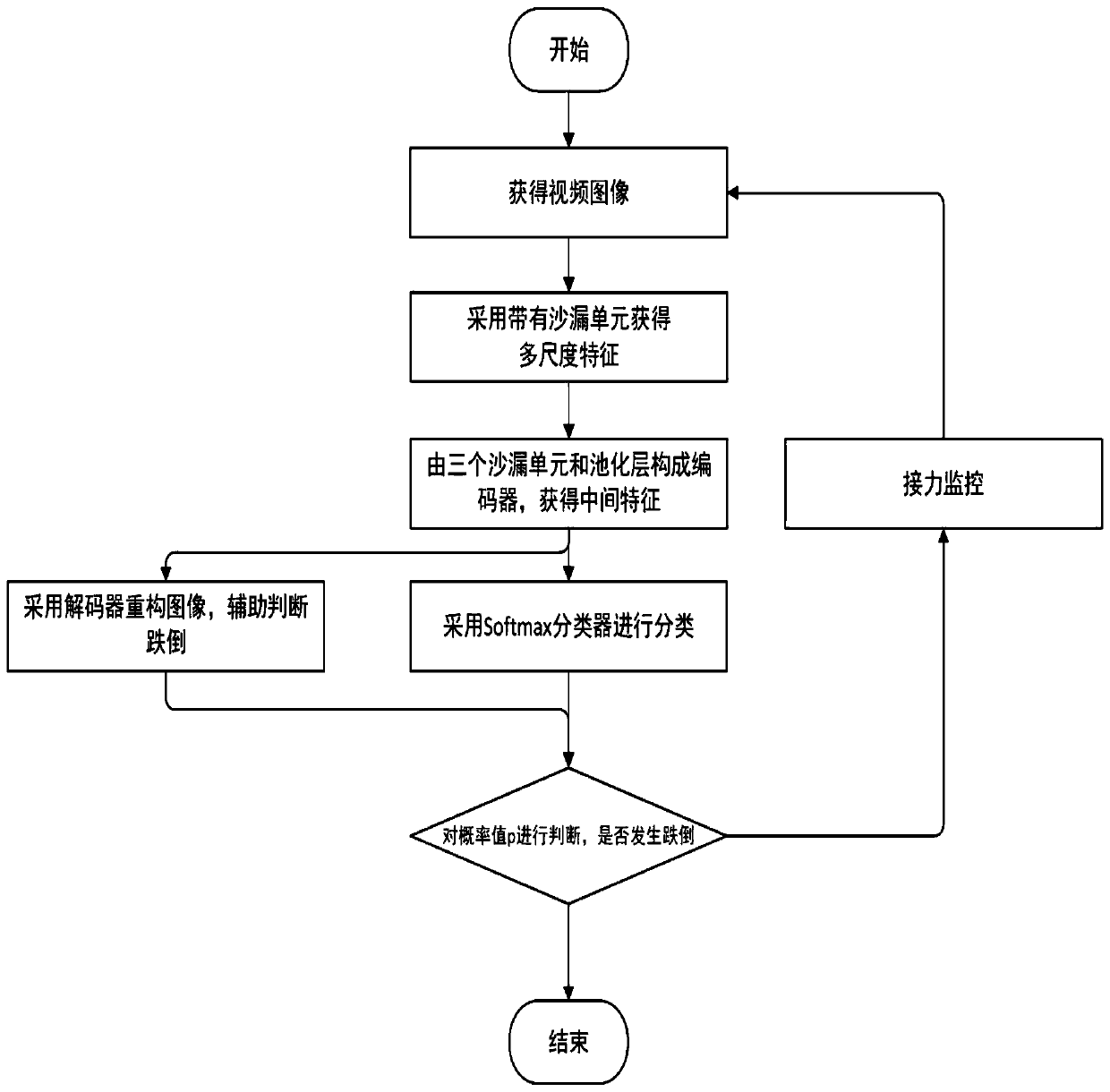
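The screening step in the remote sensing abstract above relies on non-maximum suppression. A minimal sketch of the standard greedy form of that algorithm follows; the box format `(x1, y1, x2, y2)`, the 0.5 IoU threshold and the function names are assumptions for illustration, and the patent's own detection network is not reproduced.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5
) -> List[int]:
    """Return indices of boxes kept after greedy NMS, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    initial = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(non_max_suppression(initial, [0.9, 0.8, 0.7]))
```

Here the initial detections play the role of the detection network's output, and the kept indices correspond to the final ground object detection result.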

Fall detection method based on hourglass convolution automatic coding neural network

Active | CN110503063A | Small feature size | Few parameters | Image enhancement | Image analysis | Algorithm | Theoretical computer science

The invention discloses a fall detection method based on an hourglass convolution automatic coding neural network, and the method comprises the following steps: inputting a collected image into the trained hourglass convolution automatic coding neural network, and judging whether a target falls or not; wherein the hourglass convolution automatic coding neural network comprises an hourglass convolution encoder and a classifier; wherein the hourglass convolution encoder is obtained by replacing a convolution layer in the convolution automatic encoder with an hourglass unit, namely an hourglass convolution layer; and the hourglass convolution encoder comprising three hourglass convolution layers and three pooling layers. According to the invention, the convolution layer in the convolution automatic encoder is replaced by the hourglass unit, so that in a complex visual task, when the hourglass convolution automatic encoder neural network is used to extract the multi-scale features of the video image, the intermediate features with richer information can be obtained, and the accuracy of fall detection is improved.

Owner:NORTHEASTERN UNIV AT QINHUANGDAO

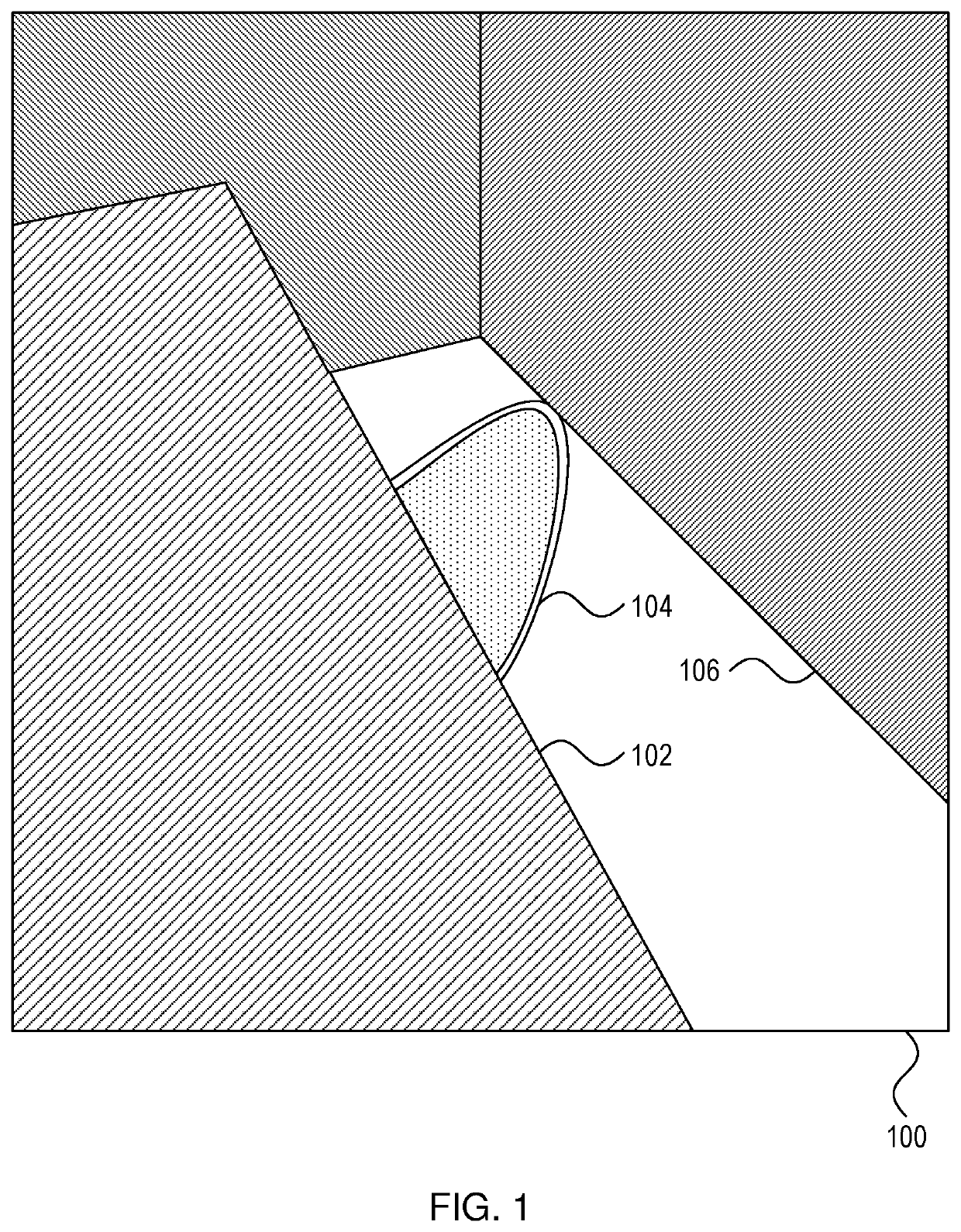

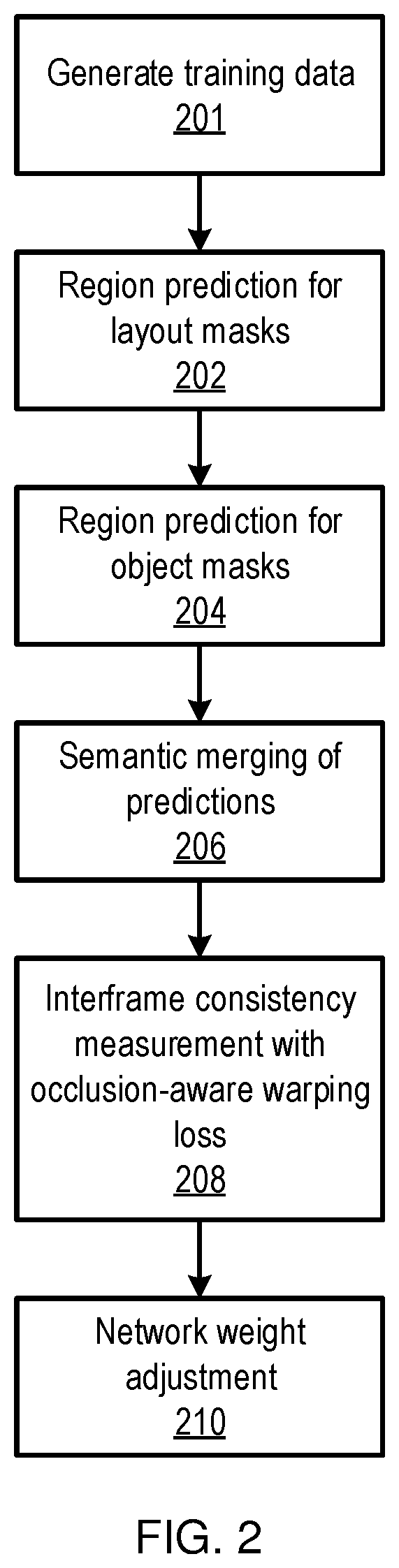

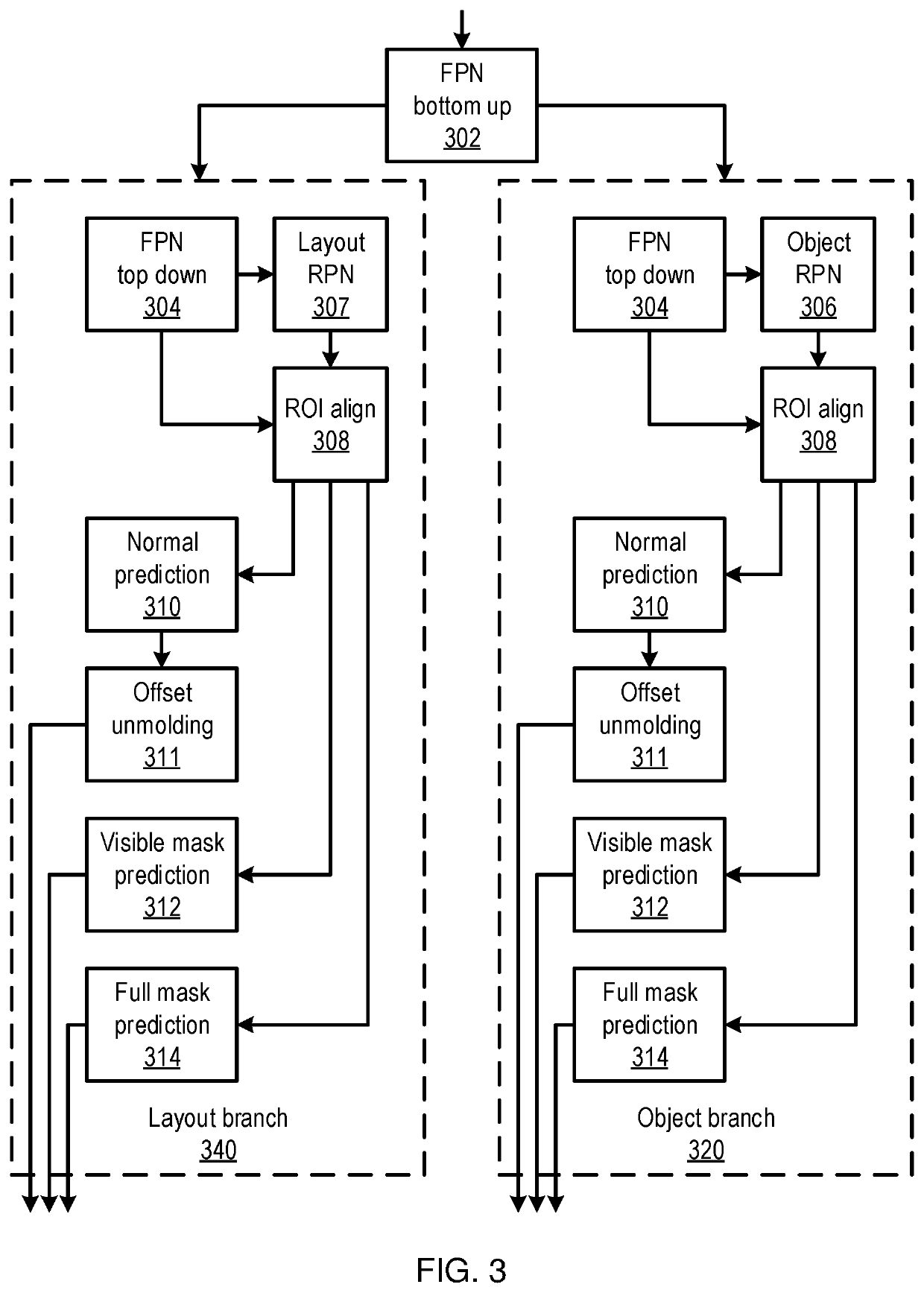

Occlusion-aware indoor scene analysis

Methods and systems for occlusion detection include detecting a set of foreground object masks in an image, including a mask of a visible portion of a foreground object and a mask of the foreground object that includes at least one occluded portion, using a machine learning model. A set of background object masks is detected in the image, including a mask of a visible portion of a background object and a mask of the background object that includes at least one occluded portion, using the machine learning model. The set of foreground object masks and the set of background object masks are merged using semantic merging. A computer vision task is performed that accounts for the at least one occluded portion of at least one object of the merged set.

Owner:NEC LAB AMERICA

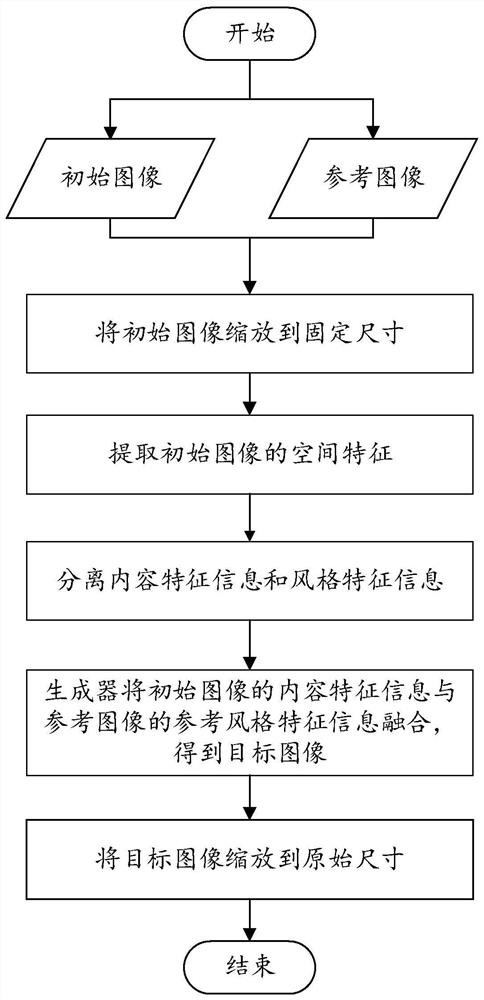

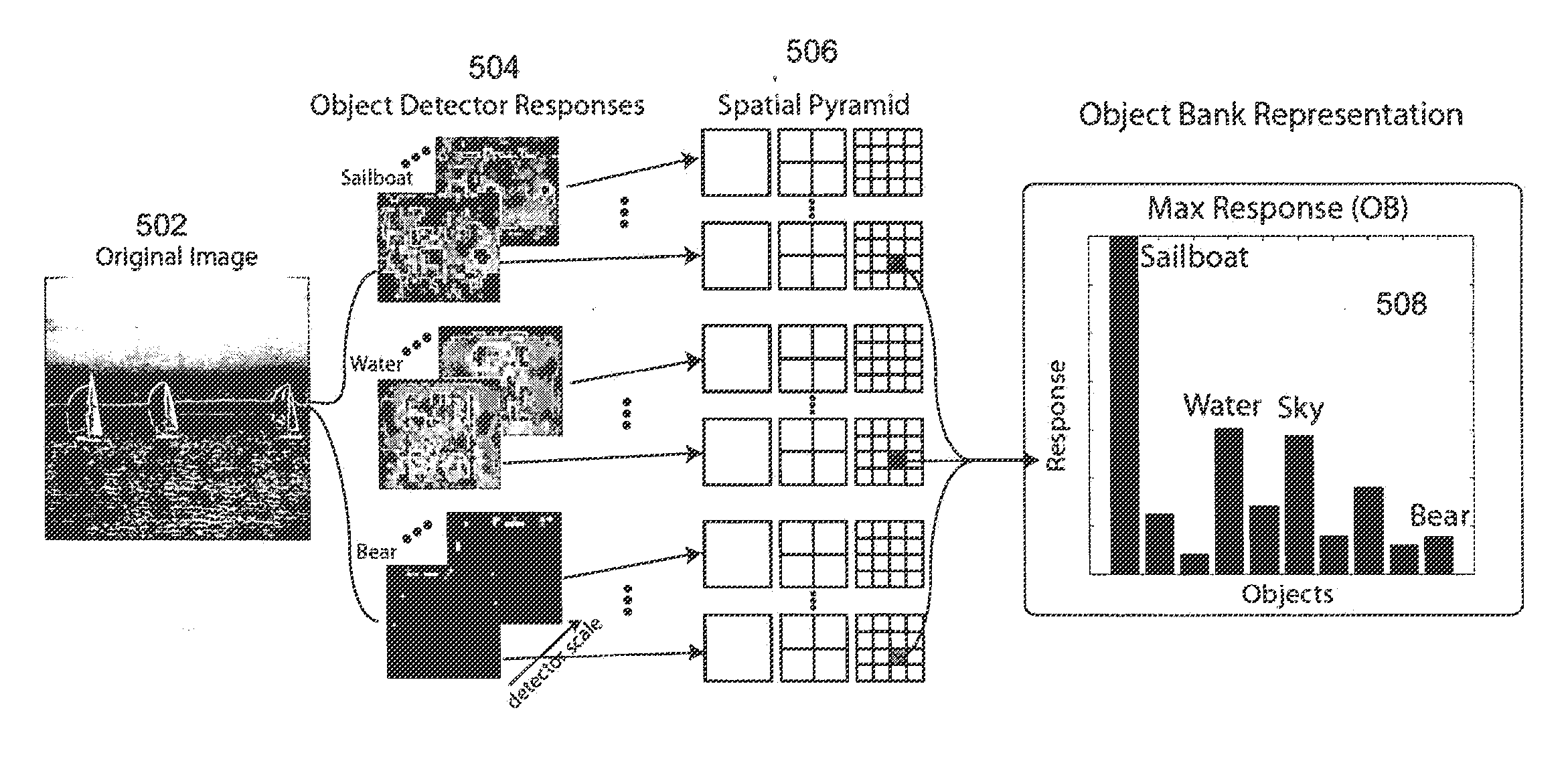

Unsupervised image-to-image translation method based on content style separation

Active | CN112766079A | Steady concern | Convenience to follow | Image enhancement | Geometric image transformation | Feature extraction | Radiology

The embodiment of the invention discloses an unsupervised image-to-image translation method. A specific embodiment of the method comprises the following steps: acquiring an initial image, and zooming the initial image to a specific size; performing spatial feature extraction on the initial image through an encoder to obtain feature information; inputting the feature information into a content style separation module to obtain content feature information and style feature information; generating reference style feature information of the reference image in response to the acquired reference image, and setting the reference style feature information as Gaussian noise consistent with the style feature information in shape in response to the fact that the reference image is not acquired; inputting the content feature information and the reference style feature information into a generator to obtain a target image translating the initial image into a reference image style; and zooming the target image to a size matched with the initial image to obtain a final target image. The implementation mode can be applied to various different advanced vision tasks, and the expandability of the whole system is improved.

Owner:BEIHANG UNIV

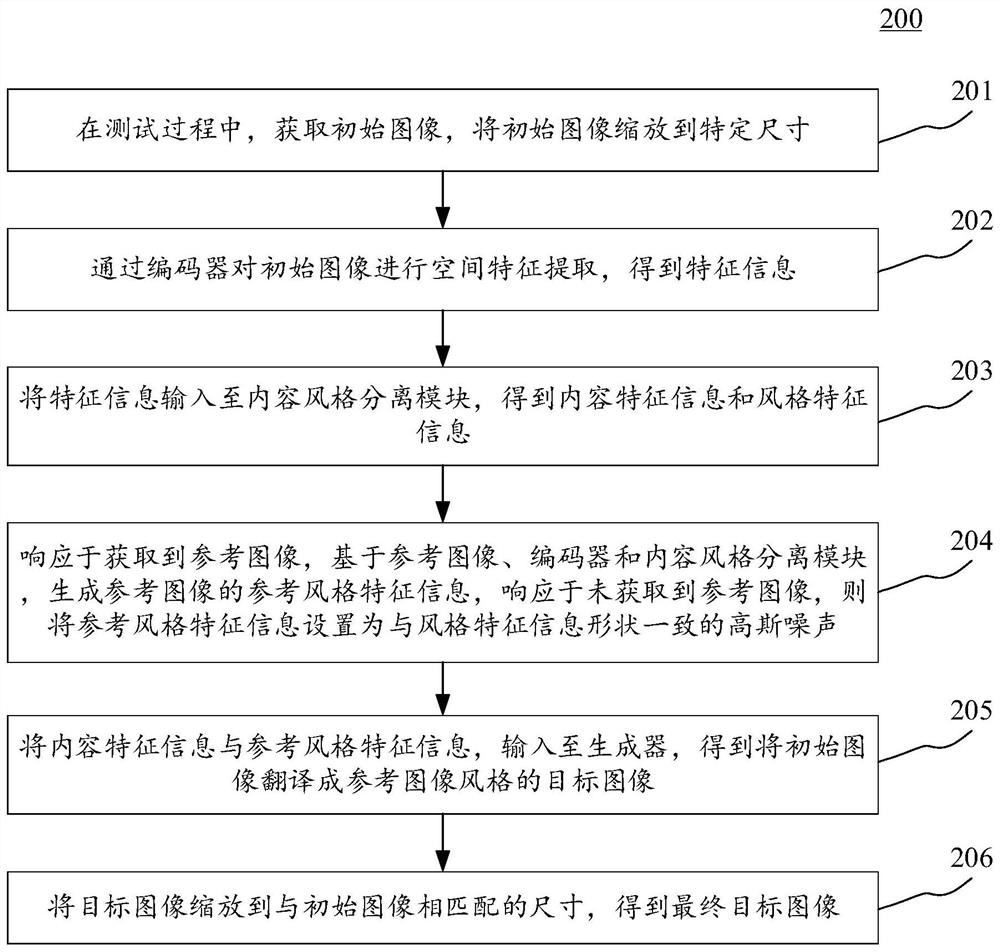

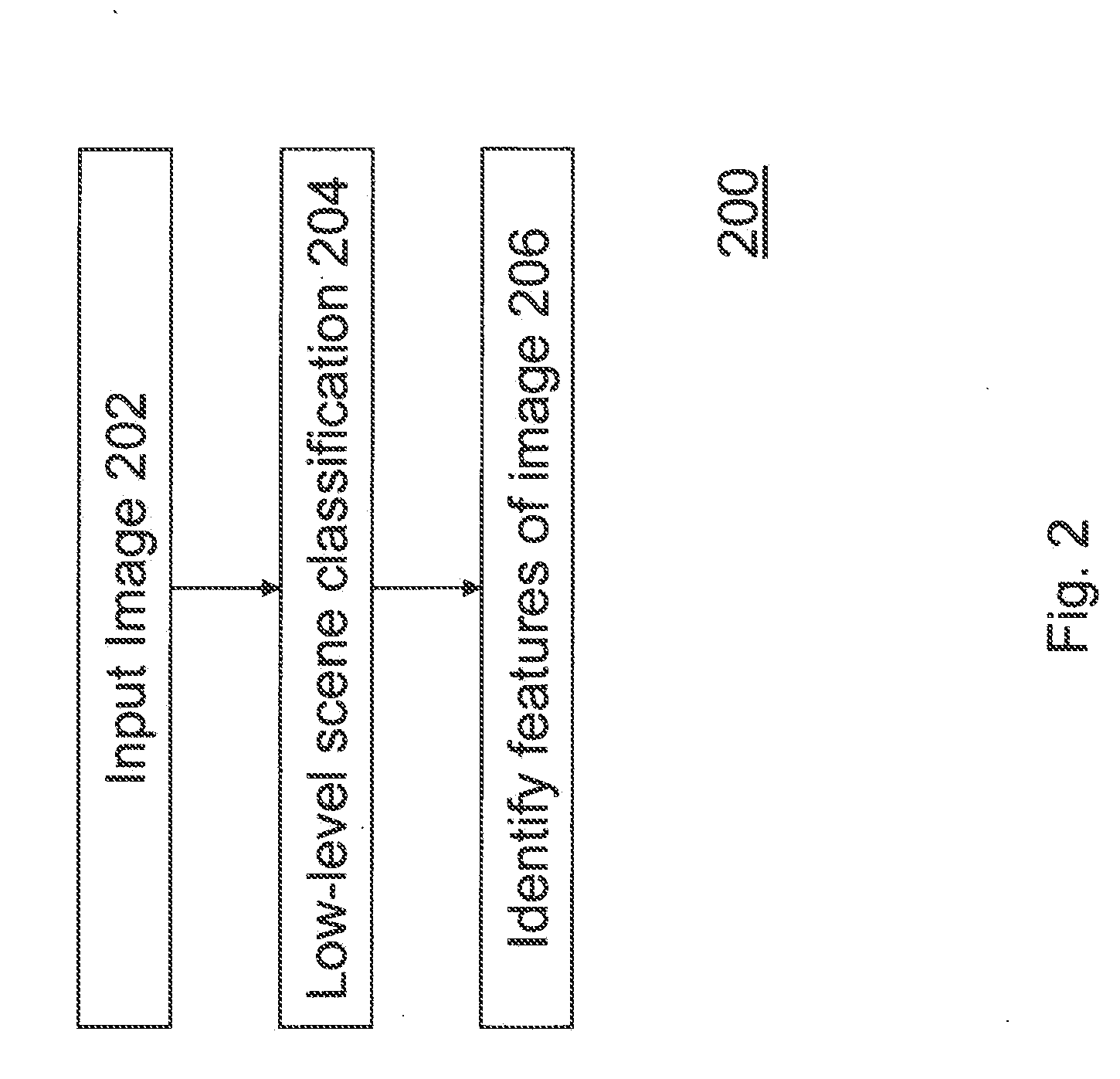

Method for Implementing a High-Level Image Representation for Image Analysis

Inactive | US20160155016A1 | Not alleviating task | Geometric image transformation | Character and pattern recognition | Data set | Visual recognition

Robust low-level image features have been proven to be effective representations for a variety of visual recognition tasks such as object recognition and scene classification; but pixels, or even local image patches, carry little semantic meaning. For high-level visual tasks, such low-level image representations are potentially not enough. The present invention provides a high-level image representation where an image is represented as a scale-invariant response map of a large number of pre-trained generic object detectors, blind to the testing dataset or visual task. Leveraging this representation, superior performance on high-level visual recognition tasks is achieved with relatively simple classifiers such as logistic regression and linear SVM classifiers.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

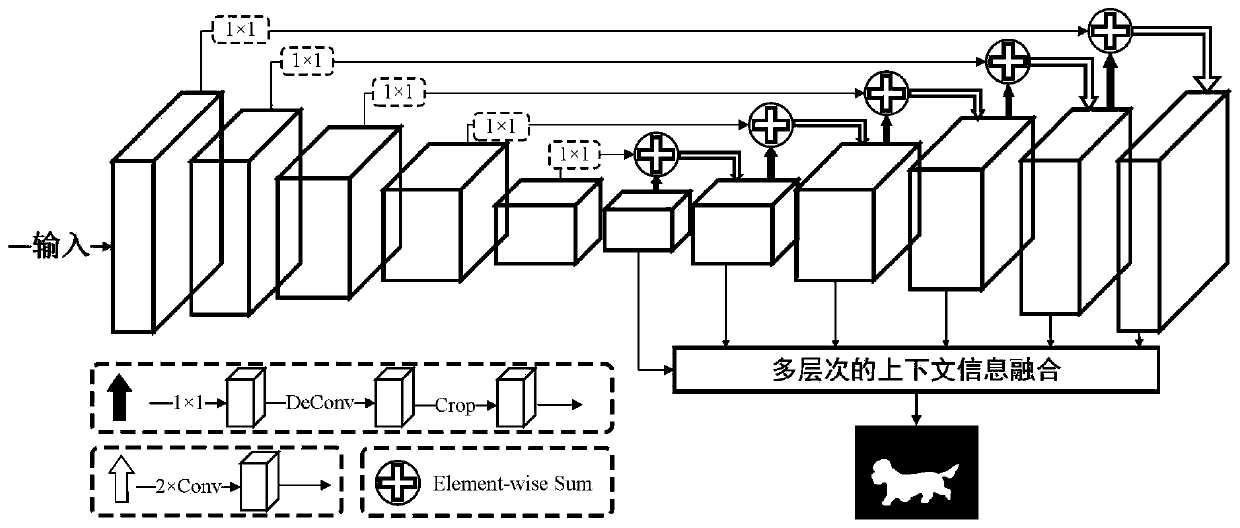

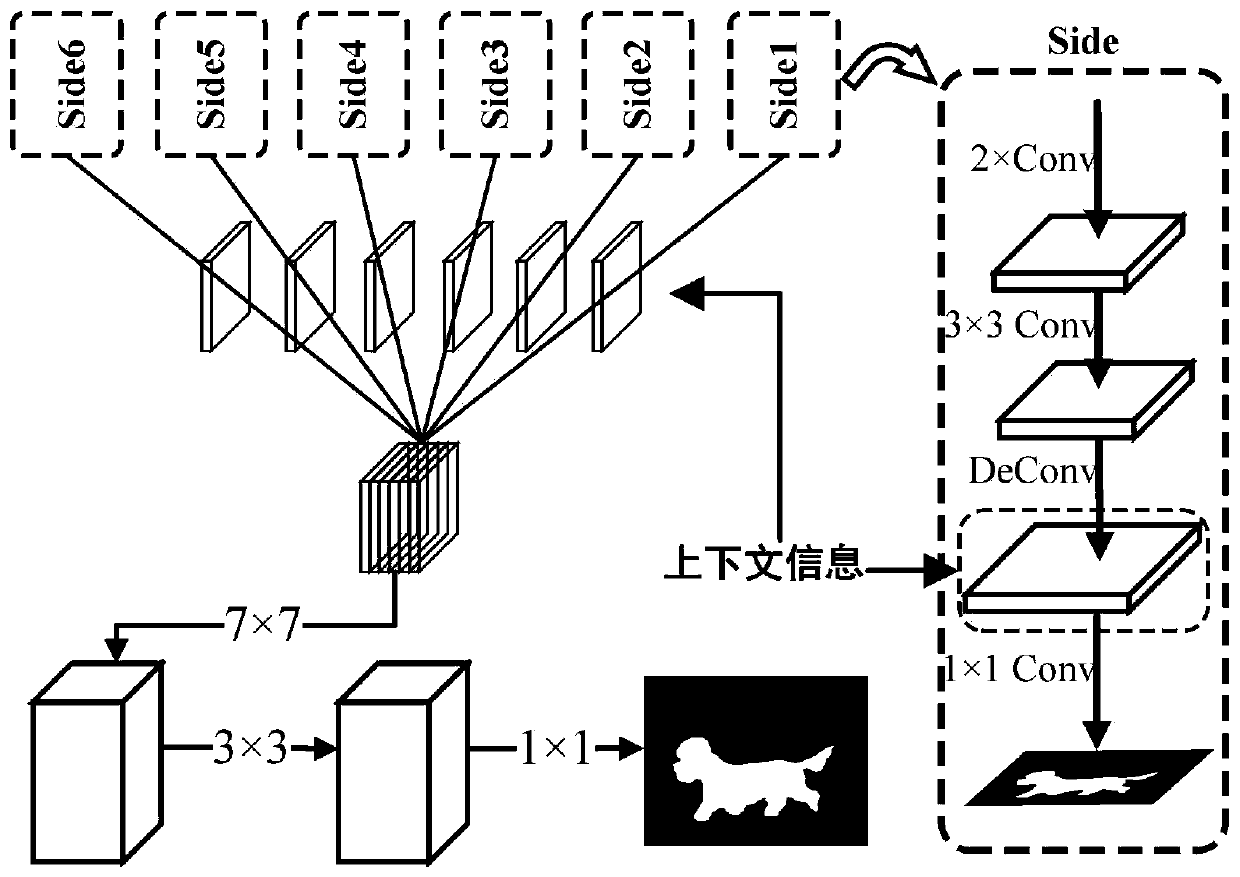

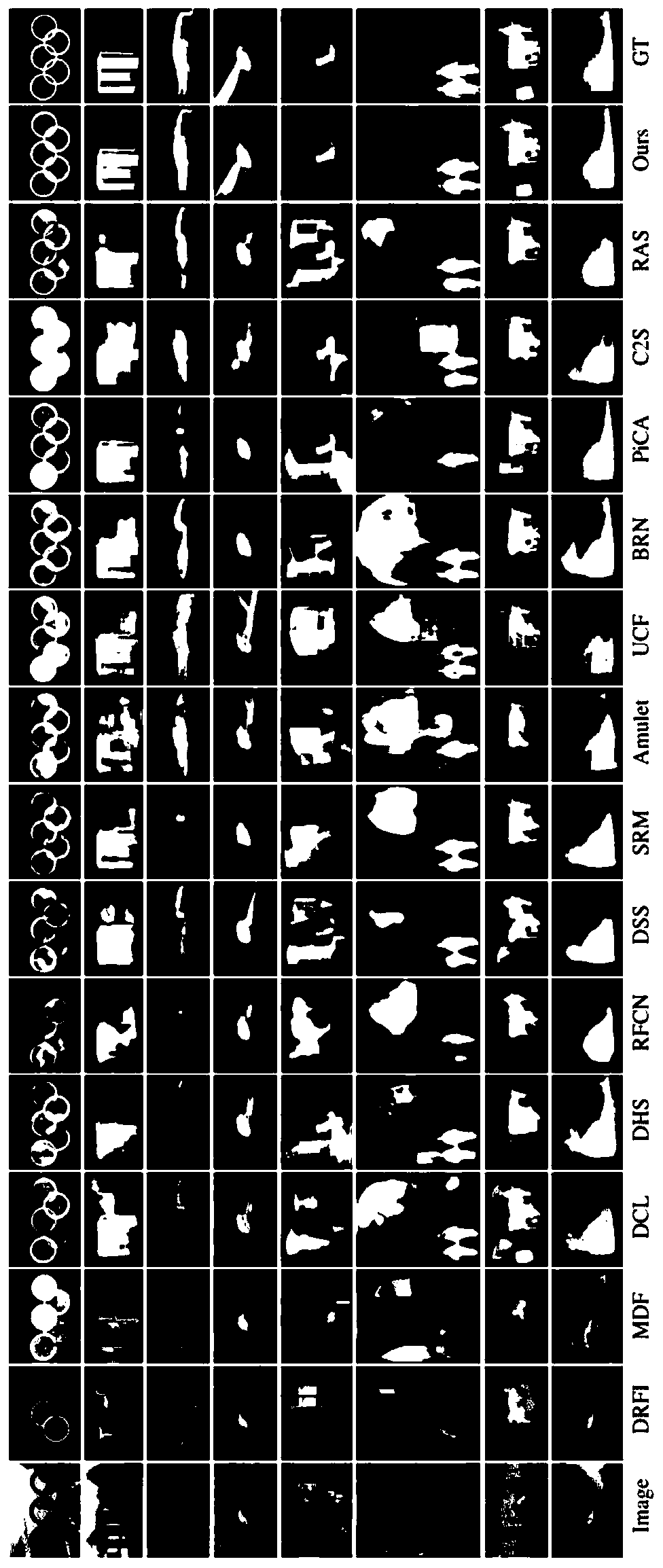

A salient object detection method based on multi-level context information fusion

Active | CN109766918A | Accurate Salient Object Detection | Character and pattern recognition | Neural architectures | Saliency map | Visual perception

The invention discloses a salient object detection method based on multi-level context information fusion. The object of the method is to construct and utilize multi-level context features to perform image saliency detection. According to the method, a new convolutional neural network architecture is designed, and the new convolutional neural network architecture is optimized in a manner of convolution from a high layer to a bottom layer, so that the context information on different scales is extracted for an image, and the context information is fused to obtain a high-quality image saliency map. The salient region detected by using the method can be used for assisting other visual tasks.

Owner:NANKAI UNIV

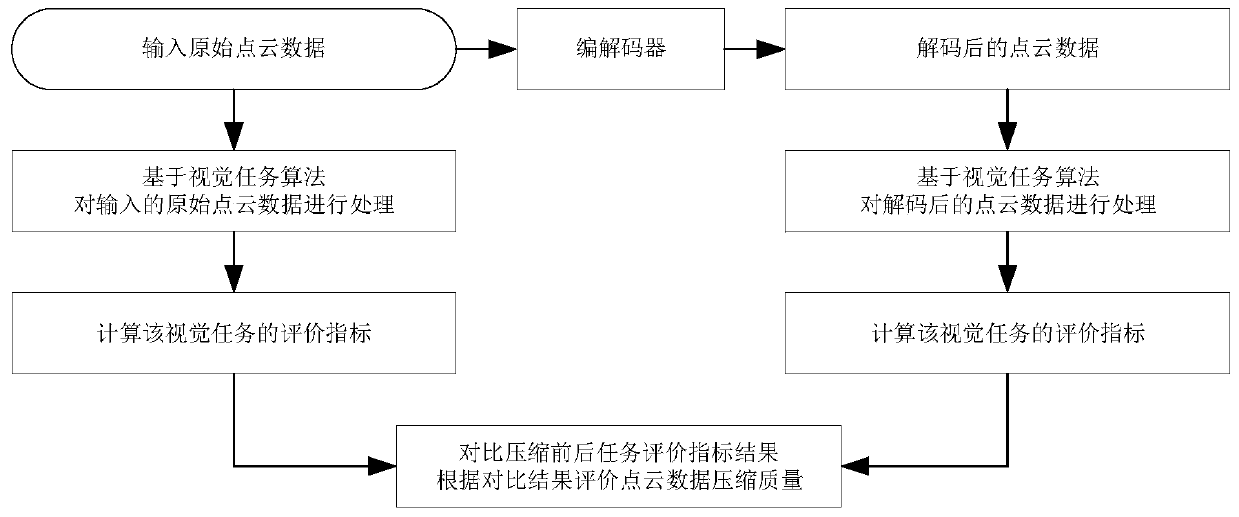

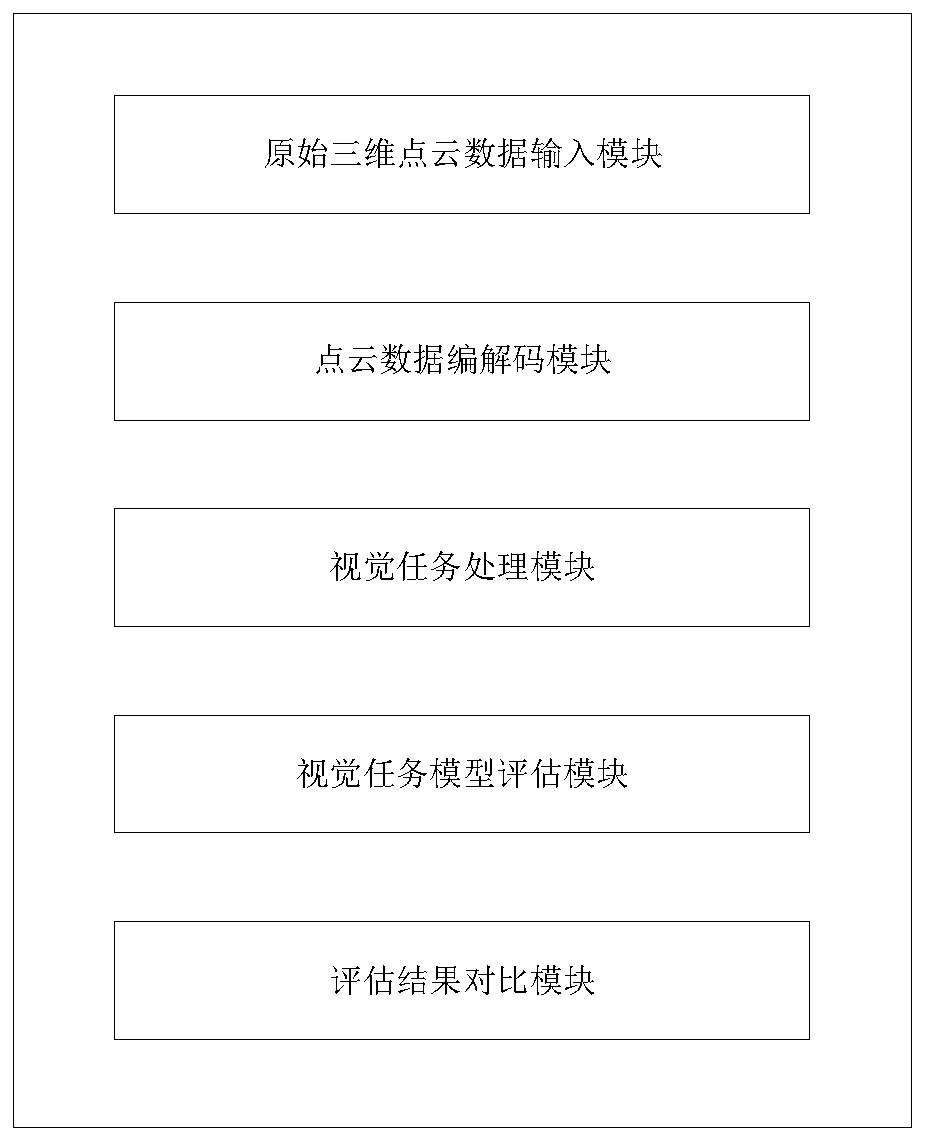

Visual task-based point cloud data compression quality evaluation method and system

The invention provides a visual task-based point cloud data compression quality evaluation method and system. The method comprises the steps of inputting original three-dimensional point cloud data; processing the original three-dimensional point cloud data, and calculating a first evaluation index of a visual task according to the processed first data; encoding and decoding the original three-dimensional point cloud data, processing the encoded and decoded three-dimensional point cloud data, and calculating a second evaluation index of the visual task according to the processed second data; and comparing the first evaluation index with the second evaluation index to obtain an evaluation index comparison result, and evaluating the point cloud data compression quality according to the comparison result. Compared with an existing point cloud quality evaluation method, the invention is task-specific: the point cloud compression quality can be measured according to specific visual tasks. In addition, the point cloud compression quality can be comprehensively measured according to various visual tasks.

Owner:SHANGHAI JIAO TONG UNIV

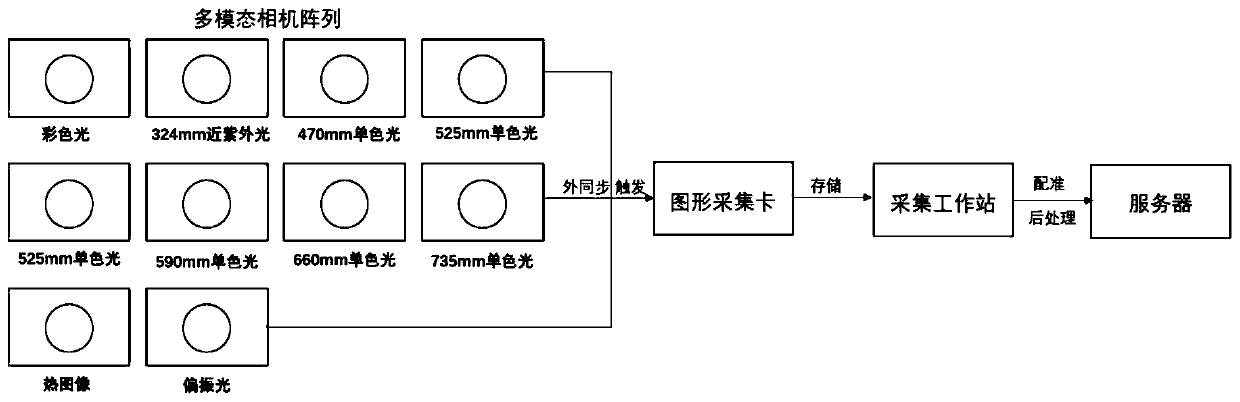

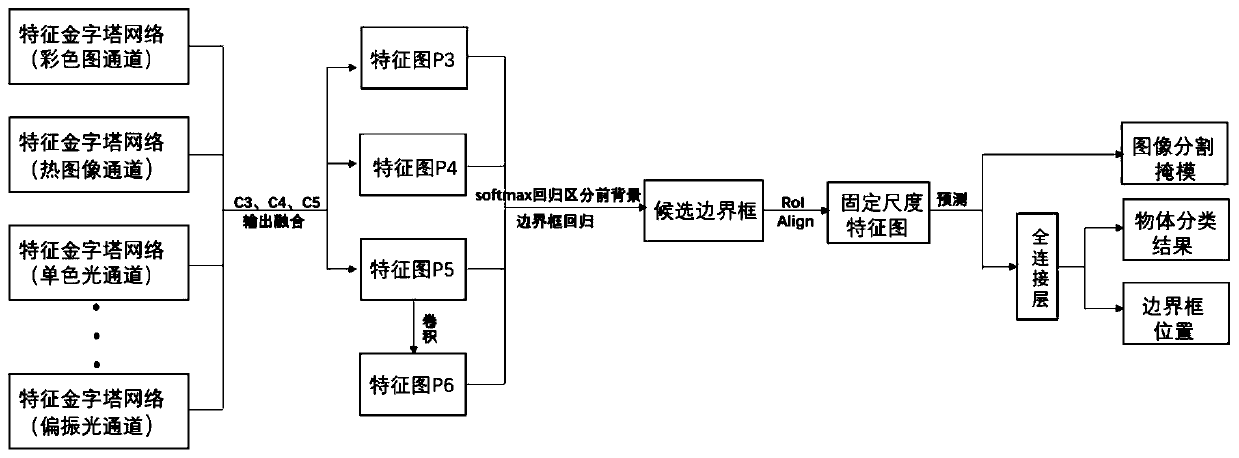
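The evaluation steps in the abstract above can be sketched as follows. This is only an illustration under stated assumptions: coordinate quantization stands in for a real point cloud codec, a nearest-centroid classifier stands in for the visual task, accuracy is the evaluation index, and all function names are hypothetical.

```python
def quantize_codec(points, step):
    """Stand-in lossy encode/decode: snap each coordinate to a grid of size `step`."""
    return [tuple(round(c / step) * step for c in p) for p in points]

def nearest_label(point, centroids):
    """Toy visual task: assign the label of the closest centroid."""
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(point, centroids[label])),
    )

def task_accuracy(points, labels, centroids):
    """Evaluation index of the task: fraction of points labelled correctly."""
    hits = sum(nearest_label(p, centroids) == y for p, y in zip(points, labels))
    return hits / len(points)

def evaluate_compression(points, labels, centroids, step):
    """Compare the task index on original data with the index on decoded data."""
    first = task_accuracy(points, labels, centroids)      # first evaluation index
    decoded = quantize_codec(points, step)                # encode + decode
    second = task_accuracy(decoded, labels, centroids)    # second evaluation index
    return {"original": first, "decoded": second, "drop": first - second}

# Made-up data: two classes separated along the x axis.
centroids = {"a": (0.0, 0.0, 0.0), "b": (1.0, 0.0, 0.0)}
points = [(0.1, 0.0, 0.0), (0.4, 0.0, 0.0), (0.6, 0.0, 0.0), (0.9, 0.0, 0.0)]
labels = ["a", "a", "b", "b"]

fine = evaluate_compression(points, labels, centroids, step=0.1)
coarse = evaluate_compression(points, labels, centroids, step=2.0)
```

With the fine grid the task index is unchanged, while the coarse grid collapses every point onto the origin and the index falls. Running the same comparison with several different tasks would give the more comprehensive measurement the abstract mentions.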

Pixel-level image segmentation system and method based on multi-modal spectral image

PendingCN111462128ASolve the difficulty of synchronous shootingHigh precisionImage enhancementImage analysisData setImage segmentation algorithm

The invention discloses a pixel-level image segmentation system based on a multi-modal spectral image. A plurality of visible light cameras and a thermal imaging sensor form a camera array, and the visible light cameras are built into a multi-modal camera array group to form a multi-modal information source; a graphic acquisition card is connected with the multi-modal information source and an acquisition workstation, and stores the acquired image data in the acquisition workstation; and a server carries out registration and post-processing on the graphic data in the acquisition workstation. The invention further discloses a pixel-level image segmentation method based on the multi-modal spectral image. A single-modal image segmentation algorithm is extended to accept multi-modal input, and the multi-modal feature maps are fused in an intermediate layer of the network, which improves the precision of the Mask-RCNN image segmentation algorithm. The multi-modal spectral image acquisition system that is built can also be used to construct multi-modal data sets, applies to related machine vision tasks such as target detection, image segmentation and semantic segmentation, and has practical application prospects.

Owner:NANJING UNIV

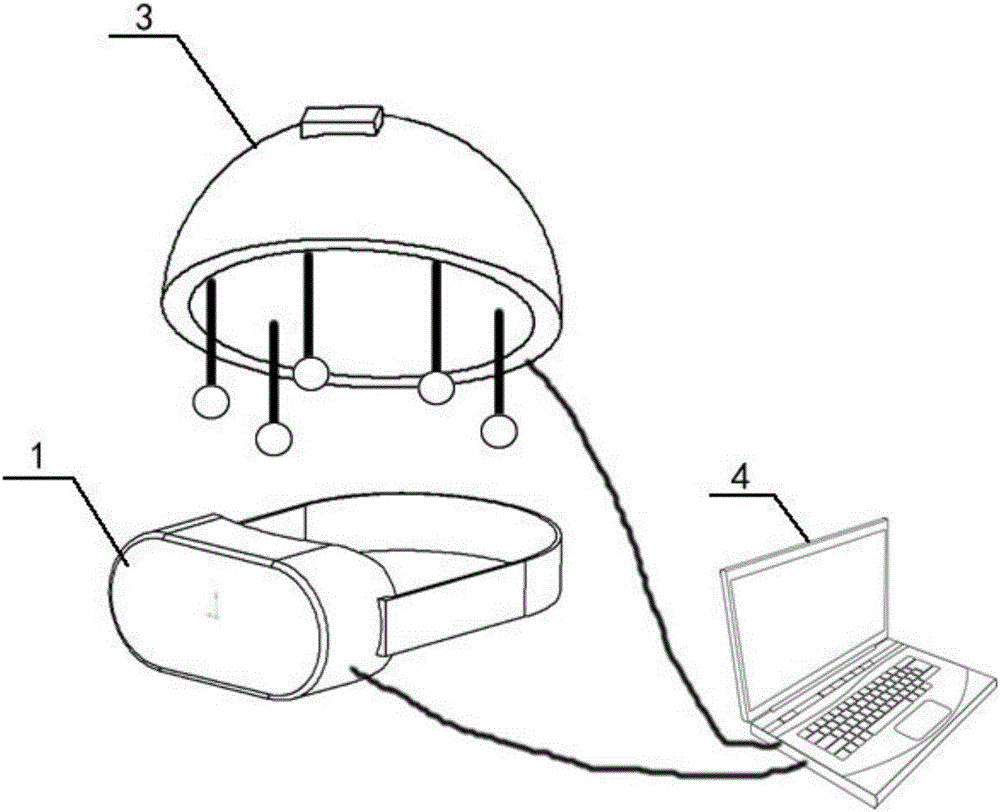

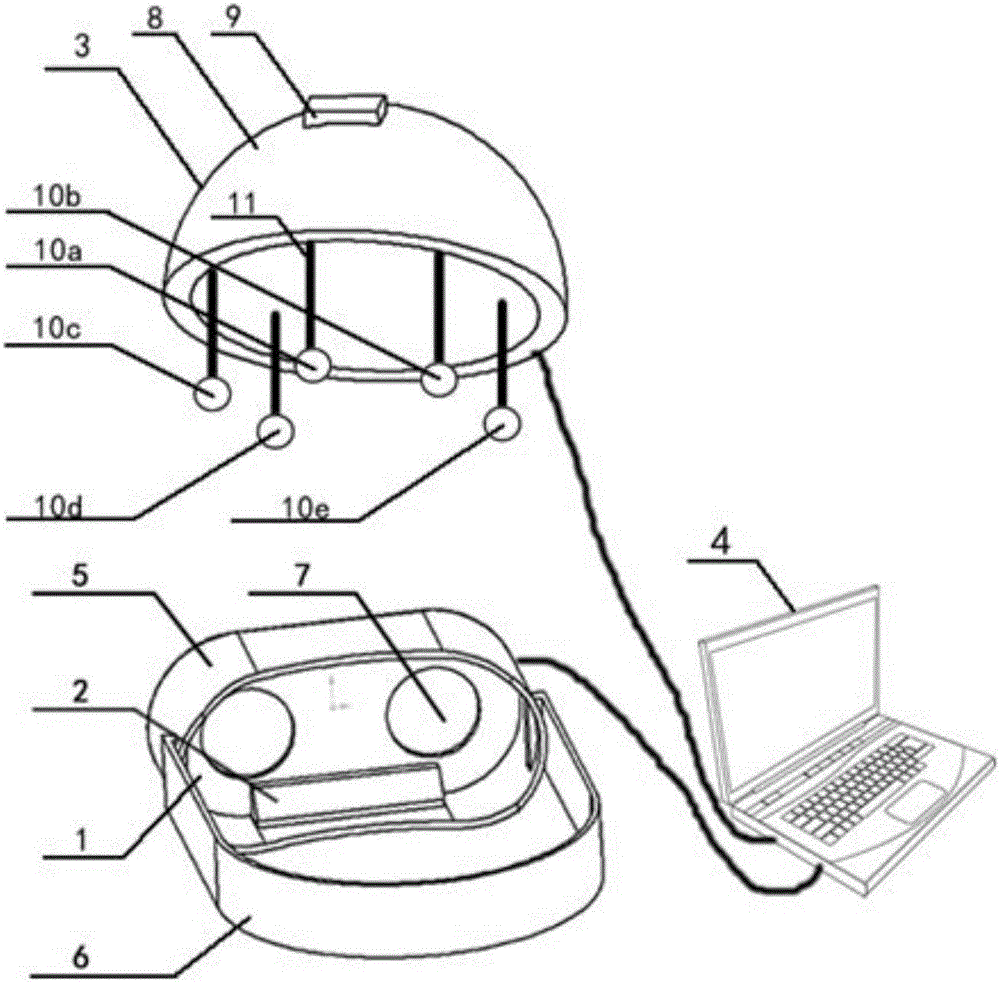

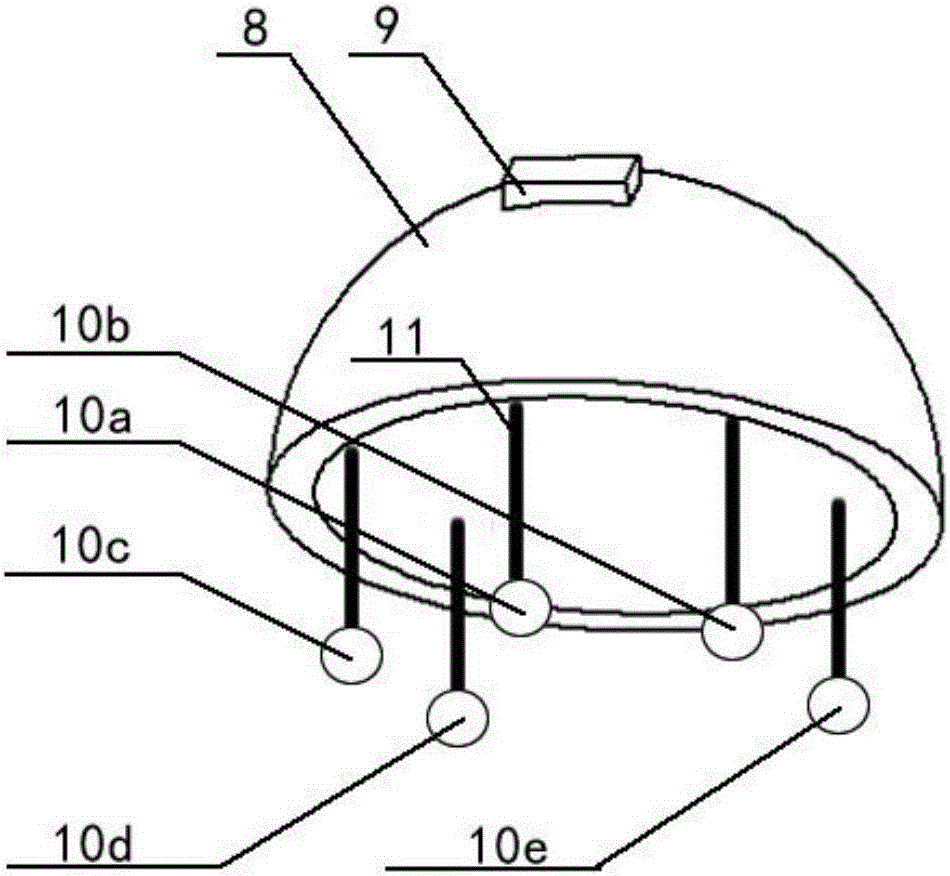

Eye movement measurement device based on simulated spatial disorientation scene

InactiveCN106551674AReduce the burden onEye movement test conditions are goodEye diagnosticsEye Movement MeasurementsElectricity

The invention provides a device that presents a simulated spatial disorientation scene to a subject while recording the subject's eye movements. The eye movement measurement device mainly comprises: a head-mounted display device that presents a visual task to the subject; an eye movement tracking module that records the subject's eye movements in real time; a vestibular electrical stimulation device that alters the input signal of the semicircular canals of the human vestibular organ and delivers vestibular electrical stimulation to the subject; and a control computer that controls the stimulation manner, stimulation current mode, intensity and stimulation time of the vestibular electrical stimulation device, controls the visual task shown by the head-mounted display device, displays relevant parameters in real time, and records the eye movement data returned by the eye movement tracking module in real time. With the device, the visual task and the vestibular electrical stimulation can be applied to the subject simultaneously while the subject's eye movements are recorded. A researcher can thus investigate eye movements while a vestibular task and a visual task are applied at the same time; the device also reduces the researcher's burden of installing and setting up equipment, provides good eye movement experiment conditions, and facilitates research on spatial disorientation.

Owner:PLA NAVY GENERAL HOSIPTAL +1

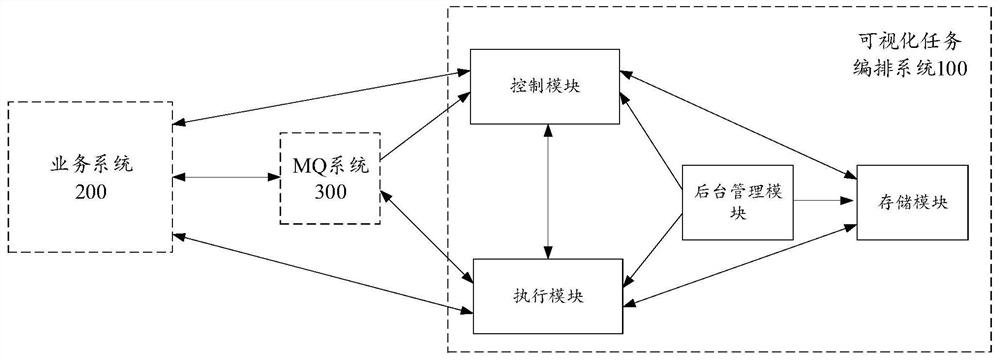

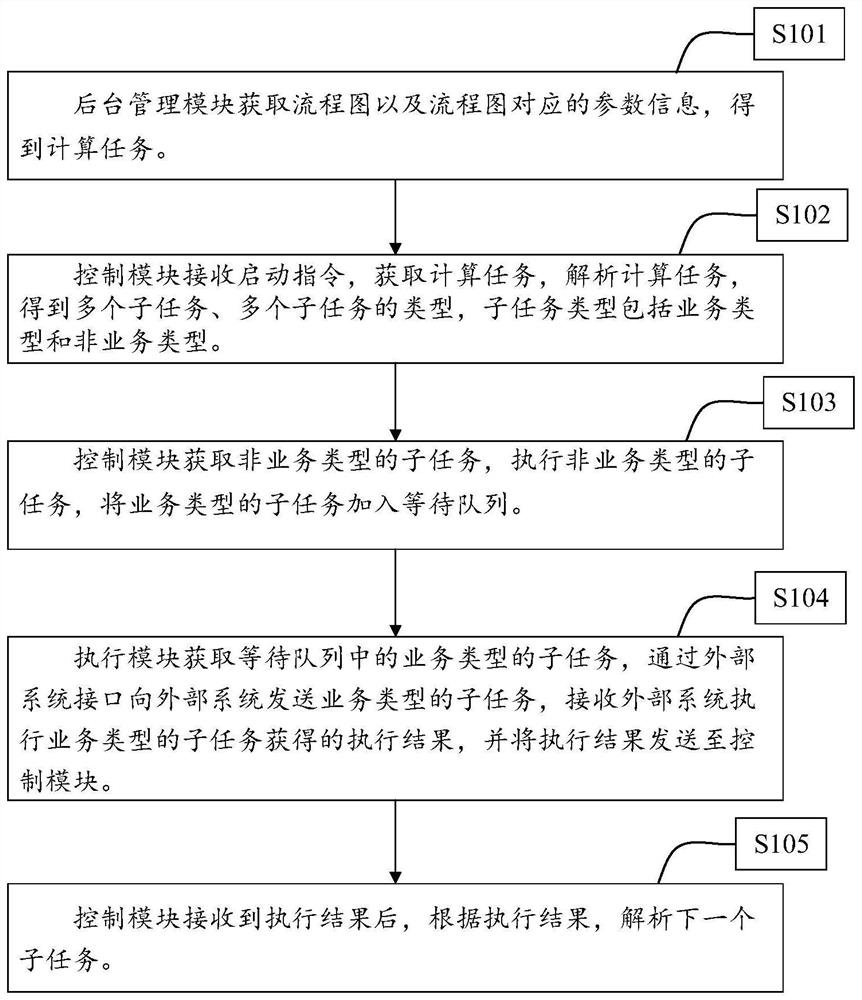

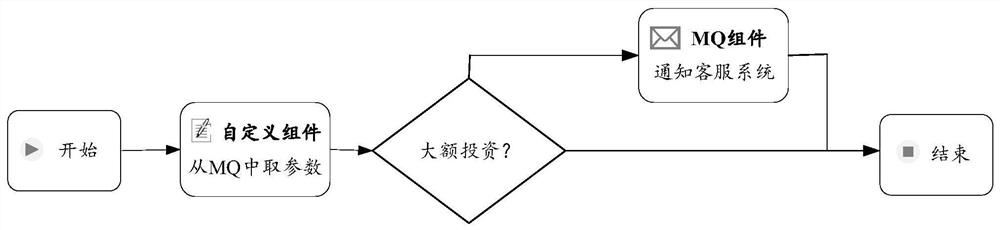

Visual task arrangement method and device and storage medium

PendingCN113448547AImprove orchestration system efficiencyPersonalized Task OrchestrationSoftware designVisual/graphical programmingIndustrial engineeringVisual task

The invention provides a visual task arrangement method. A control module obtains and parses a calculation task to obtain a plurality of subtasks and their types. The calculation task comprises a flow chart and the parameter information corresponding to it; the flow chart comprises a plurality of components and the execution sequence among them, and the parameter information comprises the configuration parameters of each component. The subtasks correspond one-to-one to the components, and each subtask is of either a service type or a non-service type. The control module executes the non-service subtasks and adds the service subtasks to a waiting queue. The execution module takes the service subtasks from the waiting queue, sends them to an external system through an external system interface, and receives the execution result after the external system has executed them.

Owner:WEIKUN (SHANGHAI) TECH SERVICE CO LTD
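The split between the control module and the execution module described above might look roughly like this. It is a sketch only: the flow chart is simplified to an ordered list of `(component, type)` pairs, the external system is a plain Python callable, and every class and function name (`parse_task`, `ControlModule`, `ExecutionModule`, `fake_external_system`) is hypothetical rather than taken from the patent.

```python
from collections import deque

def parse_task(flow_chart, params):
    """Map each flow-chart component to exactly one subtask, in execution order."""
    return [
        {"component": name, "kind": kind, "config": params.get(name, {})}
        for name, kind in flow_chart
    ]

class ControlModule:
    """Runs non-service subtasks itself and queues service subtasks."""

    def __init__(self, waiting_queue):
        self.waiting_queue = waiting_queue
        self.local_results = {}

    def run(self, flow_chart, params):
        for sub in parse_task(flow_chart, params):
            if sub["kind"] == "service":
                self.waiting_queue.append(sub)
            else:
                # Placeholder for local execution of a non-service subtask.
                self.local_results[sub["component"]] = "local:" + sub["component"]

class ExecutionModule:
    """Takes service subtasks from the queue and hands them to an external system."""

    def __init__(self, waiting_queue, external_call):
        self.waiting_queue = waiting_queue
        self.external_call = external_call

    def drain(self):
        results = {}
        while self.waiting_queue:
            sub = self.waiting_queue.popleft()
            results[sub["component"]] = self.external_call(sub)
        return results

def fake_external_system(sub):
    """Stand-in for the external system interface."""
    return "remote:" + sub["component"]

# Made-up calculation task: three components, one of them a service call.
flow_chart = [("load", "non-service"), ("score", "service"), ("report", "non-service")]
params = {"score": {"model": "demo"}}

queue = deque()
control = ControlModule(queue)
control.run(flow_chart, params)
remote_results = ExecutionModule(queue, fake_external_system).drain()
```

Keeping the queue between the two modules means the control module never blocks on the external system; in a real deployment the queue would presumably be a shared or persistent one rather than an in-process `deque`.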

