Method for constructing a multi-vision-task collaborative depth estimation model

A depth estimation model construction technology, applied in computing, image data processing, instruments, and related fields. It addresses problems such as the difficulty of using model parameters and the resulting loss of generalization ability across scenes, and it improves the accuracy of depth estimation.



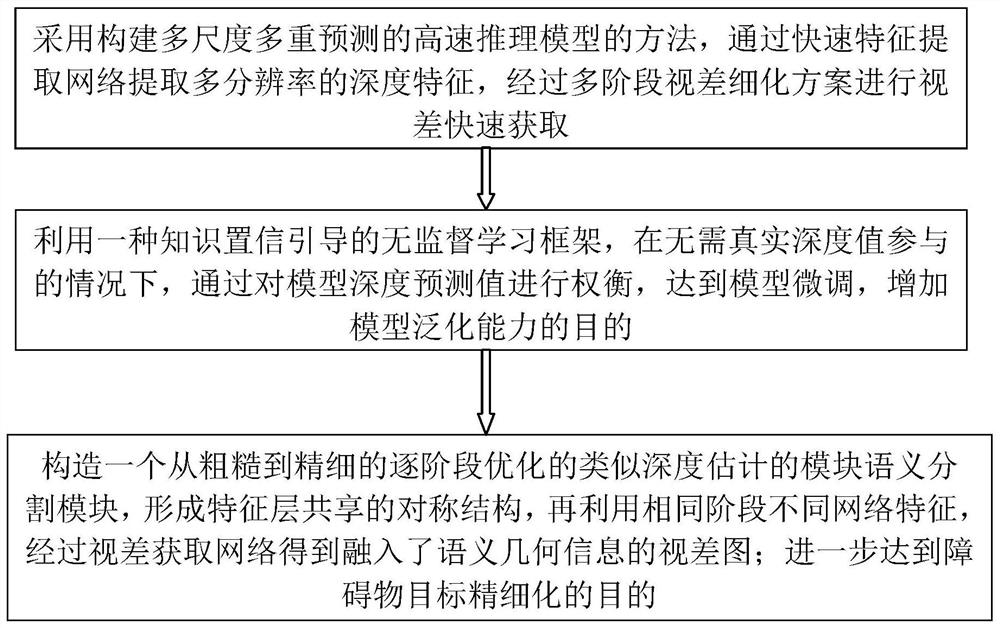


Embodiment

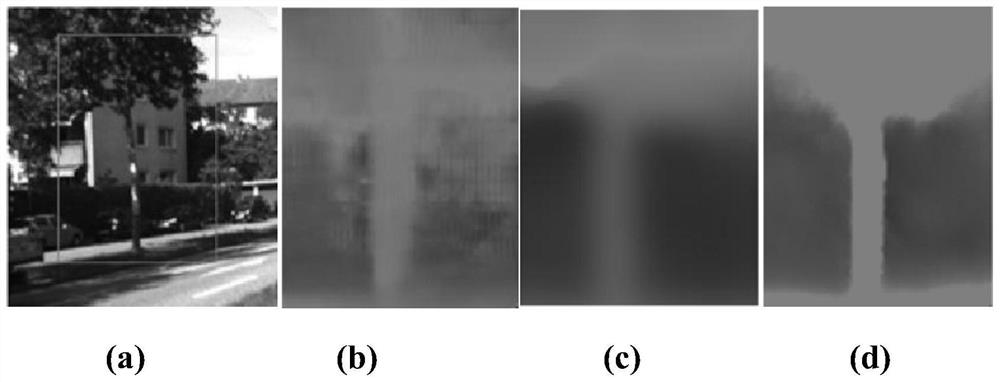

[0064] The method was validated on the KITTI dataset and compared with several classic depth acquisition algorithms; the results are shown in Table 1. On the depth map metrics, the present invention achieves the lowest error rate in both the global and the occluded regions, and it recovers depth information for scene details more accurately, as shown in FIG. 2. The algorithm was also verified under different road conditions: as shown in FIG. 3, it produces better depth estimates under all four road conditions tested.

[0065] Table 1: Experimental comparison on the KITTI dataset

[0066]

[0067]
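The text reports an "error rate" for the global and occluded regions but does not define the metric. The sketch below assumes a KITTI-2015-style outlier percentage: a pixel counts as bad when its absolute error exceeds 3 units and also exceeds 5% of the ground truth. Only pixels with valid ground truth (here assumed to be encoded as values greater than 0) are counted. The function name `outlier_rate`, the thresholds, and the `region_mask` argument (used to restrict evaluation to a subset such as the occluded area) are hypothetical illustrations, not the patent's evaluation code.

```python
import numpy as np


def outlier_rate(pred, gt, region_mask=None, abs_thresh=3.0, rel_thresh=0.05):
    """Percentage of outlier pixels (assumed KITTI-2015-style criterion).

    A pixel is an outlier when |pred - gt| > abs_thresh AND
    |pred - gt| > rel_thresh * gt. Pixels with gt <= 0 are treated as
    having no ground truth and are ignored (an assumption about encoding).
    region_mask (hypothetical) optionally restricts evaluation to a region,
    e.g. occluded pixels; None evaluates all valid pixels ("global").
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)

    valid = gt > 0
    if region_mask is not None:
        valid &= np.asarray(region_mask, dtype=bool)
    if not valid.any():
        return float("nan")  # nothing to evaluate in this region

    err = np.abs(pred[valid] - gt[valid])
    bad = (err > abs_thresh) & (err > rel_thresh * gt[valid])
    return float(100.0 * bad.mean())


gt = np.array([[10.0, 20.0], [40.0, 0.0]])    # 0.0 = no ground truth
pred = np.array([[10.5, 25.0], [40.0, 7.0]])
occluded = np.array([[False, True], [True, True]])

print(outlier_rate(pred, gt))            # all valid pixels
print(outlier_rate(pred, gt, occluded))  # masked region only
```

In this toy example only the pixel with ground truth 20.0 is an outlier (error 5.0 exceeds both 3.0 and 5% of 20.0), so the global rate is one of three valid pixels and the masked rate is one of two. Reporting the same metric over different masks is what allows a single table to compare methods on both the whole image and a harder sub-region.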
