Scene graph generation method

A scene graph generation technology involving scene graphs and image regions, applied in fields such as neural learning methods, biological neural network models, and editing/combining graphics or text. It addresses the problems of existing methods that do not use the relevant information of the scene graph, cannot capture the main content of a scene image, and do not prominently display the visual relationships in the image.

Embodiment Construction

[0045] The specific embodiments of the present invention will be further described below in conjunction with the accompanying drawings.

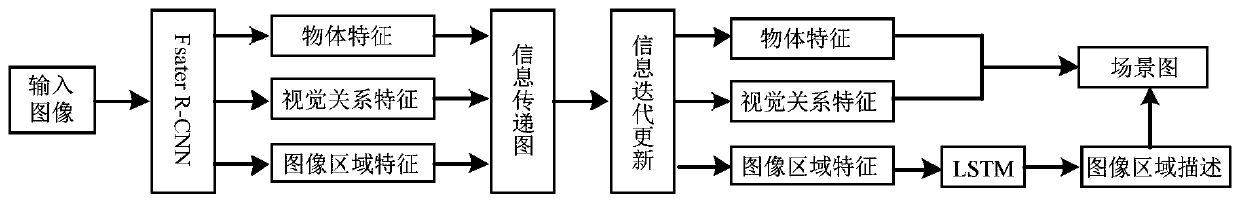

[0046] This embodiment is explained with reference to Figure 1. The multi-level semantic task-based scene graph generation method of this embodiment mainly comprises Faster R-CNN object feature extraction, the information transfer graph, iterative updating of feature information, image region description, and scene graph generation.

[0047] 1. Three different sets of proposals are produced, one for each of the three semantic levels of vision tasks in scene understanding (object detection, visual relationship detection, and image region description):

[0048] Object region proposal: the Faster R-CNN network detects objects in the input image and extracts a set of candidate regions $B = \{b_1, b_2, \ldots, b_n\}$ from it. For each region, the model not only extracts the bounding box $b_i$, which represents the position of the object, and...
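As a rough illustration of the candidate region set B, the following minimal Python sketch stores each region as a bounding box with a confidence score and prunes overlapping candidates with non-maximum suppression, the step Faster R-CNN commonly uses to thin its proposals. The names `Proposal`, `iou`, and `nms`, the `score` field, and the 0.5 threshold are assumptions made for this example; the text does not say how the patented method filters its candidates, and no real detector is run here.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Proposal:
    """One candidate region b_i, given by its corner coordinates."""
    x1: float
    y1: float
    x2: float
    y2: float
    score: float  # hypothetical confidence field, not taken from the source


def iou(a: Proposal, b: Proposal) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(proposals: List[Proposal], iou_threshold: float = 0.5) -> List[Proposal]:
    """Greedy non-maximum suppression: keep the highest-scoring boxes and
    drop any box that overlaps an already kept box above the threshold."""
    kept: List[Proposal] = []
    for p in sorted(proposals, key=lambda q: q.score, reverse=True):
        if all(iou(p, k) <= iou_threshold for k in kept):
            kept.append(p)
    return kept


# Toy candidates standing in for detector output (made-up numbers).
candidates = [
    Proposal(0, 0, 10, 10, 0.9),
    Proposal(1, 1, 10, 10, 0.8),    # heavily overlaps the first box
    Proposal(20, 20, 30, 30, 0.7),  # disjoint from both
]
B = nms(candidates)
```

In this toy input the second box overlaps the first well above the threshold and is suppressed, so B keeps the first and third boxes. In a real pipeline the boxes and scores would come from the detector instead of being hard-coded.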
