Increasing macroscalar instruction level parallelism

a macroscalar instruction and instruction level technology, applied in the field of processors, can solve the problems of reducing the performance of individual tasks relative to their best-case performance, over-utilizing excess resources, and effectively waste, and achieve the effect of increasing the instruction level parallelism and increasing the utilization of resources

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Benefits of technology

Problems solved by technology

Method used

Image

Examples

example 1

Program Code Loop

[0071]

r = 0 ;s = 0 ;for (x=0; x{if (A [x]{r = A [x+s] ; }else{s = A [x+r] ;}B [x] = r + s;}

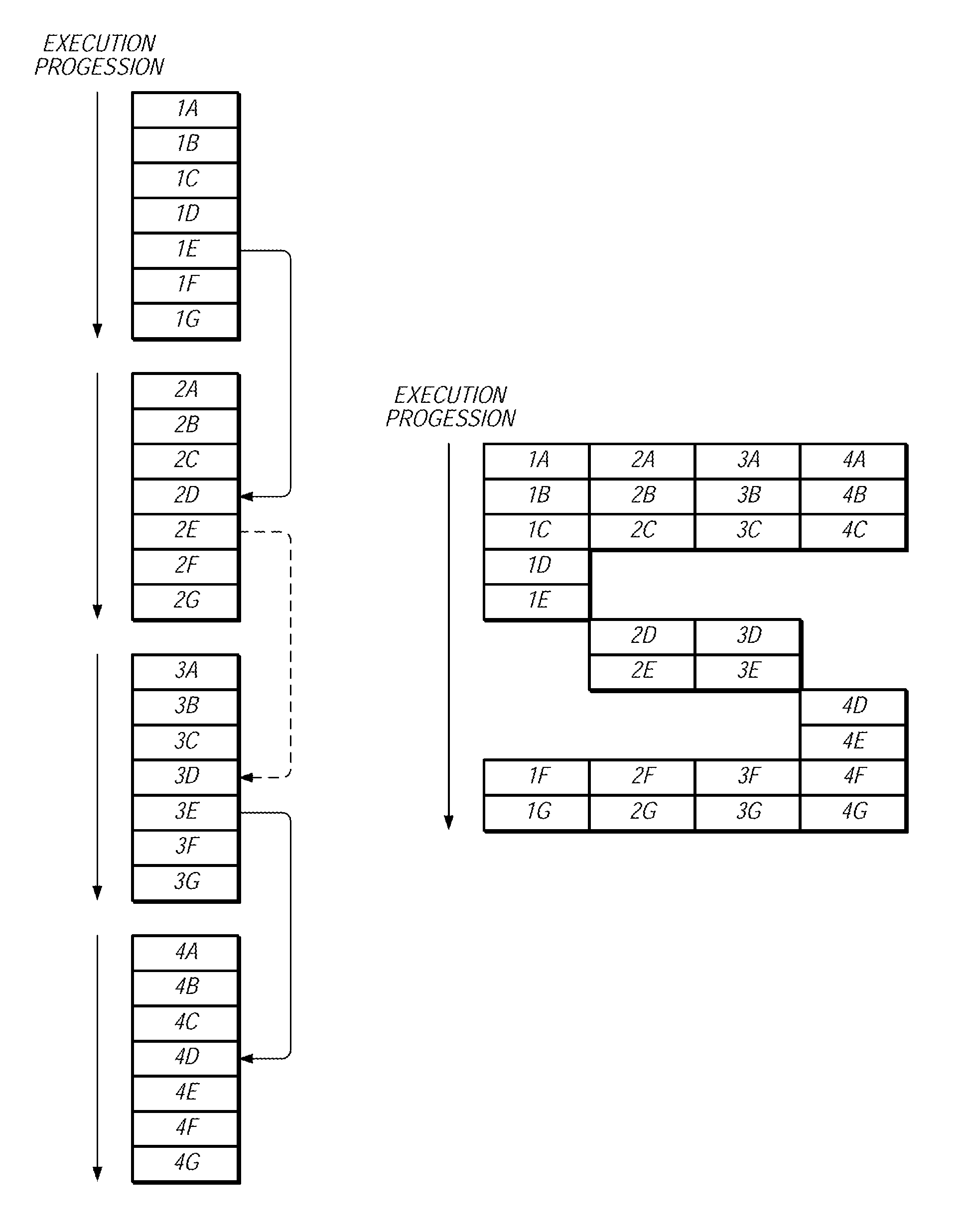

[0072]Using the Macroscalar architecture, the loop in Example 1 can be vectorized by partitioning the vector into segments for which the conditional (A[x]

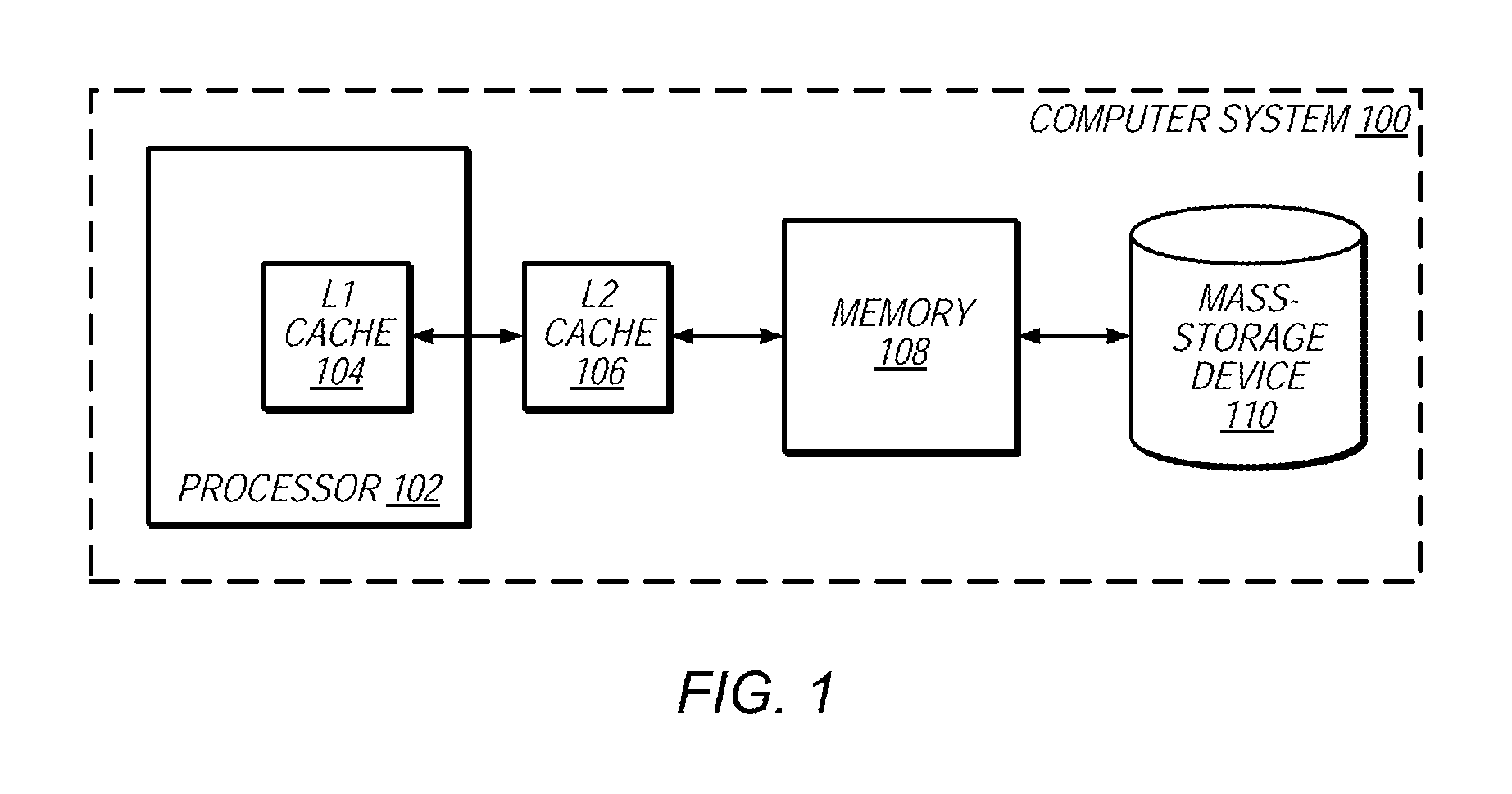

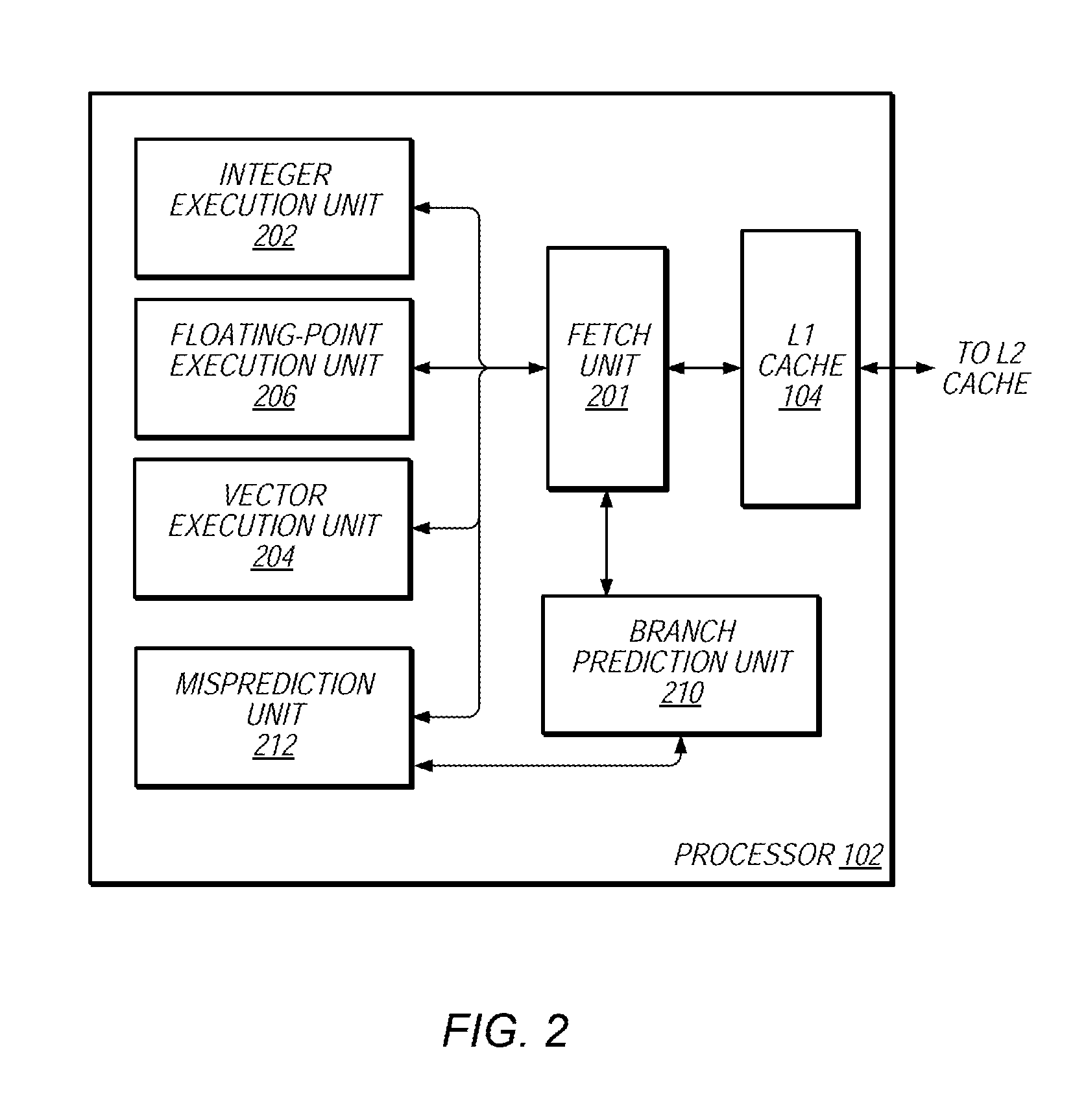

[0073]Instructions and examples of vectorized code are shown and described to explain the operation of a vector processor such as processor 102 of FIG. 2, in conjunction with the Macroscalar architecture. The following description is generally organized so that a number of instr...

example 2b

Program Code Loop 2

[0109]

j = 0 ;for (x=0; x{if (A [x+j]{j = A [x];}B [x] = j;}

[0110]In Example 2A, the control-flow decision is independent of the loop-carried dependency chain, while in Example 2B the control flow decision is part of the loop-carried dependency chain. In some embodiments, the loop in Example 2B may cause speculation that the value of “j” will remain unchanged and compensate later if this prediction proves incorrect. In such embodiments, the speculation on the value of “j” does not significantly change the vectorization of the loop.

[0111]In some embodiments, the compiler may be configured to always predict no data dependencies between the iterations of the loop. In such embodiments, in the case that runtime data dependencies exist, the group of active elements processed in parallel may be reduced to represent the group of elements that may safely be processed in parallel at that time. In these embodiments, there is little penalty for mispredicting more parallelism t...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com