Context-based adaptive variable length coding for adaptive block transforms

A technique for coding transform coefficients in image coding, applicable to image coding, code conversion, image data processing, and related fields. It addresses the problem that existing block division schemes are not a satisfactory solution.

Embodiment Construction

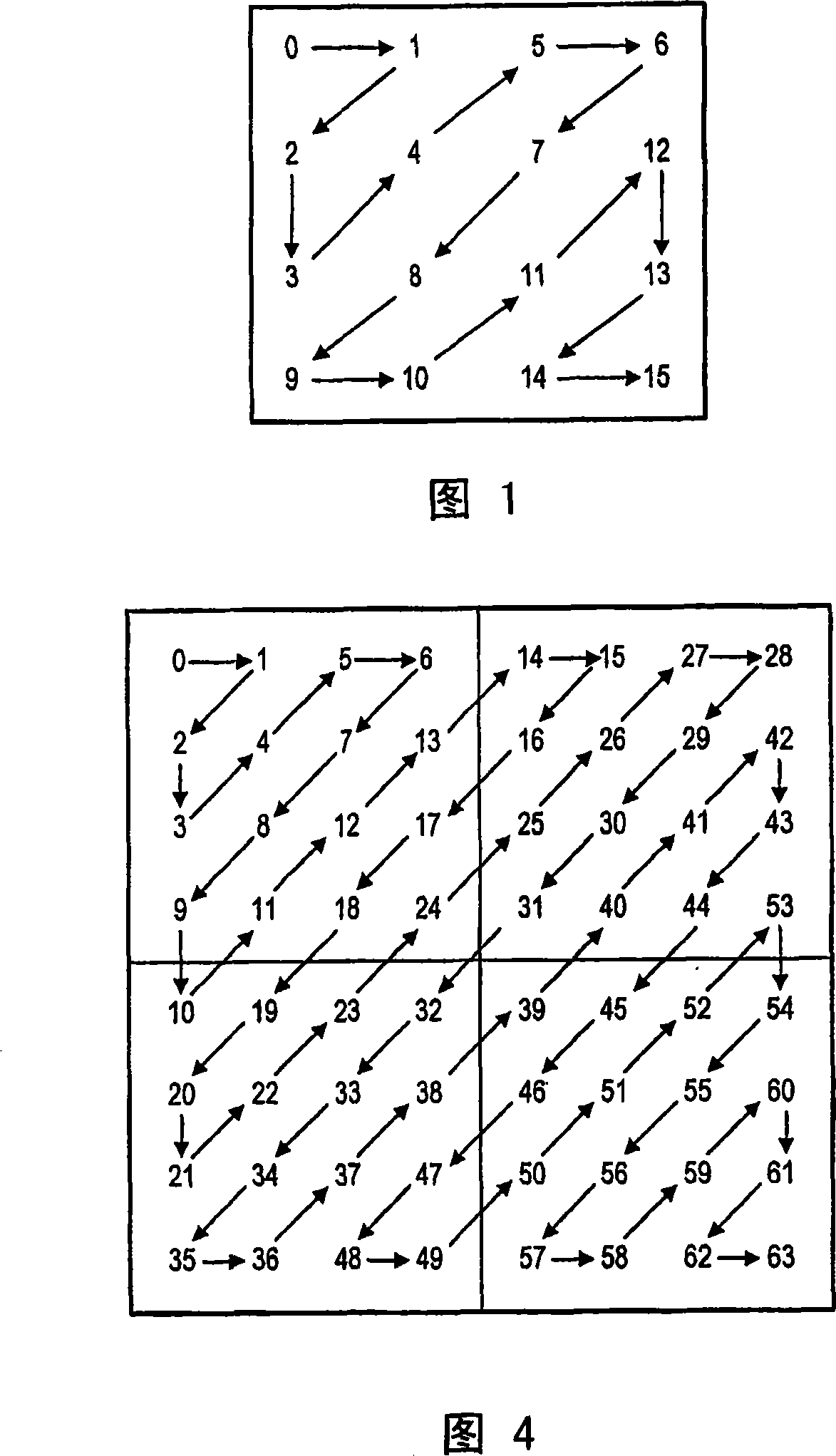

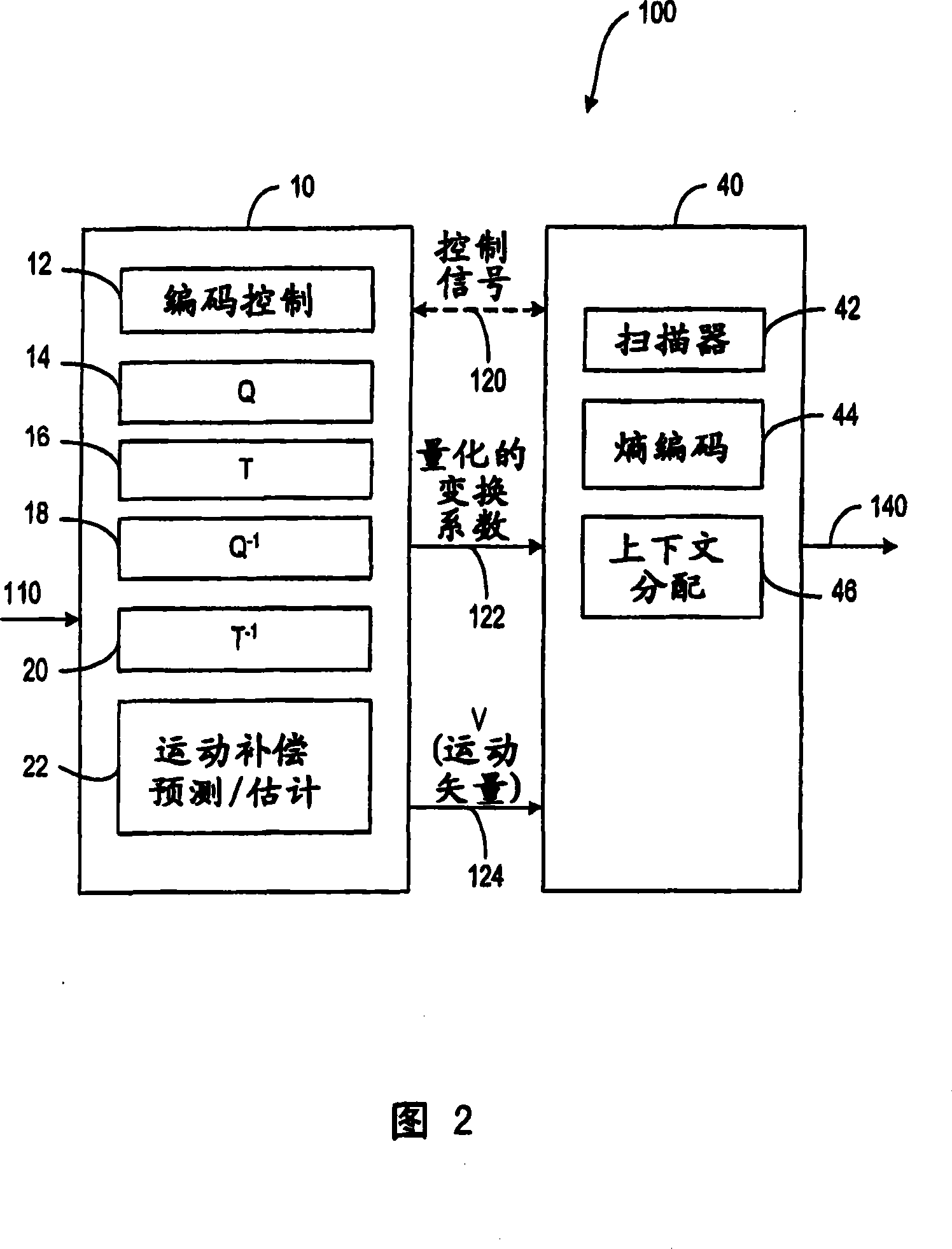

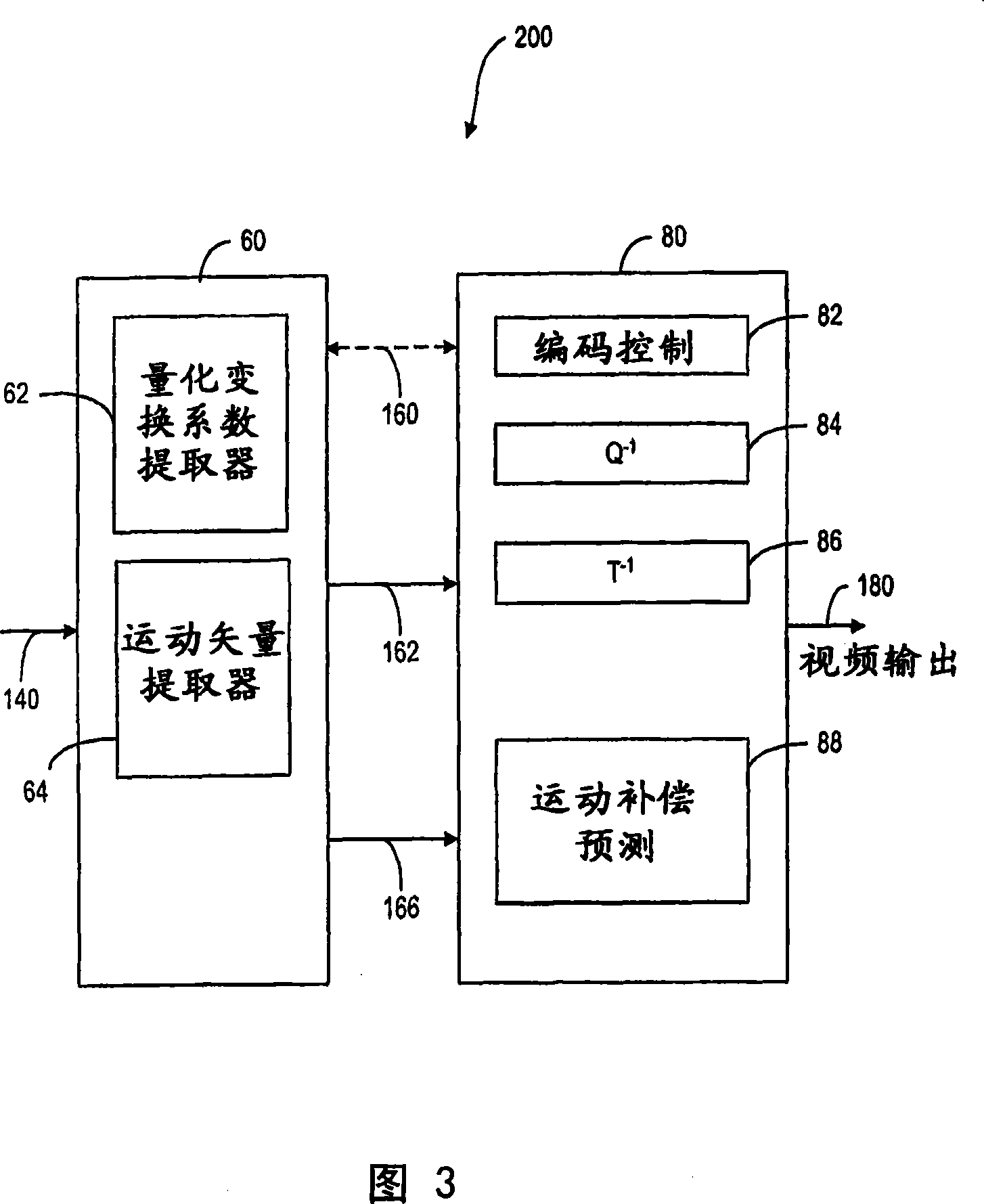

[0082] The block segmentation method according to the present invention divides an ABT block of transform coefficients (an 8x8, 4x8 or 8x4 block) into 4x4 blocks, which are coded using the standard 4x4 CAVLC algorithm. The coefficients are partitioned among the 4x4 blocks based on their energy, so that the statistical distribution of the coefficients in each 4x4 block is similar. The energy of a coefficient depends on the frequency of its corresponding transform function, which can be indicated, for example, by its position in the zigzag scan of the ABT block. As a result of this partitioning, the coefficients assigned to a given 4x4 block are not all spatially adjacent to each other in the ABT block.
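To illustrate how a zigzag scan position can serve as a rough indicator of coefficient frequency, the following is a minimal sketch of a generic zigzag scan over a rectangular block. The function names `zigzag_order` and `scan_block` are hypothetical, and the anti-diagonal traversal shown here is an assumption: the actual scan patterns used for 4x8 and 8x4 ABT blocks may differ from this generic one.

```python
def zigzag_order(rows, cols):
    """Return the (row, col) positions of a rows x cols block in zigzag order.

    Assumption: a generic anti-diagonal zigzag; the scans actually used for
    non-square ABT blocks may be defined differently.
    """
    order = []
    for s in range(rows + cols - 1):
        # All positions on the anti-diagonal row + col == s, row ascending.
        diag = [(r, s - r) for r in range(rows) if 0 <= s - r < cols]
        # Alternate the traversal direction on every diagonal.
        if s % 2 == 0:
            diag.reverse()
        order.extend(diag)
    return order


def scan_block(block):
    """Flatten a 2-D coefficient block (list of rows) into a zigzag-ordered vector."""
    rows, cols = len(block), len(block[0])
    return [block[r][c] for r, c in zigzag_order(rows, cols)]
```

Positions early in the returned order lie near the top-left (low-frequency) corner of the block, so the index in the scan can stand in for the frequency of the corresponding transform function.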

[0083] The method proposed by the present invention operates on a block of coefficients produced using an 8x8, 4x8 or 8x4 transform, which has been scanned in a zigzag pattern (or any other pattern) to produce an ordered vector of coefficients.
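The text available here is cut off before the exact assignment rule is given, so the following is only a sketch of one partitioning that fits the description above: the ordered coefficient vector is split by interleaving, so that every sub-block receives a mix of low- and high-frequency coefficients. The function name `split_interleaved`, the parameter `sub_len`, and the interleaving rule itself are assumptions made for illustration.

```python
def split_interleaved(coeffs, sub_len=16):
    """Split a scanned coefficient vector into sub-vectors of sub_len entries.

    Assumed rule (not confirmed by the source text): with n sub-blocks,
    coefficient k of the scan goes to sub-block k % n. Each sub-vector then
    spans the whole frequency range and can be passed to a 4x4 CAVLC coder.
    """
    if len(coeffs) % sub_len != 0:
        raise ValueError("vector length must be a multiple of sub_len")
    n_blocks = len(coeffs) // sub_len
    # Slice with stride n_blocks: sub-block k gets k, k + n, k + 2n, ...
    return [coeffs[k::n_blocks] for k in range(n_blocks)]


# An 8x8 block yields four 16-coefficient vectors; 4x8 and 8x4 yield two.
parts_8x8 = split_interleaved(list(range(64)))
parts_4x8 = split_interleaved(list(range(32)))
```

With this rule, adjacent entries of the scan land in different sub-blocks, which is why the coefficients of one 4x4 block are not spatially adjacent in the original ABT block.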

[00...
