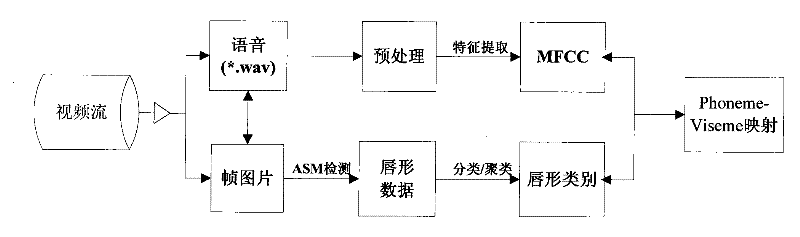

Method for voice-driven lip animation

The method belongs to the field of lip and voice technology for voice-driven lip animation. It addresses visual matching error, audio matching error, and large overall error in existing approaches, and is described as easy to implement and operate while producing pronounced, efficient lip motion.

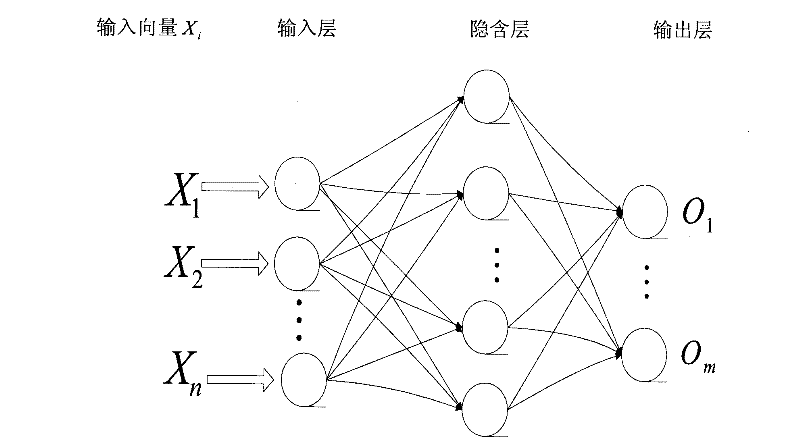

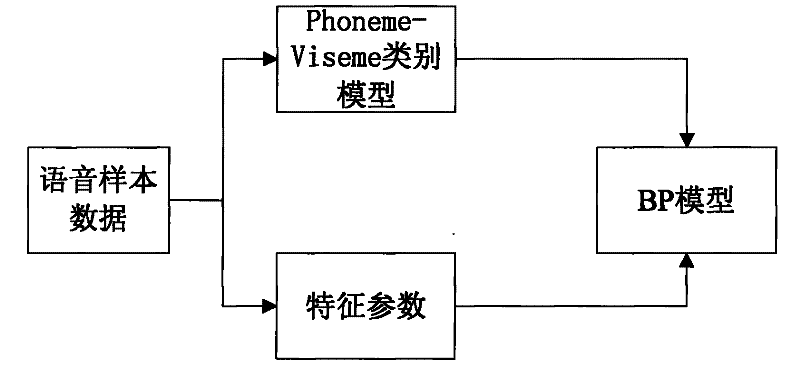

Specific embodiments

[0030] The sample lip-shape data is obtained from the SAM detection frame pictures. Because lip width and height differ from person to person, the data is normalized to a standard range according to a quantification rule. Although the absolute height and width of lips vary between people, the ratio of the distance from each lip-edge point to the center point to the lip width (or height) is roughly the same for everyone. Normalizing in this way removes the effect of individual lip size on the sampled data during lip clustering, so that the lip shapes of different people become comparable. In addition, the two-dimensional coordinate points of one lip-shape frame are converted into a point in a high-dimensional space: the points of a frame are combined into a single high-dimensional column vector, and each column vector represents the information of one lip-shape frame. Cluster analysis of these column vectors divides them ...
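The normalization and vectorization described in [0030] can be illustrated with a minimal Python sketch. It is not the patent's quantification rule, which the text does not spell out. It assumes that the lip center is the midpoint of the contour's bounding box and that each offset from the center is divided by the lip width (x) or height (y). The function names `normalize_lip_frame` and `frame_to_vector` and the sample coordinates are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def normalize_lip_frame(points: Sequence[Point]) -> List[Point]:
    """Express each lip point relative to the center, scaled by lip width and height."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    width = max(xs) - min(xs)
    height = max(ys) - min(ys)
    if width == 0 or height == 0:
        raise ValueError("degenerate lip contour: zero width or height")
    # Assumption: the center is the midpoint of the bounding box.
    cx = (max(xs) + min(xs)) / 2.0
    cy = (max(ys) + min(ys)) / 2.0
    return [((x - cx) / width, (y - cy) / height) for x, y in points]


def frame_to_vector(points: Sequence[Point]) -> List[float]:
    """Flatten N normalized 2-D points into one 2N-dimensional vector."""
    vec: List[float] = []
    for x, y in normalize_lip_frame(points):
        vec.extend((x, y))
    return vec


# Two hypothetical frames: the same lip shape at two different sizes.
small = [(0.0, 1.0), (2.0, 0.0), (4.0, 1.0), (2.0, 2.0)]
large = [(10.0, 13.0), (16.0, 10.0), (22.0, 13.0), (16.0, 16.0)]

# One vector per frame; these are the inputs a clustering step would consume.
vectors = [frame_to_vector(small), frame_to_vector(large)]
```

After normalization the two frames yield identical vectors, which is the size-independence the paragraph aims for. A clustering step could then operate on `vectors`; this sketch stops short of it because the source text does not specify the clustering procedure.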
