Performance optimization method for a shared cache

A cache optimization method, applied in the fields of memory systems and memory addressing/allocation/relocation. It addresses the problems of wasted on-chip resources and of space being taken away from high-reuse blocks, and improves overall cache performance.

Embodiment Construction

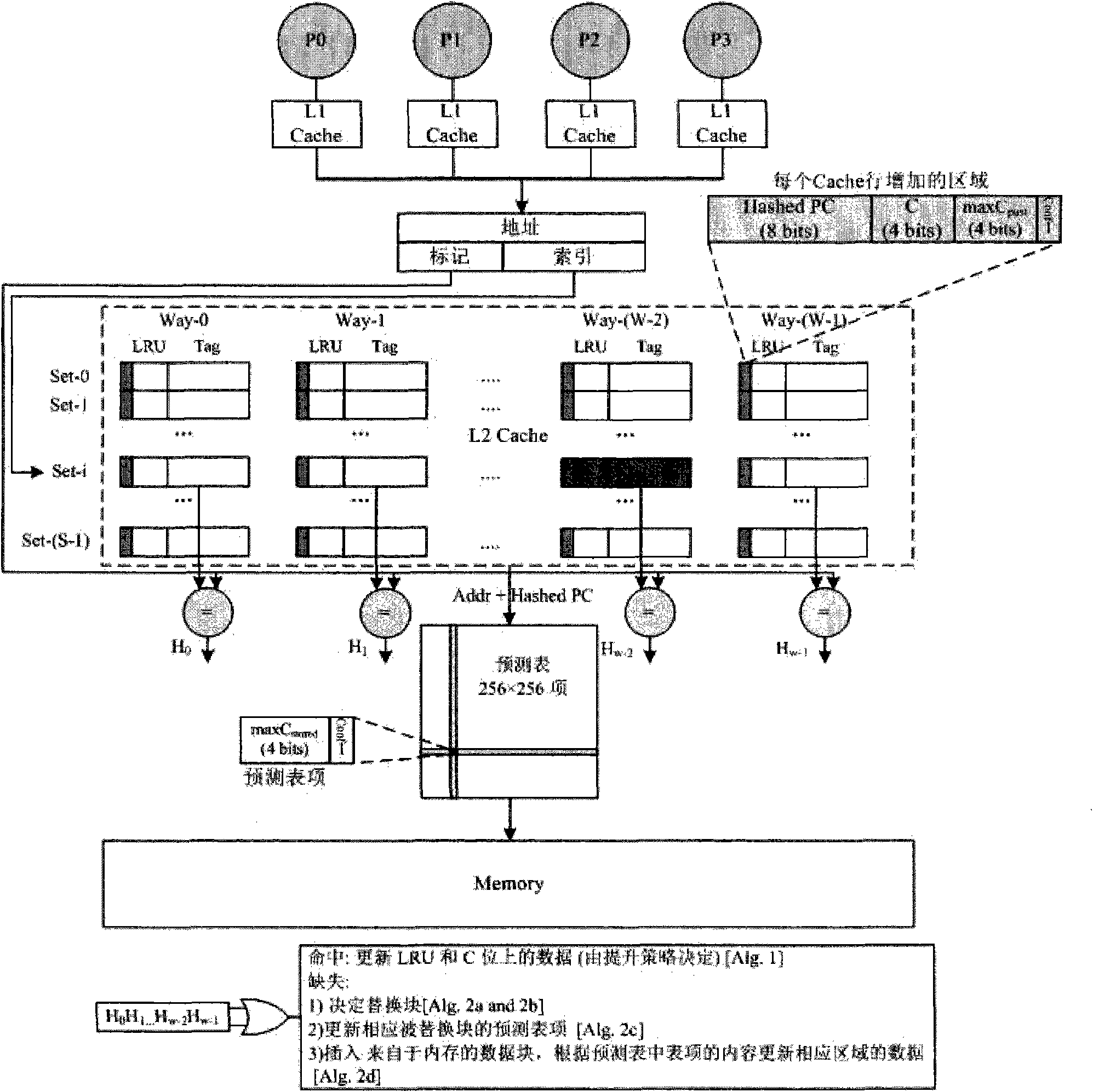

[0024] Figure 1 shows the hardware structure of the ELF predictor for a 4-core processor. The processor has a W-way set-associative shared level-2 cache, and each processor core has a private level-1 cache; the level-1 caches are connected to the shared level-2 cache through a bus. The ELF strategy adds an independent prediction table between the L2 cache and main memory. The table saves the usage-frequency history (a 4-bit counter, maxCstored) of data that is not currently cached and restores that information when the data re-enters the cache. The prediction table is organized as a 256×256 direct-mapped, untagged two-dimensional matrix. Rows are indexed by the hashedPC field of the cache block (the PC of the instruction that caused the cache miss, folded by XORing its 8-bit segments together), and columns are indexed by the block address, folded by XORing its 8-bit segments in the same way. This method can distinguish di...
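The indexing scheme described above can be illustrated with a minimal Python sketch. It is not the patented hardware design: the names `xor_fold8`, `PredictionTable`, `save`, and `restore` are hypothetical, and the zero initial counter value, the saturation at 15, and the choice to save on eviction and restore on refill are assumptions made only for illustration. Only the 256×256 untagged layout, the 4-bit counter, and the 8-bit XOR folding of the miss PC and block address come from the text.

```python
def xor_fold8(value: int) -> int:
    """XOR together the successive 8-bit segments of a non-negative integer."""
    folded = 0
    while value:
        folded ^= value & 0xFF  # fold in the lowest 8 bits
        value >>= 8             # move on to the next 8-bit segment
    return folded


class PredictionTable:
    """Sketch of a 256x256 direct-mapped, untagged table of 4-bit counters."""

    SIZE = 256        # 8-bit row index and 8-bit column index
    COUNTER_MAX = 15  # largest value a 4-bit counter can hold

    def __init__(self) -> None:
        # Assumption: all counters start at zero.
        self.table = [[0] * self.SIZE for _ in range(self.SIZE)]

    def _index(self, miss_pc: int, block_addr: int) -> tuple[int, int]:
        # Row: folded PC of the instruction that caused the miss (hashedPC).
        # Column: folded block address.
        return xor_fold8(miss_pc), xor_fold8(block_addr)

    def save(self, miss_pc: int, block_addr: int, max_c: int) -> None:
        """Store a block's usage-frequency counter (assumed: on eviction)."""
        row, col = self._index(miss_pc, block_addr)
        # Assumption: values are clamped to the 4-bit range.
        self.table[row][col] = max(0, min(max_c, self.COUNTER_MAX))

    def restore(self, miss_pc: int, block_addr: int) -> int:
        """Read back the stored counter (assumed: when the block is refilled)."""
        row, col = self._index(miss_pc, block_addr)
        return self.table[row][col]


table = PredictionTable()
table.save(0x400123, 0x12345678, 9)
print(table.restore(0x400123, 0x12345678))  # counter saved for this PC/address pair
```

Because the table is untagged, two different PC/address pairs that fold to the same row and column share one counter; the sketch makes no attempt to detect such aliasing.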
