Data size-based shuffle switch matrix compression method

A technique based on data granularity and switch matrices, applied in fields such as machine execution devices and concurrent instruction execution. It addresses problems such as a large proportion of complex operations and differences in data granularity, and achieves the effects of compressing capacity, reducing power consumption, and improving utilization.

Embodiment Construction

[0040] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

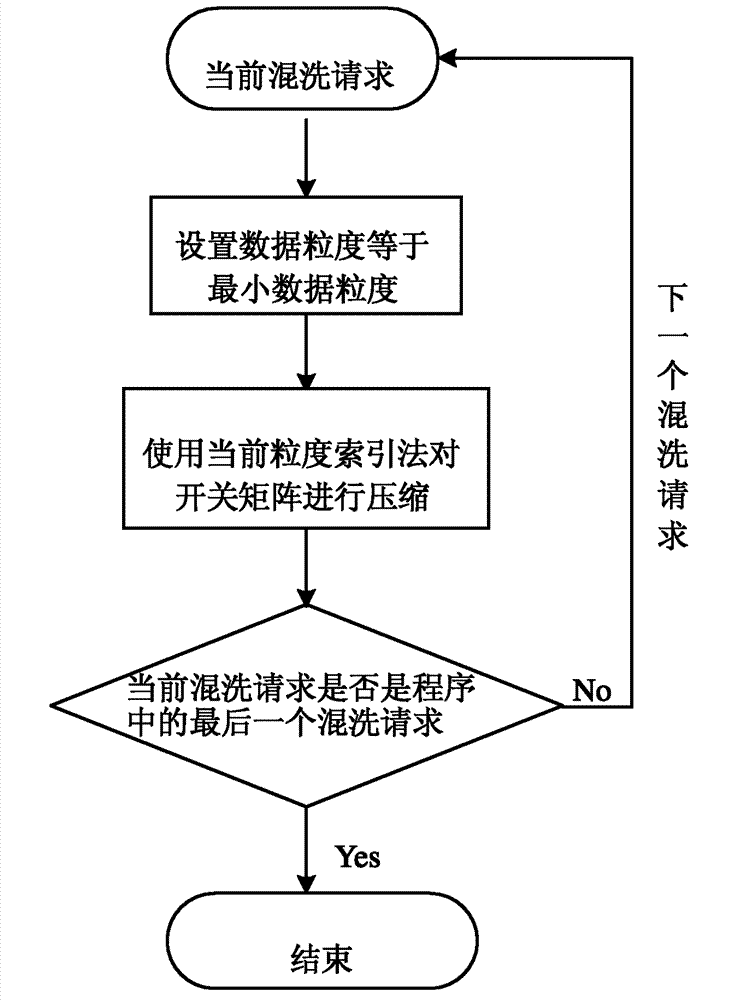

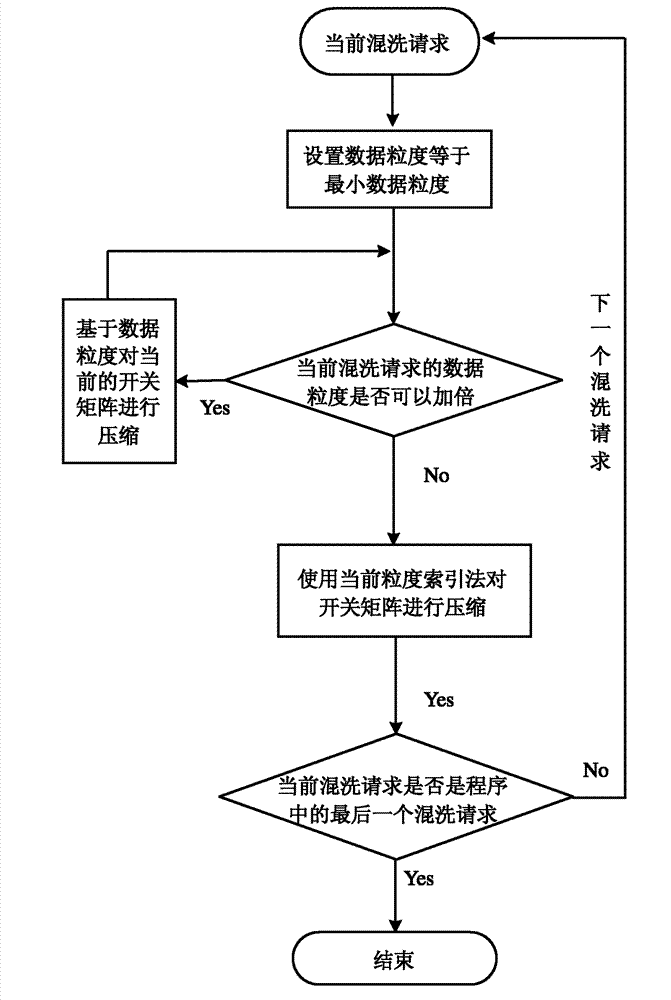

[0041] In the preprocessing stage of the present invention, the final shuffle data granularity is determined step by step for each shuffle request. The switch matrix is compressed according to the granularity determined at each step, and is then compressed further according to the current granularity index method, which completes the final compression of the switch matrix.
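The idea of compressing a shuffle pattern as its granularity is determined step by step can be illustrated with a minimal Python sketch. This is not the method claimed in the patent: the list representation `source_of` (output port i reads input port `source_of[i]`), the pairwise merging rule, and the function names `coarsen_once` and `coarsest_pattern` are all assumptions made for illustration, and the granularity index method mentioned above is not modeled.

```python
def coarsen_once(source_of):
    """Try to double the granularity of a shuffle pattern.

    Assumed convention (not from the source): output port i reads from
    input port source_of[i]. Merging succeeds only if every aligned pair
    of output ports reads from an aligned pair of adjacent input ports.
    Returns the halved pattern, or None if the pattern cannot be merged.
    """
    n = len(source_of)
    if n < 2 or n % 2 != 0:
        return None
    merged = []
    for i in range(0, n, 2):
        lo, hi = source_of[i], source_of[i + 1]
        if lo % 2 != 0 or hi != lo + 1:
            return None
        merged.append(lo // 2)
    return merged


def coarsest_pattern(source_of, min_granularity_bits):
    """Repeat coarsen_once until it fails.

    Returns (pattern, granularity_bits) at the coarsest granularity found.
    """
    pattern = list(source_of)
    granularity = min_granularity_bits
    while True:
        merged = coarsen_once(pattern)
        if merged is None:
            return pattern, granularity
        pattern, granularity = merged, granularity * 2


# Eight 8-bit ports whose two 32-bit halves are swapped.
pattern, granularity = coarsest_pattern([4, 5, 6, 7, 0, 1, 2, 3], 8)
print(pattern, granularity)
```

Under these assumptions, each successful merge halves the number of ports, so a pattern that would need an N×N matrix at the finest granularity needs only an (N/2)×(N/2) matrix one step coarser.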

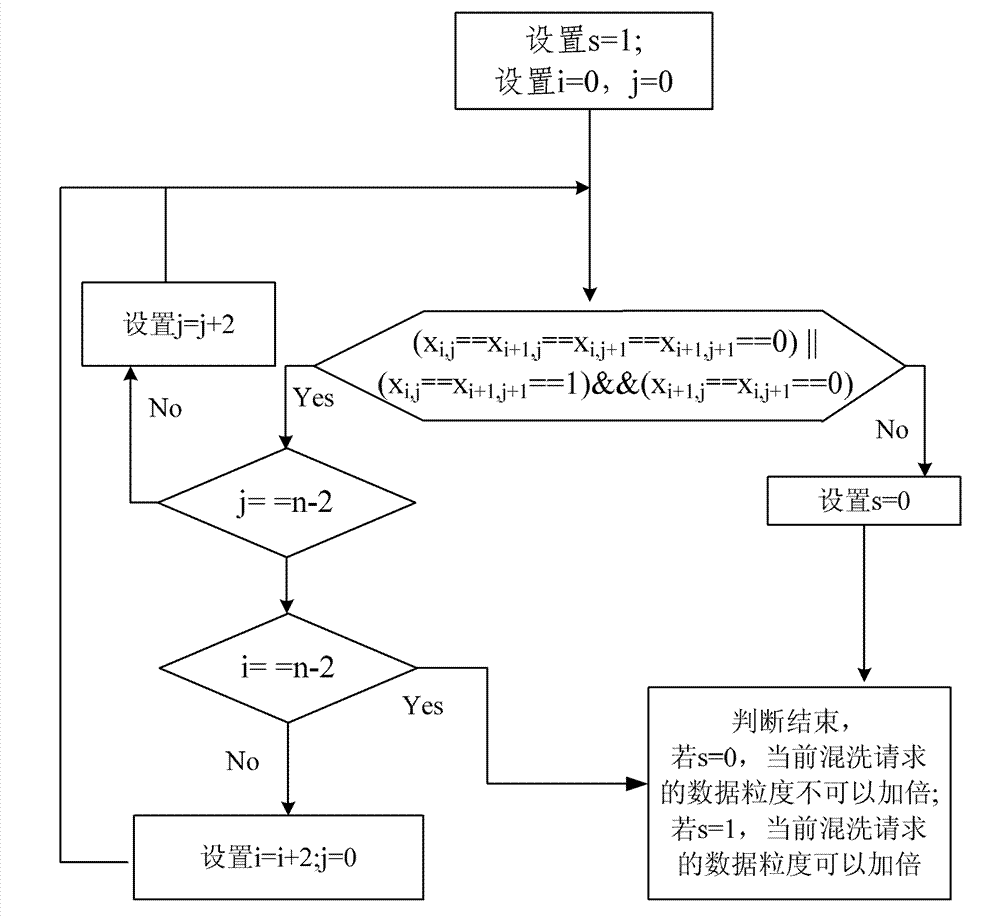

[0042] Assume that the total width of the processor's data path is W bits, the minimum data granularity is G bits, the size of the Crossbar is N×N, and the width of each port is G bits (where W and G are integer powers of 2, and N = W/G). The shuffle mode of each shuffle request is then initially an N×N switch matrix; call it X, with each element having the value X_{i,j} (1≤i≤N-1, 1≤j≤N-1), assuming that the maximum data granularity sup...
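The sizing relation N = W/G and the initial uncompressed switch matrix can be sketched as follows. The meaning of each element of X is cut off in the text above, so the 0/1 convention used here (X[i][j] = 1 when output port i reads from input port j), the zero-based indices, and the helper names `crossbar_size` and `switch_matrix` are assumptions for illustration only.

```python
def crossbar_size(total_width_bits, min_granularity_bits):
    """Return N = W / G, requiring W and G to be positive powers of 2."""
    for value in (total_width_bits, min_granularity_bits):
        if value <= 0 or value & (value - 1) != 0:
            raise ValueError("W and G must be positive integer powers of 2")
    if total_width_bits % min_granularity_bits != 0:
        raise ValueError("G must not exceed W")
    return total_width_bits // min_granularity_bits


def switch_matrix(source_of):
    """Build an N x N 0/1 switch matrix from a shuffle pattern.

    Assumed convention (not from the source): X[i][j] == 1 means that
    output port i reads from input port j.
    """
    n = len(source_of)
    return [[1 if source_of[i] == j else 0 for j in range(n)]
            for i in range(n)]


n = crossbar_size(512, 8)         # hypothetical 512-bit path, 8-bit ports
x = switch_matrix([1, 0, 3, 2])   # swap adjacent ports on a 4-port example
print(n, x)
```

In this sketch the uncompressed matrix holds N² entries per shuffle request, which is the storage that the compression described in [0041] is meant to reduce.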
