Video image fusion performance evaluation method based on structure similarity and human vision

A video image fusion performance evaluation technology based on structural similarity and human vision, applied in the field of image processing. It addresses problems of existing evaluation methods such as large differences in evaluation results, no consideration of human visual perception characteristics, and susceptibility to noise.

Embodiment Construction

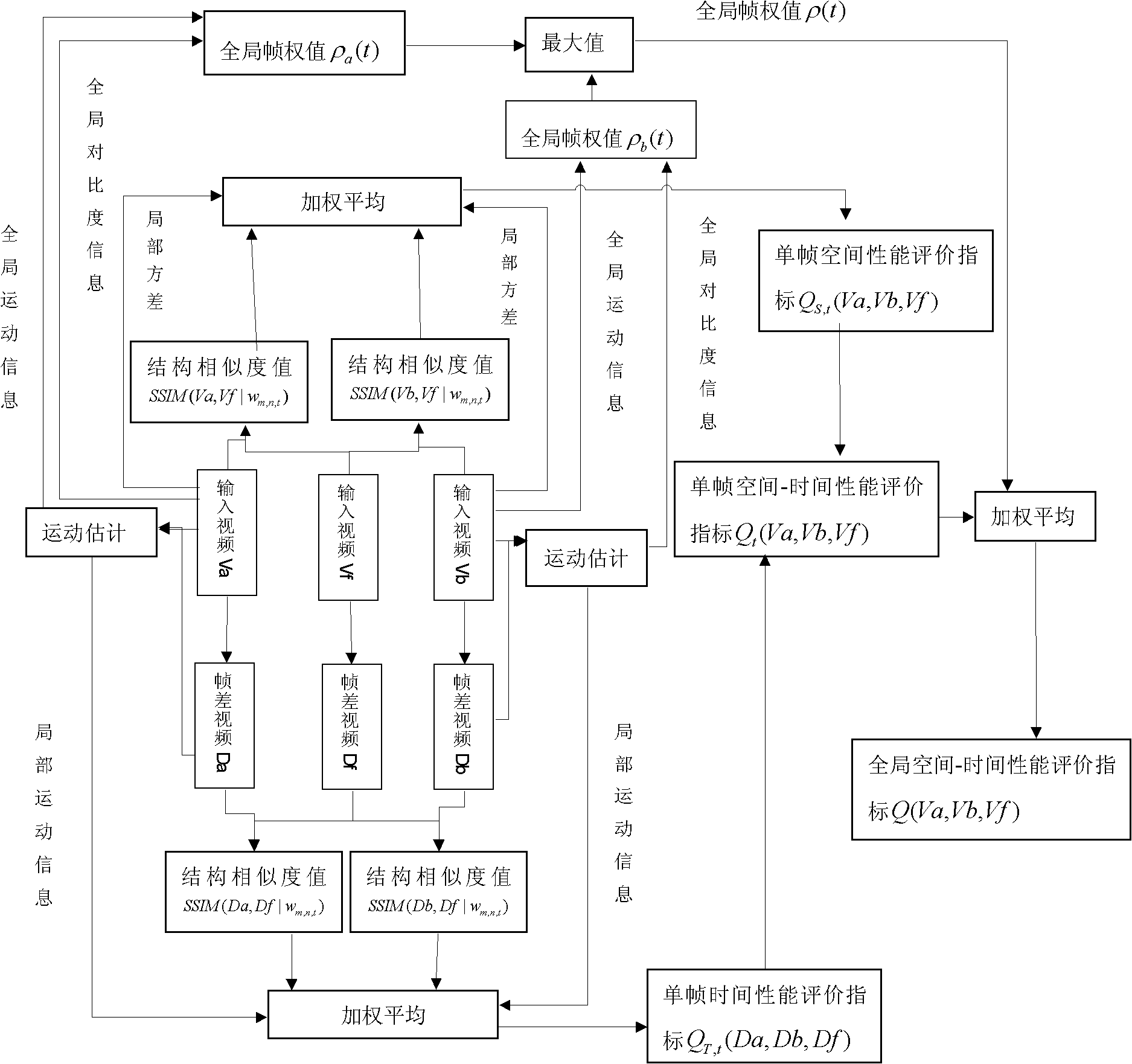

[0038] The present invention will be described in further detail below with reference to the accompanying drawings.

[0039] Referring to Figure 1, and taking two reference input videos Va and Vb and a fused video Vf as an example, the implementation steps are as follows:

[0040] In the first step, each frame of each input video and of the fused video is taken as the processing object, and a single-frame spatial performance evaluation index is calculated.

[0041] The t-th frame of each video is used below as an example:

[0042] (1.1) For the t-th frame of the fused video image Vf and of the input video images Va and Vb, define a local window $w_{m,n,t}$ at the spatial point (m, n); a window of size 7×7 is used in the present invention;

[0043] (1.2) Calculate the local structural similarity values SSIM(Va, Vf | $w_{m,n,t}$) and SSIM(Vb, Vf | $w_{m,n,t}$) between the fused video image Vf and the input video images Va and Vb within the current window $w_{m,n,t}$:

[0...
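Steps (1.1) and (1.2) can be illustrated with a minimal sketch. The formula used by the invention is truncated in this text, so the code below assumes the commonly used SSIM definition (local means, variances, and covariance, with stabilizing constants built from the conventional values 0.01 and 0.03 and an 8-bit dynamic range). The function name `local_ssim`, its parameters, and the choice to clip the window at the frame border are illustrative assumptions, not part of the source.

```python
import numpy as np


def local_ssim(x, y, m, n, win=7, dynamic_range=255.0):
    """SSIM of frames x and y inside the win x win window centred at (m, n).

    Assumption: this is the standard SSIM definition, not necessarily the
    exact formula of the patent, whose equation is truncated in the source.
    """
    half = win // 2
    # Assumption: the window is clipped where it would leave the frame.
    r0, r1 = max(m - half, 0), min(m + half + 1, x.shape[0])
    c0, c1 = max(n - half, 0), min(n + half + 1, x.shape[1])
    wx = x[r0:r1, c0:c1].astype(np.float64)
    wy = y[r0:r1, c0:c1].astype(np.float64)

    # Conventional stabilizing constants (assumed values).
    k1 = (0.01 * dynamic_range) ** 2
    k2 = (0.03 * dynamic_range) ** 2

    mu_x, mu_y = wx.mean(), wy.mean()
    var_x, var_y = wx.var(), wy.var()
    cov_xy = ((wx - mu_x) * (wy - mu_y)).mean()

    numerator = (2.0 * mu_x * mu_y + k1) * (2.0 * cov_xy + k2)
    denominator = (mu_x ** 2 + mu_y ** 2 + k1) * (var_x + var_y + k2)
    return numerator / denominator


if __name__ == "__main__":
    # Synthetic stand-ins for the t-th frames of Va, Vb and Vf.
    rng = np.random.default_rng(0)
    frame_a = rng.integers(0, 256, size=(32, 32)).astype(np.float64)
    frame_b = rng.integers(0, 256, size=(32, 32)).astype(np.float64)
    frame_f = 0.5 * (frame_a + frame_b)  # hypothetical averaging fusion
    print(local_ssim(frame_a, frame_f, 10, 10))
    print(local_ssim(frame_b, frame_f, 10, 10))
```

Evaluating `local_ssim` at every spatial point (m, n) of frame t yields the two local similarity maps that the step refers to. The later steps of the method, which combine these values into the evaluation index, are not shown because they do not appear in this text.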
