Visual target tracking method for autonomous navigation and landing of an unmanned aerial vehicle

A visual autonomous navigation technology for unmanned aerial vehicles, applied in the fields of instruments, character and pattern recognition, and computing components, that addresses the problem of unreliable tracking of ground targets.

Embodiment Construction

[0057] The present invention will be described in detail below with reference to the accompanying drawings and examples.

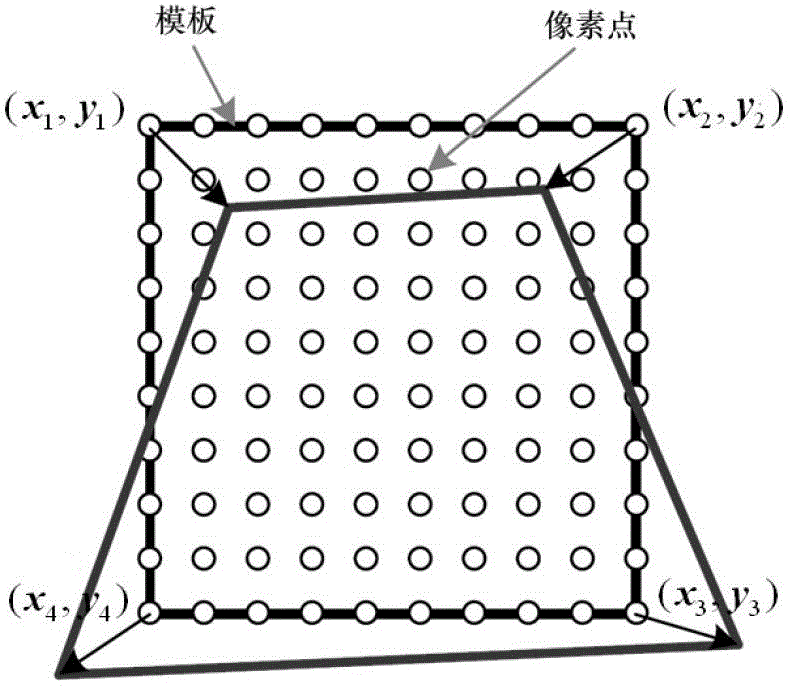

[0058] Step 1. The airborne camera collects the template image of the landing target point and performs affine illumination normalization on the template image to obtain the pixel gray value $I_{norm}(x)$ of the normalized template image at point x, where x represents the coordinates of the pixel in the template image;

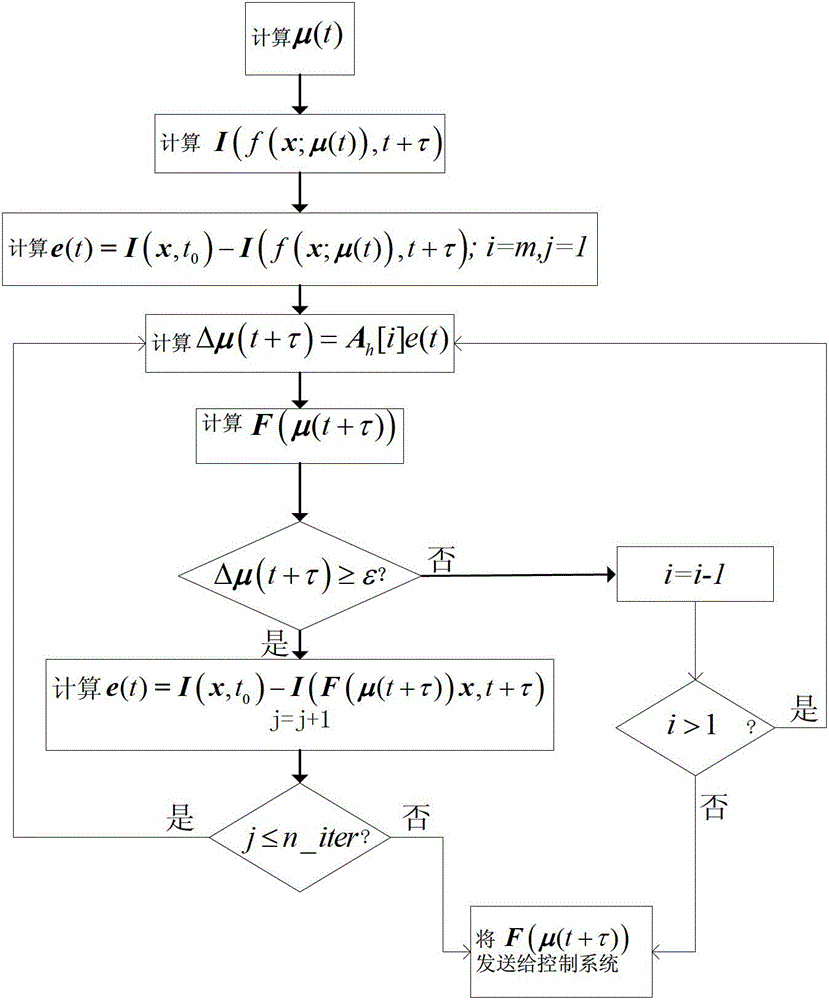

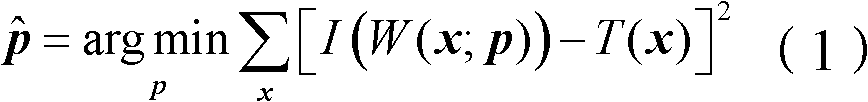
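The normalization in Step 1 can be illustrated with a minimal sketch. It assumes that "affine illumination normalization" means subtracting the mean gray value of the template and dividing by its standard deviation, which is a common way to cancel an affine brightness change of the form a·I + b. The patent's own formula is not reproduced in this text, so this definition, the function name `normalize_illumination`, and the sample values are assumptions made for illustration only.

```python
from statistics import fmean, pstdev


def normalize_illumination(image):
    """Return a zero-mean, unit-variance copy of a grayscale image.

    `image` is a list of rows of gray values. This mean/std definition
    is an assumption; the patent's exact formula is not shown in the text.
    """
    pixels = [p for row in image for p in row]
    mean = fmean(pixels)
    std = pstdev(pixels, mu=mean)
    if std == 0:
        # A flat image carries no contrast, so map every pixel to 0.
        return [[0.0 for _ in row] for row in image]
    return [[(p - mean) / std for p in row] for row in image]


# Hypothetical 2x2 template and the same template under a brightness
# change I' = 2*I + 5 (gain 2, offset 5).
template = [[10, 20], [30, 40]]
normalized = normalize_illumination(template)
brighter = normalize_illumination([[2 * p + 5 for p in row] for row in template])
```

Under this assumption, `normalized` and `brighter` agree up to floating-point rounding, which is the property that makes the normalized template usable under changing illumination.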

[0059] In a video sequence, the target motion can be regarded as three-dimensional motion over the two spatial dimensions and the time dimension. For this reason, the gray value of the pixel at x = (x, y) in the real-time input image at time t is denoted I(x, t). The real-time video image at a certain time $t_x$ is selected as the reference image, and the target area selected in the reference image can be represented by a point set containing N elements, and the column vector I(x, t) of the image gray value...
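As a rough illustration of the representation described in [0059], the sketch below stacks the gray values of an N-point target area into a single vector. The helper name `gray_vector`, the `image[y][x]` indexing convention, and the sample frame are hypothetical; the source text is truncated before it defines the vector fully, so this only shows the general idea.

```python
def gray_vector(image, points):
    """Stack the gray values I(x, t) of the given points into one vector.

    `image` is one frame (a list of rows) and `points` is the N-element
    point set as (x, y) pairs. Indexing as image[y][x] is an assumption.
    """
    return [float(image[y][x]) for (x, y) in points]


# Hypothetical 3x3 reference frame and a 4-point target area.
frame = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
target_points = [(1, 1), (2, 1), (1, 2), (2, 2)]
reference_vector = gray_vector(frame, target_points)
```

Evaluating the same point set on a later frame gives a second vector of the same length, so the two can be compared element by element.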
