Information processing method and electronic equipment

An information processing method and electronic equipment, applied in the field of electronics, which solve the problem that personal pronouns in voice commands cannot be correctly recognized, and thereby improve the user experience.

Examples

Example 1

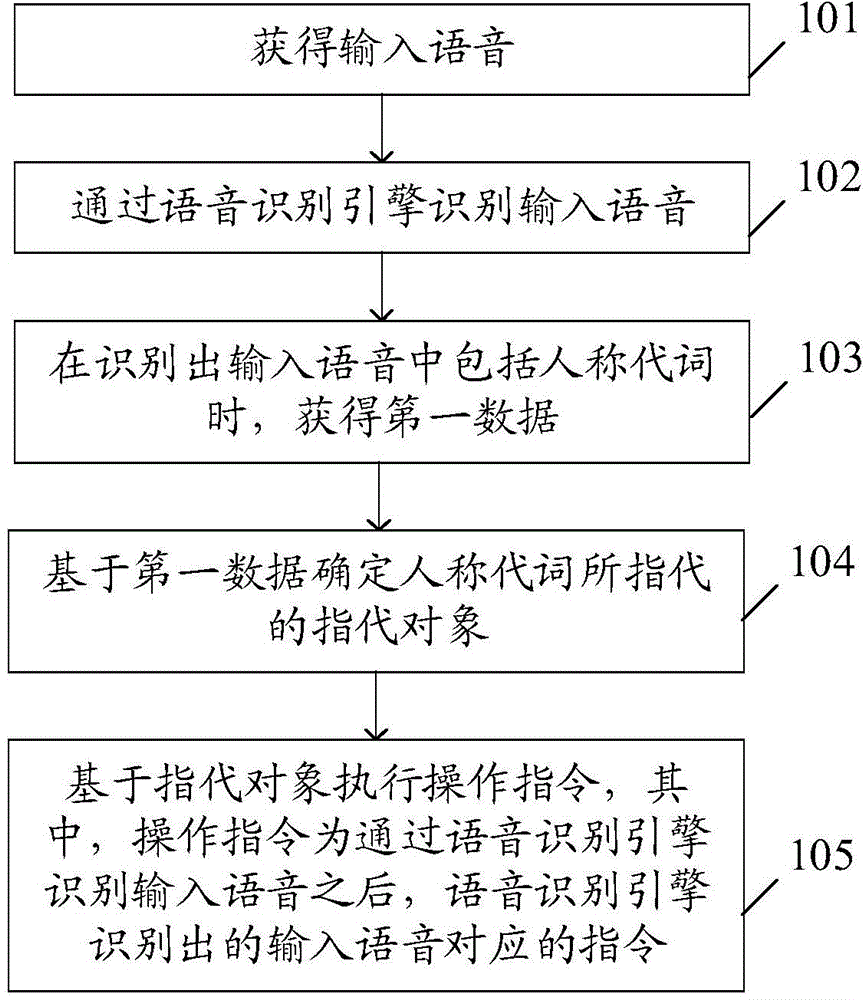

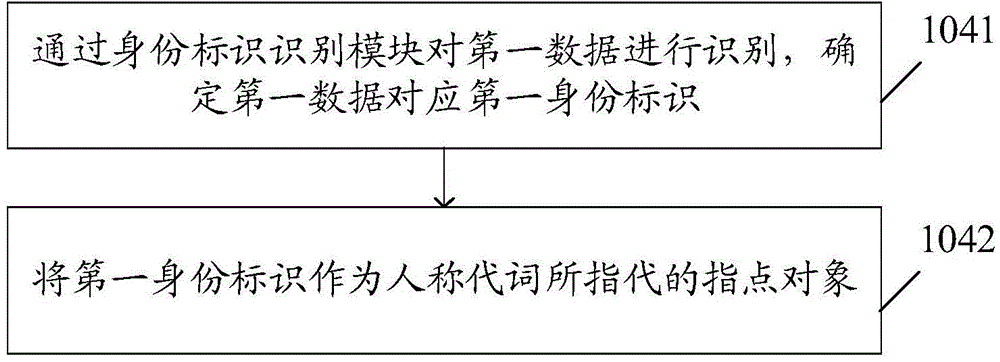

[0086] Example 1, shown in Figure 4, includes the following steps:

[0087] Step 201: The electronic device obtains the user's voice input: "search my photos";

[0088] Step 202: The speech recognition engine recognizes the voice input;

[0089] Step 203: Recognize that the voice input contains the first-type personal pronoun "I" (here in the form "my"), load the voiceprint extraction module, and use it to extract voiceprint data from the voice input;

[0090] Step 204: The voiceprint recognition module compares the voiceprint parameters with the voiceprint feature database and identifies the corresponding speaker as "Li Ming";

[0091] Step 205: Determine that "Li Ming" is the referent of "I";

[0092] Step 206: Execute the instruction corresponding to the voice input, searching the image library for photos associated with "Li Ming". The execution instruction can be generated at any ...
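Steps 203 to 206 can be sketched as below. This is a minimal illustration under stated assumptions, not the patented implementation: speech recognition (step 202) and voiceprint extraction are taken as already done, a voiceprint is assumed to be a fixed-length vector of floats, the voiceprint feature database is modeled as a dictionary of enrolled vectors, and identification uses cosine similarity against a hypothetical threshold of 0.8. The pronoun list, the `Photo` type, and all function names are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence, Set

# Hypothetical first-type (first-person) pronoun tokens for English input.
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}


def contains_first_person(text: str) -> bool:
    """Step 203 (sketch): does the recognized text contain a first-person pronoun?"""
    tokens = (t.strip(".,!?\"'").lower() for t in text.split())
    return any(t in FIRST_PERSON for t in tokens)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def identify_speaker(voiceprint: Sequence[float],
                     database: Dict[str, Sequence[float]],
                     threshold: float = 0.8) -> Optional[str]:
    """Step 204 (sketch): most similar enrolled identity, or None if none reaches the threshold."""
    best_name: Optional[str] = None
    best_score = threshold  # hypothetical acceptance threshold
    for name, enrolled in database.items():
        score = cosine(voiceprint, enrolled)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


@dataclass
class Photo:
    path: str
    people: Set[str] = field(default_factory=set)  # identities tagged in the photo


def search_my_photos(text: str, voiceprint: Sequence[float],
                     database: Dict[str, Sequence[float]],
                     library: List[Photo]) -> List[str]:
    """Steps 203-206 (sketch): resolve "I" to the speaker, then return that speaker's photos."""
    if not contains_first_person(text):
        return []
    referent = identify_speaker(voiceprint, database)  # step 205: referent of "I"
    if referent is None:
        return []
    return [p.path for p in library if referent in p.people]
```

Returning nothing when no enrolled voiceprint clears the threshold avoids attributing "I" to the wrong person; a real system might instead fall back to asking the user.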

Example 2

[0093] Example 2, shown in Figure 5, includes the following steps:

[0094] Step 207, executed after step 202 above: Recognize that the voice input contains the first-type personal pronoun "I", load the image recognition module, and obtain a first image containing the current user;

[0095] Step 208: Determine that the first image is the referent of "I";

[0096] Step 209: Execute the instruction corresponding to the voice input: extract the facial features from the first image, compare them with the facial features in each photo in the picture library, and retrieve the photos whose facial features match. The execution instruction can be generated at any point after step 202 and before step 209.
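The matching in step 209 can be sketched as follows, assuming the facial features of the first image and of every face in each library photo have already been extracted into fixed-length vectors by some face-embedding model (not shown). The Euclidean-distance criterion, the 0.6 threshold, and the function names are hypothetical choices for illustration, not the method the patent specifies.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_matching_photos(user_face: Vector,
                         library: Dict[str, List[Vector]],
                         max_distance: float = 0.6) -> List[str]:
    """Step 209 (sketch): photos containing at least one face close to the user's face.

    `user_face` stands in for the features extracted from the first image (step 207);
    `library` maps each photo path to the feature vectors of the faces found in it.
    `max_distance` is a hypothetical match threshold.
    """
    matches = []
    for path, faces in library.items():
        # A photo matches if any face in it is within the threshold.
        if any(euclidean(user_face, f) <= max_distance for f in faces):
            matches.append(path)
    return matches
```

Photos with no detected faces simply never match, since `any` over an empty list is false.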

Example 3

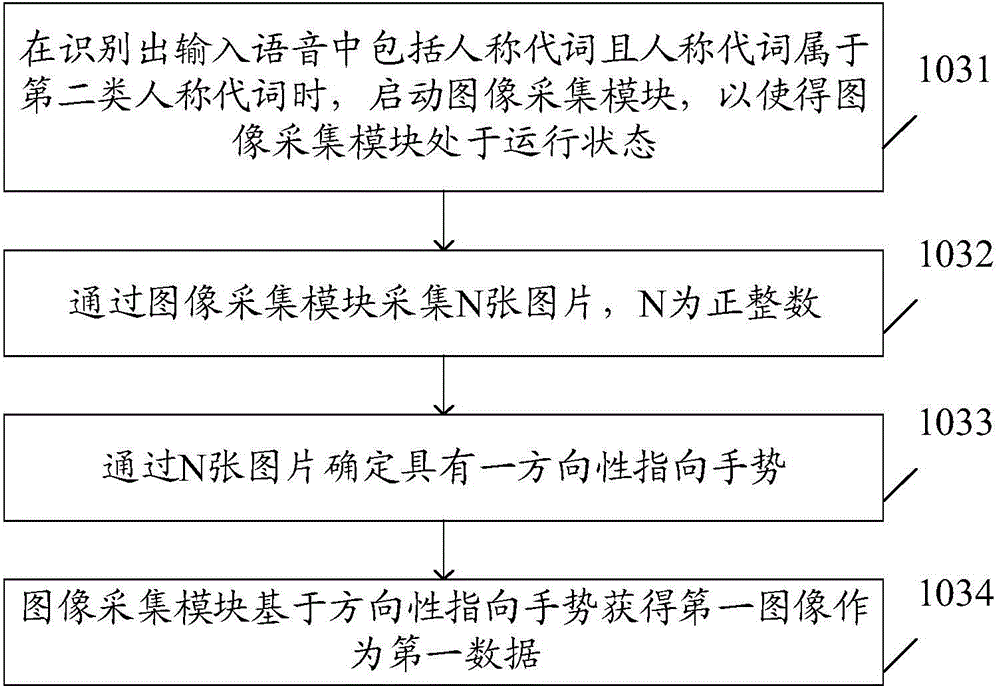

[0097] Example 3, shown in Figure 6, includes the following steps:

[0098] Step 301: While the electronic device is playing local music, obtain the user's voice input: "send the song to Mr. Li";

[0099] Step 302: The speech recognition engine recognizes the voice input;

[0100] Step 303: Recognize that the voice input contains "Mr. Li", and start the image acquisition module;

[0101] Step 304: Capture N pictures with the image acquisition module; the N pictures can be N frames of a video;

[0102] Step 305: Determine a directional pointing gesture from the N pictures, based on the direction of the user's gesture or the direction of finger movement across the N pictures;

[0103] Step 306: Determine the capture position of the image acquisition module from the directional pointing gesture, and capture an image at that position, which is the first image...
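Steps 305 and 306 can be sketched as below, assuming a hand-tracking stage (not shown) has already located the user's fingertip in each of the N frames as pixel coordinates. The direction is taken from the net fingertip displacement across the frames, and the capture position is modeled as one of a few hypothetical preset pan angles of a rotatable camera; mapping an image-plane direction straight to a pan angle is a simplification. All names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates of the fingertip


def pointing_direction(fingertips: Sequence[Point]) -> float:
    """Step 305 (sketch): direction of finger movement across N frames, in degrees [0, 360).

    Uses the net displacement from the first to the last fingertip position.
    0 degrees points right (+x); image y grows downward, so dy is negated
    to make 90 degrees point up.
    """
    if len(fingertips) < 2:
        raise ValueError("need at least two frames")
    (x0, y0), (x1, y1) = fingertips[0], fingertips[-1]
    dx, dy = x1 - x0, -(y1 - y0)
    if dx == 0 and dy == 0:
        raise ValueError("no finger movement detected")
    return math.degrees(math.atan2(dy, dx)) % 360


def angular_gap(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def choose_capture_position(direction: float, presets: Sequence[float]) -> float:
    """Step 306 (sketch): pick the hypothetical preset pan angle closest to the pointing direction."""
    return min(presets, key=lambda p: angular_gap(direction, p))
```

Using the wrap-around `angular_gap` rather than a plain difference keeps a direction of 350 degrees close to the 0-degree preset.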
