Indoor autonomous navigation method for micro unmanned aerial vehicle

The invention relates to autonomous navigation for unmanned aerial vehicles, with applications in navigation, surveying, and navigation by speed/acceleration measurement. It addresses the problem that existing methods do not consider the reliability of positioning and state estimation, and therefore have difficulty supporting fully autonomous indoor flight. The stated effects are improved autonomous environment perception, avoidance of real-time modeling, and improved overall modeling accuracy.


Embodiment

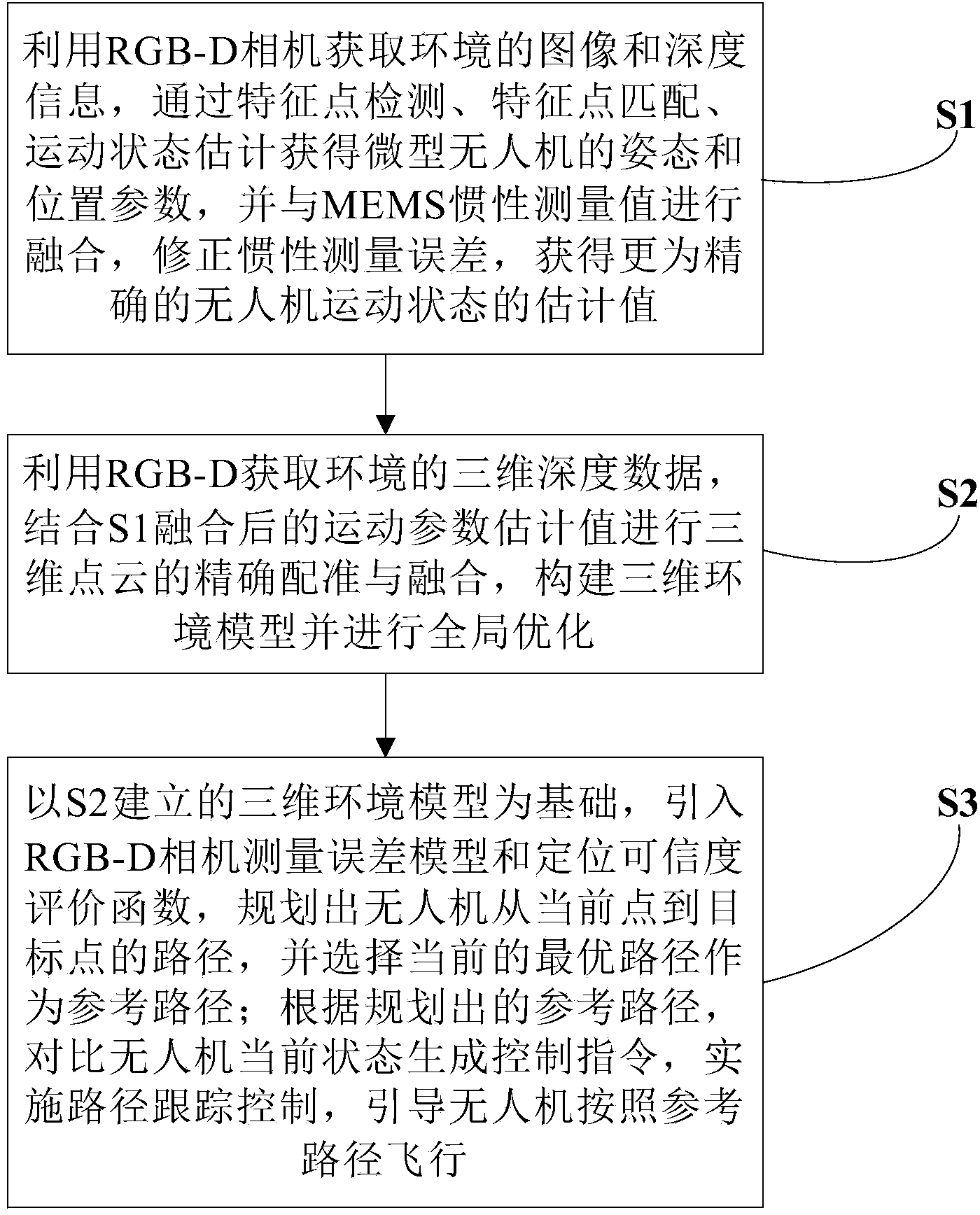

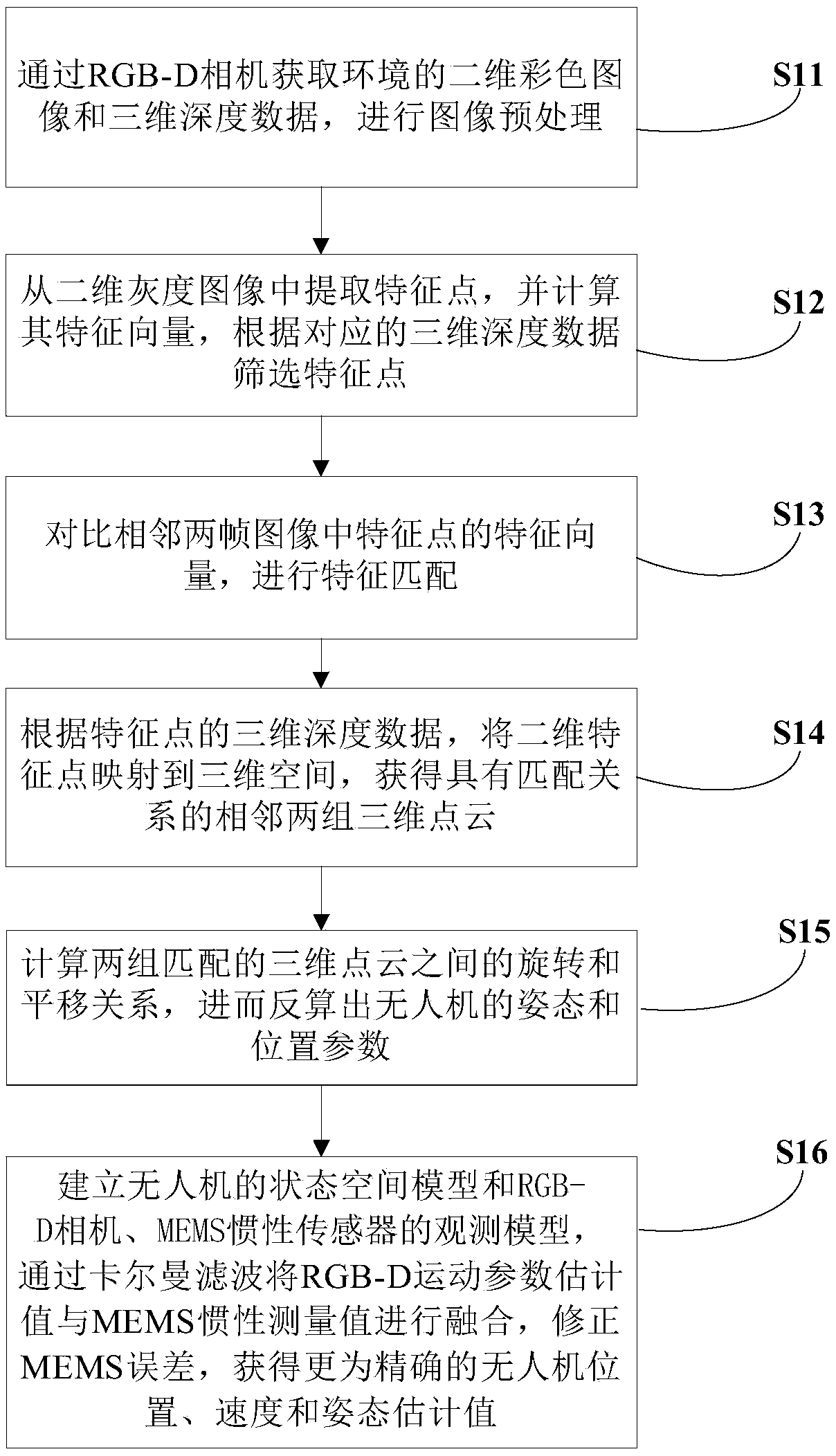

[0058] S1: Motion state estimation of the micro UAV based on RGB-D and MEMS sensors. As shown in Figure 2, this specifically includes the following steps:

[0059] S11: Obtain image and depth data of the environment through the RGB-D camera, including a series of two-dimensional color images composed of color-domain (RGB) pixels, together with the corresponding three-dimensional depth-domain data.

[0060] For a point $p_i$ in the three-dimensional environment, the information obtained by the RGB-D camera takes the form $p_i = (x_i, y_i, z_i, r_i, g_i, b_i)$, where $x_i, y_i, z_i$ are the 3D depth data of the point, representing its 3D position relative to the center of the RGB-D camera, and $r_i, g_i, b_i$ are the color (RGB) information corresponding to this point. Therefore, at a certain moment, all the environmental points in the field of view of the RGB-D camera, each described in the form $p_i$, constitute a frame of two...
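The point format described in [0060] can be illustrated with a minimal Python sketch. It is not the patent's implementation. The names `RGBDPoint` and `build_frame`, the pinhole intrinsics `fx`, `fy`, `cx`, `cy`, and the rule that a non-positive depth means a missing measurement are all assumptions introduced here for illustration; the source only states that each point carries a camera-relative 3D position and an RGB color.

```python
from typing import List, NamedTuple, Sequence, Tuple


class RGBDPoint(NamedTuple):
    """One environment point p_i = (x_i, y_i, z_i, r_i, g_i, b_i)."""
    x: float  # 3D position relative to the camera center
    y: float
    z: float
    r: int    # color of the same point
    g: int
    b: int


def build_frame(
    depth: Sequence[Sequence[float]],
    color: Sequence[Sequence[Tuple[int, int, int]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[RGBDPoint]:
    """Back-project every valid depth pixel into a camera-frame point.

    Assumption: a pinhole camera model with focal lengths (fx, fy) and
    principal point (cx, cy). Pixels with depth <= 0 are treated as
    missing measurements and skipped.
    """
    frame: List[RGBDPoint] = []
    for v, (depth_row, color_row) in enumerate(zip(depth, color)):
        for u, (z, (r, g, b)) in enumerate(zip(depth_row, color_row)):
            if z <= 0.0:
                continue  # no depth reading for this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            frame.append(RGBDPoint(x, y, z, r, g, b))
    return frame
```

Calling `build_frame` on one depth image and its aligned color image returns the list of points visible at that moment, which corresponds to one frame in the sense of [0060]. A real pipeline would more likely use array operations than nested loops; the loop form is kept here only to make the per-point structure explicit.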
