An action recognition method based on sliding-window local matching

A sliding-window local matching technique in the field of video recognition. It addresses the inability of existing methods to distinguish similar actions, and the resulting low recognition rate. Its effects include a higher action recognition rate, an improved feature representation, and stronger temporal constraints.


Detailed Description of the Embodiments

[0031] The present invention will be further described below in conjunction with the accompanying drawings.

[0032] Referring to Figure 1, an action recognition method based on sliding-window local matching comprises the following steps:

[0033] 1) Obtain the depth-map sequence of the person in the scene from a stereo camera, extract the 3D joint positions from the depth maps, and use the 3D displacement differences between poses as the feature representation of each frame's depth map;

[0034] 2) Apply a clustering method to the descriptors in the training set to learn a feature set, and use this feature set to encode each descriptor, thereby obtaining a coded representation of each frame;

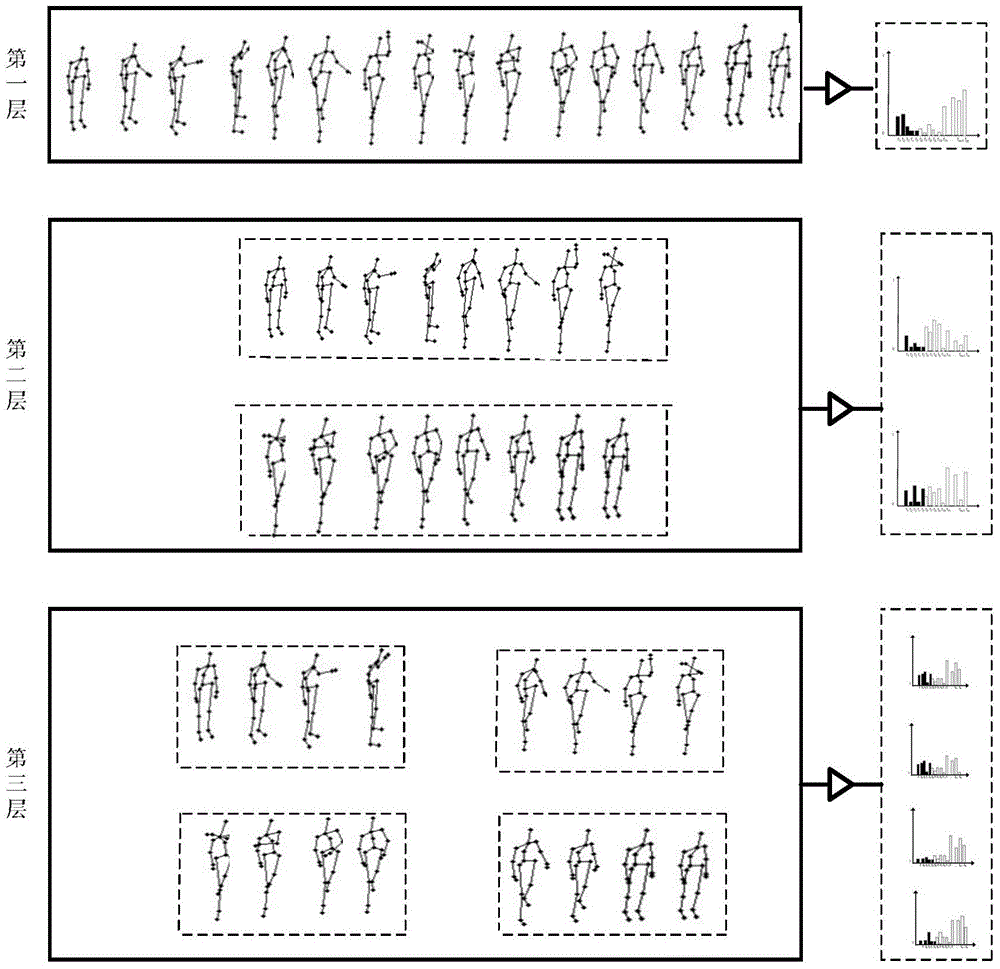

[0035] 3) Use a sliding-window local matching model to divide the entire action image sequence into action segments, and obtain a feature histogram representation of each action segment;

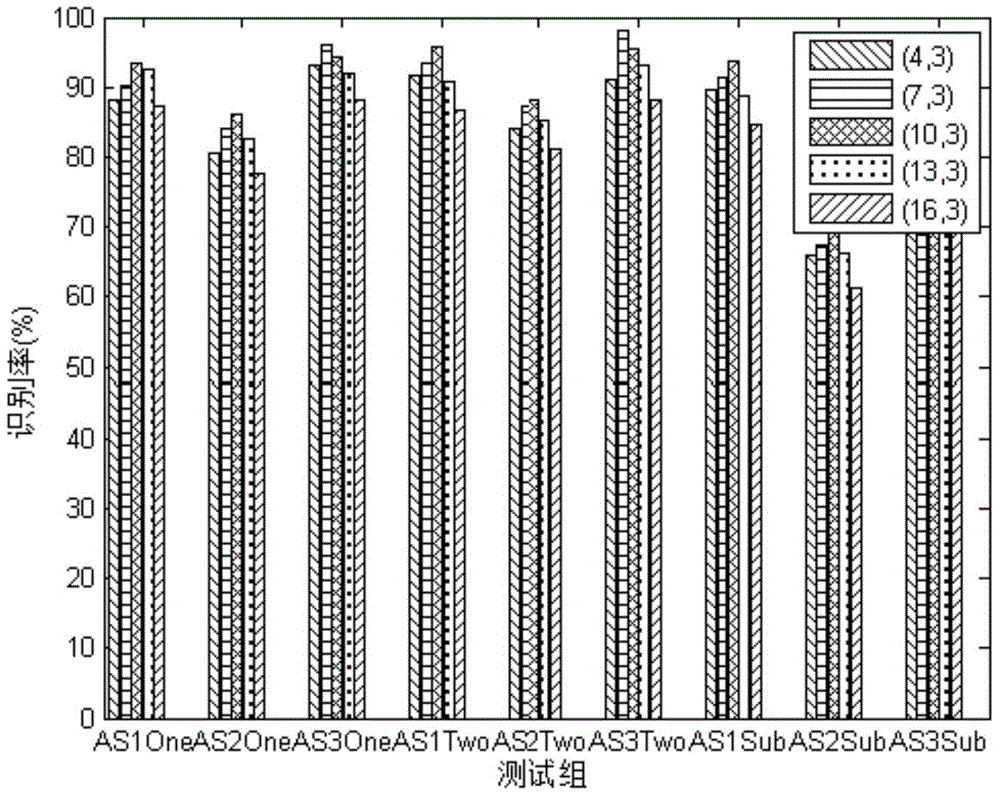

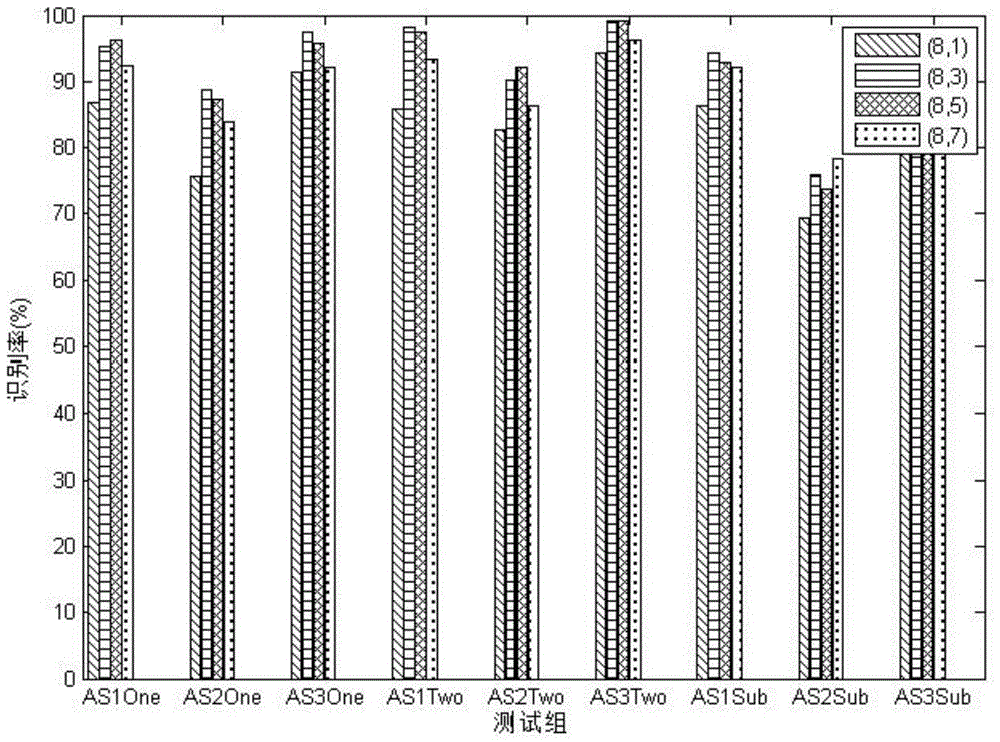
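The three steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation. It assumes that the "3D displacement difference between poses" means the frame-to-frame displacement of each joint, that the clustering method is plain k-means, that each frame is coded by its nearest cluster centre, and that the sliding window is parameterized by a hypothetical `width` and `stride`. All function names are hypothetical. The input is a list of frames, each a list of (x, y, z) joint positions already extracted from the depth maps.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Pose = Sequence[Tuple[float, float, float]]  # one (x, y, z) position per joint


def displacement_features(poses: Sequence[Pose]) -> List[Vector]:
    """Step 1 (assumed reading): frame-to-frame 3D joint displacement.

    Frame t's descriptor is the flattened difference between the joint
    positions of frame t and frame t-1. The first frame is compared with
    itself, so its descriptor is all zeros.
    """
    feats = []
    for t, pose in enumerate(poses):
        prev = poses[t - 1] if t > 0 else pose
        feats.append([c - p
                      for joint, pjoint in zip(pose, prev)
                      for c, p in zip(joint, pjoint)])
    return feats


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def encode(v: Vector, centres: List[Vector]) -> int:
    """Code of a frame: index of the nearest cluster centre."""
    return min(range(len(centres)), key=lambda i: sq_dist(v, centres[i]))


def kmeans(data: List[Vector], k: int, iters: int = 20) -> List[Vector]:
    """Step 2 (assumed: plain k-means): learn k centres as the feature set.

    Requires len(data) >= k. Centres are initialised deterministically
    with the first k descriptors (an illustrative choice).
    """
    centres = [list(v) for v in data[:k]]
    for _ in range(iters):
        groups: List[List[Vector]] = [[] for _ in range(k)]
        for v in data:
            groups[encode(v, centres)].append(v)
        for i, g in enumerate(groups):
            if g:  # keep the previous centre if a cluster becomes empty
                centres[i] = [sum(col) / len(g) for col in zip(*g)]
    return centres


def window_histograms(codes: List[int], k: int,
                      width: int, stride: int) -> List[Vector]:
    """Step 3: slide a window over the frame codes and return one
    normalised code histogram per window (action segment)."""
    if not codes:
        return []
    hists = []
    # If the sequence is shorter than the window, use one short segment.
    for start in range(0, max(len(codes) - width, 0) + 1, stride):
        seg = codes[start:start + width]
        h = [0.0] * k
        for c in seg:
            h[c] += 1.0
        hists.append([x / len(seg) for x in h])
    return hists


# Usage on a toy two-joint skeleton that alternates between moving and resting.
poses = [[(0, 0, 0), (1, 0, 0)], [(0, 1, 0), (1, 1, 0)],
         [(0, 1, 0), (1, 1, 0)], [(0, 2, 0), (1, 2, 0)]]
feats = displacement_features(poses)
centres = kmeans(feats, k=2)
codes = [encode(f, centres) for f in feats]
segment_hists = window_histograms(codes, k=2, width=3, stride=1)
```

A stride smaller than the window width produces overlapping segments, so each frame contributes to several local histograms. This is one plausible way the segment histograms keep temporal ordering that a single histogram over the whole sequence would discard.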

[0036] The feature matching process of the...
