Menu selection method for a human-computer interaction interface based on human muscle kinesthetic perception

A human-computer interaction interface menu selection technology, applied in the field of human-computer interaction, that addresses the problems of insufficient fit, shaking or even falling, and lack of humanization, and achieves convenient control, a simplified learning process, and humanized operation.

Embodiment Construction

[0033] The technical solution of the present invention is described in detail below with reference to the accompanying drawings and embodiments:

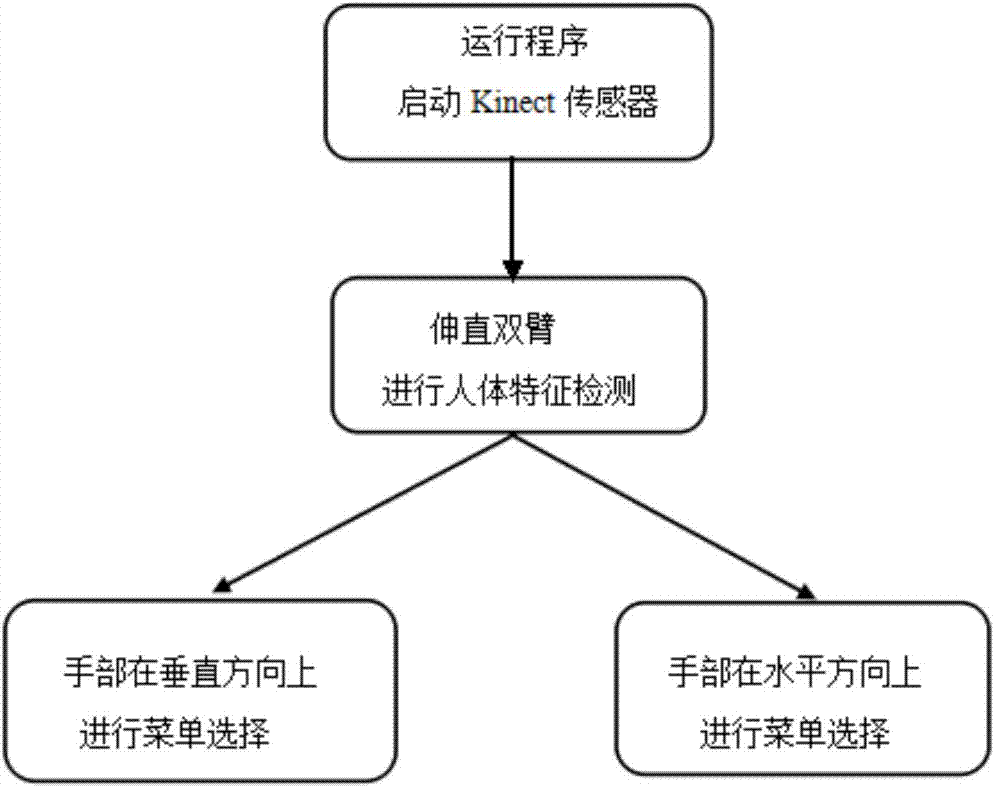

[0034] The human-computer interaction interface menu selection method based on human muscle kinesthetic perception requires a hardware system comprising at least a Kinect sensor, a host computer connected to the Kinect sensor, and a display screen connected to the host computer. The overall flow of the method is shown in Figure 1 and includes the following steps:

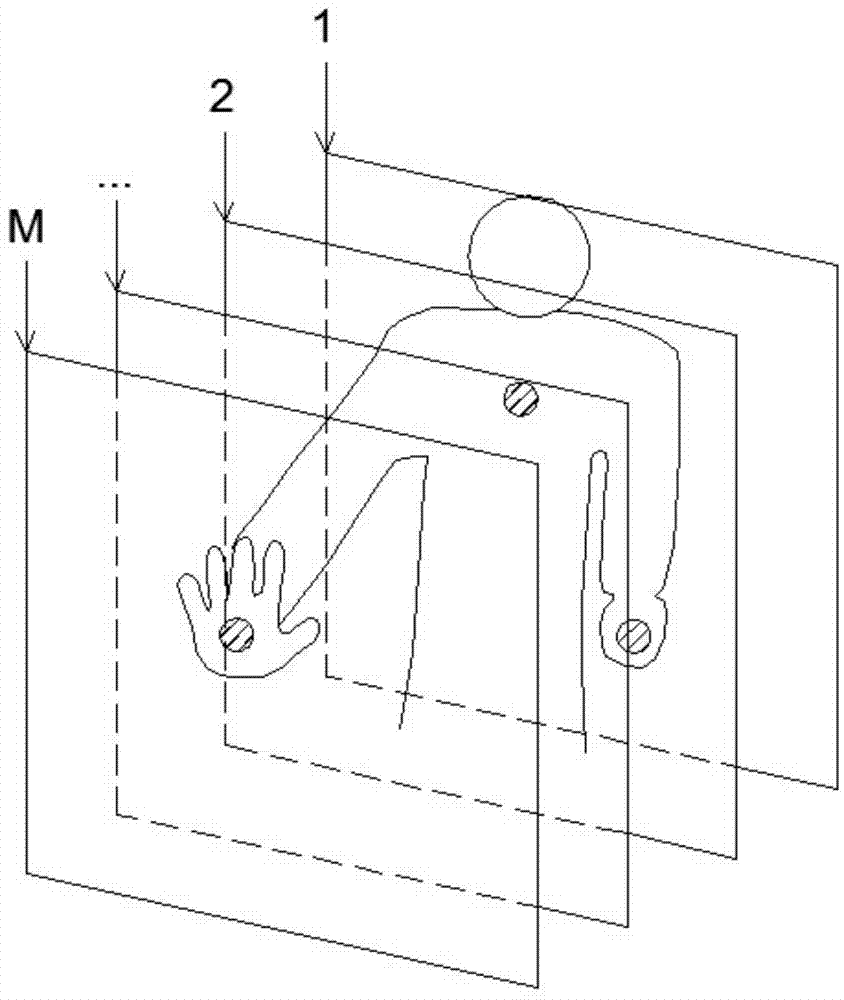

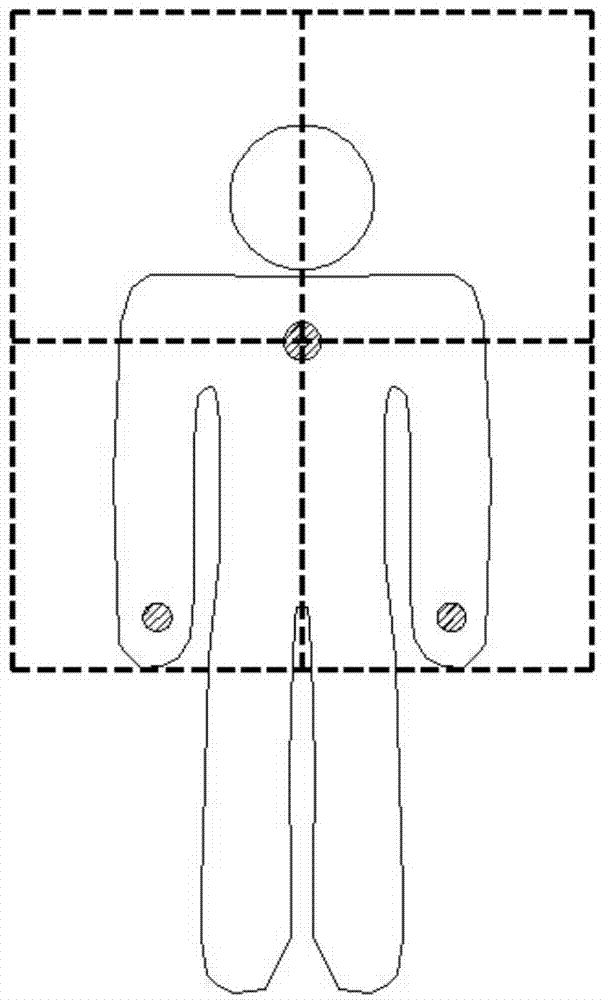

[0035] Step 1. Initialization parameter setting: according to the user's needs, set the number of divisions of the space in front of the human body in the horizontal direction and in the vertical direction.
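
The initialization in Step 1 can be illustrated with a minimal sketch. It is not the implementation of the invention. The names `GridConfig` and `cell_for` are hypothetical, and the use of normalized coordinates `(u, v)` in the range 0 to 1 to locate a region is an assumption made only for illustration. The text above specifies only that the number of divisions in each direction is set by the user.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class GridConfig:
    """Step 1 parameters: division counts for the space in front of the body."""

    horizontal_divisions: int  # number of divisions in the horizontal direction
    vertical_divisions: int    # number of divisions in the vertical direction

    def __post_init__(self) -> None:
        # Reject configurations that would leave a direction with no region.
        if self.horizontal_divisions < 1 or self.vertical_divisions < 1:
            raise ValueError("each direction needs at least one division")

    @property
    def cell_count(self) -> int:
        """Total number of regions produced by the two division counts."""
        return self.horizontal_divisions * self.vertical_divisions


def cell_for(config: GridConfig, u: float, v: float) -> tuple[int, int]:
    """Hypothetical helper: map a normalized position to a (column, row) region.

    Assumes u and v are already scaled to [0, 1]; how real sensor
    coordinates are normalized is not covered by this sketch.
    """
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        raise ValueError("u and v must lie in [0, 1]")
    # Clamp so that u == 1.0 or v == 1.0 falls in the last region.
    col = min(int(u * config.horizontal_divisions), config.horizontal_divisions - 1)
    row = min(int(v * config.vertical_divisions), config.vertical_divisions - 1)
    return col, row
```

For example, `GridConfig(horizontal_divisions=3, vertical_divisions=2)` describes six regions, and under the stated assumption `cell_for` assigns the normalized position `(0.5, 0.5)` to column 1, row 1.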

[0036] Connect the Kinect sensor and use it to obtain the positions of the joints of the human body. Here we mainly obtain the three joint points of the user's middle chest (the midpoint of the hum...
