86 results about "Spatial perception" patented technology

Gaze-contingent Display Technique

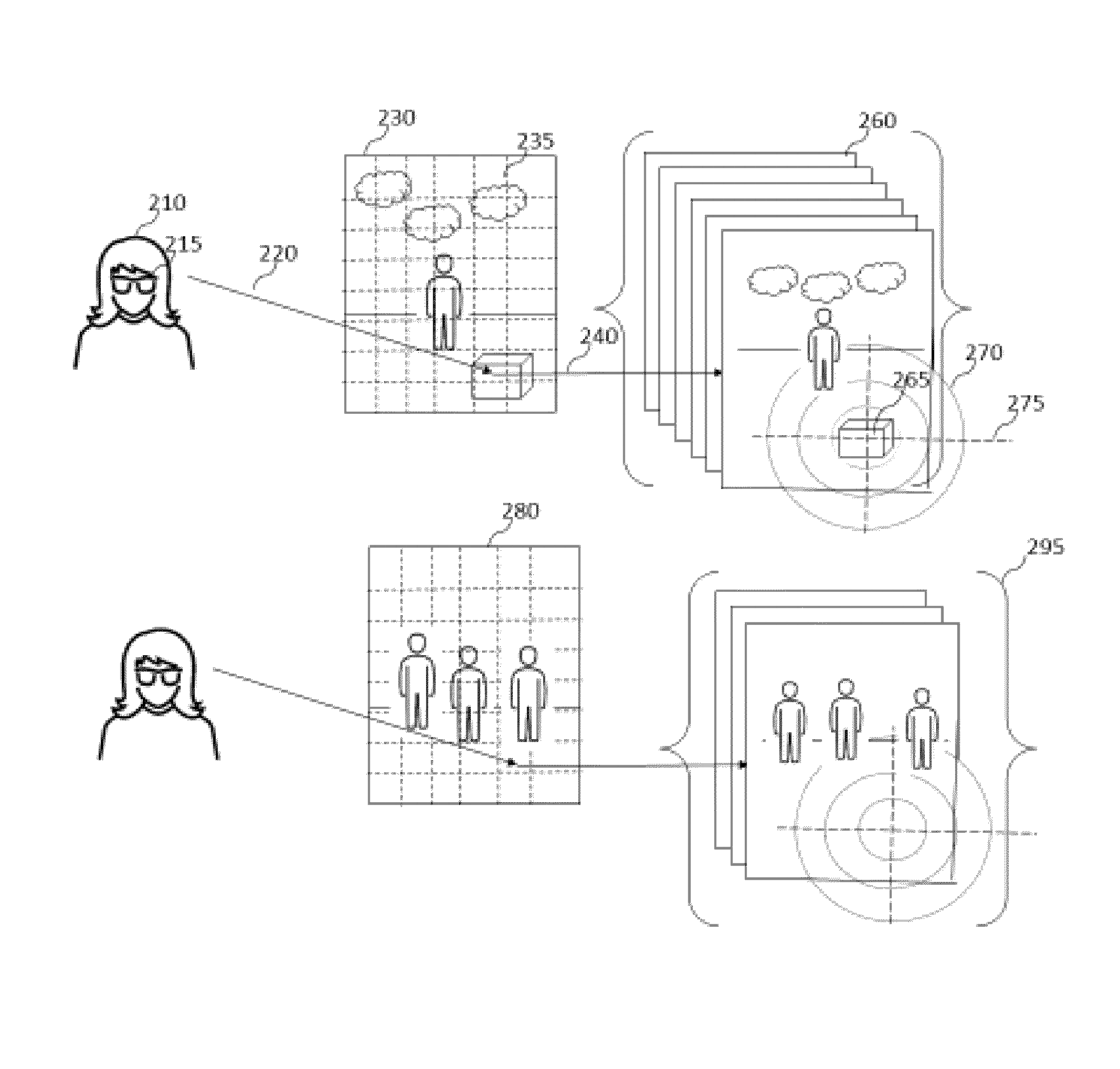

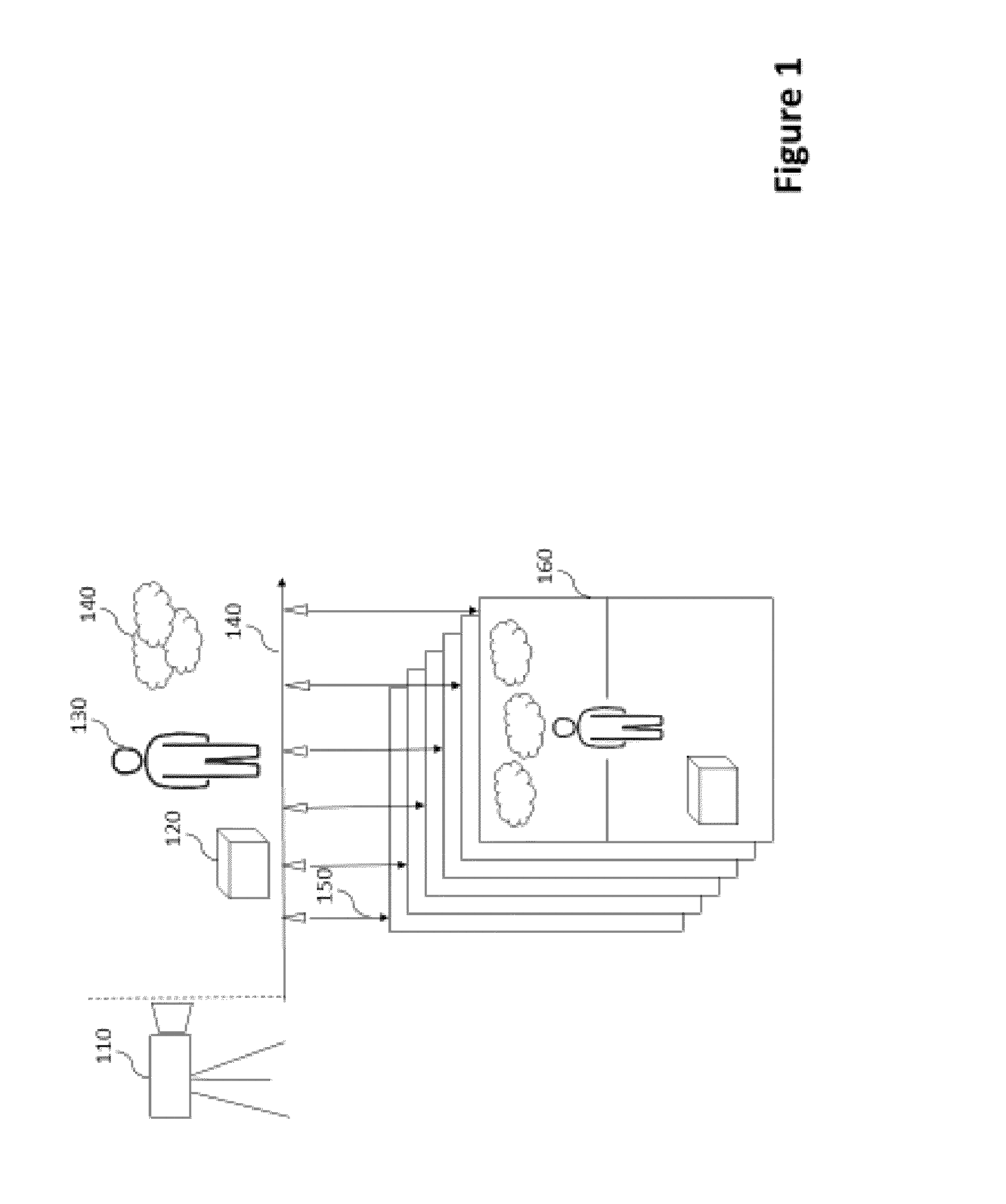

Active | US20160191910A1 | Increase speed | Improve viewing experience | Stereoscopic systems | Input/output processes for data processing | Program instruction | Spatial perception

A gaze-contingent display technique for providing a human viewer with an enhanced three-dimensional experience without requiring stereoscopic viewing aids. Methods are shown which allow users to view plenoptic still images or plenoptic videos incorporating gaze-contingent refocusing operations in order to enhance spatial perception. Methods are also shown which allow the use of embedded markers in a plenoptic video feed signifying a change of scene, incorporating initial depth plane settings for each such scene. Methods are also introduced which allow a novel mode of transitioning between different depth planes, wherein the user's experience is optimized in such a way that these transitions trick the human eye into perceiving enhanced depth. This disclosure also introduces a display device system with gaze-contingent refocusing capability such that depth perception by the user is significantly enhanced compared to prior art. This disclosure comprises a non-transitory computer-readable medium on which are stored program instructions that, when executed by a processor, cause the processor to perform operations relating to timing the duration the user's gaze is fixated on each of a plurality of depth planes and making a refocusing operation contingent on a number of parameters.

Owner:VON & ZU LIECHTENSTEIN MAXIMILIAN RALPH PETER
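
As a rough illustration of the dwell-timing idea in the gaze-contingent display abstract above, the sketch below accumulates how long the gaze rests on an out-of-focus depth plane and triggers a refocus once a threshold is reached. The class name `DwellRefocuser`, the `dwell_threshold_s` parameter, and the per-sample input format are assumptions made for illustration; the abstract does not specify which parameters the refocusing depends on.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellRefocuser:
    """Hypothetical dwell-time trigger for gaze-contingent refocusing."""
    dwell_threshold_s: float = 0.3      # assumed fixation time needed before refocusing
    focused_plane: int = 0              # depth plane currently rendered in focus
    _candidate: Optional[int] = None    # out-of-focus plane the gaze is resting on
    _dwell_s: float = 0.0               # time accumulated on the candidate plane

    def update(self, gazed_plane: int, dt_s: float) -> bool:
        """Feed one gaze sample; return True if a refocus was triggered."""
        if gazed_plane == self.focused_plane:
            # Gaze is back on the in-focus plane: discard any pending candidate.
            self._candidate, self._dwell_s = None, 0.0
            return False
        if gazed_plane != self._candidate:
            # Gaze moved to a different out-of-focus plane: restart the timer.
            self._candidate, self._dwell_s = gazed_plane, 0.0
        self._dwell_s += dt_s
        if self._dwell_s >= self.dwell_threshold_s:
            # Fixation lasted long enough: switch focus to the gazed plane.
            self.focused_plane = gazed_plane
            self._candidate, self._dwell_s = None, 0.0
            return True
        return False
```

A caller would feed `update` once per eye-tracker sample and re-render the plenoptic image whenever it returns `True`. A real system would presumably add further conditions, such as scene-change markers, on top of the single threshold used here.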

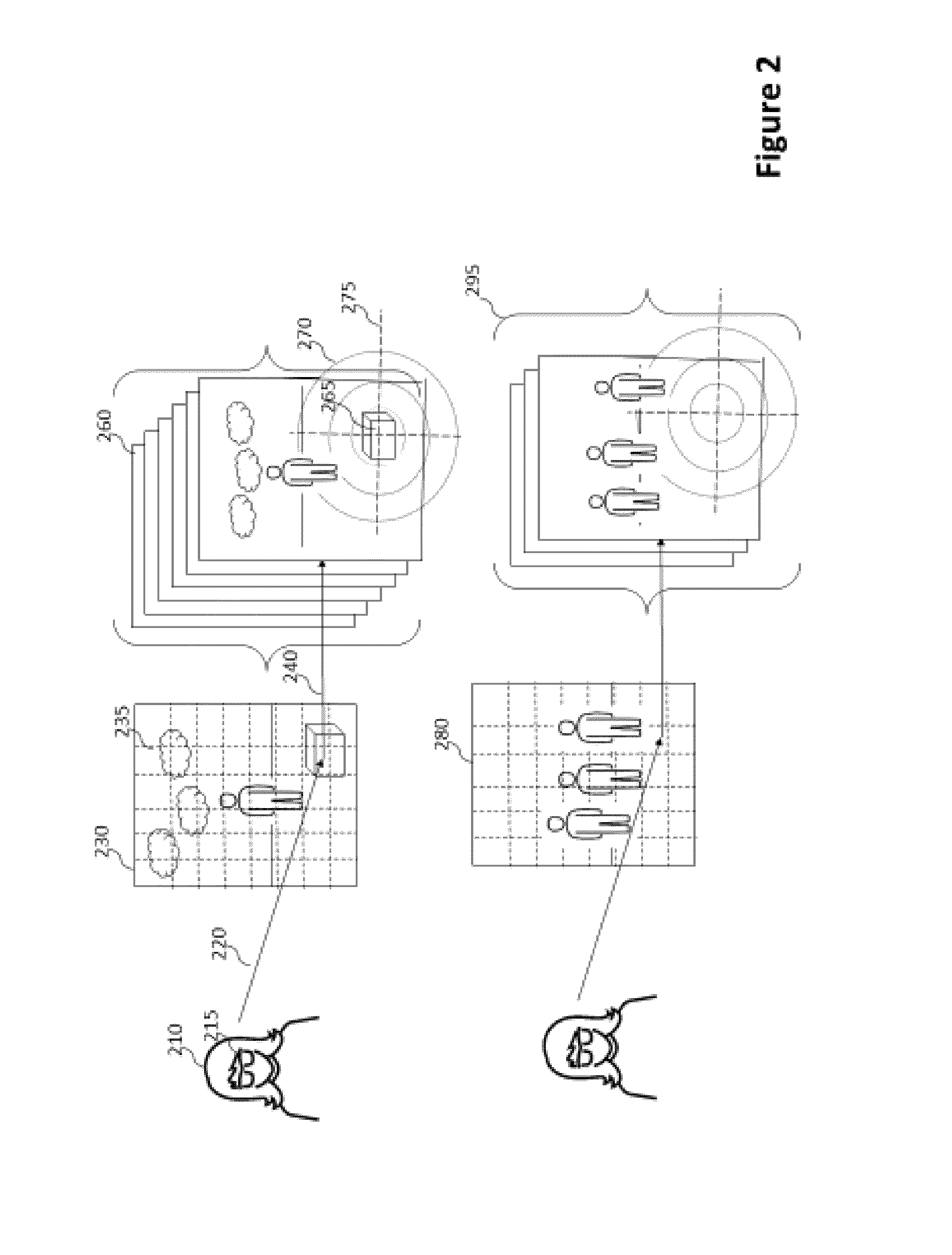

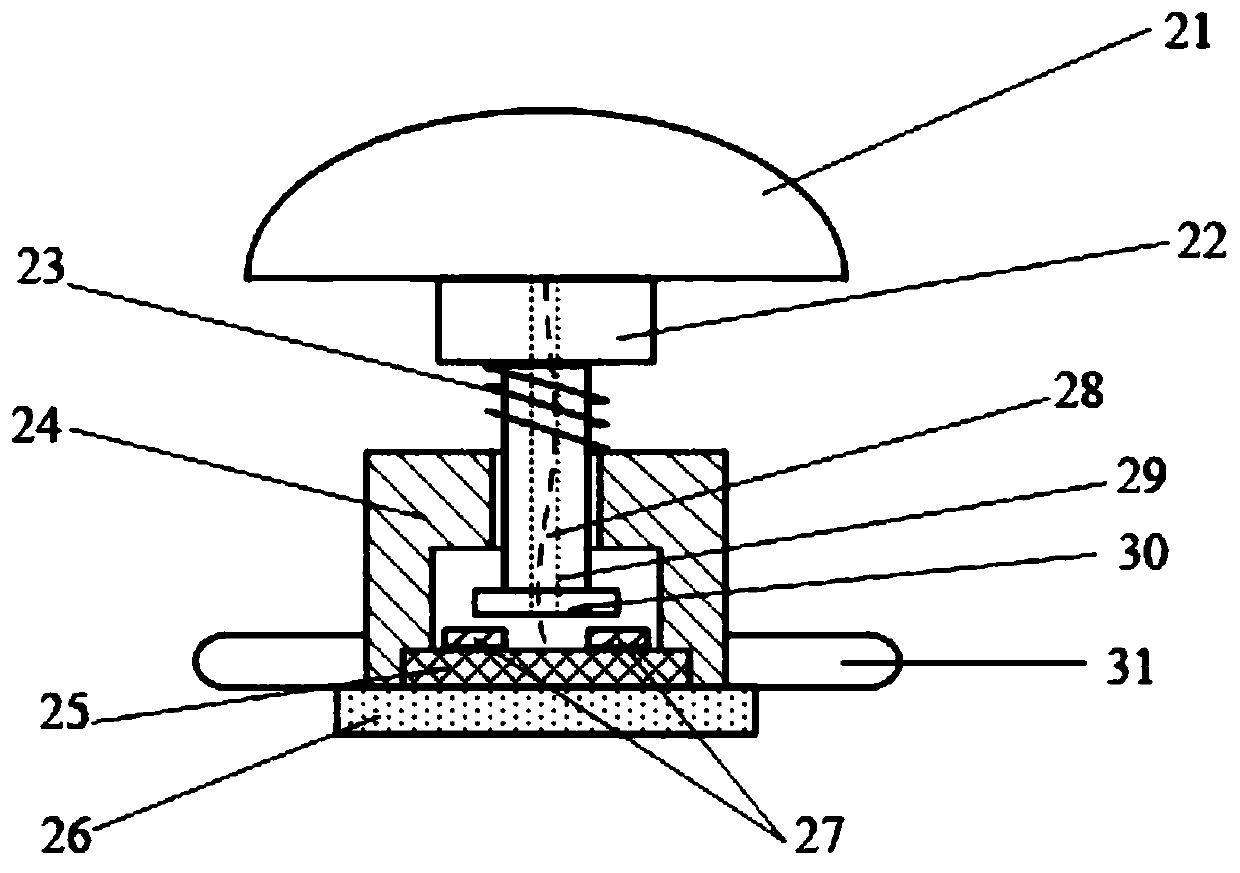

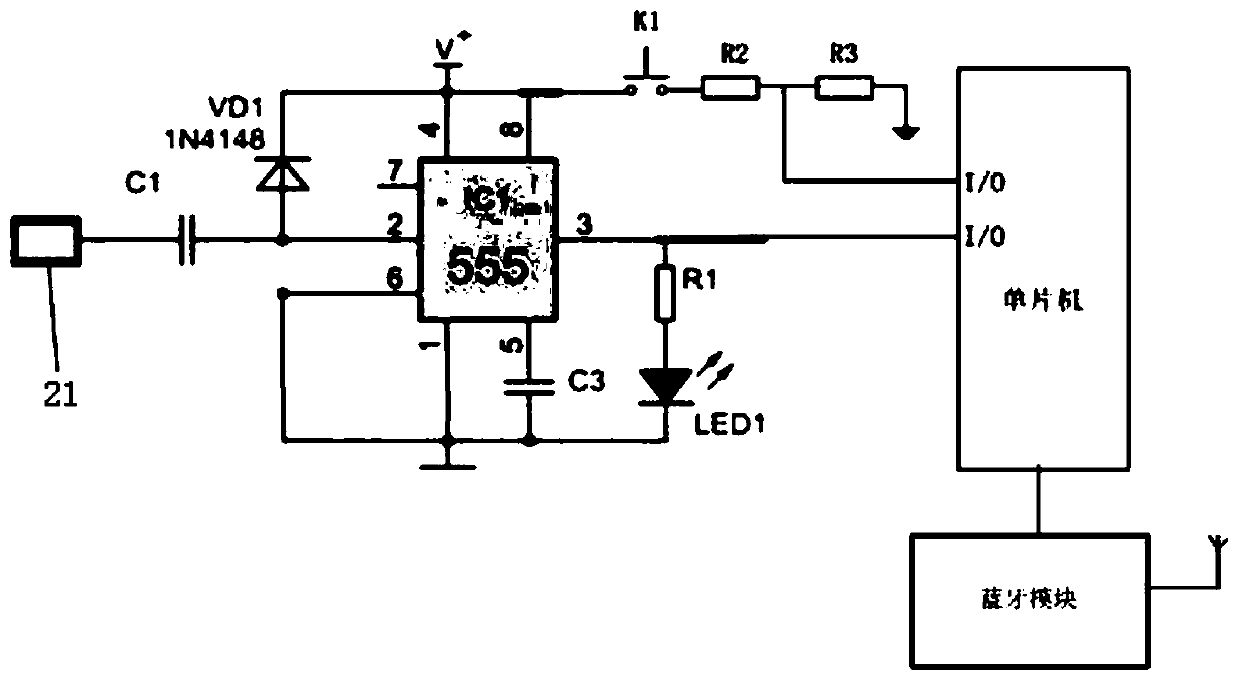

Multiple-rotor-wing unmanned aerial vehicle sensing and avoiding system and avoiding method thereof

Active | CN103869822A | Simplify complexity | High data rate | Position/course control in three dimensions | Sense and avoid | Spatial perception

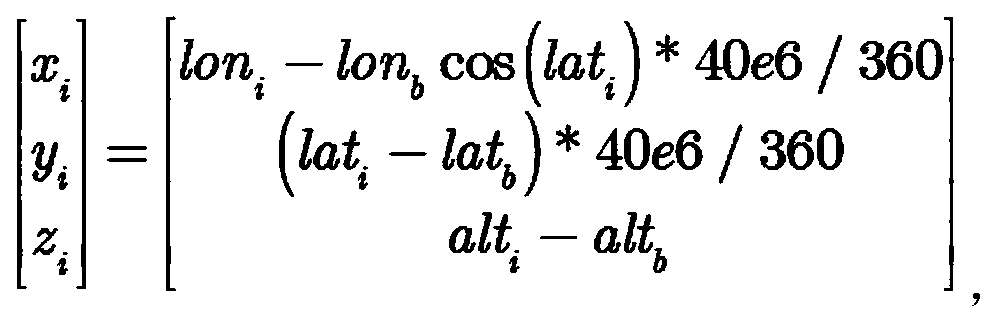

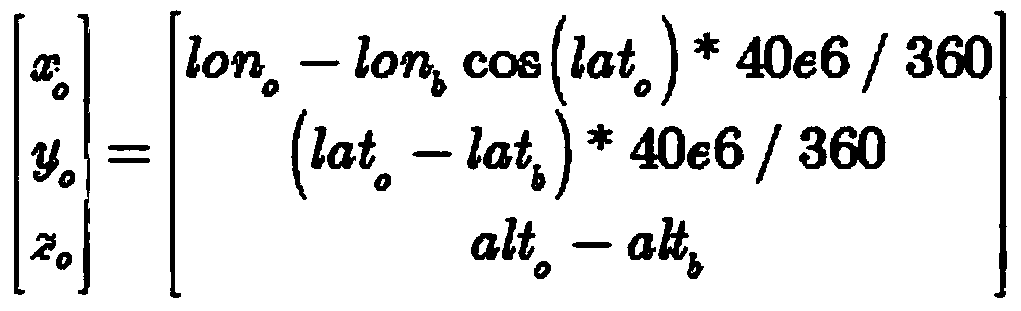

The invention discloses a multiple-rotor-wing unmanned aerial vehicle sensing and avoiding system which comprises a barrier avoiding module. The output end of the barrier avoiding module is connected with a flight control module, the input end of the barrier avoiding module is further connected with a navigation module, the barrier avoiding module is further connected with an ADS-B perception module for data receiving and sending, the ADS-B perception module comprises an ADS-B OUT transmitting module and an ADS-B IN receiving module, the ADS-B OUT transmitting module is connected with a transmitting antenna, and the ADS-B IN receiving module is connected with a receiving antenna. The invention further discloses an avoiding method which simplifies spatial perception and barrier avoiding complexity of an unmanned aerial vehicle, can guarantee safe space flight of the unmanned aerial vehicle without dependence on an air control system, and is suitable for the low-flying multiple-rotor-wing unmanned aerial vehicle sensing and avoiding system.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

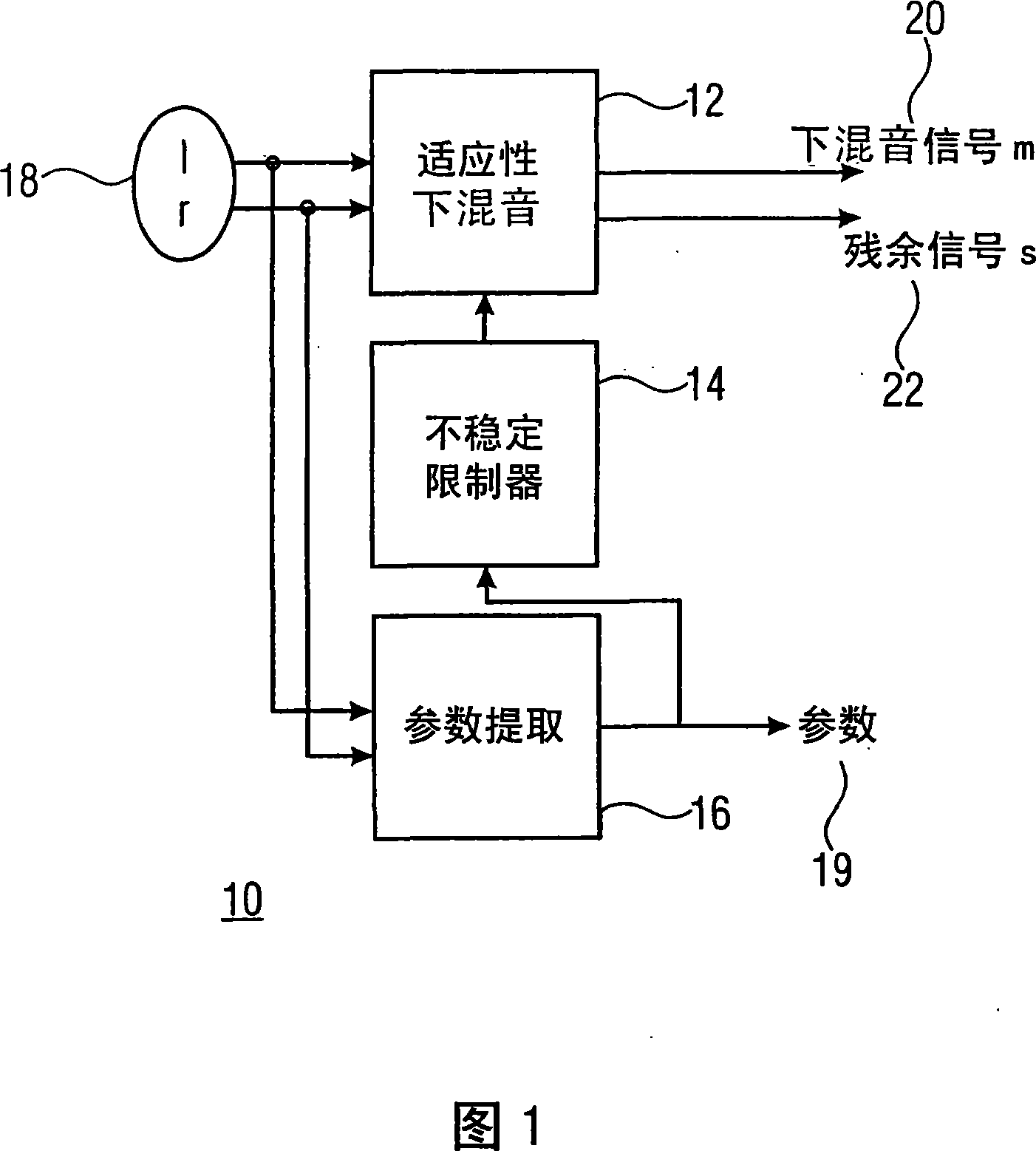

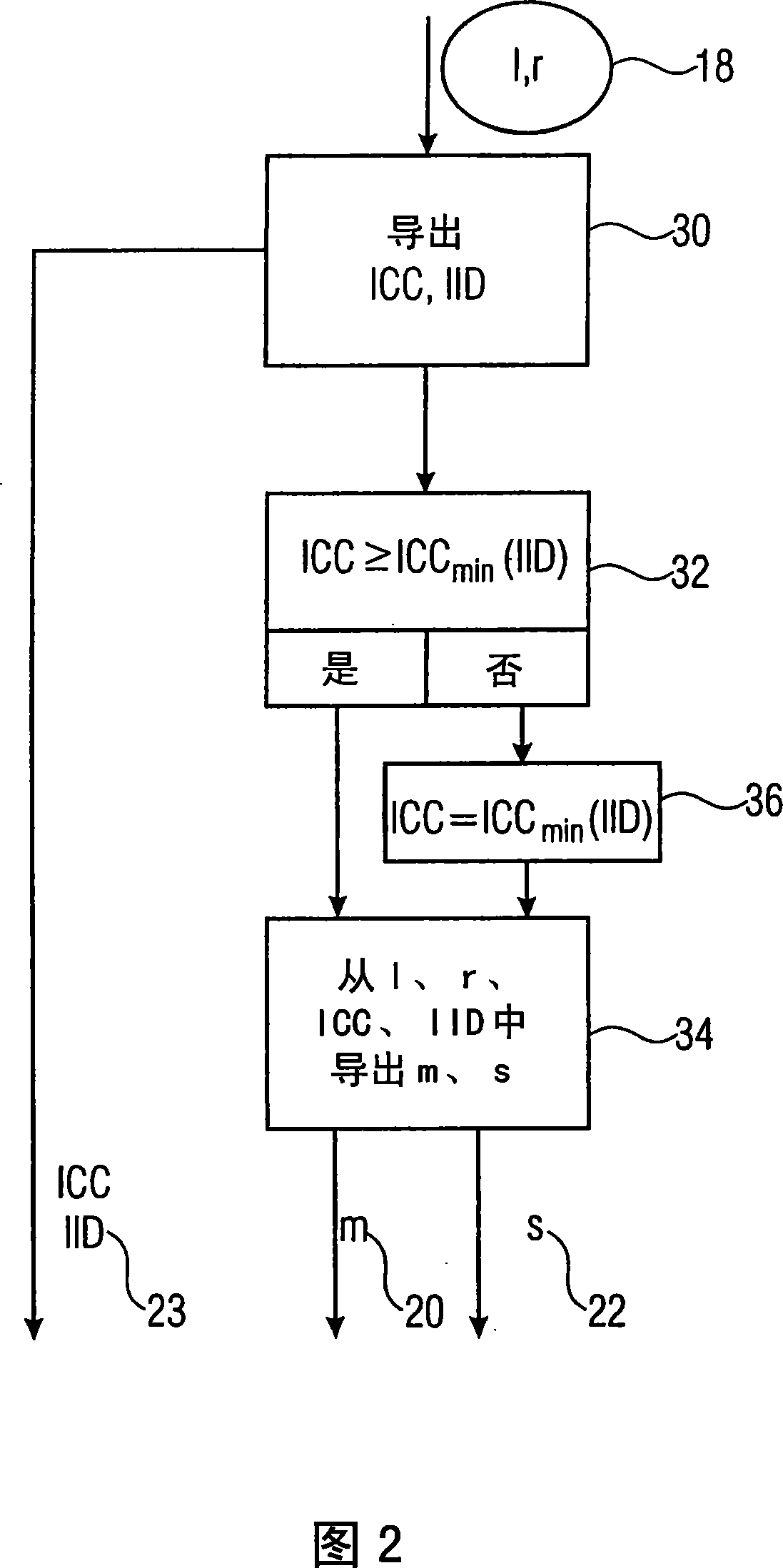

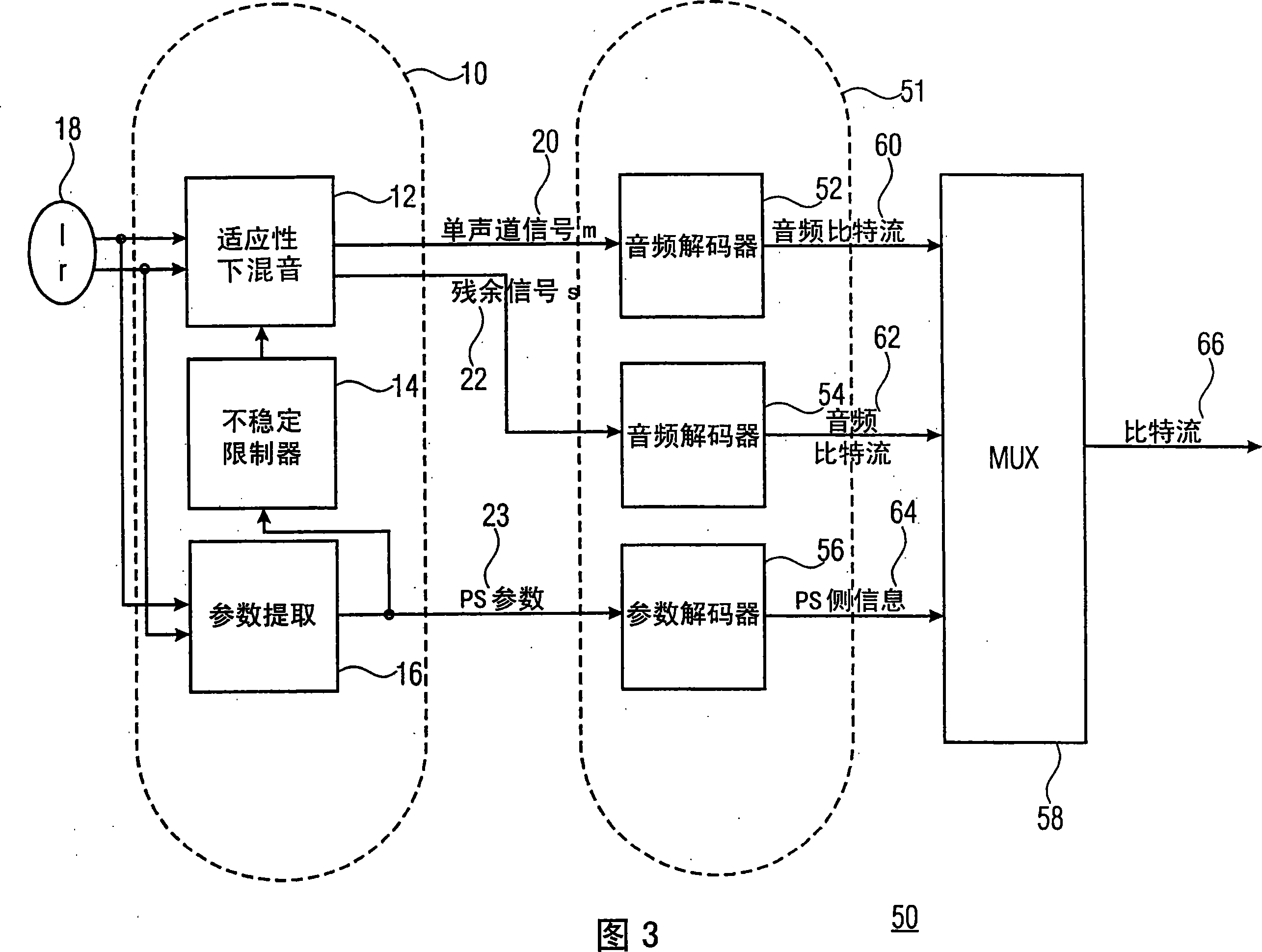

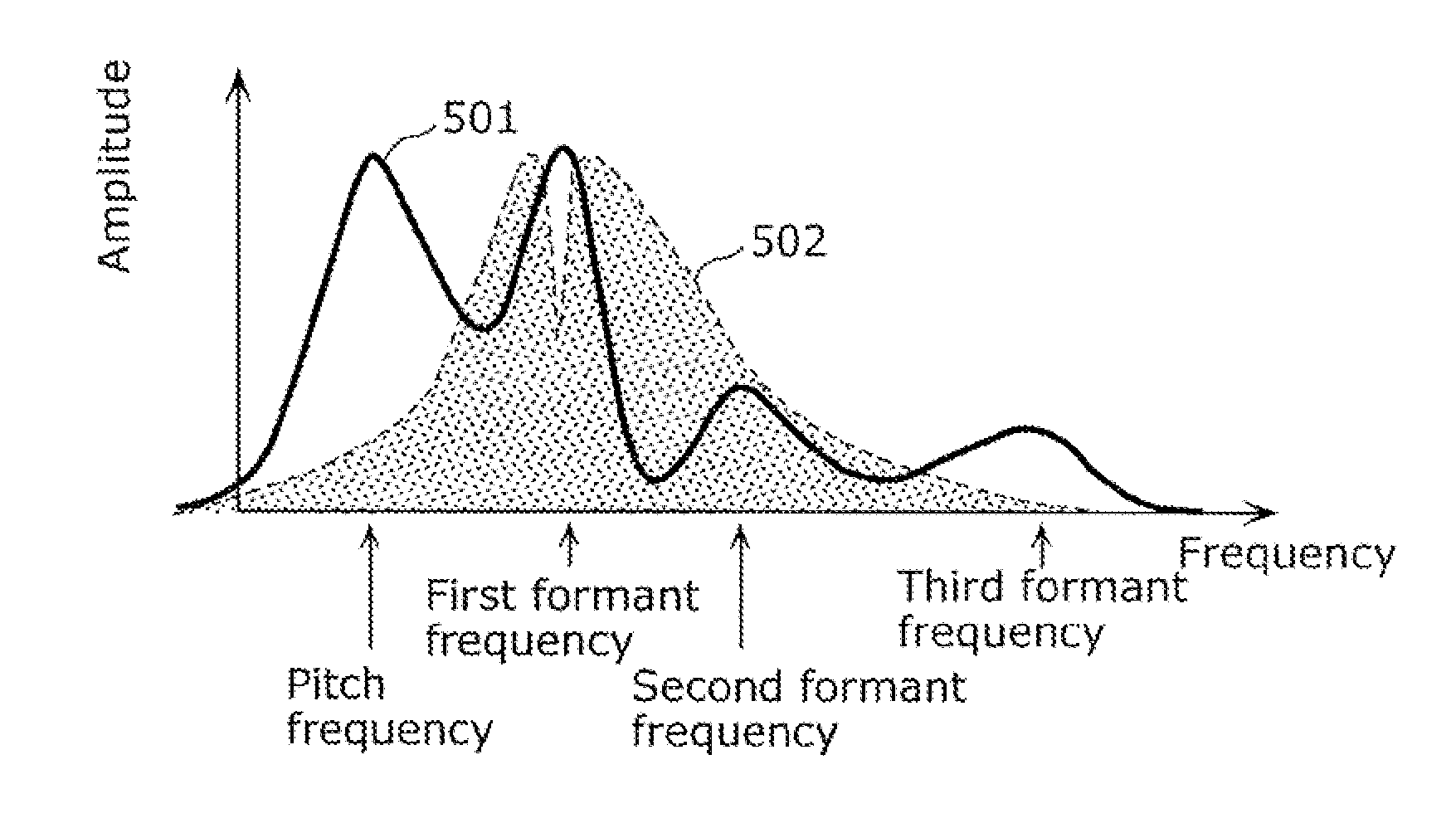

Adaptive residual audio coding

Active | CN101160619A | Save relationship | Implement the restriction logic | Speech analysis | Spatial perception | Instability

An audio signal having at least two channels can be efficiently down-mixed into a downmix signal and a residual signal, when the down-mixing rule used depends on a spatial parameter that is derived from the audio signal and that is post-processed by a limiter to apply a certain limit to the derived spatial parameter with the aim of avoiding instabilities during the up-mixing or down-mixing process. By having a down-mixing rule that dynamically depends on parameters describing an interrelation between the audio channels, one can assure that the energy within the down-mixed residual signal is as minimal as possible, which is advantageous in the view of coding efficiency. By post processing the spatial parameter with a limiter prior to using it in the down-mixing, one can avoid instabilities in the down- or up-mixing, which otherwise could result in a disturbance of the spatial perception of the encoded or decoded audio signal.

Owner:DOLBY INT AB +1
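
The role of the limiter in the adaptive residual coding abstract above can be sketched with a simple mid/side prediction scheme. This scheme is swapped in for illustration only; the abstract does not give the actual down-mixing rule. The function names, the least-squares predictor `alpha`, and the `max_abs` bound are all assumptions.

```python
from typing import List, Tuple


def limit(value: float, max_abs: float) -> float:
    """Clamp a spatial parameter to the range [-max_abs, +max_abs]."""
    return max(-max_abs, min(max_abs, value))


def downmix(left: List[float], right: List[float],
            max_abs: float = 2.0) -> Tuple[List[float], List[float], float]:
    """Return (downmix, residual, limited parameter) for one stereo frame."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    energy = sum(m * m for m in mid)
    # Least-squares predictor of side from mid (minimises residual energy).
    # It grows without bound as the mid energy approaches zero.
    raw = sum(s * m for s, m in zip(side, mid)) / energy if energy > 0 else 0.0
    alpha = limit(raw, max_abs)  # the limiter keeps the parameter bounded
    residual = [s - alpha * m for s, m in zip(side, mid)]
    return mid, residual, alpha


def upmix(mid: List[float], residual: List[float],
          alpha: float) -> Tuple[List[float], List[float]]:
    """Invert downmix() using the same limited parameter."""
    side = [alpha * m + d for m, d in zip(mid, residual)]
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right
```

In this sketch the unlimited predictor would reach about 19 for nearly out-of-phase channels. Clamping it trades a slightly larger residual for a parameter that stays well-behaved, while the up-mix still reconstructs the input because both sides use the same limited value.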

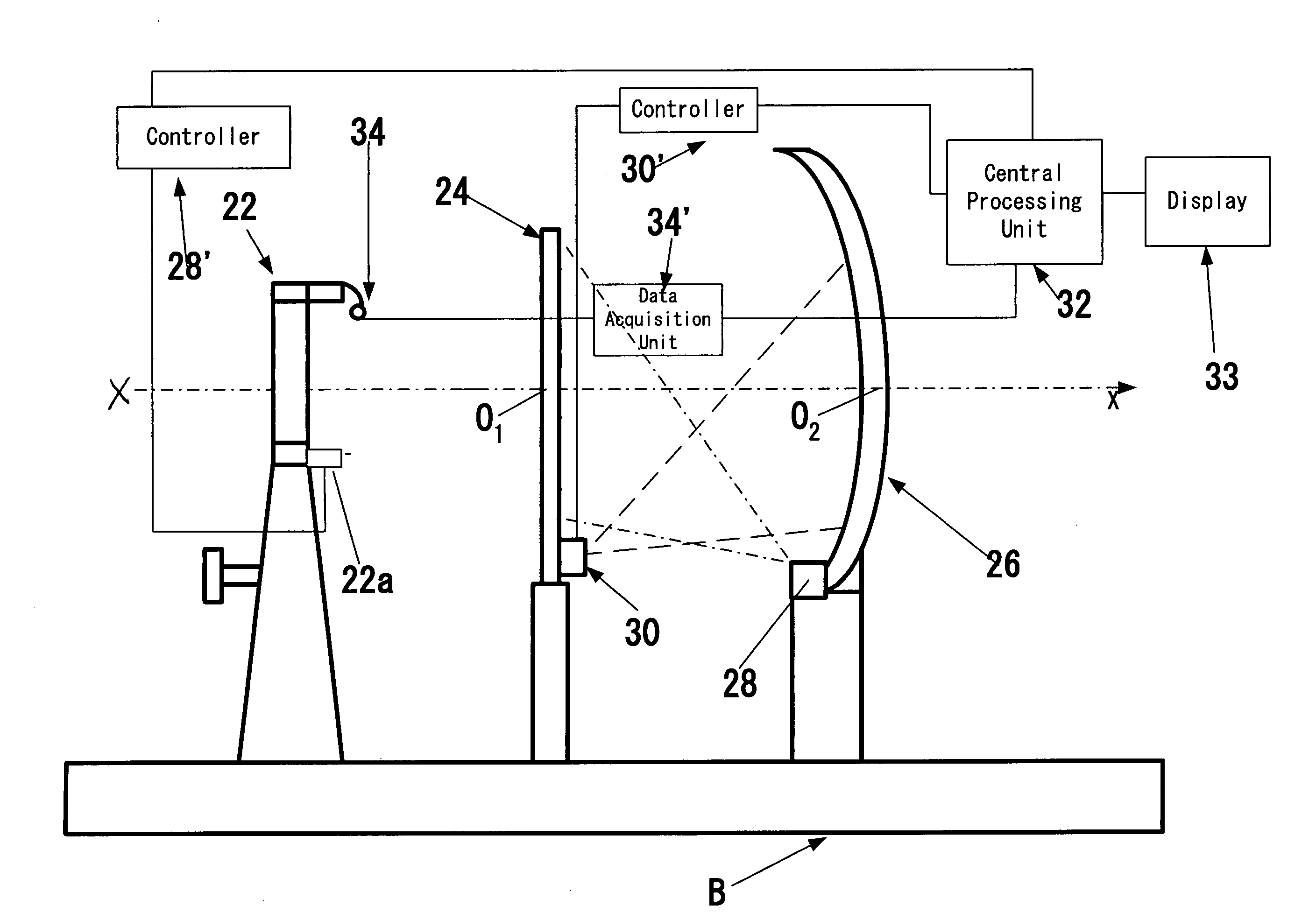

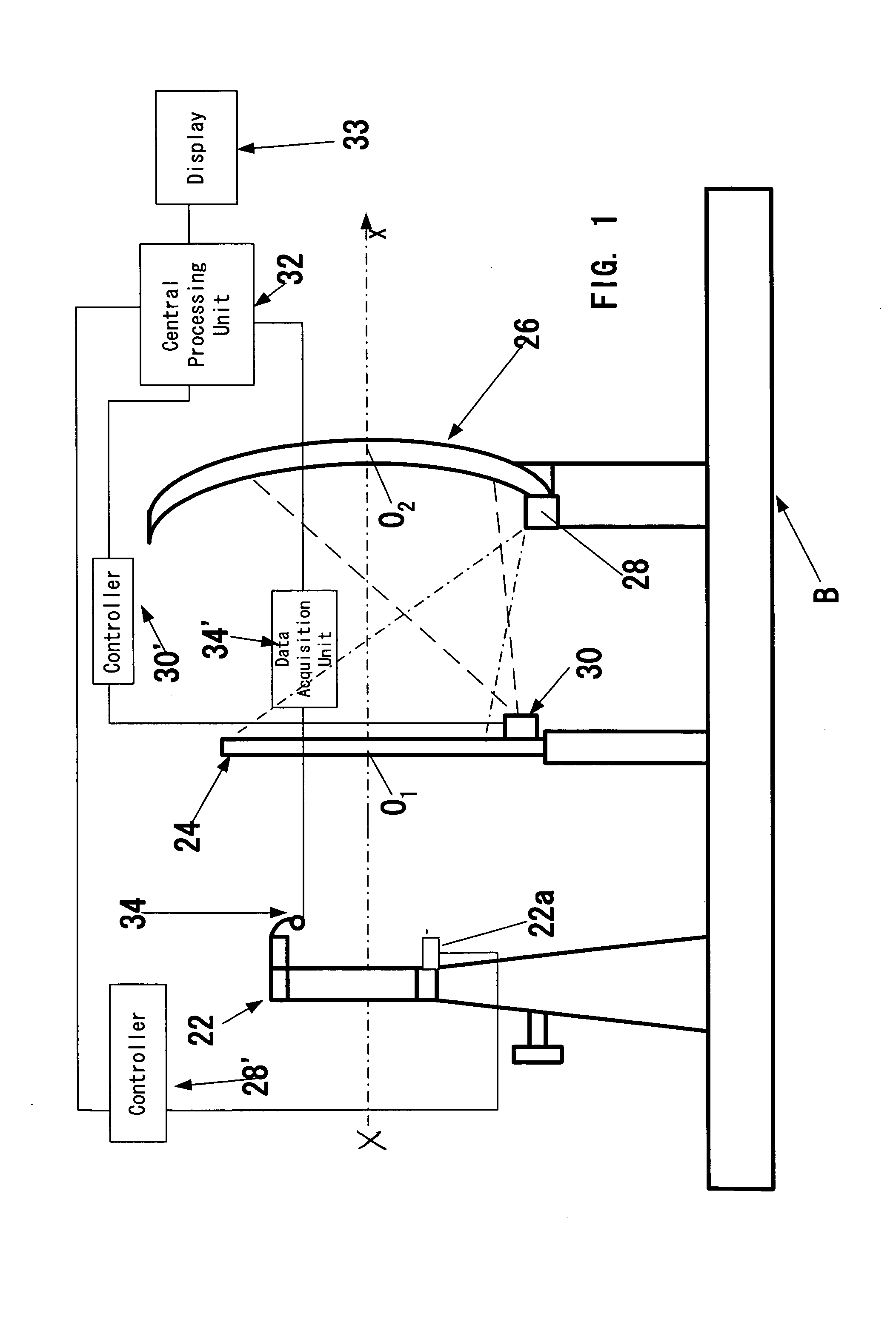

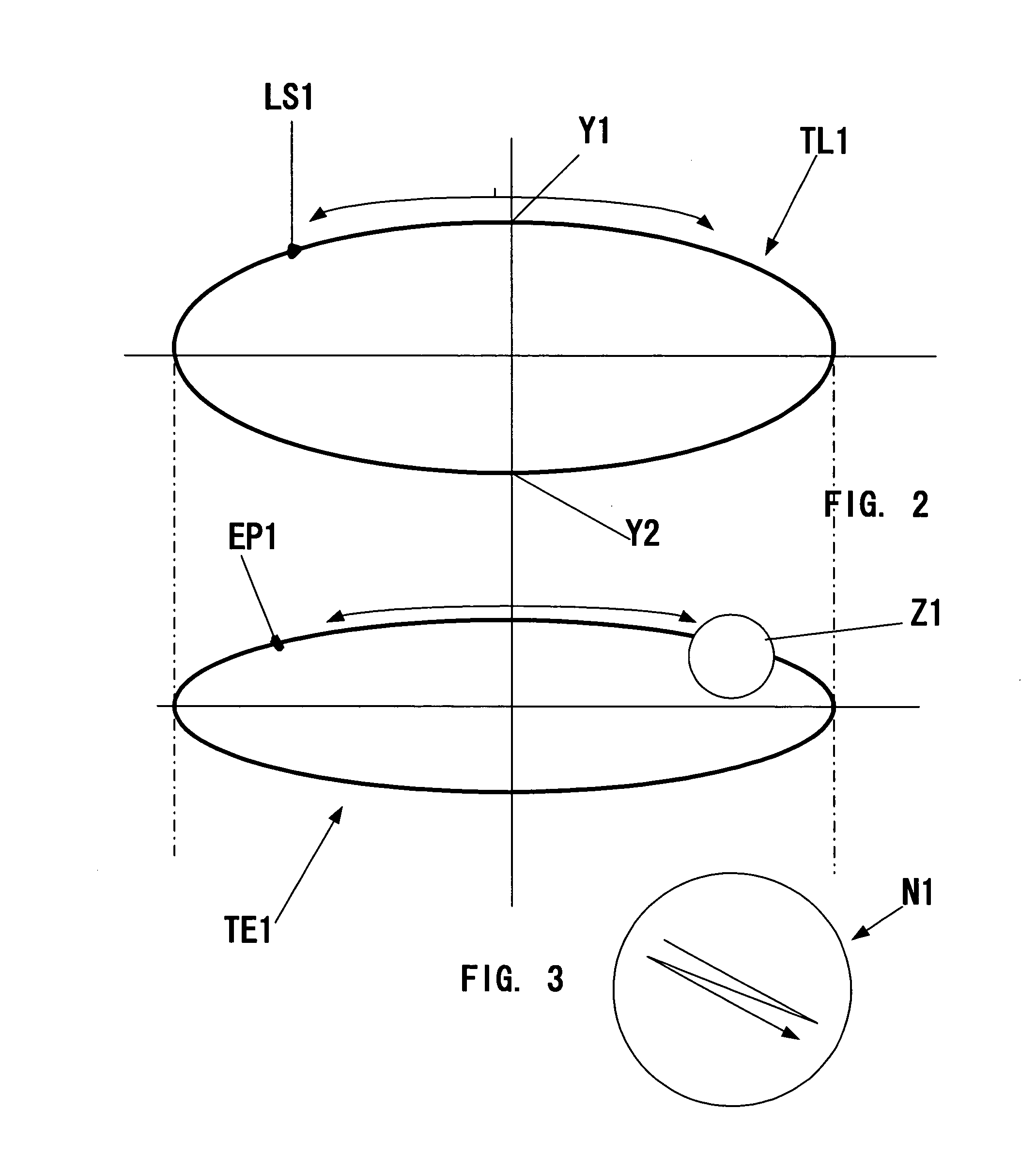

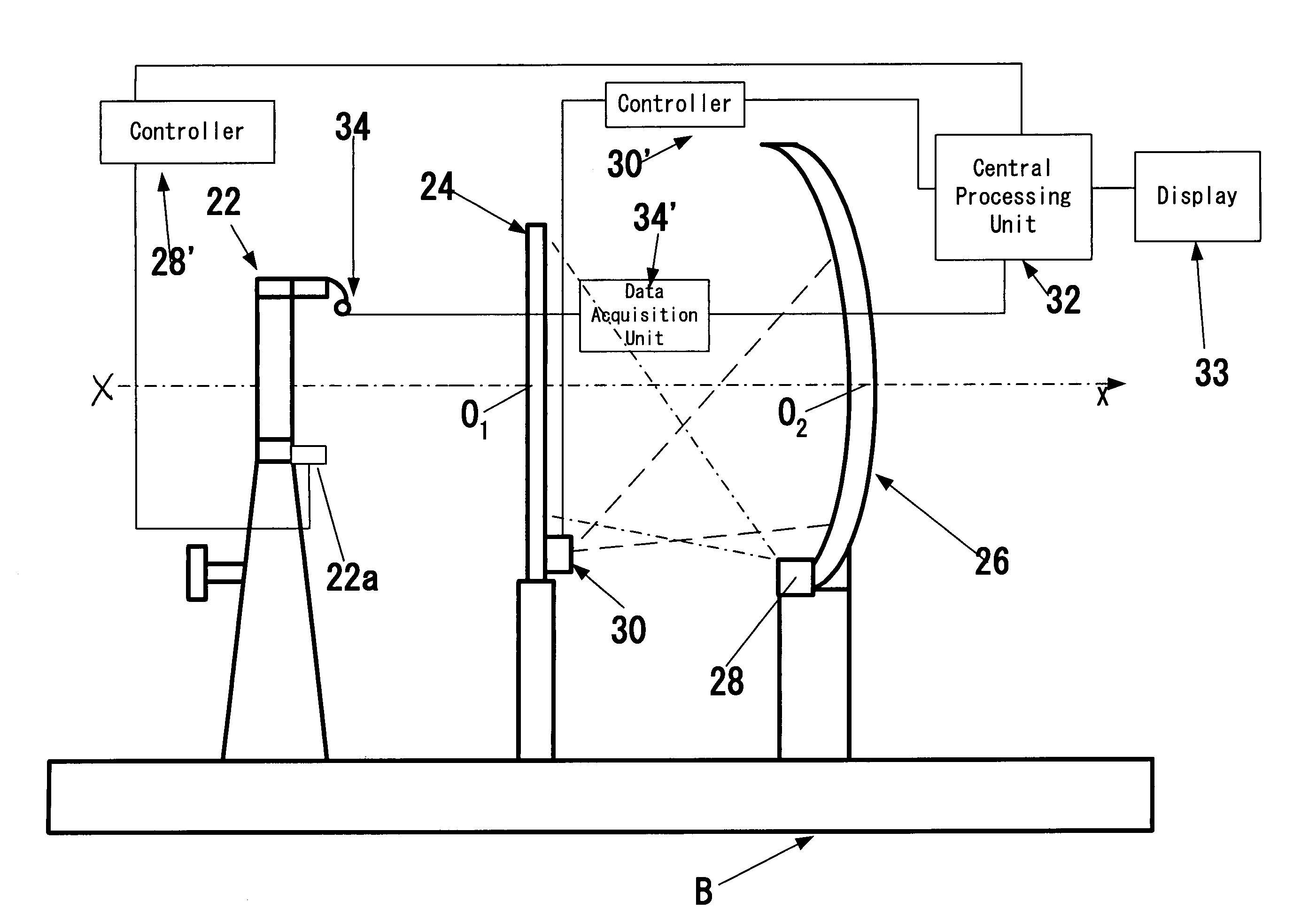

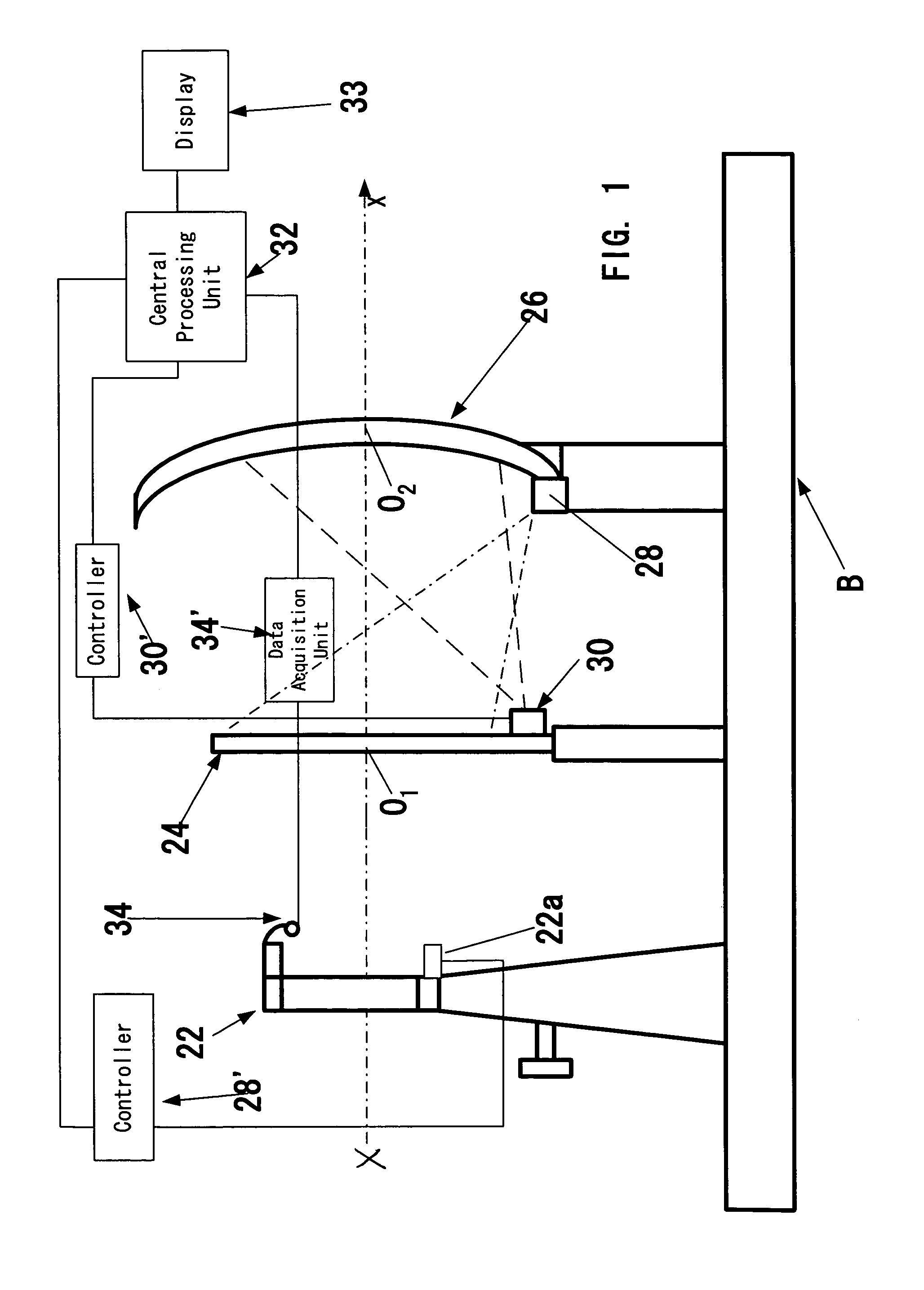

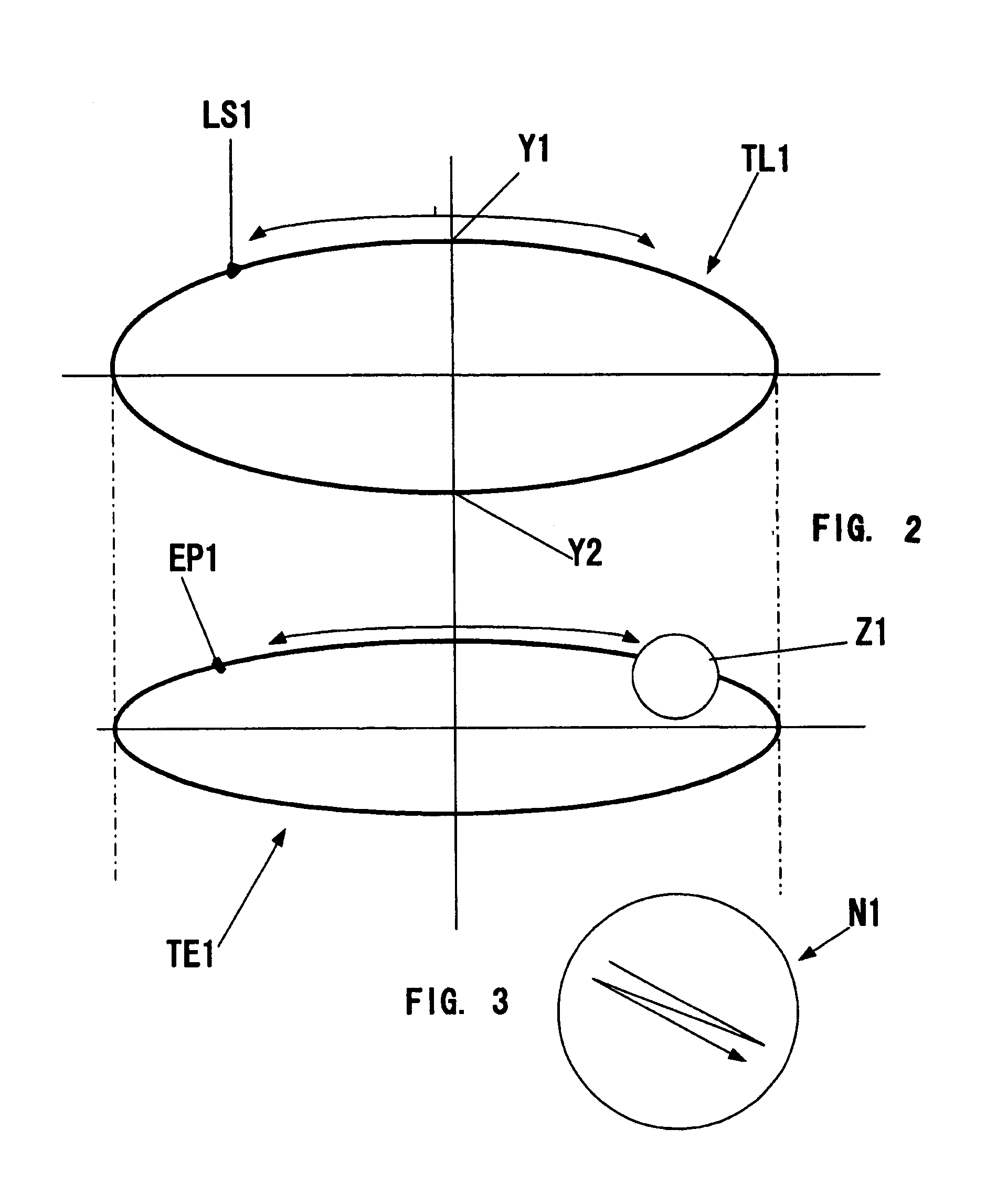

Method and apparatus for detecting abnormalities in spatial perception

An apparatus for detecting abnormalities in spatial perception that consists of a first transparent screen in a close-vision field and a second screen seen through the first one and located in a far-vision field of a person being tested. The apparatus is provided with devices for forming images selectively on the first or second screen and with a device for tracking trajectories of the eye pupils while following the images. The method is based on detecting specific irregularities in trajectories of the eye pupils, such as nystagmuses, cut-offs, sudden drops of the gaze, etc., when a person follows the smooth trajectory of a moving image on a selected screen, or when the size and position of the eye pupil change with certain irregularity in response to instantaneous switching of images between the screens without changing the position of the person's head.

Owner:PUGACH VLADIMIR +2

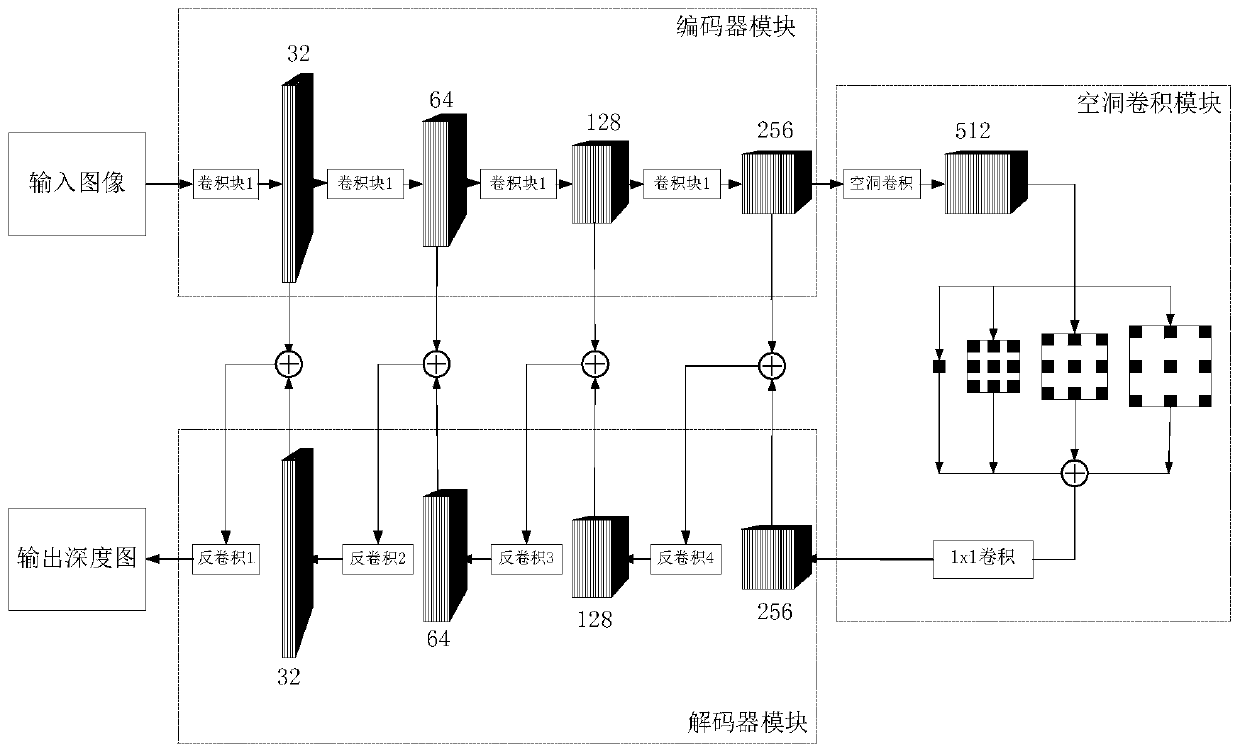

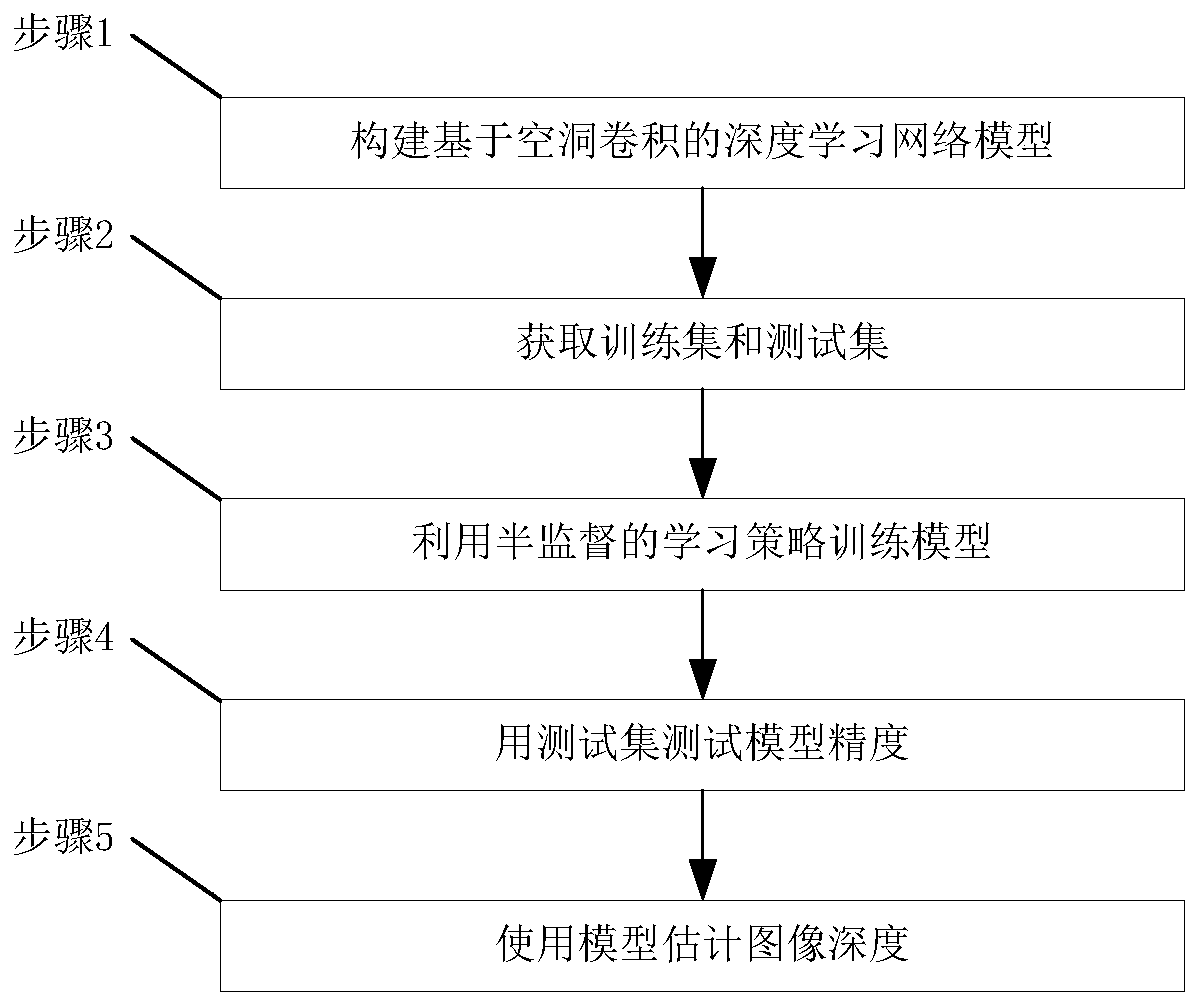

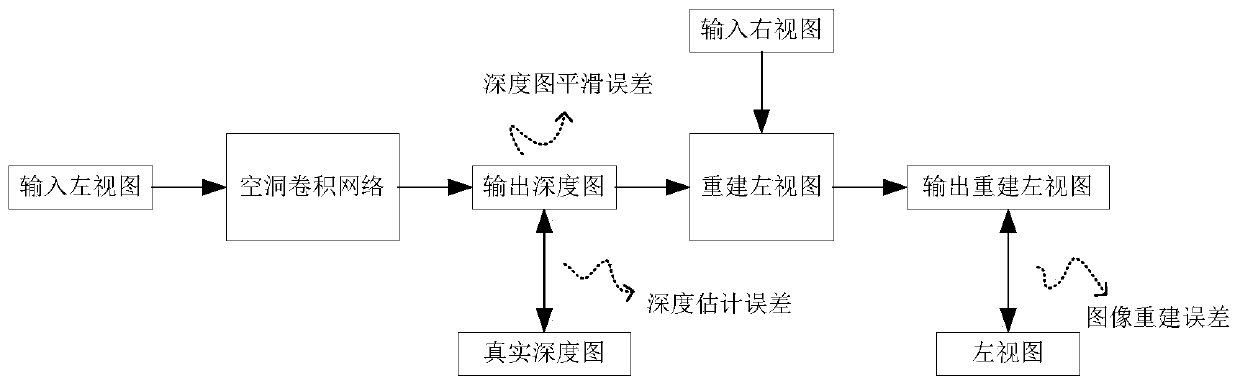

An image depth estimation system and method based on hole convolution and semi-supervised learning

Pending | CN109741383A | Reduce the amount of parameters | Fast Image Depth Estimation Method | Image analysis | Character and pattern recognition | Spatial perception | Estimation methods

The invention discloses an image depth estimation system and method based on hole (dilated) convolution and semi-supervised learning, addressing the problem of estimating scene depth from a single image. A conventional encoder is adopted, the network structure of the decoder is improved, and a hole convolution module is added between the encoder module and the decoder module. The image depth estimation method comprises the steps of obtaining a training set and a test set; training a model with a semi-supervised learning strategy; testing the precision of the model on the test set; and estimating image depth using the model. The spatial perception capability of the network is improved by using hole convolution, a semi-supervised learning strategy is adopted, and the output depth map is optimized through a depth map smoothing error. The method is characterized by a small model parameter count, high prediction precision and complete detail information, and is applied to the fields of image three-dimensional reconstruction and automatic driving.

Owner:XIDIAN UNIV
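
The "hole" or "cavity" convolution named in the depth estimation entry above is more commonly called dilated (atrous) convolution. The minimal sketch below shows the operation in one dimension for brevity, whereas the patent applies it to images. The function names are illustrative assumptions; the point is that increasing the dilation widens the receptive field without adding kernel weights.

```python
from typing import List


def receptive_field(kernel_size: int, dilation: int) -> int:
    """Number of input samples spanned by one output sample."""
    return (kernel_size - 1) * dilation + 1


def dilated_conv1d(signal: List[float], kernel: List[float],
                   dilation: int = 1) -> List[float]:
    """'Valid' 1-D cross-correlation with `dilation - 1` skipped samples between taps."""
    span = receptive_field(len(kernel), dilation)
    return [
        sum(w * signal[start + k * dilation] for k, w in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]
```

With a three-tap kernel, a dilation of 1 covers 3 input samples per output, while a dilation of 3 covers 7 using the same three weights.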

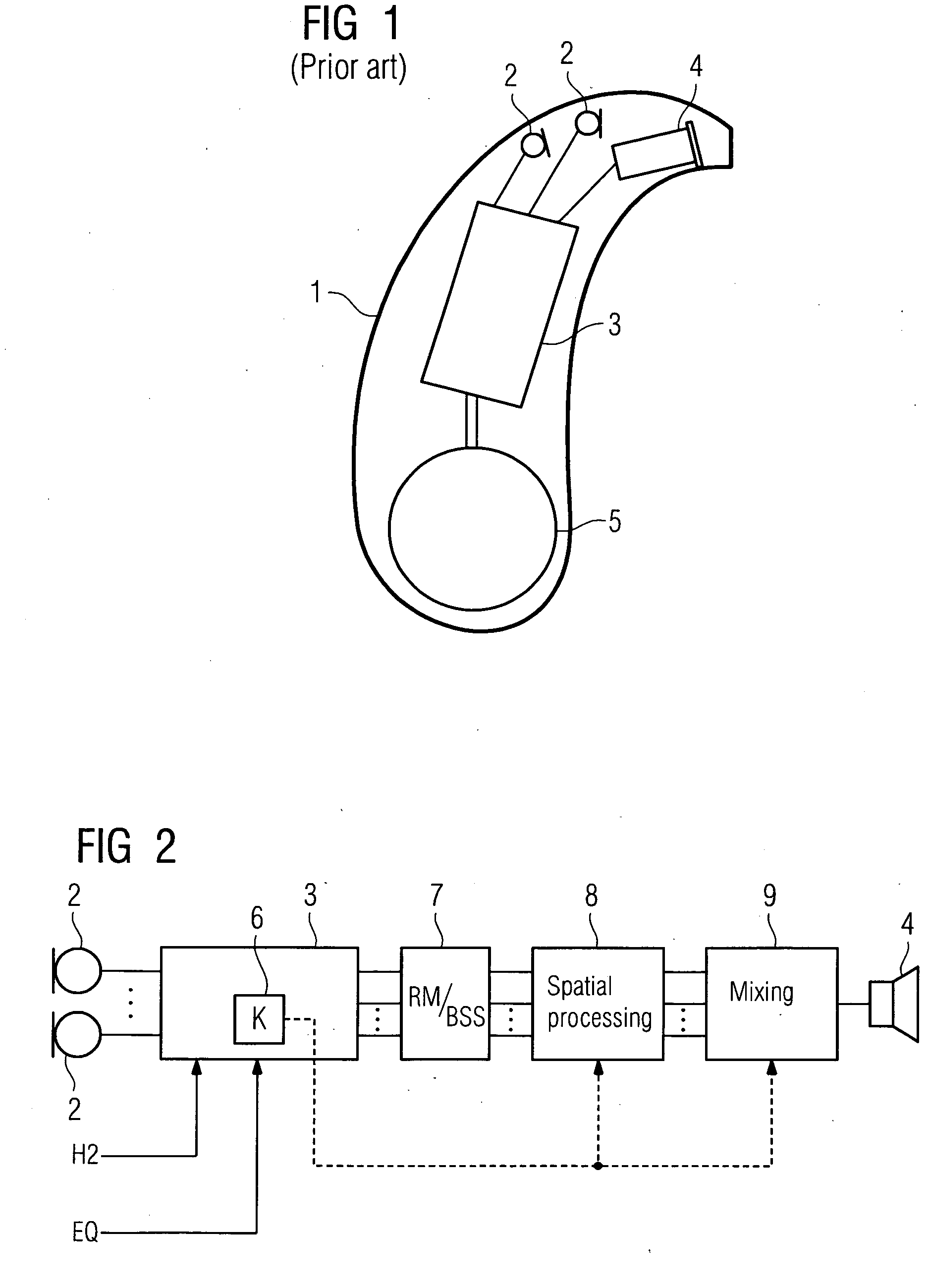

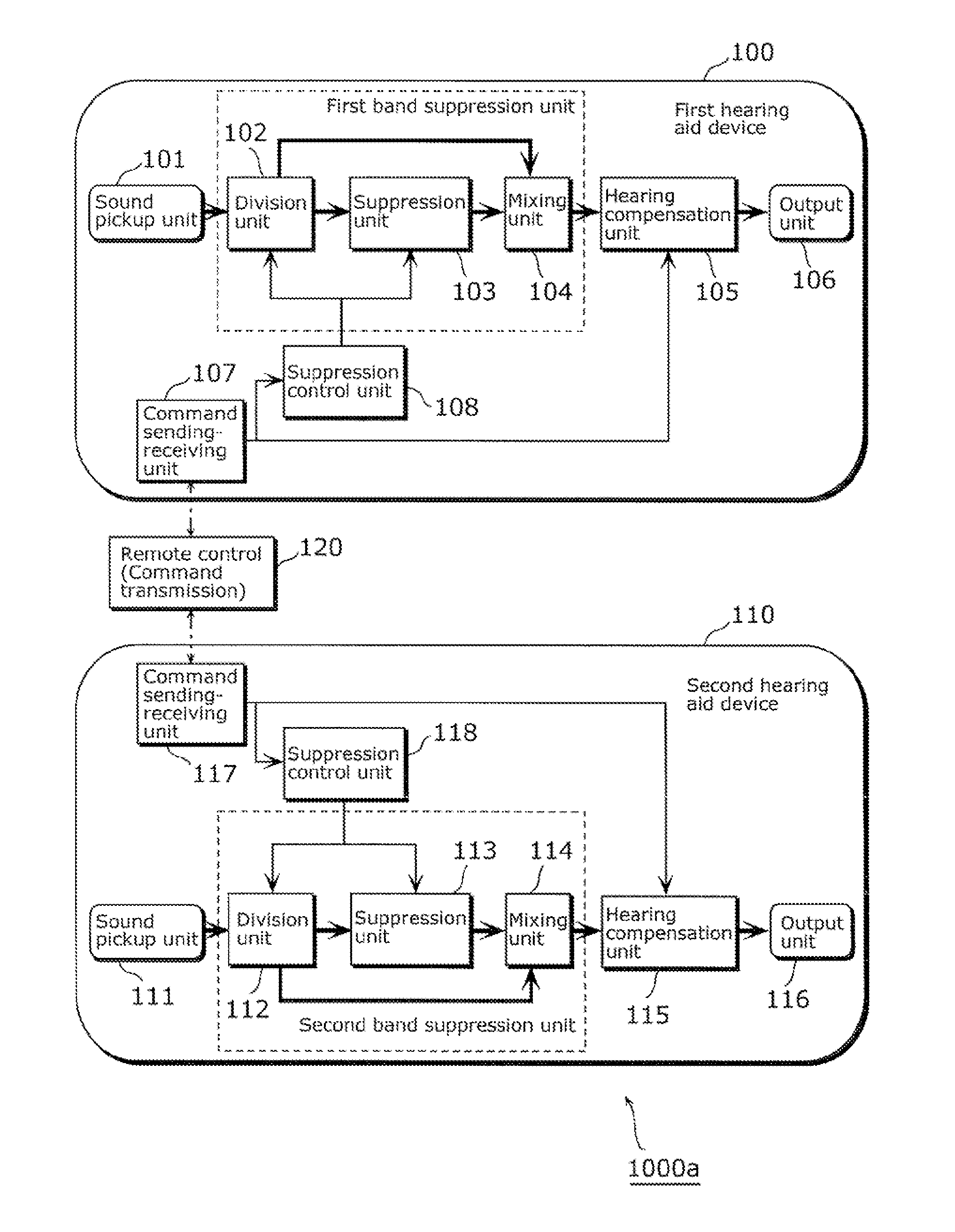

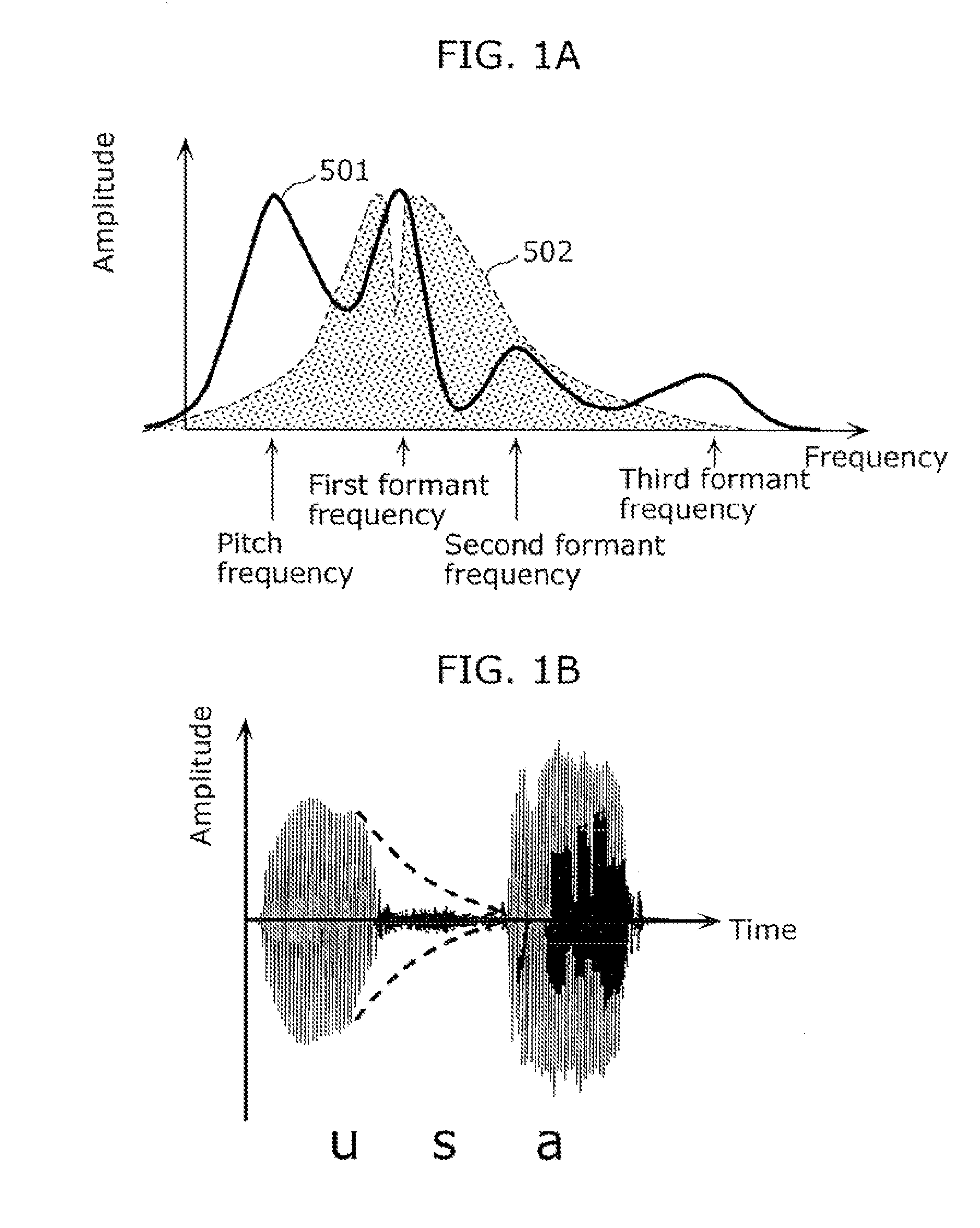

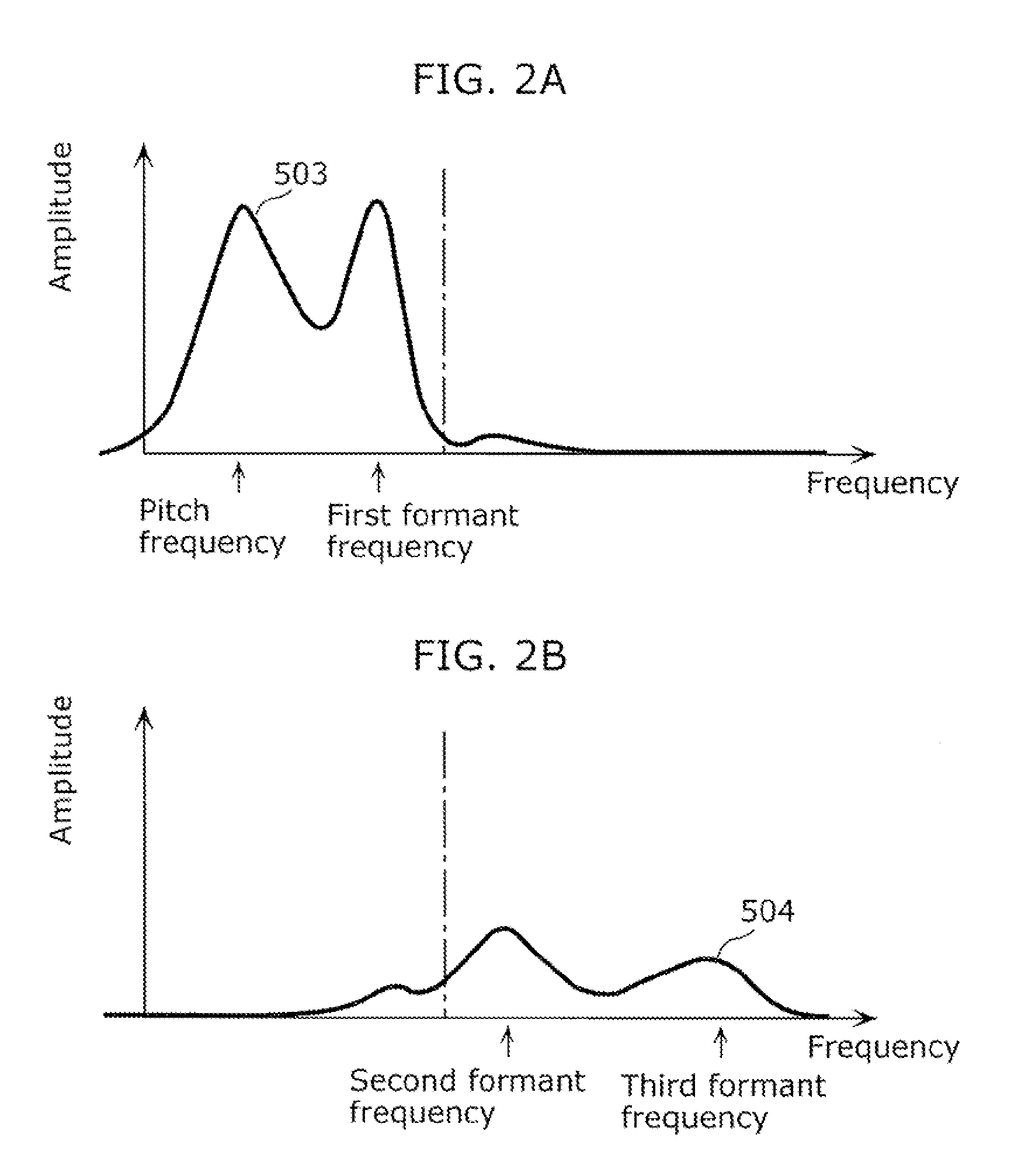

System, method, program, and integrated circuit for hearing aid

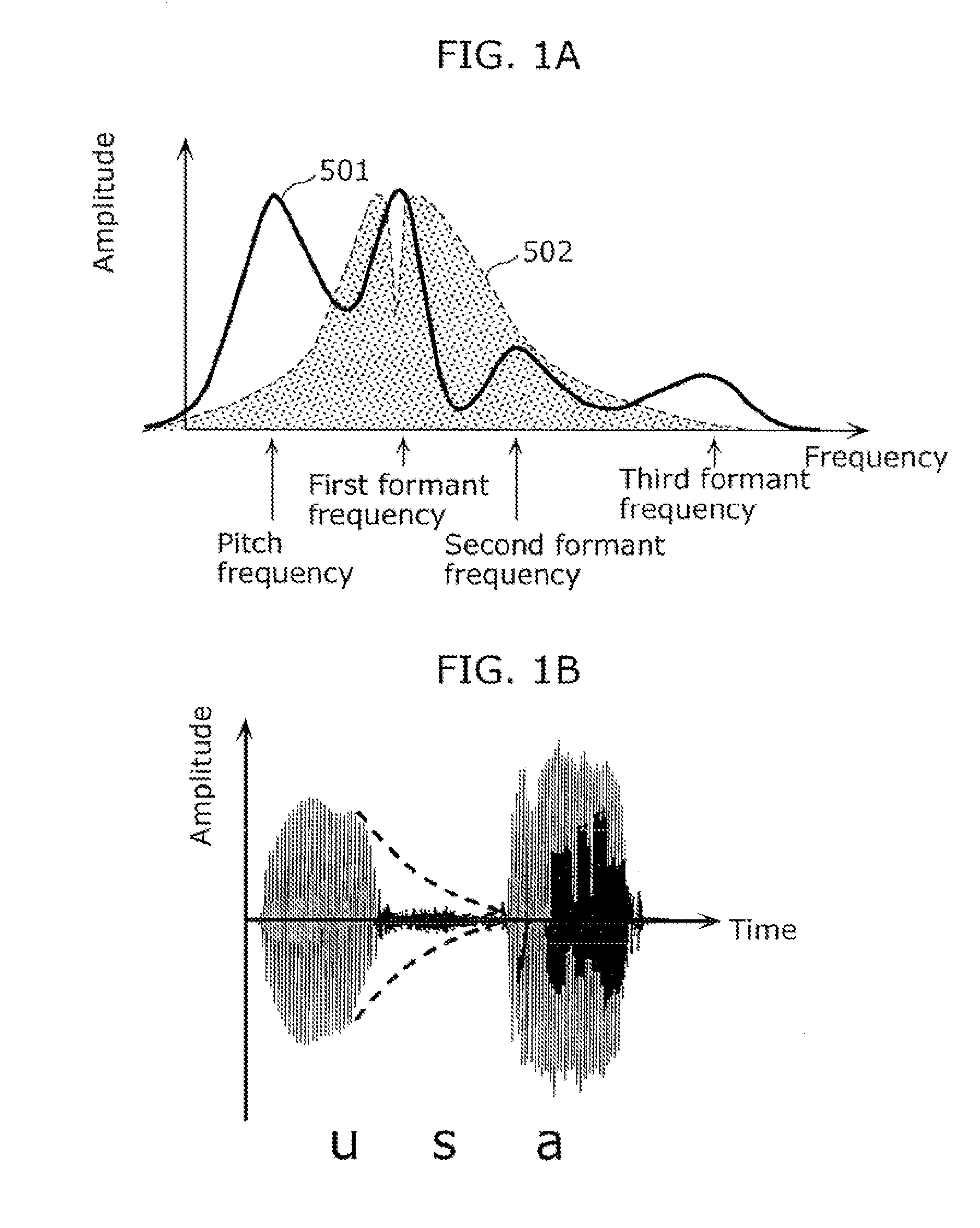

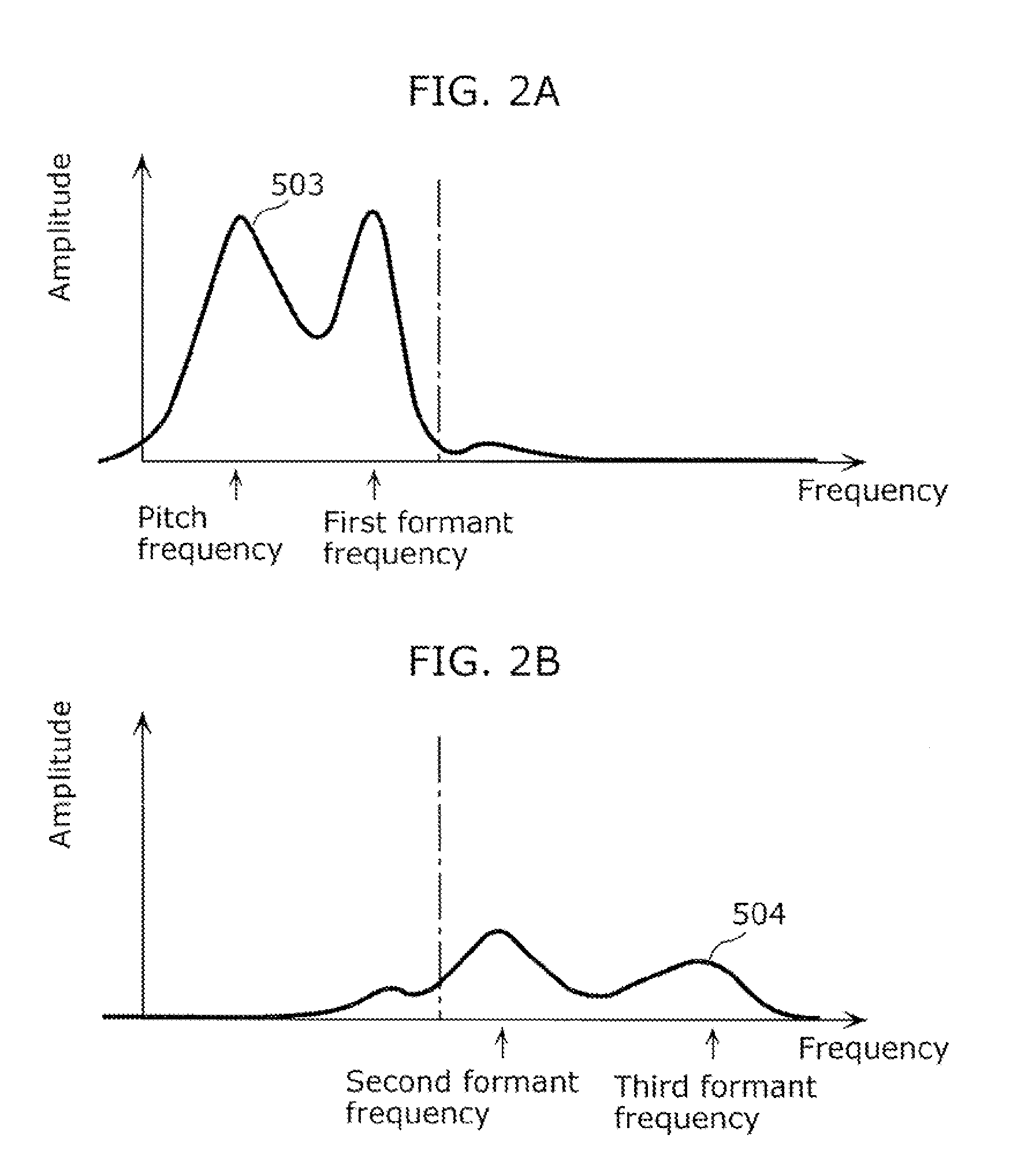

Active | US20110280424A1 | Improve clarity | Speech analysis | Sets with customised acoustic characteristics | Dichotic listening | Spatial perception

To provide a hearing aid system (1000) performing dichotic-listening binaural hearing aid processing which improves the clarity of speech and maintains the spatial perception ability. Each of first and second hearing aid devices (1100, 1200) includes a sound pickup unit (1110, 1210) and an output unit (1120, 1220) outputting a sound indicated by a suppressed acoustic signal. The hearing aid system (1000) includes: a first band suppression unit (1300) generating the suppressed acoustic signal indicating the sound outputted from the output unit (1120), by suppressing a signal in a first suppression-target band out of the acoustic signal outputted from the sound pickup unit (1110); and a second band suppression unit (1400) generating the suppressed acoustic signal indicating the sound outputted from the output unit (1220), by suppressing a signal in a second suppression-target band out of the acoustic signal outputted from the sound pickup unit (1210). The suppressed acoustic signals indicating the sounds outputted respectively from the output units (1120, 1220) include, in common, a signal in a non-voice band included in the acoustic signal.

Owner:PANASONIC CORP
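
The dichotic band suppression described in the hearing aid abstract above can be sketched as two complementary masks over the voice band, with everything outside the voice band passed to both ears unchanged. The voice band limits, the sub-band width, the alternating assignment, and the `(frequency, magnitude)` spectrum format are assumptions for illustration, not values taken from the patent.

```python
from typing import List, Tuple

Bin = Tuple[float, float]  # (centre frequency in Hz, magnitude)

VOICE_BAND_HZ = (300.0, 3400.0)  # assumed voice band limits
SUBBAND_WIDTH_HZ = 500.0         # assumed width of each alternating sub-band


def suppress(spectrum: List[Bin], ear: str, gain: float = 0.0) -> List[Bin]:
    """Attenuate the voice sub-bands that are assigned to the opposite ear."""
    if ear not in ("left", "right"):
        raise ValueError("ear must be 'left' or 'right'")
    lo, hi = VOICE_BAND_HZ
    parity = 0 if ear == "left" else 1  # left drops even sub-bands, right drops odd
    out = []
    for freq, mag in spectrum:
        if lo <= freq < hi:
            index = int((freq - lo) // SUBBAND_WIDTH_HZ)
            if index % 2 == parity:
                mag *= gain  # suppression-target band for this ear
        # Bins outside the voice band pass through to both ears unchanged.
        out.append((freq, mag))
    return out
```

Each ear receives a different half of the voice band, while the non-voice band stays common to both outputs, as the abstract describes.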

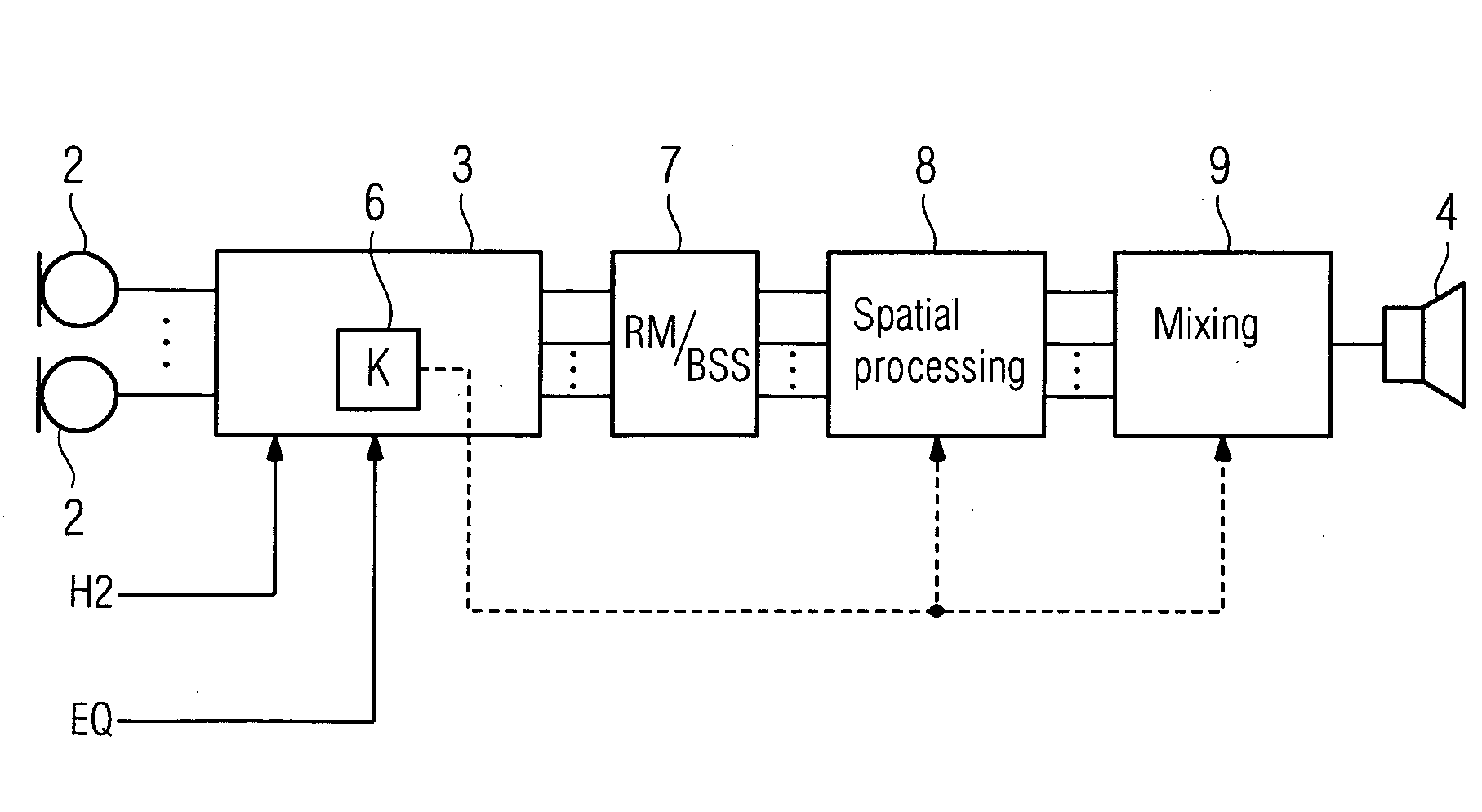

Method for improving spatial perception and corresponding hearing apparatus

Inactive | US20080205659A1 | Spatial can be restored | Stereophonic systems | Deaf-aid sets | Spatial perception | Hearing apparatus

In order to improve spatial perception of acoustic signals, an input signal is received with the aid of a binaural hearing apparatus and optionally analyzed. At least one variable that influences spatial perception of the hearing apparatus's binaural output signal, which is based on the input signal, is then changed. Thus, for example, the distance at which or the direction from which a source is perceived can be varied automatically for corresponding input signals with the aid of a classifier or directional microphone, as a result of which improved spatial perception can be achieved.

Owner:SIEMENS AUDIOLOGISCHE TECHN

System, method, program, and integrated circuit for hearing aid

Active | US8548180B2 | Improve clarity | Speech analysis | Sets with customised acoustic characteristics | Dichotic listening | Spatial perception

To provide a hearing aid system (1000) performing dichotic-listening binaural hearing aid processing which improves the clarity of speech and maintains the spatial perception ability. Each of first and second hearing aid devices (1100, 1200) includes a sound pickup unit (1110, 1210) and an output unit (1120, 1220) outputting a sound indicated by a suppressed acoustic signal. The hearing aid system (1000) includes: a first band suppression unit (1300) generating the suppressed acoustic signal indicating the sound outputted from the output unit (1120), by suppressing a signal in a first suppression-target band out of the acoustic signal outputted from the sound pickup unit (1110); and a second band suppression unit (1400) generating the suppressed acoustic signal indicating the sound outputted from the output unit (1220), by suppressing a signal in a second suppression-target band out of the acoustic signal outputted from the sound pickup unit (1210). The suppressed acoustic signals indicating the sounds outputted respectively from the output units (1120, 1220) include, in common, a signal in a non-voice band included in the acoustic signal.

Owner:PANASONIC CORP

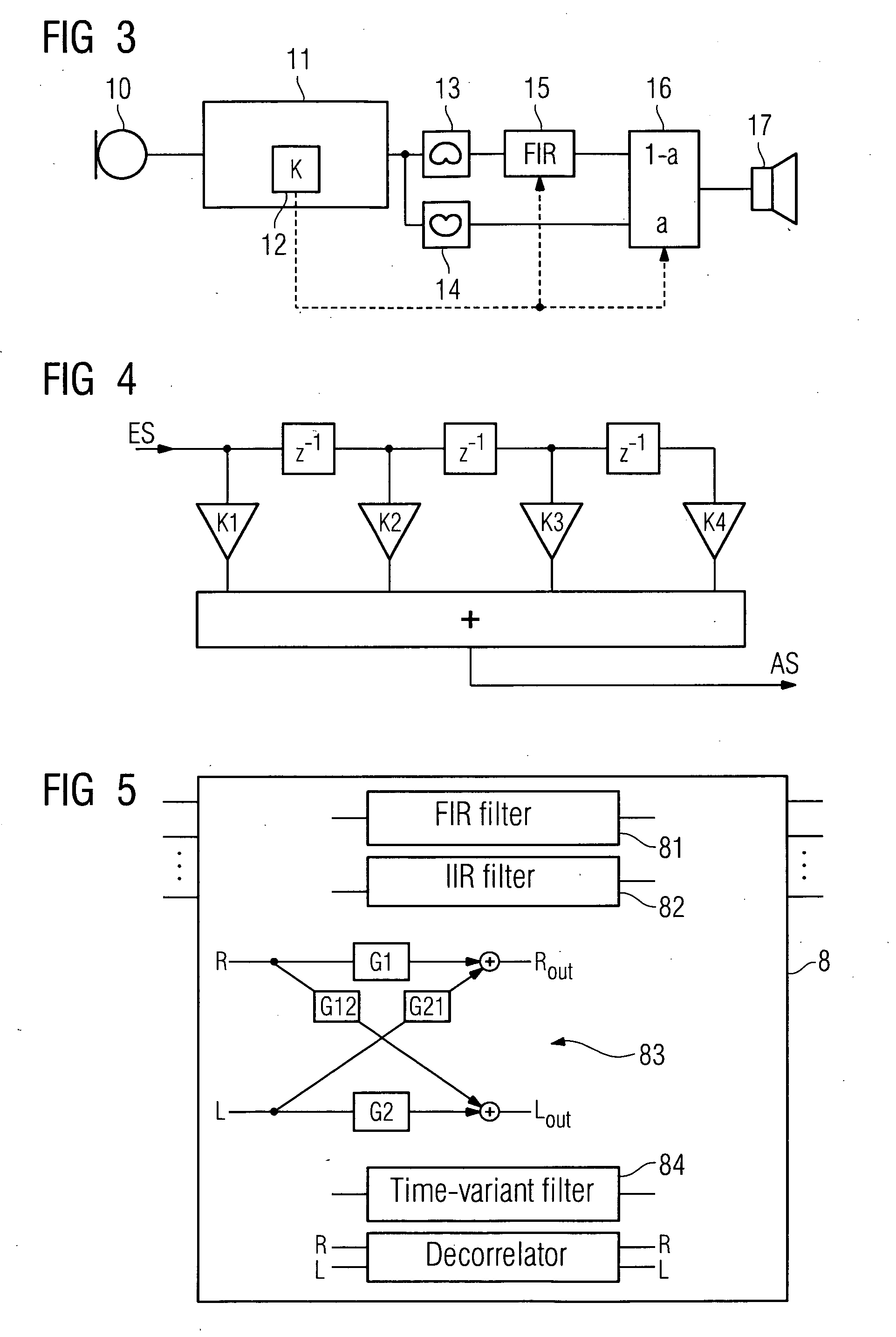

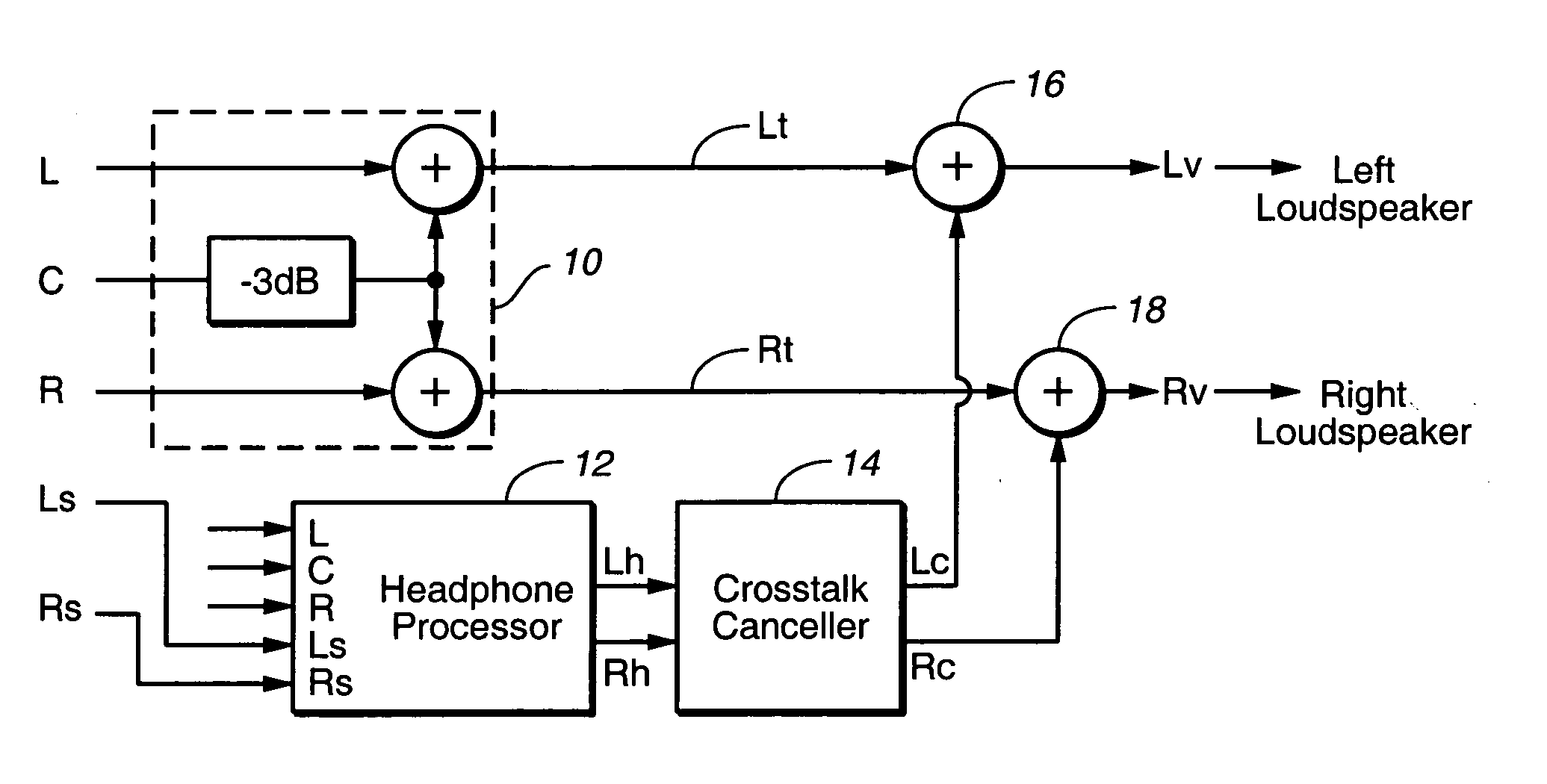

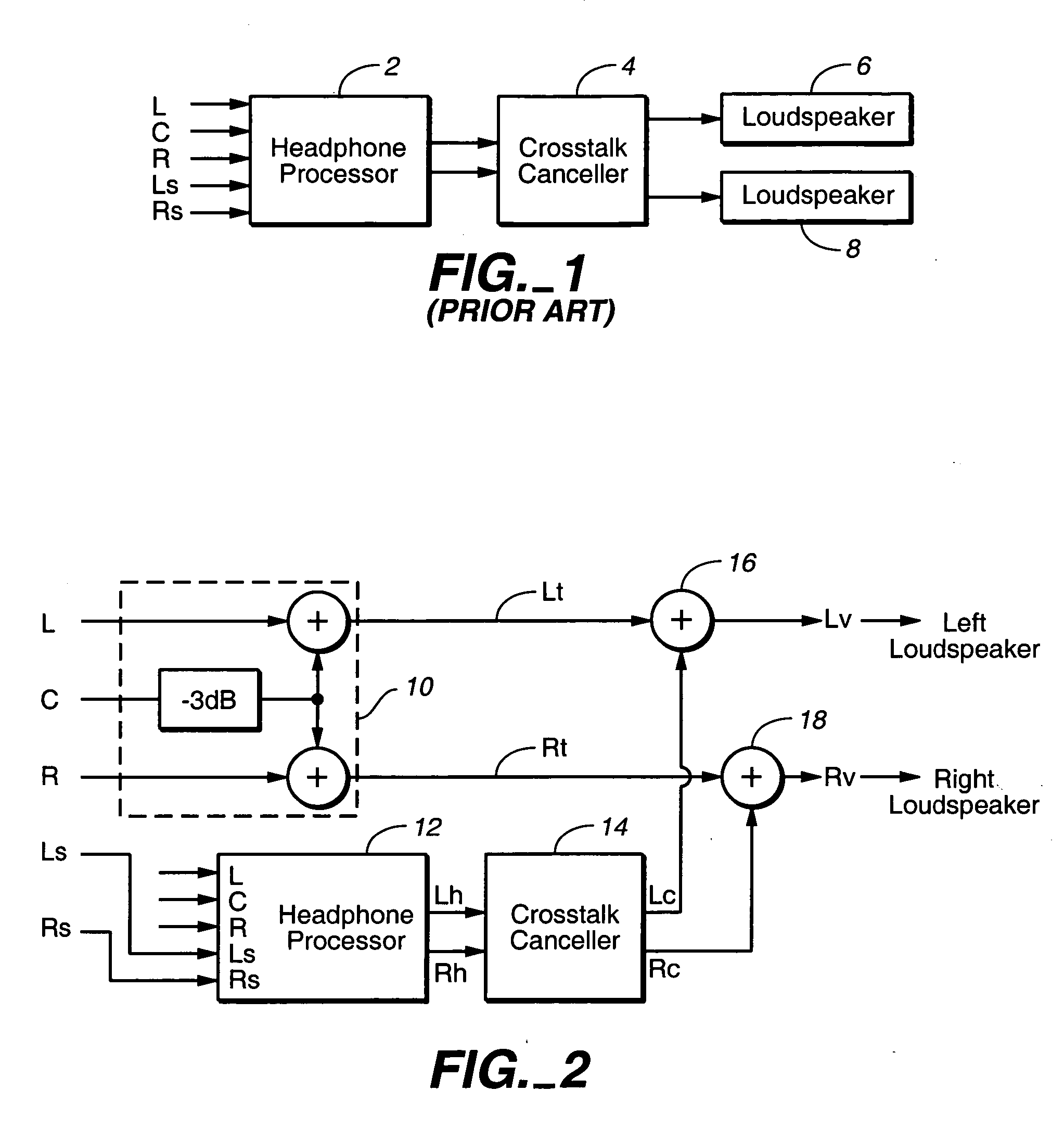

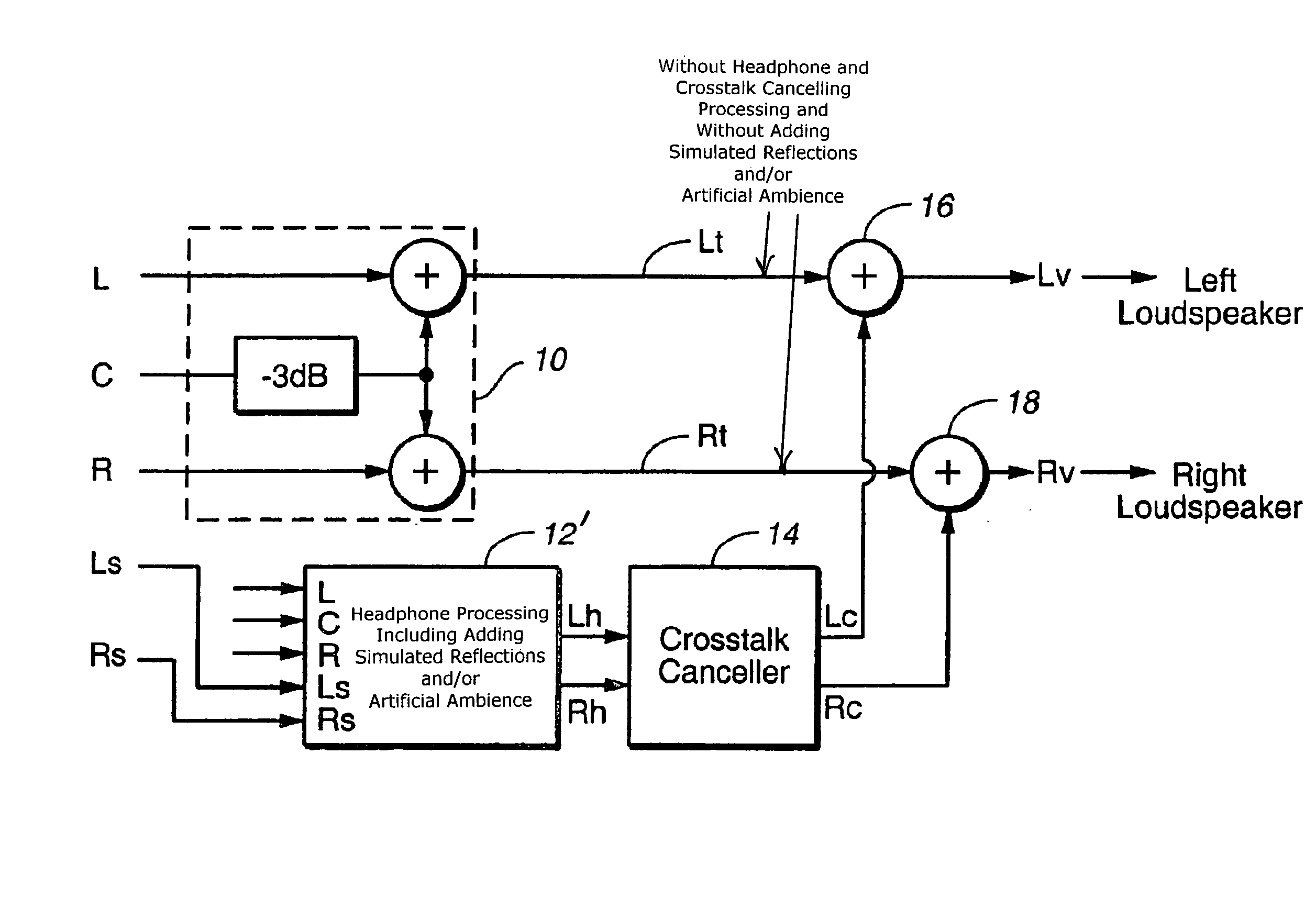

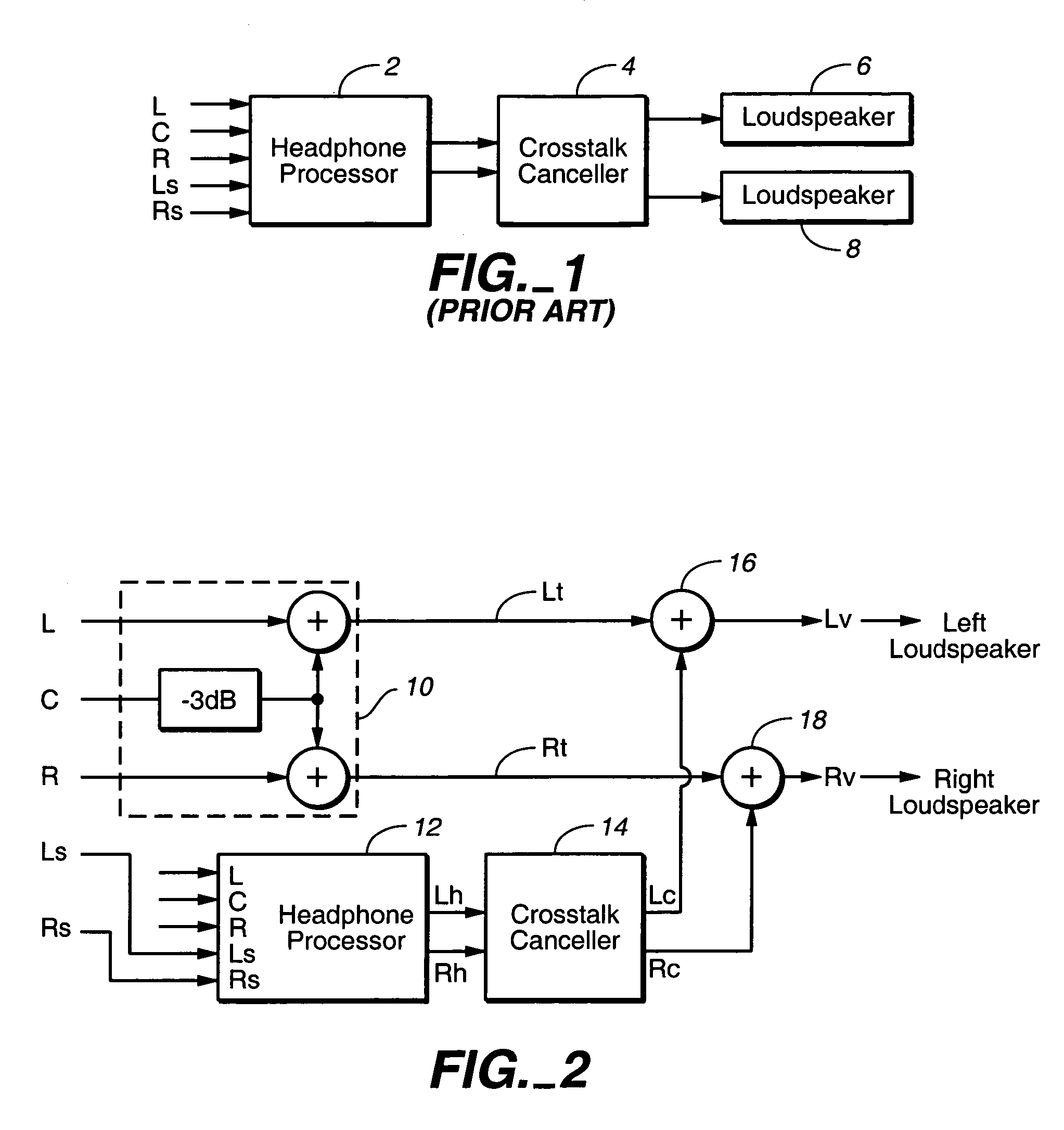

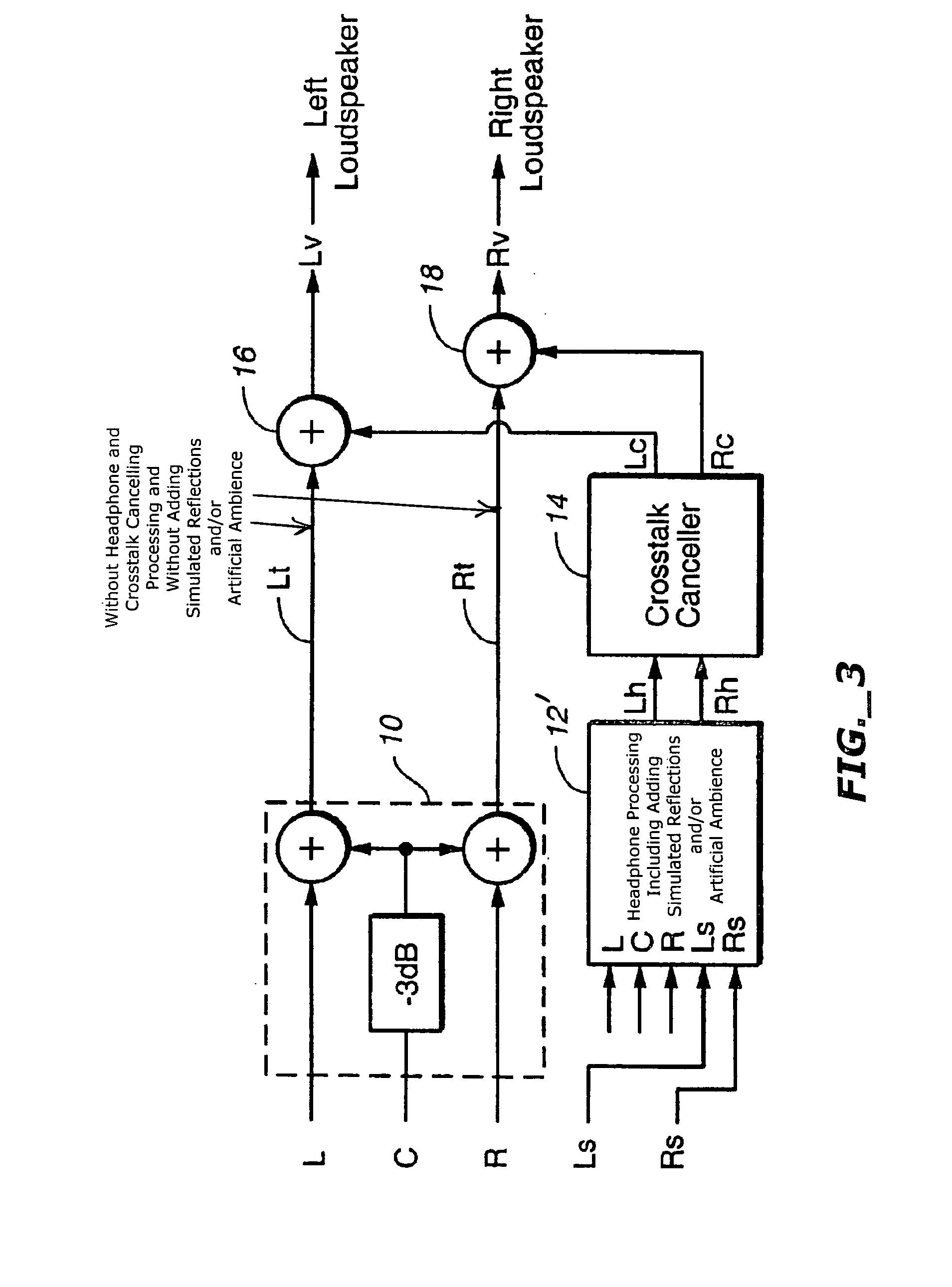

Method for improving spatial perception in virtual surround

Inactive | US20050129249A1 | Avoid damage | Promote reproduction | Pseudo-stereo systems | Loudspeaker spatial/constructional arrangements | Spatial perception | Vocal tract

A method for improving the spatial perception of multiple sound channels when reproduced by two loudspeakers, generally front-located with respect to listeners, each channel representing a direction, applies some of the channels, such as sound channels representing directions other than front directions, to the loudspeakers with headphone and crosstalk cancelling processing, and applies the other ones of the sound channels, such as sound channels representing front directions to the loudspeakers without headphone and crosstalk cancelling processing. The headphone processing includes applying directional HRTFs to channels applied to the loudspeakers with headphone and crosstalk cancelling processing and may also include adding simulated reflections and / or artificial ambience to channels applied to the loudspeakers with headphone and crosstalk cancelling processing.

Owner:DOLBY LAB LICENSING CORP

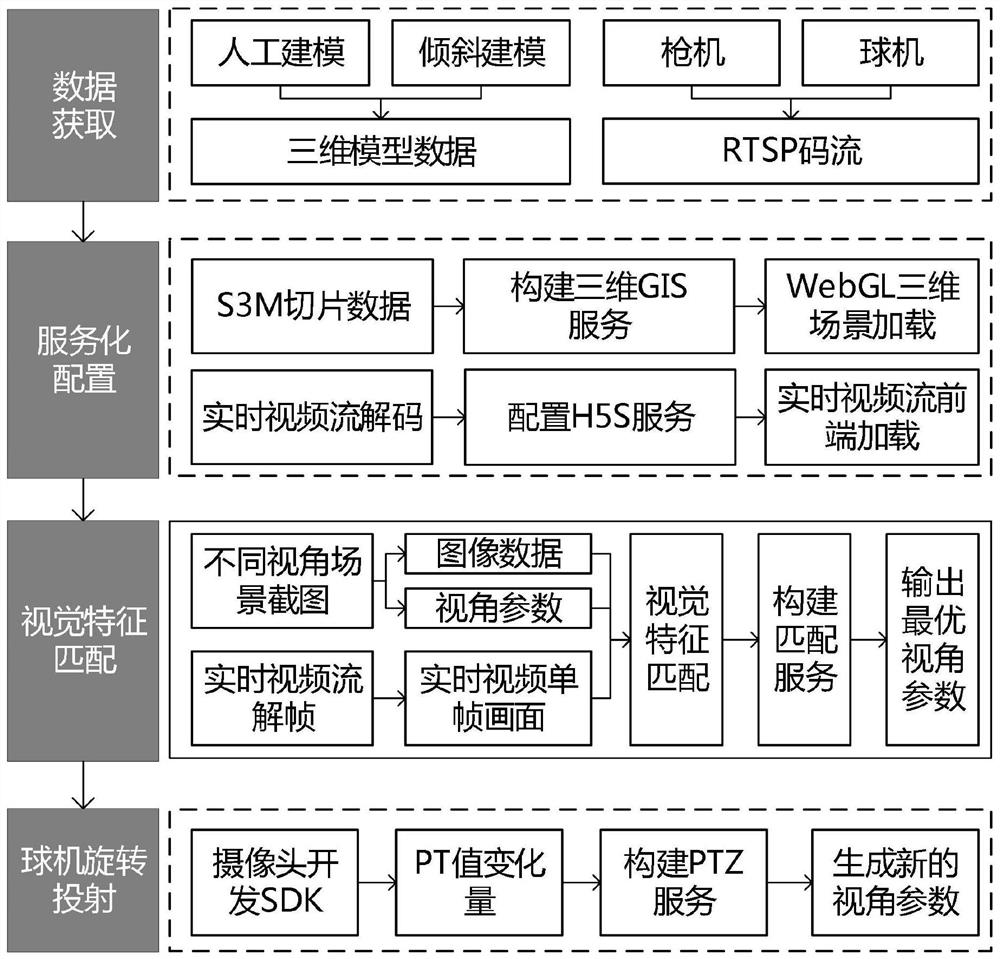

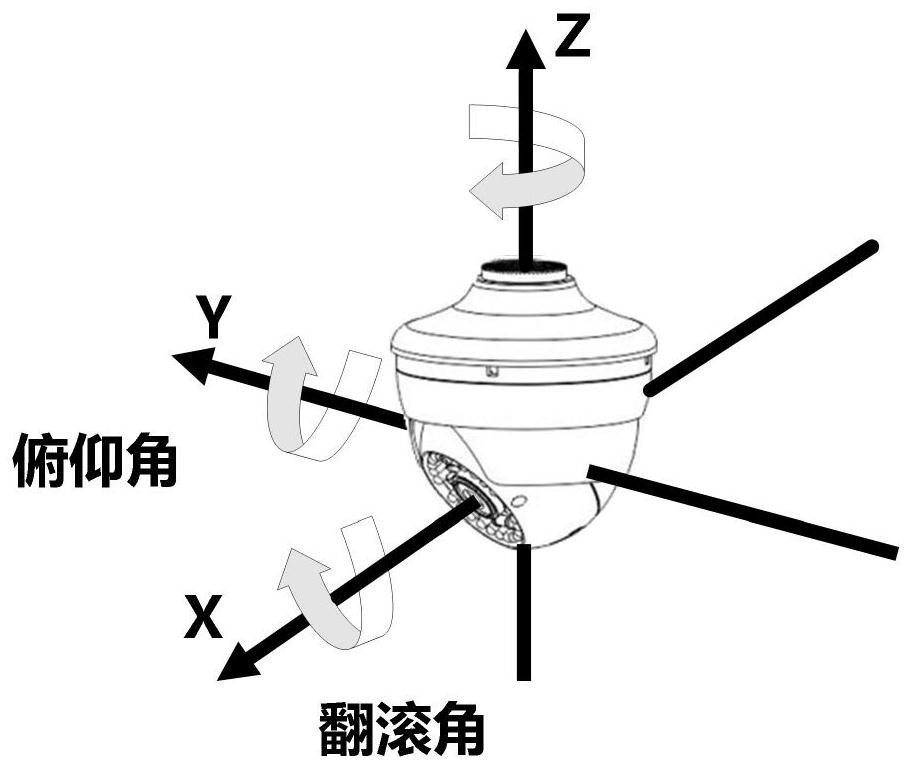

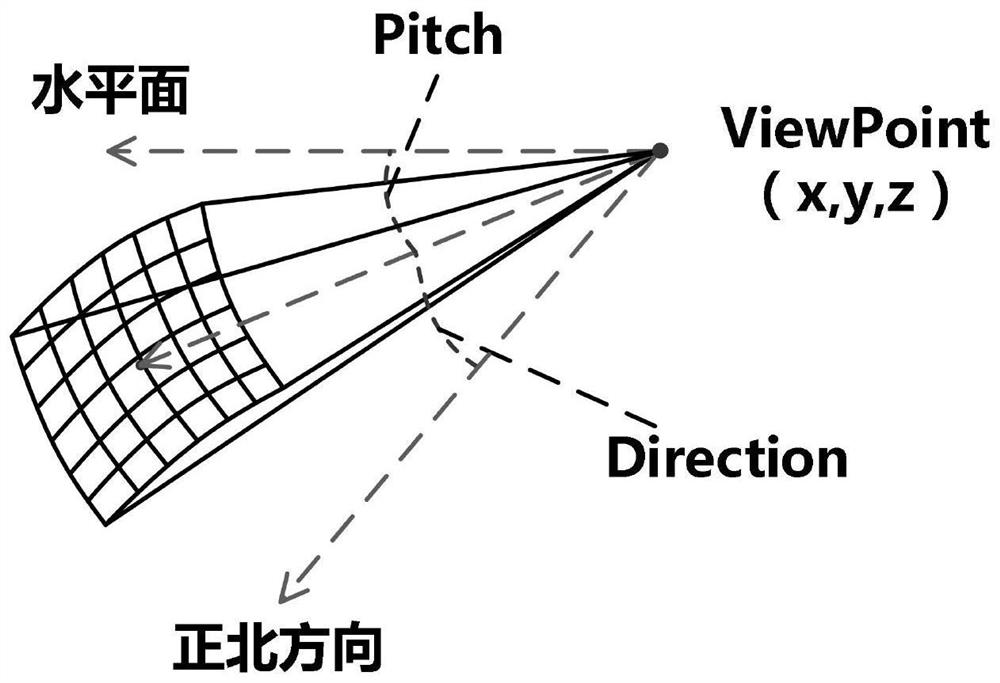

Real-time monitoring video and three-dimensional scene fusion method based on three-dimensional GIS

Pending | CN112053446A | Overcoming Application Limitations | Solve the disadvantages of fragmentation | Television system details | Image analysis | Video monitoring | Spatial perception

The invention discloses a real-time monitoring video and three-dimensional scene fusion method based on a three-dimensional GIS (Geographic Information System), which belongs to the technical field of three-dimensional GIS, and comprises the following steps: S1, inputting model data, checking texture and triangular patch number of an artificial modeling model by using hypergraph iDesktop software, removing repeated points, converting a format to generate a model data set, and storing the model data set in a database; performing root node combination and texture compression on the original OSGB format data by the inclination model; and S2, converting the model data set and the inclined OSGB slice into a three-dimensional slice cache in an S3M format. The live-action fusion method disclosed by the invention is oriented to the fields of public security and smart cities, avoids the application limitation of the traditional two-dimensional map and monitoring video, solves the disadvantage that the monitoring video picture is split, enhances the spatial perception of video monitoring, improves the display performance of multi-path real-time video integration in a three-dimensional scene to a certain extent, and improves the real-time performance of the three-dimensional scene. The method can be widely applied to the fields of public security and smart cities with strong videos and strong GIS services.

Owner:NANJING GUOTU INFORMATION IND

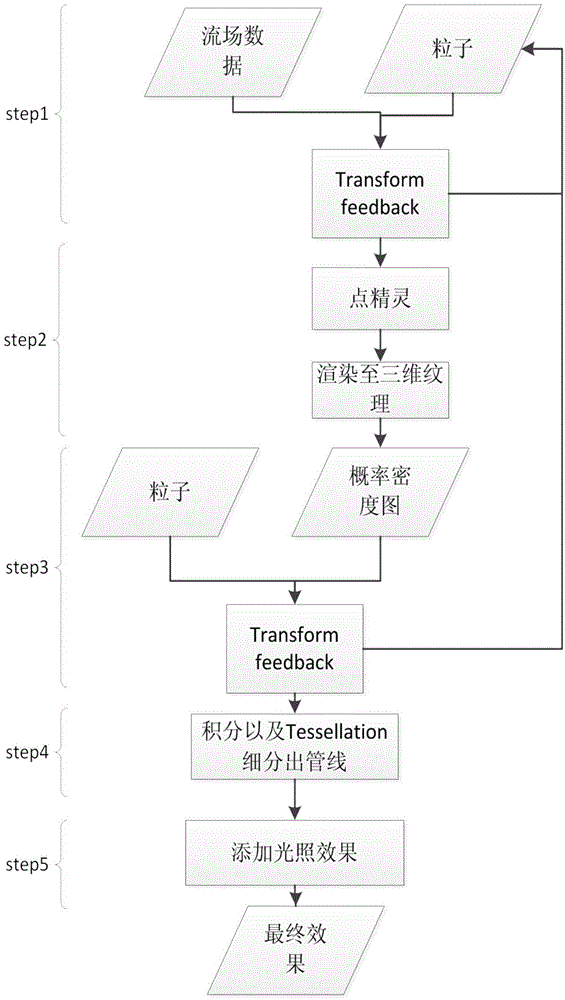

GPU-based interactive ocean three-dimensional flow field dynamic visual algorithm

Active | CN104867186A | Real-time interactive change length | Real-time interaction to change the speed of movement | 3D-image rendering | 3D modelling | Computational science | Regular grid

The invention belongs to the field of scientific visualization, and particularly relates to a GPU-based interactive ocean three-dimensional flow field dynamic visual algorithm. The GPU-based interactive ocean three-dimensional flow field dynamic visual algorithm is based on the mode data of a regular grid. A uniform seed point placement algorithm in a three-dimensional flow field space is adopted. Furthermore a density ball which is designed by means of a Gauss function is mapped to multiple patterns for realizing uniform distribution of the seed points. Finally a streamline is generated by means of GPU accelerated rendering technology. Then the streamline is expanded to a pipe line by means of Tessellation technology, thereby realizing dynamic visual drawing for the ocean three-dimensional flow field. For improving spatial perception for the three-dimensional streamline, a Phong / Blinn illumination model is used for performing illumination processing on the streamline. Furthermore the GPU-based interactive ocean three-dimensional flow field dynamic visual algorithm is realized based on the GPU and has higher streamline generating efficiency and higher control efficiency.

Owner:OCEAN UNIV OF CHINA

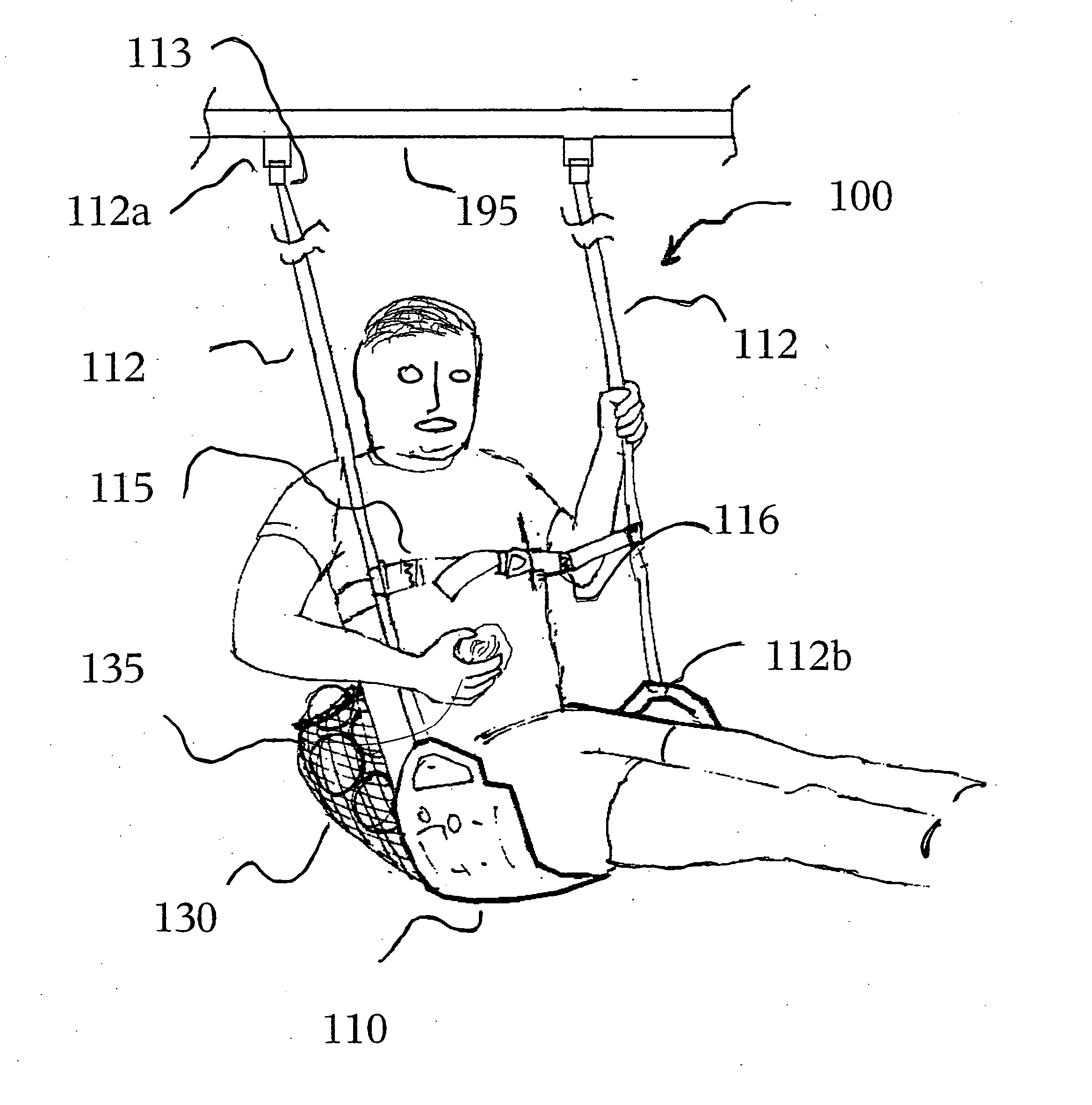

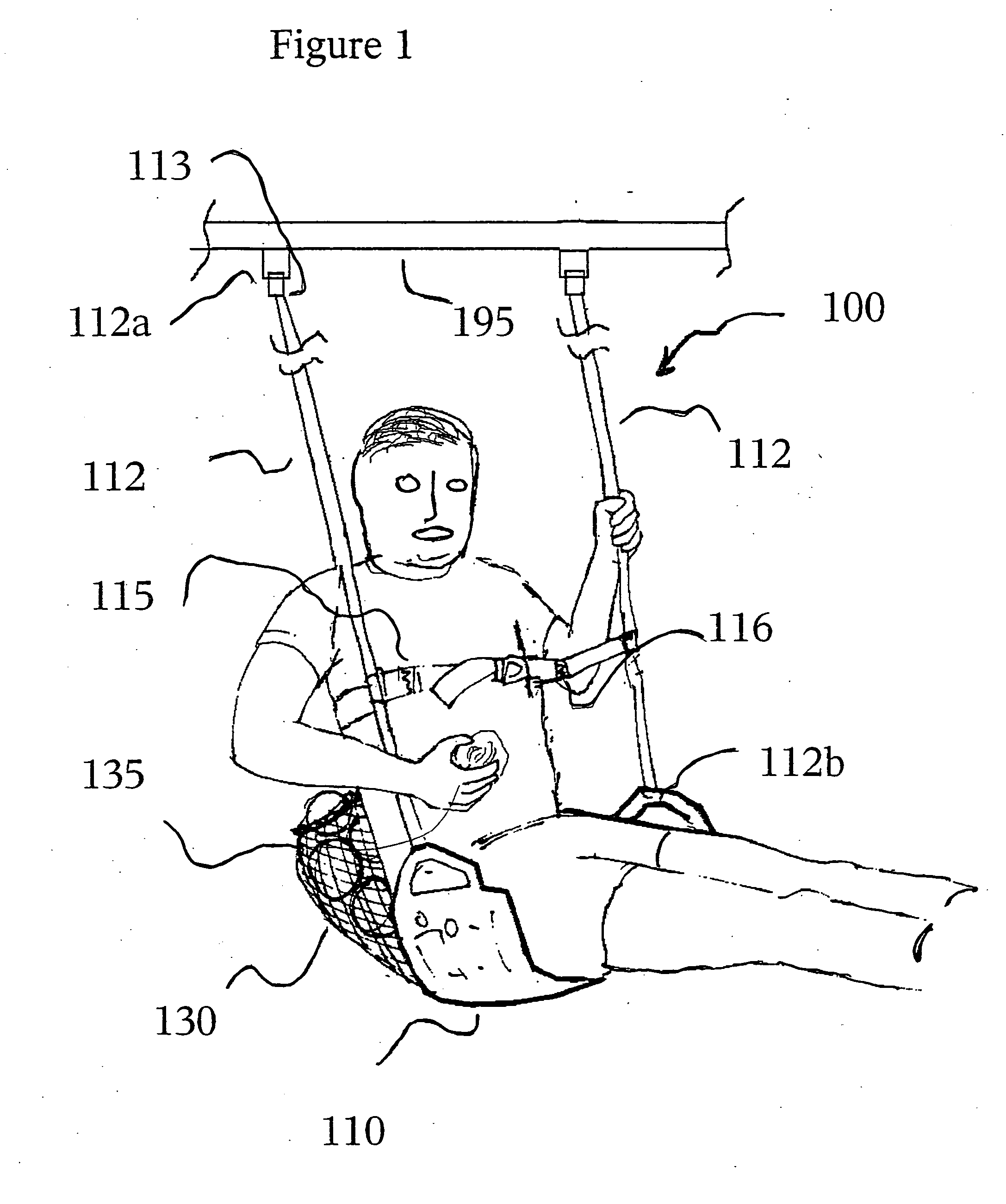

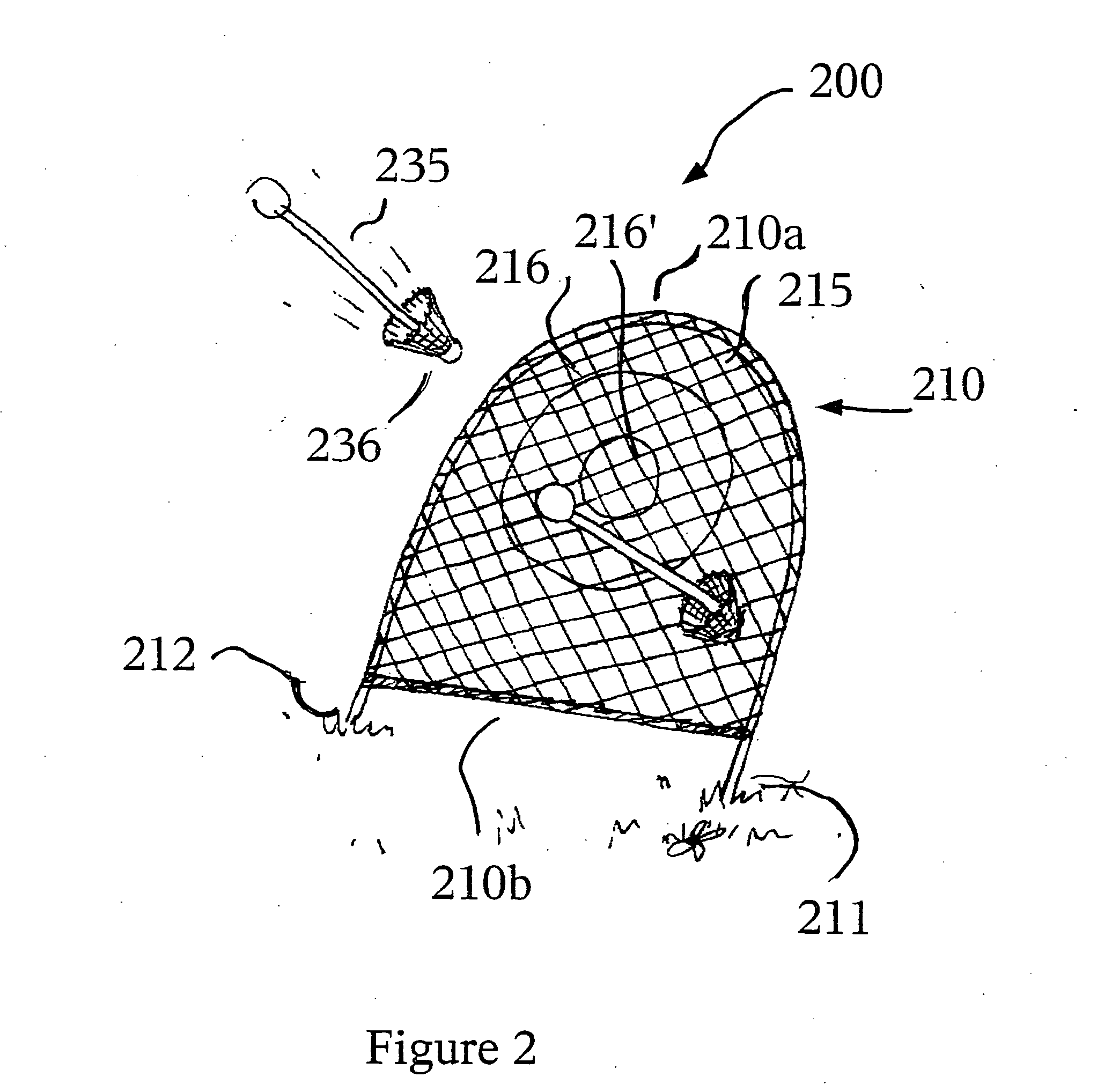

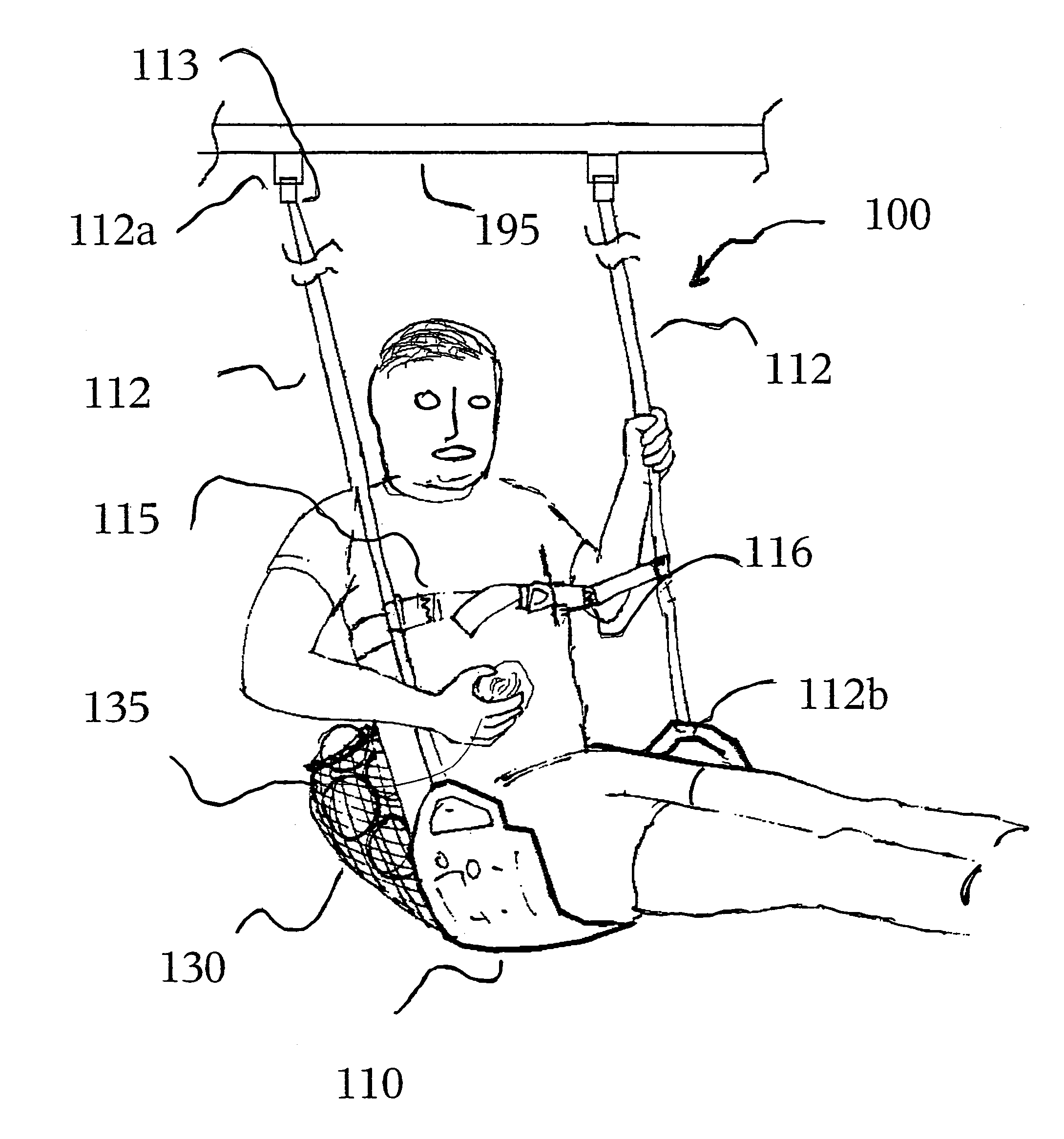

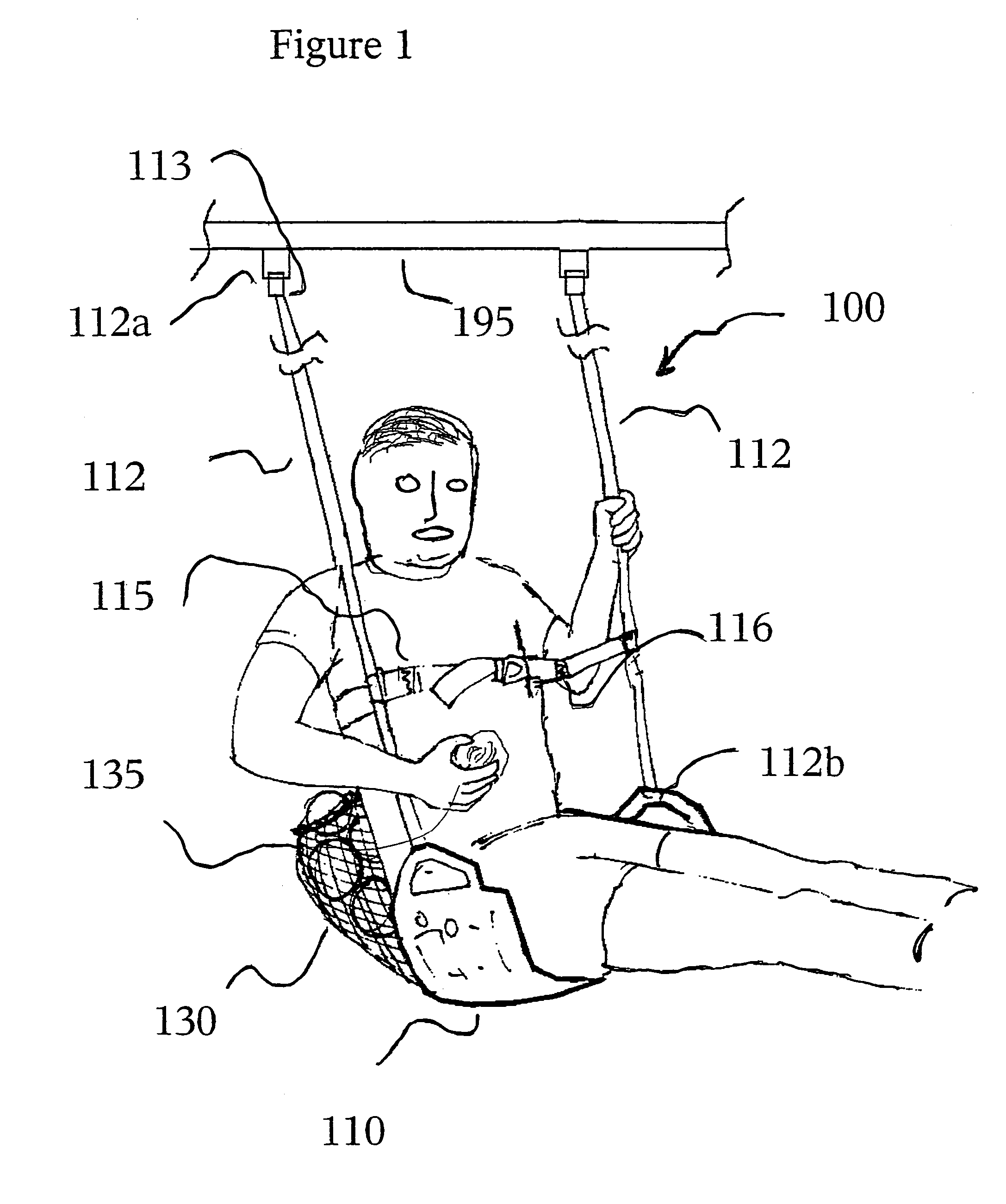

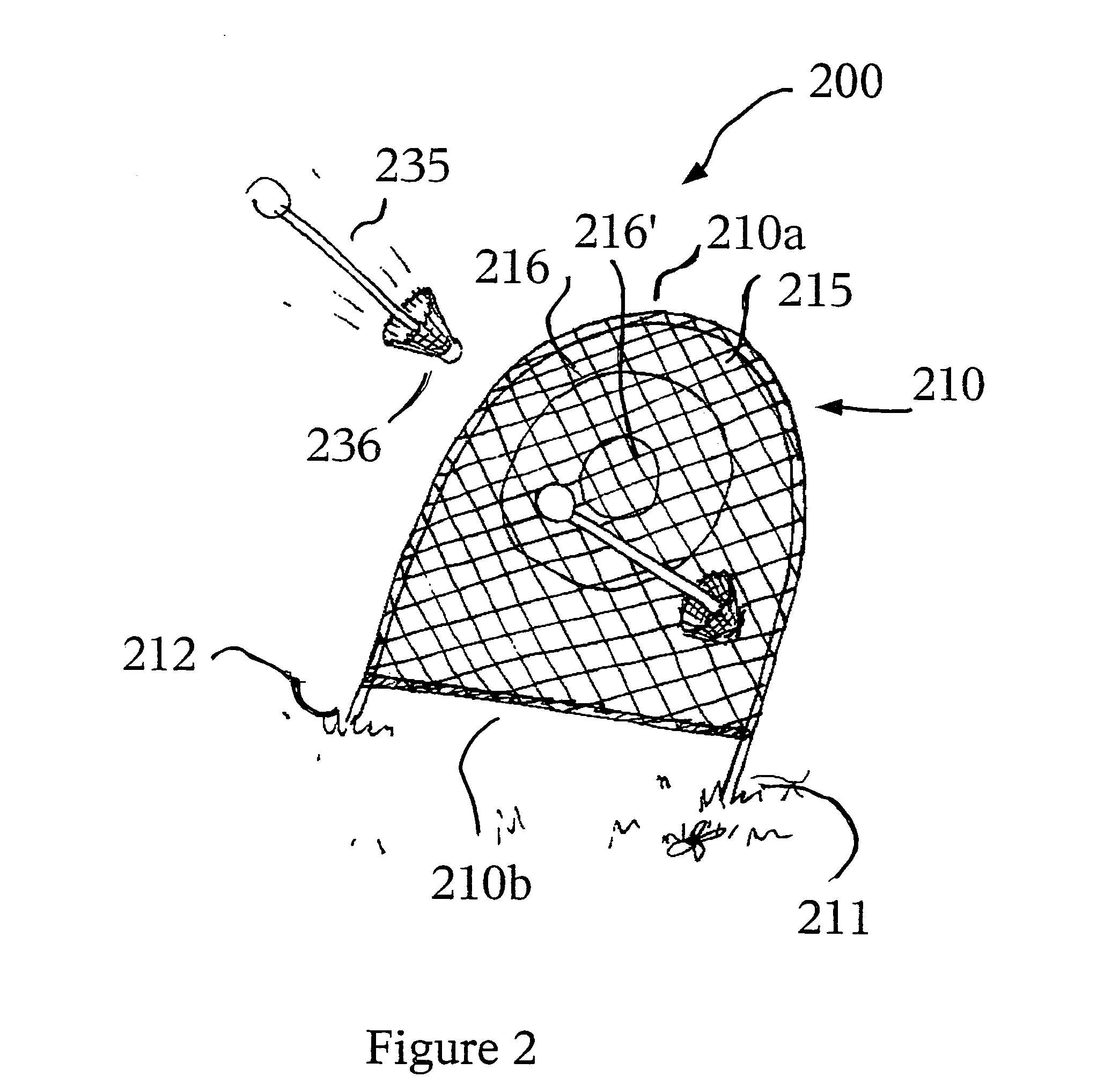

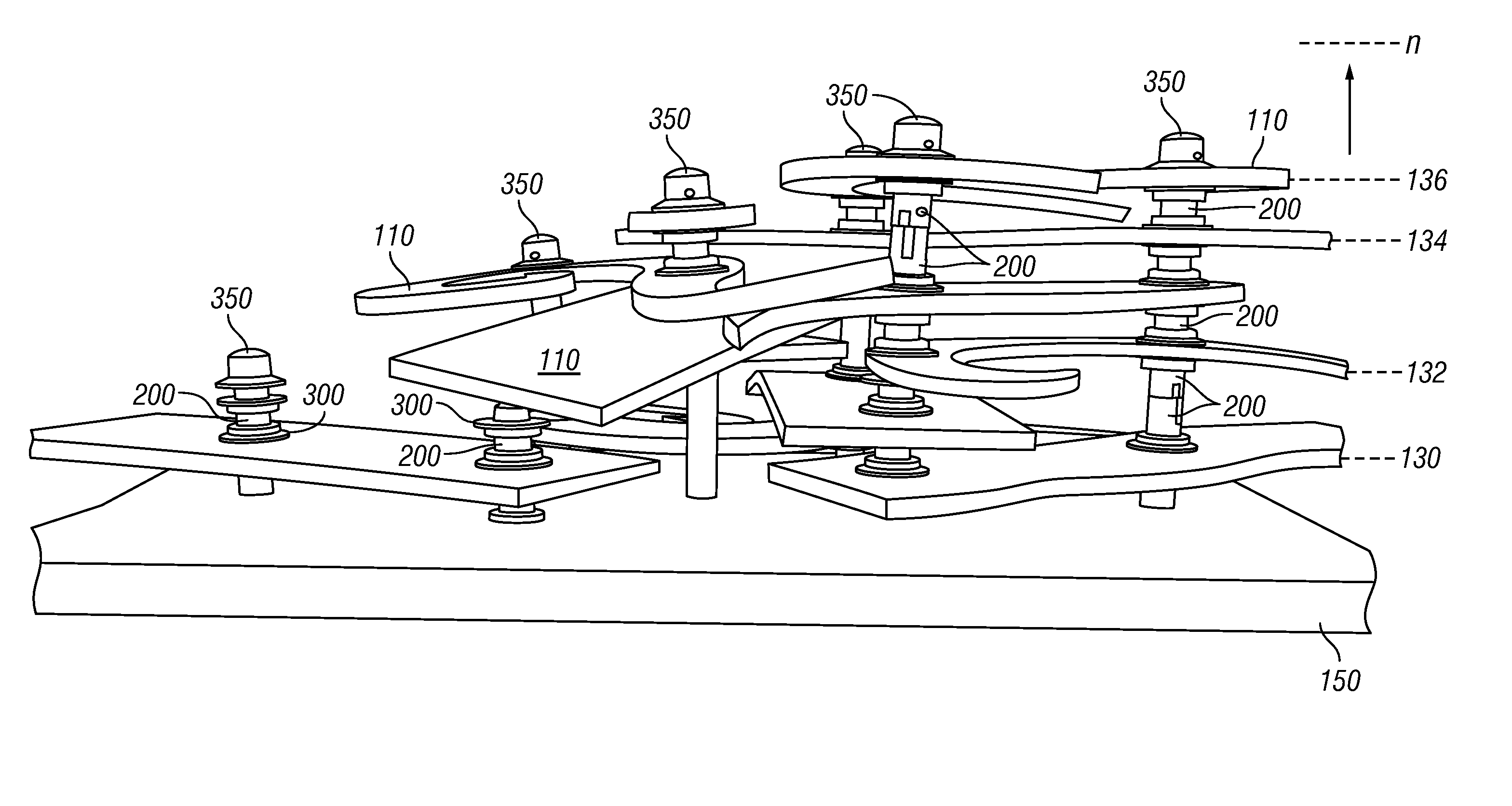

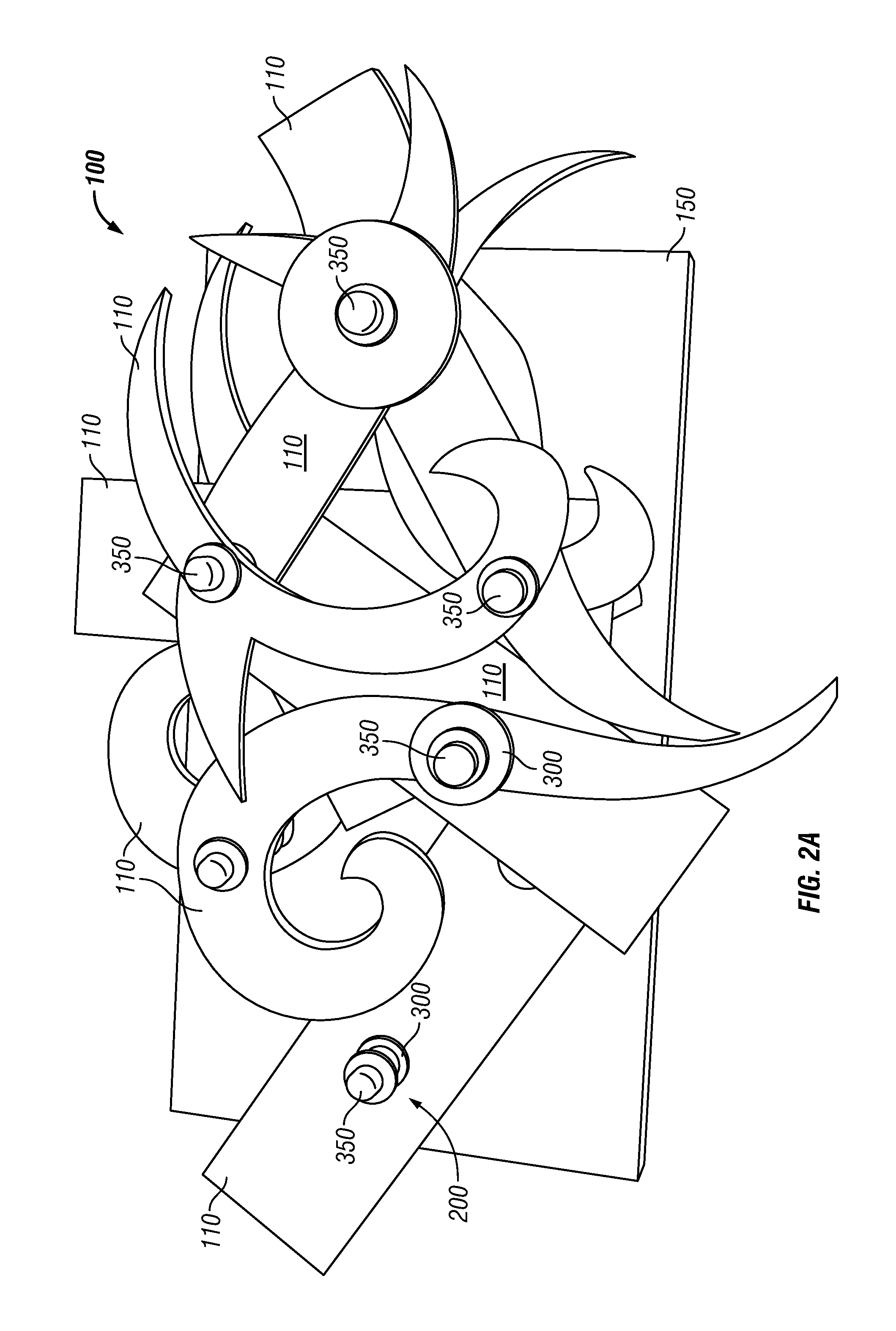

Play swing systems and methods of play

Active | US20050049055A1 | Tendency increase | Encourage use | Gymnastic climbing | Indoor games | Reflex | Spatial perception

A play swing system includes a safety harness attached to each seat, as well as other features for interactive and competitive throwing and tossing games. The safety harness may incorporate various game features, and thus encourage use in activities that require at least one free hand, or otherwise increase the risk of slippage and falling from the play swing seat. Various embodiments accommodate as well as challenge the spatial perception, dexterity and reflexes of players of different ages. For example, younger players might compete by throwing objects at a fixed target mounted on the ground. In other embodiments, the target is moving in synchronization with the oscillatory motion of the adjacent player's swing by a physical coupling or attachment. Interactive play is encouraged at the higher skill levels by configuring the targets associated with adjacent seats to face each other. In this embodiment, the players oscillate in opposite directions so that they are closest to the target when the relative velocity is highest. The objects of the associated games can be building a higher score, as well as soaking the other player(s) with water supplied by an external source and actuated by instantaneous or accumulated contact of a throwing object with a target.

Owner:PUBLICOVER MARK W +2

Method for improving spatial perception in virtual surround

Inactive | US8155323B2 | Avoid damage | Promote reproduction | Pseudo-stereo systems | Loudspeaker spatial/constructional arrangements | Spatial perception | Vocal tract

A method for improving the spatial perception of multiple sound channels when reproduced by two loudspeakers, generally front-located with respect to listeners, each channel representing a direction, applies some of the channels, such as sound channels representing directions other than front directions, to the loudspeakers with headphone and crosstalk cancelling processing, and applies the other ones of the sound channels, such as sound channels representing front directions to the loudspeakers without headphone and crosstalk cancelling processing. The headphone processing includes applying directional HRTFs to channels applied to the loudspeakers with headphone and crosstalk cancelling processing and may also include adding simulated reflections and / or artificial ambience to channels applied to the loudspeakers with headphone and crosstalk cancelling processing.

Owner:DOLBY LAB LICENSING CORP

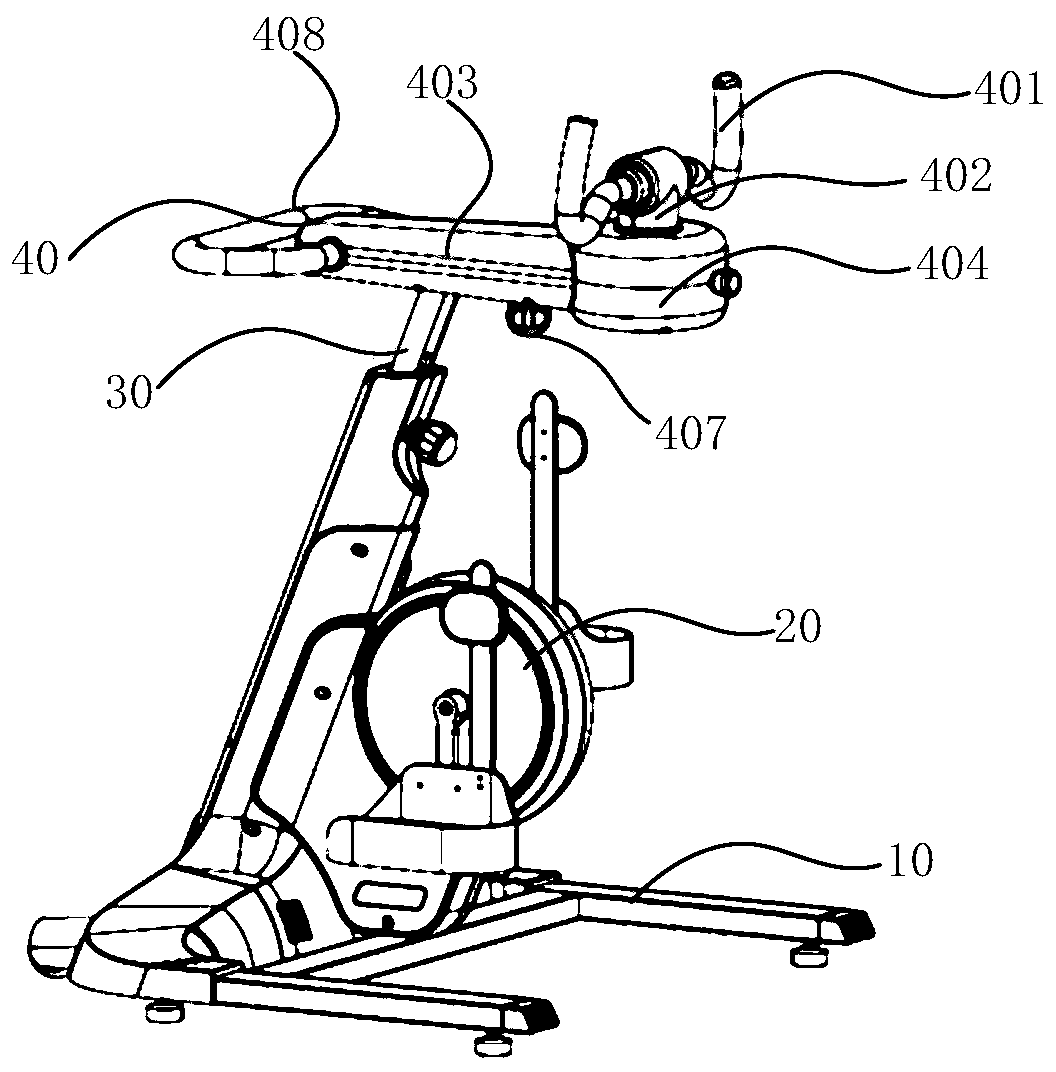

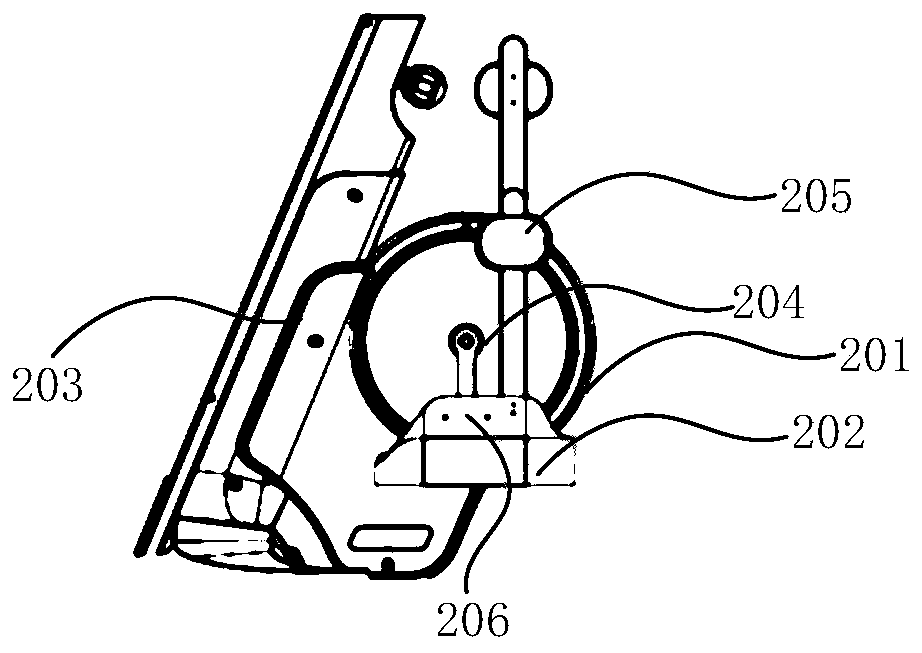

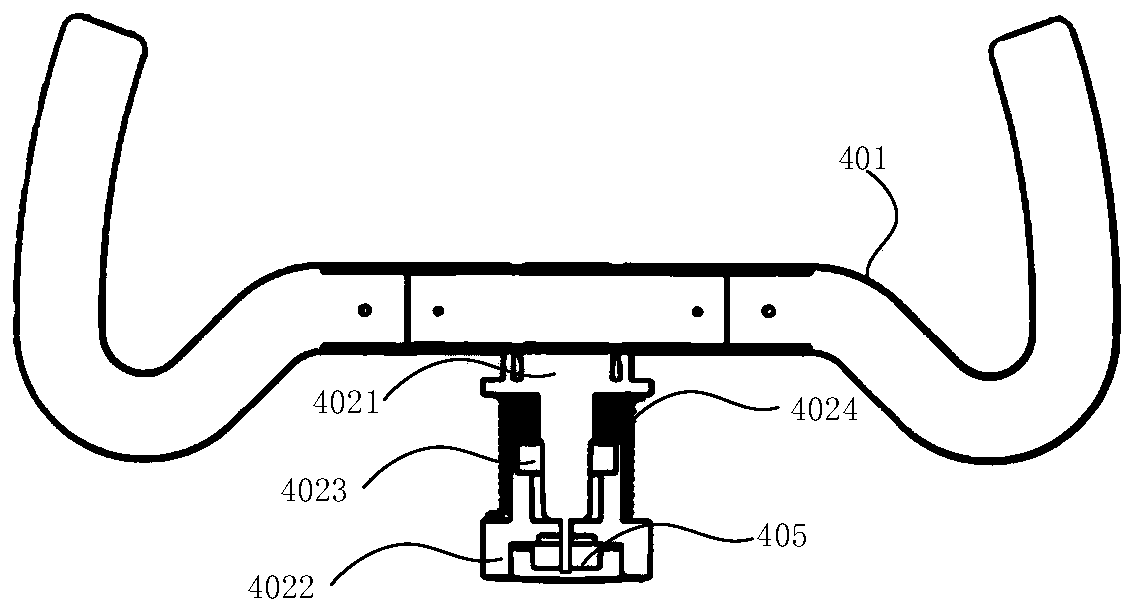

Device, system and method for training upper and lower limbs

Active | CN111407590A | Increase interest in training | Overcome the defect of single training angle | Chiropractic devices | Movement coordination devices | Information processing | Spatial perception

The invention discloses a device, system and method for training upper and lower limbs. The system comprises the device for training upper and lower limbs, information processing equipment connected to the device for training upper and lower limbs, and display equipment connected to the information processing equipment. The system for training upper and lower limbs can realize high-interactivity active and passive cooperative rehabilitation training of upper and lower limbs under the induction of a virtual-reality three-dimensional training scene, realizes overall spatial perception closed-loop motion feedback from the eyes to the brain, then to the upper limbs and finally to the lower limbs, and is beneficial for improving the training participation degree of a patient. Meanwhile, the system has four modes including a constant-speed movement mode, a passive movement mode, a power-assisted movement mode and an active movement mode, so movement scenes are enriched. In addition, a human-computer interaction force can be estimated through the current change of a lower limb motor, and the torque feedback adjustment control of the lower limb motor is realized according to the interaction force, so active compliance control is realized.

Owner:西安臻泰智能科技有限公司

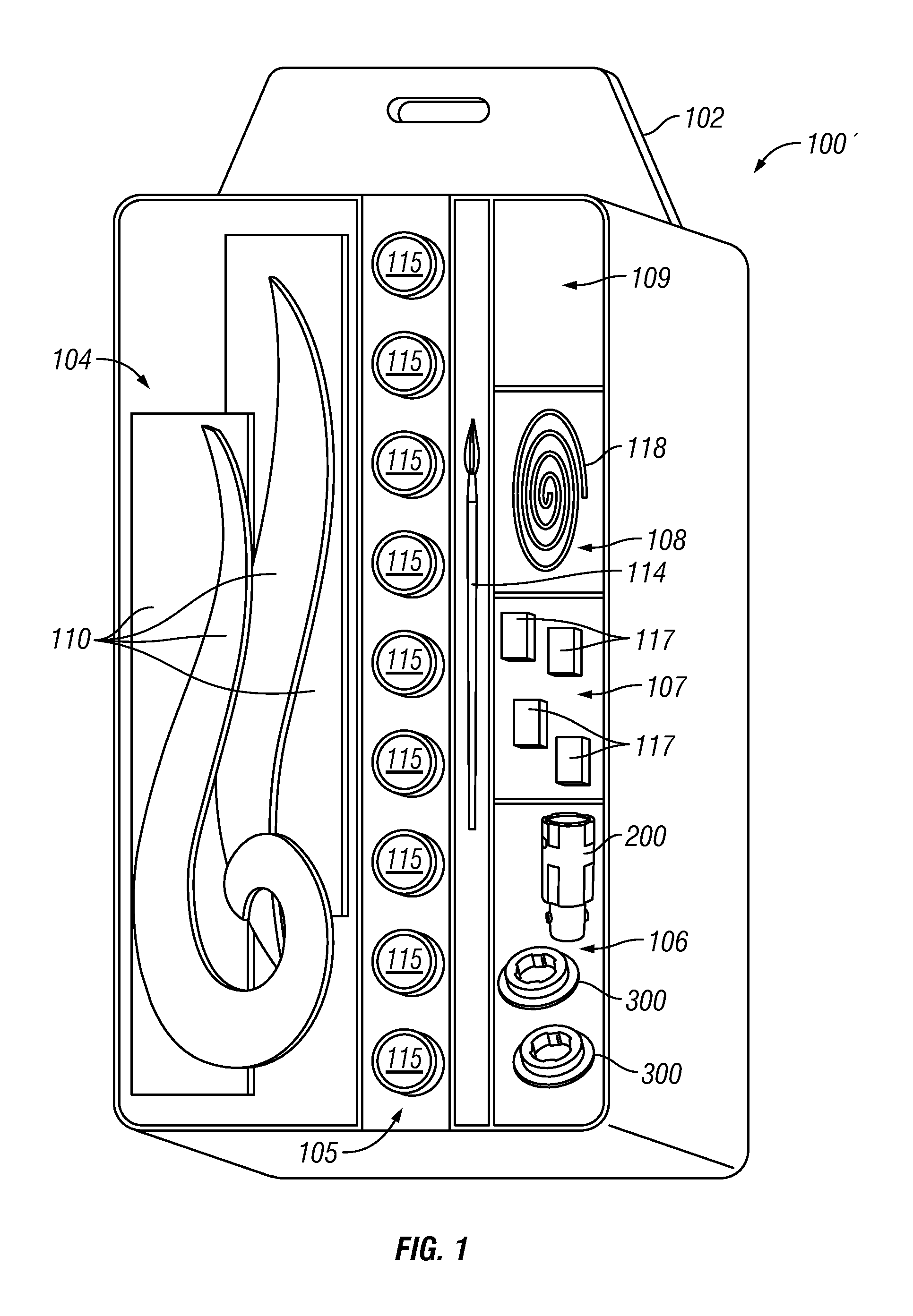

Assembly kit for three dimensional works

Kits designed to stimulate creativity, to provide exercise for fine motor skills, and to improve spatial perception. The kits may be useful as a stimulating “toy” for children to develop creativity, spatial perception and motor skills, as a therapeutic kit for the elderly or those requiring rehabilitation of fine motor skills; as a diversion to relieve stress, to create unique works for the home or office, to display photos and memorabilia, and for a host of other purposes, limited by the imagination. The kits include at least shaped components, fasteners, and a platform onto which a three-dimensional work is mounted.

Owner:STAT VENTURES

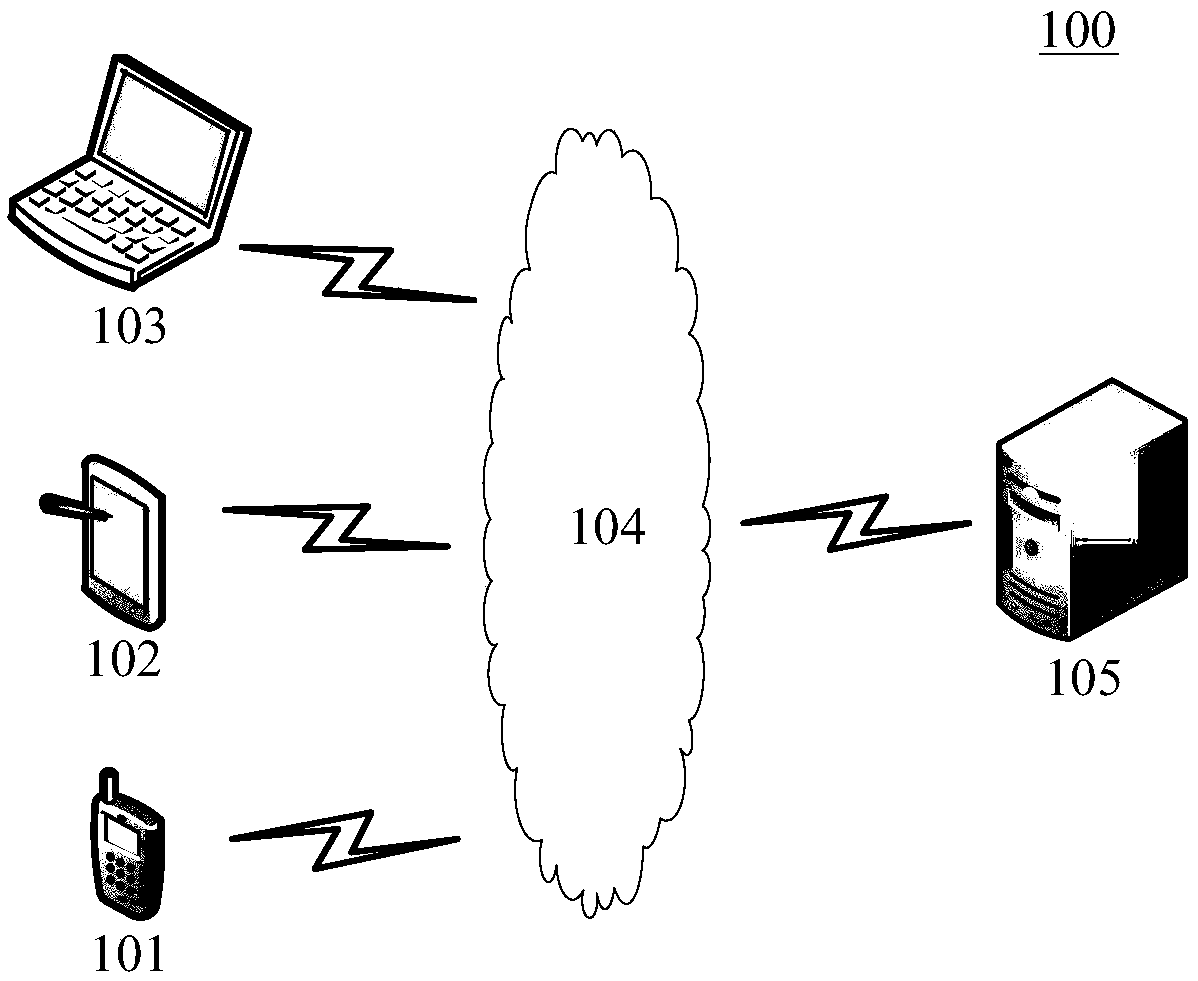

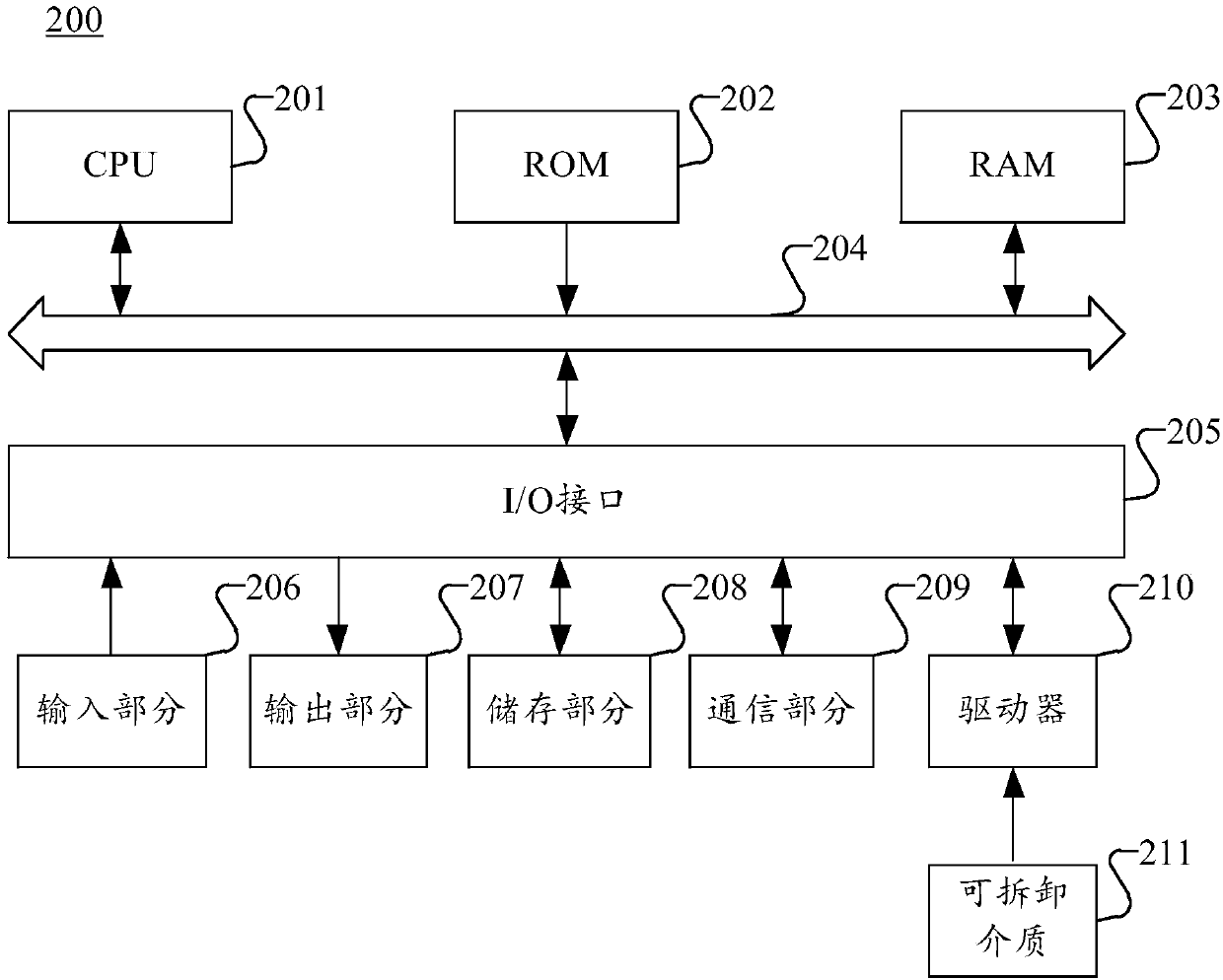

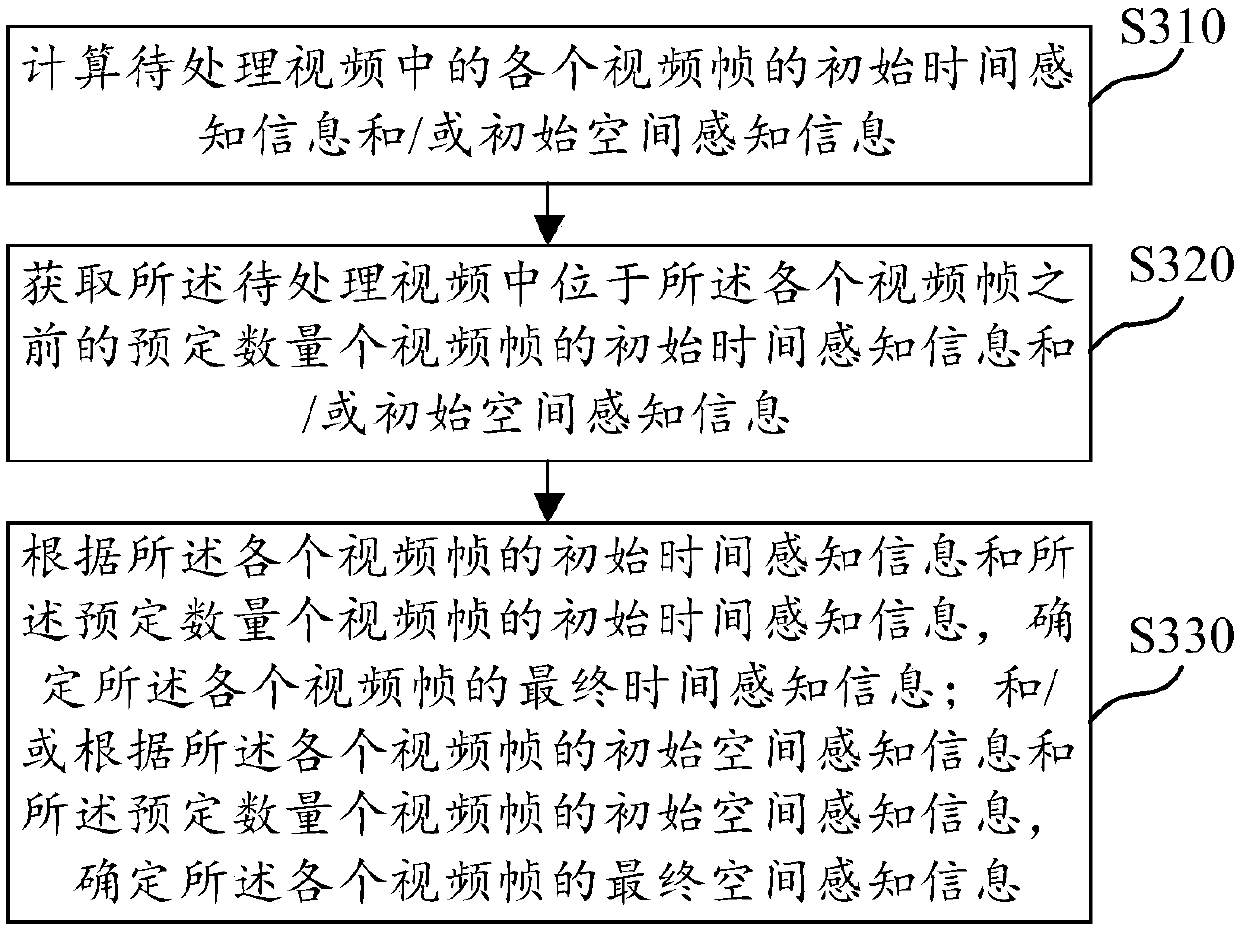

Video frame processing method and device, computer readable medium and electronic equipment

Active | CN110166796A | Accurate processing | Guaranteed mobility | Selective content distribution | Spatial perception | Computer vision

The embodiment of the invention provides a video frame processing method and device, a computer readable medium and electronic equipment. The video frame processing method comprises the steps of calculating initial time perception information and / or initial space perception information of each video frame in a to-be-processed video; obtaining initial time perception information and / or initial space perception information of a predetermined number of video frames in front of each video frame in the to-be-processed video; determining final time perception information of each video frame according to the initial time perception information of each video frame and the initial time perception information of the predetermined number of video frames; and / or determining final spatial perception information of each video frame according to the initial spatial perception information of each video frame and the initial spatial perception information of the predetermined number of video frames. According to the technical scheme provided by the embodiment of the invention, the kinematicity between the video frames can be considered, so that the TI and / or SI of the obtained video frames can be ensured to accord with objective description.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1
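
The video frame processing abstract above derives each frame's final temporal or spatial perception value from its own initial value plus those of a predetermined number of preceding frames, but does not state the combination rule. The sketch below assumes a plain arithmetic mean over that window. The function name `smooth_perception` and the `window` parameter are hypothetical.

```python
from typing import List


def smooth_perception(initial: List[float], window: int) -> List[float]:
    """Average each frame's initial TI/SI value with up to `window` preceding frames."""
    final = []
    for i in range(len(initial)):
        start = max(0, i - window)      # early frames have fewer predecessors
        history = initial[start:i + 1]  # preceding frames plus the current one
        final.append(sum(history) / len(history))
    return final
```

With `window=0` the values pass through unchanged. Larger windows let the motion context of earlier frames influence each frame's final value.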

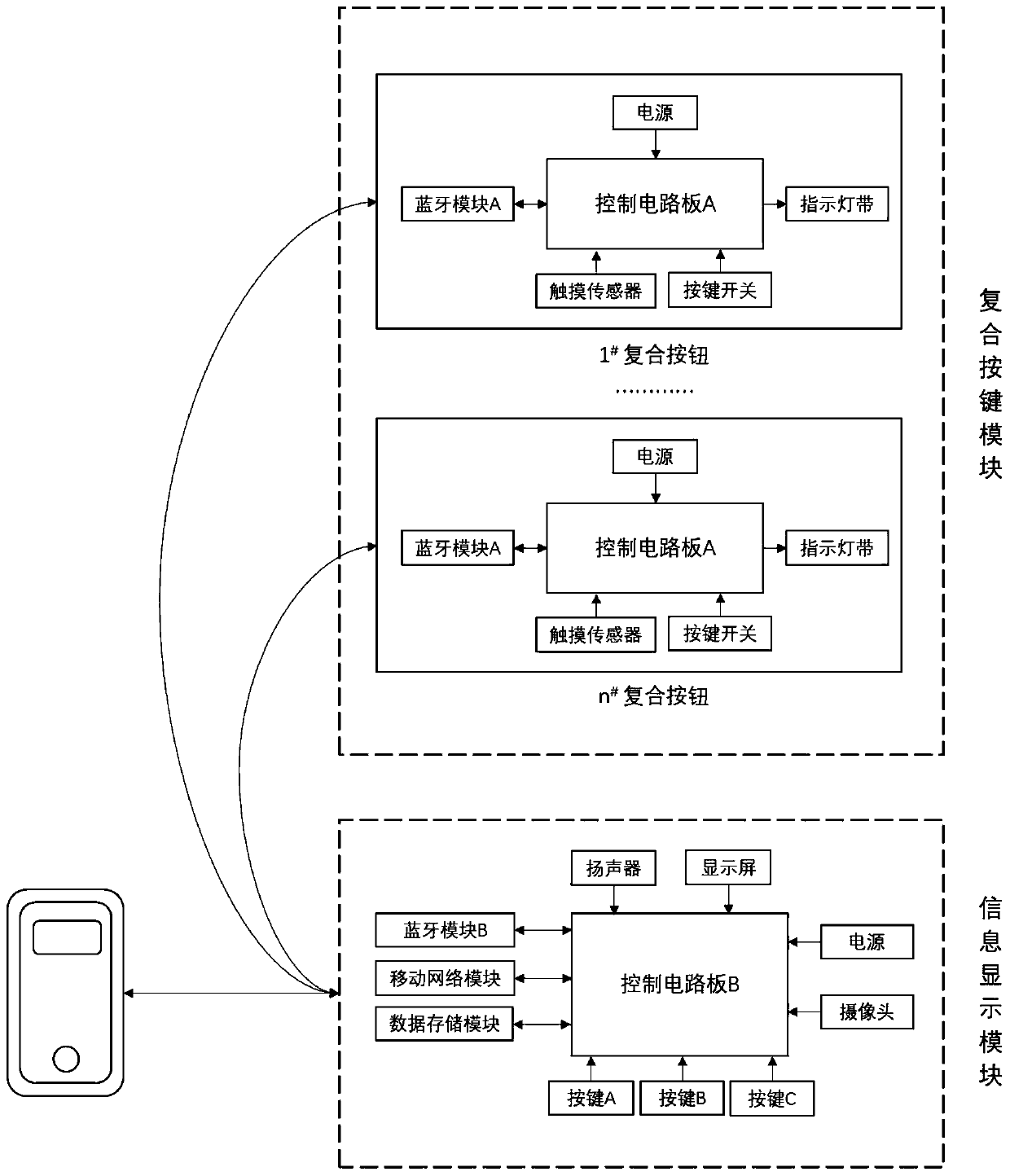

Space perception ability training device and method for autistic children

Active | CN111408010A | Fast, comprehensive and accurate collection | Guaranteed accuracy | Programme control | Transmission systems | Auditory visual | Auditory sense

The invention discloses a space perception ability training device for autistic children. The device organically combines auditory sense, visual sense and tactile sense, and related plastic nerves in the brain and a repair path of brain self-healing ability are perceived through stimulation of multiple sense organs. Moreover, the device performs hierarchical mode training on the ability of the autistic children from distinguishing spatial positioning, understanding spatial orientation concepts to analyzing spatial relations, and achieves coordinated movement of hands, feet, eyes and brains, thereby improving the integration function and the spatial perception ability of the autistic children. The scientific, normalized, professional, systematic and standardized spatial perception ability training device and method are provided for the autistic children and rehabilitation guidance personnel, the accuracy of training results is effectively guaranteed, and the workload of teachers or guardians is greatly reduced.

Owner:XIAN UNIV OF TECH

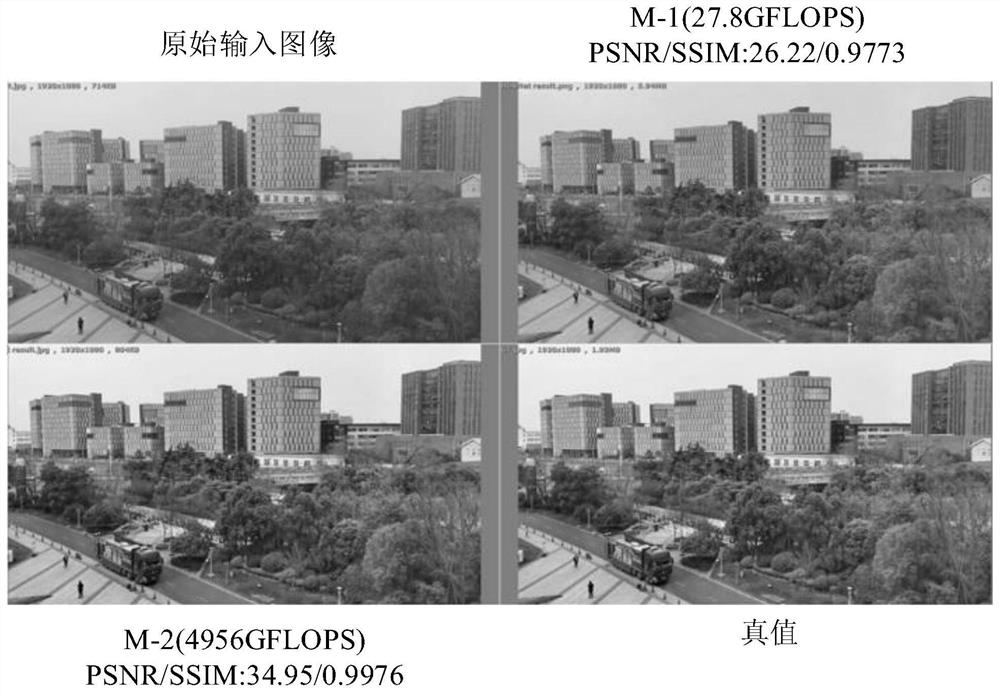

Image enhancement method, model training method and equipment

Active | CN113066017A | Realize the mapping | Solve the problem of lack of spatial information | Image enhancement | Image analysis | Pattern recognition | Image extraction

The embodiment of the invention discloses an image enhancement method, a model training method and equipment, which can be applied to the field of image processing in the field of artificial intelligence, and the method comprises the following steps: extracting features from an input image through a first neural network layer to obtain a first feature, performing pixel classification and image classification on the first feature through a second neural network layer and a third neural network layer to generate first classification information and second classification information, and obtaining a target lookup table based on the first classification information, the second classification information and a spatial perception three-dimensional lookup table (3D LUT); building the spatial perception three-dimensional lookup table according to each image category and each pixel category; and finally, obtaining an enhanced image according to the input image and the target lookup table. Compared with a traditional three-dimensional lookup table, the method has the advantages that the processing capacity is improved, and the problem that inaccurate results (such as local wrong colors and artifacts) are easily generated due to less information in an enhancement method based on the traditional three-dimensional lookup table is solved.

Owner:HUAWEI TECH CO LTD

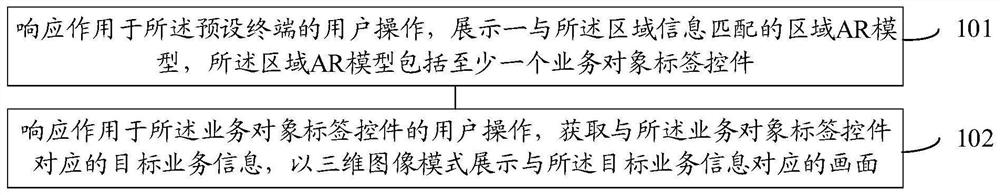

Information display method and device

Pending | CN112232900A | Rich browsing methods | Increase spatial awareness | Buying/selling/leasing transactions | Image data processing | Spatial perception | Computer graphics (images)

The embodiment of the invention provides an information display method and device. In the method, a terminal can respond to a user operation by displaying a region AR model matching region information, converting the two-dimensional region information into a three-dimensional region AR model by means of augmented reality technology. The region AR model can comprise at least a business object label control. The terminal can respond to an operation of a user acting on the business object label control and acquire target business information of the business object label control, and then a business picture corresponding to the target business information is displayed in a three-dimensional image mode. Therefore, on the one hand, the user can obtain the information of the region from different angles, the browsing mode of the user is enriched, and the spatial perception of the user is improved; on the other hand, the user can browse the business object in a three-dimensional image mode, and the spatial perception is further improved.

Owner:BEIJING 58 INFORMATION TTECH CO LTD

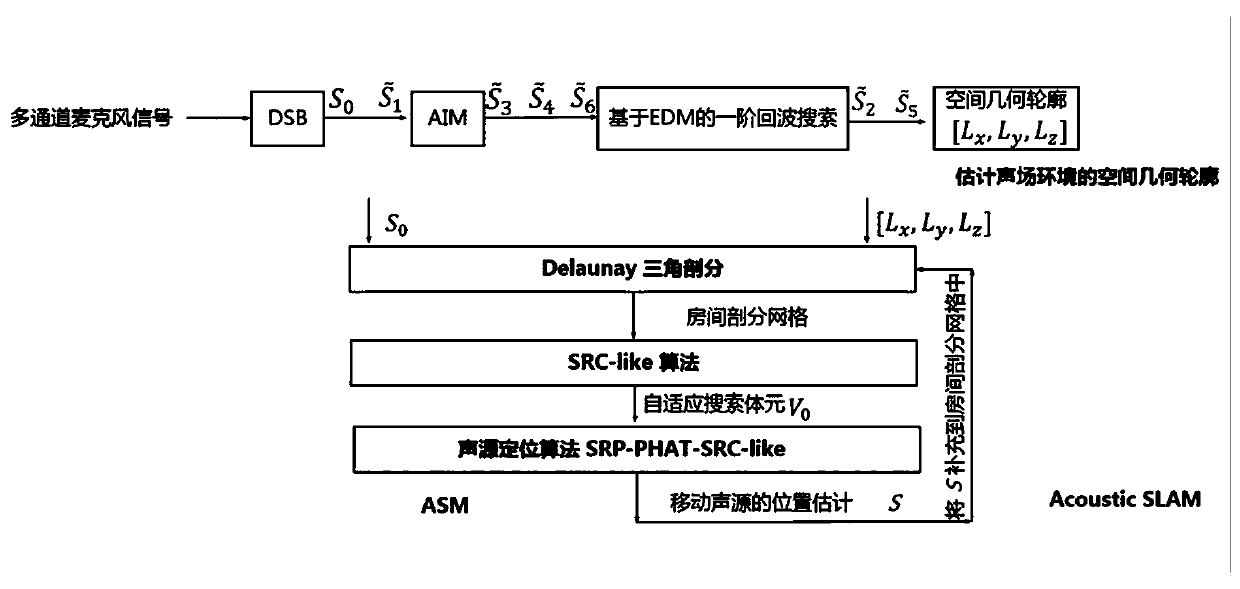

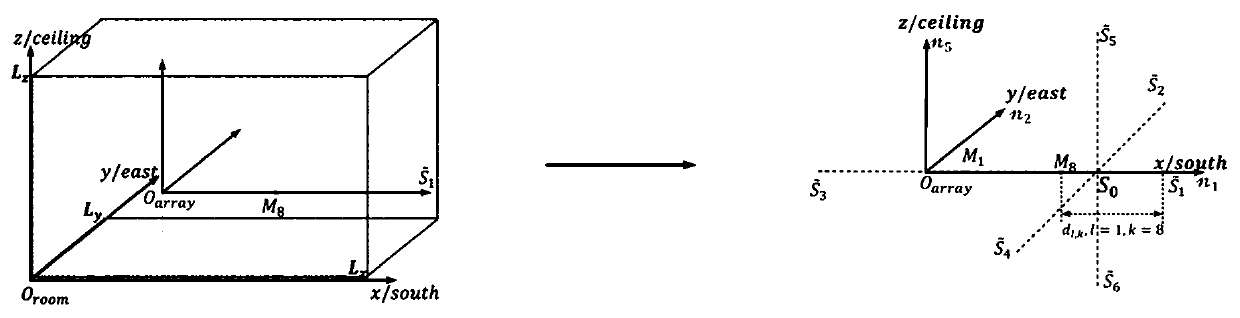

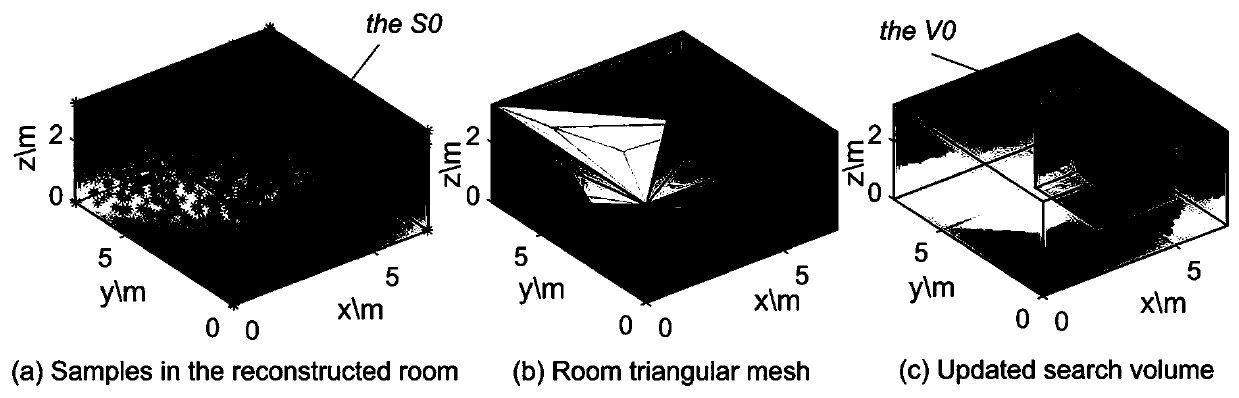

Acoustic simultaneous localization and mapping method based on multi-channel sound acquisition

Active | CN109901112A | Precise positioning | Avoid full scope search | Position fixation | Simultaneous localization and mapping | Sound source location

The invention discloses an acoustic simultaneous localization and mapping method based on multi-channel sound acquisition. The method, through a multi-channel sound acquisition mode and based on a geometric acoustic mirror image model, analyzes channel state in the speech signal propagation process, realizes spatial perception of indoor sound field environment and improves continuous positioning performance of a mobile sound source (speaker) by utilizing the spatial perception result. The method, by introducing a Delaunay triangulation method, analyzes motion state of the mobile sound source and estimates an adaptive search subspace of the position of a sound source, thereby avoiding tedious and redundant repeated search for the position of the mobile sound source in the indoor sound field full space range and improving universality of the spatial perception and mobile sound source location scheme; and the method is not only suitable for interior space contour reconstruction, but also suitable for tracking and positioning of the mobile sound source in the indoor environment.

Owner:GUILIN UNIV OF ELECTRONIC TECH
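
The geometric acoustic mirror image model referred to in the acoustic localization abstract above treats a first-order wall reflection as if it came from a virtual source mirrored across the wall. The sketch below shows only that standard geometric step under simplifying assumptions (a single flat wall and a constant speed of sound). The function names and the default of 343 m/s are illustrative, and the patent's actual channel analysis is not reproduced here.

```python
import math
from typing import Sequence, Tuple


def mirror_source(source: Sequence[float], wall_point: Sequence[float],
                  wall_normal: Sequence[float]) -> Tuple[float, ...]:
    """Reflect a source position across the plane through wall_point with the given normal."""
    norm = math.sqrt(sum(c * c for c in wall_normal))
    n = [c / norm for c in wall_normal]  # unit normal of the wall
    # Signed distance from the source to the wall plane.
    dist = sum((s - p) * c for s, p, c in zip(source, wall_point, n))
    return tuple(s - 2 * dist * c for s, c in zip(source, n))


def reflection_delay(source: Sequence[float], mic: Sequence[float],
                     wall_point: Sequence[float], wall_normal: Sequence[float],
                     speed_of_sound: float = 343.0) -> float:
    """Travel time in seconds of the first-order reflection off one wall."""
    image = mirror_source(source, wall_point, wall_normal)
    # The reflected path length equals the straight line from image source to microphone.
    return math.dist(image, mic) / speed_of_sound
```

Given measured reflection delays at several microphones, the relationship can be inverted to estimate wall positions, which is the kind of room contour reconstruction the abstract mentions.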

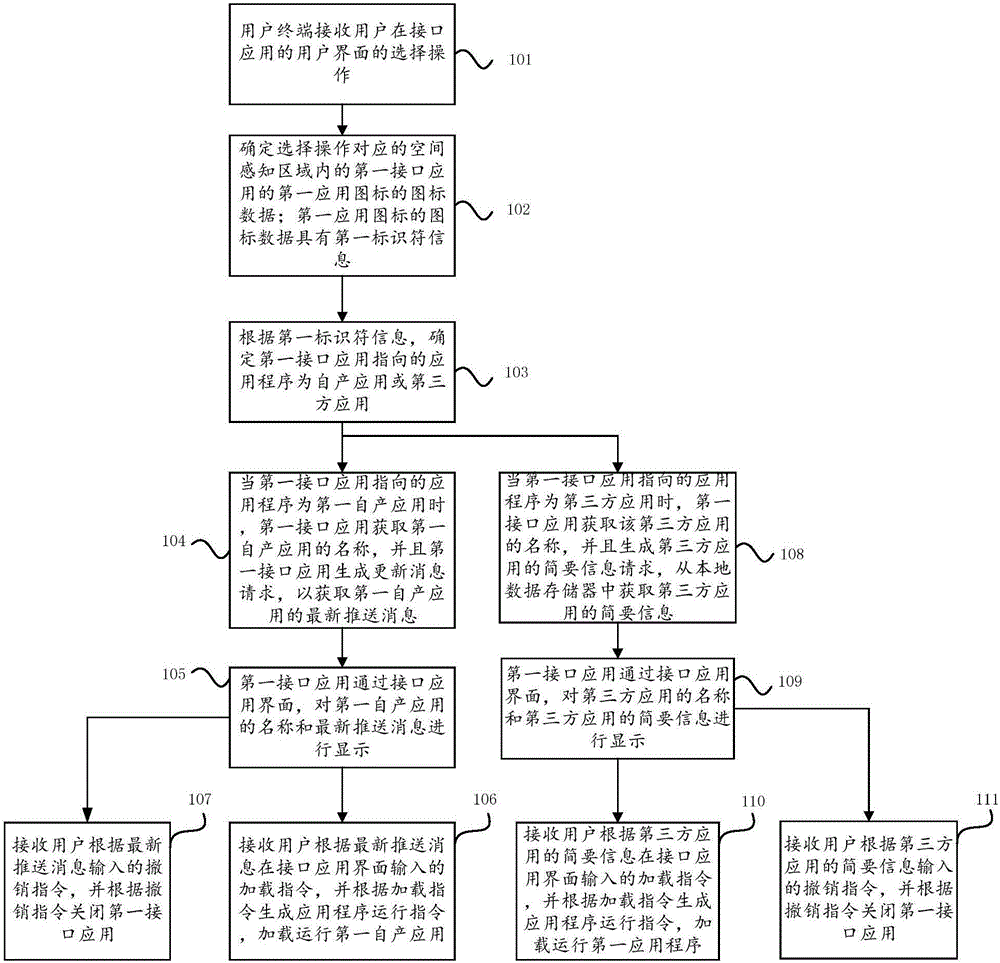

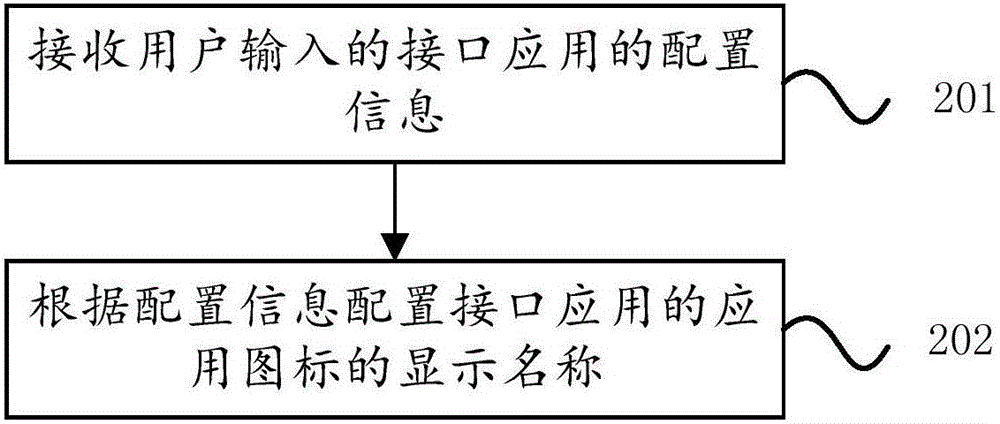

Application pre-loading method

Active | CN106648733A | Reduce the chance of false starts | Reduce occupancy | Program loading/initiating | Input/output processes for data processing | Third party | Spatial perception

An embodiment of the invention relates to an application pre-loading method. The method comprises the steps of receiving selection operation of a user at a user interface of an interface application by a user terminal; determining icon data of a first application icon of a first interface application in a spatial perception region corresponding to the selection operation, wherein the icon data of the first application icon has first identifier information; determining that an application which the first interface application points to is a homegrown application or a third-party application according to the first identifier information, wherein an open data interface is arranged between the homegrown application and the interface application, and no data interface is arranged between the third-party application and the interface application; when the application which the first interface application points to is a first homegrown application, obtaining a name of the first homegrown application by the first interface application, and generating a message updating request by the first interface application to obtain a latest pushing message of the first homegrown application; and displaying the name of the first homegrown application and the latest pushing message by the first interface application through an interface application interface.

Owner:北京博瑞彤芸科技股份有限公司

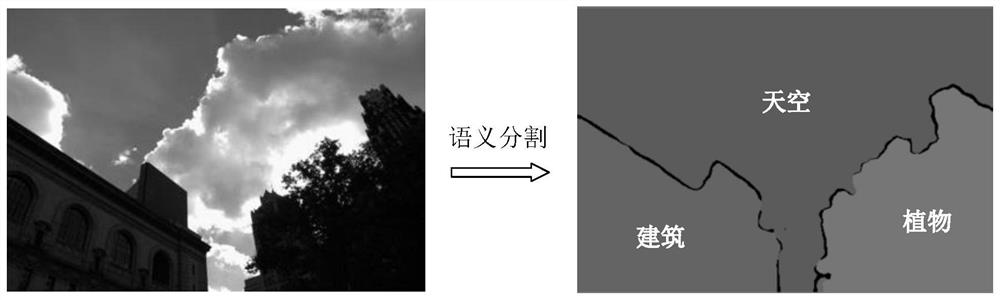

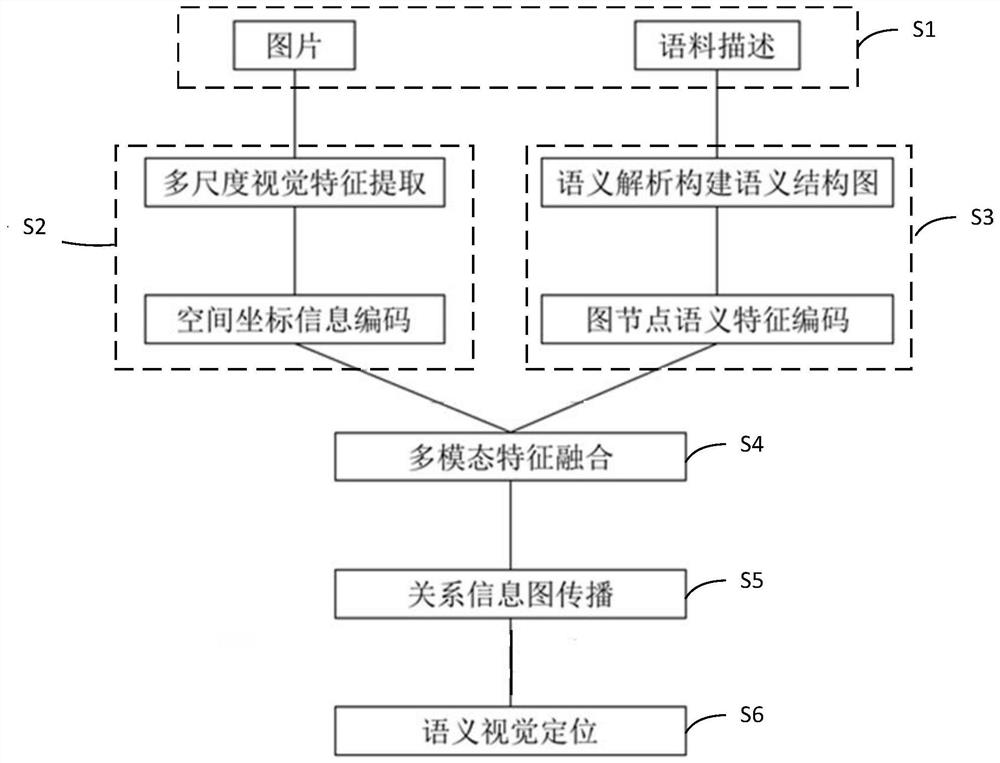

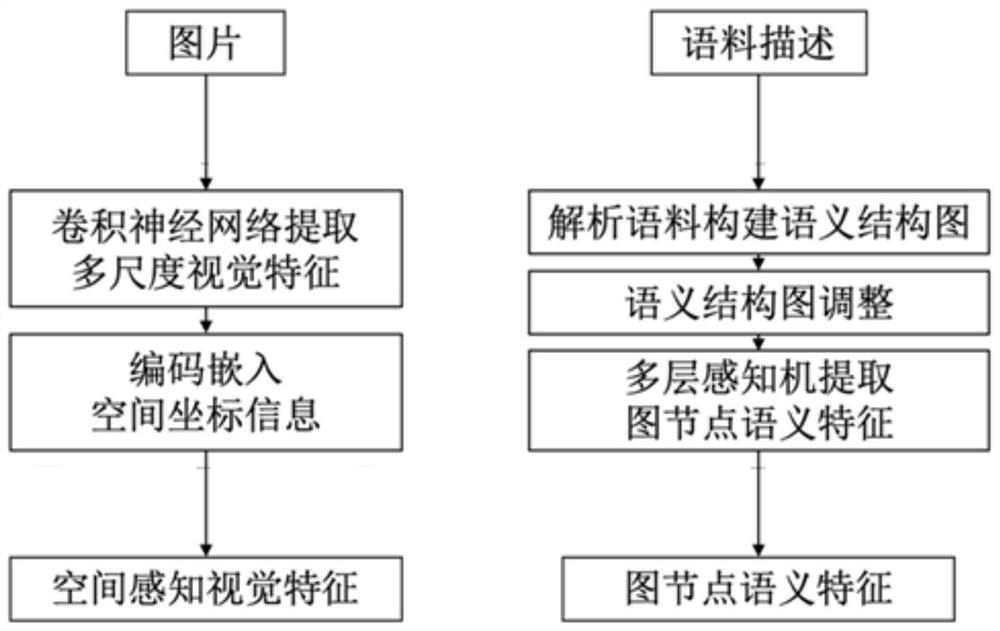

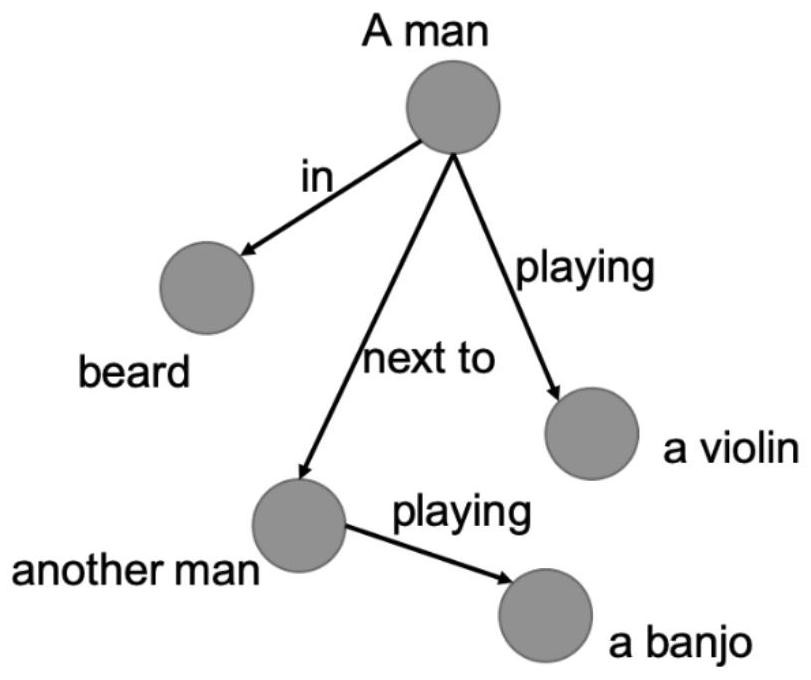

Semantic vision positioning method and device based on multi-modal graph convolutional network

Active | CN111783457A | Accurate acquisition | Improving the performance of semantic visual localization tasks | Semantic analysis | Character and pattern recognition | Spatial perception | Multilayer perceptron

The invention provides a semantic vision positioning method and device based on a multi-modal graph convolutional network. The method comprises the steps of: obtaining an input picture and a corpus description; extracting multi-scale visual features of the input picture by using a convolutional neural network, and encoding and embedding spatial coordinate information to obtain spatial perception visual features; parsing the corpus description to construct a semantic structure graph, encoding the word vector of each node in the semantic structure graph, and learning graph node semantic features through a multilayer perceptron; fusing the spatial perception visual features and the graph node semantic features to obtain multi-modal features of each node in the semantic structure graph; propagating relationship information between nodes in the semantic structure graph through a graph convolutional network, and learning visual semantic relationships under the guidance of the semantic relationships; and performing semantic visual position reasoning to obtain the visual position of the semantic information. According to the method, context semantic information is combined when ambiguous semantic elements are processed, and visual positioning can be guided by semantic relationship information.

Owner:BEIJING SHENRUI BOLIAN TECH CO LTD +1

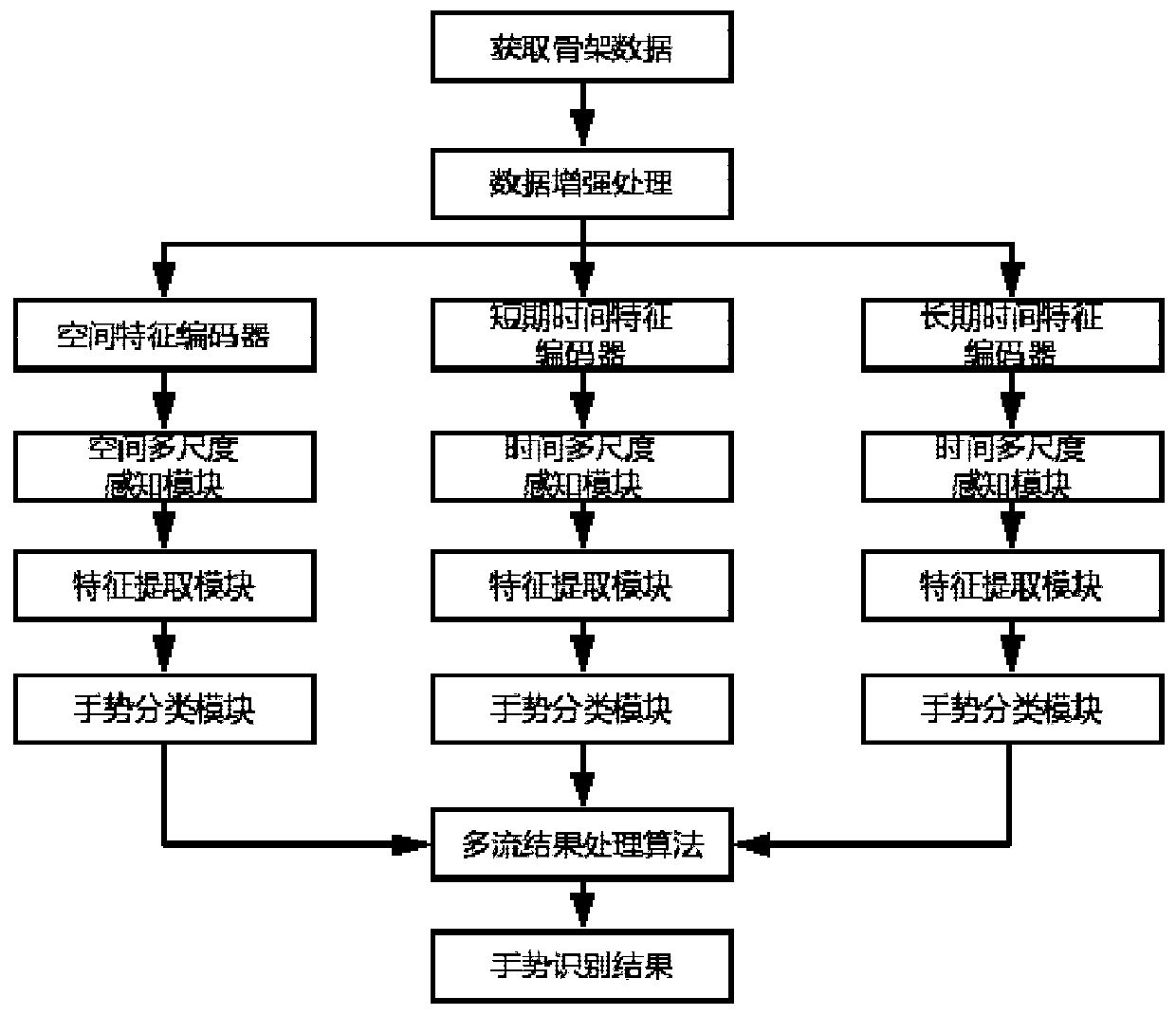

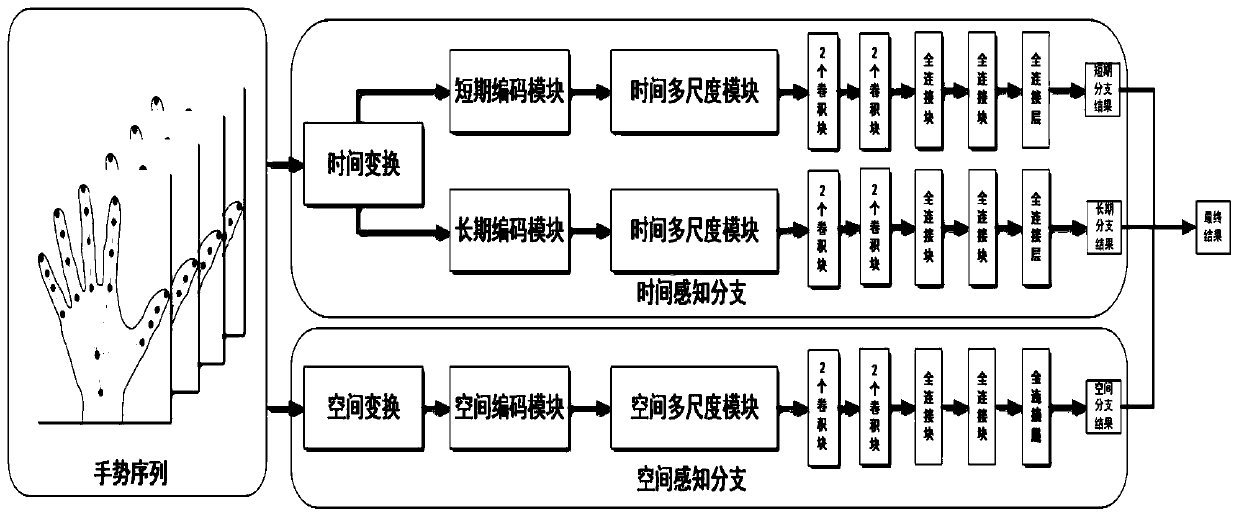

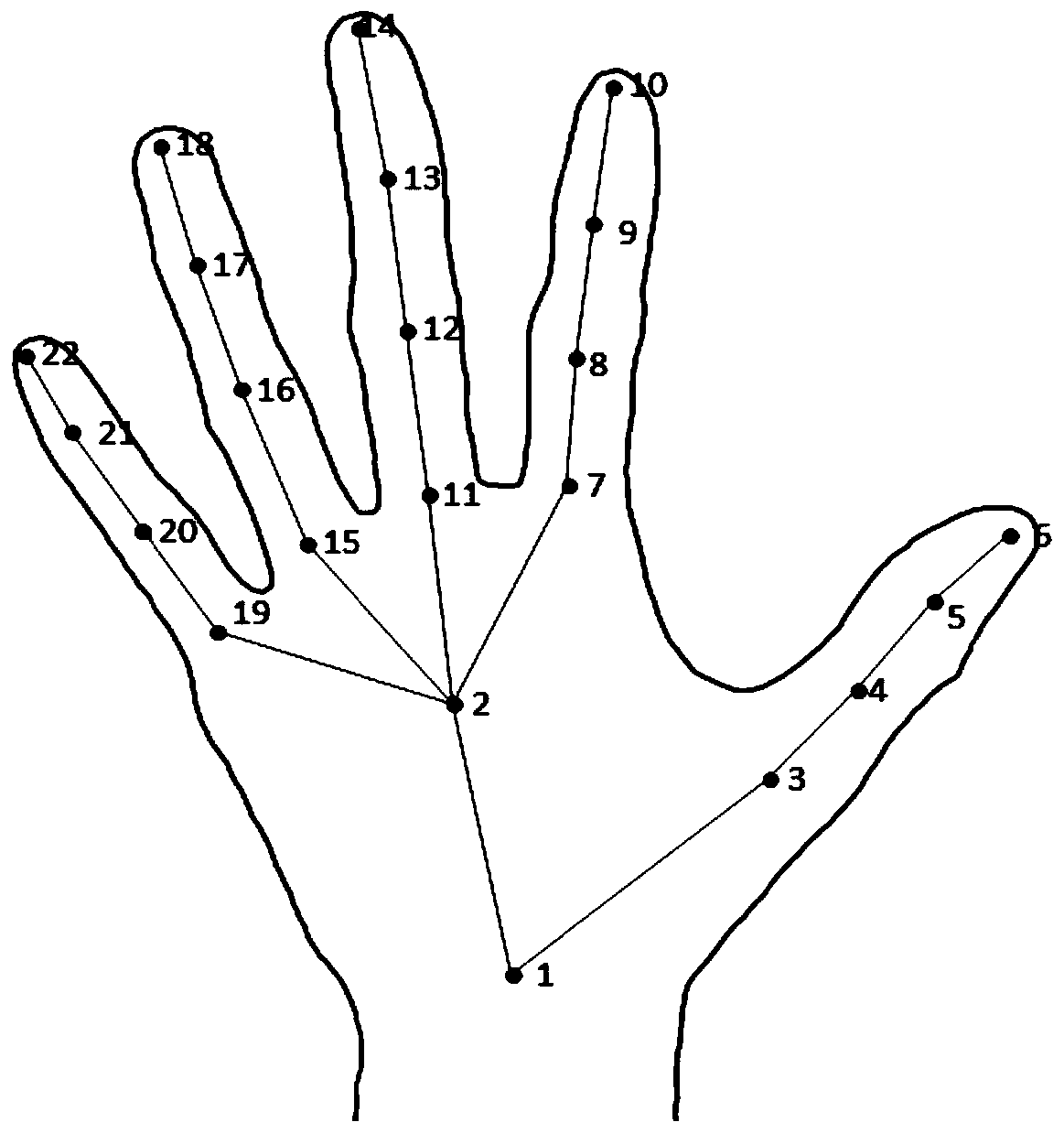

Gesture recognition method and system based on skeleton

Active | CN111291713A | Avoid disadvantages | Improve recognition efficiency | Image enhancement | Image analysis | Pattern recognition | Spatial perception

The invention discloses a gesture recognition method and system based on a skeleton. The gesture recognition method comprises the steps of: performing data enhancement on an obtained original gesture skeleton sequence to be recognized; extracting motion features between skeleton nodes within each frame and spatial motion features at different scales, and obtaining a first dynamic gesture prediction label by using a spatial perception network; extracting motion features between skeleton nodes of adjacent frames and temporal motion features at different scales, and obtaining a second dynamic gesture prediction label by using a short-term temporal perception network; extracting motion features between skeleton nodes of non-adjacent frames and temporal motion features at different scales, and obtaining a third dynamic gesture prediction label by using a long-term temporal perception network; and, according to the obtained dynamic gesture prediction labels, outputting a final gesture prediction label by using a space-time multi-scale chain network model. According to the invention, the overall recognition efficiency and recognition precision can be improved by optimizing the individual branches in a targeted manner.

Owner:SHANDONG UNIV

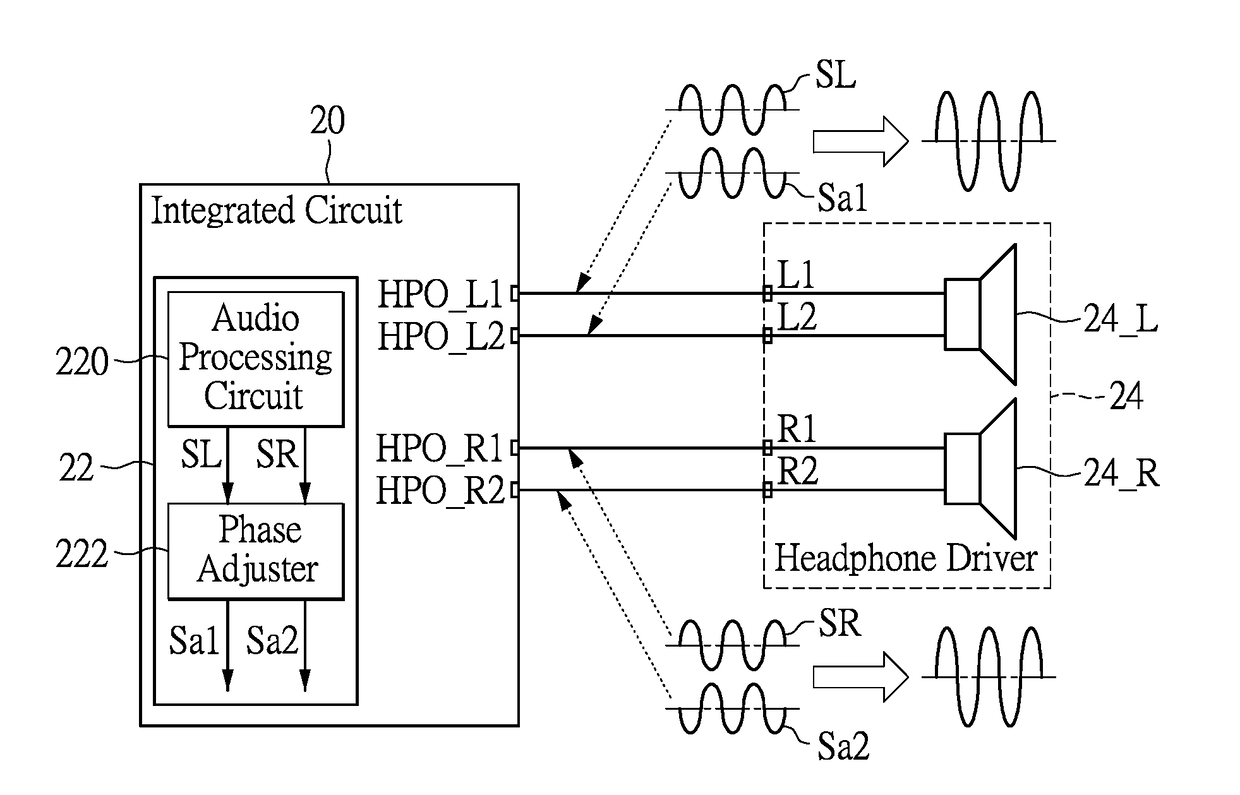

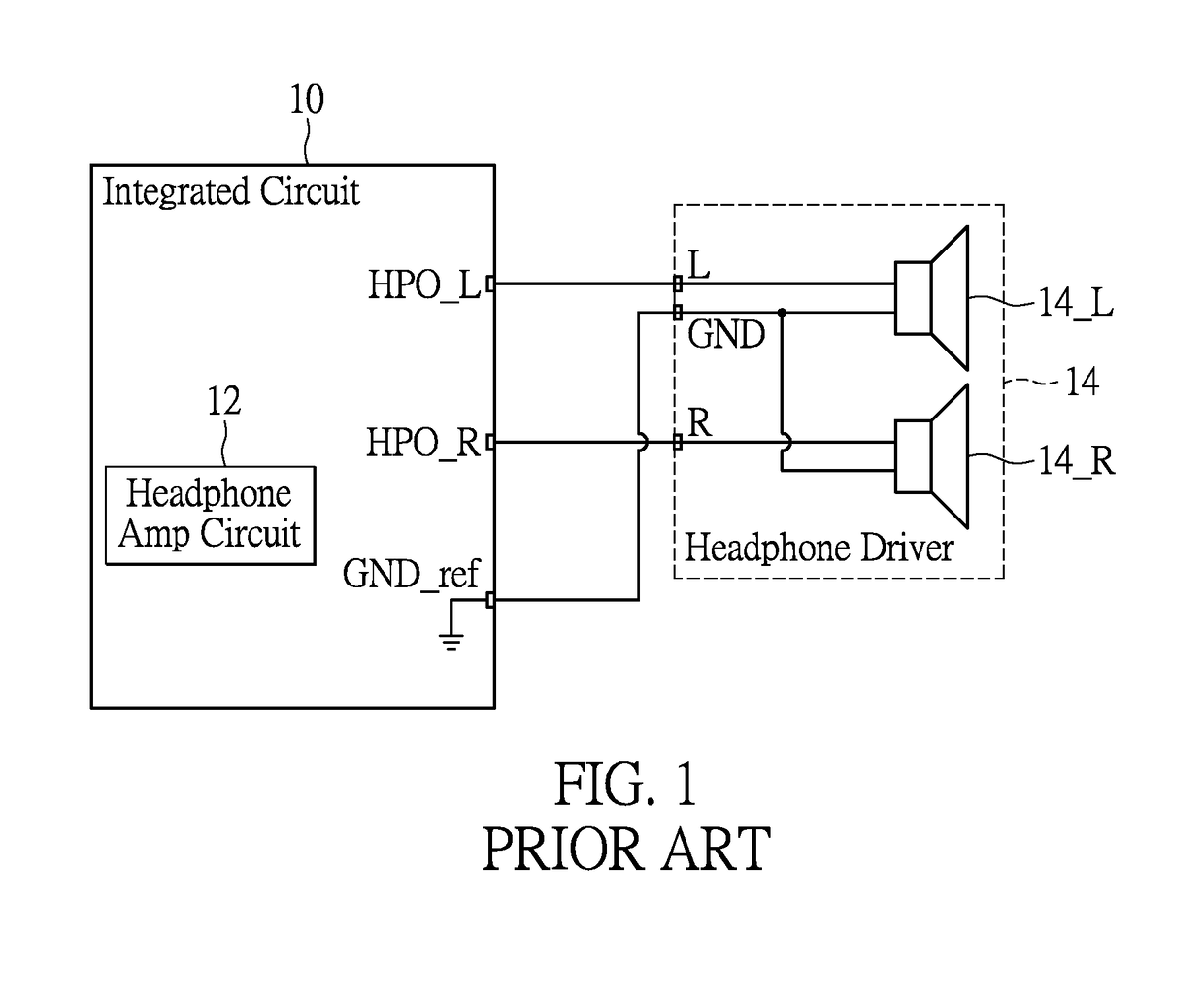

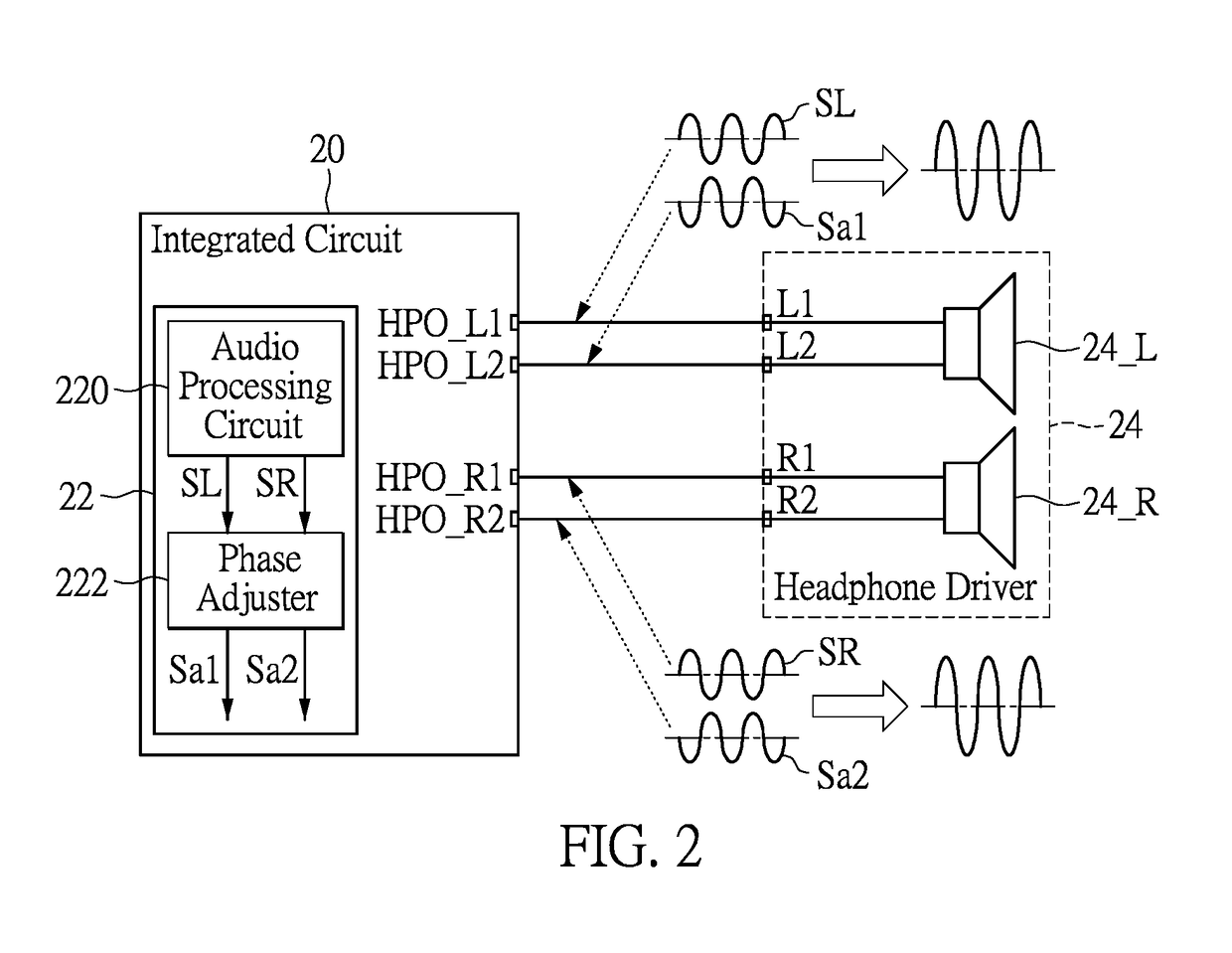

Headphone amplifier circuit for headphone driver, operation method thereof, and USB interfaced headphone device using the same

Active | US20180192220A1 | Effectively solve crosstalk issue | Improve isolation | Headphones for stereophonic communication | Gain control | Headphone amplifier | Audio power amplifier

Provided herein are a headphone amplifier circuit for a headphone driver, an operation method thereof, and a universal serial bus (USB) interfaced headphone device using the same. The output stage of the headphone amplifier circuit is improved to have a differential output structure, which effectively solves the crosstalk issue and increases the isolation between the left and right channels, so that the audio content conveys spatial perception and distance perception more distinctly.

Owner:REALTEK SEMICON CORP

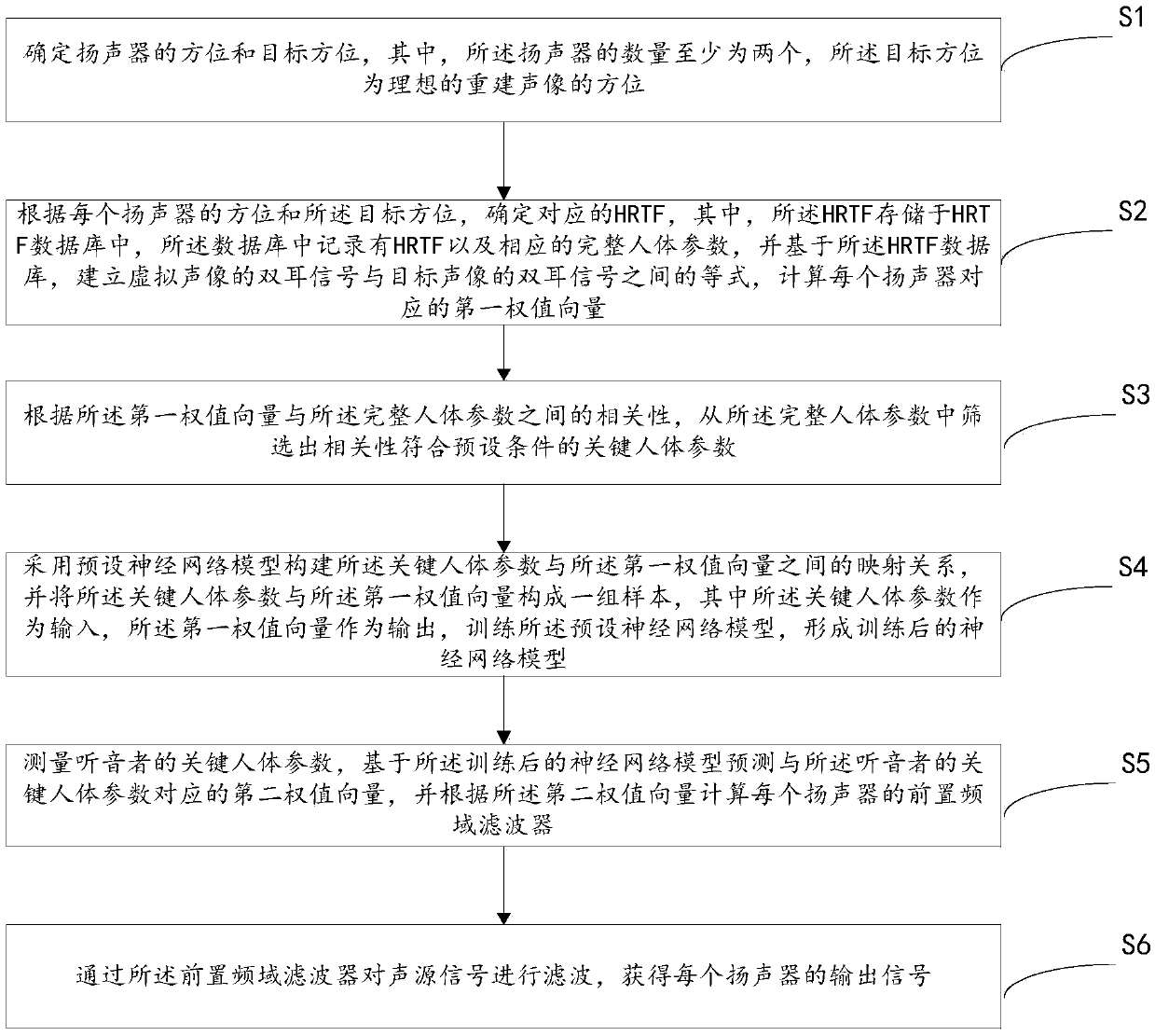

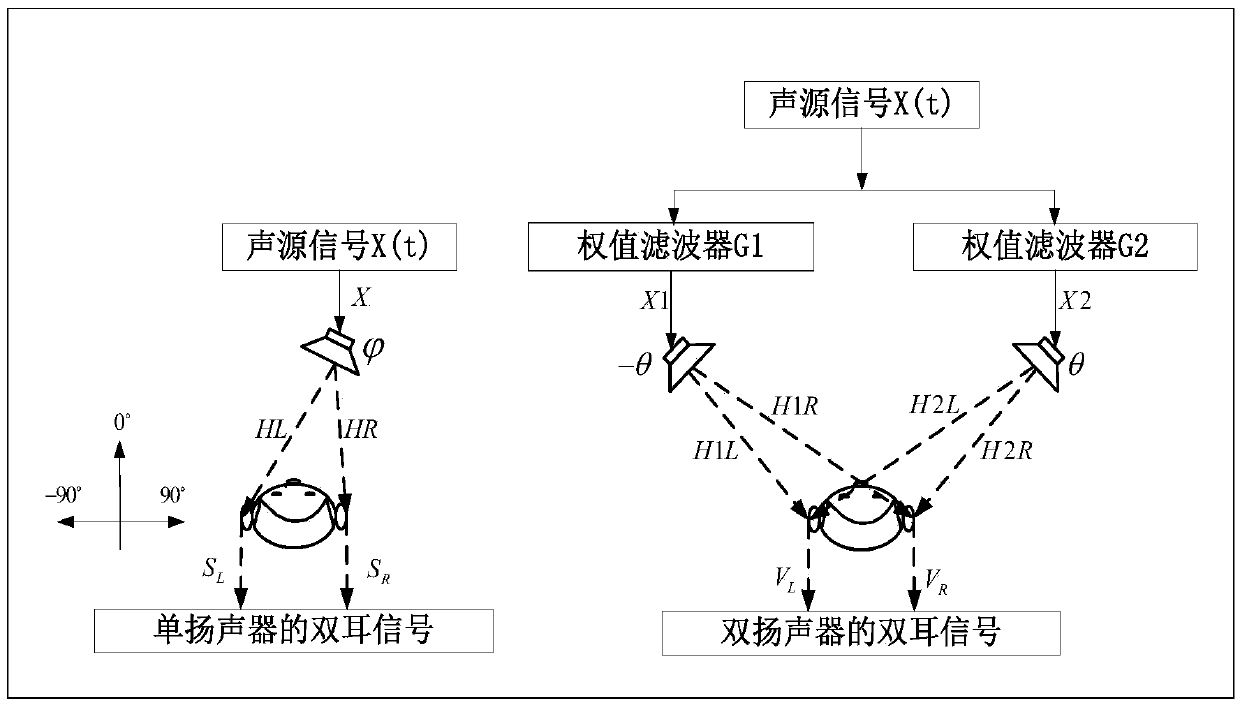

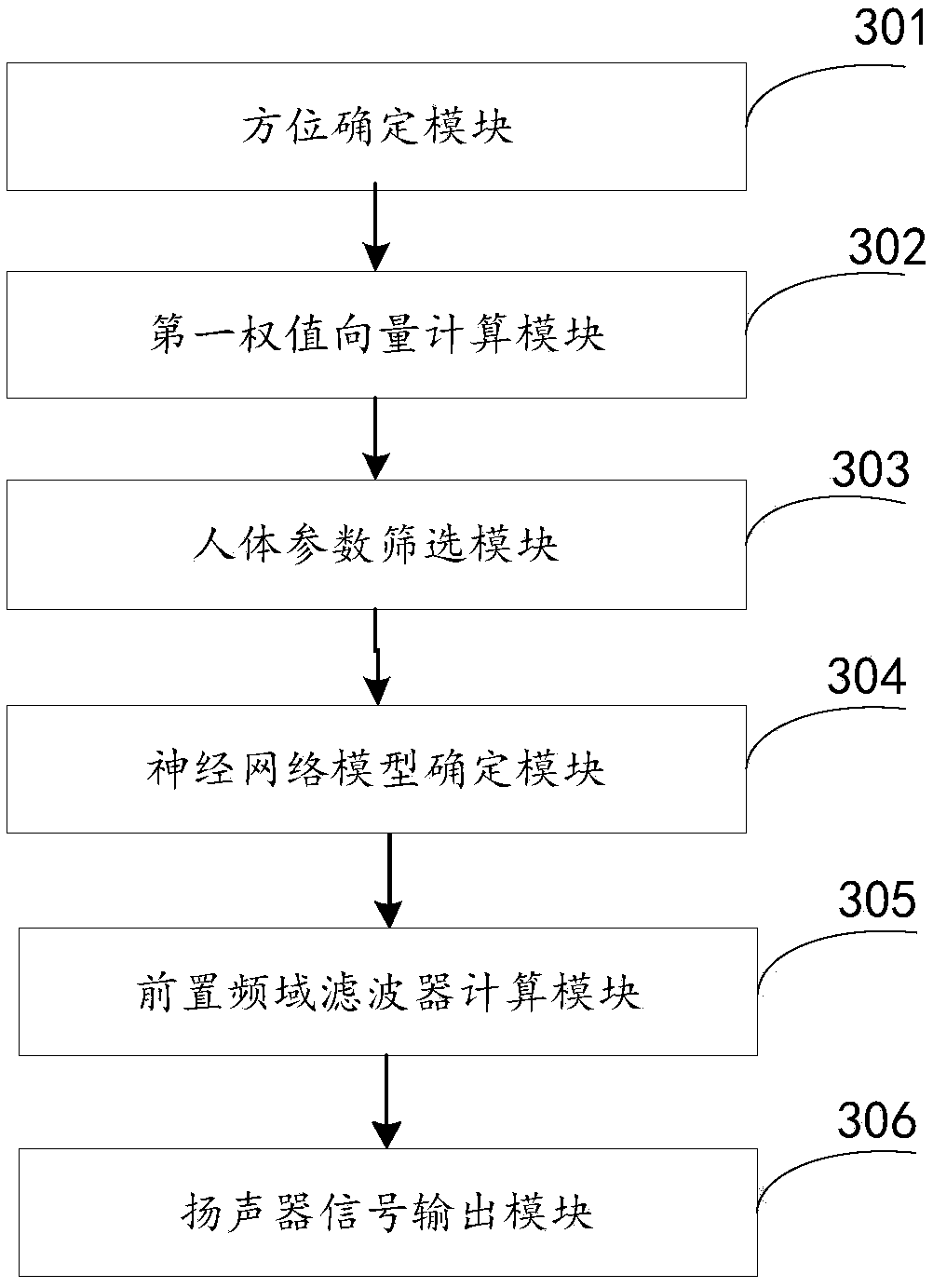

A loudspeaker-based audio-visual personalized reproduction method and device

Active | CN109068262A | Improve spatial perception | Reduce computational complexity | Frequency response correction | Loudspeaker signals distribution | Personalization | Spatial perception

The invention provides a loudspeaker-based audio-visual personalized reproduction method and device. The method includes: first, determining the orientation of the loudspeakers and the orientation of the target; then calculating a first weight vector corresponding to the multiple loudspeakers based on an HRTF database; next, screening the key human body parameters; then designing a neural network to establish the mapping relationship between the first weight vector and the key human body parameters; then measuring the selected key human body parameters of the listener and predicting the corresponding second weight vector based on the neural network model; then calculating the pre-frequency domain filter of each loudspeaker according to the second weight vector; and finally, filtering the sound source signal with the pre-frequency domain filter and outputting it through the two loudspeakers. The invention achieves the technical effect of improving the listener's spatial perception.

Owner:WUHAN UNIV

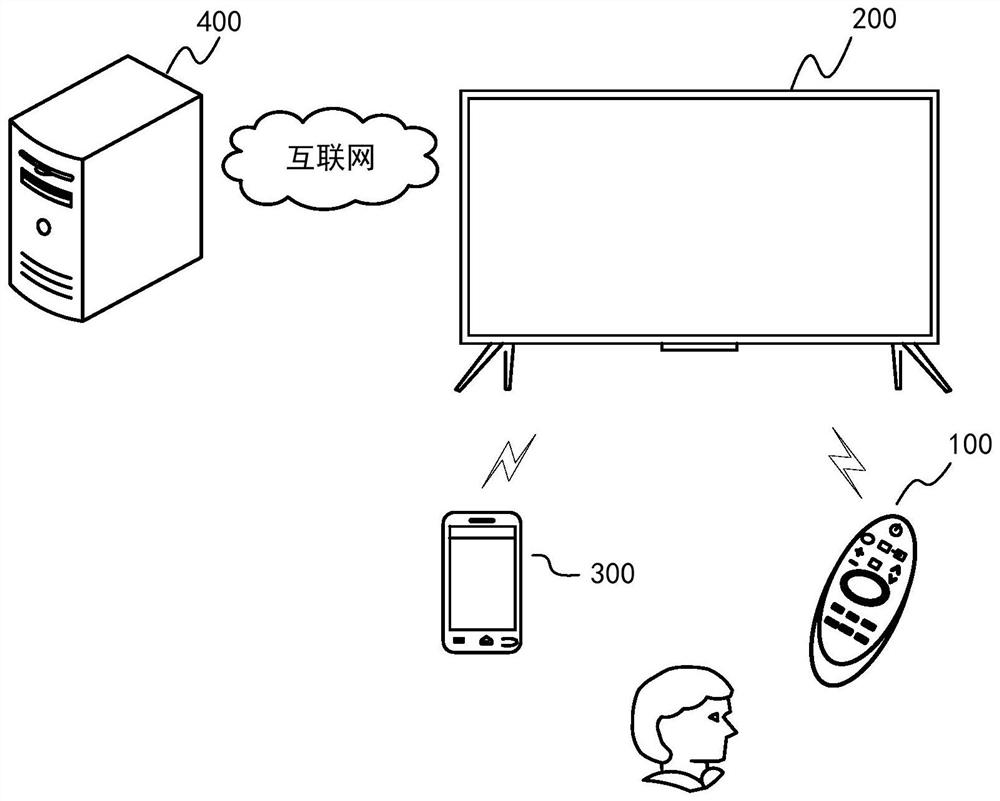

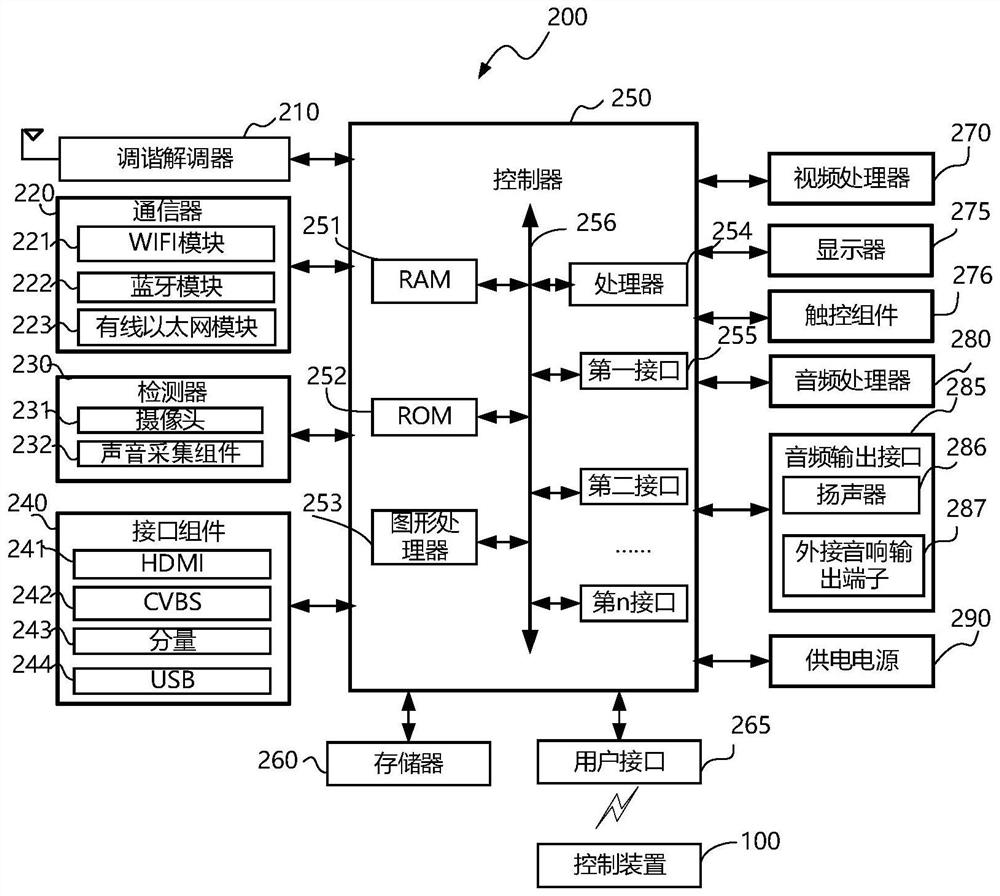

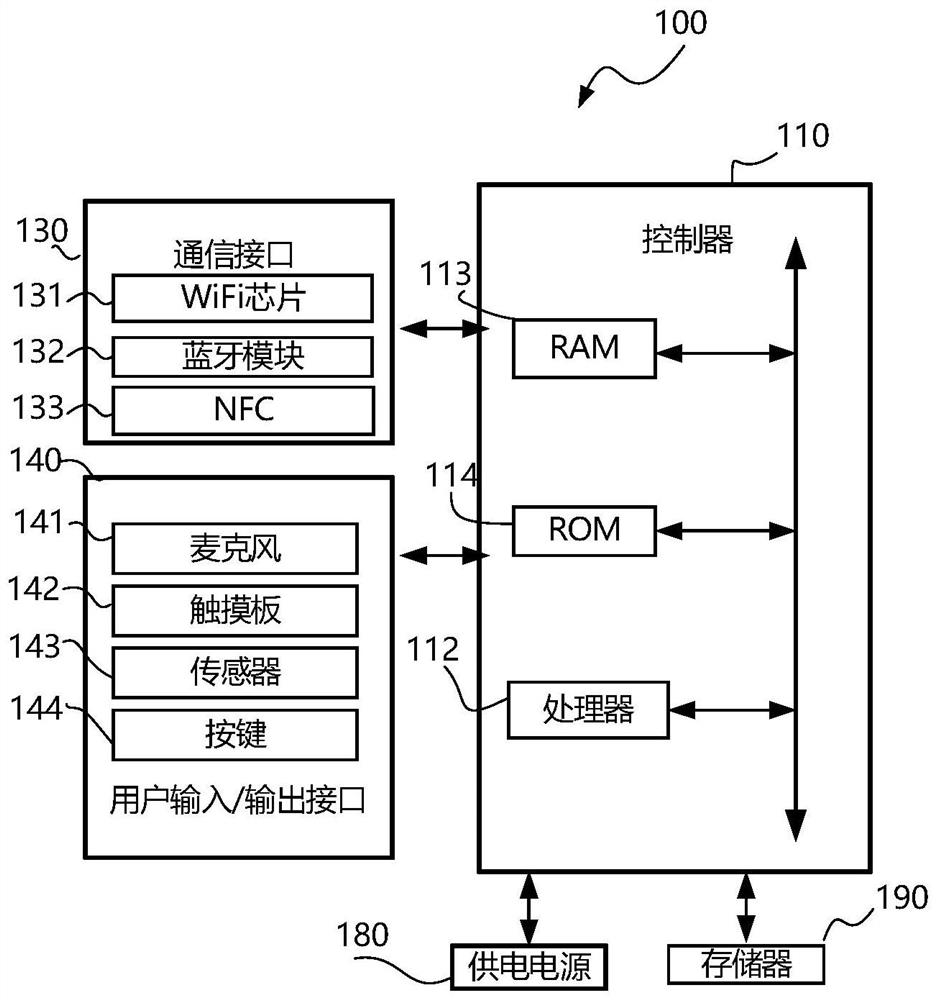

Display device and sound image figure positioning and tracking method

Active | CN112866772A | Precise positioning | Television system details | Position fixation | Sound image | Spatial perception

The invention provides a display device and a sound image figure positioning and tracking method, and the method comprises the steps: obtaining a test audio signal to position a target orientation, calculating a rotation angle according to the target orientation and the current posture of a camera, generating a rotation instruction according to the rotation angle, and transmitting the rotation instruction to the camera. According to the method, the approximate position of a person can be determined by utilizing the spatial perception capability of sound source positioning, the camera is driven to face the sound source direction, then the person detection is carried out on the shot image by utilizing image analysis, the specific position is determined to drive the camera to carry out fine adjustment, accurate positioning is achieved, and the person shot by the camera can be focused and displayed in the image.

Owner:HISENSE VISUAL TECH CO LTD

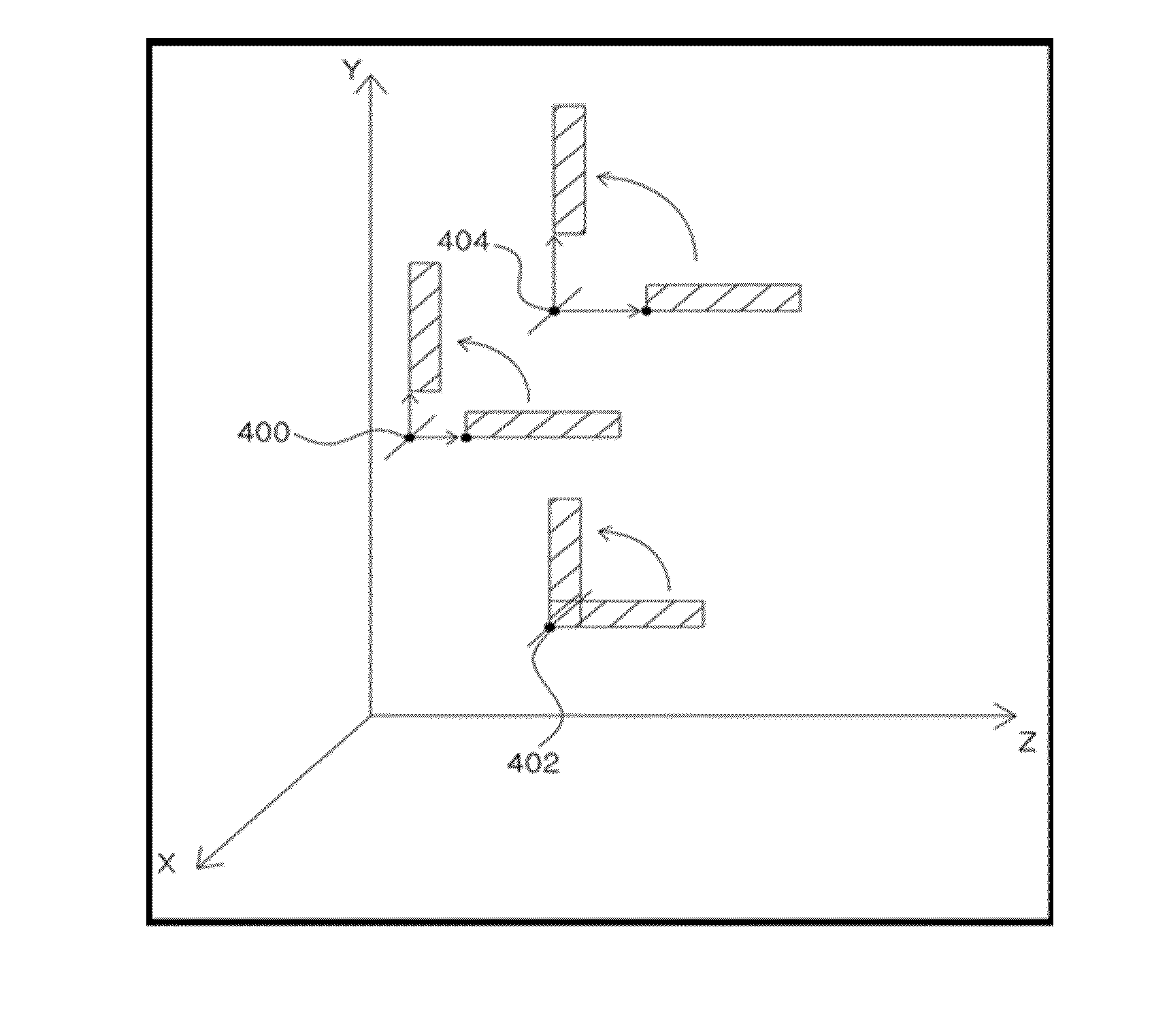

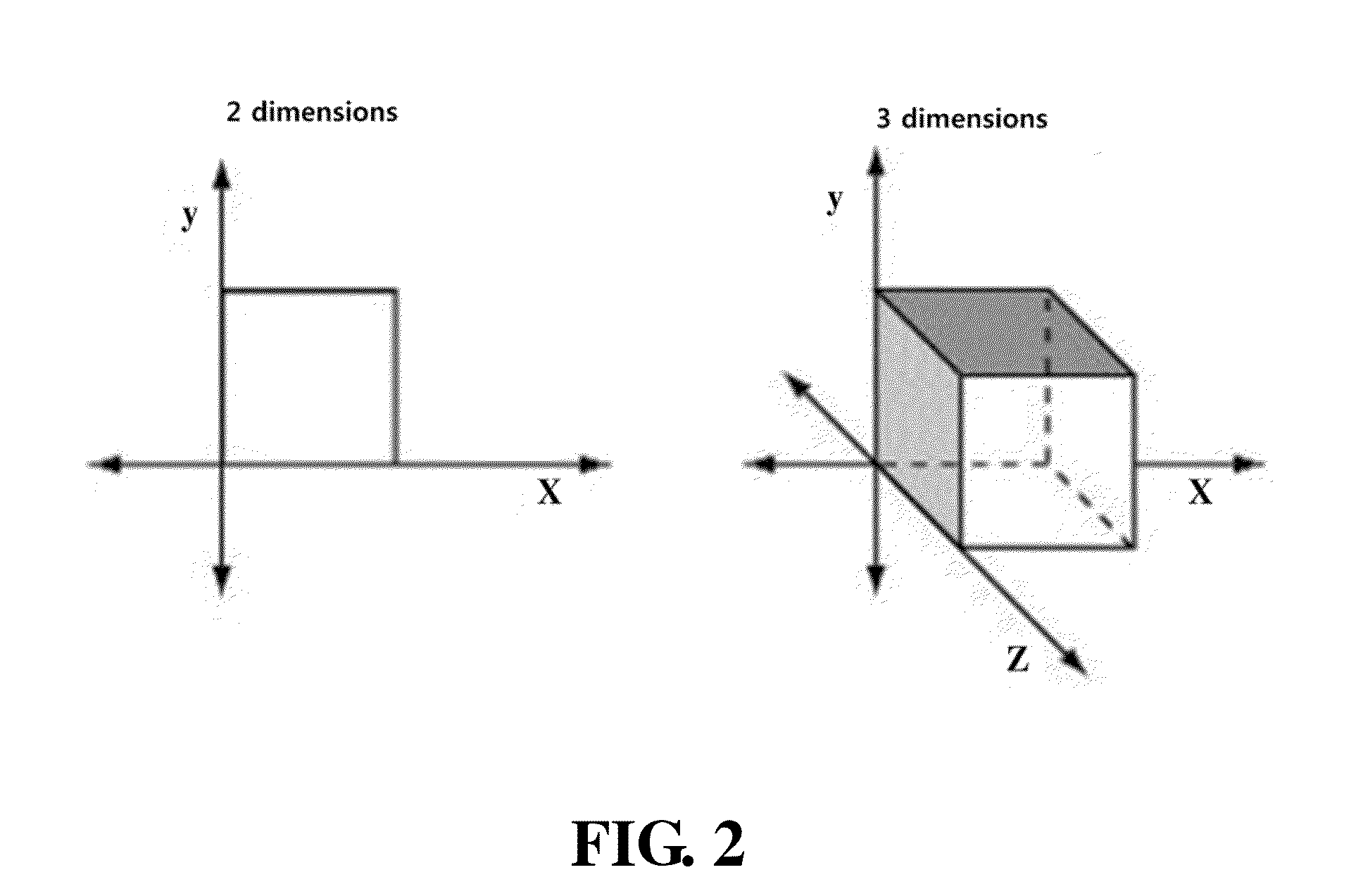
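The display-device abstract above calculates a rotation angle from the target orientation and the current camera posture, then turns that angle into a rotation instruction. A minimal Python sketch of that step follows, assuming both orientations are single pan angles in degrees. The function names, the dictionary layout of the instruction, and the convention that a positive angle means "right" are all hypothetical and do not come from the patent.

```python
def rotation_angle(target_deg: float, camera_deg: float) -> float:
    """Shortest signed rotation in degrees from the camera heading to the target.

    The result lies in [-180, 180), so the camera never turns the long way round.
    """
    return (target_deg - camera_deg + 180.0) % 360.0 - 180.0


def rotation_instruction(target_deg: float, camera_deg: float) -> dict:
    """Build a hypothetical rotation instruction for the camera motor."""
    angle = rotation_angle(target_deg, camera_deg)
    # Assumed convention: positive angles turn right, negative angles turn left.
    return {"direction": "right" if angle >= 0 else "left", "degrees": abs(angle)}


# Sound source located at 350 degrees while the camera faces 30 degrees.
instruction = rotation_instruction(350.0, 30.0)
```

Wrapping the difference into [-180, 180) matters when the bearing crosses 0 degrees: here the camera turns 40 degrees left instead of 320 degrees right.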

Method and recorded medium for providing 3D information service

Active | US20130174098A1 | Improve legibility | Improve depth perception | 2D-image generation | Stereoscopic systems | Rotational axis | Spatial perception

A method of providing a 3D information service at a user terminal includes: receiving a first request of a user for displaying information; and displaying information elements, which have different depths along the Z axis orthogonal to a screen (XY plane), by rotating the information elements about any one of the X axis and the Y axis, where the rotational axis of each of the information elements is set at different points on the YZ plane or the XZ plane. According to certain embodiments of the invention, the information elements on a screen may be shown as planar elements in a still screen for greater legibility, but when the information elements are in motion, such as for changing the screen or moving a content element, the motion is provided with differing speeds according to depth, thereby providing a sense of spatial perception unique to 3-dimensional images.

Owner:ALTIMEDIA CORP

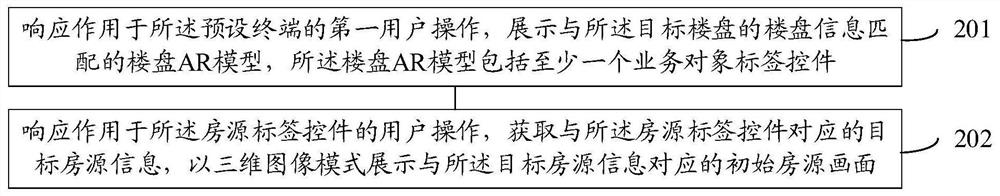

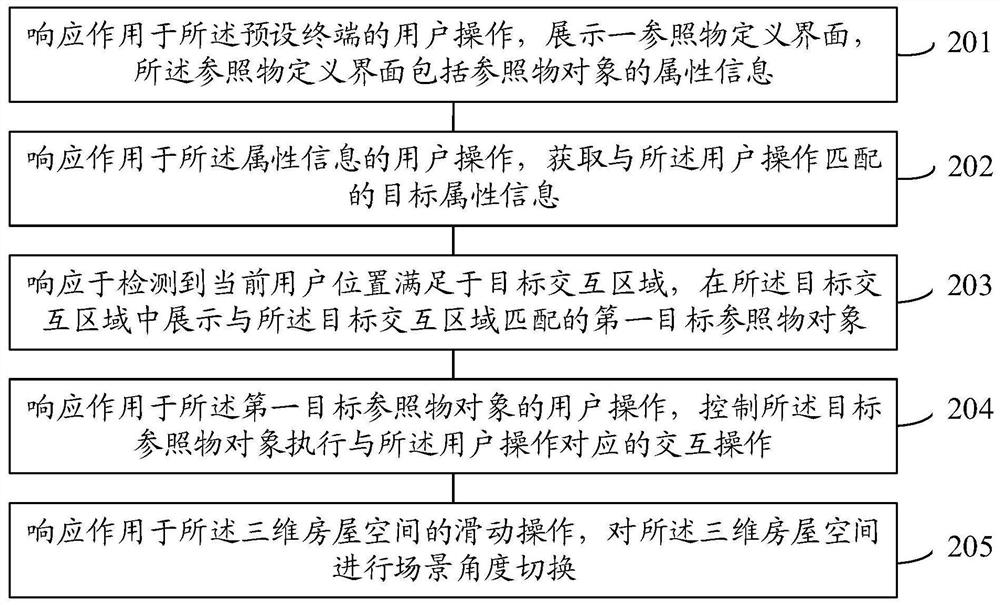

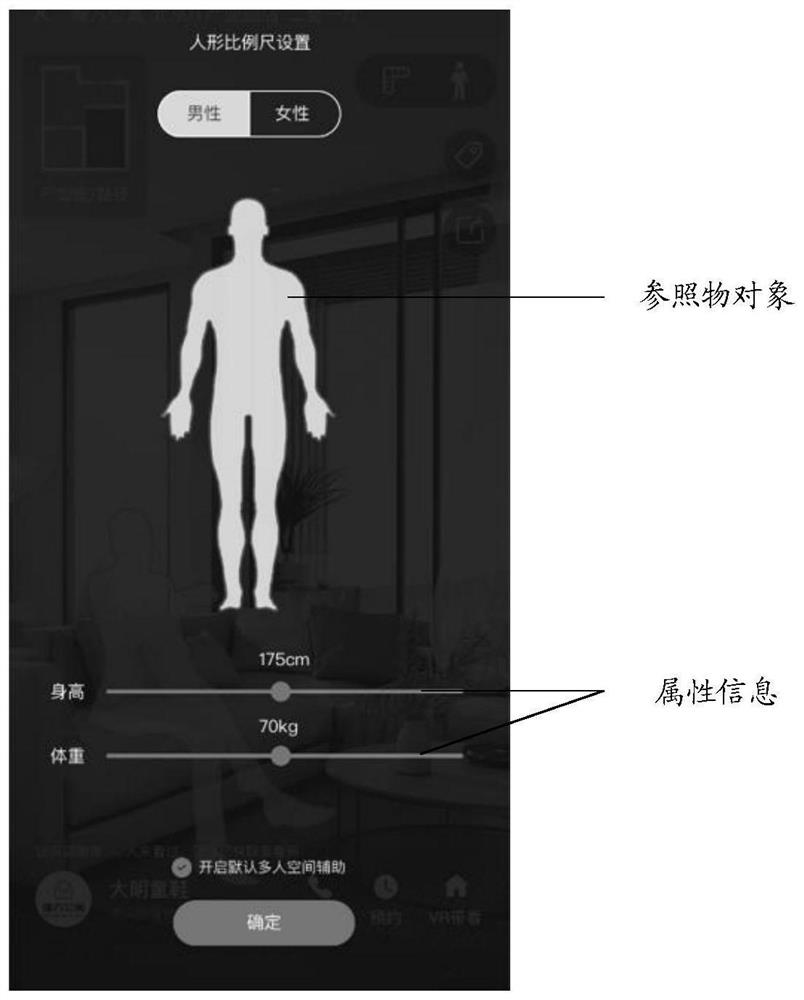
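The 3D information service abstract above moves information elements at differing speeds according to their depth along the Z axis. A minimal Python sketch of one way to do this follows, assuming that nearer elements (smaller depth) move further per frame and that the shift falls off as 1 / (1 + depth). The abstract does not state a formula, so both the falloff and the name `parallax_offsets` are illustrative assumptions.

```python
from typing import List, Sequence


def parallax_offsets(depths: Sequence[float], base_shift: float) -> List[float]:
    """Per-element screen shift for one animation step.

    depths: non-negative Z depths, 0.0 being the screen plane (assumed convention).
    base_shift: shift in pixels applied to an element at depth 0.
    """
    # Assumed falloff: deeper elements move less, which suggests distance.
    return [base_shift / (1.0 + z) for z in depths]


# Three elements at increasing depth, with a 100-pixel shift at the screen plane.
offsets = parallax_offsets([0.0, 1.0, 3.0], 100.0)
```

With these inputs the elements shift by 100, 50, and 25 pixels, so the deepest element appears to move slowest during a screen change.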

House resource interaction method and device

Pending | CN112051956A | Increase spatial awareness | Buying/selling/leasing transactions | Input/output processes for data processing | Spatial perception | Engineering

The embodiment of the invention provides a house resource interaction method and device. A user terminal can establish a three-dimensional house space according to house resource data of a physical house resource and display the three-dimensional house space through a graphical user interface. The three-dimensional house space can comprise at least one interaction area, and each interaction area can correspond to at least one reference object. When the user browses the three-dimensional house space, the terminal can, in response to detecting that the current position information of the user matches a target interaction area, display the corresponding target reference object in the target interaction area. Because a reference object is provided in the three-dimensional house space while the user browses the house resource, the user's spatial perception of the current house resource can be effectively improved.

Owner:BEIJING 58 INFORMATION TTECH CO LTD
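The house resource abstract above displays a reference object once the user's current position falls within a target interaction area. A minimal Python sketch of that lookup follows, assuming interaction areas are axis-aligned rectangles on the floor plan. The class `InteractionArea`, the function `reference_object_for`, and the sample rooms and objects are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class InteractionArea:
    """Hypothetical rectangular interaction area on the floor plan (meters)."""
    name: str
    min_corner: Point
    max_corner: Point
    reference_object: str

    def contains(self, position: Point) -> bool:
        x, y = position
        return (self.min_corner[0] <= x <= self.max_corner[0]
                and self.min_corner[1] <= y <= self.max_corner[1])


def reference_object_for(position: Point,
                         areas: Sequence[InteractionArea]) -> Optional[str]:
    """Return the reference object of the first area containing the position."""
    for area in areas:
        if area.contains(position):
            return area.reference_object
    return None  # The user is outside every interaction area.


# Hypothetical sample data: two rooms, each with one reference object.
areas = [
    InteractionArea("bedroom", (0.0, 0.0), (3.0, 4.0), "double bed"),
    InteractionArea("living room", (3.0, 0.0), (8.0, 4.0), "three-seat sofa"),
]
shown = reference_object_for((5.0, 2.0), areas)
```

A position on a shared boundary matches the first area in the list, so the order of `areas` decides ties in this sketch.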

