233 results about "Spatial parameter" patented technology

Spatial Parameters. A spatial parameter specifies whether to write geometry (planar data) or geography (geodetic data) when writing to tables. It works only in combination with the Spatial Column parameter, which specifies the geometry or geography column to use when writing to tables.
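To make the geometry versus geography distinction concrete, the sketch below compares a planar distance with a geodetic (great-circle) distance. The function names, the spherical Earth model and the radius constant are illustrative assumptions; they are not part of any table writer's API.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # assumed mean Earth radius for a spherical model


def planar_distance(p, q):
    """Euclidean distance between (x, y) points, as for planar geometry data."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def geodetic_distance(p, q):
    """Great-circle distance in metres between (lon, lat) points given in
    degrees, as for geodetic geography data (haversine formula)."""
    lon1, lat1, lon2, lat2 = map(math.radians, (p[0], p[1], q[0], q[1]))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
```

The same coordinate pair therefore yields different distances depending on whether it is interpreted as planar or geodetic data, which is why the choice has to be made explicitly when writing.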

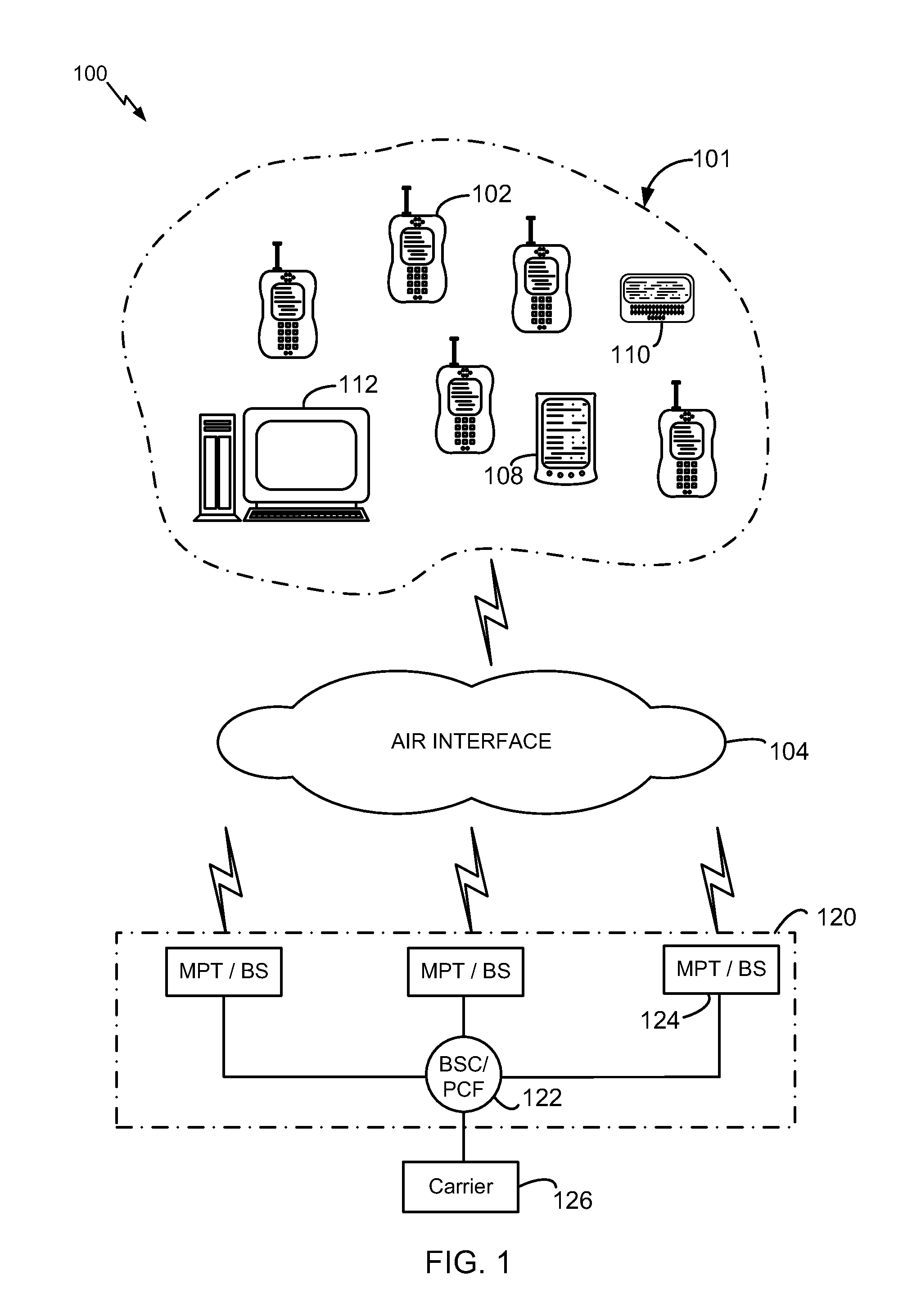

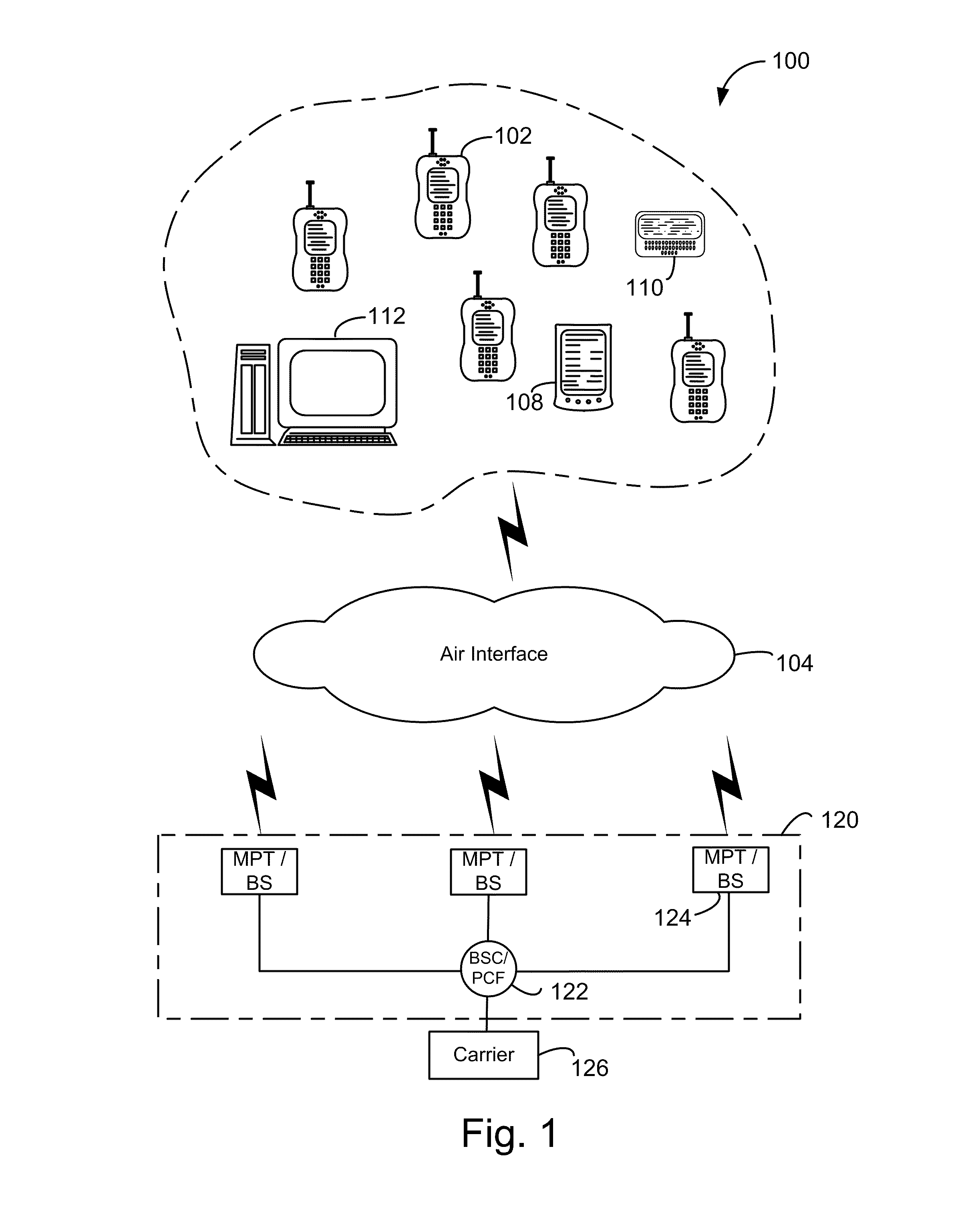

Optimizing content and communication in multiaccess mobile device exhibiting communication functionalities responsive of tempo spatial parameters

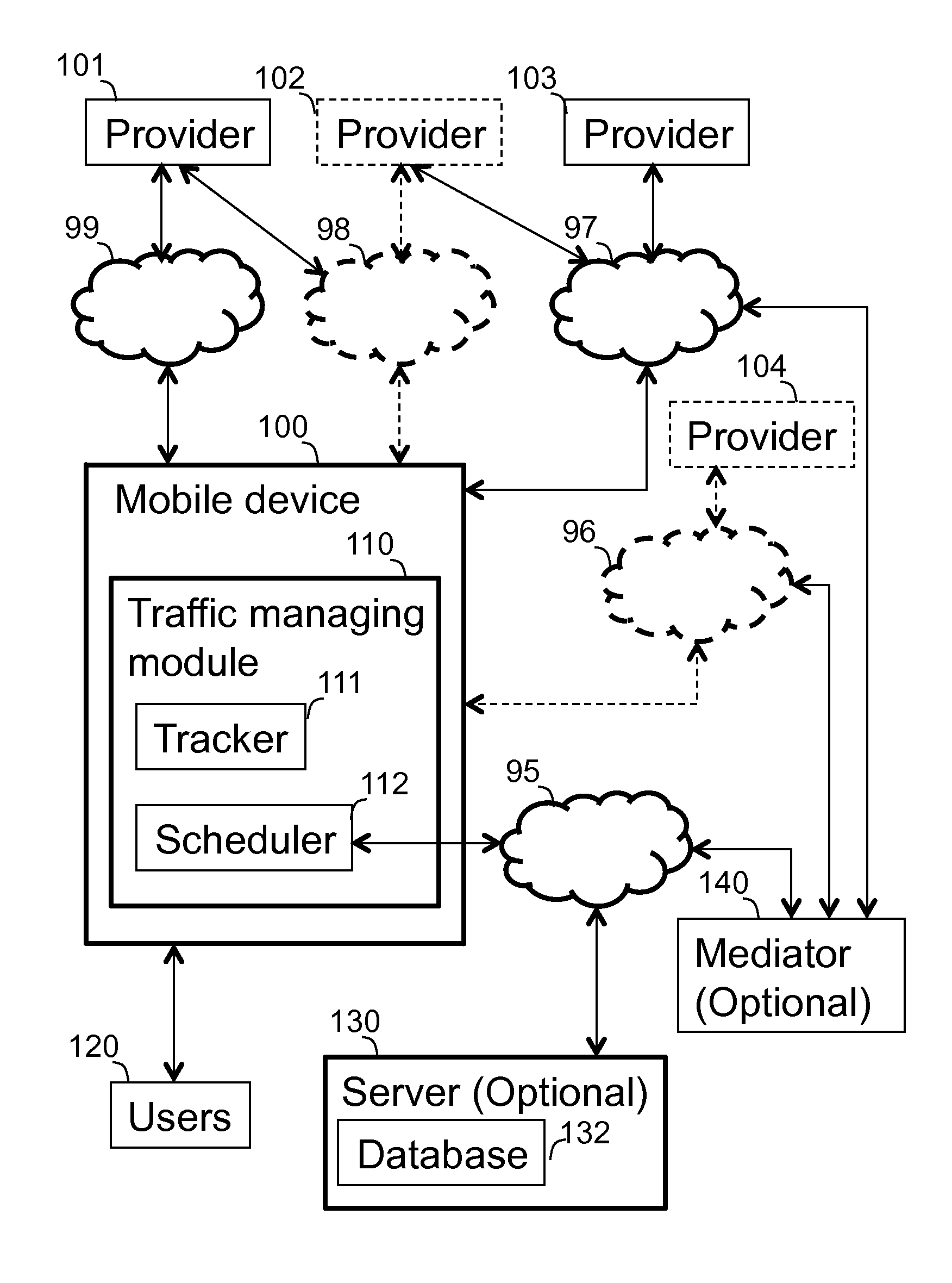

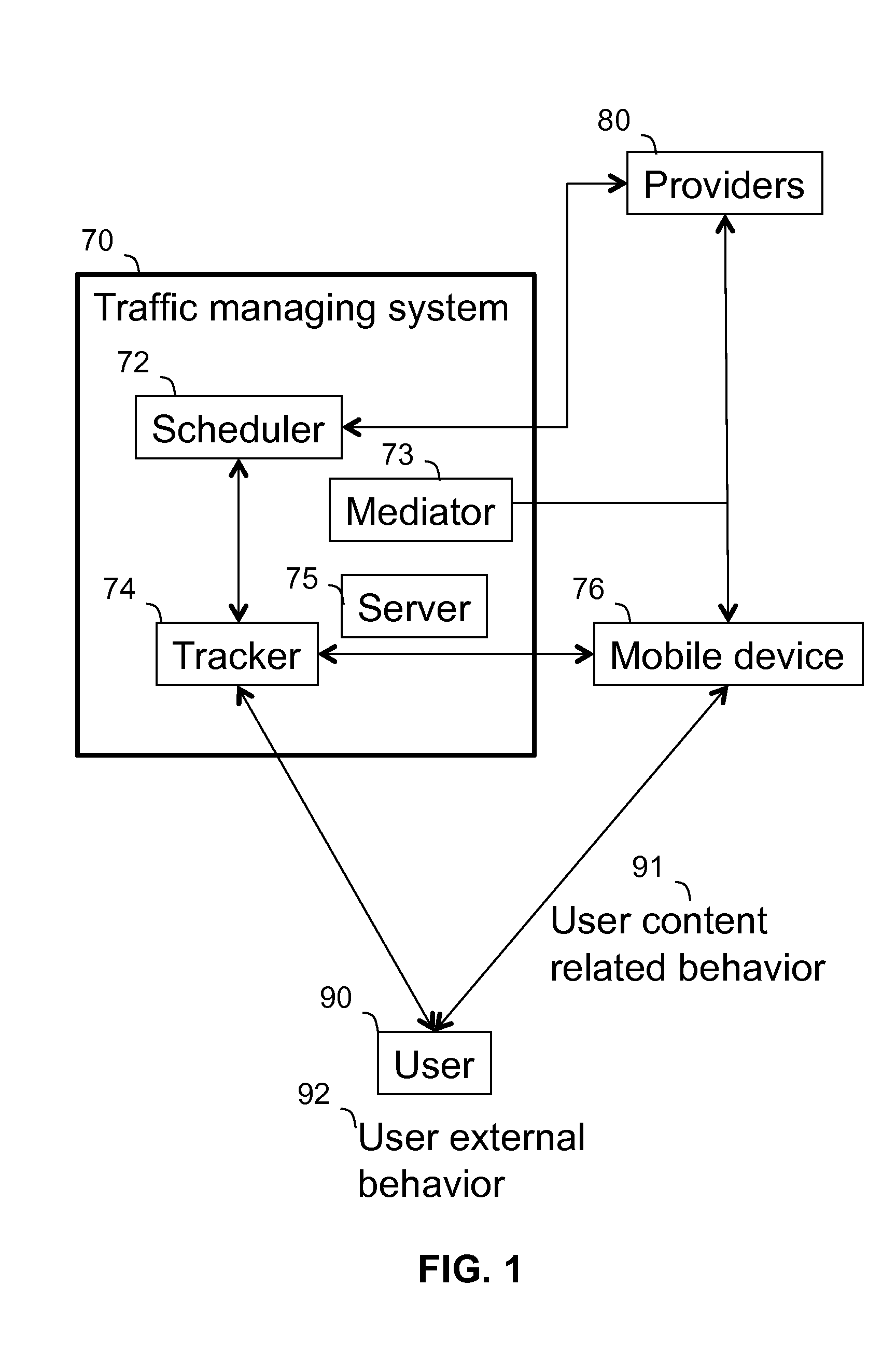

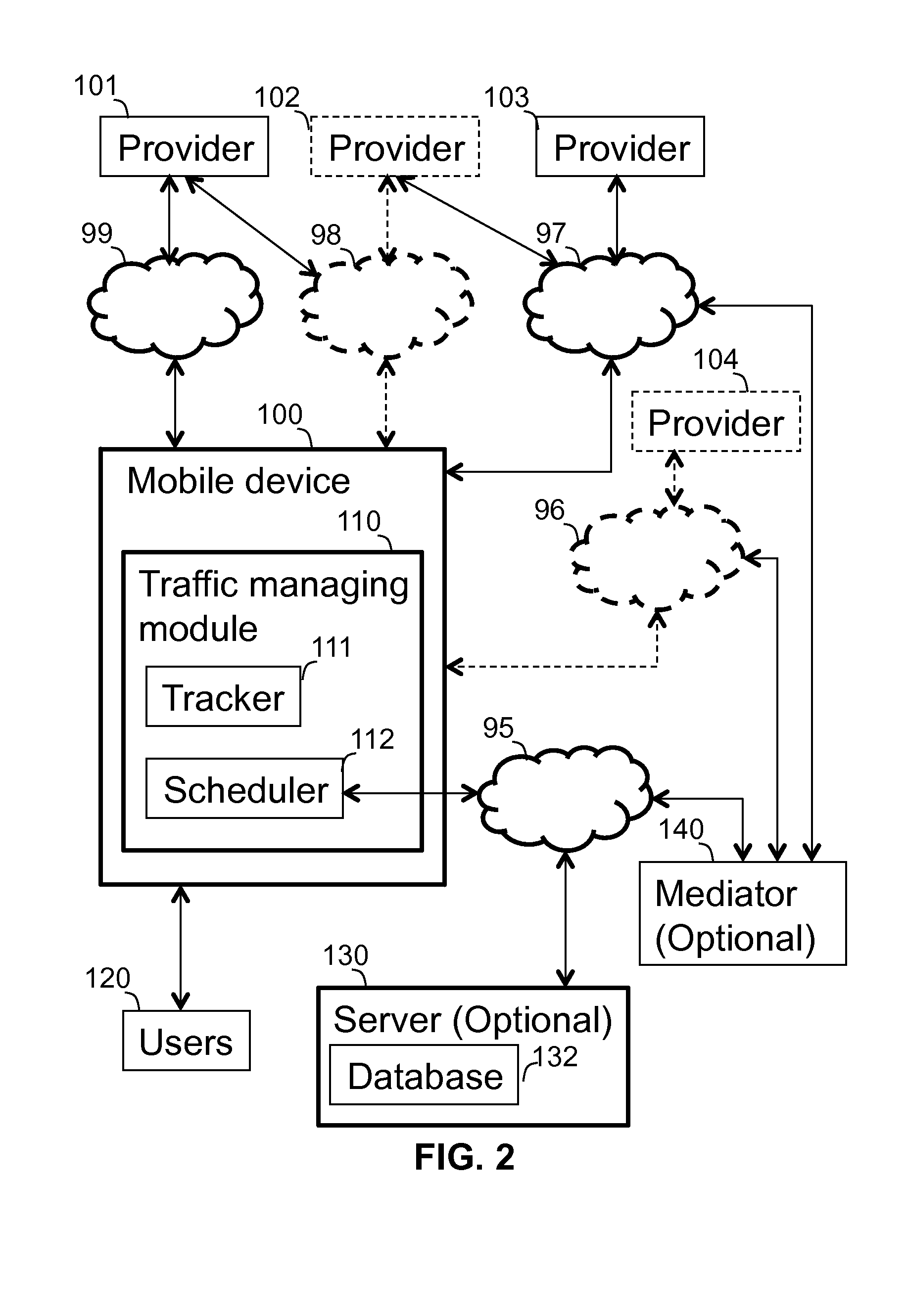

A content and traffic managing system operatively associated with a mobile device exhibiting communication functionality, and a computer-implemented method of managing traffic of such a device. The mobile device is connectable to users and to content providers via communication links. The system tracks various parameters over time and schedules communication related to predefined or projected content in response to the following: users' content-related behavior, users' communication behavior, users' external behavior, and parameters of communication links. The method comprises: (i) tracking users' content-related behavior, communication behavior and external behavior over time; (ii) tracking parameters of communication links over time; (iii) scheduling and initiating communication related to predefined or projected content, responsive to the above-mentioned criteria, at time slots selected such that the communication is performed in view of users' predefined or projected preferences in accordance with the parameters of communication links.

Owner:VELOCEE

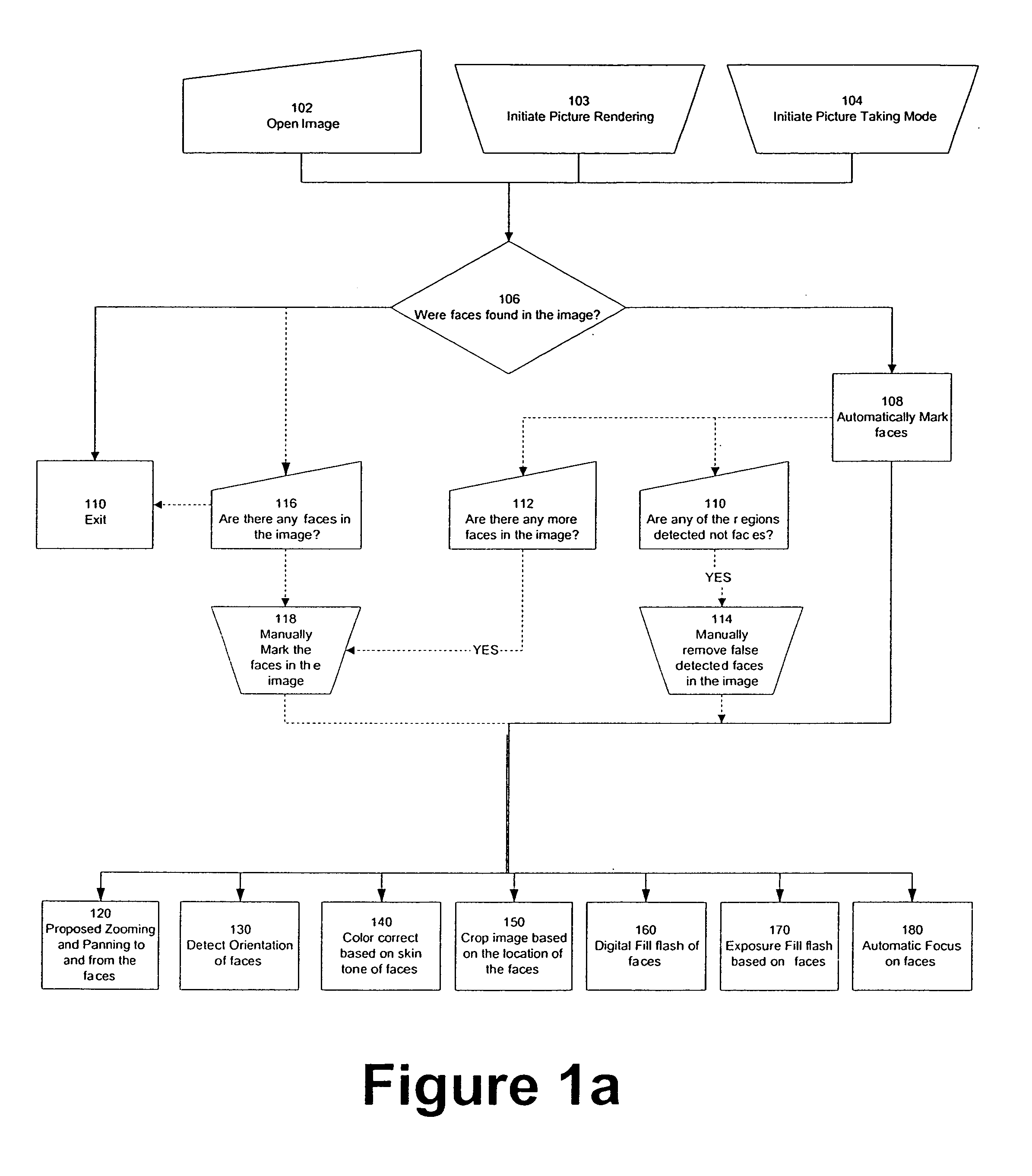

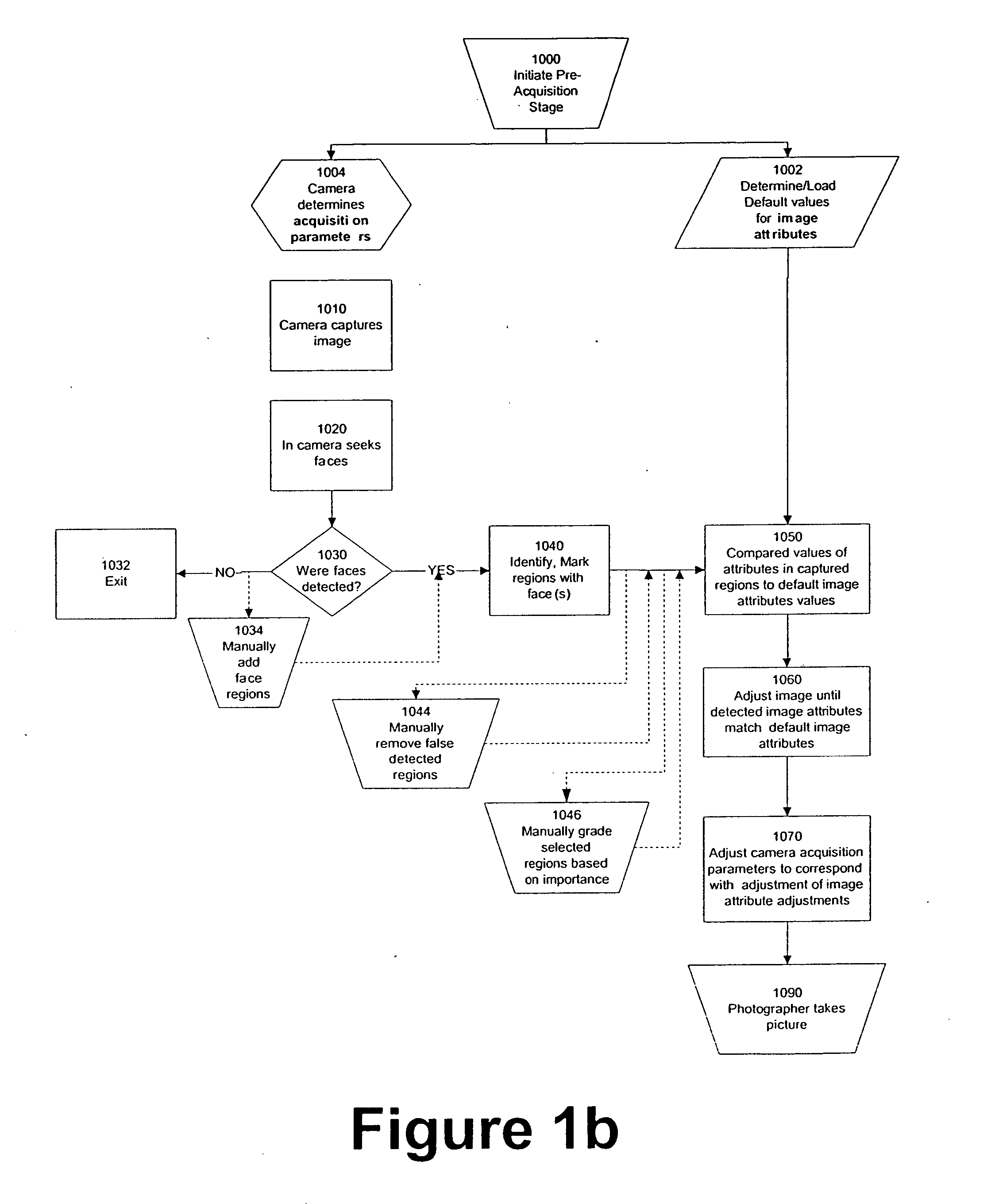

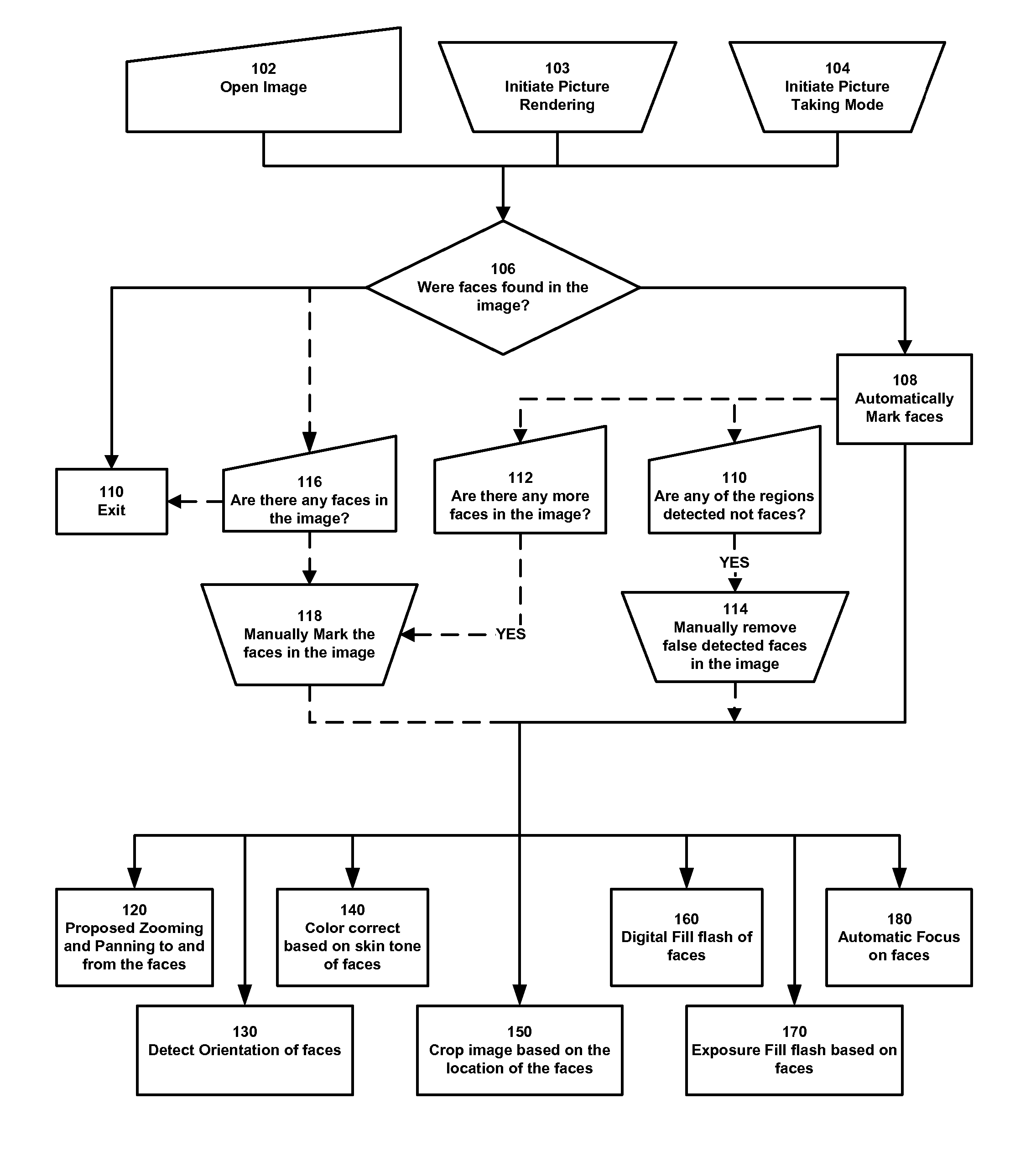

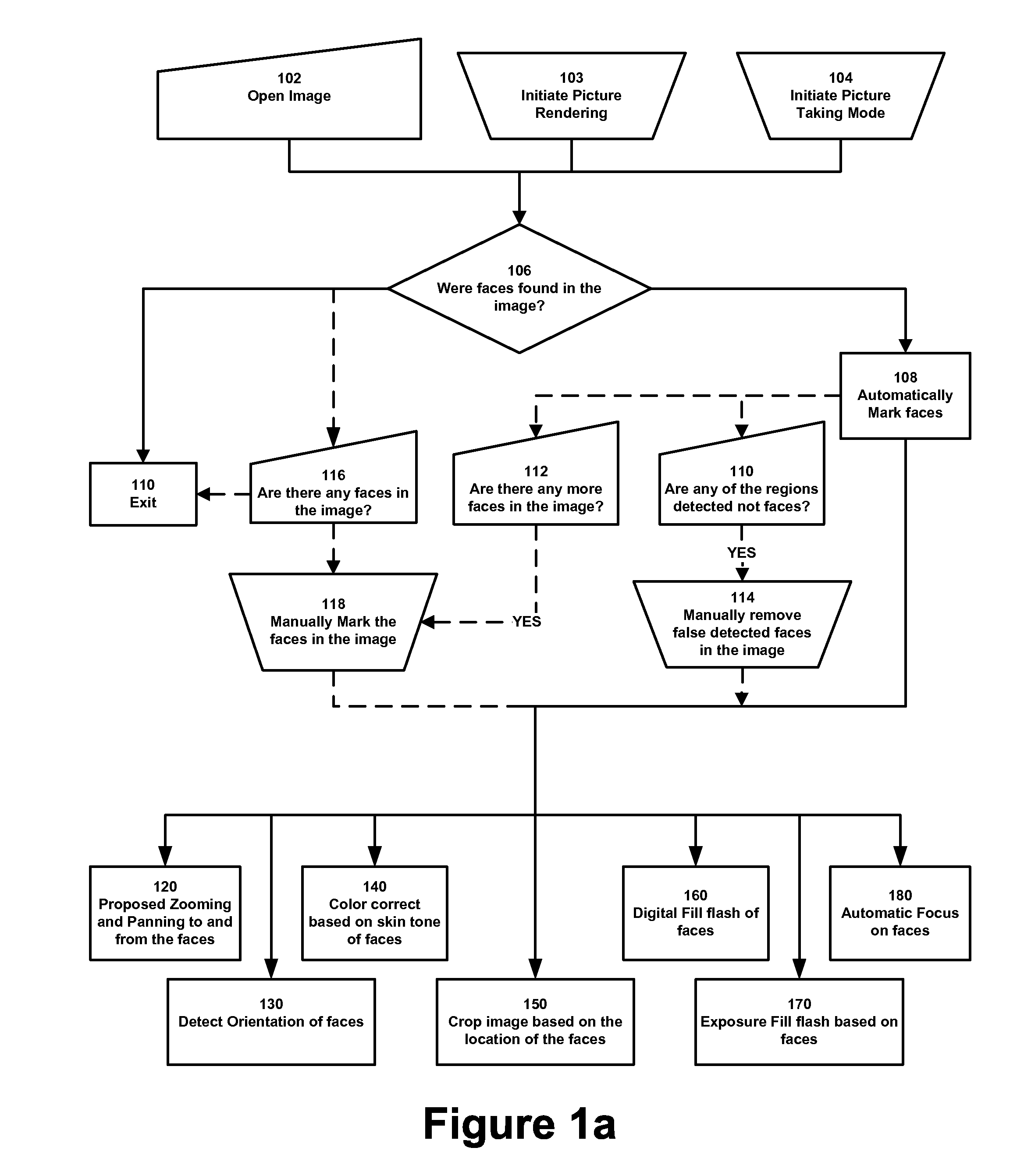

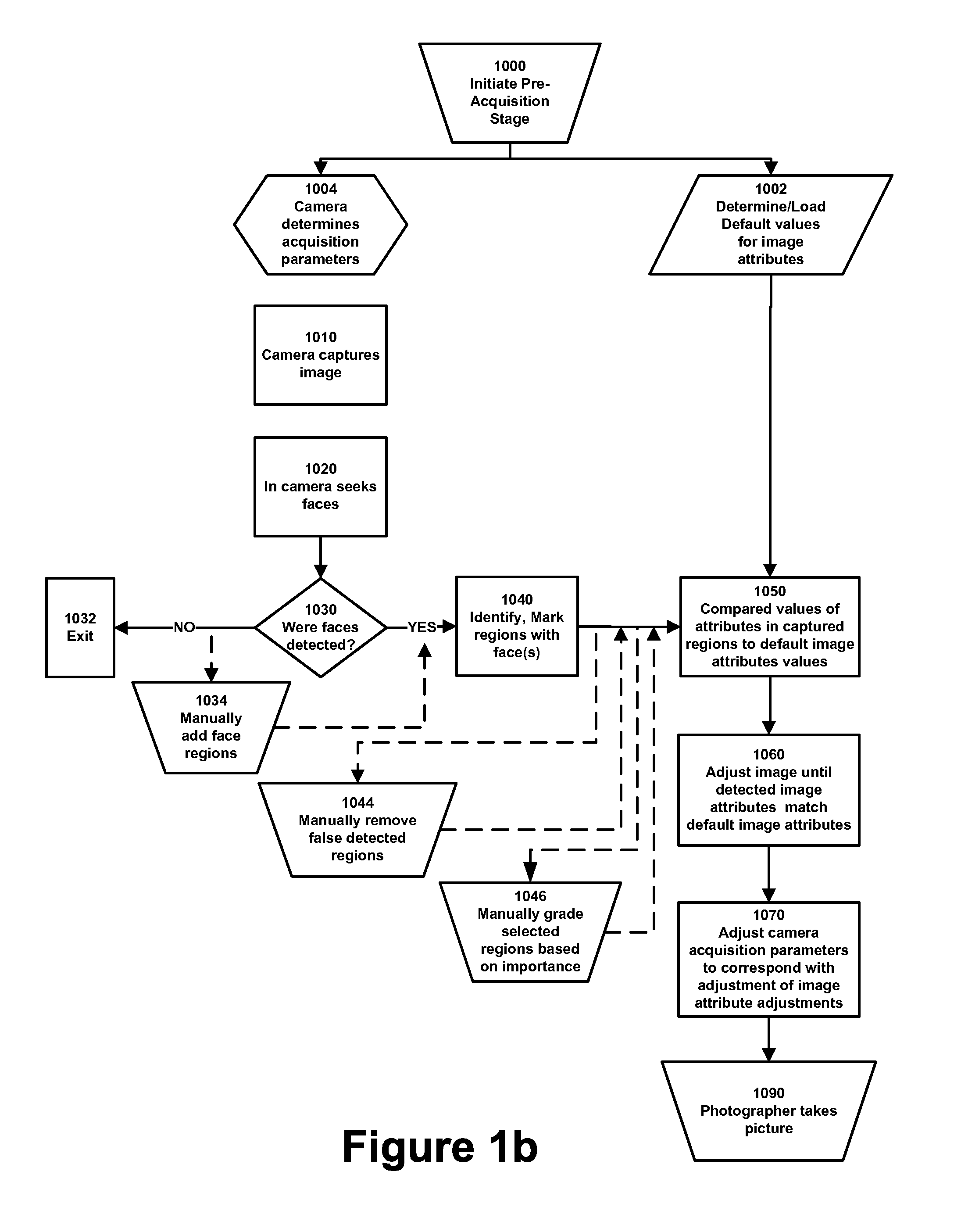

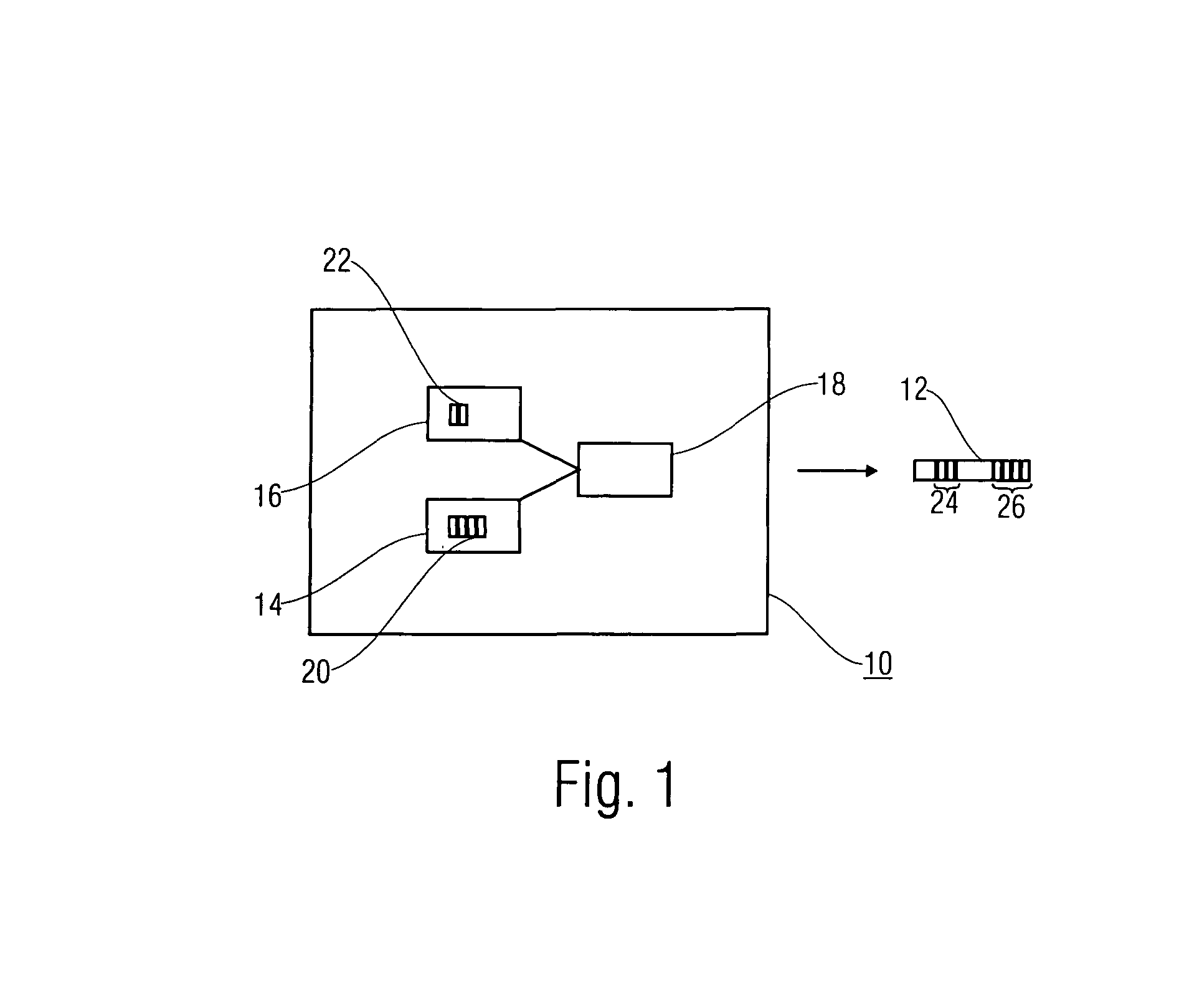

Modification of viewing parameters for digital images using face detection information

Active · US20060204034A1 · Generate image · Image enhancement · Television system details · Face detection · Animation

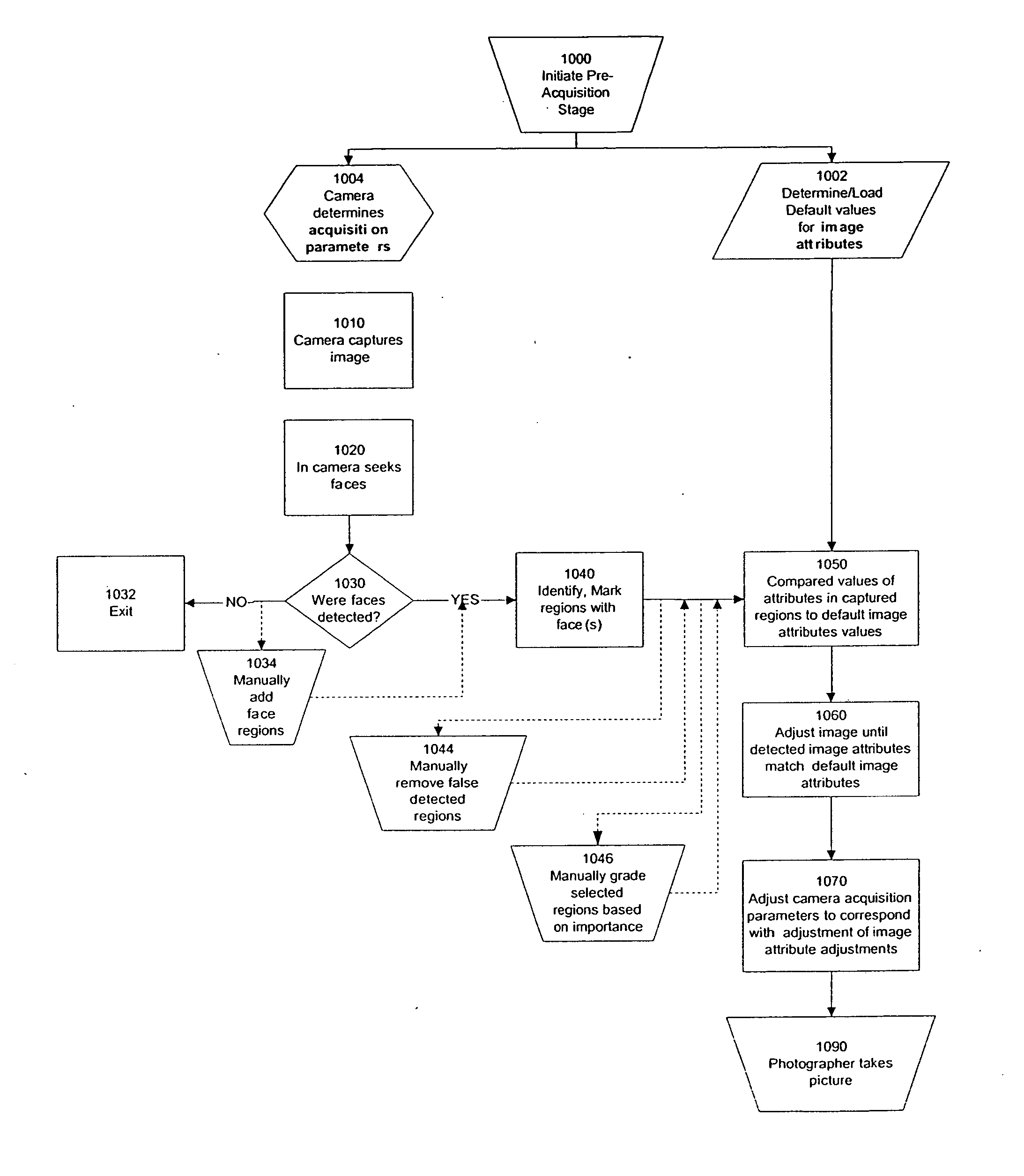

A method of modifying the viewing parameters of digital images uses face detection to achieve desired spatial parameters based on one or more sub-groups of pixels that correspond to one or more facial features of the face. Such methods may be used for animating still images and for automating and streamlining applications such as the creation of slide shows and screen savers of images containing faces.

Owner:FOTONATION LTD

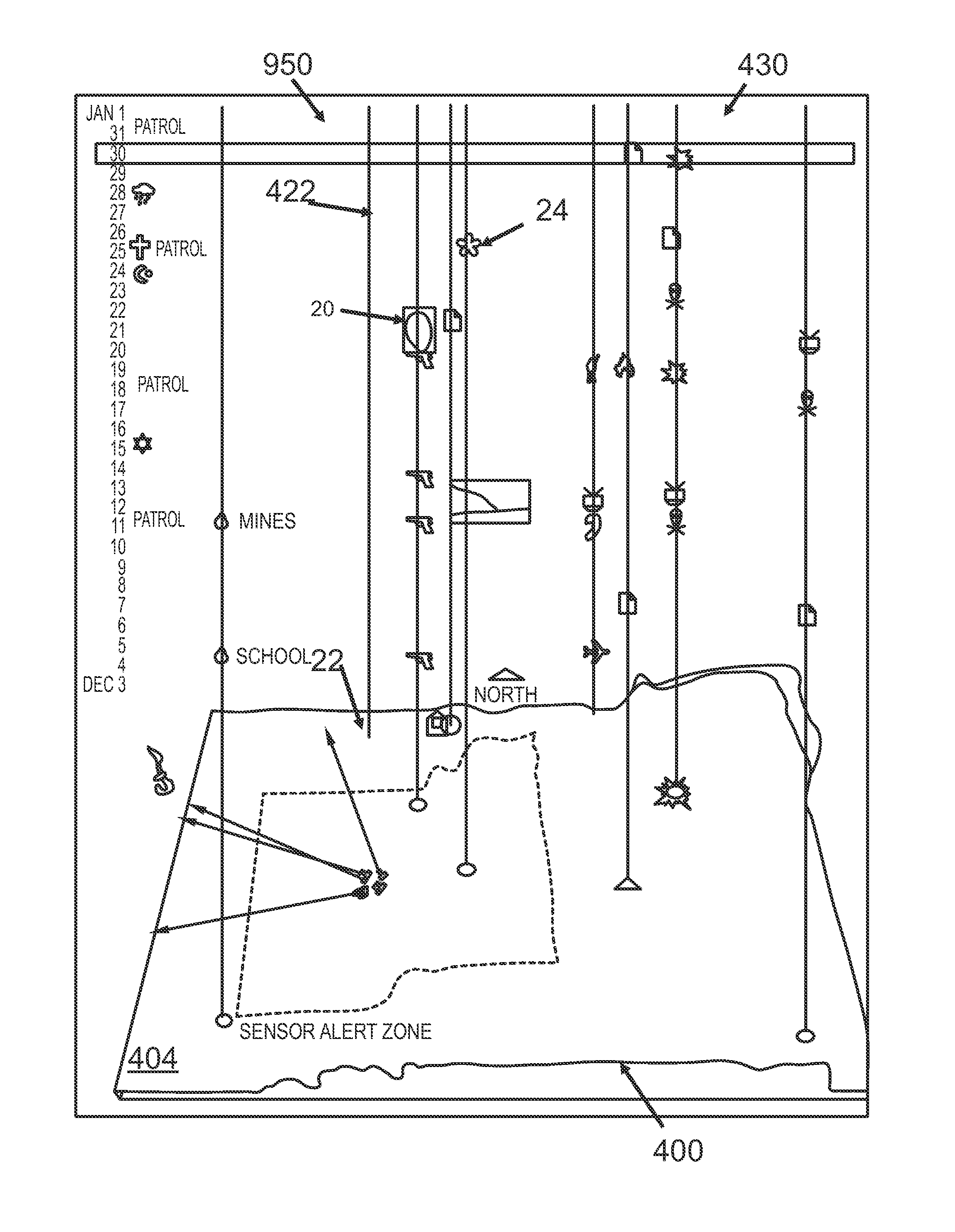

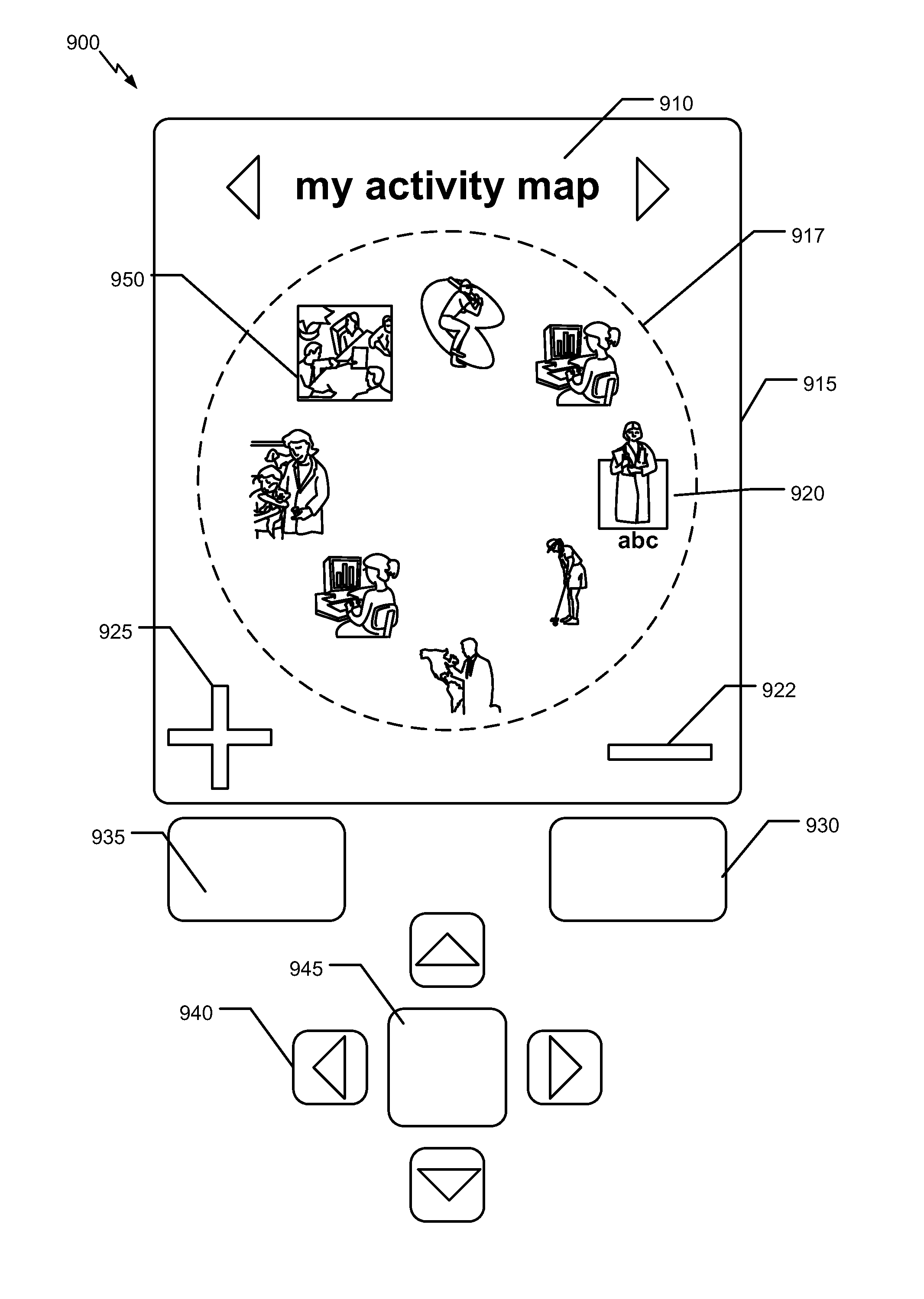

System and method for visualizing connected temporal and spatial information as an integrated visual representation on a user interface

Active · US20100185984A1 · 2D-image generation · Geographical information databases · Visual perception · Computer science

A system and method for configuring the presentation of a plurality of presentation elements in a visual representation on a user interface, the presentation elements having both temporal and spatial parameters, the method comprising the steps of: defining a time bar with a time scale having time indicators as subdivisions of the time scale and having a first global temporal limit and a second global temporal limit of the time scale for defining a temporal domain of the presentation elements; defining a focus range of the time bar such that the focus range has a first local temporal limit and a second local temporal limit, wherein the first local temporal limit is greater than or equal to the first global temporal limit and the second local temporal limit is less than or equal to the second global temporal limit; defining a focus bar having a focus time scale having focus time indicators as subdivisions of the focus time scale and having the first and second local temporal limits as the extents of the focus time scale, such that the focus time scale is an expansion of the time scale; and displaying a set of presentation elements selected from the plurality of presentation elements based on the respective temporal parameter of each of the set of presentation elements being within the first and second local temporal limits.

Owner:PEN LINK LTD
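The focus-range selection described in the abstract above can be sketched as follows. This is a minimal illustration, not the patented implementation; the `Element` class and `select_in_focus` function are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """Hypothetical presentation element with temporal and spatial parameters."""
    name: str
    time: float       # temporal parameter
    position: tuple   # spatial parameter, e.g. (x, y)


def select_in_focus(elements, global_range, focus_range):
    """Return the elements whose time lies within the focus (local) range.

    The focus range must sit inside the global range, mirroring the
    constraint on local and global temporal limits in the abstract.
    """
    g_lo, g_hi = global_range
    f_lo, f_hi = focus_range
    if not (g_lo <= f_lo <= f_hi <= g_hi):
        raise ValueError("focus range must lie within the global range")
    return [e for e in elements if f_lo <= e.time <= f_hi]
```

Only the temporal parameter drives the selection here; the spatial parameter is carried along so the selected elements can still be placed on a map or scene.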

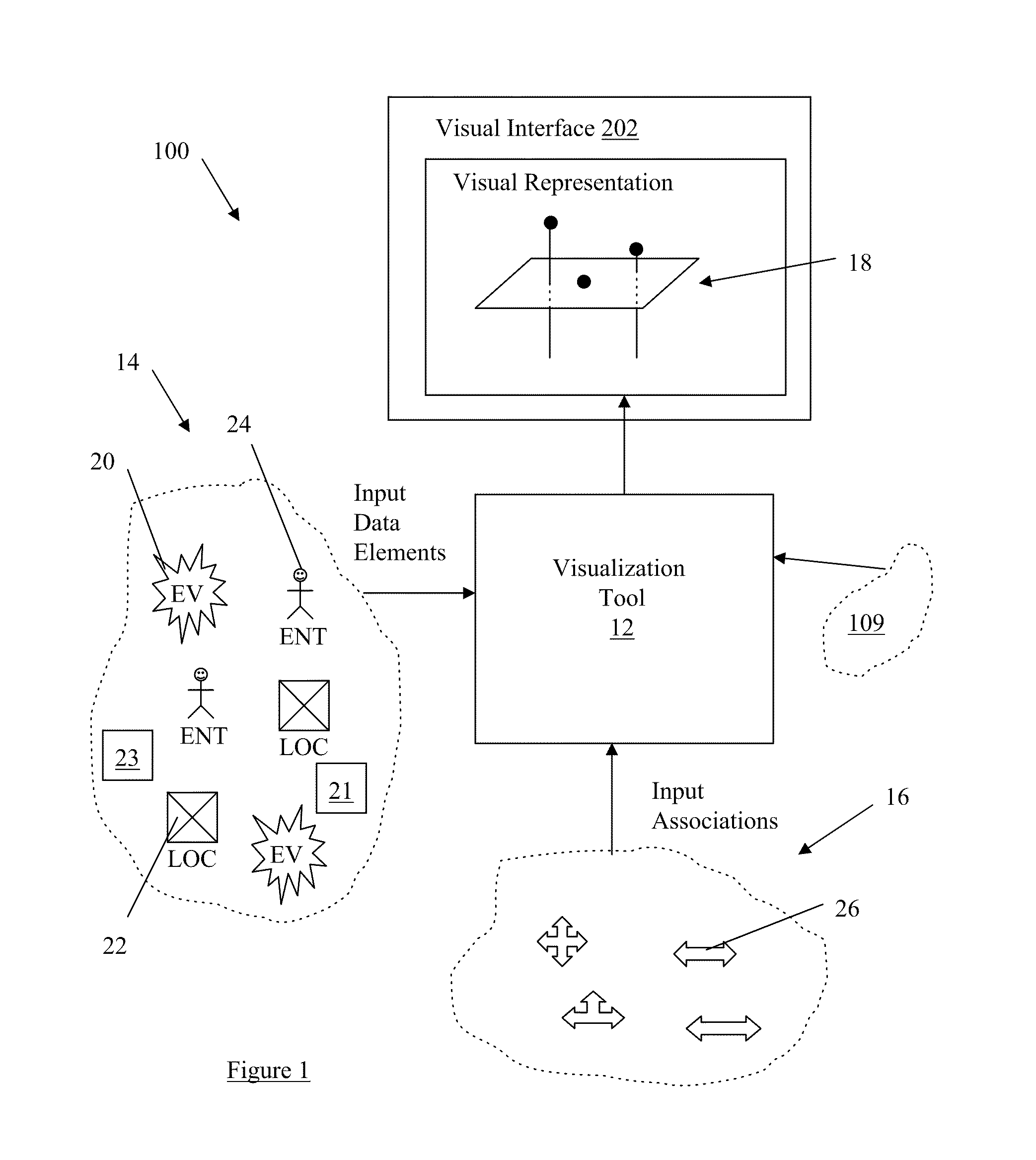

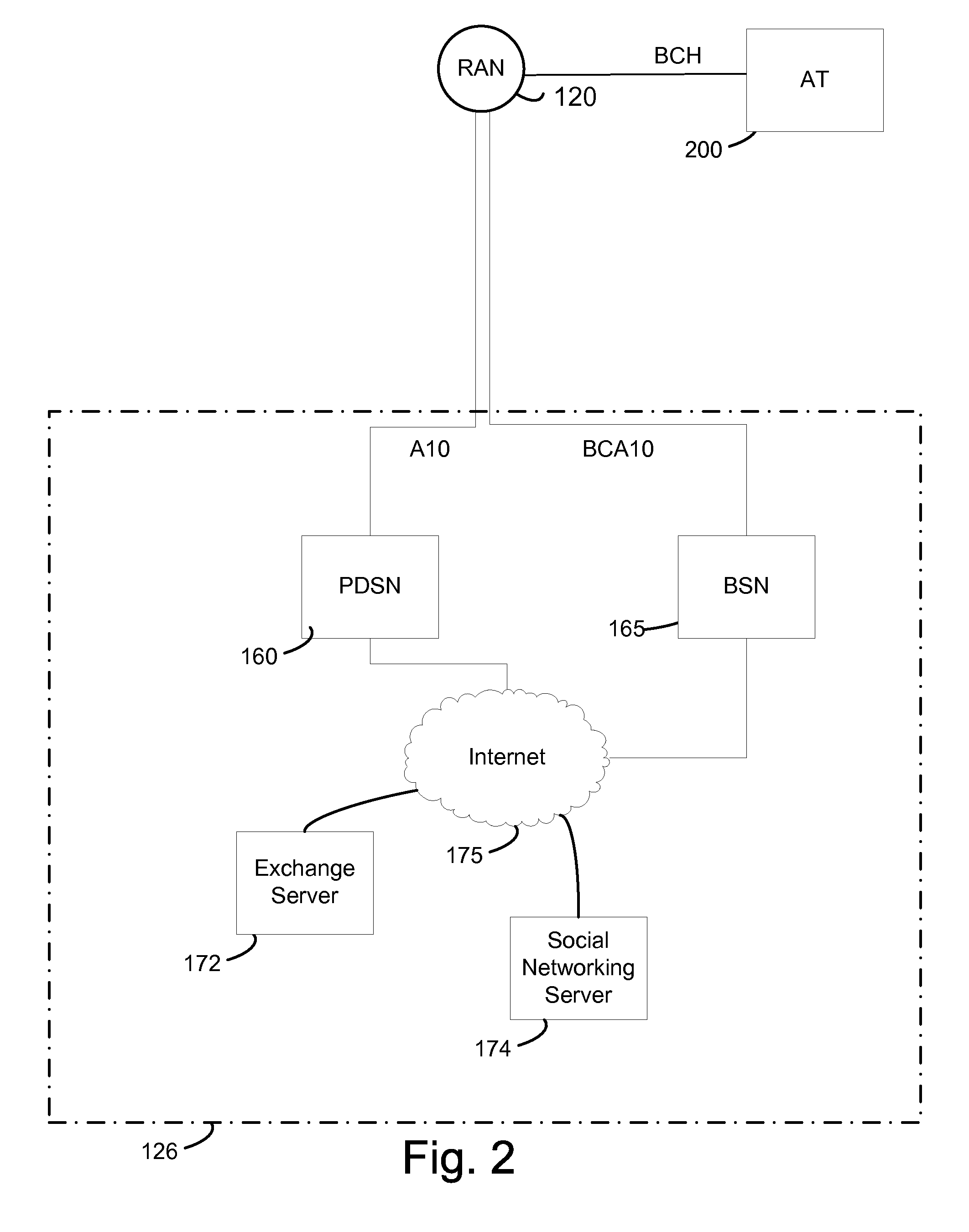

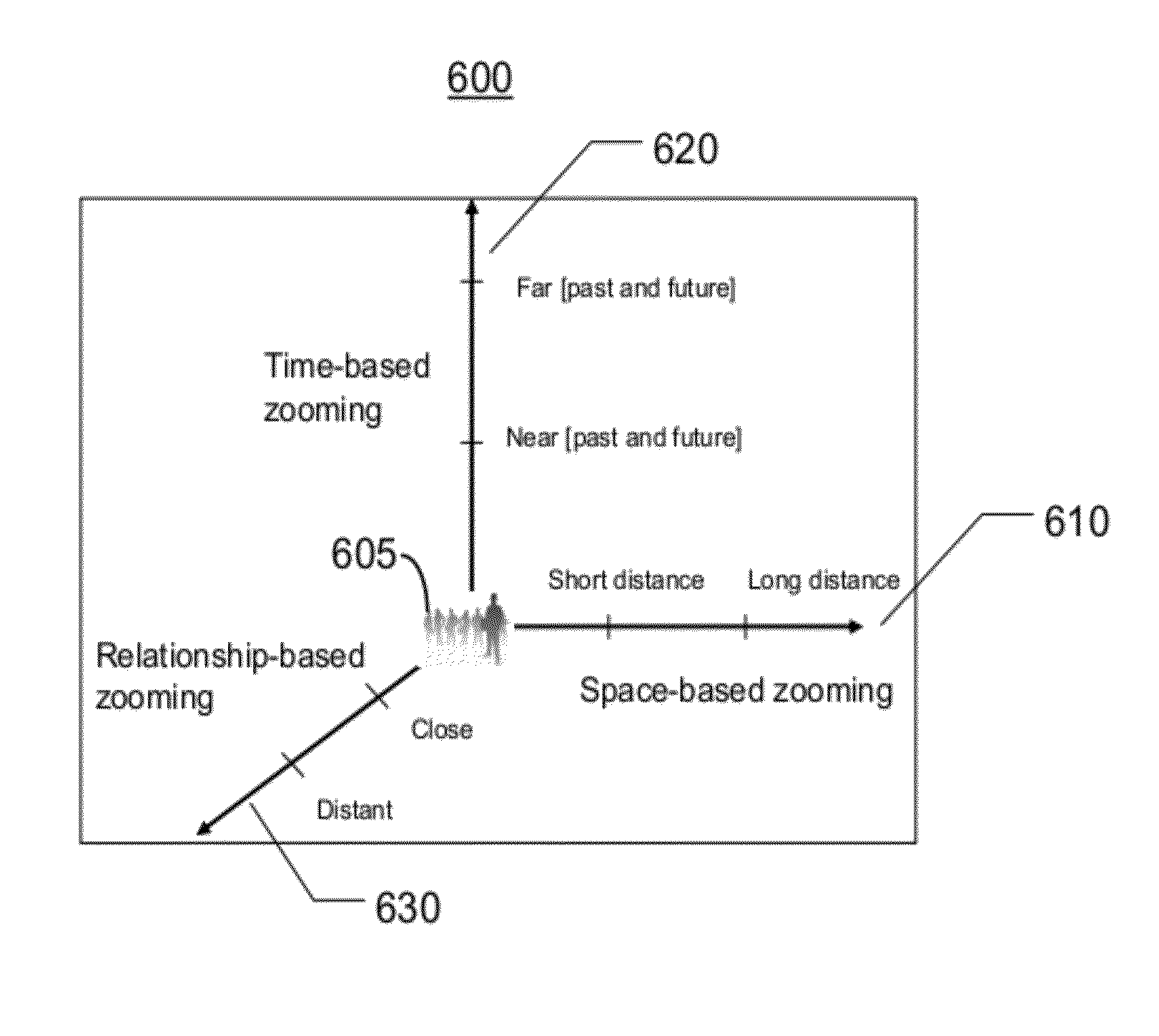

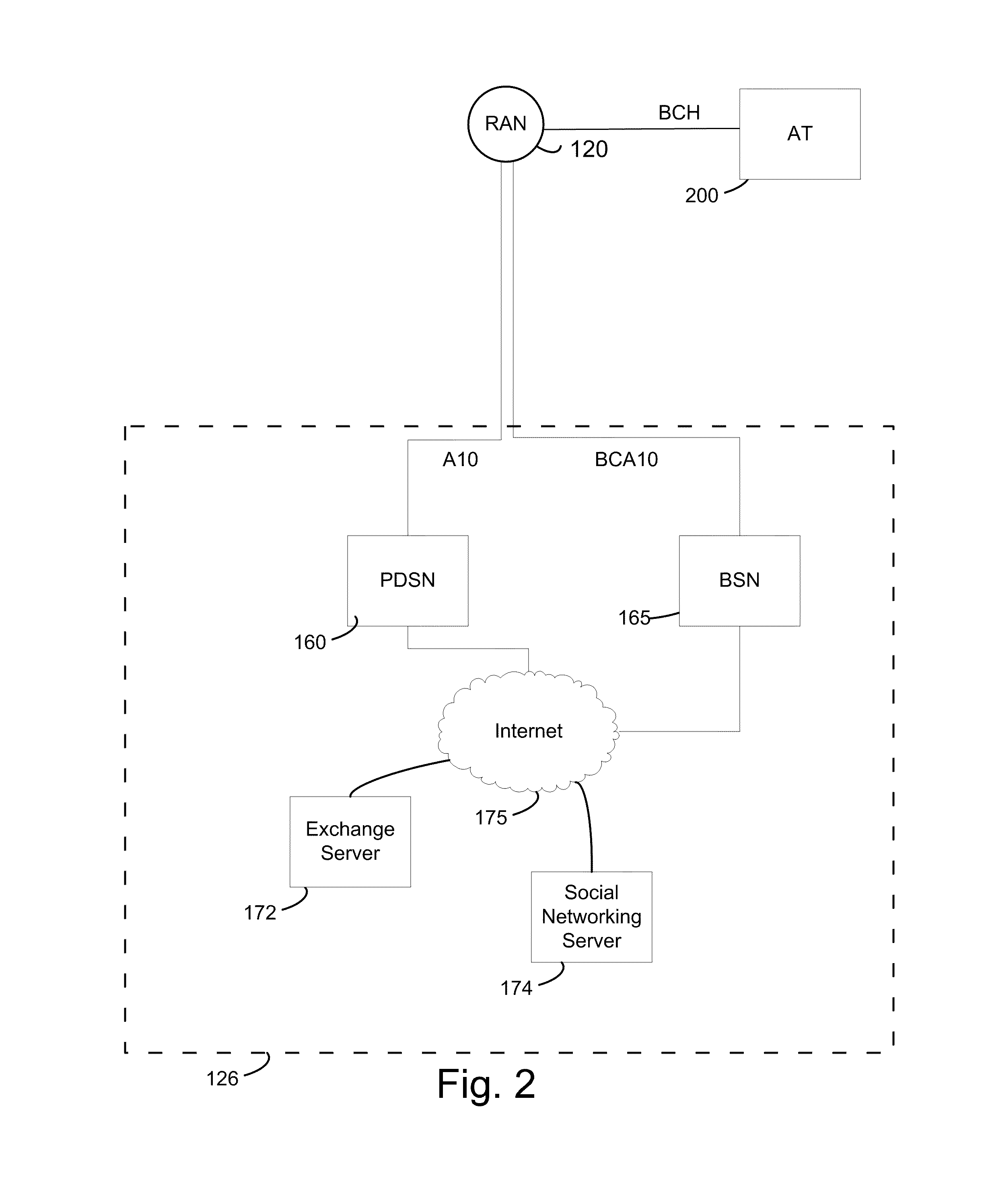

Integrated display and management of data objects based on social, temporal and spatial parameters

Active · US20100058196A1 · Multiple digital computer combinations · Substation equipment · Object based · Visual perception

An embodiment is directed to displaying information to a user of a communications device. The communications device receives a query including a social parameter, a temporal parameter and a spatial parameter relative to the user that are indicative of a desired visual representation of a set of data objects. The communications device determines degrees to which the social, temporal and spatial parameters of the query are related to each of the set of data objects in social, temporal and spatial dimensions, respectively. The communications device displays a first visual representation of at least a portion of the set of data objects to the user based on whether the determined degrees of relation in the social dimension, temporal dimension and spatial dimension satisfy the respective parameters of the query.

Owner:QUALCOMM INC

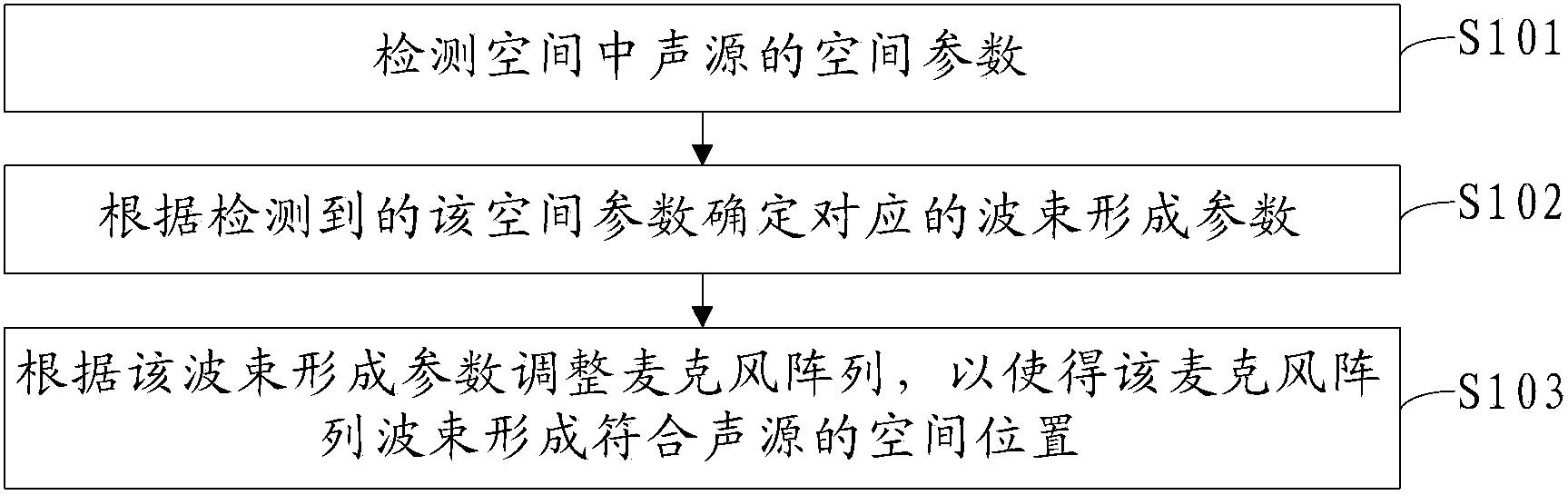

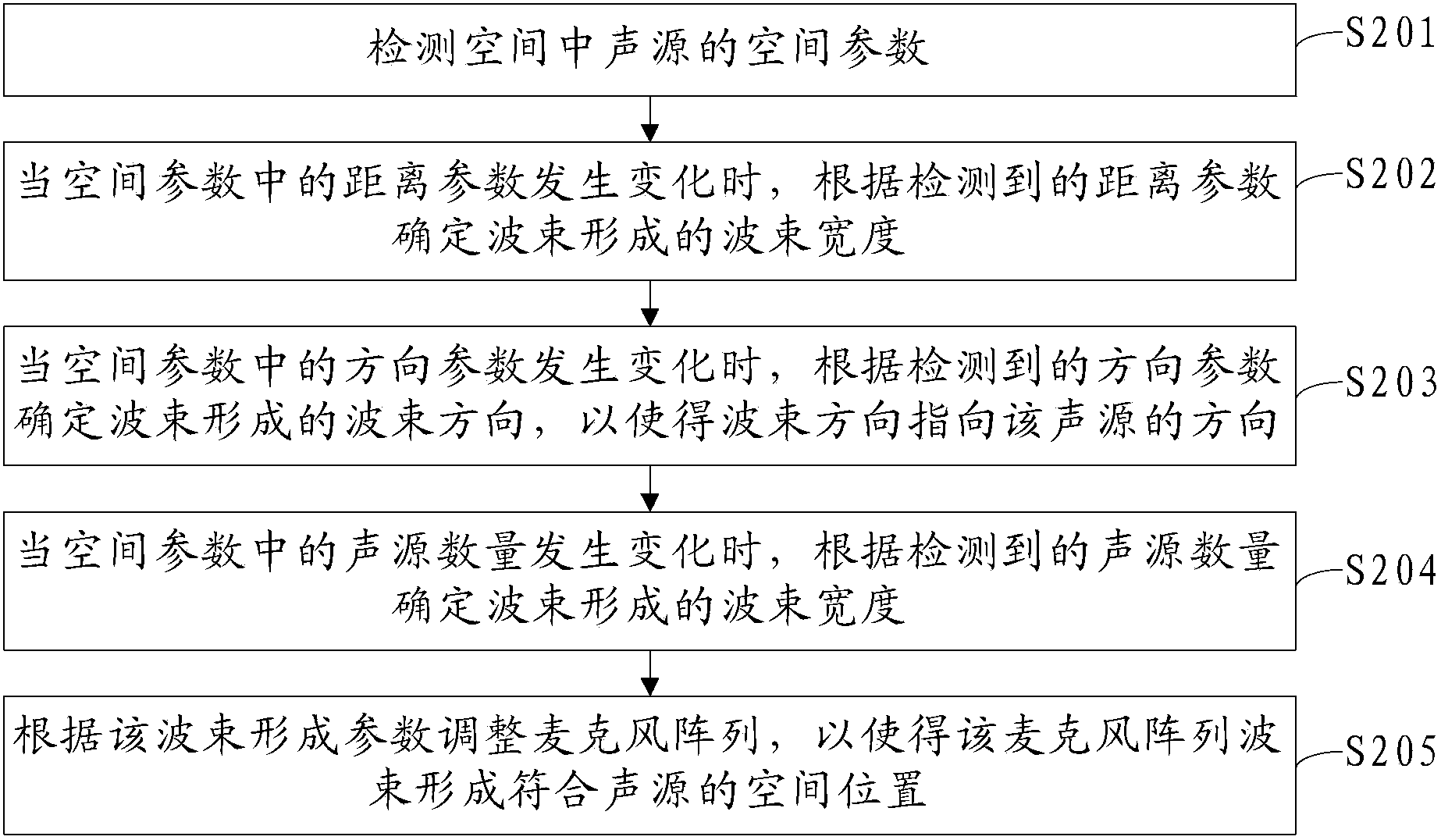

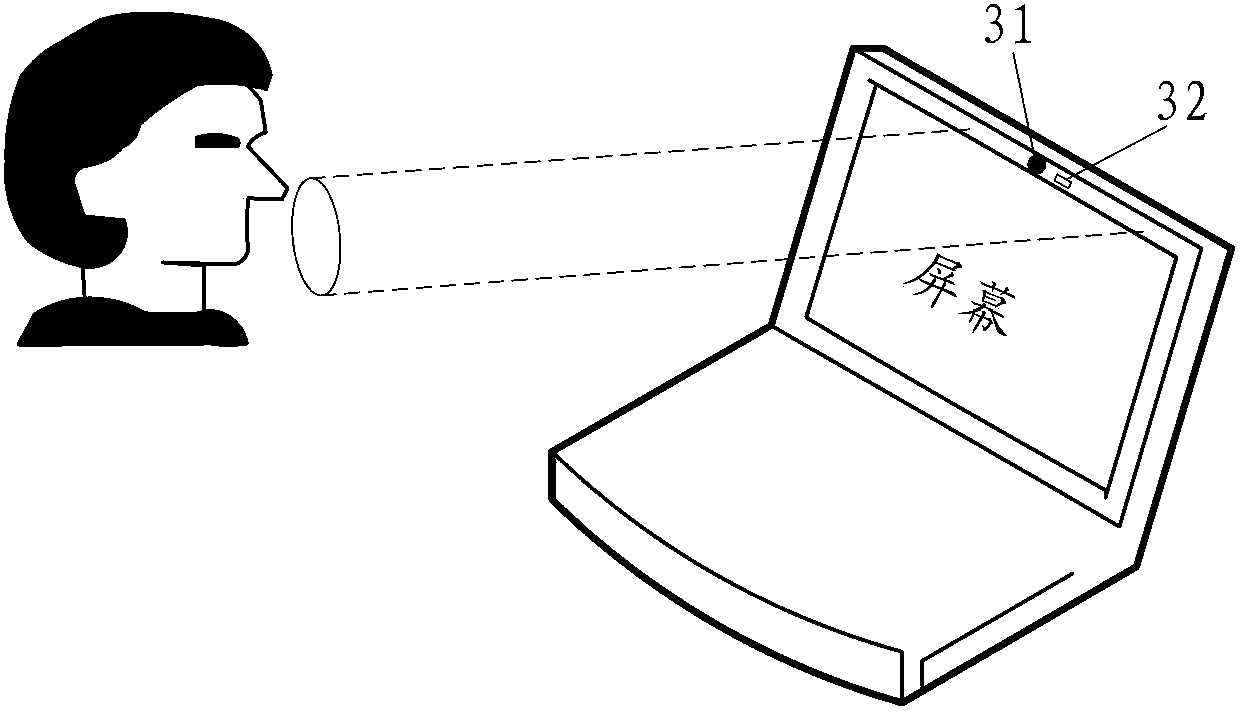

Microphone array adjustment method, microphone array and electronic device

Inactive · CN104053088A · Realize dynamic adjustment · Easy to control · Transducer circuits · Digital signal processing · Engineering

The embodiment of the invention provides a microphone array adjustment method, a microphone array and an electronic device and relates to the field of digital signal processing so that wave beams can be adjusted dynamically according to a space position of an acoustic source and thus the quality of audio acquisition is improved. The microphone array adjustment method is applicable to the microphone array which includes at least one microphone used for collecting voices made by the acoustic source. The microphone array adjustment method includes the following steps: detecting a space parameter of the acoustic source in a space; determining a corresponding wave-beam forming parameter according to the detected space parameter; and according to the wave-beam forming parameter, adjusting the microphone array so as to enable the microphone array wave-beam forming to conform to the space position of the acoustic source.

Owner:LENOVO (BEIJING) CO LTD
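As a rough illustration of mapping a detected space parameter to a beamforming parameter, the sketch below computes relative arrival delays for a linear array. The far-field assumption, the linear geometry, the speed-of-sound constant and the function name are all assumptions made here; the abstract does not specify the beamforming technique.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def arrival_delays(mic_positions_m, source_angle_deg):
    """Relative arrival delays (seconds) of a far-field plane wave at each
    microphone of a linear array, for a source at the given angle
    (0 degrees = broadside). The earliest microphone has delay 0."""
    theta = math.radians(source_angle_deg)
    raw = [x * math.sin(theta) / SPEED_OF_SOUND for x in mic_positions_m]
    earliest = min(raw)
    return [d - earliest for d in raw]
```

A delay-and-sum beamformer would compensate for these delays before summing the channels, so re-running the calculation whenever the detected source angle changes steers the beam toward the new position.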

Modification of Viewing Parameters for Digital Images Using Face Detection Information

A method of modifying the viewing parameters of digital images uses face detection to achieve desired spatial parameters based on one or more sub-groups of pixels that correspond to one or more facial features of the face. Such methods may be used for animating still images and for automating and streamlining applications such as the creation of slide shows and screen savers of images containing faces.

Owner:FOTONATION LTD

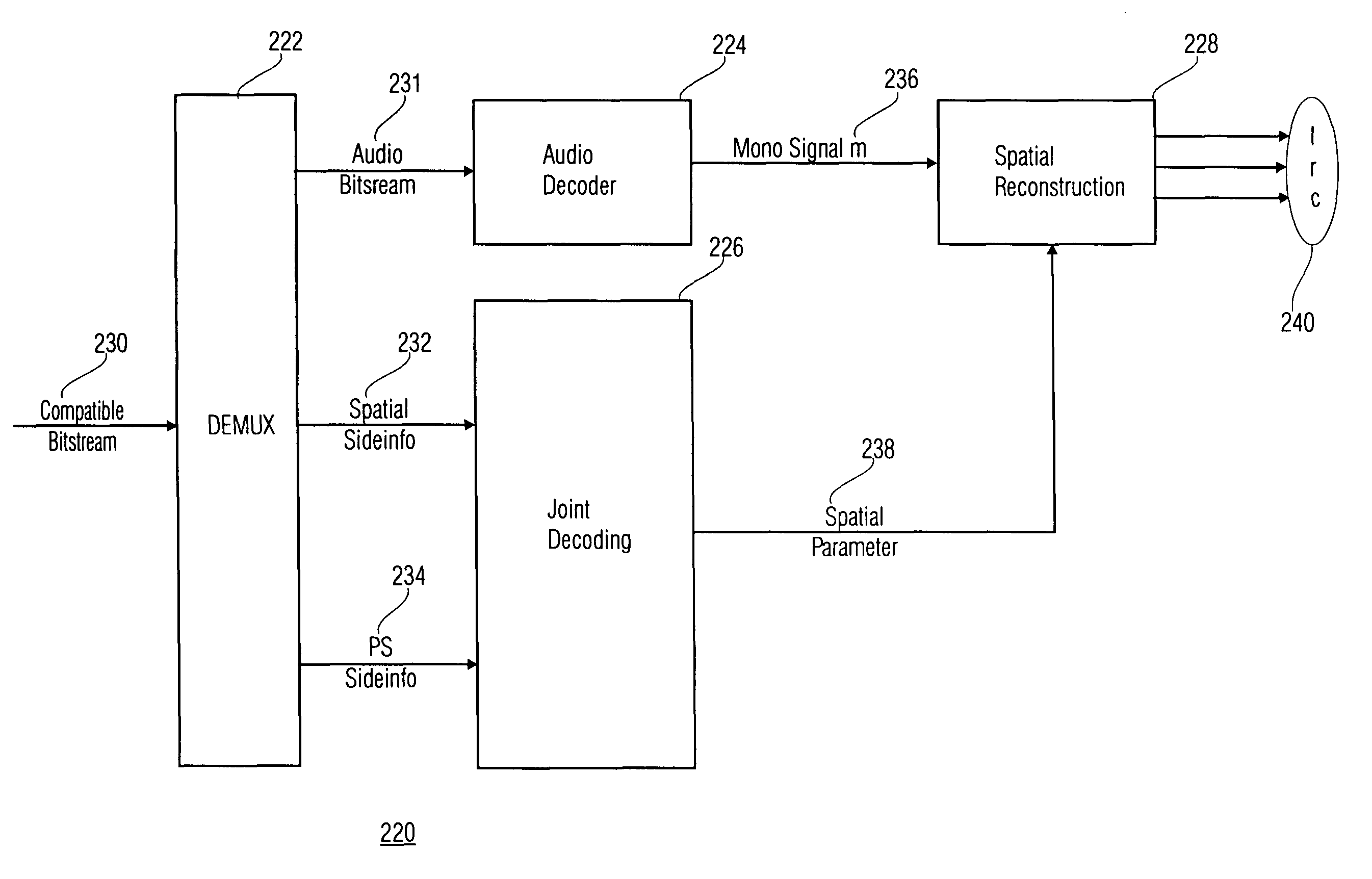

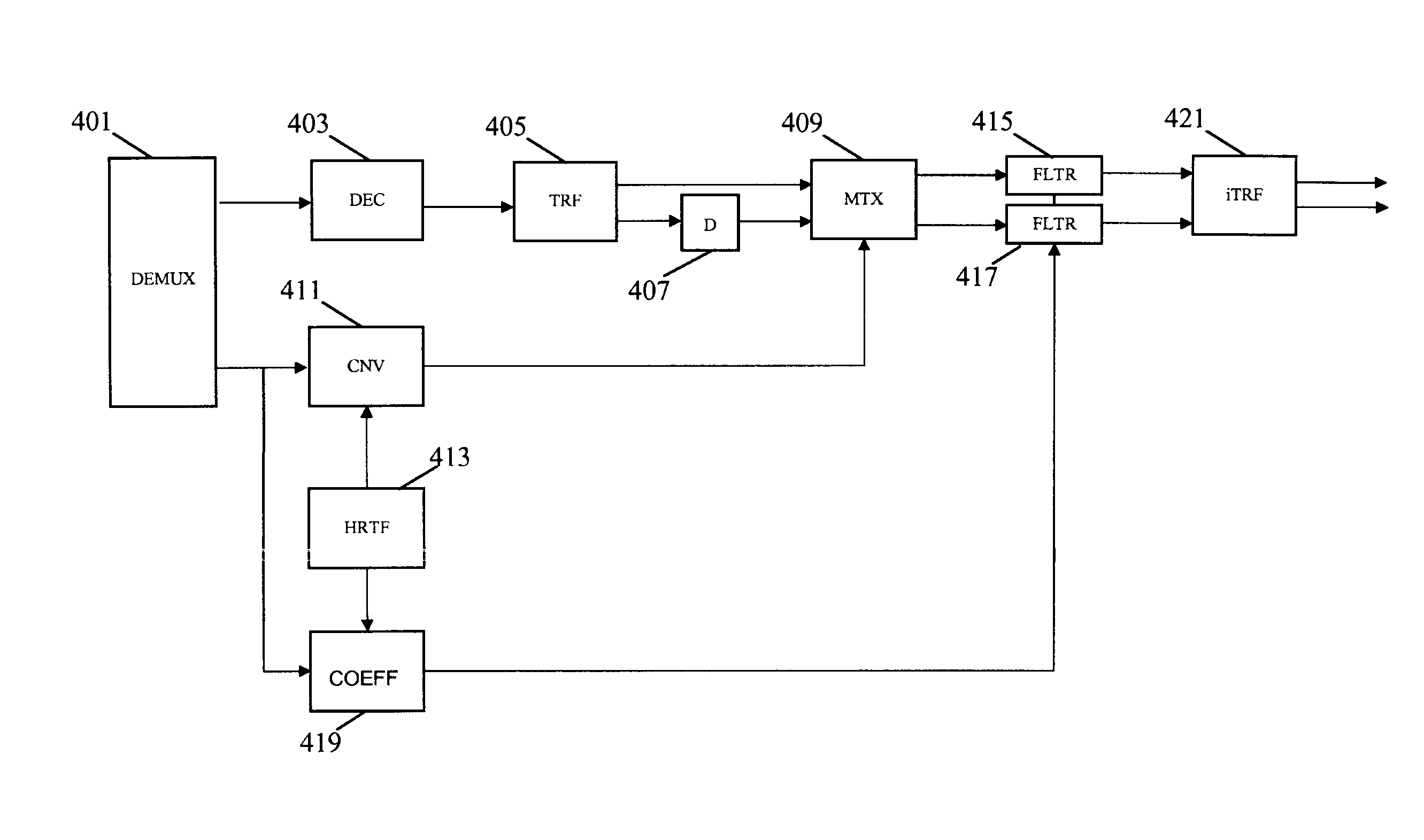

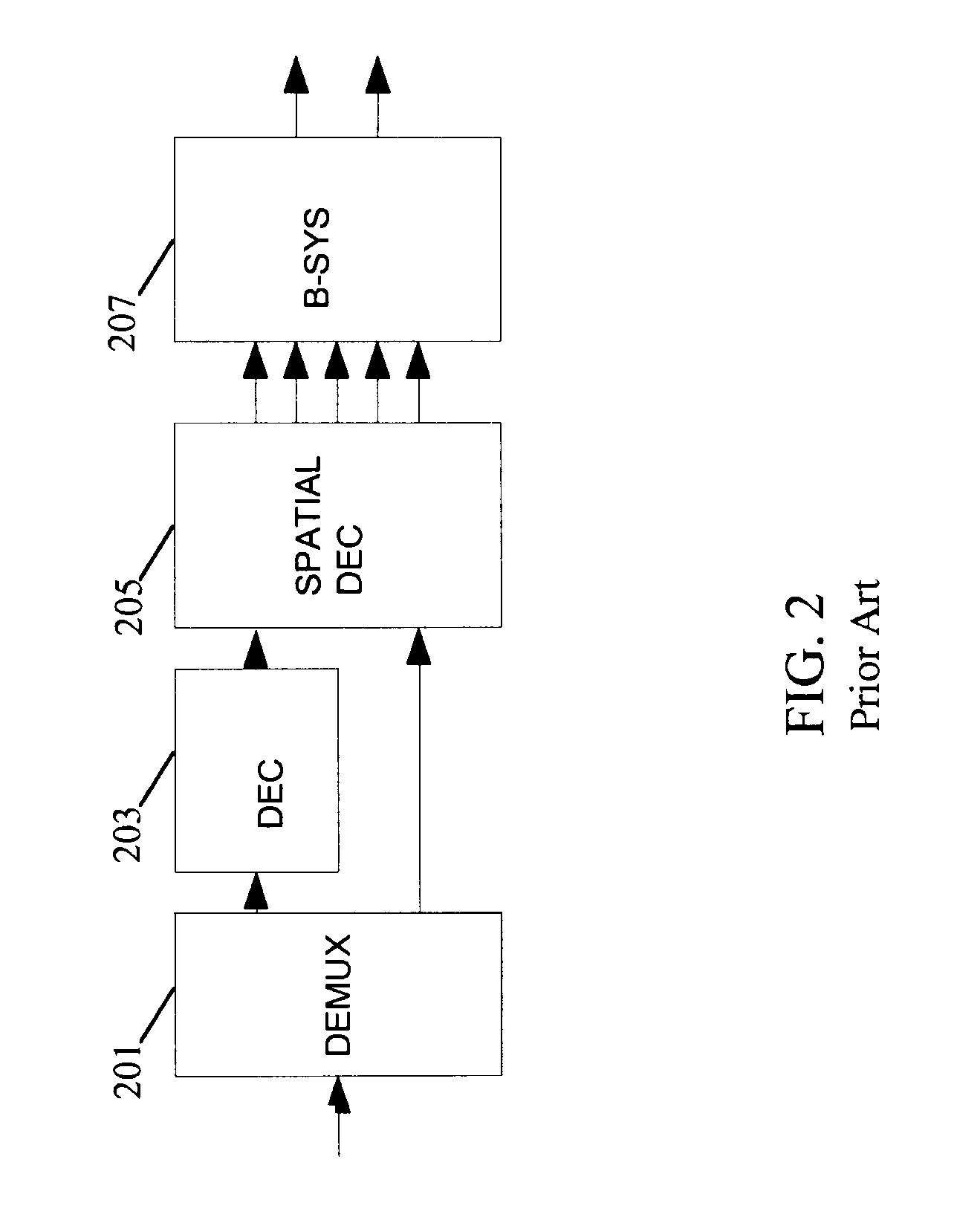

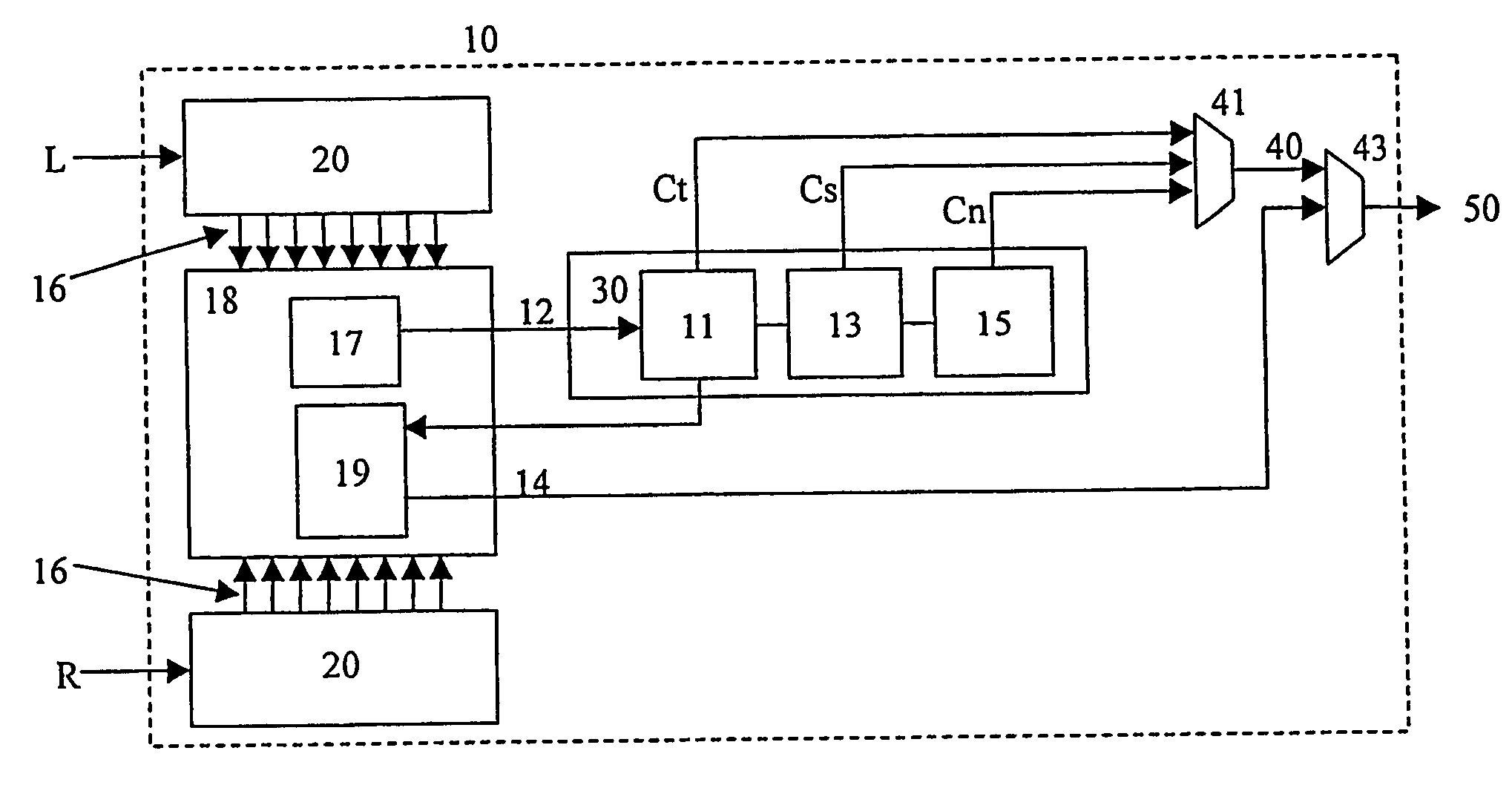

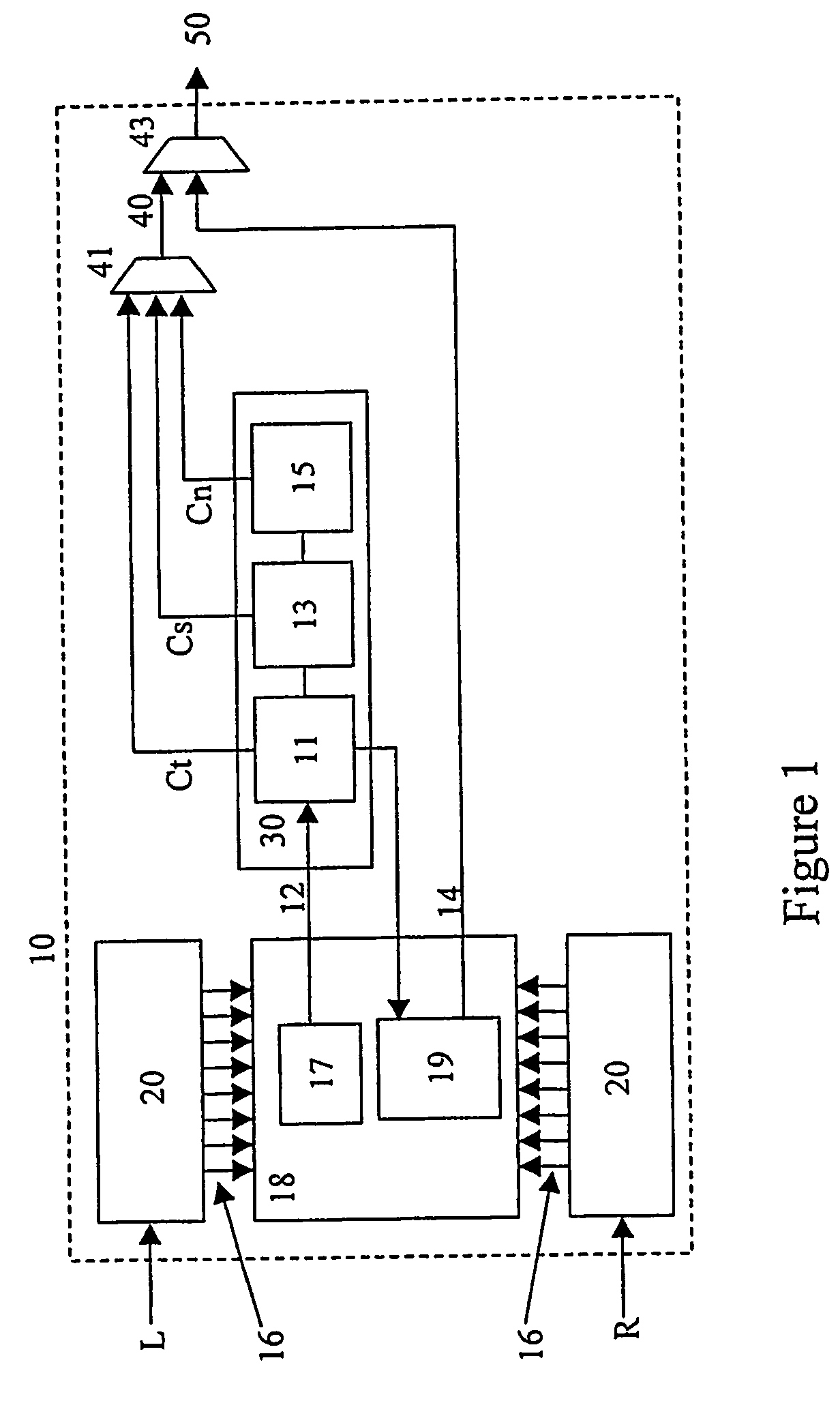

Method and apparatus for generating a binaural audio signal

Active · US20100246832A1 · Improved binaural · Reduce complexity · Speech analysis · Stereophonic systems · Multiplexer · Audio frequency

An apparatus for generating a binaural audio signal includes a de-multiplexer and decoder which receive audio data comprising an M-channel audio signal which is a downmix of an N-channel audio signal and spatial parameter data for upmixing the M-channel audio signal to the N-channel audio signal. A conversion processor converts spatial parameters of the spatial parameter data into first binaural parameters in response to at least one binaural perceptual transfer function. A matrix processor converts the M-channel audio signal into a first stereo signal in response to the first binaural parameters. A stereo filter generates the binaural audio signal by filtering the first stereo signal. The filter coefficients for the stereo filter are determined in response to the at least one binaural perceptual transfer function by a coefficient processor. The combination of parameter conversion / processing and filtering allows a high quality binaural signal to be generated with low complexity.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV +1

System and method for controlling free space distribution by key range within a database

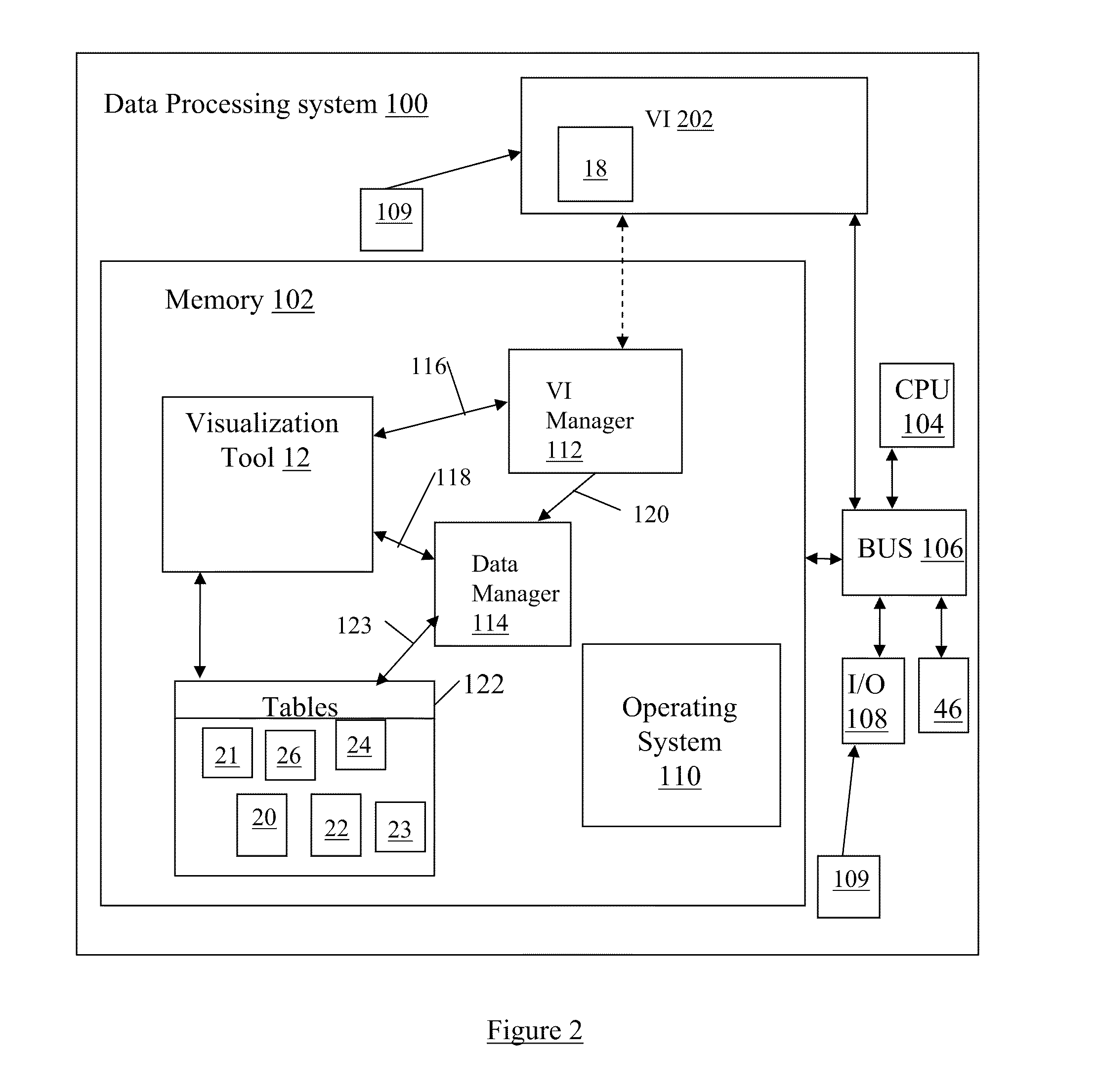

Active · US20030056082A1 · Data processing applications · Relational databases · Theoretical computer science · Improved method

An improved method and system for controlling free space distribution by key range within a database. In one embodiment, a data structure including key ranges of a plurality of database tables and indexes, and a plurality of key range free space parameters is created. The plurality of database tables and indexes may include a plurality of page sets, which may include rows of data and keys. Time values may be associated with the plurality of free space parameters. The key range free space parameters may have values assigned to them. The key range free space parameters may be user-defined or automatically generated using growth trend analysis, based on key range growth statistics. The rows of data and keys within the plurality of page sets may be redistributed by a reorganization process. The redistributing may reference the key ranges of the data structure and the key range free space parameters.

Owner:BMC SOFTWARE
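One way to picture the key-range data structure from the abstract above is a sorted lookup table from key ranges to free-space values. The class name, the percentage unit and the default value below are hypothetical; the abstract does not describe the actual layout.

```python
from bisect import bisect_right


class KeyRangeFreeSpace:
    """Hypothetical table mapping key ranges to a free-space percentage."""

    def __init__(self, ranges, default_pct=0):
        # ranges: non-overlapping (low_key, high_key, free_pct) tuples
        self._ranges = sorted(ranges)
        self._lows = [low for low, _, _ in self._ranges]
        self._default_pct = default_pct

    def free_pct_for(self, key):
        """Return the free-space percentage for the range containing key,
        or the default when no range covers it."""
        i = bisect_right(self._lows, key) - 1
        if i >= 0:
            low, high, pct = self._ranges[i]
            if low <= key <= high:
                return pct
        return self._default_pct
```

A reorganization process could consult such a table for each page's key range, leaving more free space where growth statistics suggest more inserts are expected.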

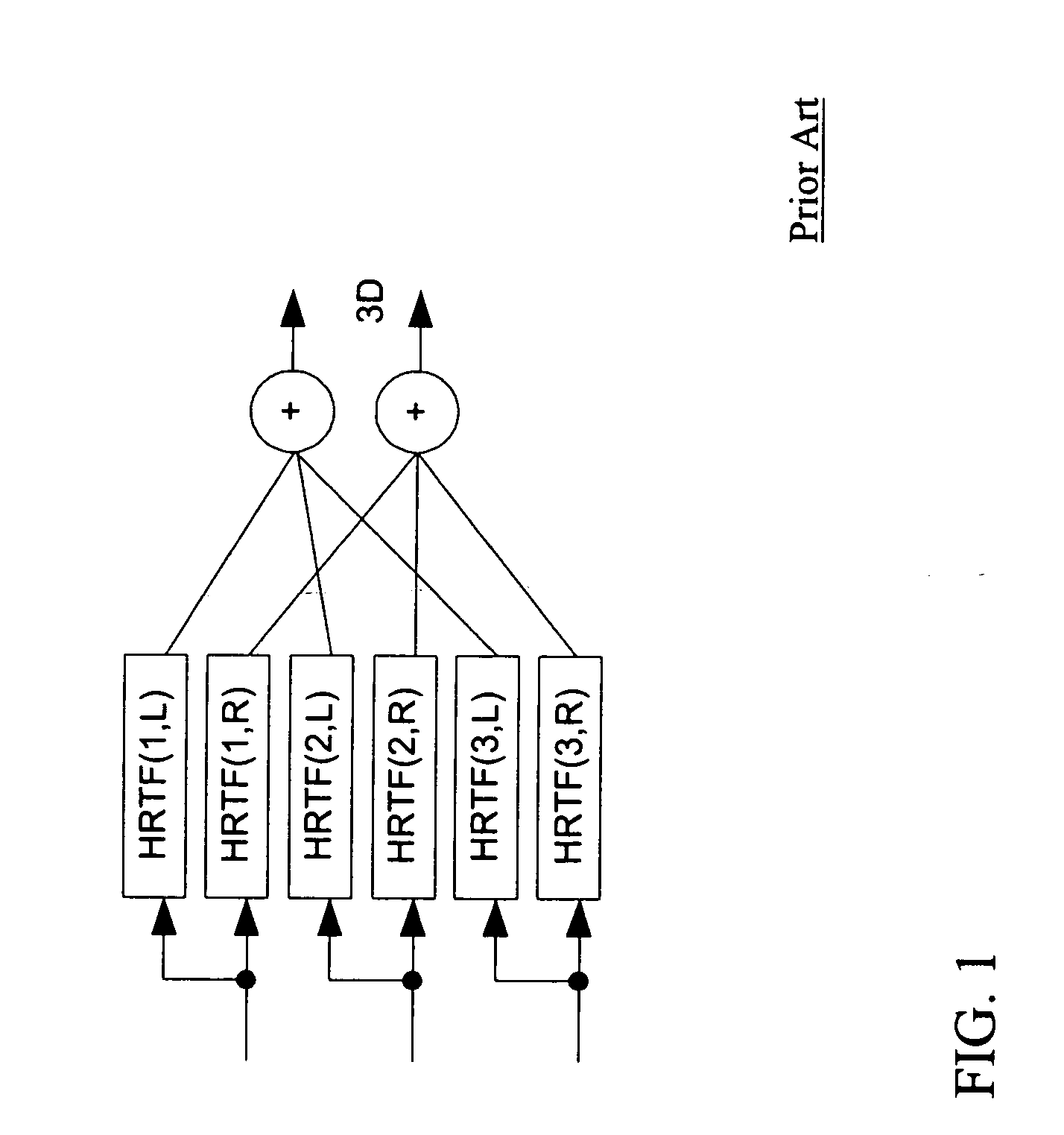

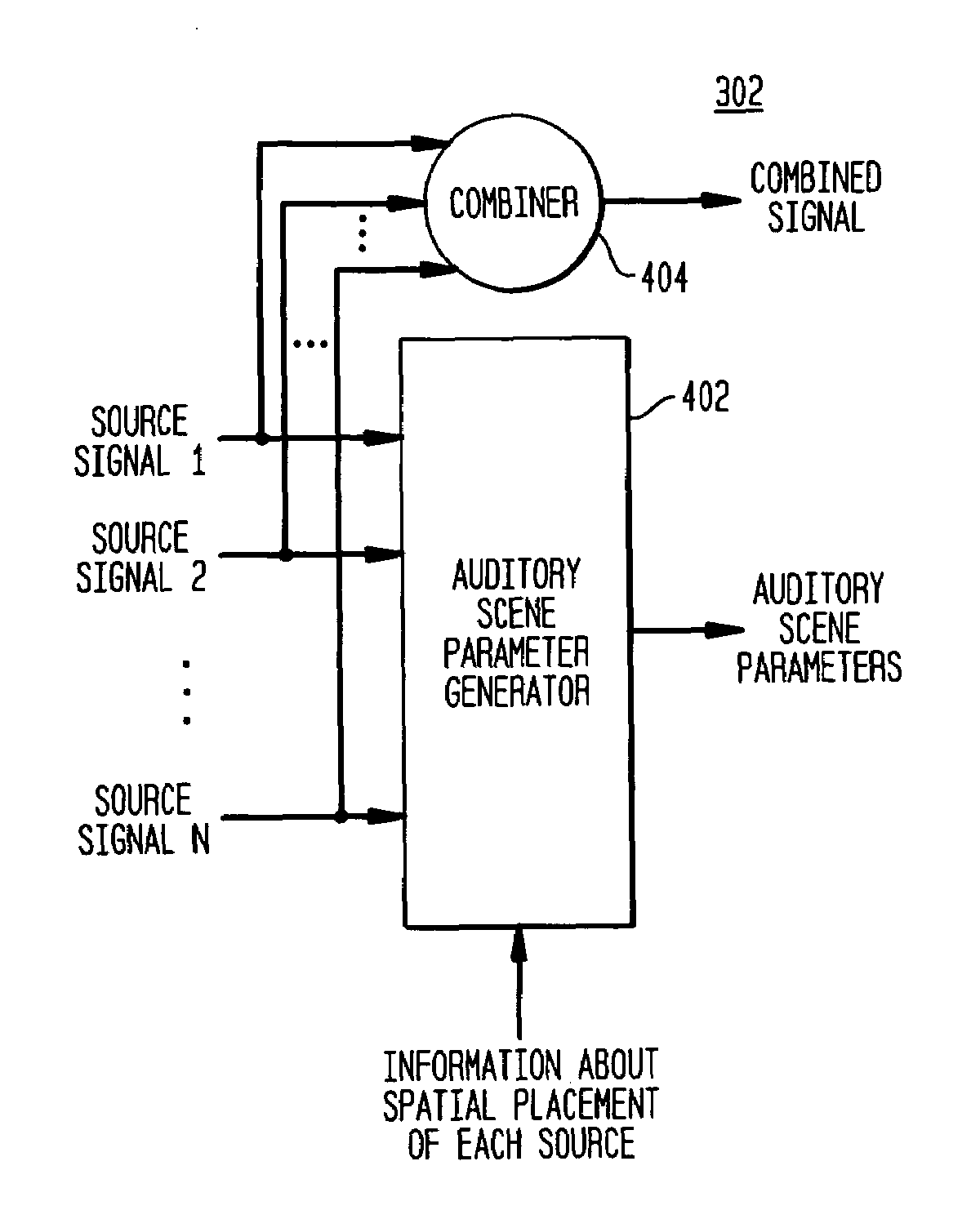

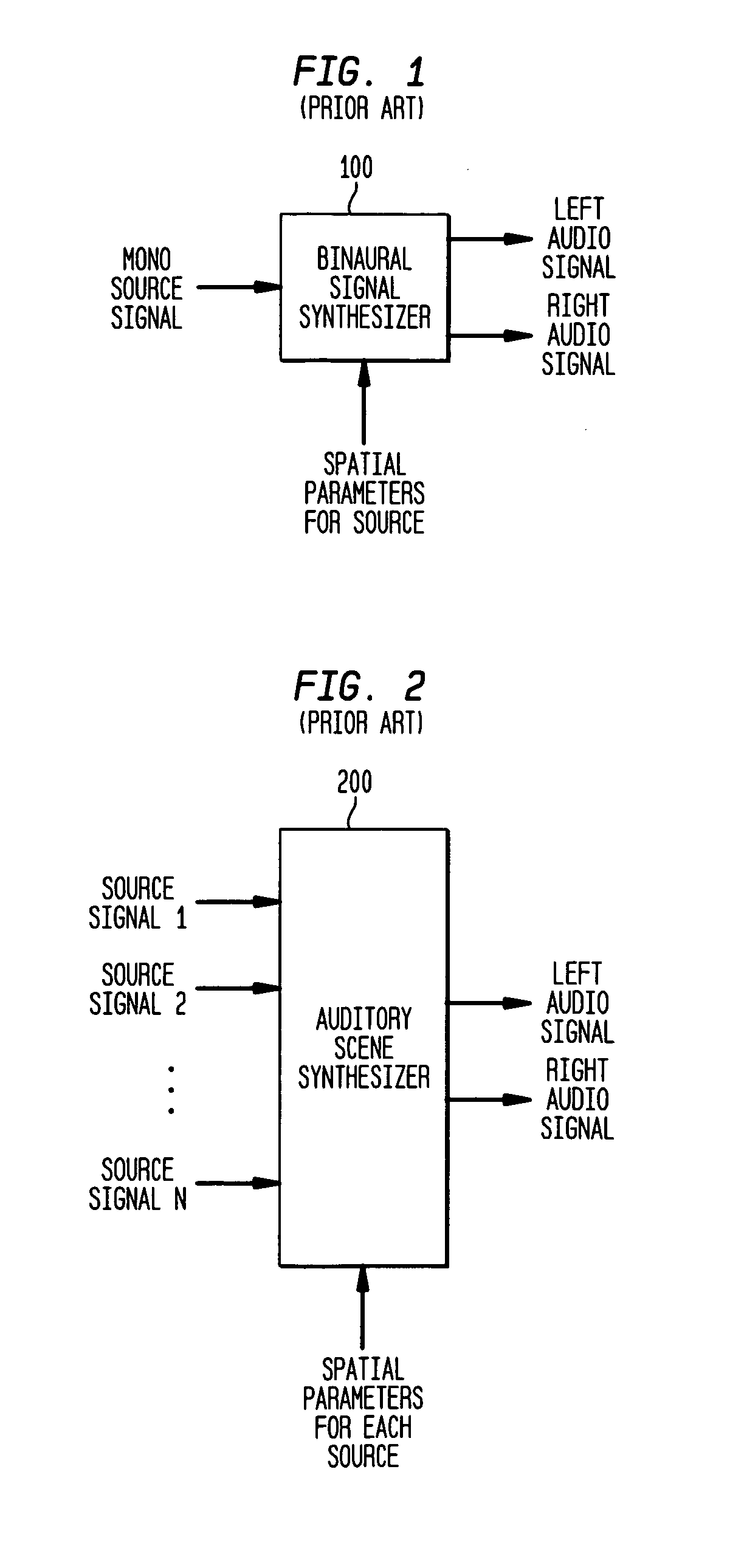

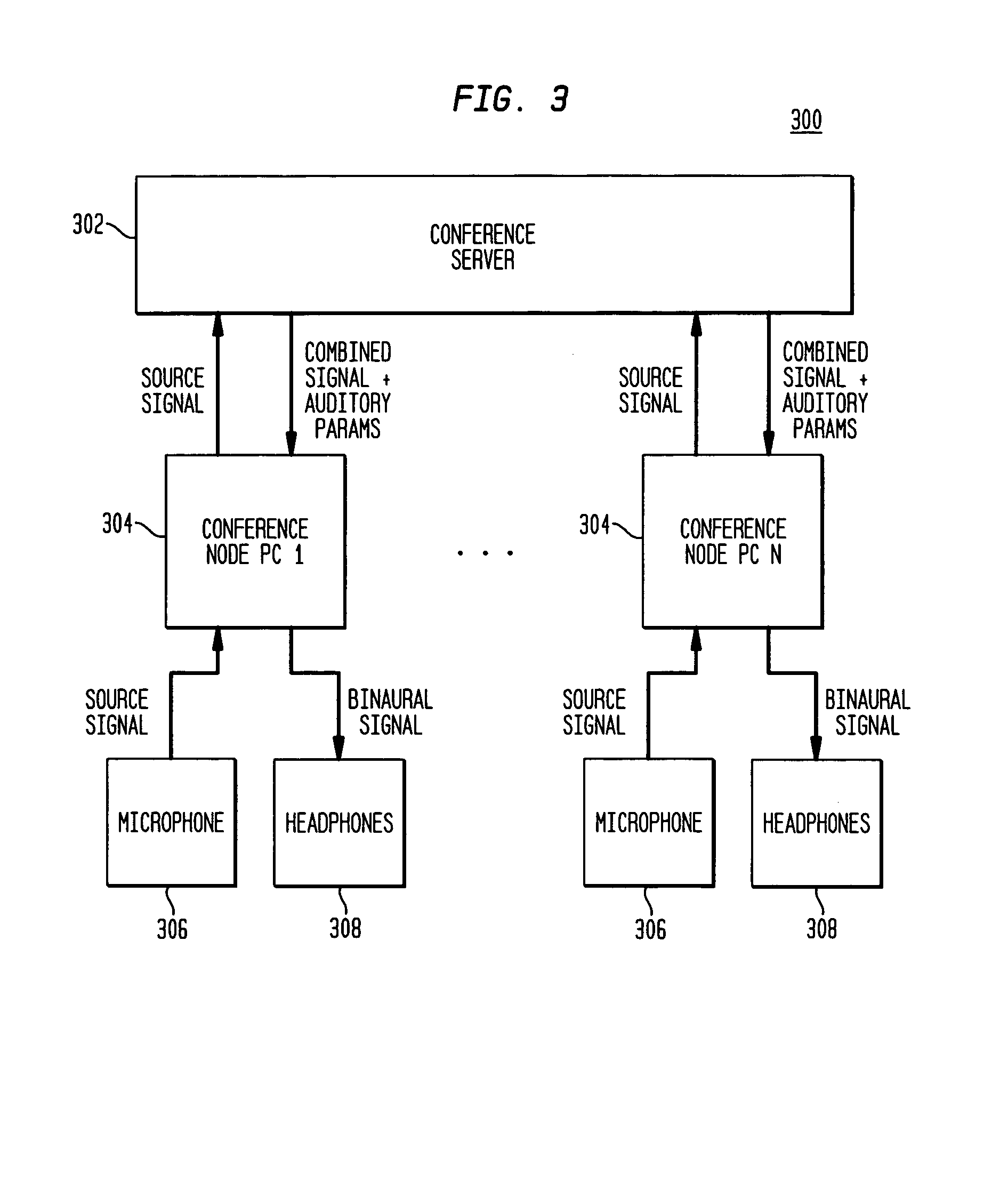

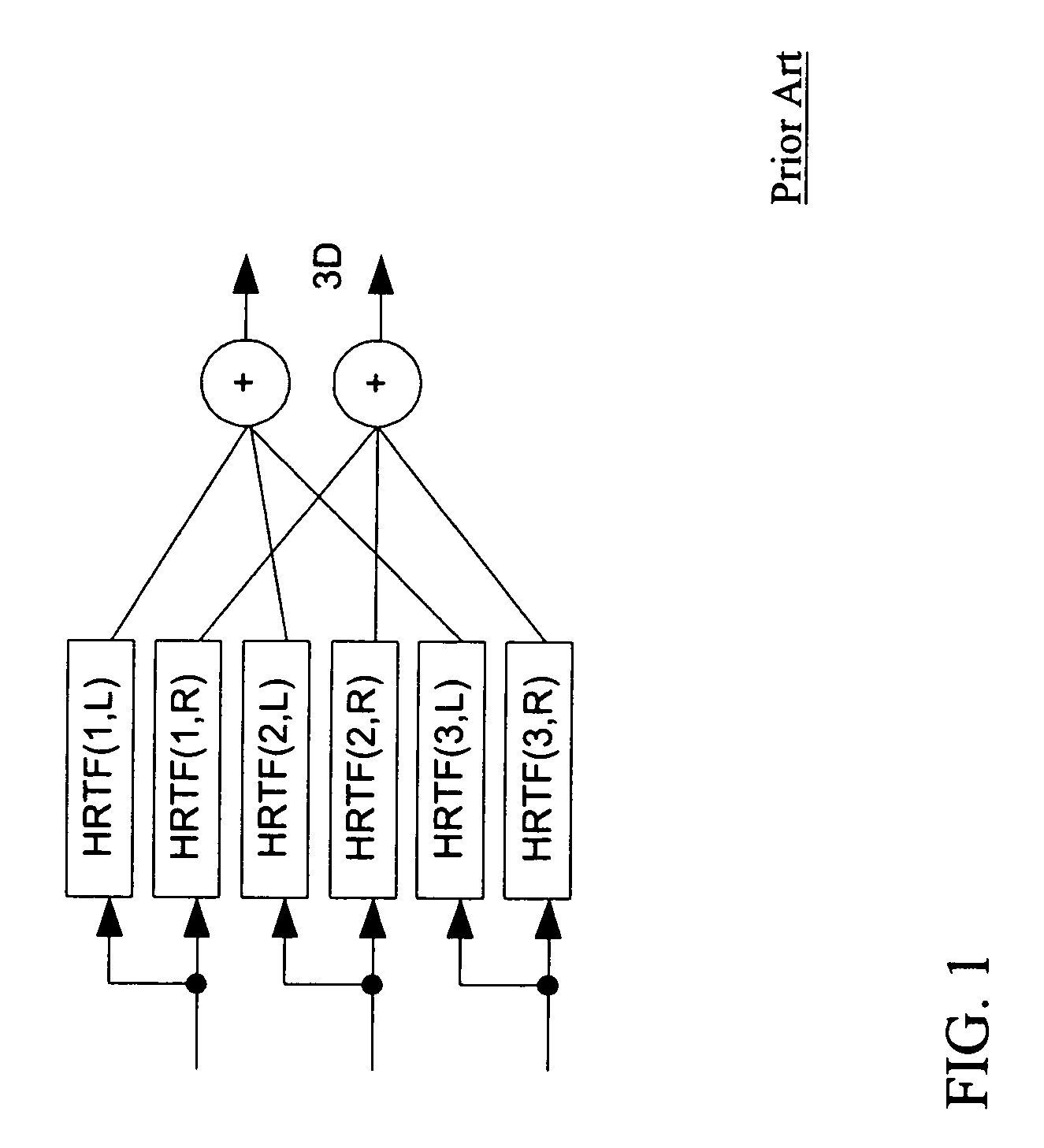

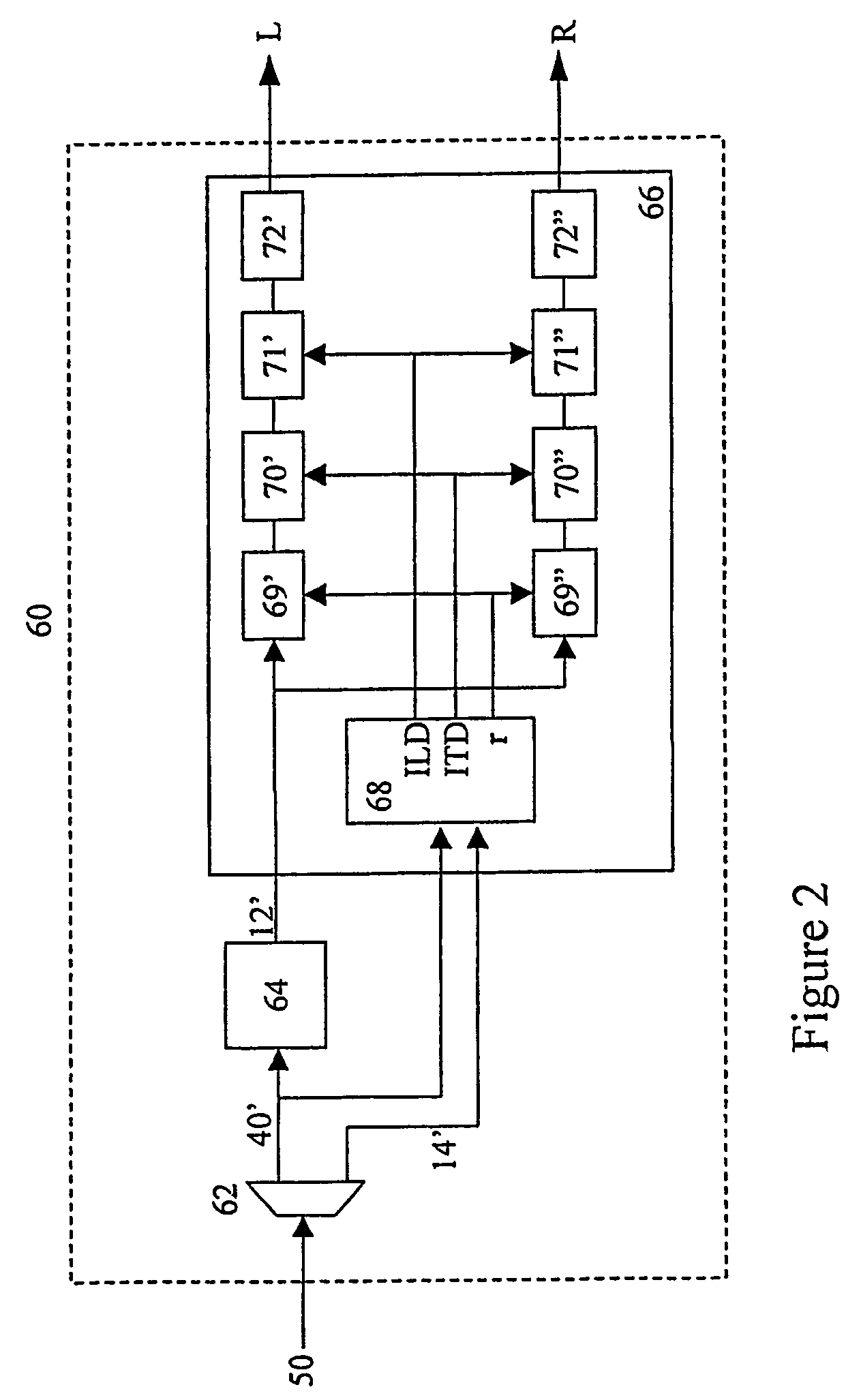

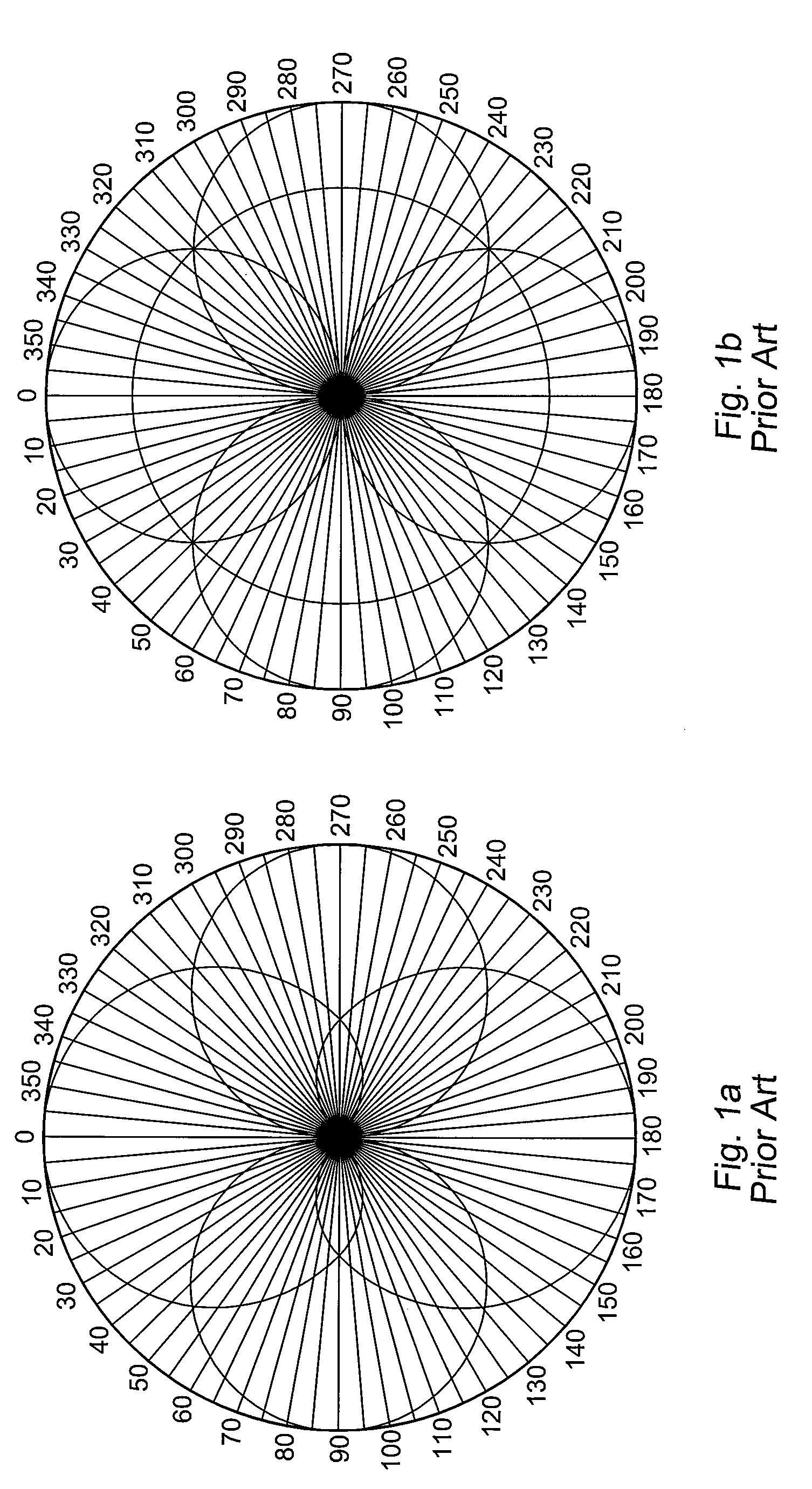

Perceptual synthesis of auditory scenes

Inactive · US7116787B2 · Special service for subscribers · Stereophonic systems · Interaural time difference · Audio signal flow

An auditory scene is synthesized by applying two or more different sets of one or more spatial parameters (e.g., an inter-ear level difference (ILD), inter-ear time difference (ITD), and / or head-related transfer function (HRTF)) to two or more different frequency bands of a combined audio signal, where each different frequency band is treated as if it corresponded to a single audio source in the auditory scene. In one embodiment, the combined audio signal corresponds to the combination of two or more different source signals, where each different frequency band corresponds to a region of the combined audio signal in which one of the source signals dominates the others. In this embodiment, the different sets of spatial parameters are applied to synthesize an auditory scene comprising the different source signals. In another embodiment, the combined audio signal corresponds to the combination of the left and right audio signals of a binaural signal corresponding to an input auditory scene. In this embodiment, the different sets of spatial parameters are applied to reconstruct the input auditory scene. In either case, transmission bandwidth requirements are reduced by reducing to one the number of different audio signals that need to be transmitted to a receiver configured to synthesize / reconstruct the auditory scene.

Owner:AVAGO TECH INT SALES PTE LTD
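To illustrate what applying a set of spatial parameters to one band of a combined signal could look like, the sketch below applies a level difference and a time difference to a single band in the time domain. The symmetric gain split, the integer sample delay and the function name are simplifying assumptions made here; HRTF processing and the band-splitting step are not shown.

```python
import math


def apply_ild_itd(band, ild_db, itd_samples):
    """Produce (left, right) from one band of a mono signal.

    ild_db > 0 makes the left channel louder; itd_samples >= 0 delays the
    right channel by that many samples (assumed sign conventions).
    """
    if itd_samples < 0:
        raise ValueError("this sketch only supports non-negative delays")
    itd_samples = min(itd_samples, len(band))
    ratio = 10 ** (ild_db / 20)          # left/right amplitude ratio
    gain_left = math.sqrt(ratio)         # split the level difference evenly
    gain_right = 1 / math.sqrt(ratio)
    delayed = [0.0] * itd_samples + list(band[:len(band) - itd_samples])
    left = [gain_left * s for s in band]
    right = [gain_right * s for s in delayed]
    return left, right
```

Applying a different parameter set to each band and summing the per-band outputs per channel would give a two-channel scene from a single transmitted signal.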

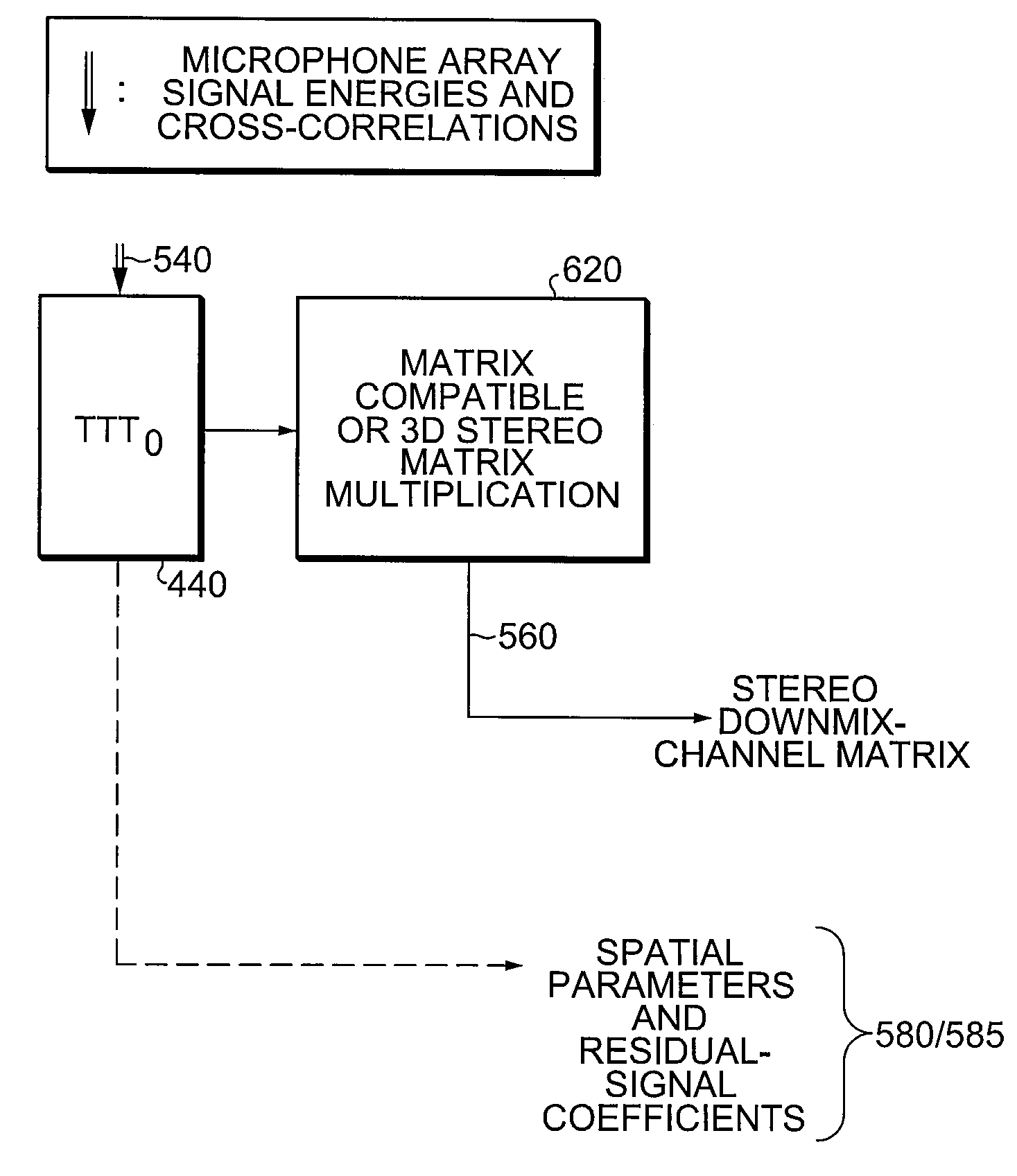

Stereo compatible multi-channel audio coding

A parametric representation of a multi-channel audio signal having parameters suited to be used together with a monophonic downmix signal to calculate a reconstruction of the multi-channel audio signal can efficiently be derived in a stereo-backwards compatible way when a parameter combiner is used to generate the parametric representation by combining one or more spatial parameters and a stereo parameter, resulting in a parametric representation having a decoder usable stereo parameter and information on the one or more spatial parameters that represents, together with the decoder usable stereo parameter, the one or more spatial parameters.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV +2

Method and apparatus for generating a binaural audio signal

Active · US8265284B2 · Mitigate, alleviate or eliminate one or · Improved binaural · Speech analysis · Stereophonic systems · Multiplexer · Low complexity

An apparatus for generating a binaural audio signal includes a de-multiplexer and decoder which receive audio data comprising an M-channel audio signal which is a downmix of an N-channel audio signal and spatial parameter data for upmixing the M-channel audio signal to the N-channel audio signal. A conversion processor converts spatial parameters of the spatial parameter data into first binaural parameters in response to at least one binaural perceptual transfer function. A matrix processor converts the M-channel audio signal into a first stereo signal in response to the first binaural parameters. A stereo filter generates the binaural audio signal by filtering the first stereo signal. The filter coefficients for the stereo filter are determined in response to the at least one binaural perceptual transfer function by a coefficient processor. The combination of parameter conversion / processing and filtering allows a high quality binaural signal to be generated with low complexity.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV +1

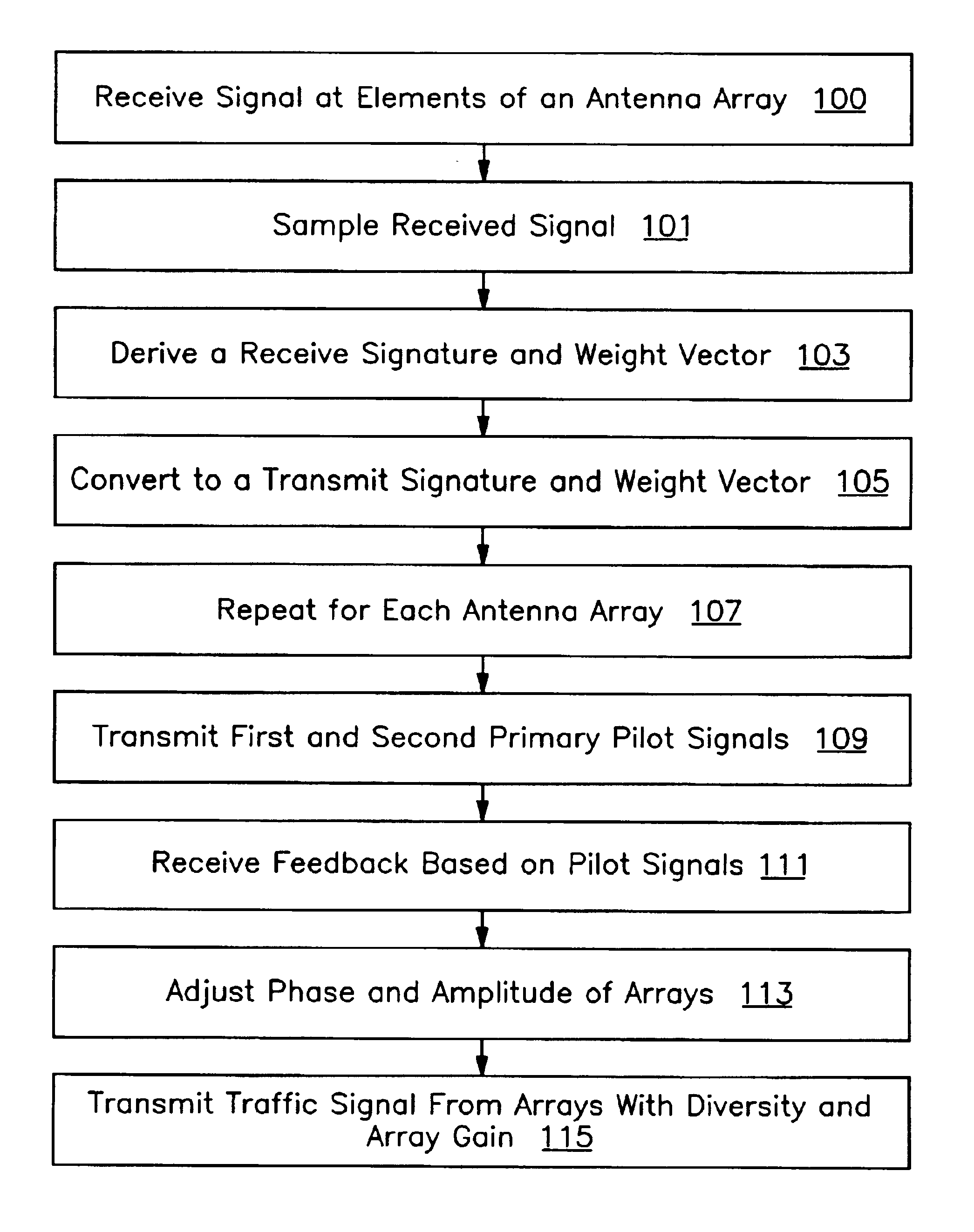

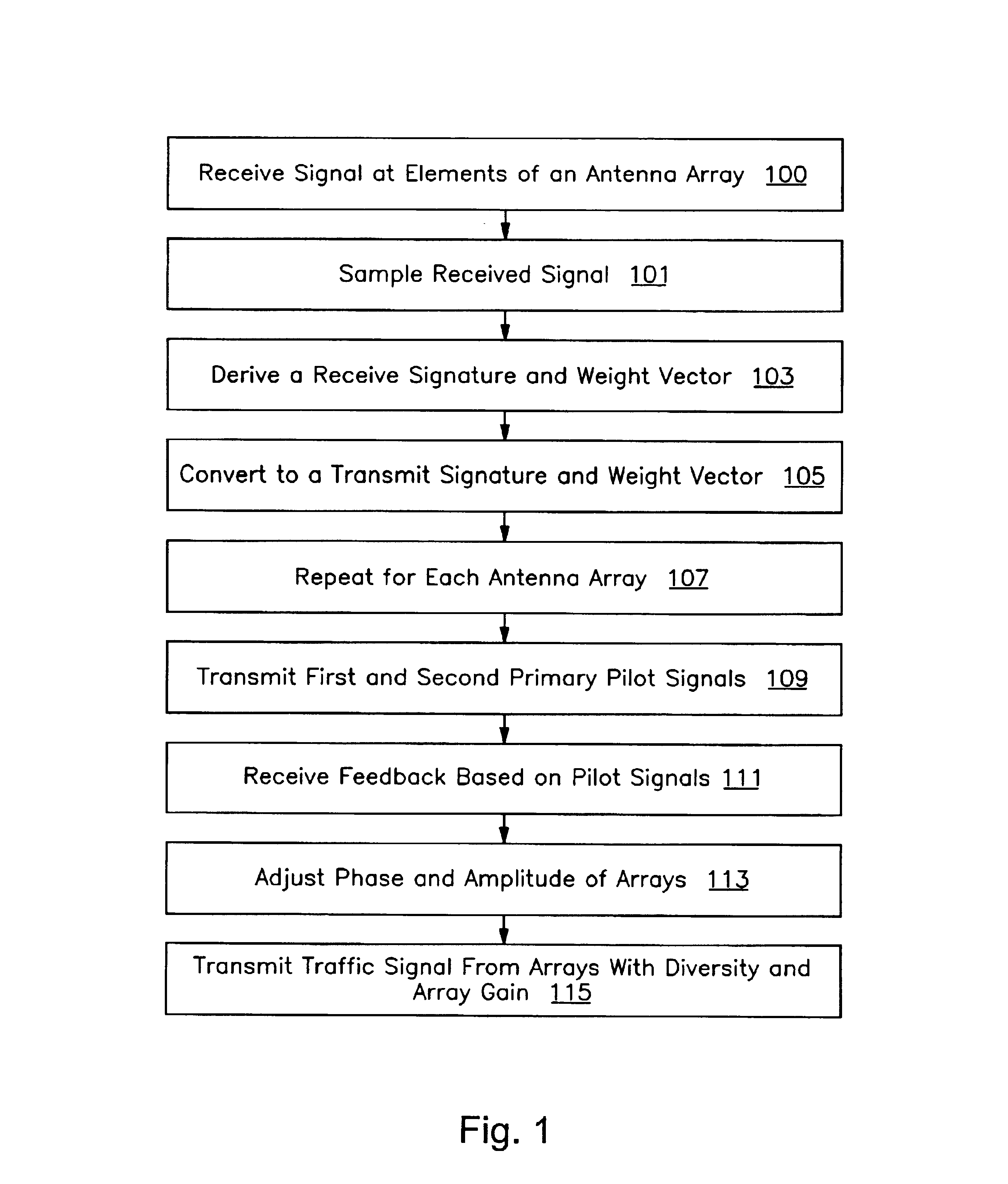

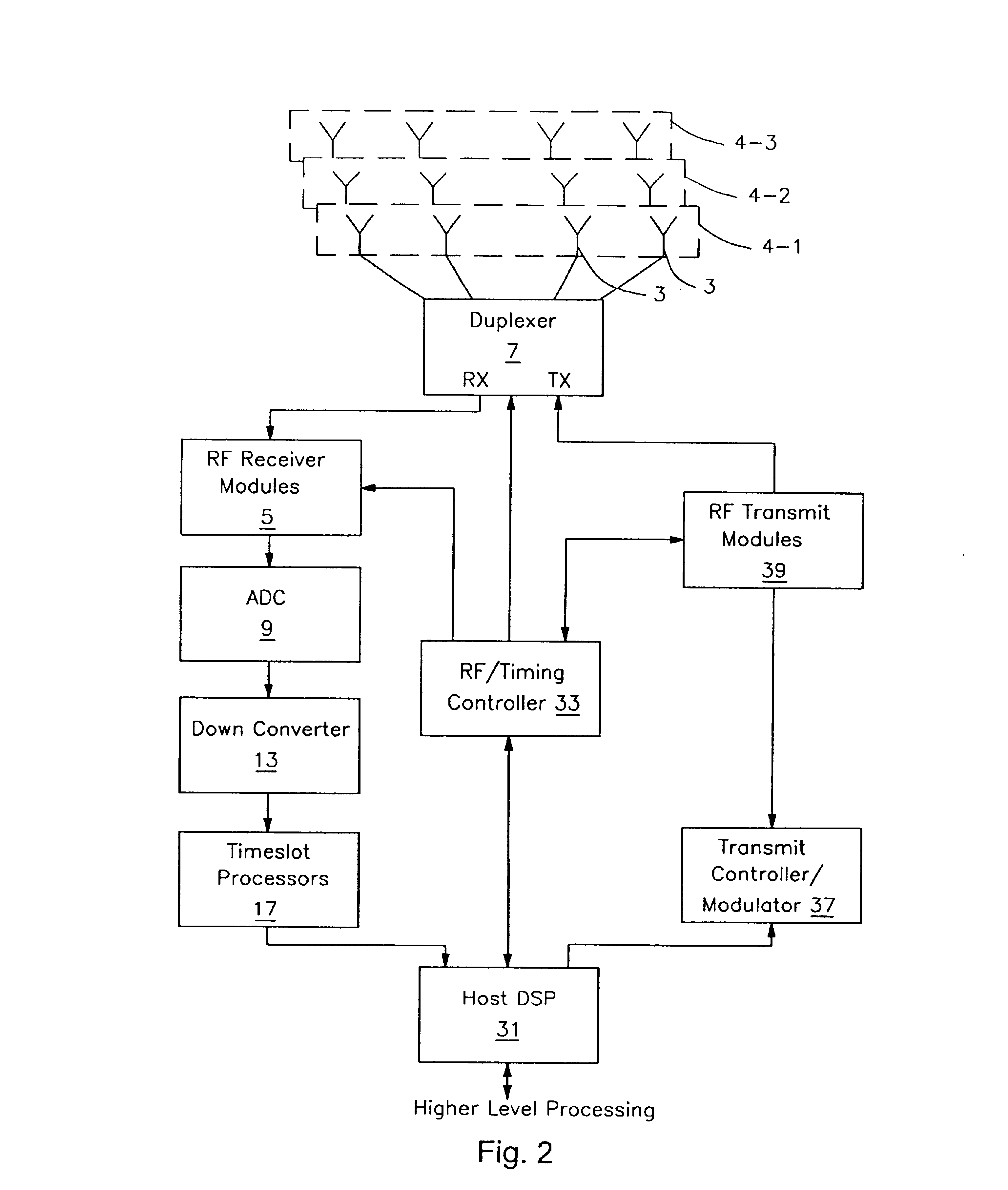

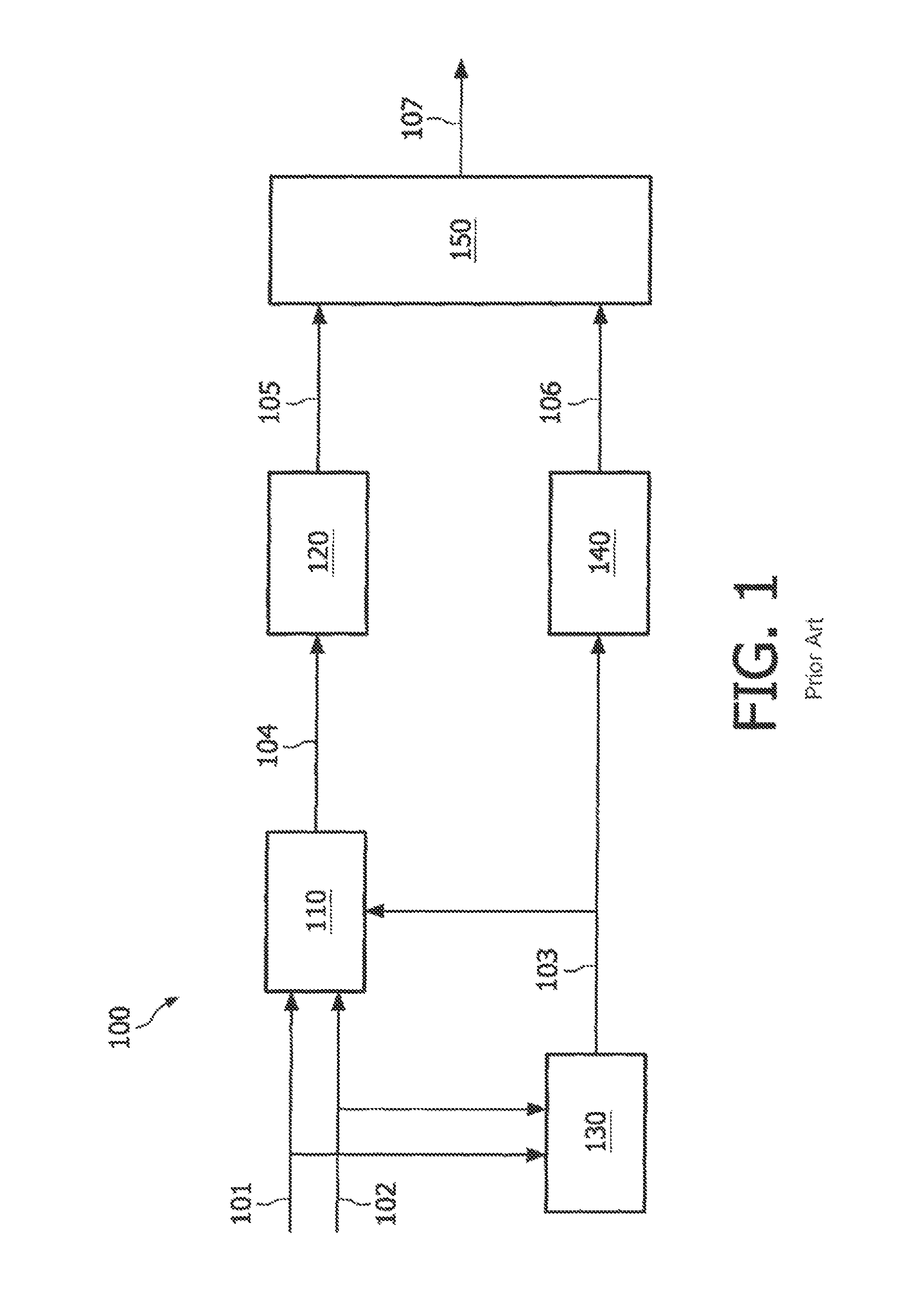

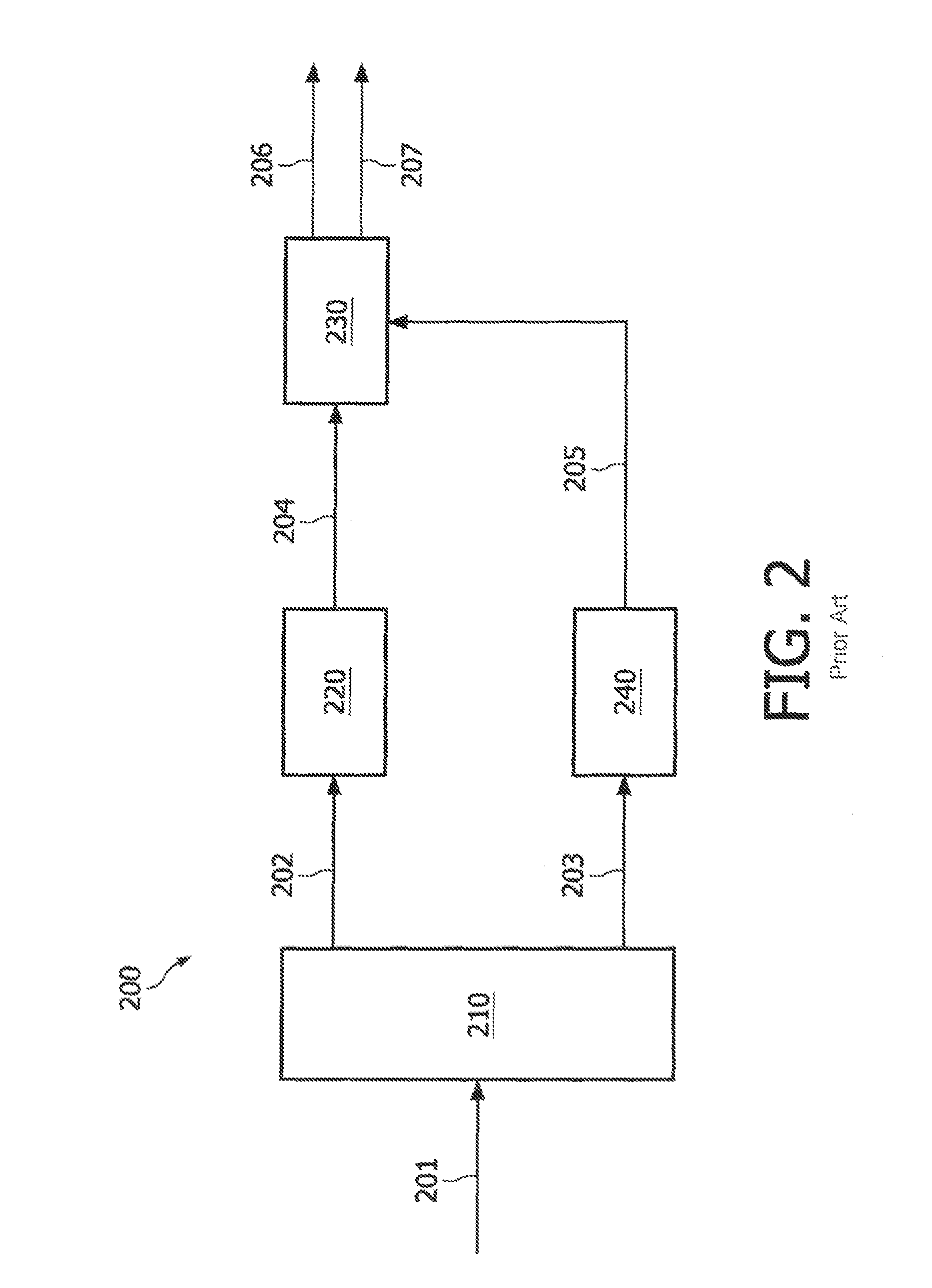

Combined open and closed loop beam forming in a multiple array radio communication system

Inactive · US6865377B1 · Spatial transmit diversity · Modulated-carrier systems · Communications system · Closed loop

A method and apparatus are provided that can combine the benefits of beamforming with transmit diversity. In one embodiment, the invention includes sampling a signal received from a remote radio at elements of an antenna array, deriving spatial parameters for transmitting a signal to the remote radio from an antenna array, sampling the signal received from the remote radio at elements of at least one additional antenna array, and deriving spatial parameters for transmitting a signal to the remote radio from the at least one additional antenna array. The invention further includes generating diversity parameters for transmitting a signal to the remote radio using each antenna array as an element of a diversity array, and transmitting a signal to the remote radio using the spatial parameters and the diversity parameters.

Owner:INTEL CORP

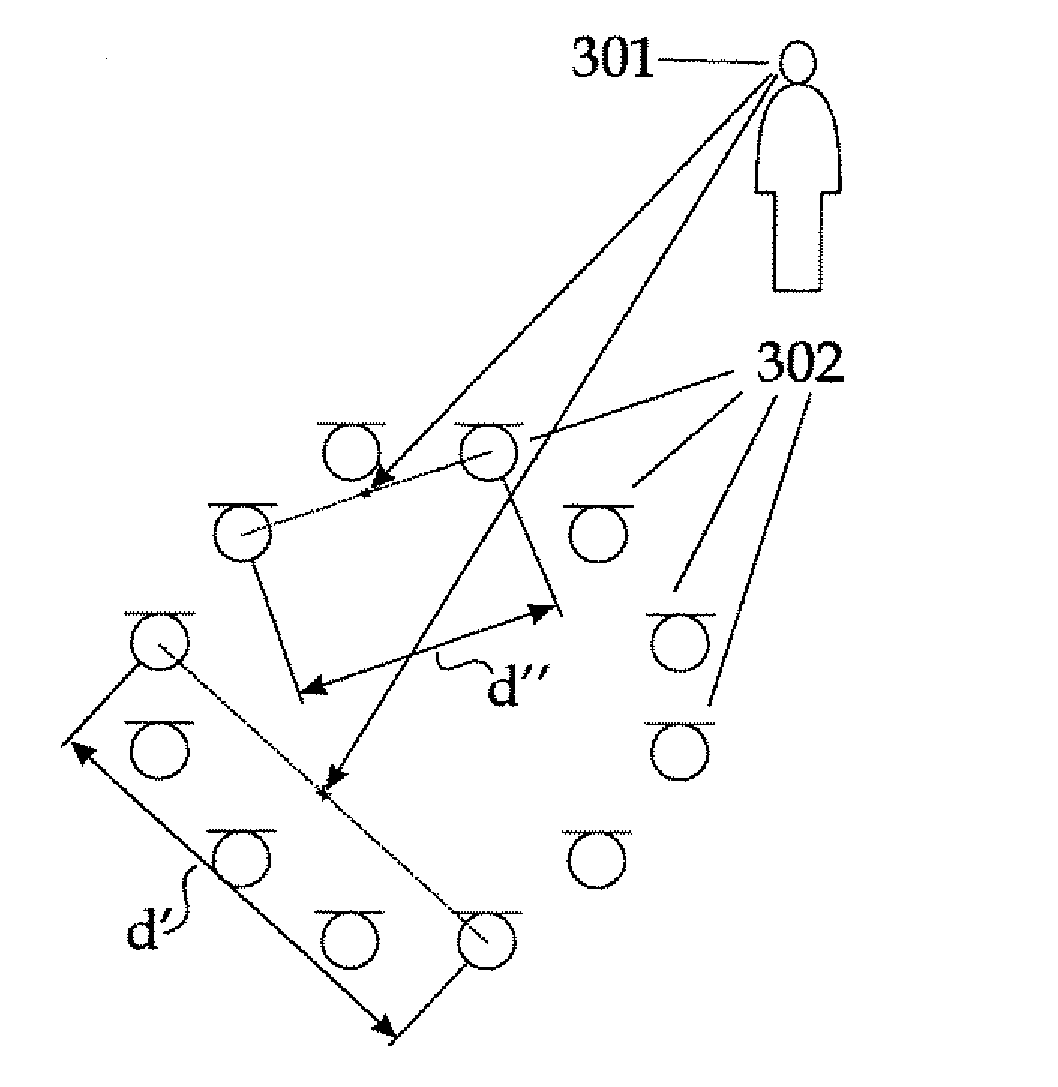

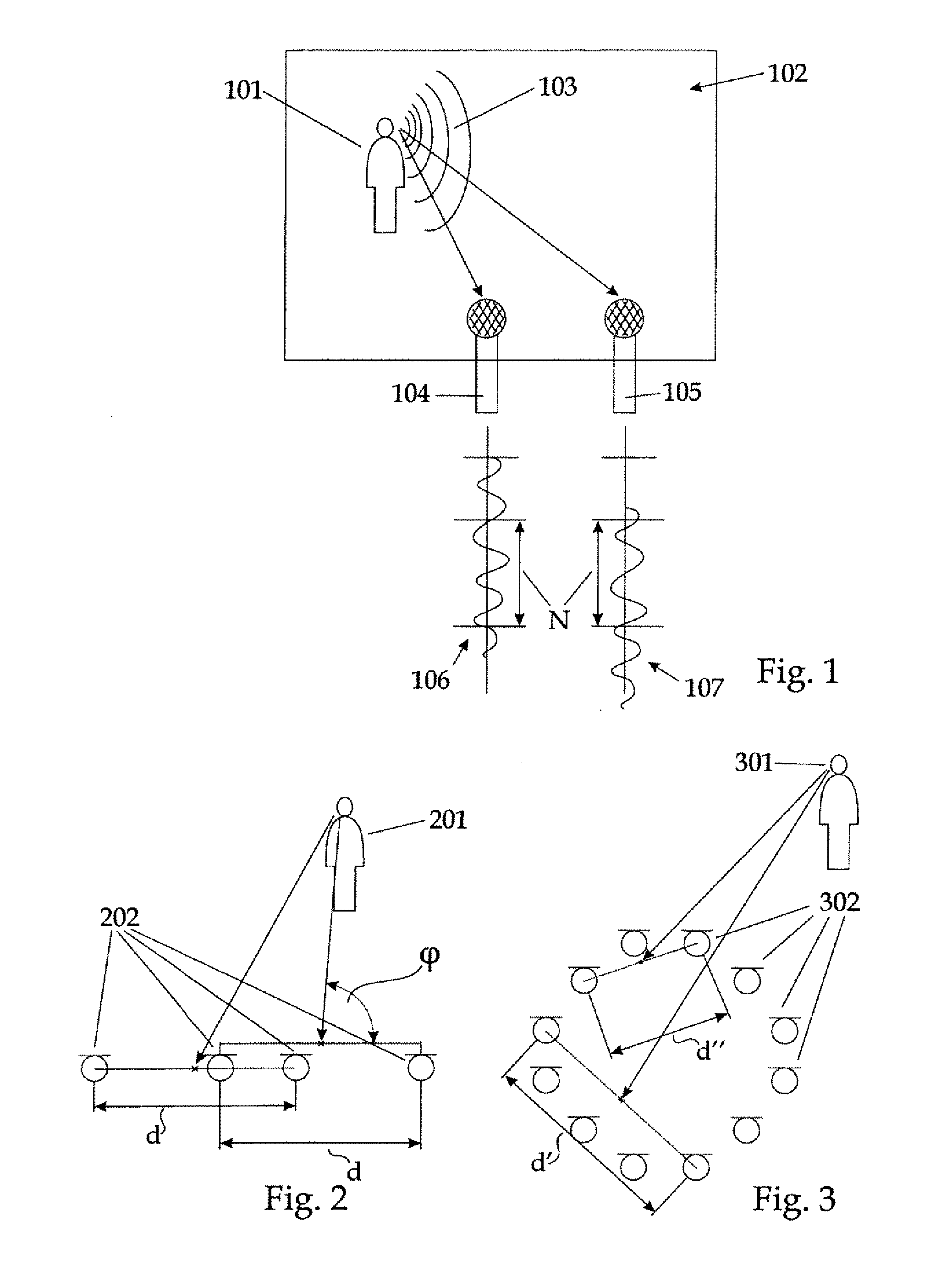

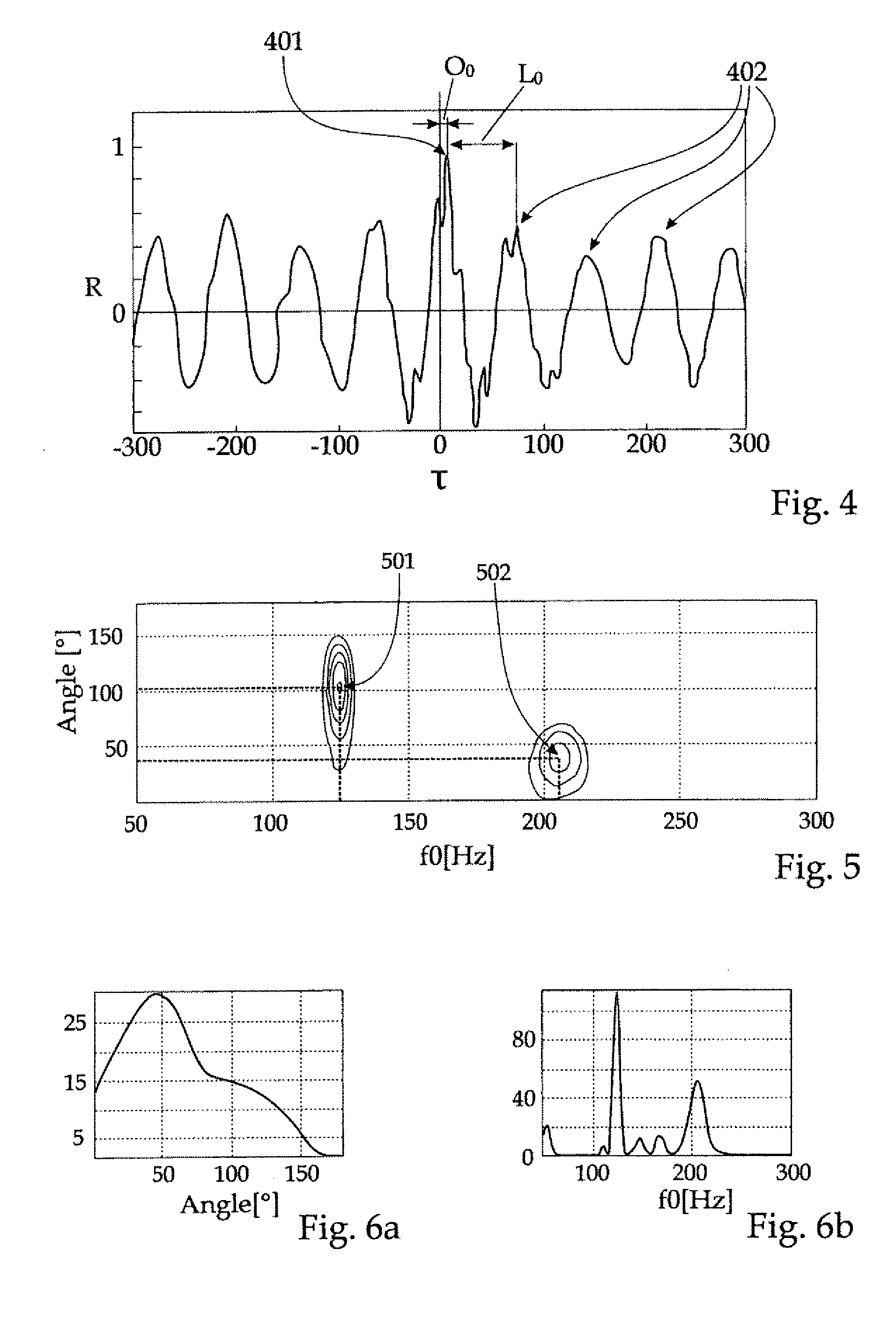

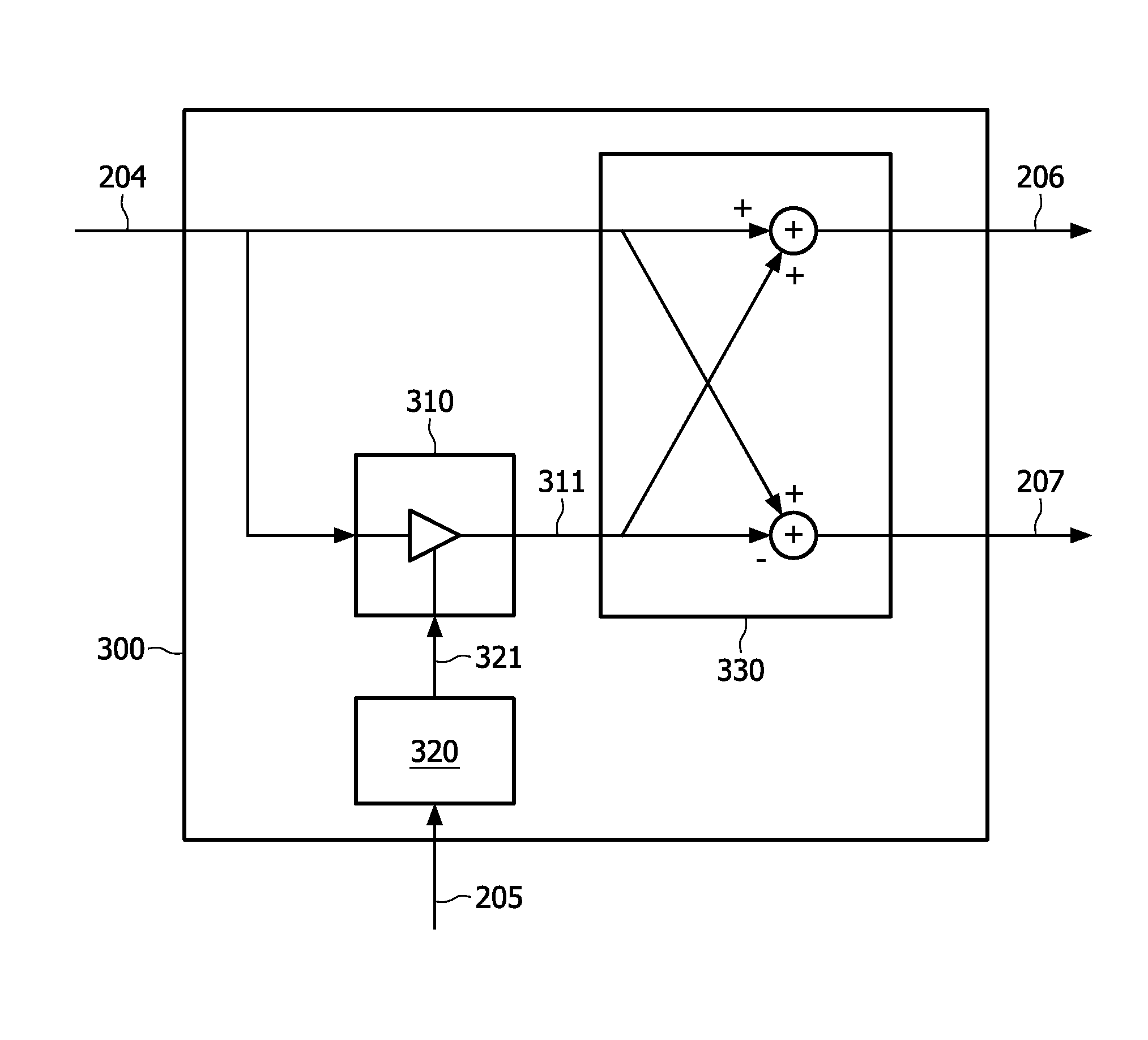

Joint position-pitch estimation of acoustic sources for their tracking and separation

Active · US20100142327A1 · Simple method · Direction finders using ultrasonic/sonic/infrasonic waves · Speech analysis · Sound sources · Correlation function

The invention relates to a method for localizing and tracking acoustic sources (101) in a multi-source environment, comprising the steps of recording audio-signals (103) of at least one acoustic source (101) with at least two recording means (104, 105), creating a two- or multi-channel recording signal, partitioning said recording signal into frames of predefined length (N), calculating for each frame a cross-correlation function as a function of discrete time-lag values (τ) for channel pairs (106, 107) of the recording signal, evaluating the cross-correlation function by calculating a sampling function depending on a pitch parameter (f0) and at least one spatial parameter (φ0), the sampling function assigning a value to every point of a multidimensional space being spanned by the pitch-parameter and the spatial parameters, and identifying peaks in said multidimensional space with respective acoustic sources in the multi-source environment.

Owner:TECHN UNIV GRAZ
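The framing and cross-correlation steps named in the abstract above can be sketched in plain Python. This covers only those two steps, under the assumption of non-overlapping frames; the pitch-dependent sampling function and the peak search in the joint position-pitch space are not reproduced, and the function names are illustrative.

```python
def split_frames(signal, length):
    """Partition a signal into consecutive non-overlapping frames of the
    given length; a trailing partial frame is dropped."""
    return [signal[i:i + length]
            for i in range(0, len(signal) - length + 1, length)]


def cross_correlation(x, y, max_lag):
    """Cross-correlation of two equal-length frames as a function of the
    discrete time lag: result[lag] = sum over n of x[n] * y[n + lag]."""
    n = len(x)
    result = {}
    for lag in range(-max_lag, max_lag + 1):
        total = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                total += x[i] * y[j]
        result[lag] = total
    return result
```

For a single source, the lag at which the cross-correlation peaks indicates the time difference of arrival between the two recording channels, which is related to the spatial parameter.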

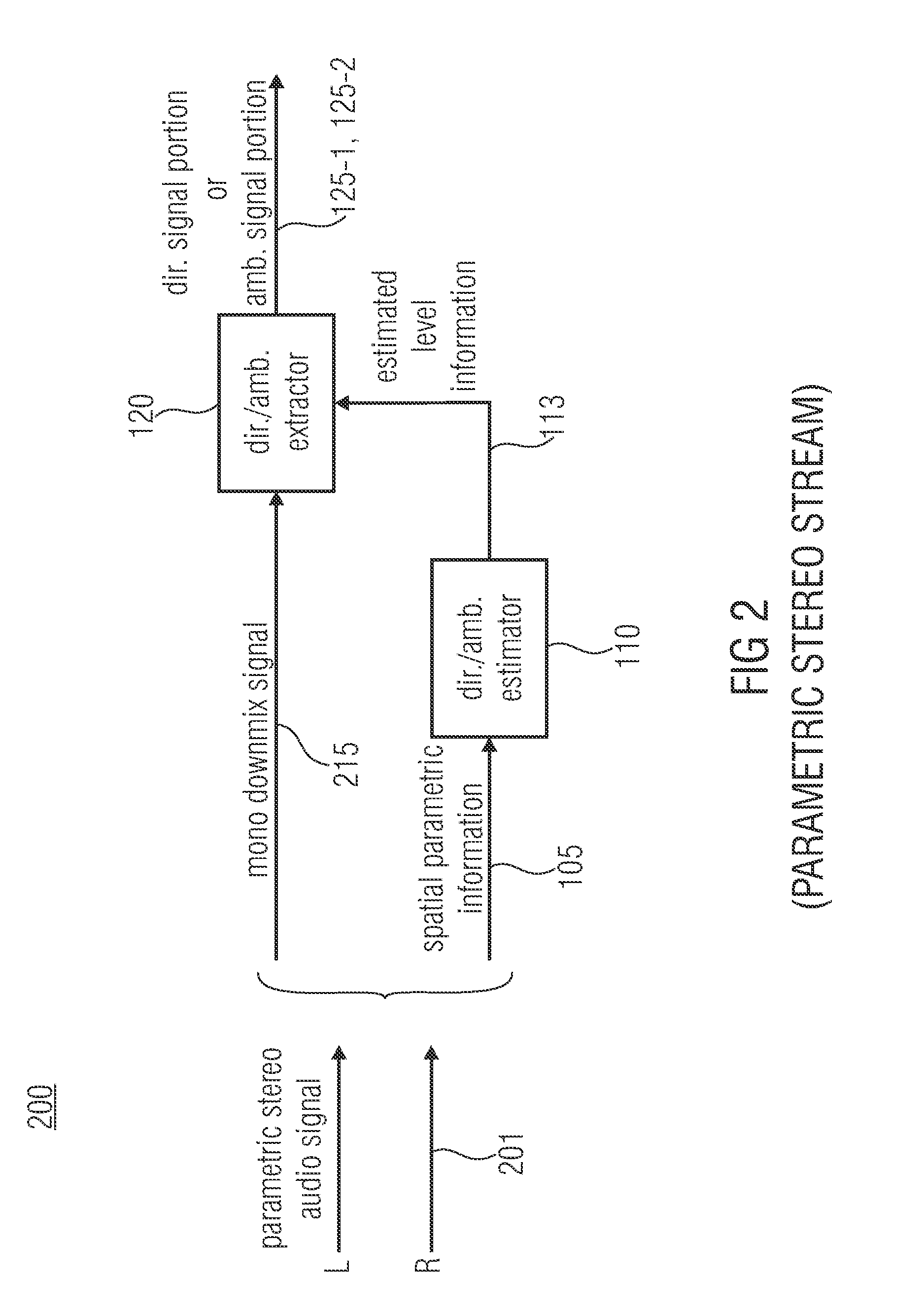

Parametric stereo upmix apparatus, a parametric stereo decoder, a parametric stereo downmix apparatus, a parametric stereo encoder

Active · US8811621B2 · Quality of left and right · Left and right signals · Speech analysis · Pseudo-stereo systems · Vocal tract · Computer vision

A parametric stereo upmix apparatus generates left and right signals from a mono downmix signal based on spatial parameters. The parametric stereo upmix includes a predictor configured to predict a difference signal including a difference between the left and right signals based on the mono downmix signal scaled with a prediction coefficient. The prediction coefficient is derived from the spatial parameters. The parametric stereo upmix apparatus further includes an arithmetic unit configured to derive the left and right signals based on a sum and a difference of the mono downmix signal and the difference signal.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV
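The sum-and-difference step in the abstract above can be sketched as follows. The prediction coefficient `alpha` is taken as an input because the abstract does not say how it is derived from the spatial parameters; the function name and the absence of any scaling factor are assumptions.

```python
def parametric_upmix(mono, alpha):
    """Upmix a mono downmix to (left, right).

    The difference signal is predicted as alpha * mono; left is the sum and
    right is the difference of the mono signal and the predicted difference.
    """
    diff = [alpha * m for m in mono]
    left = [m + d for m, d in zip(mono, diff)]
    right = [m - d for m, d in zip(mono, diff)]
    return left, right
```

With `alpha = 0` both outputs equal the mono signal, and larger magnitudes of `alpha` push energy toward one side.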

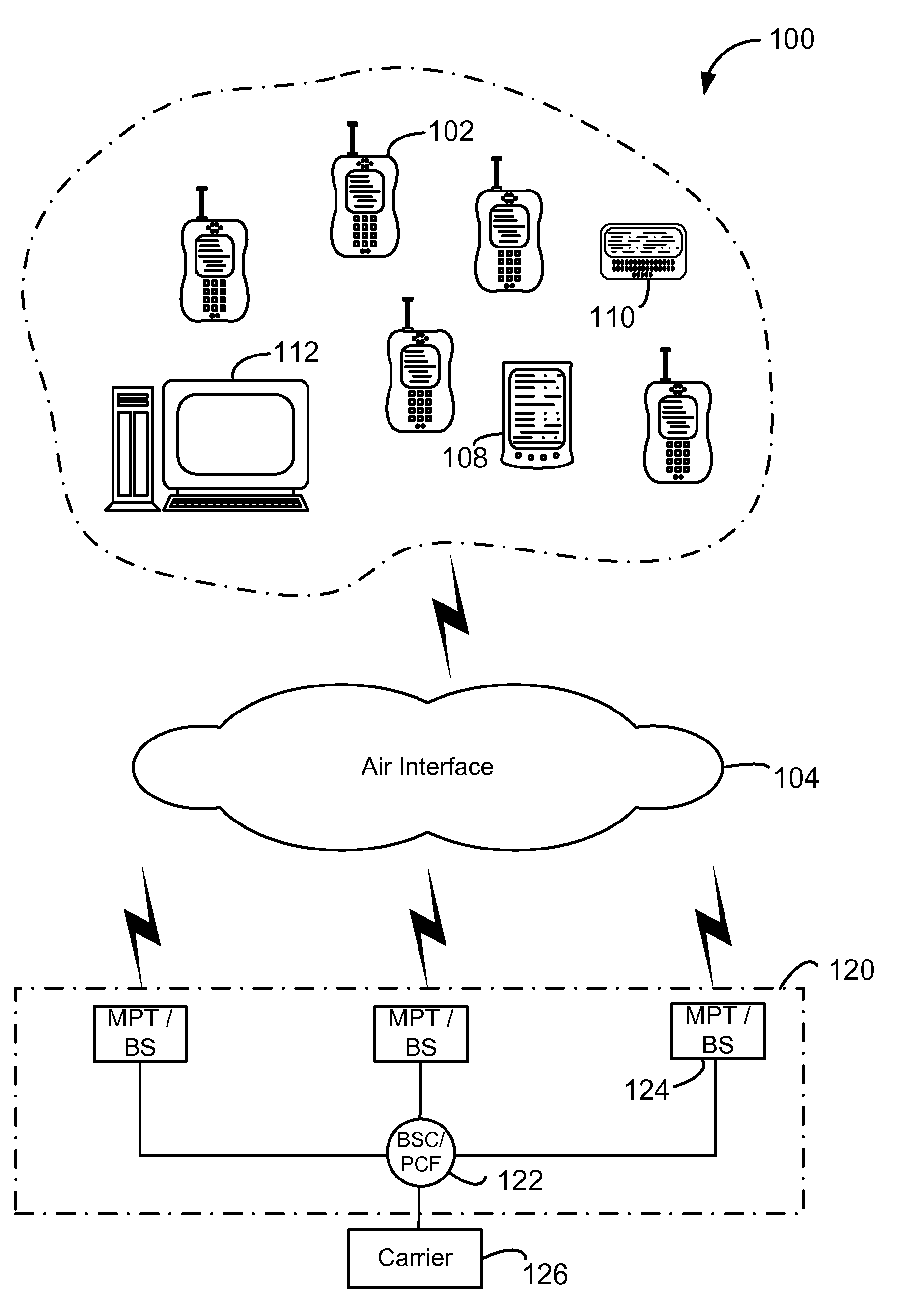

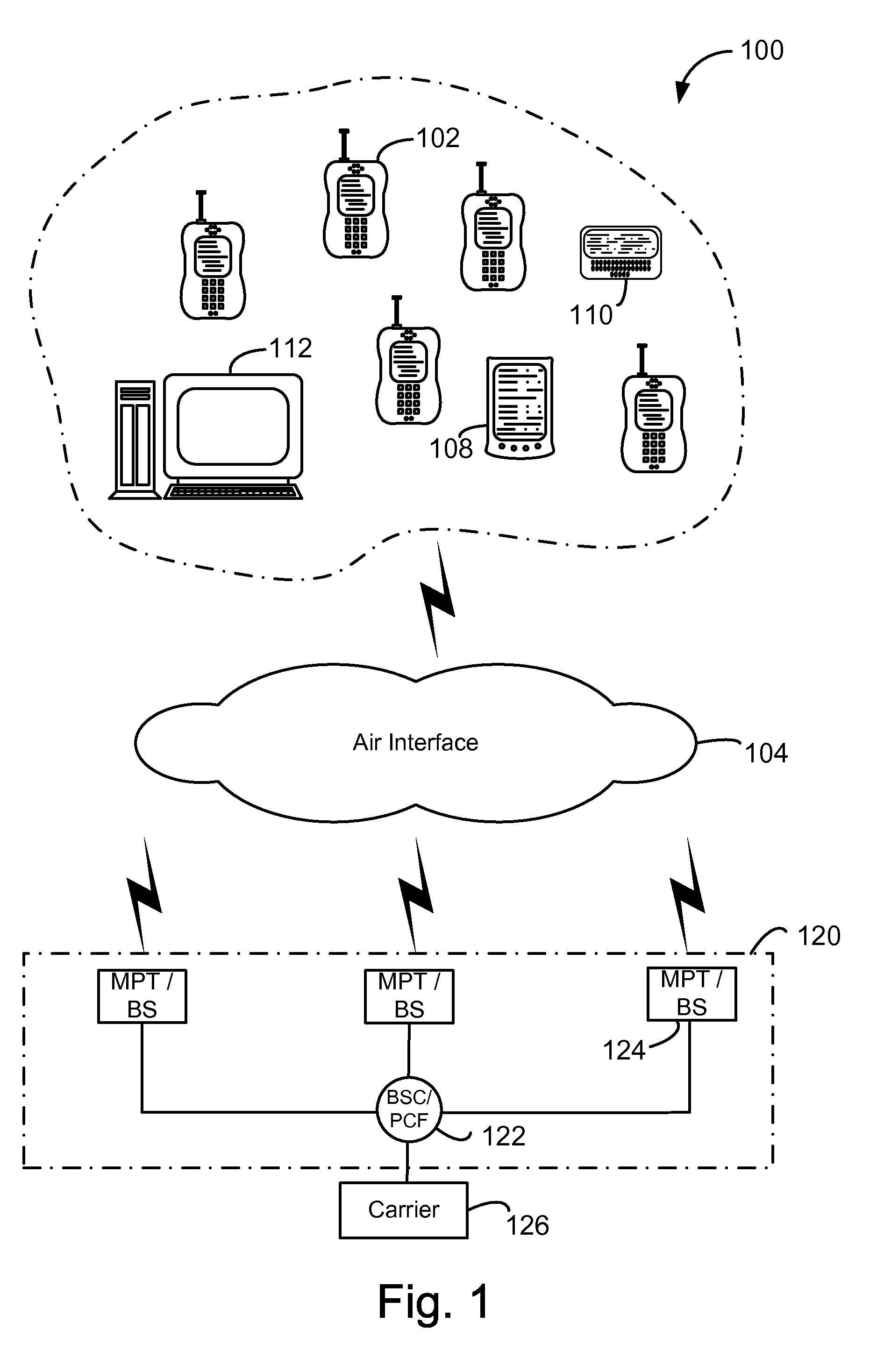

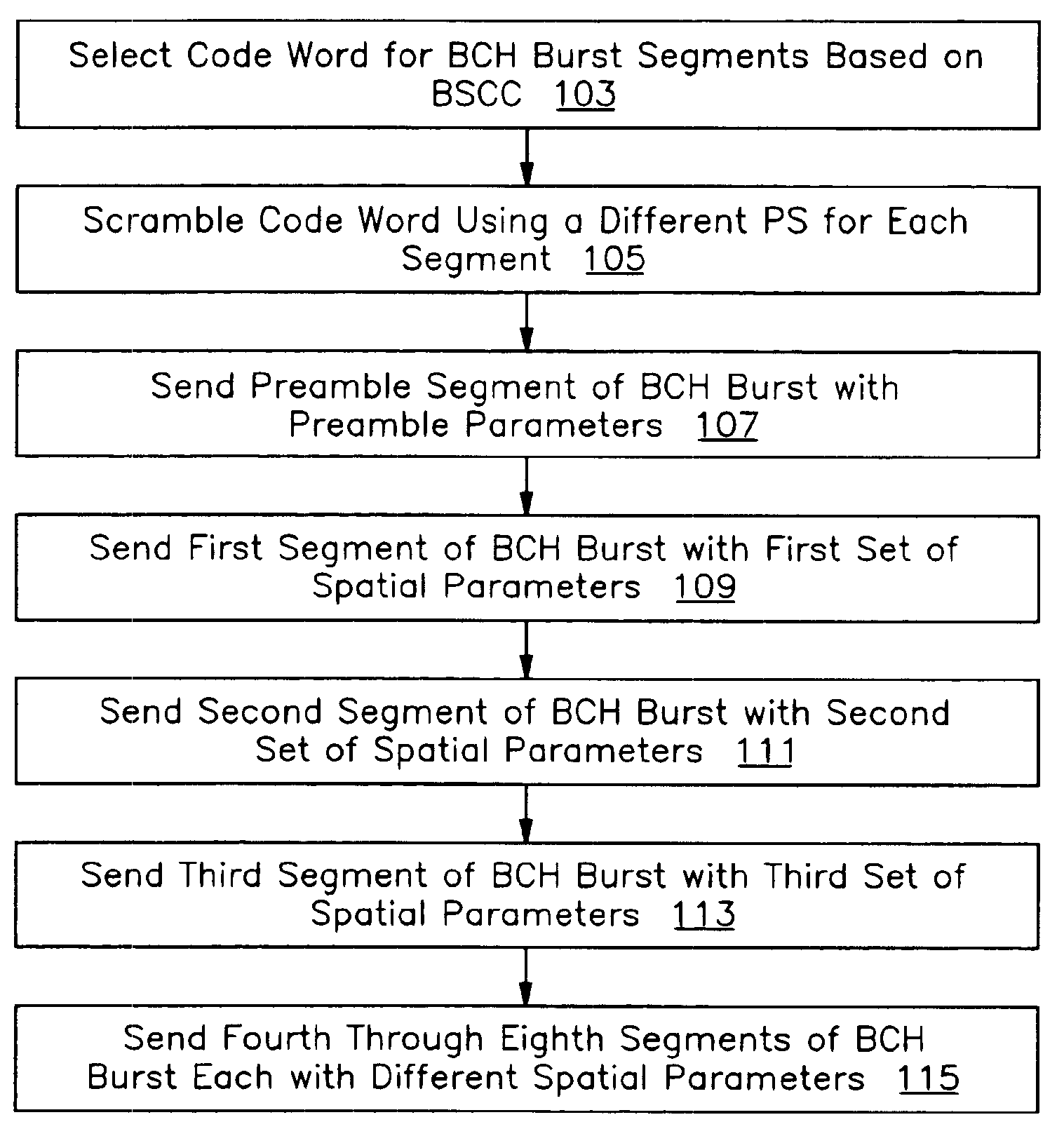

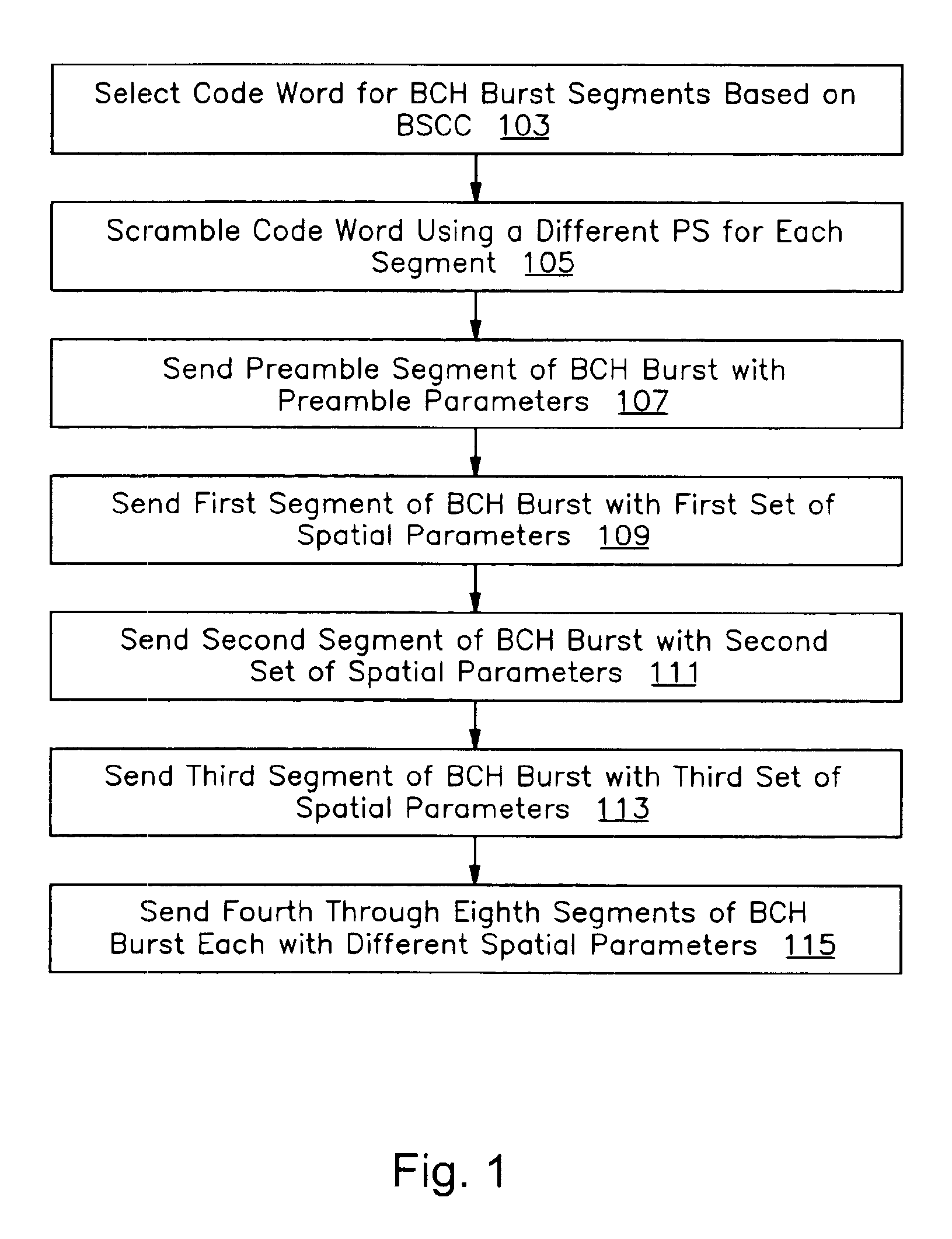

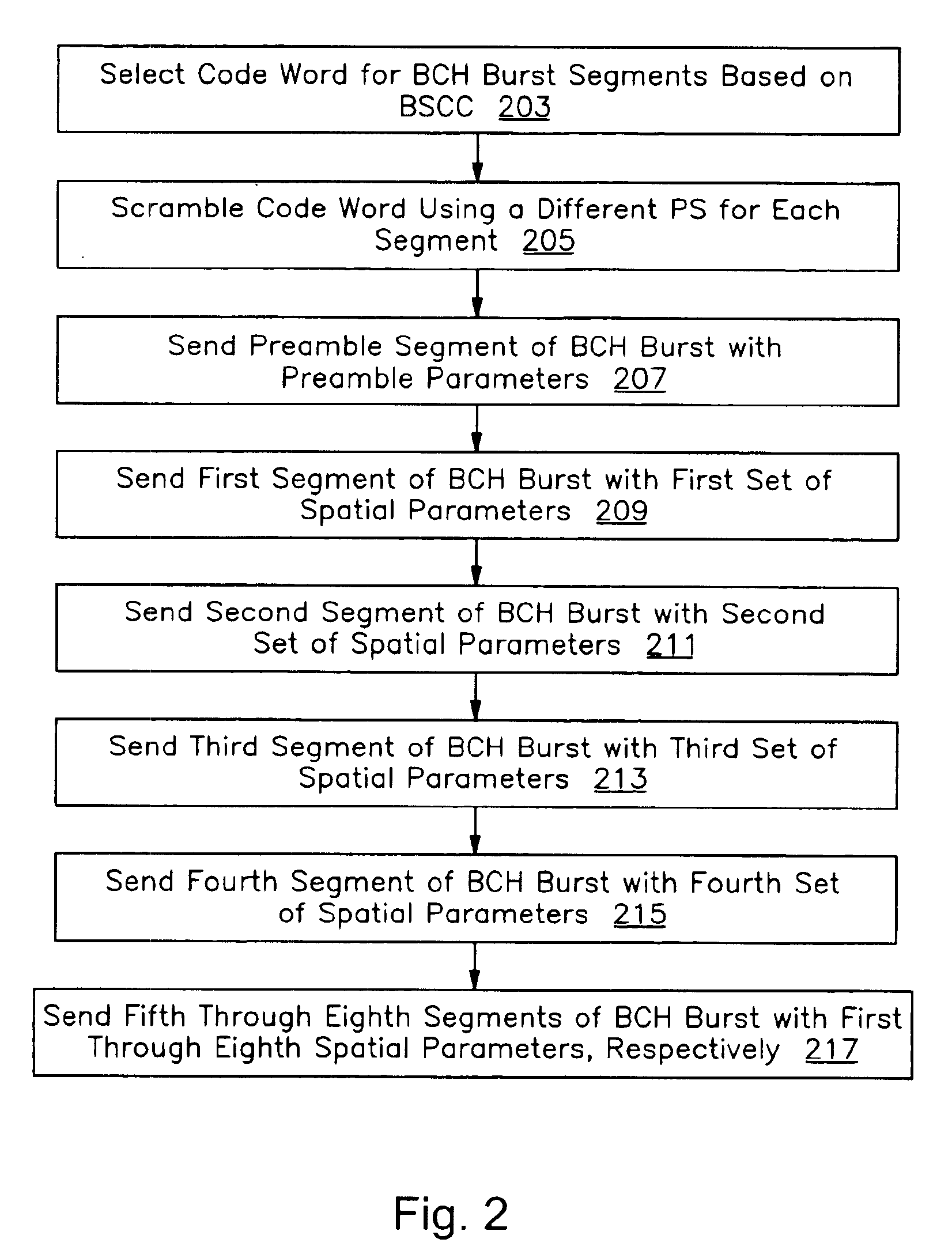

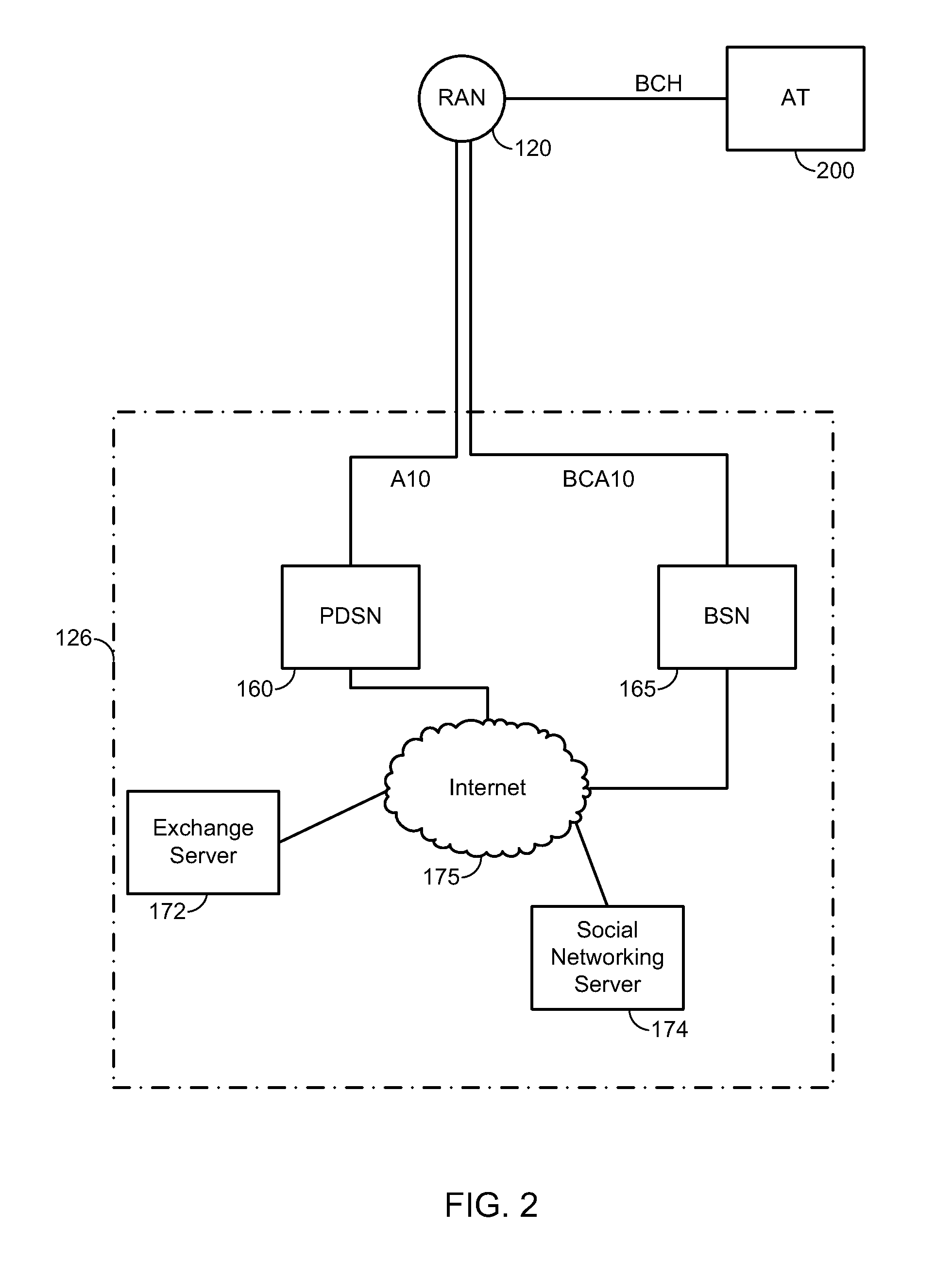

Efficient broadcast channel structure and use for spatial diversity communications

Active · US6928287B2 · Spatial transmit diversity · Time-division multiplex · Broadcast channels · Computer science

Owner:APPLE INC

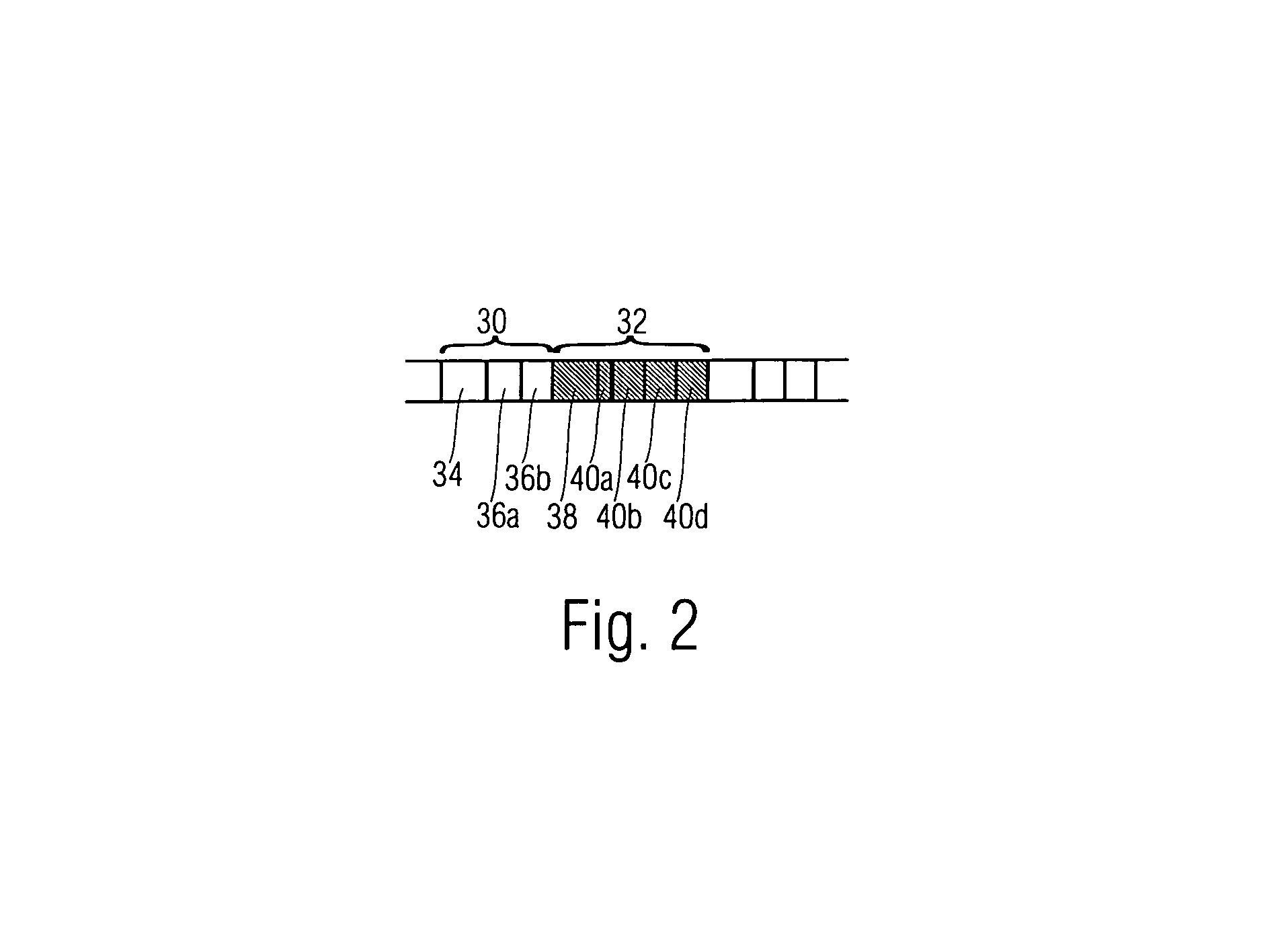

Audio coding/decoding with spatial parameters and non-uniform segmentation for transients

In binaural stereo coding, only one monaural channel is encoded. An additional layer holds the parameters to retrieve the left and right signal. An encoder is disclosed which links transient information extracted from the mono encoded signal to parametric multi-channel layers to provide increased performance. Transient positions can either be directly derived from the bit-stream or be estimated from other encoded parameters (e.g. window-switching flag in mp3).

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

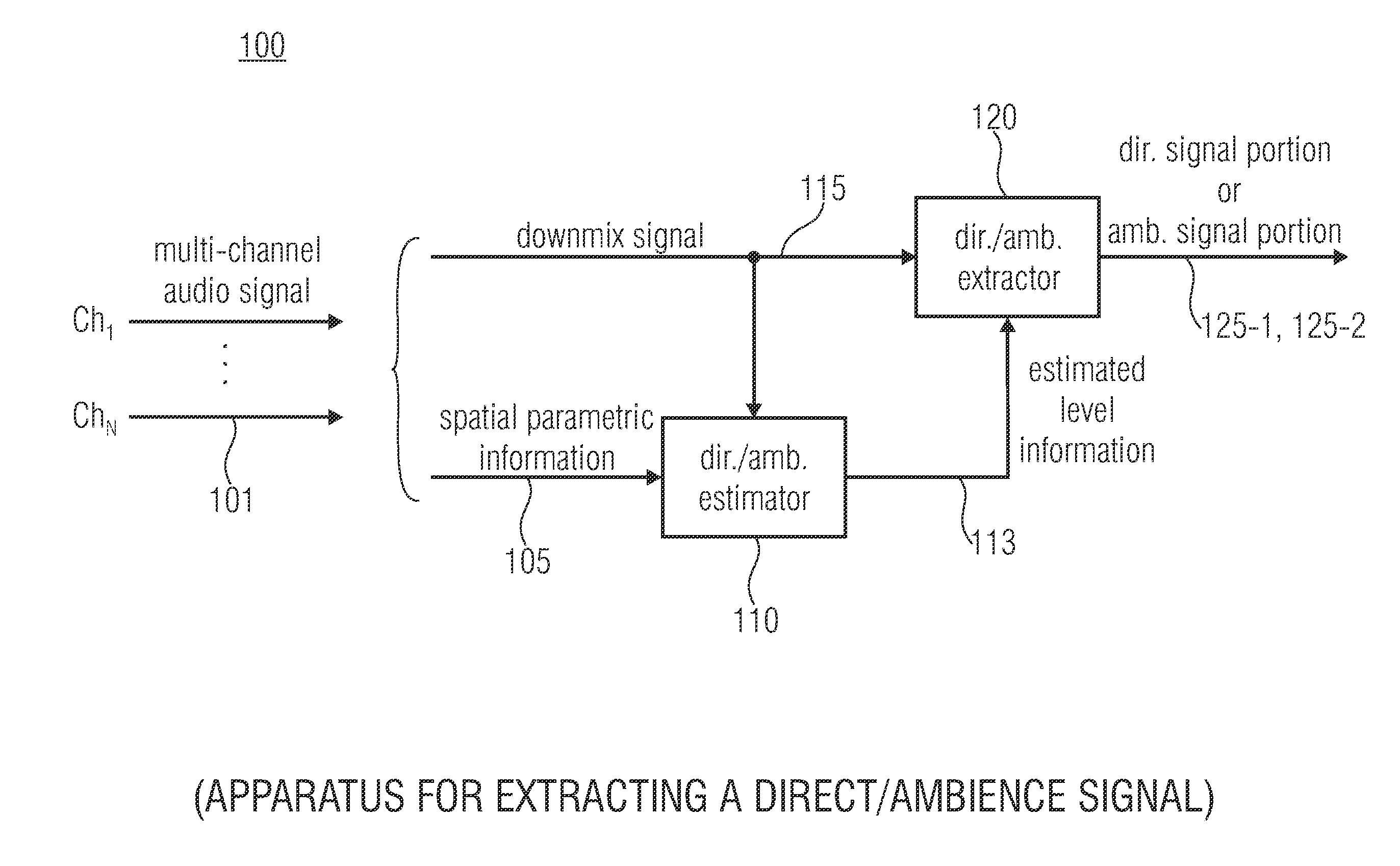

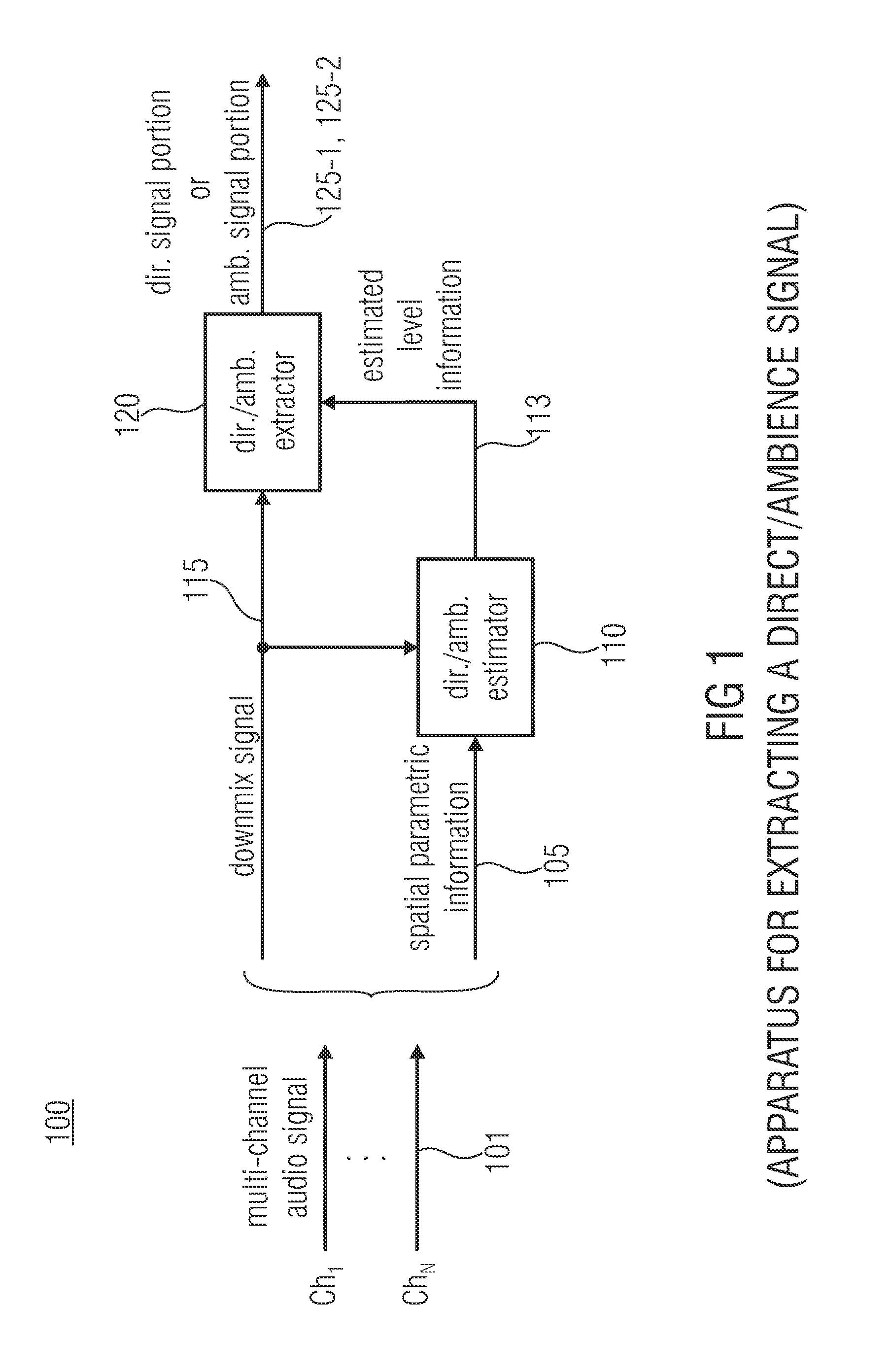

Apparatus and method for extracting a direct/ambience signal from a downmix signal and spatial parametric information

An apparatus for extracting a direct and / or ambience signal from a downmix signal and spatial parametric information, the downmix signal and the spatial parametric information representing a multi-channel audio signal having more channels than the downmix signal, wherein the spatial parametric information has inter-channel relations of the multi-channel audio signal, is described. The apparatus has a direct / ambience estimator and a direct / ambience extractor. The direct / ambience estimator is configured for estimating a level information of a direct portion and / or an ambient portion of the multi-channel audio signal based on the spatial parametric information. The direct / ambience extractor is configured for extracting a direct signal portion and / or an ambient signal portion from the downmix signal based on the estimated level information of the direct portion or the ambient portion.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

Integrated display and management of data objects based on social, temporal and spatial parameters

In an embodiment, a client device receives a query that specifies social, temporal and spatial parameters relative to a set of users (e.g., a source user or source user group). The client device determines degrees to which the specified parameters are related to a group of target users in social, temporal and spatial dimensions. The client device also determines an expected availability of one or more target users for interaction (e.g., interaction via particular types of communication session types, such as voice, video, text, etc.). The client device performs a processing function on at least one data object associated with the group of target users based on (i) whether the determined degrees of relation satisfy the specified parameters of the query, and (ii) the expected availability of the one or more target users in the group of target users.

Owner:QUALCOMM INC

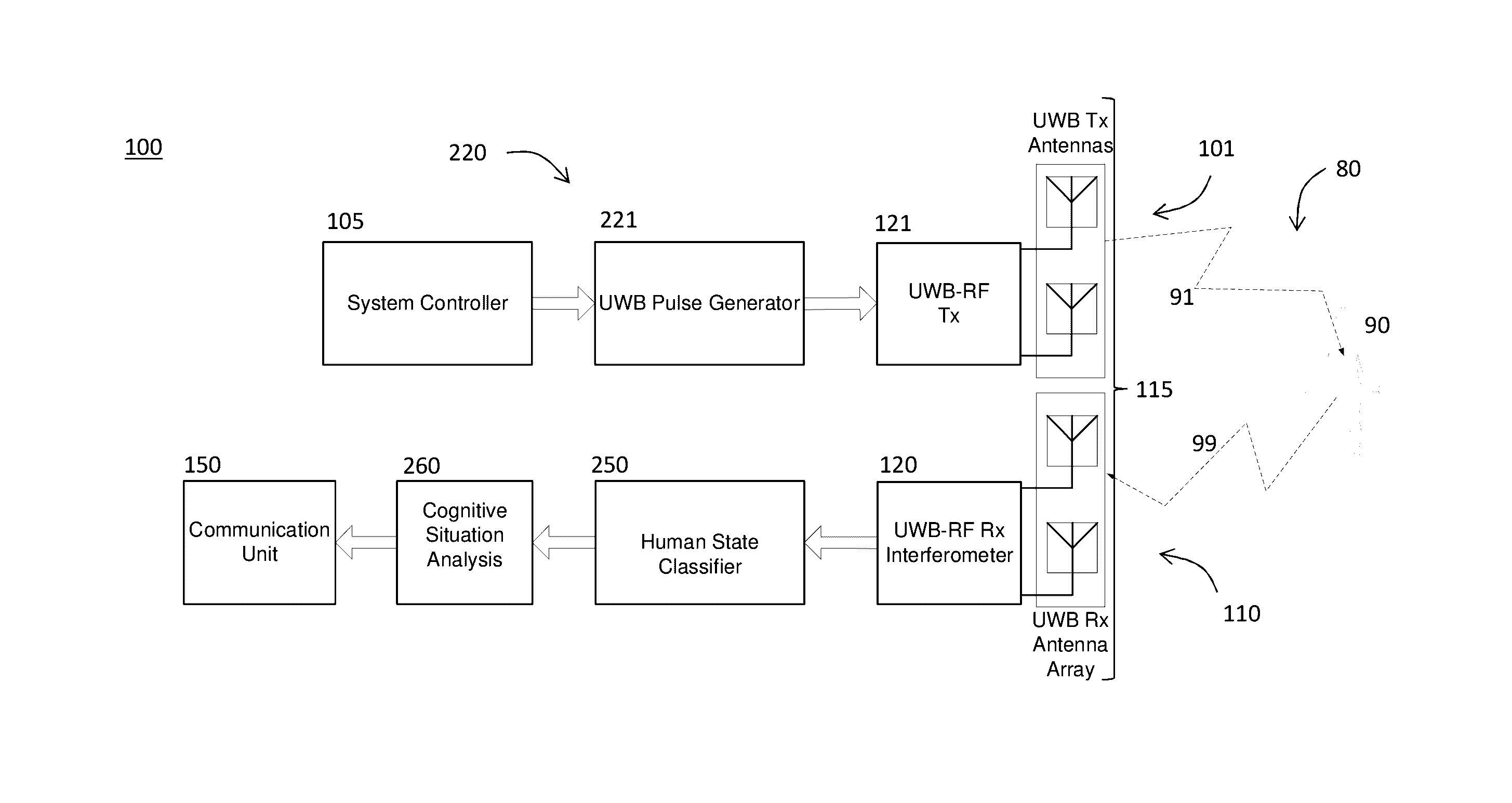

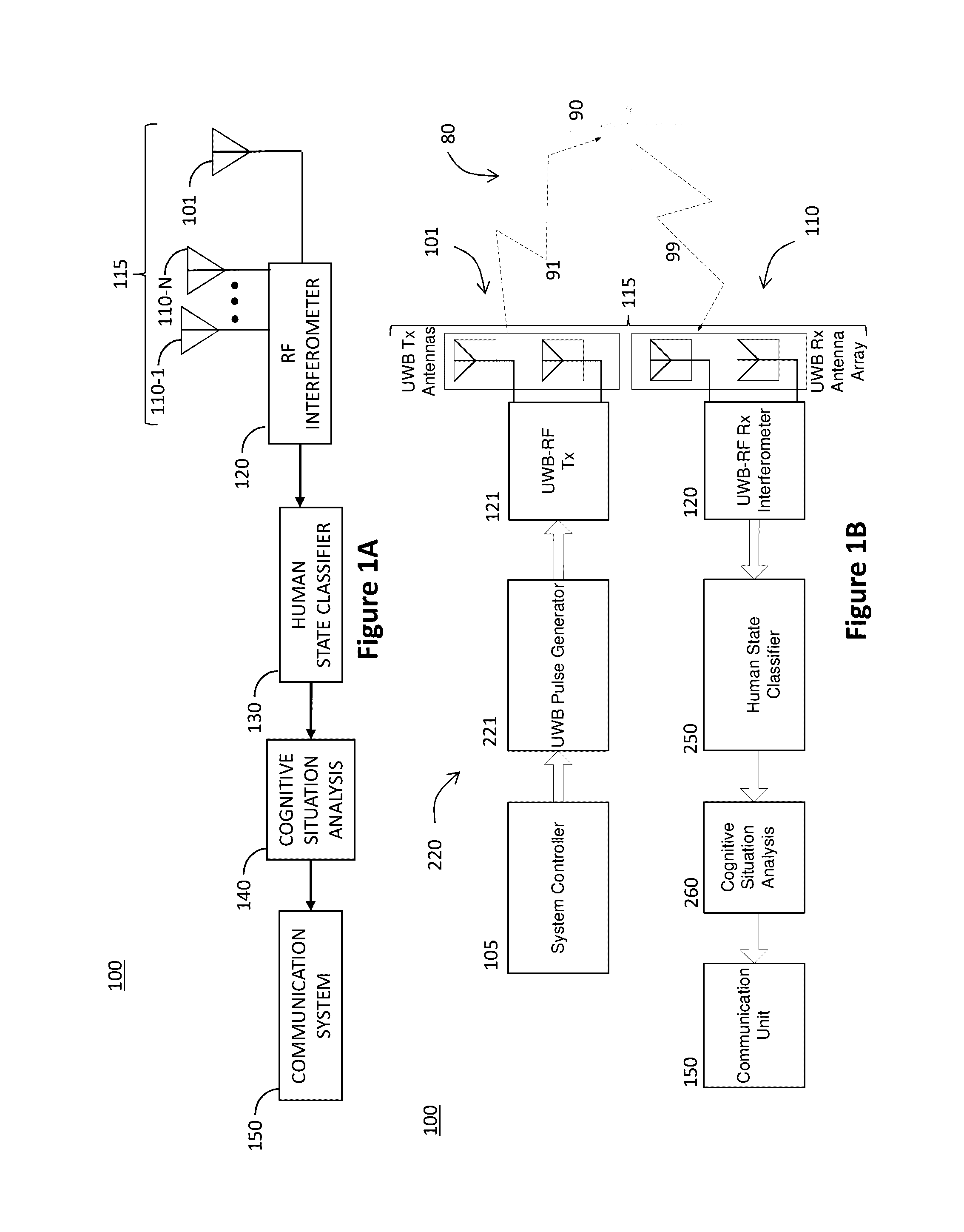

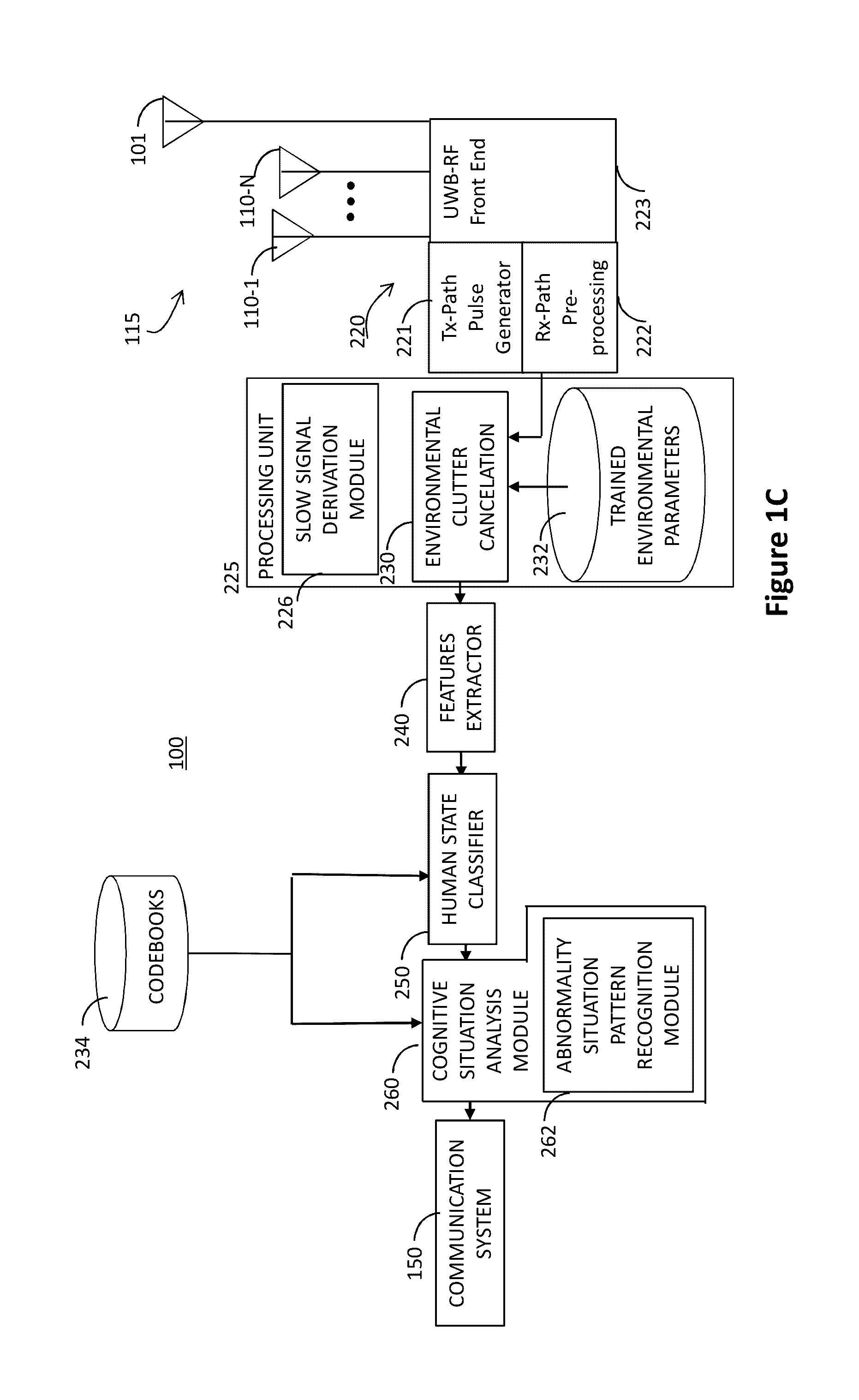

Human posture feature extraction in personal emergency response systems and methods

A non-wearable Personal Emergency Response System (PERS) architecture is provided, implementing RF interferometry using synthetic aperture antenna arrays to derive ultra-wideband echo signals which are analyzed and then processed by a two-stage human state classifier and abnormal states pattern recognition. Systems and methods transmit ultra-wide band radio frequency signals at, and receive echo signals from, the environment, process the received echo signals to derive a spatial distribution of echo sources in the environment using spatial parameters of the at least one transmitting and / or receiving antennas, and estimate postures of human(s) in the environment by analyzing the spatial distribution with respect to echo intensity. The antennas may be arranged in several linear baselines, implement virtual displacements, and may be set into multiple communicating sub-arrays. The decision process is carried out based on the instantaneous human state (local decision) followed by abnormal states pattern recognition (global decision).

Owner:ECHOCARE TECH LTD

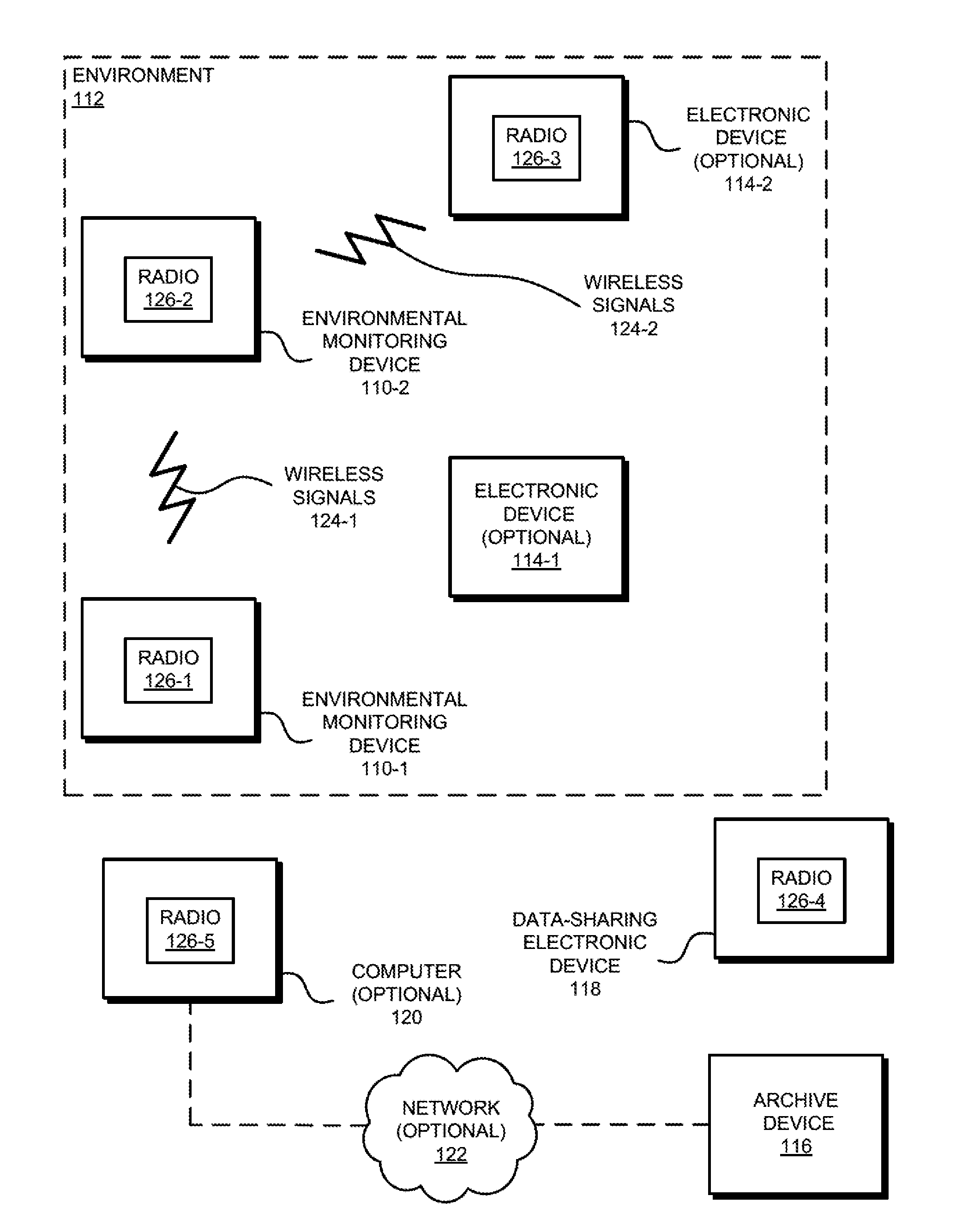

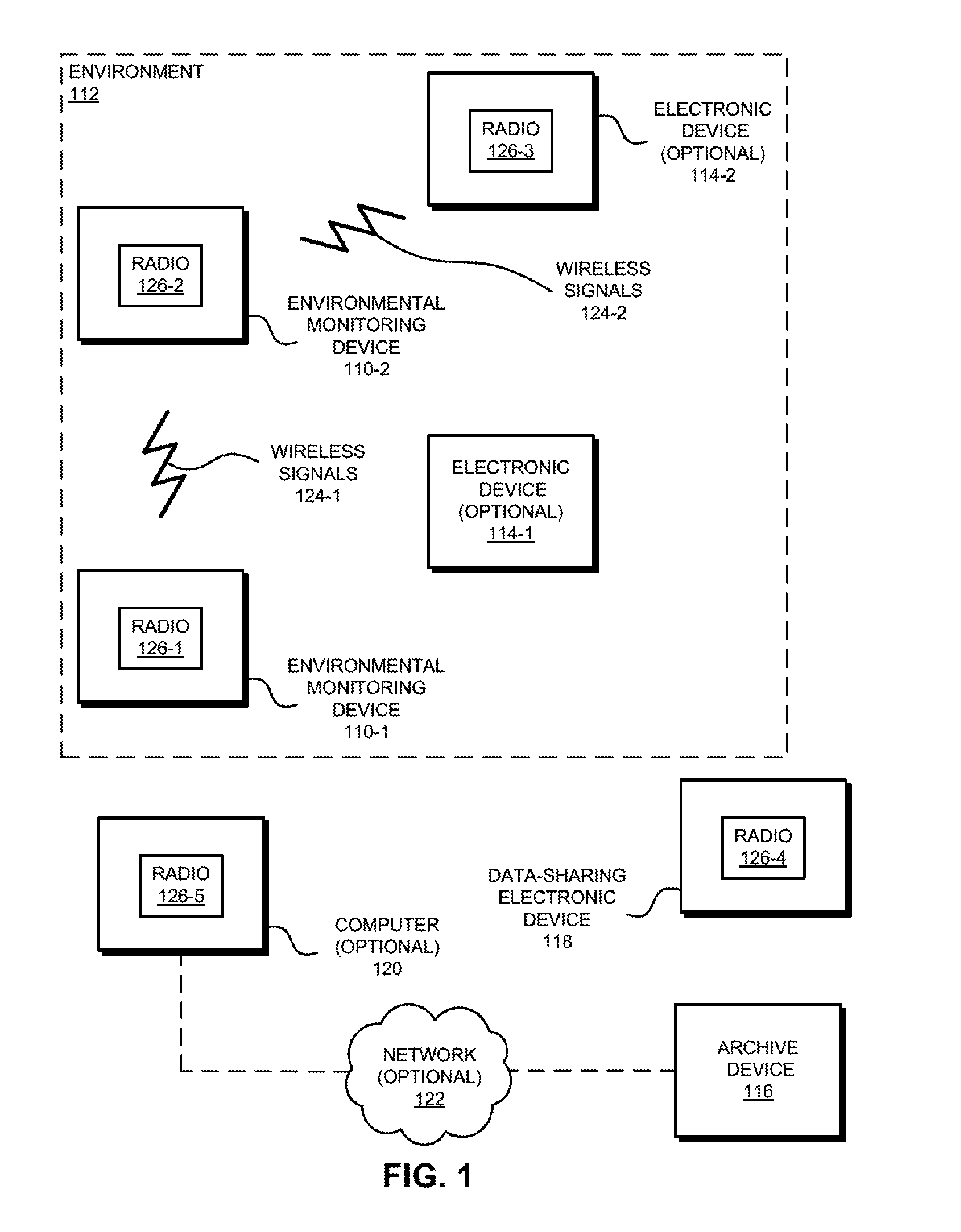

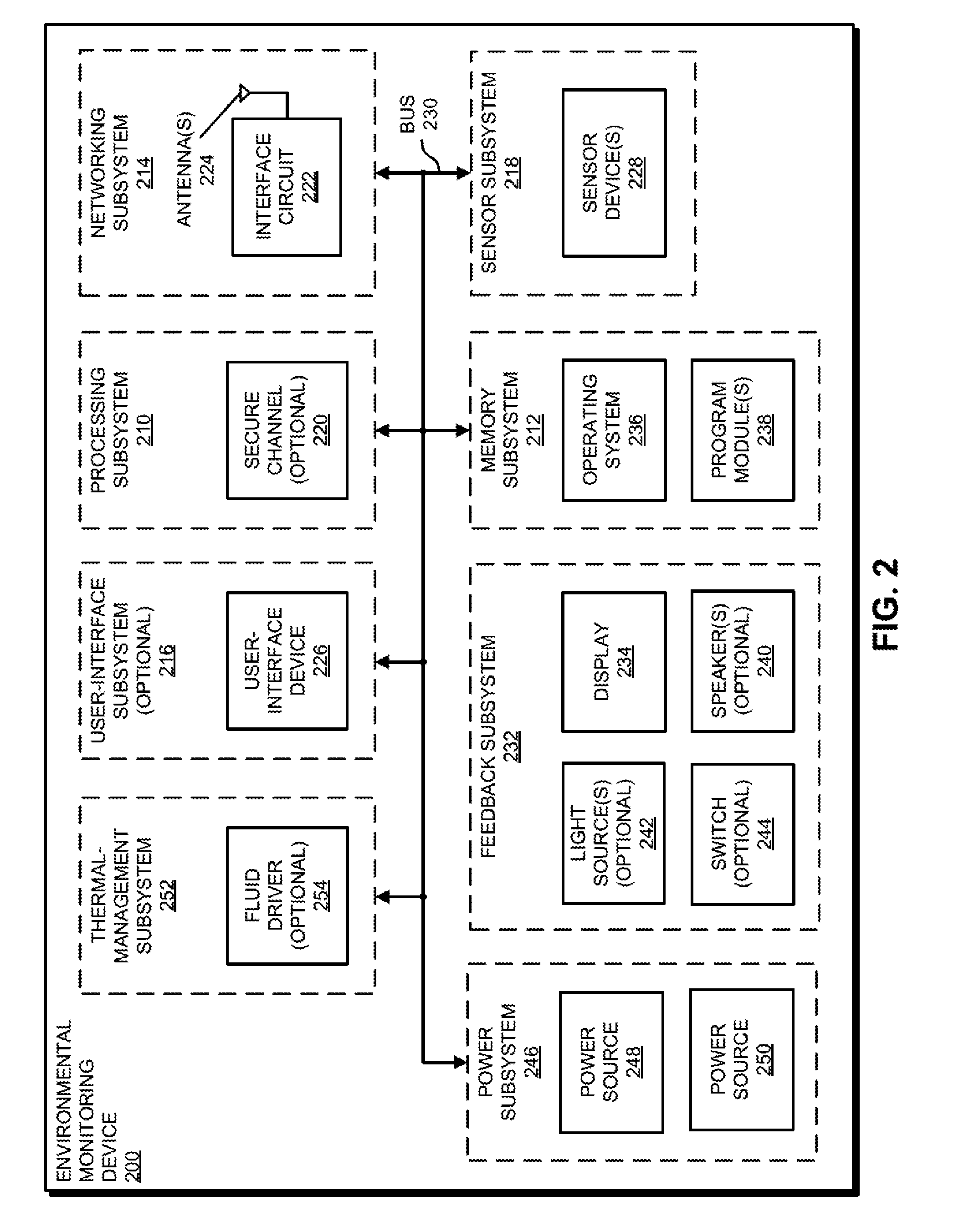

Electronic device with environmental monitoring

Active · US20150022357A1 · Engagement/disengagement of coupling parts · Measurement apparatus components · Coupling · Engineering

A mounting system for an electronic device is described. This mounting system includes a base that can be rigidly mounted on or underneath a wall. Moreover, the base can be remateably coupled to the electronic device. The remateable coupling may involve pins that are inserted into corresponding holes and rotated into a lock position. Alternatively, the remateable coupling may involve magnets that mechanically couple to each other so long as the electronic device and the base are within a predefined distance. The electronic device may receive power via the remateable coupling or via inductive charging. In addition, the electronic device may monitor a spatial parameter, such as: a location of the electronic device, a velocity of the electronic device and / or an acceleration of the electronic device. If this spatial parameter changes without the electronic device first receiving a security code, the electronic device provides an alert.

Owner:LEEO
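The alert rule at the end of the abstract above reduces to a small predicate. The tuple representation of the spatial parameter, the tolerance argument and the function name are assumptions introduced for illustration.

```python
def should_alert(previous, current, security_code_received, tolerance=0.0):
    """Return True when the monitored spatial parameter (for example a
    location, velocity or acceleration vector) has changed by more than the
    tolerance in any component and no security code was received first."""
    changed = any(abs(c - p) > tolerance for p, c in zip(previous, current))
    return changed and not security_code_received
```

A non-zero tolerance is one way to avoid alerts from sensor noise; the abstract does not state whether the device uses one.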

Adaptive residual audio coding

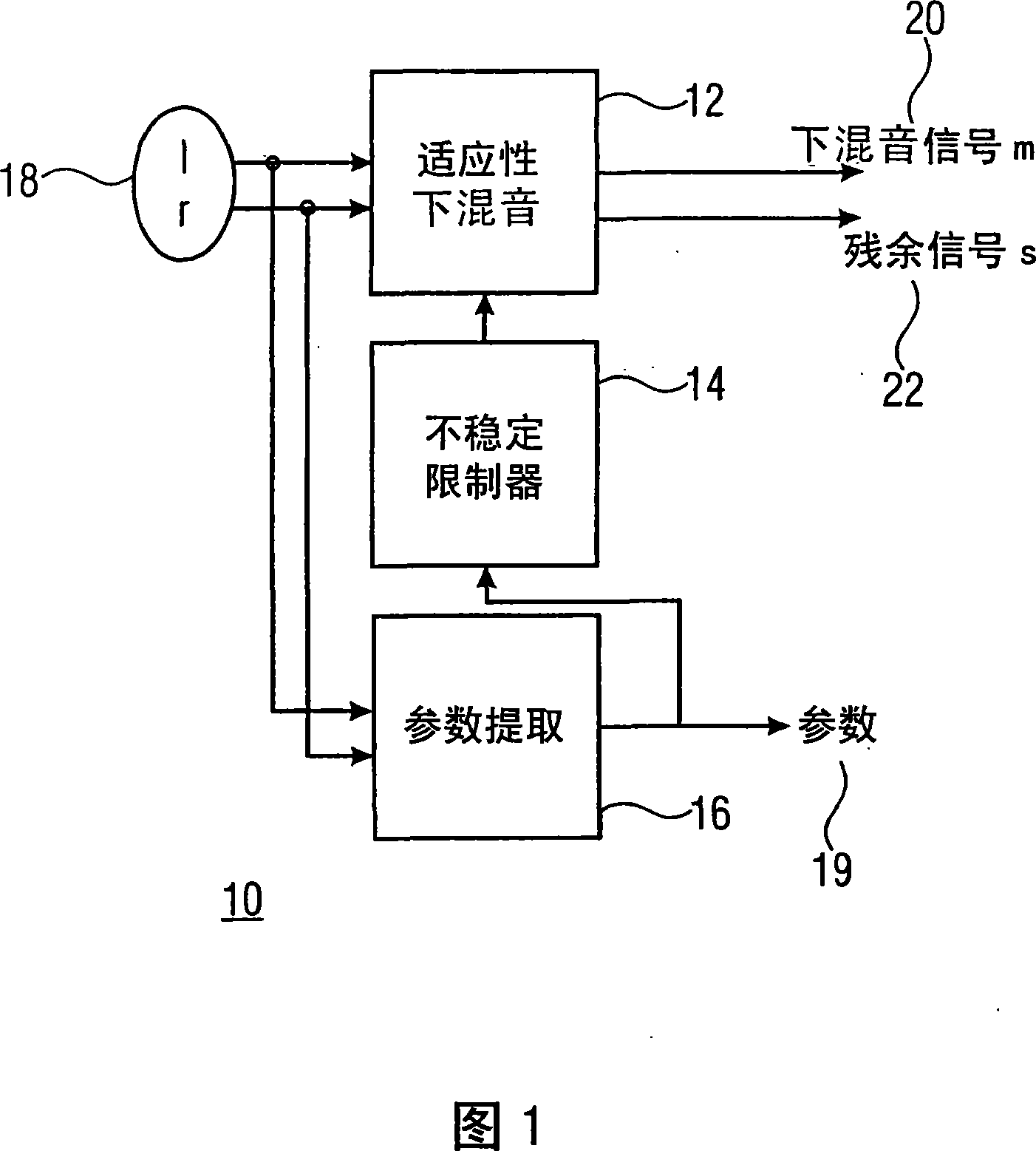

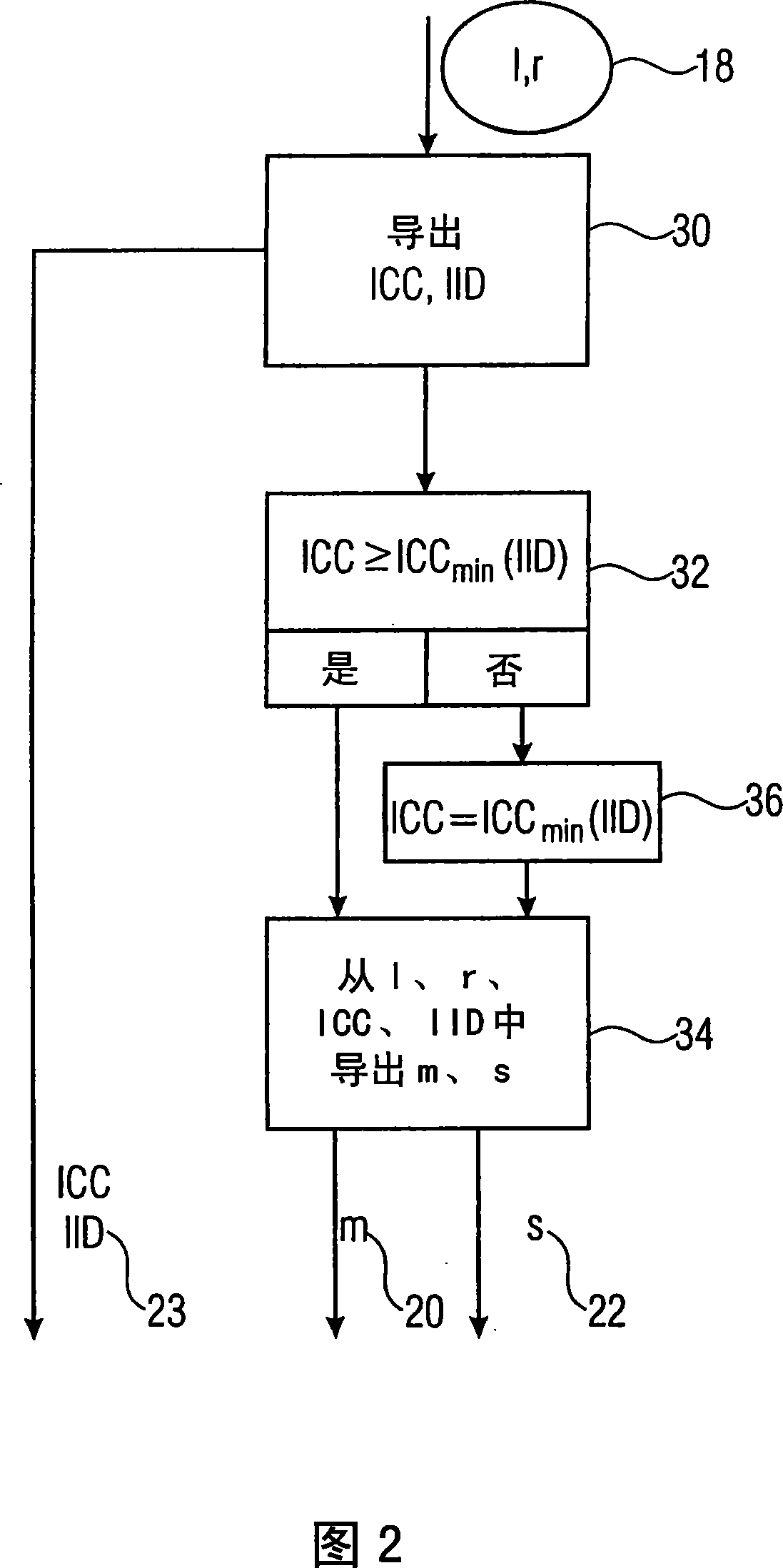

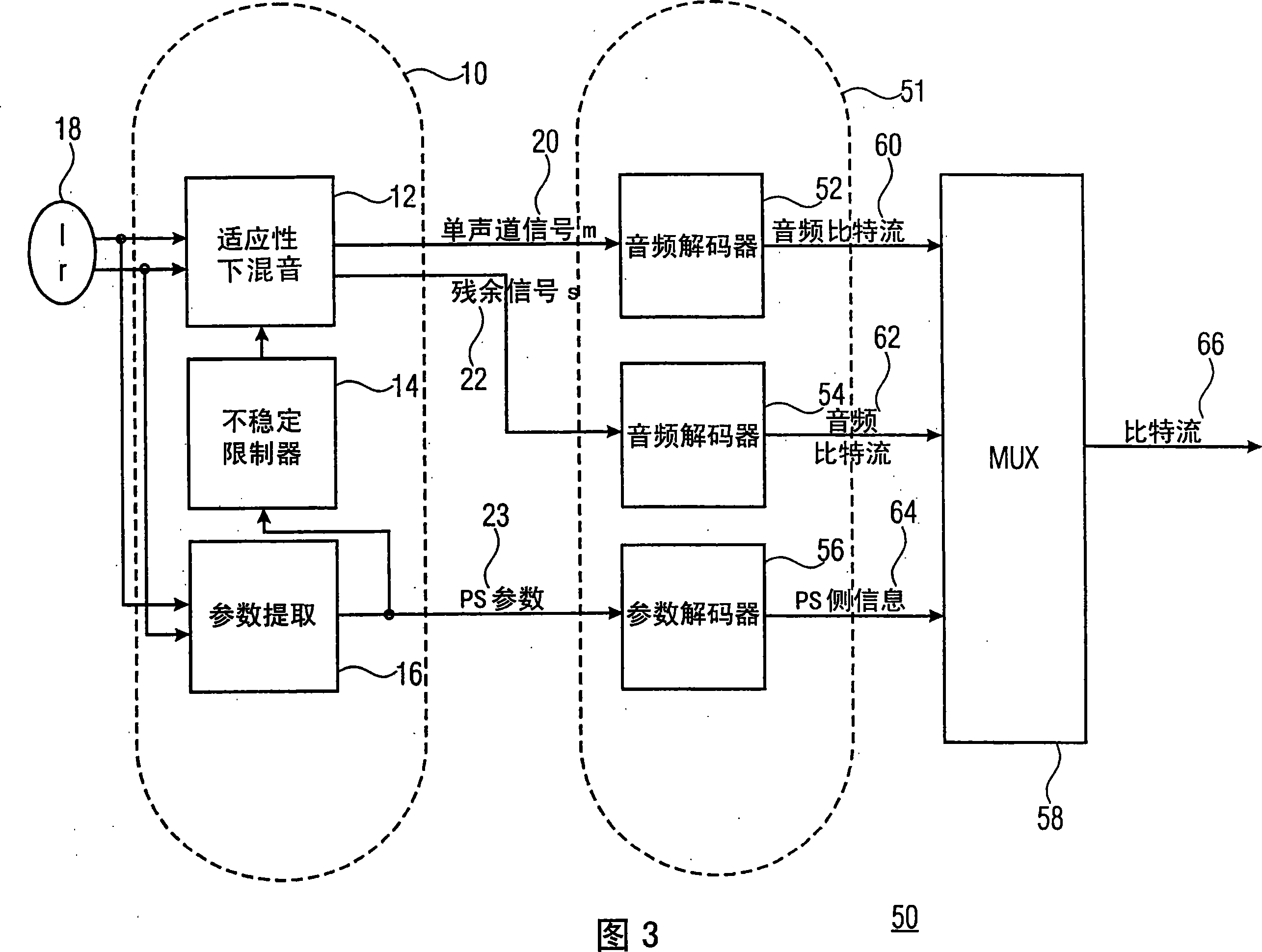

Active · CN101160619A · Save relationship · Implement the restriction logic · Speech analysis · Spatial perception · Instability

An audio signal having at least two channels can be efficiently down-mixed into a downmix signal and a residual signal, when the down-mixing rule used depends on a spatial parameter that is derived from the audio signal and that is post-processed by a limiter to apply a certain limit to the derived spatial parameter with the aim of avoiding instabilities during the up-mixing or down-mixing process. By having a down-mixing rule that dynamically depends on parameters describing an interrelation between the audio channels, one can assure that the energy within the down-mixed residual signal is as minimal as possible, which is advantageous in the view of coding efficiency. By post processing the spatial parameter with a limiter prior to using it in the down-mixing, one can avoid instabilities in the down- or up-mixing, which otherwise could result in a disturbance of the spatial perception of the encoded or decoded audio signal.

Owner:DOLBY INT AB +1
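The limiter post-processing mentioned in the abstract above can be pictured as a simple clamp applied to the derived spatial parameter before it is used in the down-mixing rule. The bounds and the function name are hypothetical, and the down-mixing rule itself is not specified in the abstract and is not shown.

```python
def limit_spatial_parameter(value, lower, upper):
    """Clamp a derived spatial parameter to [lower, upper] so that extreme
    values cannot destabilize the subsequent down- or up-mixing."""
    if lower > upper:
        raise ValueError("lower bound must not exceed upper bound")
    return max(lower, min(upper, value))
```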

Integrated display and management of data objects based on social, temporal and spatial parameters

Active · US8302015B2 · Multiple digital computer combinations · Substation equipment · Object based · Visual perception

An embodiment is directed to displaying information to a user of a communications device. The communications device receives a query including a social parameter, a temporal parameter and a spatial parameter relative to the user that are indicative of a desired visual representation of a set of data objects. The communications device determines degrees to which the social, temporal and spatial parameters of the query are related to each of the set of data objects in social, temporal and spatial dimensions, respectively. The communications device displays a first visual representation of at least a portion of the set of data objects to the user based on whether the determined degrees of relation in the social dimension, temporal dimension and spatial dimension satisfy the respective parameters of the query.

Owner:QUALCOMM INC

Low complexity MPEG encoding for surround sound recordings

Active · US20100169102A1 · Reduce calculation · Reduce performance · Speech analysis · Loudspeaker spatial/constructional arrangements · Microphone signal · Computer science

The invention provides for the encoding of surround sound produced by any coincident microphone techniques with coincident-to-virtual microphone signal matrixing. An encoding scheme provides significantly lower computational demand, by deriving the spatial parameters and output downmixes from the coincident microphone array signals and the coincident-to-surround channel-coefficients matrix, instead of the multi-channel signals.

Owner:STMICROELECTRONICS ASIA PACIFIC PTE

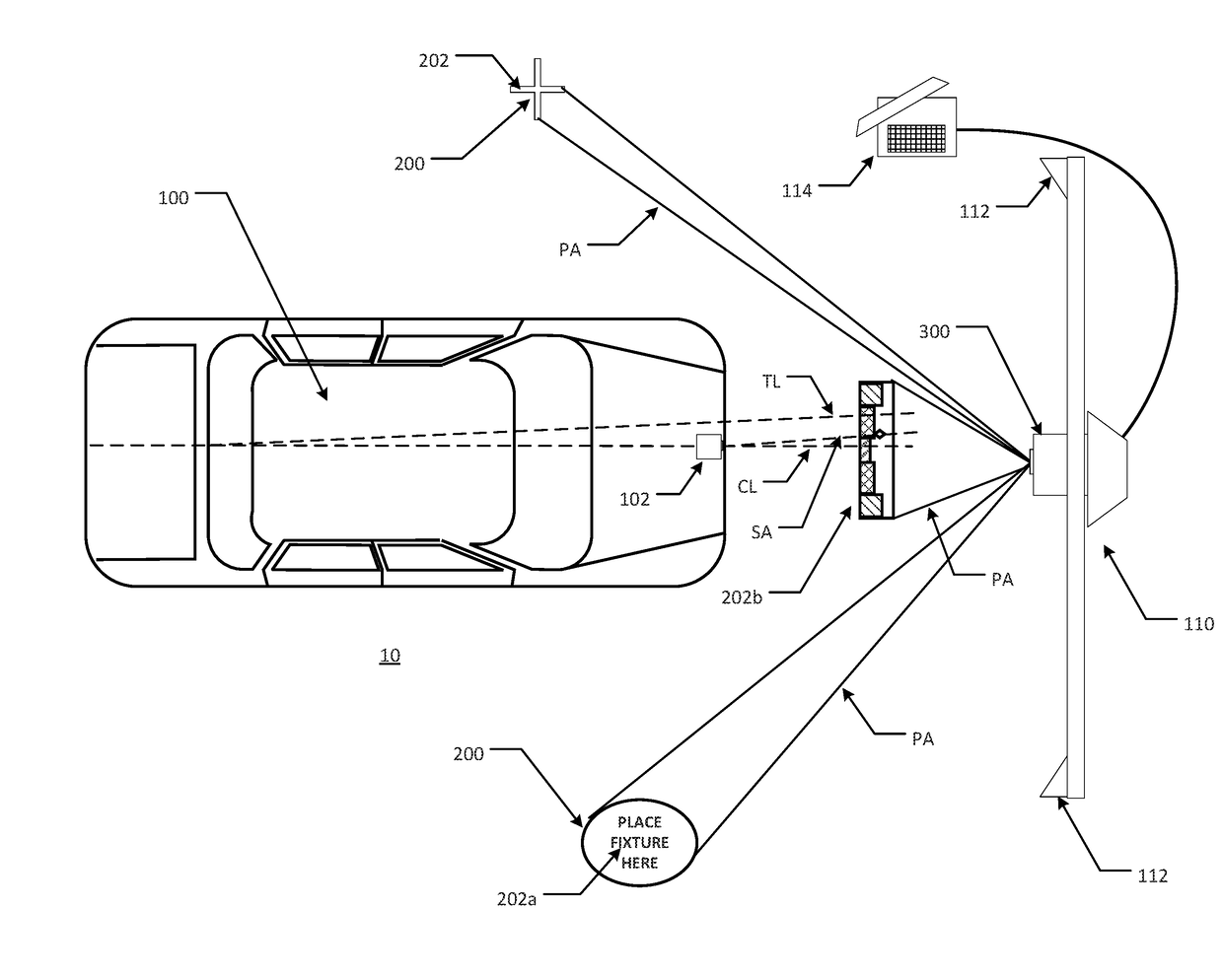

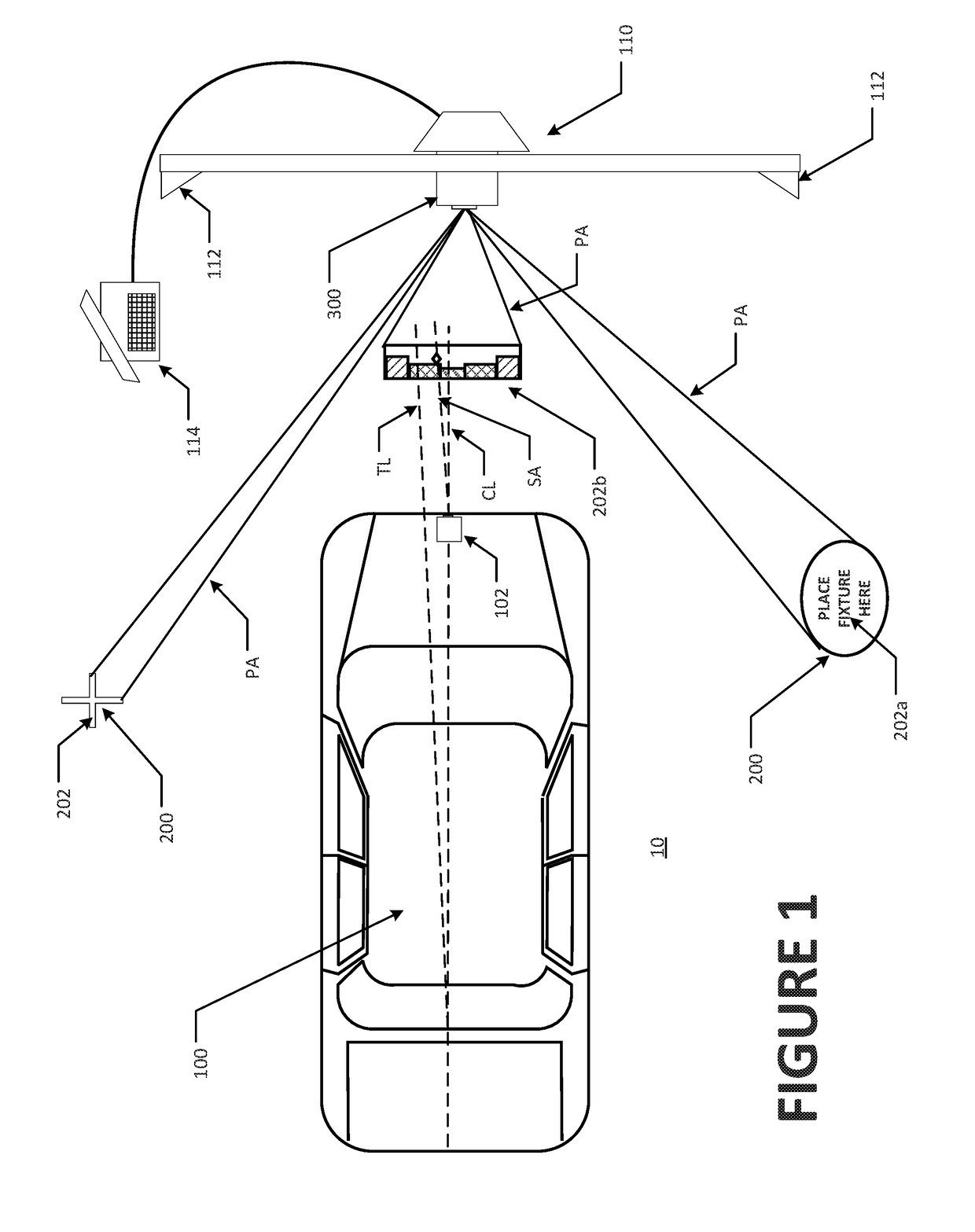

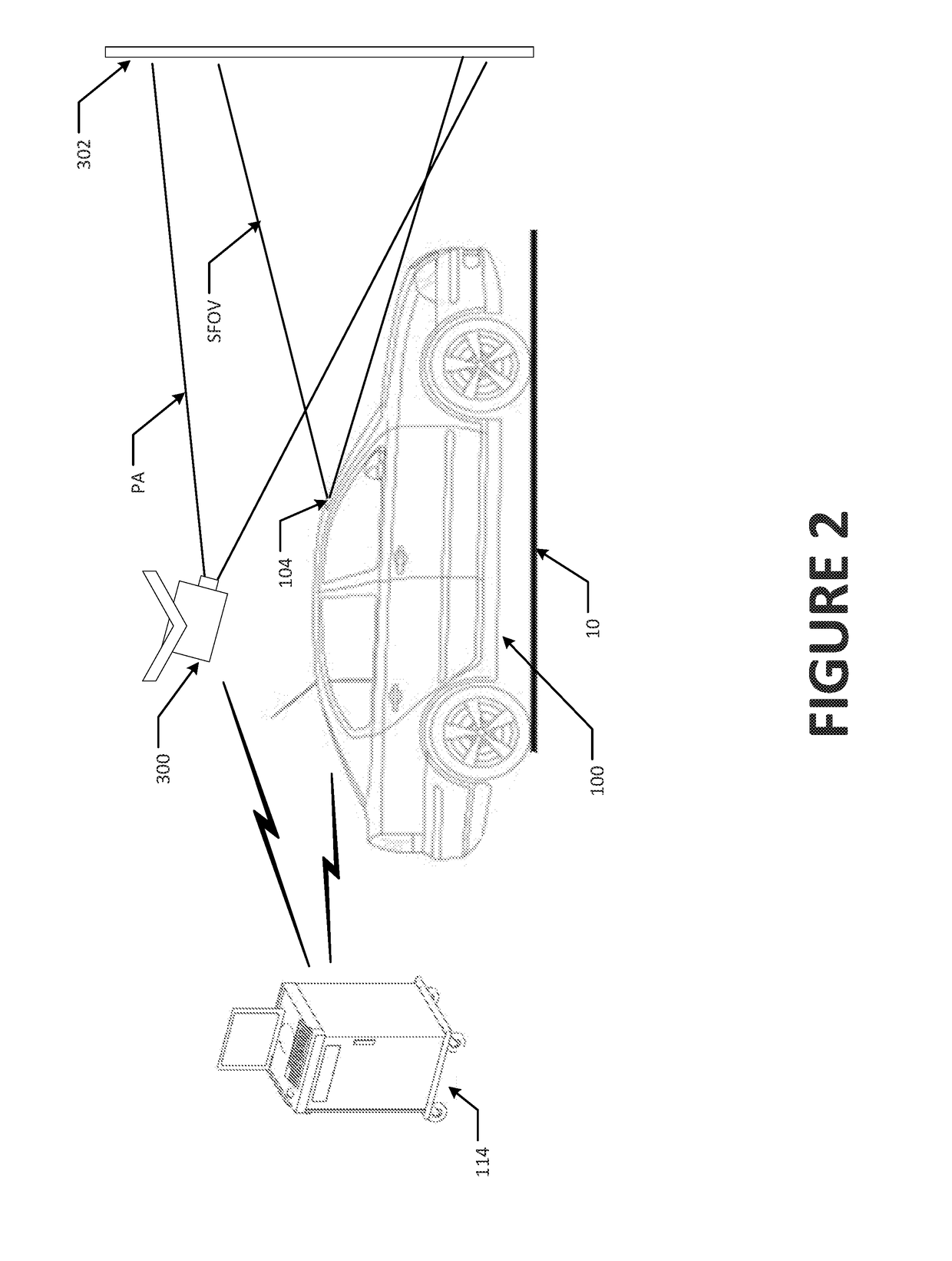

Method and Apparatus For Vehicle Inspection and Safety System Calibration Using Projected Images

Active · US20180100783A1 · Vehicle testing · Registering/indicating working of vehicles · Sensor observation · Spatial parameter

A vehicle service system and method to determine spatial parameters of a vehicle, employing a display system under processor control, to display or project visible indicia onto surfaces in proximity to a vehicle undergoing a safety system service or inspection identifying one or more locations, relative to the determined vehicle centerline or thrust line, at which a calibration fixture, optical target, or simulated test drive imagery is visible for observation by a sensor onboard the vehicle.

Owner:HUNTER ENG

System and method for providing secure communication between network nodes

Active · US20040166875A1 · Increase the difficulty · Diminishing ability of communication · Direction finders using radio waves · Network traffic/resource management · Secure communication · Computer network

Owner:QUALCOMM INC

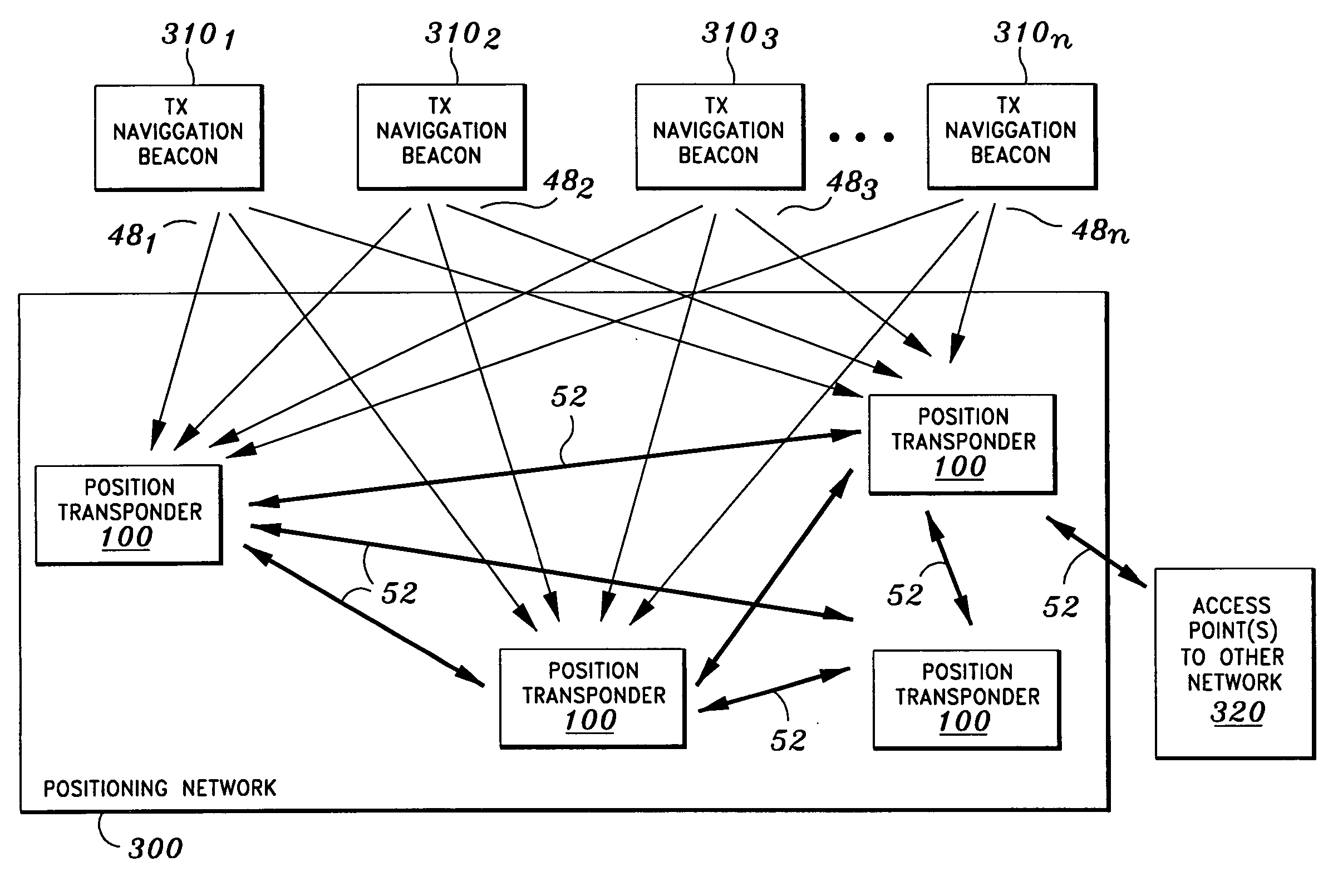

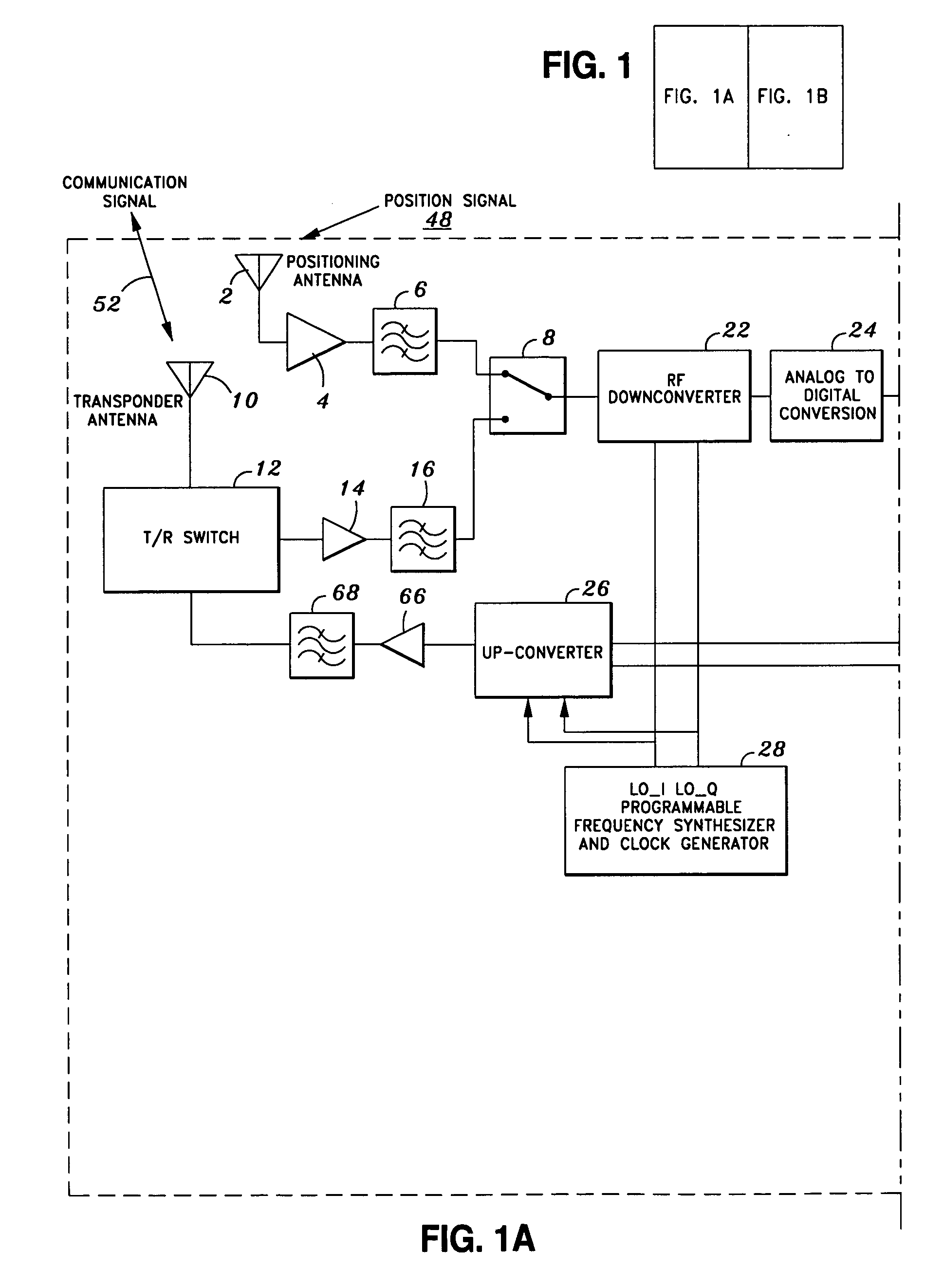

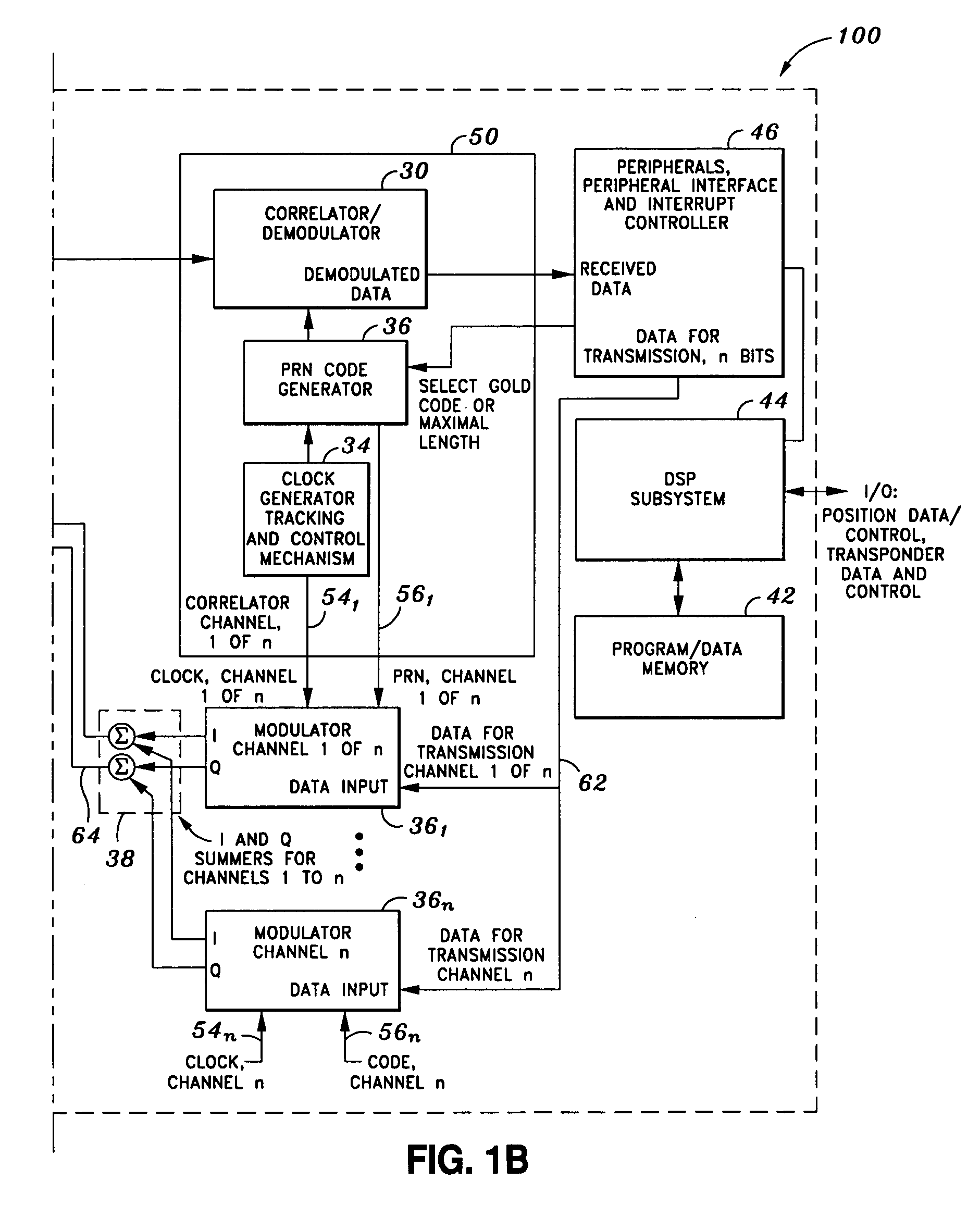

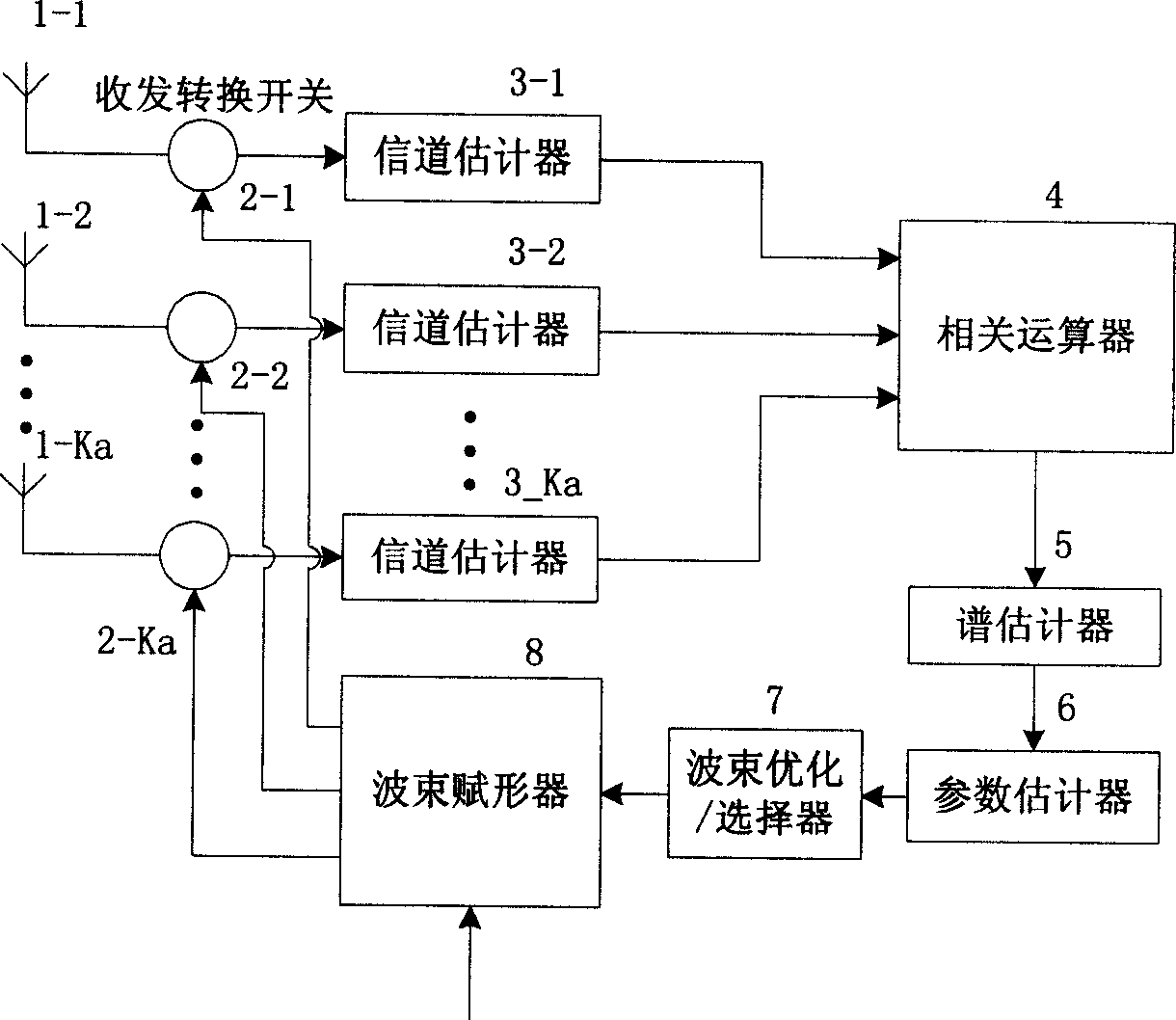

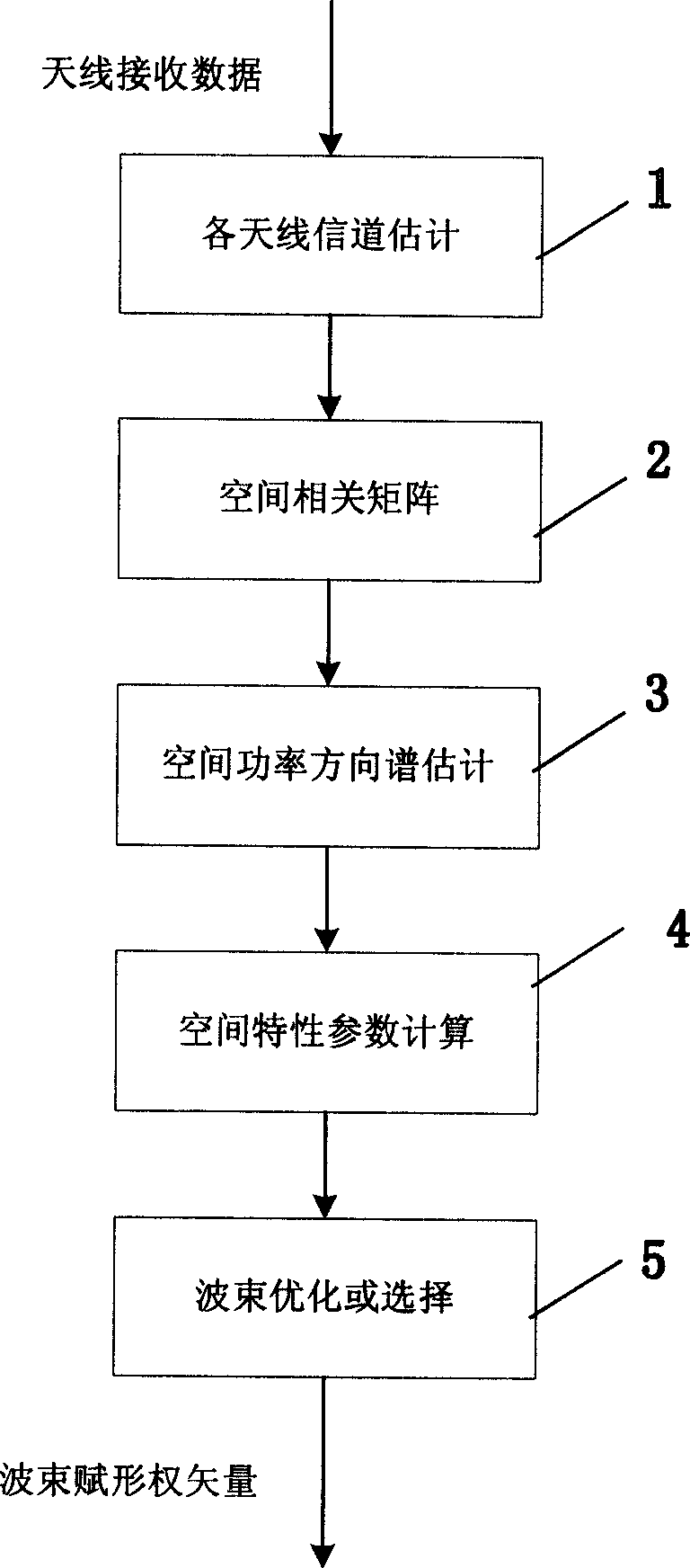

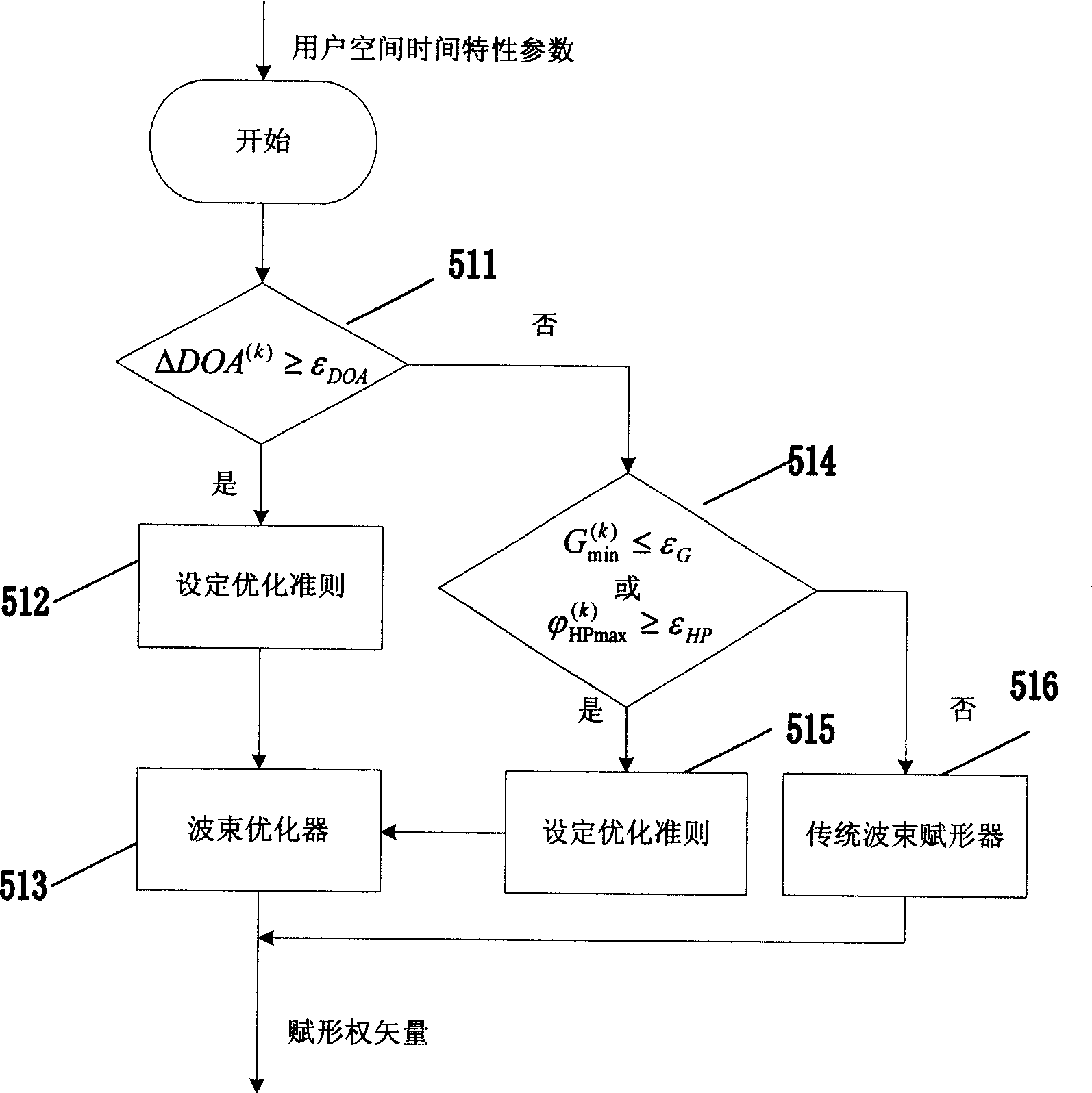

Down wave beam shaping method and device of radio channel

Active · CN1658526A · Transmission control/equalising · Radio transmission for post communication · Radio channel · Engineering

This invention discloses a downlink beam shaping method for a wireless channel and a corresponding device. The method includes the following steps: estimating the channels of the antennas; estimating the user's space parameter according to the channel estimation; estimating a beam weight vector according to the space parameter estimation; and controlling the beam shaping according to the weight vector estimation. The device includes: several receiving and sending channel estimation devices that output the channel estimations; correlation devices that correlate the several receiving and sending channel estimations; a channel parameter estimation device that obtains the channel parameter estimation from the correlation results; and a beam shaping device that shapes the beam according to the channel parameter estimation.

Owner:DATANG MOBILE COMM EQUIP CO LTD

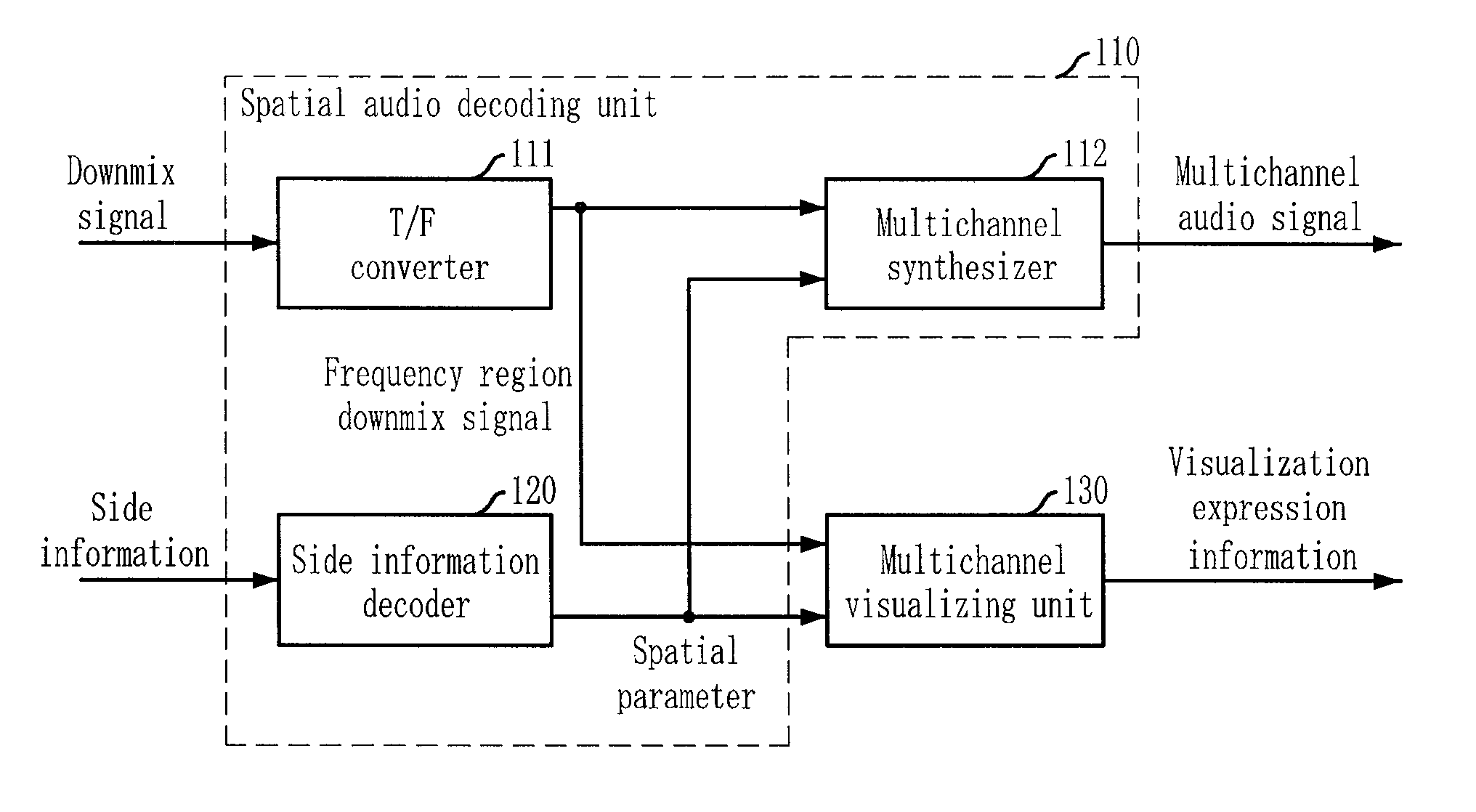

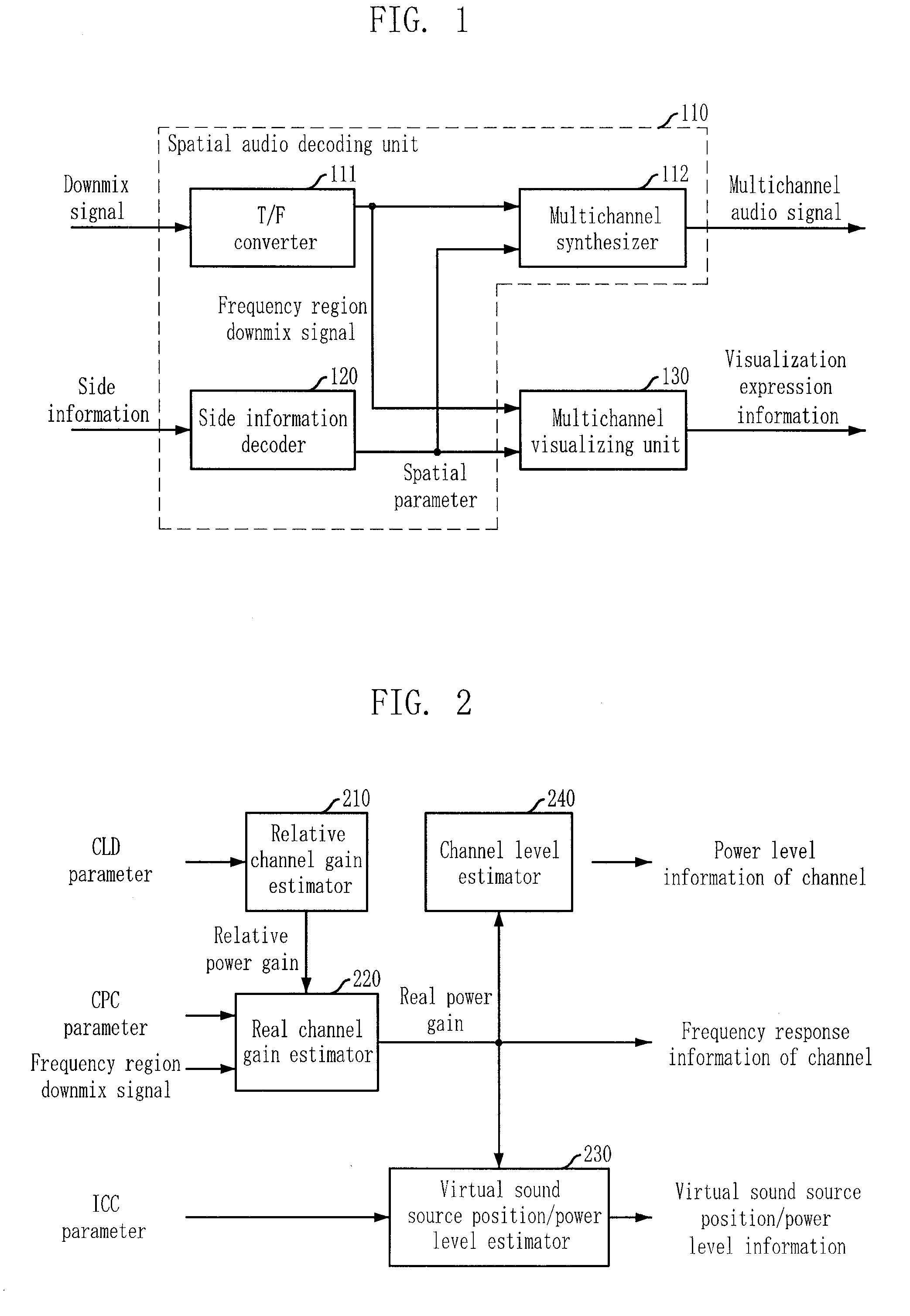

Apparatus and method for visualization of multichannel audio signals

Provided are an apparatus and method for visualizing multichannel audio signals. The apparatus includes a spatial audio decoding unit for receiving a downmix signal of a time domain, converting the downmix signal into a signal of a frequency domain to output a frequency domain downmix signal, and synthesizing a multichannel audio signal based on the spatial parameter and the downmix signal; and a multichannel visualizing unit for creating visualization information of the multichannel audio signal based on the frequency domain downmix signal and the spatial parameter.

Owner:ELECTRONICS & TELECOMM RES INST

Power control method, device and system

The invention provides a power control method, device and system. The method is used for a first communication node and comprises the steps of: determining Y pieces of spatial parameter information associated with uplink transmission; and determining the sending power of X repeated transmissions of the uplink transmission according to the power control parameters associated with the Y pieces of spatial parameter information, wherein X and Y are integers greater than or equal to 1.

Owner:ZTE CORP

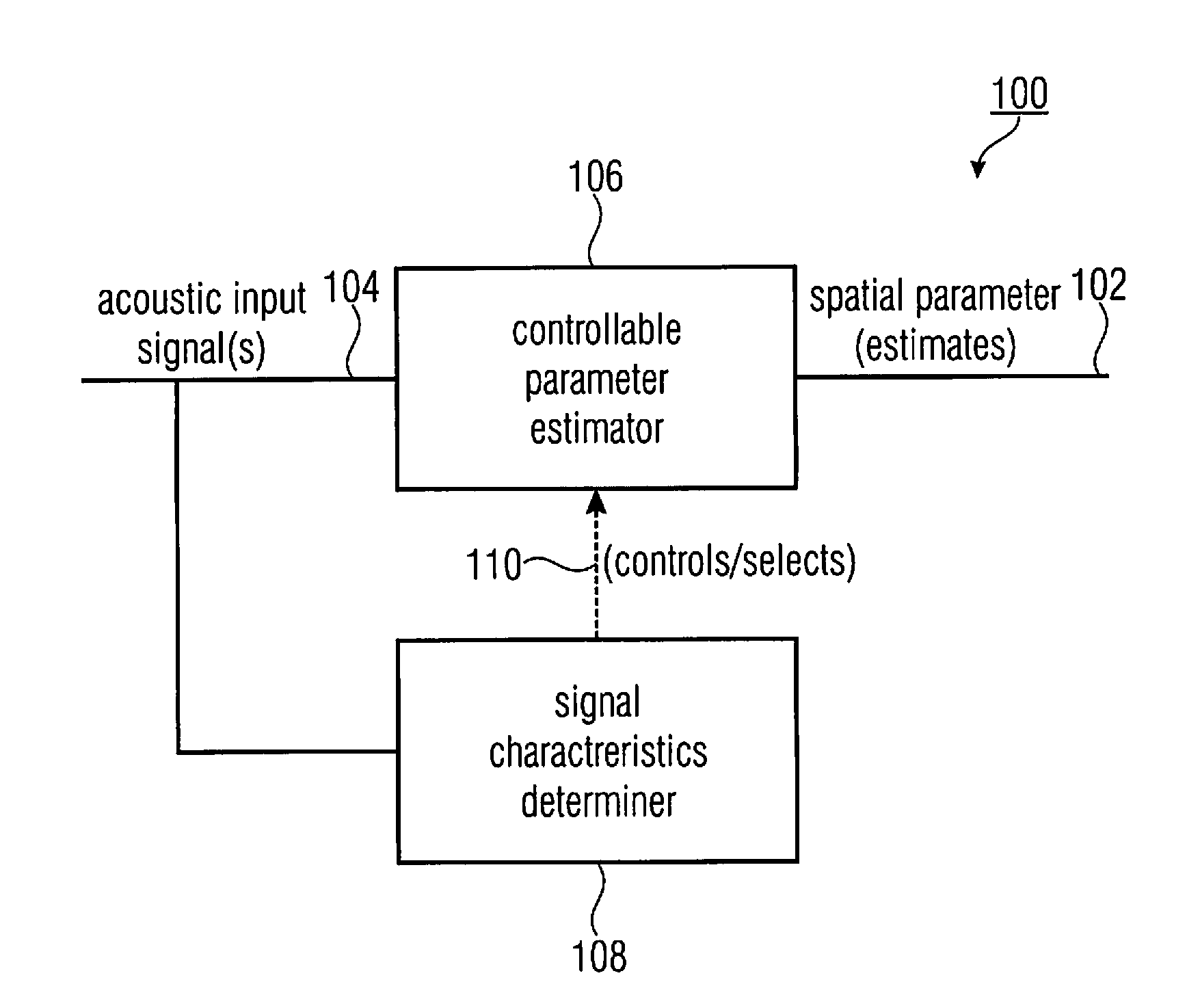

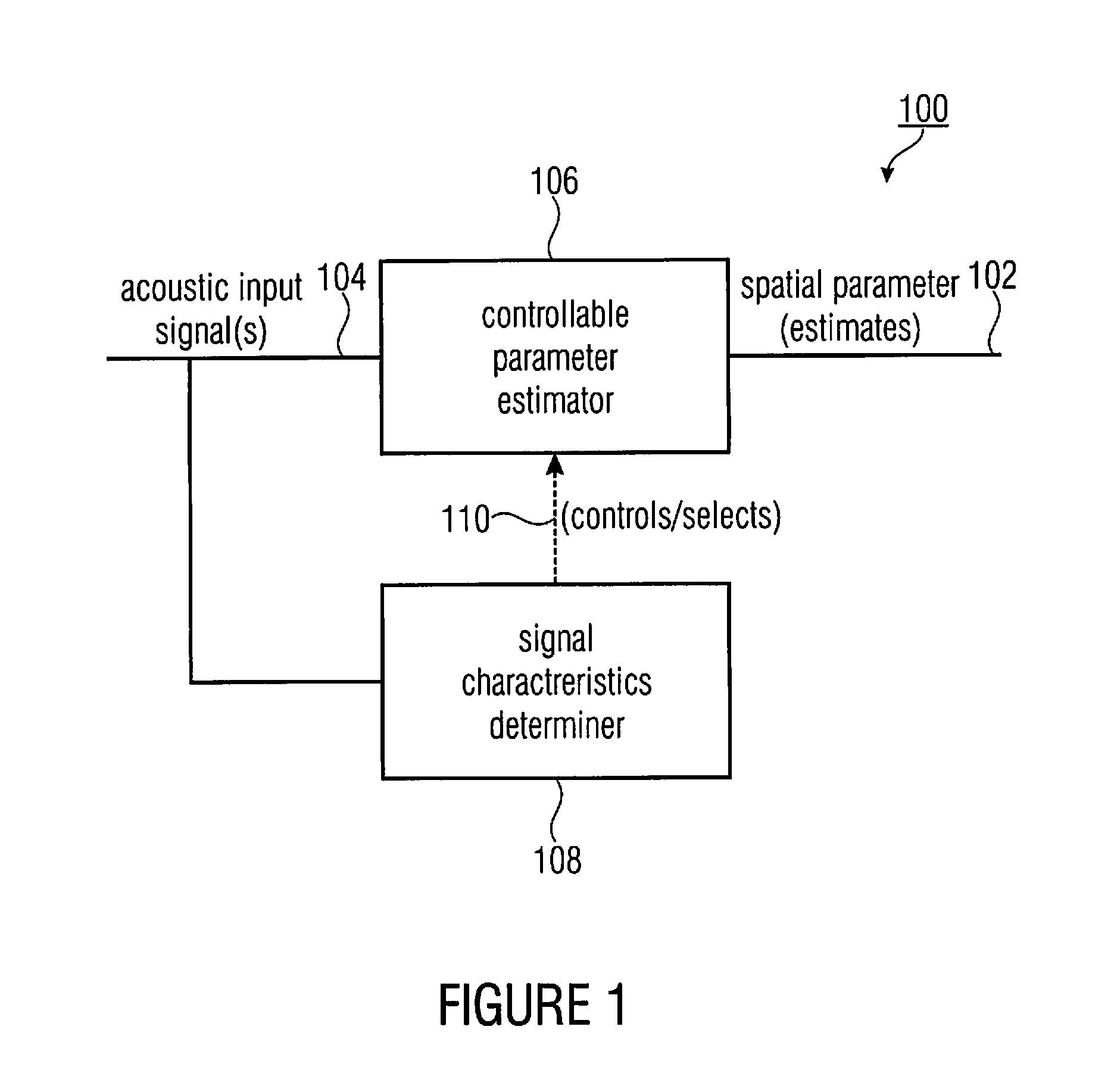

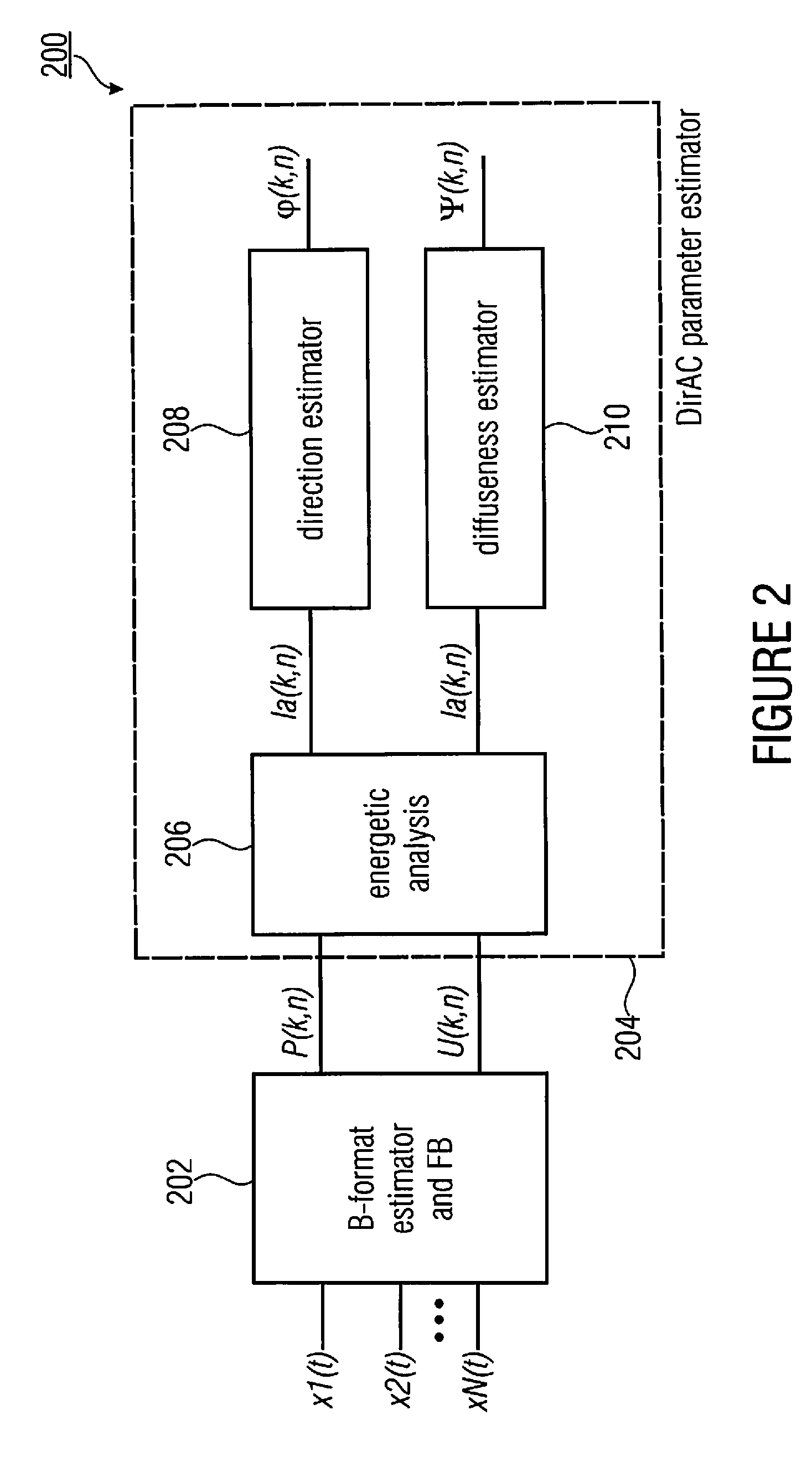

Spatial audio processor and a method for providing spatial parameters based on an acoustic input signal

Active · US20130022206A1 · Keep for a long time · Reduces model mismatches caused by a temporal variance · Speech analysis · Stereophonic systems · Computer science · Audio frequency

A spatial audio processor for providing spatial parameters based on an acoustic input signal has a signal characteristics determiner and a controllable parameter estimator. The signal characteristics determiner is configured to determine a signal characteristic of the acoustic input signal. The controllable parameter estimator for calculating the spatial parameters for the acoustic input signal in accordance with a variable spatial parameter calculation rule is configured to modify the variable spatial parameter calculation rule in accordance with the determined signal characteristic.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

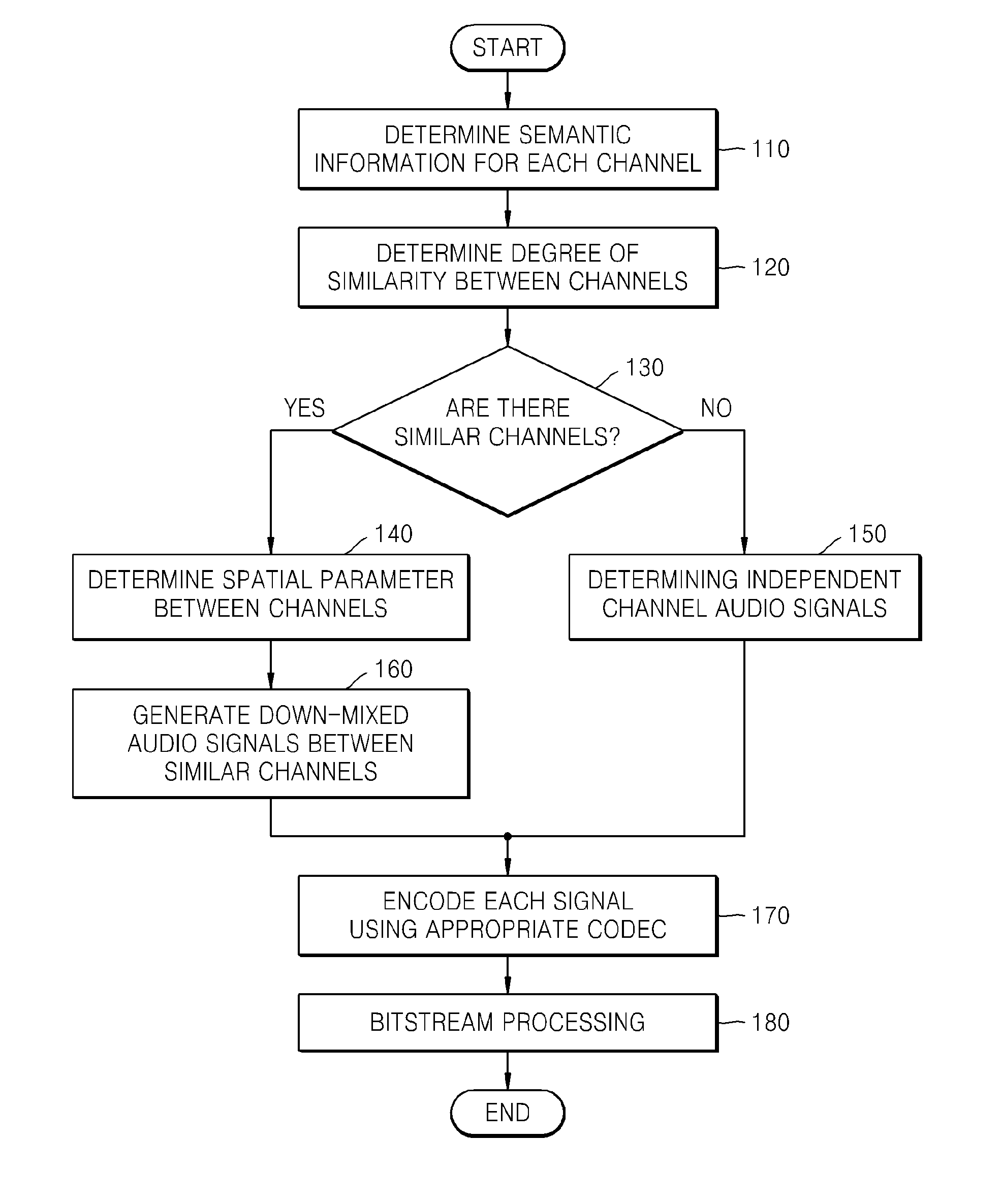

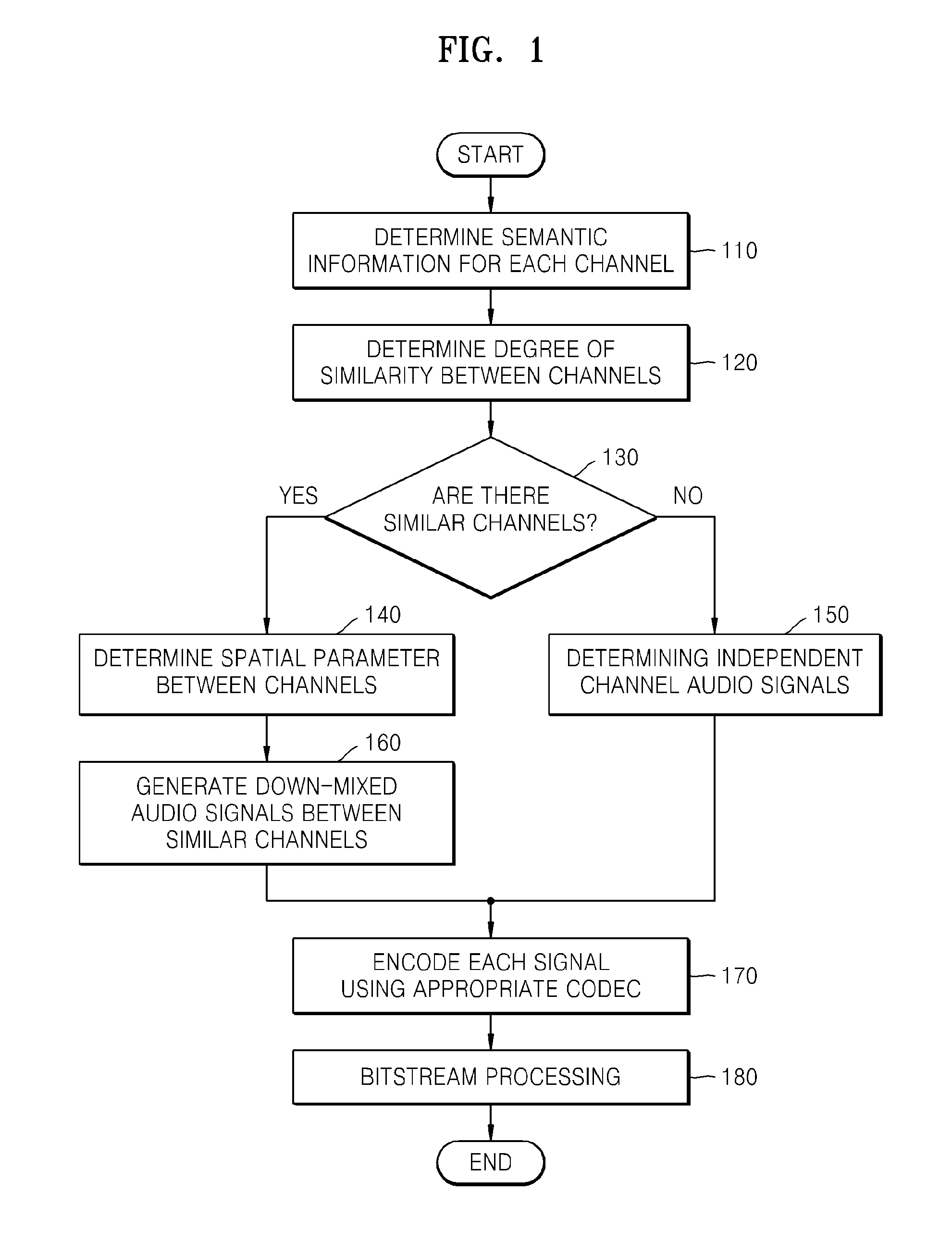

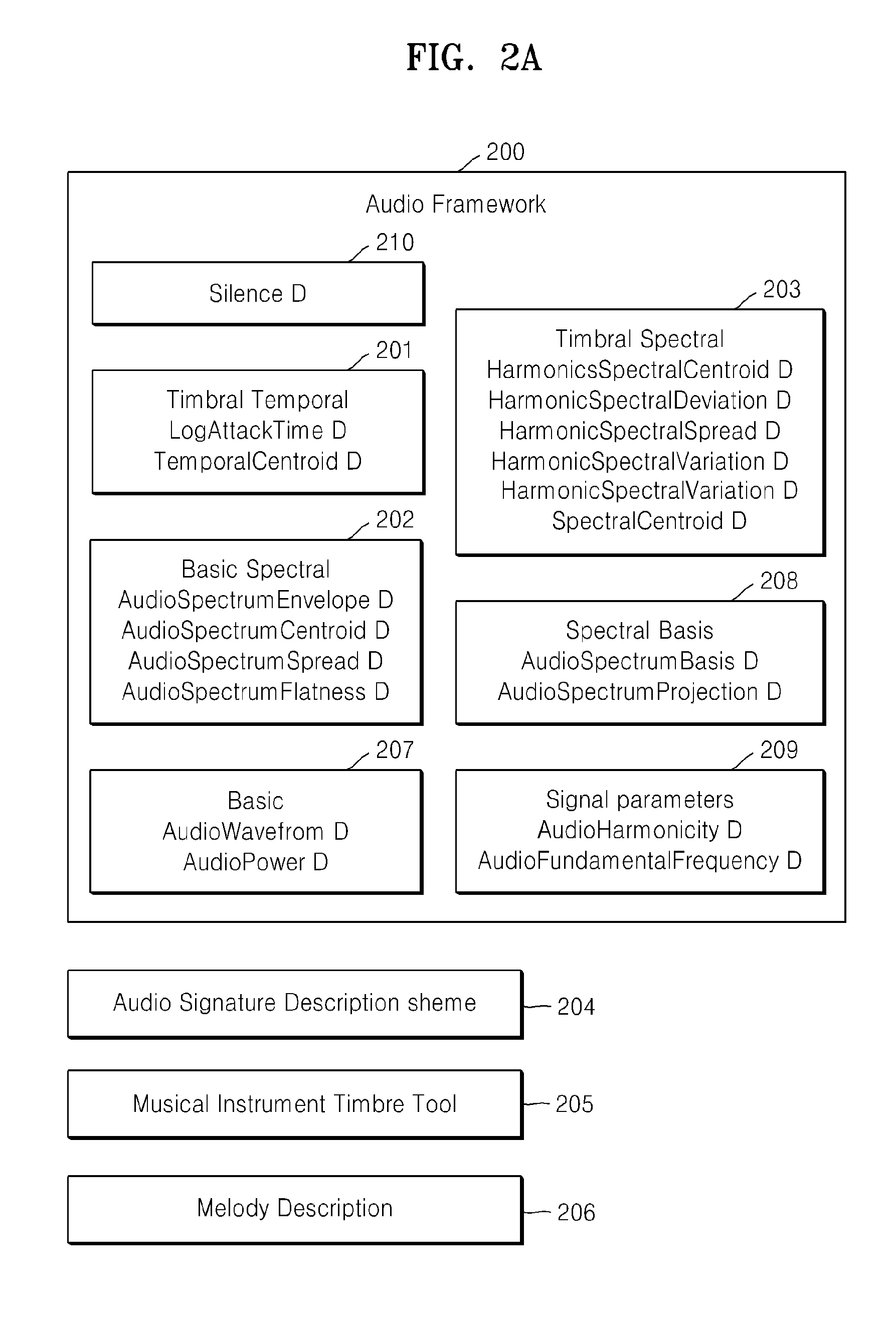

Method and apparatus for encoding/decoding multi-channel audio signal by using semantic information

Active · US20110038423A1 · Efficient compression · Efficiently restore · Color television with pulse code modulation · Pulse modulation television signal transmission · Decoding methods · Vocal tract

A multi-channel audio signal encoding and decoding method and apparatus are provided. The multi-channel audio signal encoding method includes: obtaining semantic information for each channel; determining a degree of similarity between multi-channels based on the obtained semantic information for each channel; determining similar channels among the multi-channels based on the determined degree of similarity between the multi-channels; and determining spatial parameters between the similar channels and down-mixing audio signals of the similar channels.

Owner:SAMSUNG ELECTRONICS CO LTD


© 2025 PatSnap. All rights reserved.