Image labeling method based on multimodal deep learning

A deep learning-based image annotation technology, applied in the field of image processing, that addresses the difficulty of achieving satisfactory annotation results.

Embodiment Construction

[0050] The invention will be described in further detail below in conjunction with the accompanying drawings.

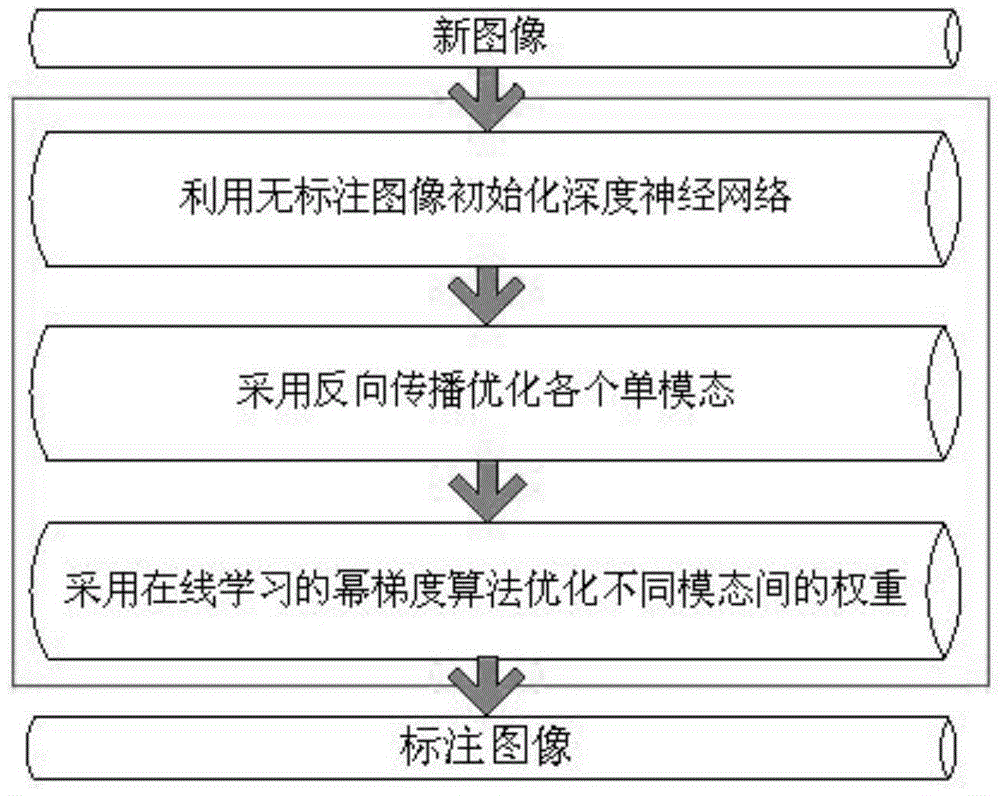

[0051] As shown in Figure 1, the present invention provides a method for image labeling based on multimodal deep learning. The method includes three stages: first, unlabeled images are used to train a deep neural network; second, backpropagation is used to optimize each single modality; finally, an online-learning power gradient algorithm is used to optimize the weights between the different modalities.
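The third stage, online optimization of the weights between modalities, can be illustrated with a minimal sketch. The text above names a power gradient algorithm but gives no update rule, so the sketch substitutes a generic multiplicative (exponentiated-gradient style) update as a stand-in; it is not the patented procedure. The function names, the learning rate, and the per-modality loss values are all hypothetical.

```python
import math


def update_modality_weights(weights, losses, learning_rate=0.5):
    """Apply one multiplicative online update to the modality weights.

    ASSUMPTION: this is a generic exponentiated-gradient style rule used
    for illustration only; the source does not specify its update rule.

    weights: current non-negative weights, one per modality, summing to 1.
    losses:  loss incurred by each modality on the current example.
    """
    # Shrink each weight exponentially in proportion to that modality's loss.
    scaled = [w * math.exp(-learning_rate * loss)
              for w, loss in zip(weights, losses)]
    # Renormalize so the weights remain a convex combination.
    total = sum(scaled)
    return [s / total for s in scaled]


def combine_scores(weights, scores):
    """Fuse per-modality scores into one score using the current weights."""
    return sum(w * s for w, s in zip(weights, scores))


# Hypothetical stream of per-modality losses, one row per incoming example.
loss_stream = [
    [0.9, 0.2, 0.5],
    [0.8, 0.1, 0.6],
    [0.7, 0.3, 0.4],
]

weights = [1 / 3, 1 / 3, 1 / 3]  # start from uniform weights
for losses in loss_stream:
    weights = update_modality_weights(weights, losses)

print(weights)
```

Under this stand-in rule, a modality that keeps incurring lower loss gradually receives a larger share of the combined score, while the weights always sum to one.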

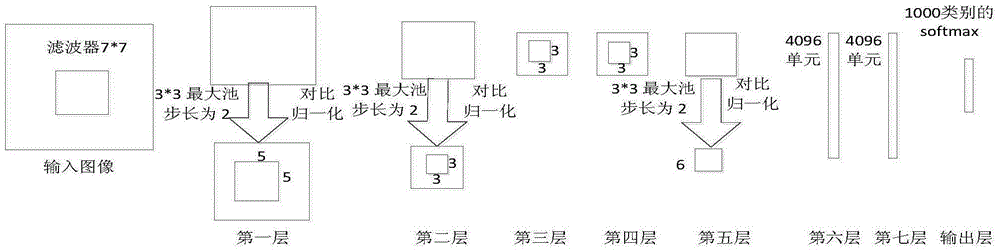

[0052] The deep neural network in the present invention is a convolutional neural network, and its model structure is shown in Figure 2. The performance of the proposed image labeling algorithm based on multimodal deep learning is evaluated through a series of experiments.

[0053] Step 1: Introduce the datasets used to evaluate the performance of the algorithm.

[0054] The experiment adopts three public image datasets, includi...
