Audio segmentation based on spatial metadata

A metadata and audio technology, applied in the field of adaptive audio signal processing, that addresses the problem of spatial cues going unused.


Embodiment 1

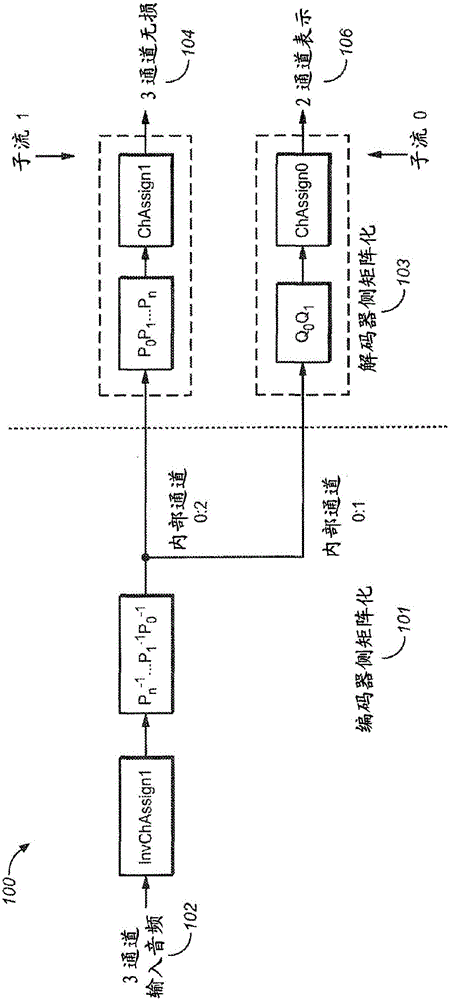

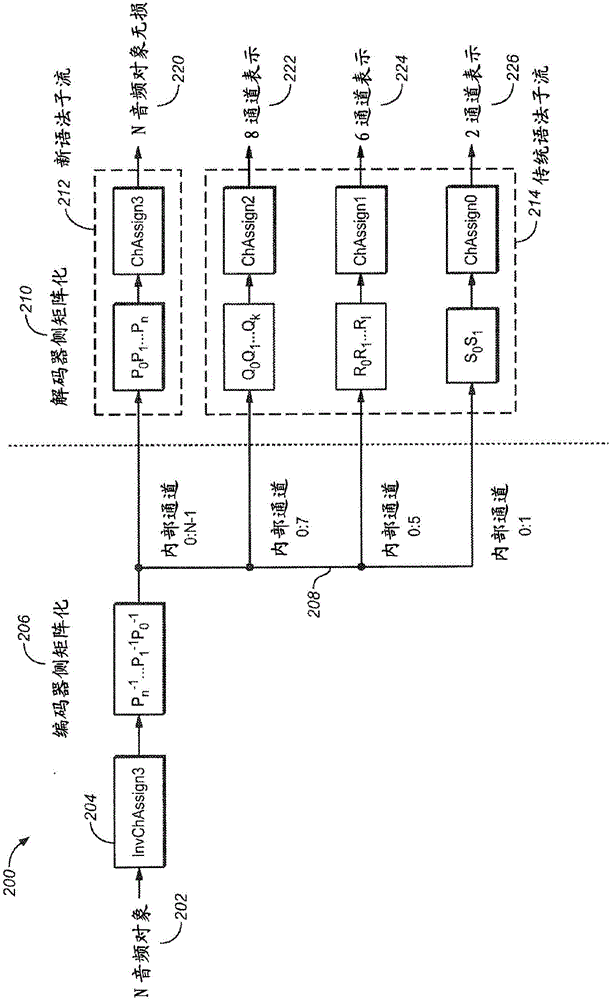

[0224] Embodiments are generally directed to systems and methods for segmenting audio into sub-segments over which a non-interpolable output matrix can be held constant. Within each sub-segment, a continuously varying specification is still achieved by interpolating the input primitive matrices, and the trajectory can be corrected using triangular matrix updates. Segments are designed so that the matrices specified at the boundaries of these sub-segments can be decomposed into primitive matrices in two different ways: one suited to interpolating all the way up to the boundary, and the other suited to interpolating starting from the boundary. The process also marks segments that must fall back to no interpolation.
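The idea of holding the output matrix constant while interpolating the input primitive matrices can be sketched as follows. This is an illustrative sketch, not the patented method. It assumes the convention, common in lossless matrixing schemes, that a primitive matrix equals the identity except for the single row of the channel it modifies, with a unit diagonal entry. The names `primitive_matrix`, `interpolate_sequence`, `product`, and `segment_matrices` are hypothetical. Linear interpolation is likewise an assumption, and "fallback" is modeled simply as returning `None` when the two boundary decompositions do not modify the same channels in the same order.

```python
import numpy as np

def primitive_matrix(n, channel, row):
    """Hypothetical helper: identity except for `channel`'s row (unit diagonal assumed)."""
    p = np.eye(n)
    p[channel, :] = row
    p[channel, channel] = 1.0  # force unit diagonal so the matrix is trivially invertible
    return p

def interpolate_sequence(start, end, t):
    """Linearly interpolate two primitive-matrix sequences at fraction t in [0, 1].

    Each sequence is a list of (channel, row) pairs. Interpolation is only
    attempted when both sequences modify the same channels in the same order;
    otherwise None is returned to signal a fallback to no interpolation.
    """
    if [c for c, _ in start] != [c for c, _ in end]:
        return None
    return [(c, (1.0 - t) * np.asarray(r0, float) + t * np.asarray(r1, float))
            for (c, r0), (_, r1) in zip(start, end)]

def product(seq, n):
    """Multiply the primitive matrices so that seq[0] is applied to the signal first."""
    m = np.eye(n)
    for channel, row in seq:
        m = primitive_matrix(n, channel, row) @ m
    return m

def segment_matrices(start, end, output, steps):
    """Per-step matrices for one sub-segment.

    `output` (shape: out_channels x n) is held constant across the sub-segment,
    while the input primitive matrices are interpolated. Returns None on fallback.
    """
    n = output.shape[1]
    mats = []
    for k in range(steps):
        t = k / (steps - 1) if steps > 1 else 0.0
        seq = interpolate_sequence(start, end, t)
        if seq is None:
            return None
        mats.append(output @ product(seq, n))
    return mats

start = [(0, [1.0, 0.5, 0.0]), (1, [0.2, 1.0, 0.0])]
end = [(0, [1.0, -0.5, 0.0]), (1, [0.4, 1.0, 0.0])]
mats = segment_matrices(start, end, np.eye(3), steps=3)
```

Because only the primitive-matrix rows vary, the constant output matrix never needs to be interpolated. A mismatch in channel order between the two boundary decompositions is flagged instead of being silently blended.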

[0225] One aspect of this approach involves keeping the primitive matrix channel sequence constant. As mentioned earlier, each primitive matrix is associated with the channel it operates on, or modifies. For example, consider the sequence of primitive matrices $S_0, S_1, S_2$ (The inve...
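Associating each primitive matrix with the one channel it modifies can be illustrated by applying a sequence such as $S_0, S_1, S_2$ channel by channel and then undoing it. This is a sketch under stated assumptions, not the source's algorithm, whose details are truncated above. It assumes each primitive matrix has a unit diagonal on its modified row, so that its inverse subtracts the same weighted sum of the other channels, and it assumes the inverse sequence is applied in reverse order. The functions `apply_primitive`, `invert_primitive`, `apply_sequence`, and `invert_sequence` are hypothetical names.

```python
import numpy as np

def apply_primitive(x, channel, coeffs):
    """Apply a primitive matrix in place: only row `channel` of x changes.

    x has shape (channels, samples); coeffs[channel] is ignored because the
    diagonal entry is assumed to be 1.
    """
    c = np.asarray(coeffs, dtype=float).copy()
    c[channel] = 0.0
    x[channel] = x[channel] + c @ x
    return x

def invert_primitive(x, channel, coeffs):
    """Undo apply_primitive by subtracting the same weighted sum of the other channels."""
    c = np.asarray(coeffs, dtype=float).copy()
    c[channel] = 0.0
    x[channel] = x[channel] - c @ x
    return x

def apply_sequence(x, seq):
    """Apply S_0, S_1, ... in order; seq is a list of (channel, coeffs) pairs."""
    for channel, coeffs in seq:
        apply_primitive(x, channel, coeffs)
    return x

def invert_sequence(x, seq):
    """Invert the sequence by undoing each primitive matrix in reverse order."""
    for channel, coeffs in reversed(seq):
        invert_primitive(x, channel, coeffs)
    return x

# Hypothetical sequence S_0, S_1, S_2 modifying channels 0, 1, 2 in that order.
seq = [(0, [1.0, 0.5, -0.25]), (1, [0.3, 1.0, 0.1]), (2, [-0.2, 0.4, 1.0])]
x = np.random.default_rng(0).standard_normal((3, 8))
y = apply_sequence(x.copy(), seq)
z = invert_sequence(y.copy(), seq)
```

Each step touches exactly one channel, so the channel sequence `[c for c, _ in seq]` fully describes which channel each primitive matrix owns. Keeping that sequence fixed is what allows corresponding coefficients at two boundaries to be paired up.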
