Method of motion vector derivation for video coding

A motion vector derivation technique for video coding that addresses problems such as the difficulty of representing actual motion and the inability of conventional motion descriptions to capture complex motion.


[0019] The following description is of the best contemplated mode of carrying out the invention. This description is to illustrate the general principles of the invention and should not be construed as limiting the invention. The scope of the invention can best be determined by reference to the appended claims.

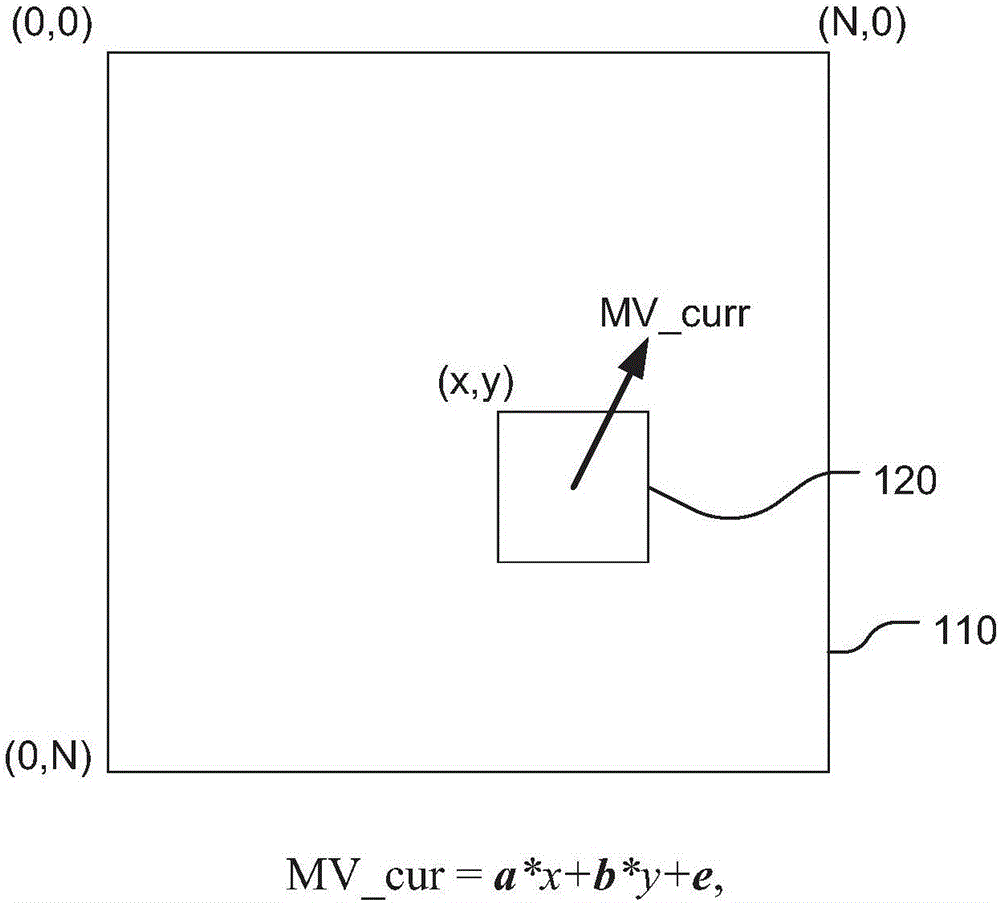

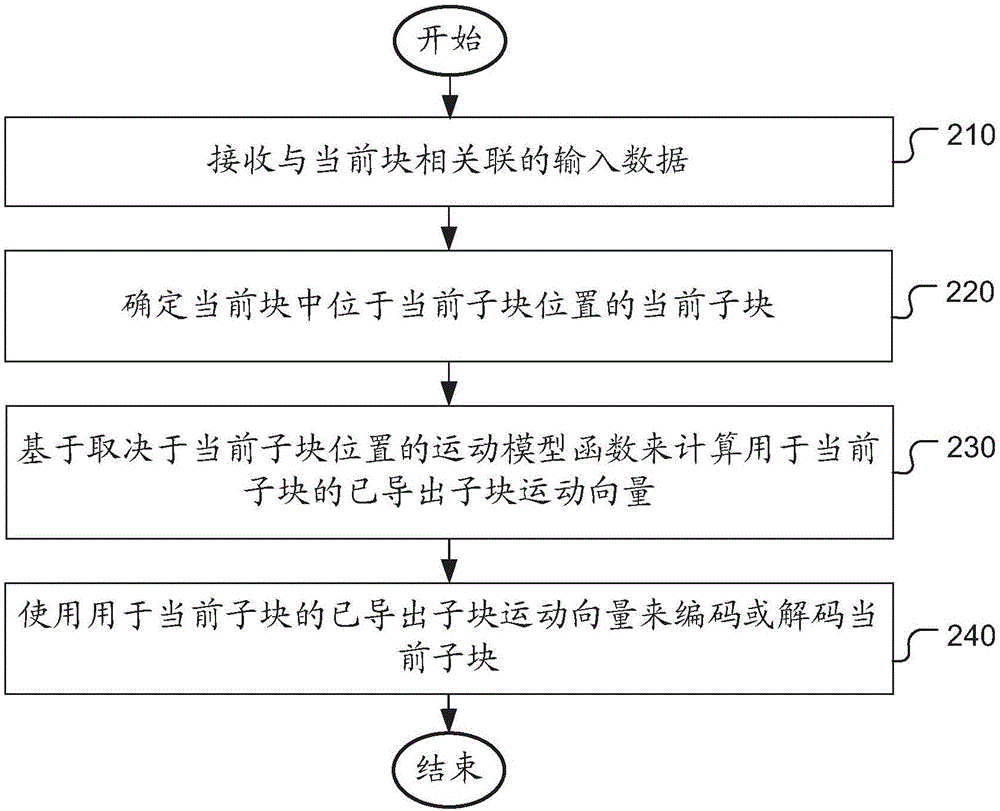

[0020] To provide an improved motion description for a block, according to an embodiment of the present invention, a current block (e.g., a PU in HEVC) is partitioned into multiple sub-blocks (e.g., sub-PUs). An MV (also referred to as the "derived sub-block MV") is derived from the motion model for each sub-block. For example, each PU can be partitioned into multiple sub-PUs, and the MV for each sub-PU is derived from the motion model function F(x, y), where (x, y) is the position of the sub-PU and F is a function representing the motion model.
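The partition-then-evaluate step described above can be illustrated with a minimal Python sketch. It assumes that the sub-block position (x, y) is the sub-block centre relative to the block's top-left corner (the text only says "position"), that the block dimensions are exact multiples of the sub-block size, and that motion vectors are plain floating-point pairs with no sub-pel rounding. The names `derive_sub_block_mvs` and `make_affine_model` are hypothetical, and the six-parameter affine function is a commonly used generic form chosen only for illustration; it is not necessarily the affine equation of this embodiment.

```python
from typing import Callable, List, Tuple

MV = Tuple[float, float]                      # (mv_x, mv_y)
MotionModel = Callable[[float, float], MV]    # F(x, y) -> MV


def make_affine_model(a: float, b: float, c: float,
                      d: float, e: float, f: float) -> MotionModel:
    """Hypothetical generic six-parameter affine model:
    mv_x = a*x + b*y + e,  mv_y = c*x + d*y + f."""
    def model(x: float, y: float) -> MV:
        return (a * x + b * y + e, c * x + d * y + f)
    return model


def derive_sub_block_mvs(block_w: int, block_h: int,
                         sub_w: int, sub_h: int,
                         model: MotionModel) -> List[List[MV]]:
    """Split a block (e.g. a PU) into sub_w x sub_h sub-blocks (e.g. sub-PUs)
    and evaluate the motion model F at each sub-block position.

    Assumption: the position is the sub-block centre, measured from the
    block's top-left corner. Returns one row of MVs per sub-block row.
    """
    if block_w % sub_w or block_h % sub_h:
        raise ValueError("block size must be a multiple of the sub-block size")
    mvs: List[List[MV]] = []
    for y0 in range(0, block_h, sub_h):
        row: List[MV] = []
        for x0 in range(0, block_w, sub_w):
            cx = x0 + sub_w / 2.0   # sub-block centre, x
            cy = y0 + sub_h / 2.0   # sub-block centre, y
            row.append(model(cx, cy))
        mvs.append(row)
    return mvs


if __name__ == "__main__":
    # 16x16 block split into four 8x8 sub-blocks; centres at (4,4), (12,4), (4,12), (12,12).
    f_model = make_affine_model(a=0.25, b=0.0, c=0.0, d=0.25, e=1.0, f=-2.0)
    print(derive_sub_block_mvs(16, 16, 8, 8, f_model))
    # [[(2.0, -1.0), (4.0, -1.0)], [(2.0, 1.0), (4.0, 1.0)]]
```

Passing the motion model in as a callable keeps the partitioning logic independent of the model, so a purely translational model (a = b = c = d = 0) gives every sub-block the same MV, while a non-zero linear part lets the MV vary across sub-blocks, which is how a sub-block scheme can follow zoom or rotation that a single block MV cannot.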

[0021] In one embodiment, the derived sub-block motion vector MV_cur for a sub-PU is derived by an affine motion model:...
