Video affine motion estimation method of adaptive factors

An adaptive-factor affine motion estimation technique, applied in the fields of digital video signal modification, electrical components, and image communication, which addresses problems such as the inability to achieve motion estimation/compensation and limited practicality.


Embodiment Construction

[0056] The adaptive-factor video affine motion estimation method of the present invention is carried out according to the following steps:

[0057] Step 1. If all frames of the current group of pictures (GOP) have been processed, the algorithm ends; otherwise, select an unprocessed frame in the current GOP as the current frame, and use its previous frame as the reference frame;

[0058] Step 2. If all macroblocks of the current frame have been processed, go to Step 1; otherwise, select an unprocessed macroblock of the current frame as the current macroblock. Its size in pixels is set by a preset constant, whose value is fixed in this embodiment, and its position is given by the abscissa and ordinate of the pixel in its upper-left corner;
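The control flow of Steps 1 and 2 can be sketched as two nested loops. This is only an illustrative sketch, not the patented implementation: the function name `iter_macroblocks`, the square block shape, the default block size of 16, the raster scan order, and the choice to start at the second frame of the GOP (so that a previous frame always exists) are all assumptions that the text above does not state.

```python
from typing import Iterator, Tuple


def iter_macroblocks(num_frames: int, width: int, height: int,
                     block: int = 16) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (cur_idx, ref_idx, x, y) for each macroblock of each frame.

    cur_idx / ref_idx index frames inside one GOP; (x, y) is the
    upper-left pixel of the macroblock. All names are hypothetical.
    """
    # Step 1: visit every not-yet-processed frame of the GOP.
    # Assumption: frame 0 is skipped because it has no previous frame here.
    for cur_idx in range(1, num_frames):
        ref_idx = cur_idx - 1  # the previous frame is the reference frame
        # Step 2: visit every macroblock of the current frame.
        # Assumption: raster order, only blocks that fit fully in the frame.
        for y in range(0, height - block + 1, block):
            for x in range(0, width - block + 1, block):
                yield cur_idx, ref_idx, x, y
        # Inner loops exhausted: "go to Step 1" and pick the next frame.
    # Outer loop exhausted: all frames processed, the algorithm ends.


if __name__ == "__main__":
    for cur_idx, ref_idx, x, y in iter_macroblocks(3, 32, 16):
        print(cur_idx, ref_idx, x, y)
```

Writing the traversal as a generator keeps the frame and macroblock bookkeeping separate from the per-block work of the later steps.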

[0059] Step 3. According to the definition in formula (1), use the diamond search method within a search window of the given size in pixels to calculate the translational motion vector of the current macroblock...

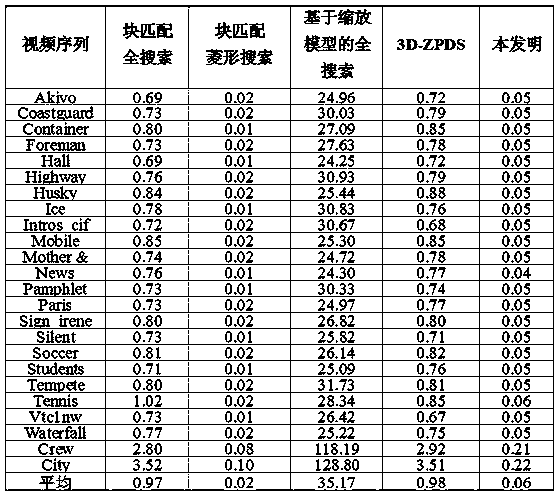
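For orientation, a translational block search of the kind named in Step 3 might look like the sketch below. Several things here are assumptions rather than content of the patent: formula (1) is not reproduced in this text, so the sum of absolute differences (SAD) stands in as the matching cost; the search follows the common two-stage diamond pattern (a large diamond repeated until its centre is best, then one small diamond); and the names `sad`, `diamond_search`, and `search_range` are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]  # luma samples, indexed as frame[row][col]

# Large and small diamond patterns as (dx, dy) offsets; the centre comes
# first so that ties are resolved in favour of staying put.
LDSP = [(0, 0), (0, -2), (1, -1), (2, 0), (1, 1),
        (0, 2), (-1, 1), (-2, 0), (-1, -1)]
SDSP = [(0, 0), (0, -1), (1, 0), (0, 1), (-1, 0)]


def sad(cur: Frame, ref: Frame, x: int, y: int,
        dx: int, dy: int, n: int) -> int:
    """SAD between the n x n block at (x, y) in cur and the block
    displaced by (dx, dy) in ref. Assumed stand-in for formula (1)."""
    total = 0
    for j in range(n):
        for i in range(n):
            total += abs(cur[y + j][x + i] - ref[y + dy + j][x + dx + i])
    return total


def diamond_search(cur: Frame, ref: Frame, x: int, y: int,
                   n: int, search_range: int) -> Tuple[int, int]:
    """Return the (dx, dy) displacement found by the diamond search."""
    h, w = len(ref), len(ref[0])

    def valid(dx: int, dy: int) -> bool:
        # Stay inside the search window and inside the reference frame.
        return (abs(dx) <= search_range and abs(dy) <= search_range
                and 0 <= x + dx and x + dx + n <= w
                and 0 <= y + dy and y + dy + n <= h)

    def best_of(center: Tuple[int, int], pattern) -> Tuple[int, int]:
        cx, cy = center
        cands = [(cx + px, cy + py) for px, py in pattern
                 if valid(cx + px, cy + py)]
        return min(cands, key=lambda v: sad(cur, ref, x, y, v[0], v[1], n))

    center = (0, 0)
    while True:  # large diamond: move until the centre is the best point
        best = best_of(center, LDSP)
        if best == center:
            break
        center = best
    return best_of(center, SDSP)  # small diamond: final refinement
```

Because the search follows decreasing cost from the zero vector, it can settle in a local minimum of the cost surface; it trades exhaustiveness for far fewer cost evaluations than a full search of the window.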

