Text emotion classification method based on a joint deep learning model

An emotion classification technology based on deep learning, applicable to text database clustering/classification, unstructured text data retrieval, character and pattern recognition, and related fields. It addresses problems such as data sparseness and the curse of dimensionality, and improves classification performance.



Specific Embodiment 1

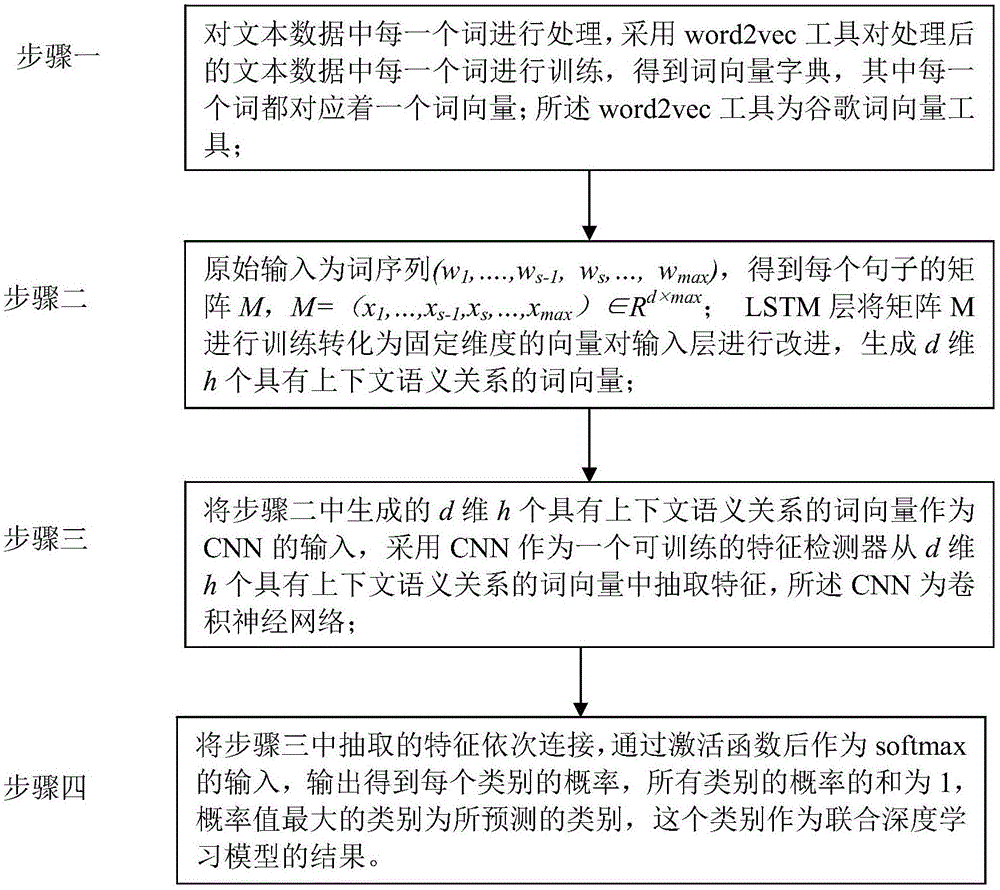

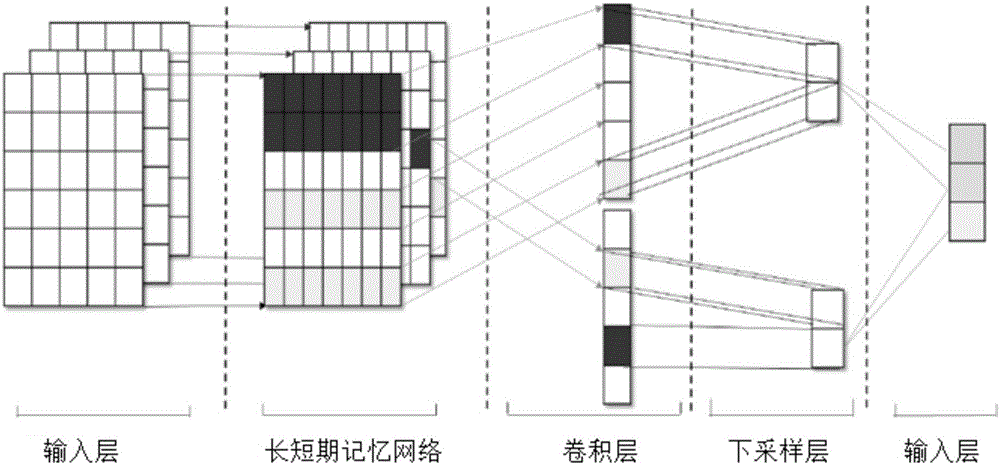

[0019] Specific Embodiment 1: As shown in Figure 1 and Figure 2, a text sentiment classification method based on a joint deep learning model includes the following steps:

[0020] Step 1: Preprocess each word in the text data, then train on the processed words with the word2vec tool to obtain a word vector dictionary in which each word corresponds to one word vector. word2vec is Google's word vector tool.

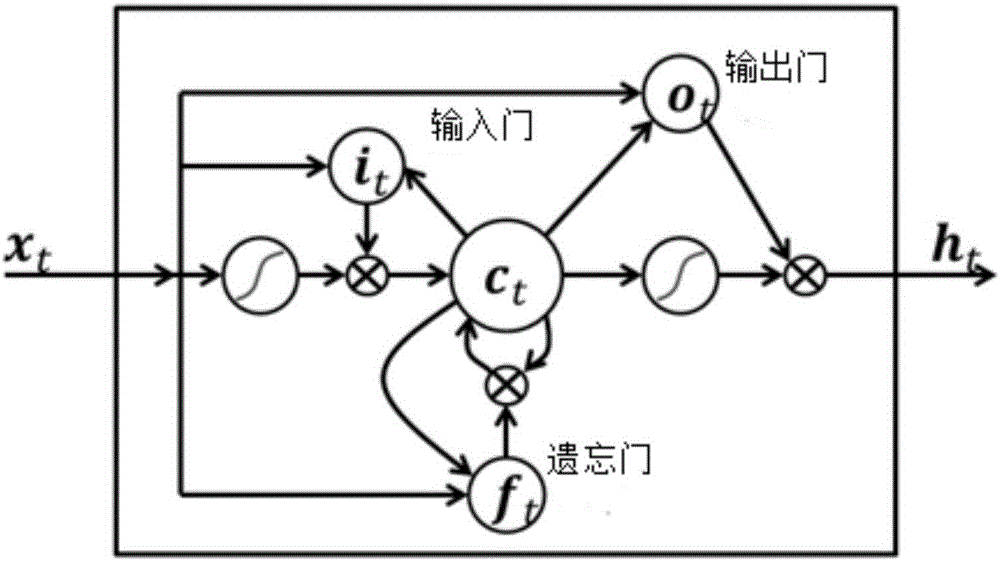

[0021] Step 2: The original input is a word sequence $(w_1, \ldots, w_{s-1}, w_s, \ldots, w_{max})$, from which the matrix $M$ of each sentence is obtained: $M = (x_1, \ldots, x_{s-1}, x_s, \ldots, x_{max}) \in \mathbb{R}^{d \times max}$. The LSTM layer improves on the embedding input layer: it trains the fixed-length matrix $M$ into fixed-dimensional vectors and generates $h$ word vectors of dimension $d$ that carry contextual semantic relations (these word vectors change during training). Here $w_1, \ldots, w_{s-1}, w_s, \ldots, w_{max}$ are the word labels corresponding to each ...
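The construction of the sentence matrix can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes that each column of the matrix is the word vector looked up for the corresponding word label, that short sentences are padded with zero vectors, and that long sentences are truncated. The names `sentence_matrix`, `PAD_ID`, and `max_len` are hypothetical. The LSTM layer itself is not shown, because its details are not given in the text above.

```python
from typing import Dict, List

PAD_ID = 0  # hypothetical id reserved for padding (assumption, not from the source)


def sentence_matrix(
    word_ids: List[int],
    vectors: Dict[int, List[float]],
    d: int,
    max_len: int,
) -> List[List[float]]:
    """Build a d x max_len matrix whose column s is the vector of word s."""
    # Pad with PAD_ID, then cut to exactly max_len entries.
    padded = (word_ids + [PAD_ID] * max_len)[:max_len]
    zero = [0.0] * d
    # Words without a vector (including padding) map to the zero vector.
    columns = [vectors.get(w, zero) for w in padded]
    # Transpose the list of columns into d rows of length max_len.
    return [[col[k] for col in columns] for k in range(d)]


# Toy word vector dictionary with d = 2 (values are made up for illustration).
toy_vectors = {1: [1.0, 2.0], 2: [3.0, 4.0]}
M = sentence_matrix([1, 2], toy_vectors, d=2, max_len=3)
```

Padding and truncation are one common way to obtain a fixed-length input; the source only states that the matrix has a fixed length.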

Specific Embodiment 2

[0027] Specific Embodiment 2: This embodiment differs from Specific Embodiment 1 in that the processing of each word in the text data in Step 1 is performed as follows:

[0028] Use a word segmentation program (Jieba, literally "stutter" word segmentation) to segment the sentences in the text data, remove special characters (such as phonetic symbols and special symbols), and retain Chinese characters, English, punctuation, and emoticons.
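The character filtering part of this step can be sketched with the standard library alone. The Unicode ranges below are assumptions chosen for illustration (the source does not list exact character sets), digits are kept as an additional assumption, and `clean_text` is a hypothetical helper name. Word segmentation would be a separate step, for example with the `jieba` package.

```python
import re
import string

# Characters to keep. All ranges are illustrative assumptions.
_ALLOWED = (
    "\u4e00-\u9fff"                  # common Chinese characters
    "A-Za-z0-9"                      # English letters (digits kept by assumption)
    "\\s"                            # whitespace
    + re.escape(string.punctuation)  # ASCII punctuation
    + "\u2010-\u2027"                # dashes, curly quotes, ellipsis
    + "\u3000-\u303f"                # CJK punctuation
    + "\uff00-\uffef"                # full-width forms
    + "\U0001F300-\U0001FAFF"        # emoji (emoticons)
)
_DISALLOWED = re.compile(f"[^{_ALLOWED}]")


def clean_text(text: str) -> str:
    """Drop every character outside the allowed sets, e.g. phonetic symbols."""
    return _DISALLOWED.sub("", text)


cleaned = clean_text("今天很开心😊!※ Good")
```

In this sketch the reference mark `※` is dropped as a special symbol, while the emoji and the exclamation mark are retained.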

[0029] Other steps and parameters are the same as those in Embodiment 1.

Specific Embodiment 3

[0030] Specific Embodiment 3: This embodiment differs from Specific Embodiment 1 or 2 in that the original input word sequence $(w_1, \ldots, w_{s-1}, w_s, \ldots, w_{max})$ is obtained as follows:

[0031] All the words in the text data form a word dictionary. Each word is given an id label, and every word in the text data is replaced with its id label, yielding the word sequence $(w_1, \ldots, w_{s-1}, w_s, \ldots, w_{max})$, which serves as the input of the joint deep learning model.
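The dictionary and id replacement described above can be sketched as follows. The function names are hypothetical, and starting the ids at 1 (leaving 0 free, for example for padding) is an assumption rather than something the source specifies.

```python
from typing import Dict, List


def build_word_dictionary(sentences: List[List[str]]) -> Dict[str, int]:
    """Assign each distinct word an id label in order of first appearance."""
    word_to_id: Dict[str, int] = {}
    for sentence in sentences:
        for word in sentence:
            if word not in word_to_id:
                # Ids start at 1; 0 is left unused (assumption).
                word_to_id[word] = len(word_to_id) + 1
    return word_to_id


def to_id_sequence(sentence: List[str], word_to_id: Dict[str, int]) -> List[int]:
    """Replace every word in a segmented sentence with its id label."""
    return [word_to_id[word] for word in sentence]


# Already segmented toy sentences (made up for illustration).
corpus = [["我", "喜欢", "电影"], ["我", "讨厌", "广告"]]
dictionary = build_word_dictionary(corpus)
ids = to_id_sequence(corpus[1], dictionary)
```

Here the second sentence shares the word "我" with the first, so it reuses id 1 and only the two new words receive fresh ids.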

[0032] Other steps and parameters are the same as those in Embodiment 1 or Embodiment 2.

