Online man-machine interaction method based on the E-SOINN network

An online human-computer interaction method based on the E-SOINN network, applied in the field of human-computer interaction. It can solve problems such as the difficulty of running large-scale neural network operations.


Embodiment Construction

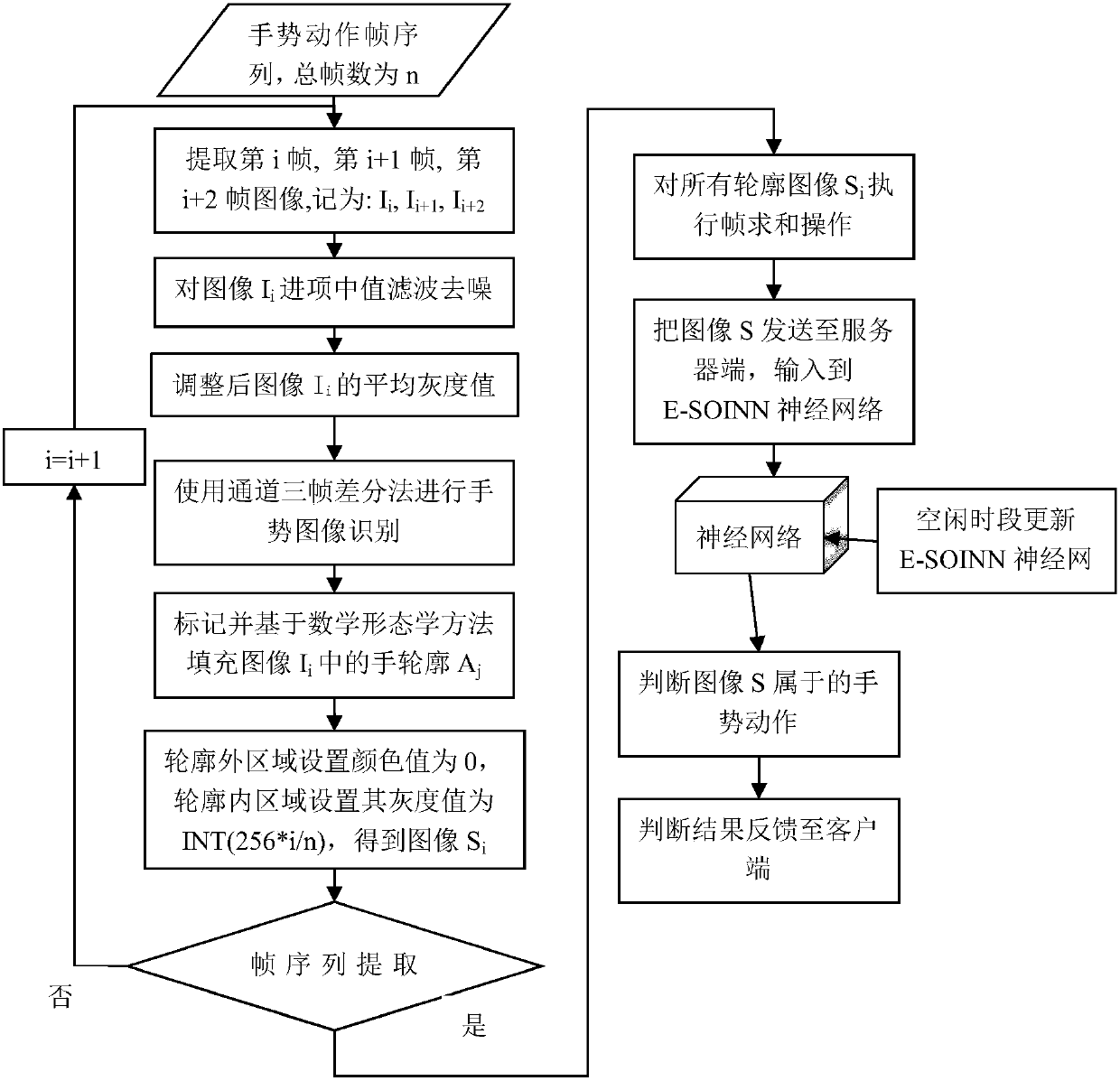

[0041] As shown in Figure 1, the online human-computer interaction method based on the E-SOINN network is divided into two main steps: training the neural network and gesture interaction recognition.

[0042] 1. The steps for training the neural network are as follows:

[0043] 1. Obtain a gesture frame sequence from the video library; let the total number of frames be n.

[0044] 2. Apply median filtering to every image in the frame sequence to remove noise and improve robustness.

[0045] 3. Extract frames i, i+1, and i+2 from the frame sequence, denoted I_i, I_{i+1}, I_{i+2} (the initial value of i is 1).

[0046] 4. Assuming the image is a three-channel RGB image, determine the average gray value of image I_i from the average values of its R, G, and B components, and then adjust the RGB value of each pixel of I_i so that, in the adjusted image, the average values of each ...
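The preprocessing in steps 2 to 4 can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not the patent's implementation: the function names (`median_filter`, `frame_triples`, `gray_world`), the 3 x 3 window, and edge replication are all hypothetical choices. The text does not say how i advances, so the sketch assumes a sliding window with step 1. Step 4 is cut off in the text, so the sketch assumes the common gray-world adjustment, in which each channel is scaled so that its mean matches the average gray value.

```python
from statistics import median


def median_filter(channel, k=3):
    """Apply a k x k median filter to one 2-D channel (a list of rows).

    Border pixels are handled by replicating the nearest edge value
    (an assumption; the source does not specify border handling).
    """
    h, w = len(channel), len(channel[0])
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                channel[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            ]
            out[y][x] = median(window)
    return out


def frame_triples(frames):
    """Yield (I_i, I_{i+1}, I_{i+2}) for every valid start index.

    Assumes i advances by one frame at a time.
    """
    for i in range(len(frames) - 2):
        yield frames[i], frames[i + 1], frames[i + 2]


def gray_world(image):
    """Scale each RGB channel so its mean equals the average gray value.

    `image` is a list of rows of (R, G, B) tuples. The average gray value
    is taken as the mean of the three channel means (gray-world assumption).
    Results are clipped to 255.
    """
    pixels = [p for row in image for p in row]
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    # Avoid dividing by zero for an all-black channel.
    gains = [gray / m if m else 1.0 for m in means]
    return [
        [tuple(min(255.0, p[c] * gains[c]) for c in range(3)) for p in row]
        for row in image
    ]
```

In practice a library routine would replace the pure-Python median filter; the loop version is shown only to make the operation explicit.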
