Video data real-time processing method and device based on adaptive tracking frame segmentation

An adaptive tracking frame and video data technology, applied in the field of image processing, addresses problems such as poor segmentation quality, failure to account for the proportion of the frame occupied by the foreground image, and low processing efficiency and segmentation accuracy.

Embodiment Construction

[0120] Exemplary embodiments of the present disclosure will be described in more detail below with reference to the accompanying drawings. Although exemplary embodiments of the present disclosure are shown in the drawings, it should be understood that the present disclosure may be embodied in various forms and should not be limited by the embodiments set forth herein. Rather, these embodiments are provided for a more thorough understanding of the present disclosure and to fully convey its scope to those skilled in the art.

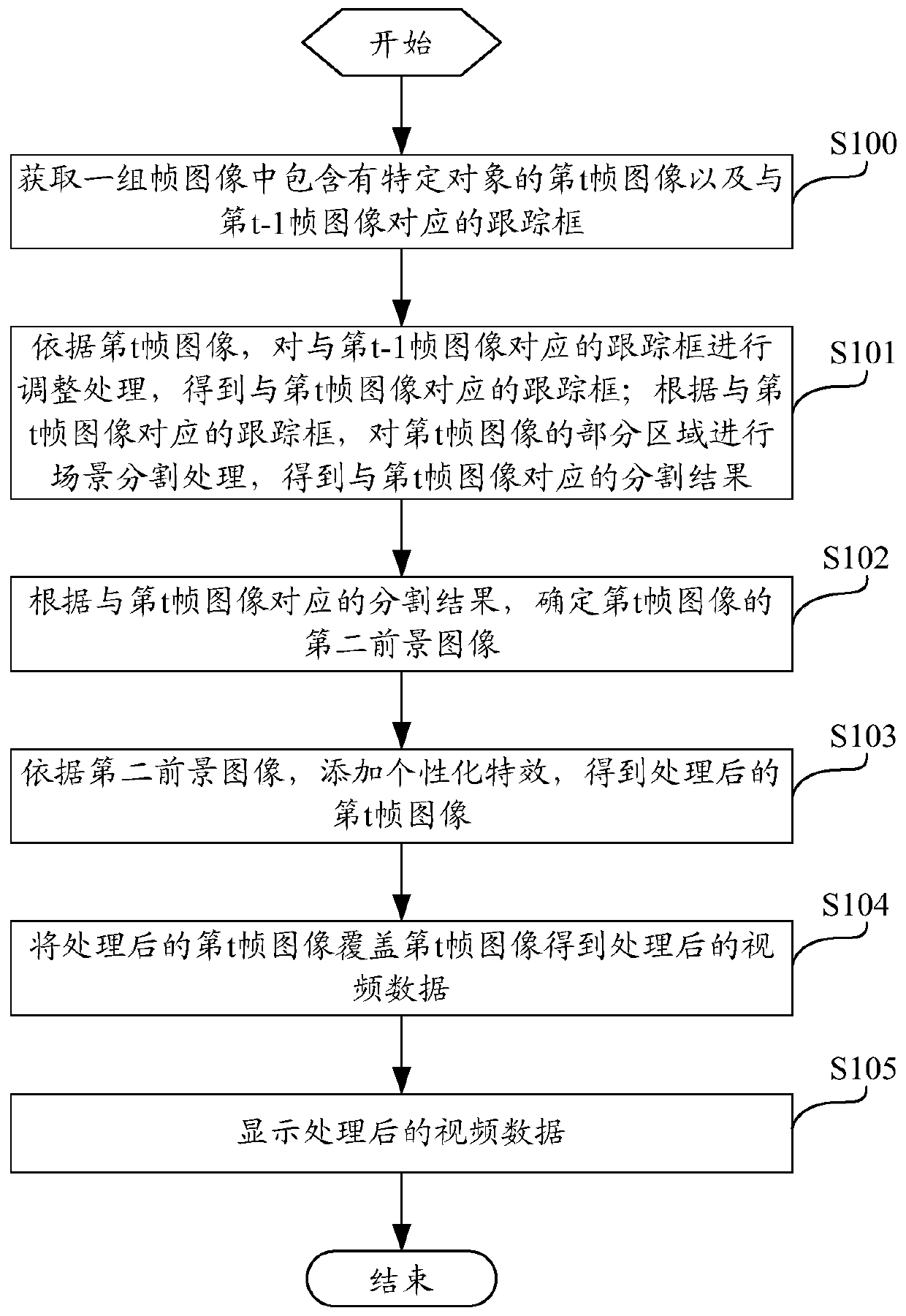

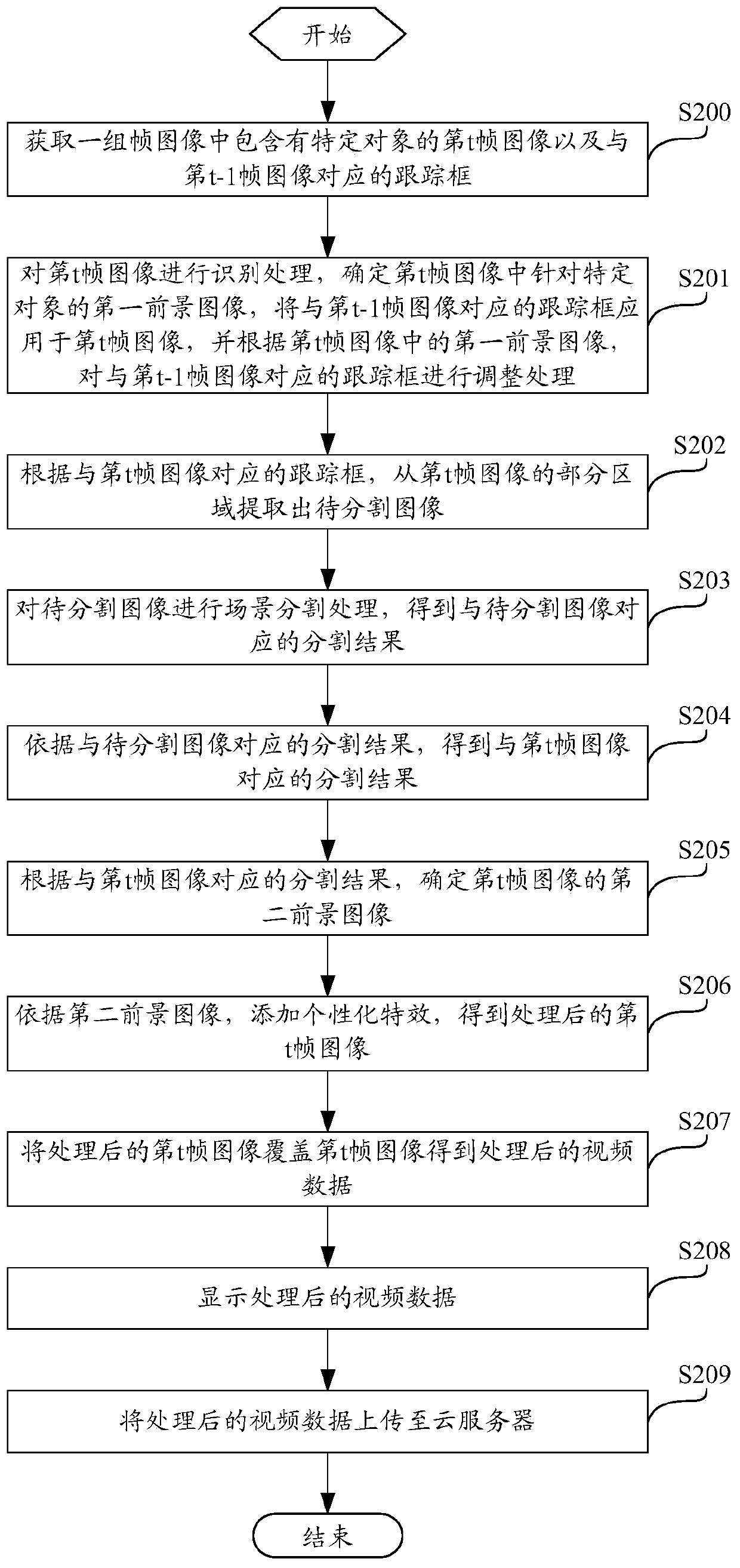

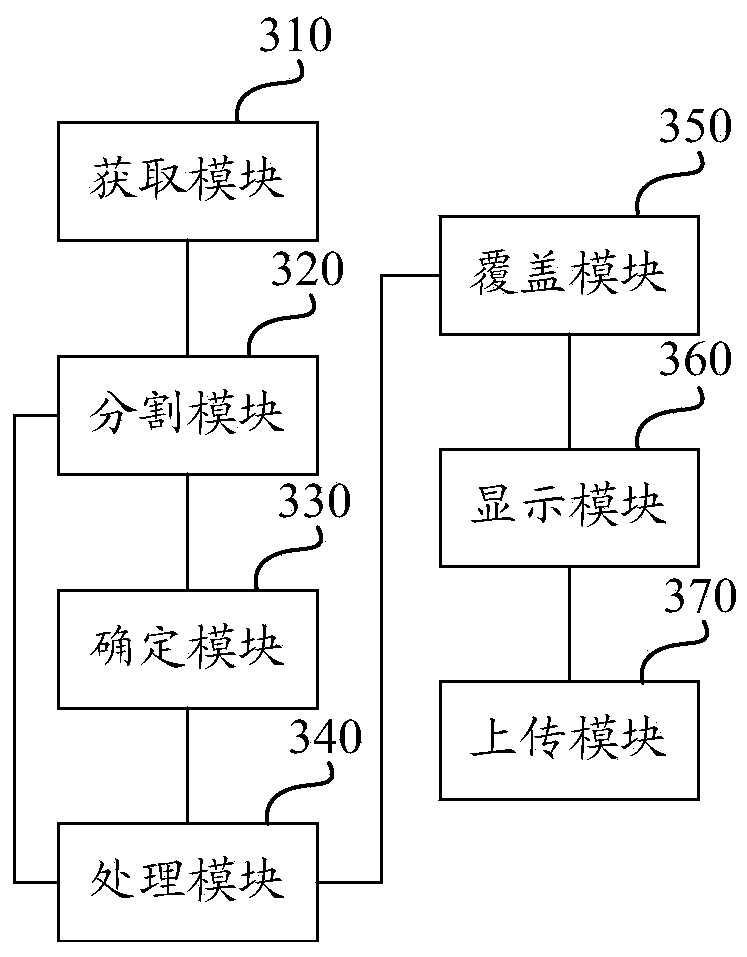

[0121] The present invention provides a real-time video data processing method based on adaptive tracking frame segmentation. It takes into account that, during video shooting or recording, the number of specific objects being photographed or recorded may change due to movement and other reasons. Taking a human body as the specific object, for example, the number of photographed or recorded human bodies may increase or...

