An Intra-frame Coding Optimization Method Based on Deep Learning

An intra-frame coding optimization method applied in the field of video coding. It addresses the problem that coding quality and coding complexity cannot be balanced, and achieves the effects of reducing coding complexity, realizing real-time coding, and reducing the burden

Embodiment

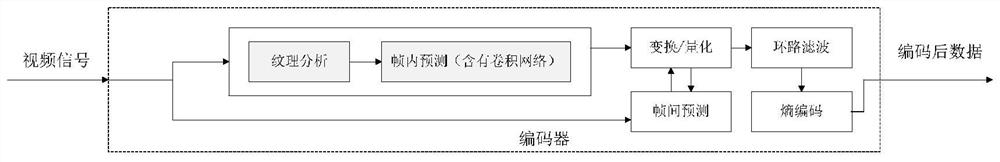

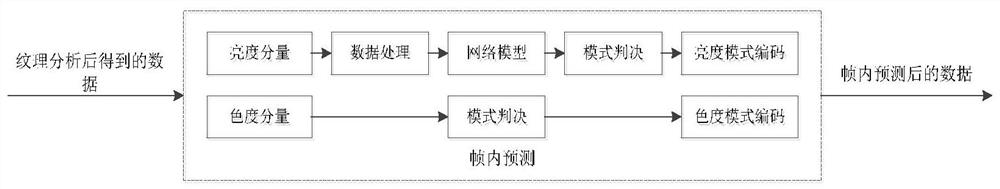

[0038] As shown in Figure 1, the deep learning-based intra-frame coding optimization method of this embodiment performs texture analysis on the input video data before intra-frame prediction. Video data whose prediction mode can be determined from the texture analysis is assigned that mode directly. Video data whose mode remains uncertain is fed into the neural network, which predicts the mode to assign. The code corresponding to each mode is then obtained, and the intra-predicted data is finally produced from these mode codes.
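The two-path decision described in [0038] can be sketched as follows. This is a minimal illustration, not the patented implementation: the function names `assign_modes`, `flat_block_rule`, and `dummy_network`, the rule "a perfectly flat block is decisive", and the mode numbers 1 and 26 are all assumptions introduced here. The only point shown is the control flow: the network is consulted only when texture analysis returns no decision.

```python
from typing import Callable, List, Optional, Sequence

# One prediction unit of luma samples, as rows of numbers.
Block = Sequence[Sequence[float]]


def assign_modes(
    blocks: Sequence[Block],
    texture_mode: Callable[[Block], Optional[int]],
    network_mode: Callable[[Block], int],
) -> List[int]:
    """Return one intra prediction mode per block.

    texture_mode returns a mode index when the texture analysis is
    decisive, or None when the mode is uncertain. network_mode is
    called only in the uncertain case.
    """
    modes: List[int] = []
    for block in blocks:
        mode = texture_mode(block)
        if mode is None:
            mode = network_mode(block)
        modes.append(mode)
    return modes


# Hypothetical stand-ins, used only to exercise the control flow.
def flat_block_rule(block: Block) -> Optional[int]:
    """Assumed rule: a block with identical samples gets mode 1."""
    samples = [s for row in block for s in row]
    return 1 if max(samples) == min(samples) else None


def dummy_network(block: Block) -> int:
    """Placeholder for the trained network; always answers mode 26."""
    return 26


flat = [[5, 5], [5, 5]]
textured = [[1, 2], [3, 4]]
modes = assign_modes([flat, textured], flat_block_rule, dummy_network)
```

Passing the two analyses as callables keeps the dispatch independent of how either one is implemented, so a real texture classifier or trained model could be substituted without touching `assign_modes`.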

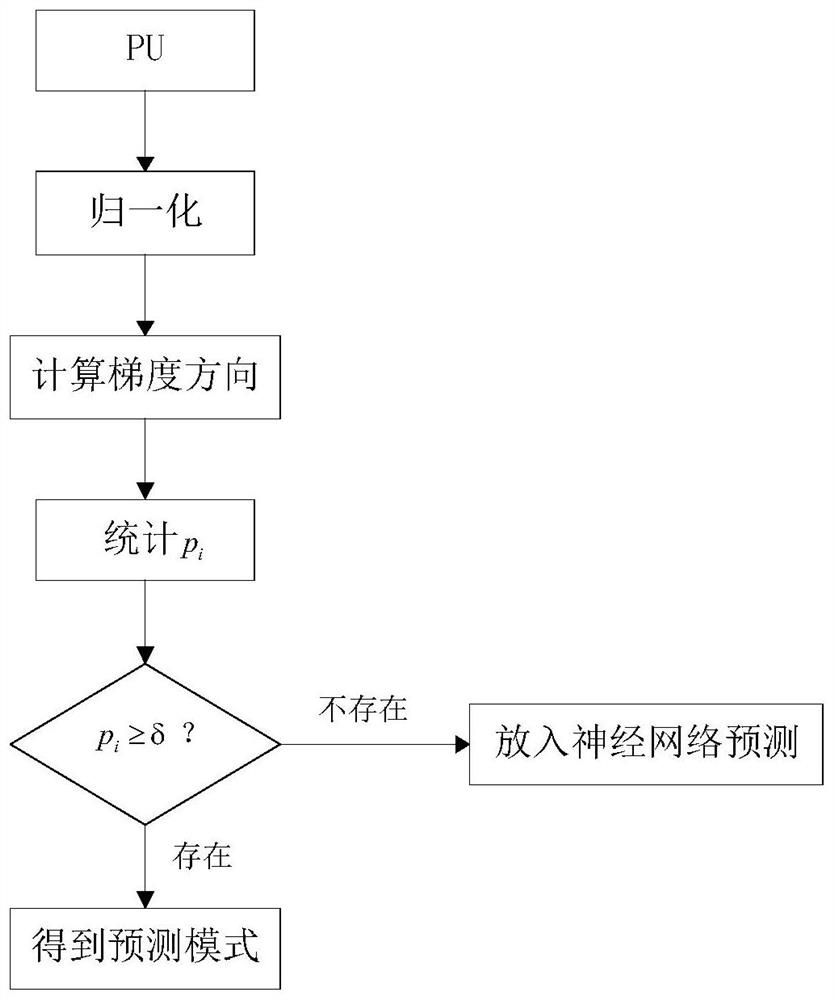

[0039] First, texture analysis is performed on the input video data before intra prediction. As shown in Figure 2, this comprises the following steps:

[0040] S1: Divide the input video data into multiple prediction units (PU, Prediction Unit);

[0041] S2: Normalize the brightness component in each prediction unit; normalize the image, mainly to reduce the influe...
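Steps S1 and S2 can be sketched as below, under stated assumptions. The source does not give the prediction unit size or the normalization formula in the visible text, so this example assumes fixed-size square units and min-max scaling to the range [0, 1]. The names `split_into_units` and `normalize_unit` are hypothetical.

```python
from typing import List

Plane = List[List[float]]  # luma samples as rows


def split_into_units(luma: Plane, size: int) -> List[Plane]:
    """Cut a luma plane into size x size blocks in raster order.

    Assumption: fixed square units and plane dimensions that are
    multiples of size. Real encoders use variable unit sizes.
    """
    height, width = len(luma), len(luma[0])
    if height % size or width % size:
        raise ValueError("plane dimensions must be multiples of size")
    units: List[Plane] = []
    for top in range(0, height, size):
        for left in range(0, width, size):
            rows = luma[top:top + size]
            units.append([row[left:left + size] for row in rows])
    return units


def normalize_unit(unit: Plane) -> Plane:
    """Min-max scale one unit to [0, 1] (assumed normalization).

    A flat unit has no range to scale by, so it maps to all zeros.
    """
    samples = [s for row in unit for s in row]
    lo, hi = min(samples), max(samples)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in unit]
    return [[(s - lo) / span for s in row] for row in unit]


luma = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
units = split_into_units(luma, 2)
normalized = [normalize_unit(u) for u in units]
```

Normalizing each unit independently makes the later texture analysis depend on the relative pattern of samples inside the unit rather than on its absolute brightness level.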

