Heterogeneous image matching method based on deep learning

This deep-learning technology for heterogeneous image matching, applied in the field of image processing, addresses several problems: accuracy is difficult to improve, dual-branch structures are poorly suited to fusing multi-source data, and spatial information is lost when feature vectors are cascaded (concatenated). Its effects are improved accuracy, faster network convergence, and easier feature fusion.

Examples

Embodiment 1

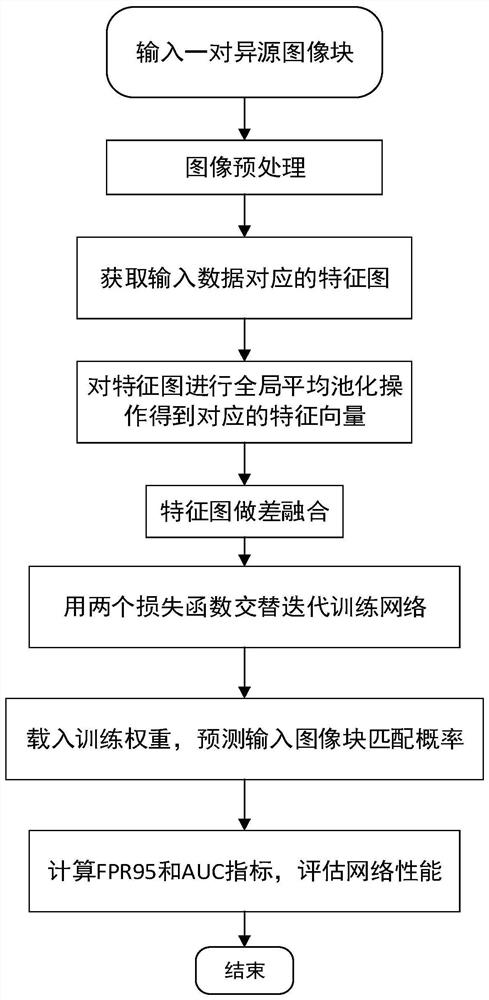

[0061] In view of the situation described in the background art, the present invention proposes a new heterogeneous image matching method based on deep learning, shown in Figure 1, which includes the following steps:

[0062] (1) Construct a data set from the heterogeneous images to be matched:

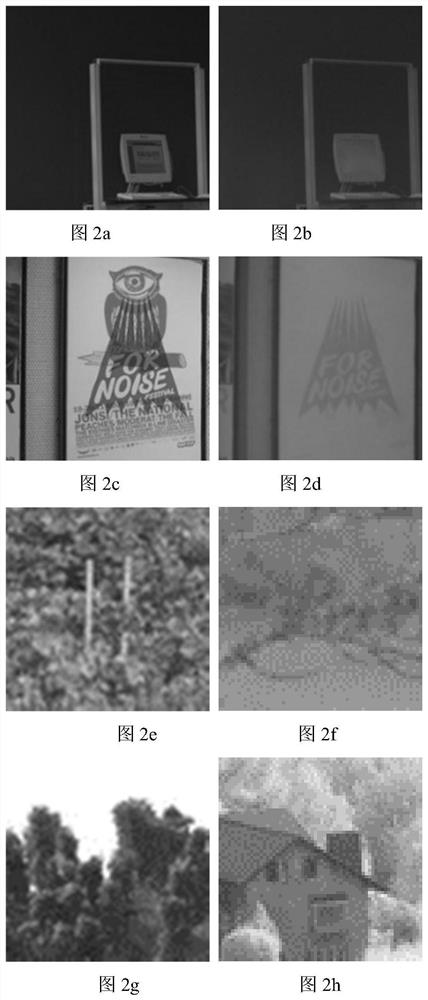

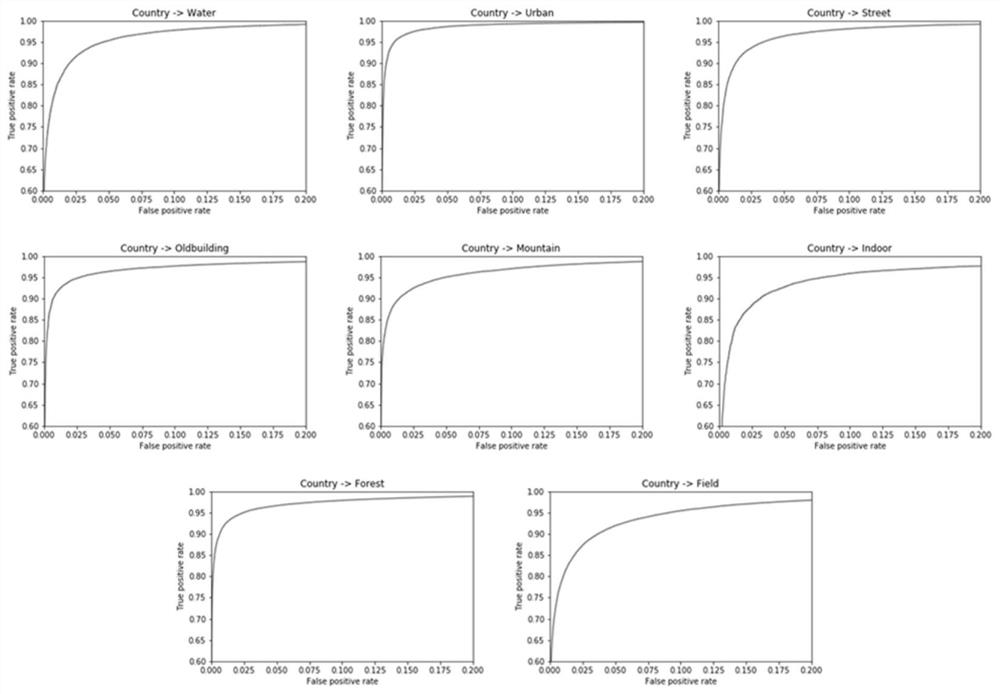

[0063] To make the evaluation of the algorithm more convincing, the present invention uses the public VIS-NIR data set. The data set has 9 groups: Country, Field, Forest, Indoor, Mountain, Oldbuilding, Street, Urban and Water. In each group, matched and unmatched heterogeneous image blocks each account for half of the data. Matched heterogeneous image blocks are labeled 1 and unmatched heterogeneous image blocks are labeled 0.
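The source does not say how the unmatched pairs are formed. The following is a minimal sketch that assumes co-registered VIS and NIR patch lists, where index i in both lists shows the same scene. Each visible patch is paired once with its own NIR patch (label 1) and once with a randomly chosen different NIR patch (label 0), which yields the half-and-half split described above. `make_pairs` and this pairing rule are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def make_pairs(vis: Sequence[T], nir: Sequence[T], seed: int = 0) -> List[Tuple[T, T, int]]:
    """Build a balanced list of (visible, near-infrared, label) samples.

    Hypothetical helper. Assumes vis[i] and nir[i] are co-registered patches of
    the same scene (matched pair, label 1); each unmatched pair (label 0) joins
    vis[i] with a randomly chosen nir[j], j != i. The pairing rule is an assumption.
    """
    if len(vis) != len(nir):
        raise ValueError("vis and nir must contain the same number of patches")
    if len(vis) < 2:
        raise ValueError("need at least two patches to form unmatched pairs")
    rng = random.Random(seed)
    samples: List[Tuple[T, T, int]] = []
    for i in range(len(vis)):
        samples.append((vis[i], nir[i], 1))  # matched pair, label 1
        j = rng.randrange(len(nir) - 1)  # draw from the n - 1 indices other than i
        if j >= i:
            j += 1
        samples.append((vis[i], nir[j], 0))  # unmatched pair, label 0
    rng.shuffle(samples)
    return samples
```

For example, `make_pairs(["v0", "v1", "v2"], ["n0", "n1", "n2"])` returns six shuffled samples, three labeled 1 and three labeled 0.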

[0064] See Table 1 for the size distribution of each group of data ...

Embodiment 2

[0081] The heterogeneous image matching method based on deep learning is the same as in Embodiment 1. The feature map fusion in step (5) specifically includes the following steps:

[0082] (5a) Denote the feature map of a single visible-light image block by V and the feature map of a single near-infrared image block by N. The fused feature map is then F = N - V, where V and N have the same size and are both three-dimensional matrices;
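The fusion in step (5a) is an element-wise subtraction. Below is a minimal pure-Python sketch. It assumes the feature maps are stored as nested lists indexed [channel][row][column], a layout chosen here only for illustration. `fuse` is a hypothetical helper name.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][column] (assumed layout)


def fuse(n: FeatureMap, v: FeatureMap) -> FeatureMap:
    """Return F = N - V, the element-wise difference of two same-sized 3-D feature maps."""
    # Reject maps whose channel, row, or column counts differ.
    if len(n) != len(v) or any(
        len(nc) != len(vc) or any(len(nr) != len(vr) for nr, vr in zip(nc, vc))
        for nc, vc in zip(n, v)
    ):
        raise ValueError("N and V must have the same size")
    return [
        [[a - b for a, b in zip(nr, vr)] for nr, vr in zip(nc, vc)]
        for nc, vc in zip(n, v)
    ]
```

For example, `fuse([[[3.0, 1.0]]], [[[1.0, 1.0]]])` returns `[[[2.0, 0.0]]]`. Wherever N and V agree, F is exactly zero, which is the situation step (5b) guards against.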

[0083] (5b) To prevent F from containing a large number of zeros, which would cause the gradient to vanish during training, the fused feature maps of each batch, β = {F_1, ..., F_m}, are normalized as follows:

[0084]

[0085]

[0086]

[0087]

[0088] where m is the number of heterogeneous image patch pairs input in each batch, F_i is the fused feature map corresponding to the i-th input, and γ and λ represent the scaling size and of...
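The equations in [0084] to [0087] are not reproduced here. The sketch below assumes they follow the standard batch-normalization form: compute the batch mean and variance, normalize, then scale by γ and shift by λ. The following are all assumptions and were not taken from the patent: the function `batch_normalize`, the small constant `eps`, scalar γ and λ, and normalizing each element position of the flattened feature maps separately.

```python
import math
from typing import List, Sequence


def batch_normalize(batch: Sequence[Sequence[float]], gamma: float = 1.0,
                    lam: float = 0.0, eps: float = 1e-5) -> List[List[float]]:
    """Normalize m flattened fused feature maps F_1..F_m position by position.

    Assumed standard batch-normalization form:
        mu = mean_i F_i,  var = mean_i (F_i - mu)^2,
        F_hat_i = (F_i - mu) / sqrt(var + eps),  out_i = gamma * F_hat_i + lam.
    gamma (scale) and lam (shift) are scalars here for simplicity.
    """
    m = len(batch)
    if m == 0:
        raise ValueError("batch must not be empty")
    size = len(batch[0])
    if any(len(f) != size for f in batch):
        raise ValueError("all feature maps in a batch must have the same size")
    out = [[0.0] * size for _ in range(m)]
    for p in range(size):  # each element position of the flattened feature map
        column = [f[p] for f in batch]
        mu = sum(column) / m
        var = sum((x - mu) ** 2 for x in column) / m
        for i in range(m):
            out[i][p] = gamma * (column[i] - mu) / math.sqrt(var + eps) + lam
    return out
```

Convolutional networks more commonly share the statistics, γ and λ, per channel; the per-position version is used only to keep the sketch short.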

Embodiment 3

[0091] The heterogeneous image matching method based on deep learning is the same as in Embodiments 1-2. The calculation of the contrastive loss in step (6a) includes the following steps:

[0092] (6a1): Denote by v and n the feature vectors obtained from feature maps V and N, respectively, after global average pooling. The average Euclidean distance D(n,v) between the feature vectors is then:

[0093]

[0094] Here, k is the dimension of the feature vector; k = 512 in this embodiment.
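The equation in [0093] is likewise missing. The sketch below shows global average pooling and one plausible reading of the "average Euclidean distance": the Euclidean distance divided by the dimension k. The missing equation might instead average inside the square root. Both function names are hypothetical, and feature maps are again assumed to be nested lists indexed [channel][row][column].

```python
import math
from typing import List, Sequence


def global_average_pool(feature_map: Sequence[Sequence[Sequence[float]]]) -> List[float]:
    """Average each channel of a [channel][row][column] feature map to one value."""
    vec = []
    for channel in feature_map:
        values = [x for row in channel for x in row]
        vec.append(sum(values) / len(values))
    return vec


def average_euclidean_distance(n: Sequence[float], v: Sequence[float]) -> float:
    """Assumed form D(n, v) = sqrt(sum_j (n_j - v_j)^2) / k, with k = len(n)."""
    if len(n) != len(v):
        raise ValueError("n and v must have the same dimension k")
    k = len(n)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(n, v))) / k
```

Global average pooling yields one value per channel, so k = 512 in this embodiment corresponds to 512-channel feature maps V and N.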

[0095] (6a2): To make D(n,v) as small as possible for matched heterogeneous image blocks and as large as possible for unmatched heterogeneous image blocks, the following contrastive loss function is designed for a single sample:

[0096]

[0097] where y is the ground-truth label of the input data (y is 1 when the input heterogeneous image blocks match; when they do not match,...
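The loss in [0096] is not reproduced. The sketch below assumes the widely used margin-based contrastive loss (Hadsell, Chopra and LeCun's formulation), written with y = 1 for matched pairs to follow this document's label convention and without the usual ½ factors: L = y·D² + (1 - y)·max(margin - D, 0)². The `margin` default is a hypothetical hyperparameter, not a value from the patent.

```python
def contrastive_loss(d: float, y: int, margin: float = 1.0) -> float:
    """Margin-based contrastive loss for one sample (assumed form).

    d: distance D(n, v) between the pooled feature vectors.
    y: 1 if the heterogeneous image blocks match, 0 otherwise.
    margin: hypothetical hyperparameter; unmatched pairs at least this far
        apart contribute zero loss.
    """
    if y not in (0, 1):
        raise ValueError("y must be 0 or 1")
    # Matched pairs are penalized by D^2; unmatched pairs by how far D falls short of the margin.
    return y * d ** 2 + (1 - y) * max(margin - d, 0.0) ** 2
```

For example, `contrastive_loss(0.5, 1)` and `contrastive_loss(0.5, 0)` both return `0.25`, while an unmatched pair at distance 2.0 contributes nothing. Minimizing this loss therefore pulls matched pairs together and pushes unmatched pairs apart until they clear the margin.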
